Anthill is a small research project on building highly available (HA) event-driven business apps with specific technologies and trade-offs (with a focus on transactions, e-commerce and retail).

Ideally I’d like to have a set of tooling to:

- capture a business model in a high-level domain language (more expressive than C#/Scala): scenarios, APIs, event contracts, projections and request handlers;

- use the meta-model to generate a performant back-end implementation in a low-level systems language with access to fast and stable consensus, networking and storage libraries;

- support rigorous testing and fast deterministic simulation of a cluster from the start;

- invest CPU hours instead of my time to find bugs in the domain model, HA logic, code generation and infrastructure libraries.

Ideally, I’ll reuse as much as possible from the existing body of software and knowledge, simply stitching together existing solutions with Lisp.

The idea is:

- we partition the entire load for scaling;

- each partition runs LMAX-style replication, where the master sits behind the load balancer (or load balancing is baked into the client) and processes all requests; followers simply consume events and update their own read models;

- when the master fails, new requests are redirected to the next replica;

- if a client wants speed, it can wait for an ACK only from the master; if it wants consistency, it needs to launch a transaction involving replica commits;

- if there are any problems - see TAPIR;

- we can either delegate cluster coordination to Consul or embed a Raft library for that;

- we also do a lot of simulation tests (it might be worth just to get a few dedicated servers and keep them humming) with fault injections.
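The replication scheme from the list above can be sketched in a few lines of Go. This is only a minimal in-memory illustration, not the project's implementation: `Replica`, `Partition`, `Handle` and `Master` are hypothetical names, events are plain strings, and "replication" is a function call instead of a network hop.

```go
package main

import (
	"errors"
	"fmt"
)

// Replica keeps its own copy of the event log (its read model in this sketch).
type Replica struct {
	Name  string
	Alive bool
	Log   []string
}

// Partition is one shard: replicas[master] handles requests, the rest follow.
type Partition struct {
	replicas []*Replica
	master   int
}

func NewPartition(names ...string) *Partition {
	p := &Partition{}
	for _, n := range names {
		p.replicas = append(p.replicas, &Replica{Name: n, Alive: true})
	}
	return p
}

// Master returns the current master, promoting the next live replica
// when the current one is down.
func (p *Partition) Master() (*Replica, error) {
	for i := 0; i < len(p.replicas); i++ {
		idx := (p.master + i) % len(p.replicas)
		if p.replicas[idx].Alive {
			p.master = idx
			return p.replicas[idx], nil
		}
	}
	return nil, errors.New("no live replica in partition")
}

// Handle appends the event on the master and then on every live follower.
// It returns how many replicas hold the event: a client that wants speed
// is happy with acks >= 1, one that wants consistency can demand more.
func (p *Partition) Handle(event string) (acks int, err error) {
	m, err := p.Master()
	if err != nil {
		return 0, err
	}
	m.Log = append(m.Log, event)
	acks = 1
	for _, r := range p.replicas {
		if r != m && r.Alive {
			r.Log = append(r.Log, event)
			acks++
		}
	}
	return acks, nil
}

func main() {
	p := NewPartition("a", "b", "c")
	acks, _ := p.Handle("user-added")
	fmt.Println("acks:", acks)
	p.replicas[0].Alive = false // the master fails
	m, _ := p.Master()
	fmt.Println("new master:", m.Name)
}
```

The sketch leaves out the hard parts on purpose: a replica that comes back has a stale log and needs to catch up, and nothing here protects against two nodes both believing they are the master.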

Note: different replicas of the same data (e.g. a user account on the owner server and its representation in some branch) are treated as separate models and not as different replicas within the same partition.

Note: I will most likely fail around step 2 of this list.

Let’s constrain ourselves. This will be very important for making decisions.

- focus on small operations (1-12 servers) with little money for DevOps;

- make the most out of the available hardware (if I need to do custom Linux images and userspace networking - so be it);

- use fictitious domains for reference implementations.

I’d like to solve problems encountered in similar systems before:

- entangled code that grows very fragile and expensive to maintain over time;

- inefficient use of the hardware, making it expensive to scale operation to a large number of tenants and processes;

- noticeable DevOps and computing overhead on small scale (e.g. need to have Cassandra, ZooKeeper, Kafka clusters even for a small operation);

- lock-in with existing cloud vendors, inability to move some processes on premises;

- sensitivity to network problems (system relies on good network connection);

- no HA.

The ideal workflow would start with an event storming session or any other collaborative way to capture the domain model.

Data types and events could be quickly captured in a DSL (currently using Lisp syntax).

```lisp
(pkg "user"
  "Everything about user"
  (spec id "User id" core/id)
  (spec name "User name" core/name)
  (spec email "User email" core/email))

(pkg "tenant"
  (type ref "User thumbprint in all actions" [account/id user/id utc])
  (evt registered "new account has just been registered" [ref account/id account/name])
  (evt user-added "add new user to the system" [ref user/id user/name user/email])
  (evt user-renamed "change display name of the user" [ref user/name user/name :as old-name])
  (evt account-renamed "change display name of the account" [ref account/name account/name :as old-name]))
```

This information is already enough to generate declarations for types, events, API requests and responses, event handlers:

```go
// Mass - Weight with a dimension
type Mass struct {
	WeightScale weight.Scale `json:"weightScale"`
	WeightValue float64      `json:"weightValue"`
}

func (r *Mass) validate() (err error) {
	return nil
}

func NewMass(weightScale weight.Scale, weightValue float64) *Mass {
	return &Mass{weightScale, weightValue}
}
```

This is similar to how DSL Tool (v2) works, so there is nothing new at this point.

In a sense we are using DSL tool to generate large chunks of the codebase similar to Omni sample.

However, with a few more DSL constructs we could generate domain-specific methods for reading and storing data in a local key-value database (e.g. LMDB), leveraging binary prefixes, subspaces and tuples to structure and manipulate data. Fortunately, FoundationDB left a lot of material on the subject.

For example, for a simple index we could generate something like this:

```go
func DeleteUserEmailIndex(tr db.Transaction, email user.Email) {
	tr.Clear(db.Sub(EMAIL_INDEX_SPACE, email))
}

func SetUserEmailIndex(tr db.Transaction, email user.Email, userId user.Id) {
	tr.Set(db.Sub(EMAIL_INDEX_SPACE, email), db.EncodeUInt64(userId))
}
```

More complex operations (e.g. updating a large binary view) would require tapping into the serialization format capabilities. By using FlatBuffers or Cap’n Proto we could even perform some operations without memory allocations.
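The `db.Sub` and `db.EncodeUInt64` helpers used by the generated index functions are not shown here. A minimal sketch of what such helpers might look like is below, assuming a one-byte subspace prefix, zero-terminated string elements and big-endian integers (so that keys sort the same way as the values they encode). All names and the exact byte layout are my assumptions for illustration.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"fmt"
)

// EmailIndexSpace is a hypothetical one-byte subspace prefix.
const EmailIndexSpace byte = 0x01

// Sub builds a key: the subspace prefix followed by each element and a
// 0x00 terminator. All keys of one subspace share a prefix and therefore
// sit next to each other in an ordered store. The sketch assumes that
// elements contain no zero bytes; real tuple encodings escape them.
func Sub(space byte, parts ...string) []byte {
	key := []byte{space}
	for _, p := range parts {
		key = append(key, p...)
		key = append(key, 0x00)
	}
	return key
}

// EncodeUInt64 uses big-endian so that byte order matches numeric order.
func EncodeUInt64(v uint64) []byte {
	buf := make([]byte, 8)
	binary.BigEndian.PutUint64(buf, v)
	return buf
}

// DecodeUInt64 reverses EncodeUInt64; b must hold at least 8 bytes.
func DecodeUInt64(b []byte) uint64 {
	return binary.BigEndian.Uint64(b)
}

// KeyLess reports whether key a sorts before key b in the store.
func KeyLess(a, b []byte) bool {
	return bytes.Compare(a, b) < 0
}

func main() {
	alice := Sub(EmailIndexSpace, "alice@example.com")
	bob := Sub(EmailIndexSpace, "bob@example.com")
	fmt.Println(KeyLess(alice, bob))
	fmt.Println(DecodeUInt64(EncodeUInt64(42)))
}
```

Big-endian matters here: with little-endian encoding a range scan over numeric keys would return them in a seemingly random order.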

Use-cases like the one below could also be expressed in the DSL (along with the model) and rendered into the target language:

```go
func when_post_inbox_task_then_event_is_published() *env.UseCase {
	newTaskId := lang.NewTaskId()
	return &env.UseCase{
		Name: "When POST /task for inbox, then 2 events are published",
		When: spec.PostJSON("/task", seq.Map{
			"name":  "NewTask",
			"inbox": true,
		}),
		ThenResponse: spec.ReturnJSON(seq.Map{
			"name":   "NewTask",
			"inbox":  "true",
			"taskId": newTaskId,
		}),
		ThenEvents: spec.Events(
			lang.NewTaskAdded(IgnoreEventId, newTaskId, "NewTask"),
			lang.NewTaskMovedToInbox(IgnoreEventId, newTaskId),
		),
		Where: spec.Where{
			newTaskId:     "sameTaskId",
			IgnoreEventId: "ignore",
		},
	}
}
```

If we rewrite the bus from the Omni project to run all request and event handlers on a single thread, while simulating multiple nodes, this is enough to get started with some trivial simulation runs and failure injections.
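To make the single-threaded bus idea more concrete, here is a minimal sketch. It is not the Omni bus: `Bus`, `Message`, `Handler` and the `Drop` hook are names I made up for the illustration. All simulated nodes share one FIFO queue that is drained on one thread, so a run is fully determined by the initial messages and the fault-injection hook.

```go
package main

import "fmt"

// Message is a request or an event addressed to one simulated node.
type Message struct {
	To   string
	Body string
}

// Handler processes a message and may return follow-up messages.
type Handler func(m Message) []Message

// Bus delivers messages to all simulated nodes from a single queue.
type Bus struct {
	nodes map[string]Handler
	queue []Message
	Drop  func(m Message) bool // fault injection: return true to lose the message
	Trace []string             // delivery order, handy for comparing two runs
}

func NewBus() *Bus { return &Bus{nodes: map[string]Handler{}} }

func (b *Bus) Register(name string, h Handler) { b.nodes[name] = h }

func (b *Bus) Send(m Message) { b.queue = append(b.queue, m) }

// Run drains the queue in FIFO order until no messages are left.
func (b *Bus) Run() {
	for len(b.queue) > 0 {
		m := b.queue[0]
		b.queue = b.queue[1:]
		if b.Drop != nil && b.Drop(m) {
			b.Trace = append(b.Trace, "dropped "+m.To+":"+m.Body)
			continue
		}
		h, ok := b.nodes[m.To]
		if !ok {
			continue // unknown node: the message is silently lost
		}
		b.Trace = append(b.Trace, m.To+":"+m.Body)
		b.queue = append(b.queue, h(m)...)
	}
}

func main() {
	bus := NewBus()
	bus.Register("master", func(m Message) []Message {
		// The master handles the request and replicates an event.
		return []Message{{To: "follower", Body: "TaskAdded"}}
	})
	bus.Register("follower", func(m Message) []Message { return nil })
	bus.Drop = func(m Message) bool { return m.To == "follower" } // lose replication
	bus.Send(Message{To: "master", Body: "POST /task"})
	bus.Run()
	fmt.Println(bus.Trace)
}
```

Because nothing depends on goroutine scheduling or wall-clock time, the same inputs always produce the same `Trace`, which is what makes a failing run reproducible.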

At this point almost all code would either be generated from the DSL or imported from the infrastructure libraries, including schema checks and request wrappers (boring to write in Go):

```go
// RegisterNewRequest - register a new account
type RegisterNewRequest struct {
	AccountName string `json:"accountName"`
	UserName    string `json:"userName"`
	UserEmail   string `json:"userEmail"`
}

func (r *RegisterNewRequest) validate() (err error) {
	return nil
}

func registerNewRequest(h Handler, req *api.Request) api.Response {
	var request RegisterNewRequest
	if err := req.ParseBody(&request); err != nil {
		return api.BadRequestResponse(err.Error())
	}
	if err := request.validate(); err != nil {
		return api.BadRequestResponse(err.Error())
	}
	return h.RegisterNew(&request)
}
```

However, actual implementations would need to be provided, according to the generated interfaces:

```go
type Handler interface {
	Add(req *AddRequest) api.Response
	Delete(req *DeleteRequest) api.Response
	Rename(req *RenameRequest) api.Response
	Detail(req *DetailRequest) api.Response
}
```

Theoretically, we could even capture the bulk of the business logic in Clojure (since it mostly deals with data transformations in our target domains), leaving only the edge cases for the target language.

At this point, the most challenging part would be building strongly consistent operations on top of unreliable event replication between the replica nodes. Fortunately:

- There is existing inspiration in papers on commit protocols and state machines (e.g. Building Consistent Transactions with Inconsistent Replication).

- There is a golang implementation of the Raft consensus protocol (kudos to Hashicorp).

- Codegen could help with generating state machines, while keeping them close to the business logic. After all, businesses have a long history of dealing with race conditions.

- Rigorous simulation testing could help in figuring out the bugs (or giving up early).
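As an illustration of the point about generated state machines: the output could be as simple as a transition table plus one function that applies it. The order states and events below are made up for the example, and nothing here is actual generator output.

```go
package main

import "fmt"

type State string
type Event string

const (
	Placed    State = "placed"
	Reserved  State = "reserved"
	Shipped   State = "shipped"
	Cancelled State = "cancelled"
)

// transitions is the kind of table a generator could emit from a DSL
// description of the order lifecycle (hypothetical states and events).
var transitions = map[State]map[Event]State{
	Placed:   {"reserve": Reserved, "cancel": Cancelled},
	Reserved: {"ship": Shipped, "cancel": Cancelled},
}

// Apply returns the next state, or an error when the event is not
// allowed in the current state. The state is returned unchanged on error.
func Apply(s State, e Event) (State, error) {
	if next, ok := transitions[s][e]; ok {
		return next, nil
	}
	return s, fmt.Errorf("event %q is not allowed in state %q", e, s)
}

func main() {
	s := Placed
	for _, e := range []Event{"reserve", "ship", "cancel"} {
		next, err := Apply(s, e)
		if err != nil {
			fmt.Println("rejected:", err)
			continue
		}
		s = next
	}
	fmt.Println("final state:", s)
}
```

A cancel that arrives after the order has shipped is a typical race; with an explicit table it gets rejected in one place instead of being handled ad hoc in every handler.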

In a sense, we are replicating the approach taken by FoundationDB with their Flow language, cluster simulations and the general development approach.

Keep infrastructure libraries and generated implementations open source (they will probably be based on Omni project anyway).

Code generation (the part that reduces boilerplate) will initially be kept a trade secret.

I think that a solution to these problems could be achieved by implementing the following features:

- environment that is a pleasure to work with (highly subjective measurement ultimately related to productivity and delivery of features to real people);

- capturing the essence of the domain models via event-driven design (and reusing all the body of knowledge accumulated over this design);

- good test coverage (event-driven scenarios, cluster simulation, continuous performance testing, fault injection);

- designed to run efficiently on modern and existing hardware (native Linux support);

- simple DevOps story for HA deployments.

While it is nice to build a system that runs anywhere, doing that would incur extra costs. So I’m aiming to focus only on Linux/Unix, skipping Windows support.

The goal is to build a system that has maximum throughput, while operating within specific SLAs. As long as response latency stays within the limit, we optimise for throughput (mainly by heavily batching disk and network I/O operations).

If latency goes above the threshold, we start bouncing back new requests, in order to maintain the SLA.
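A minimal sketch of such a bounce-back rule is below. It assumes we track an exponentially weighted moving average of response latency and compare it with the SLA limit; `Shedder`, the 50 ms limit and the smoothing factor are illustrative choices, not measured values.

```go
package main

import (
	"fmt"
	"time"
)

// Shedder tracks a moving average of response latency and rejects new
// requests while that average is above the SLA limit.
type Shedder struct {
	Limit time.Duration // SLA threshold (assumed value in this sketch)
	Alpha float64       // weight of the newest sample, 0 < Alpha <= 1
	avg   float64       // moving average in nanoseconds
}

// Observe folds the latency of a finished request into the average.
func (s *Shedder) Observe(d time.Duration) {
	if s.avg == 0 {
		s.avg = float64(d) // the first sample seeds the average
		return
	}
	s.avg = s.Alpha*float64(d) + (1-s.Alpha)*s.avg
}

// Admit reports whether a new request should be accepted.
func (s *Shedder) Admit() bool {
	return time.Duration(s.avg) <= s.Limit
}

// ms is a small helper to keep the example readable.
func ms(n int) time.Duration { return time.Duration(n) * time.Millisecond }

func main() {
	s := &Shedder{Limit: ms(50), Alpha: 0.5}
	s.Observe(ms(10))
	fmt.Println("admit after fast request:", s.Admit())
	s.Observe(ms(200))
	fmt.Println("admit after slow request:", s.Admit())
}
```

One caveat: if every request is bounced, no new samples arrive and the average never recovers. A real implementation would have to keep observing in-flight requests, let a small share of traffic through, or decay the average over time.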

The system will pull consistency controls into the application logic.

For operations where the cost of eventual consistency or staleness is noticeable for the business, we prefer that the system takes a few more moments to process the request, while double-checking everything.

Examples of such operations are: over-picking, overselling, overdraft.

For operations where we prefer the system to have high availability and throughput (while tolerating possible eventual consistency), we’d skip tight concurrency controls and let the system reach consistency a few moments after finishing the operation.

Examples: overdraft by a small amount within the account quota, overselling items which could be back-ordered quickly.

As you’ve probably noticed, the same operation could operate under different consistency rules, depending on the situation.
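Here is a minimal sketch of how application logic might pick the consistency rule per request, using the overdraft example. The `Account` fields, the two modes and the rule itself are assumptions made up for the illustration.

```go
package main

import "fmt"

// Mode is the consistency rule chosen for a single operation.
type Mode int

const (
	Eventual Mode = iota // ack from the master only, reconcile later
	Strict               // wait for replica commits before answering
)

// Account holds amounts in minor units (e.g. cents) to avoid float rounding.
type Account struct {
	Balance        int64
	OverdraftQuota int64 // how far below zero we tolerate without strict checks
}

// WithdrawMode picks the mode for a withdrawal based on the locally known
// (possibly stale) balance: while the result stays within the quota a
// mistake is cheap, so the fast path is fine; otherwise we double-check.
func WithdrawMode(a Account, amount int64) Mode {
	if a.Balance-amount >= -a.OverdraftQuota {
		return Eventual
	}
	return Strict
}

func main() {
	acc := Account{Balance: 100_00, OverdraftQuota: 50_00}
	fmt.Println(WithdrawMode(acc, 120_00) == Eventual) // small overdraft within quota
	fmt.Println(WithdrawMode(acc, 500_00) == Strict)   // large overdraft
}
```

The same `withdraw` request thus runs under different rules depending on the amount and the state of the account, and the decision lives in domain code rather than in the storage layer.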

This is an opinionated tech map.

| Tech | I like | Drawbacks for me | Choice |
|---|---|---|---|
| Store | | | |
| Cassandra | Adopted and supported, can scale views | DevOps overhead, inefficient use of hw | |
| ScyllaDB | Competent team, more perf than Cassandra | Young, misses some Cassandra features | |
| FoundationDB | Everything, they taught layers well | RIP, no support | |
| SQLite | Embedded, widely used, fast | SQL overhead, roll your own HA | |
| RocksDB | Embedded, fast | key-value, worse performance than LMDB | |
| LMDB | Embedded, fast, predictable and simple | Niche, needs custom data layer and HA | ✓ |
| Platform | | | |
| .NET/C# | Great platform and momentum | Linux support is young, limited libs | |
| Java/Scala | Polished Linux, adopted, good libs | Slow compilation, fat VM | |
| Clojure | Lisp with all Java benefits | Niche adoption and all Java drawbacks | |
| Erlang | Low-latency, REPL, functional, great VM | Latency over throughput | |
| golang | Good libs and perf, designed for codegen | Depends on Google | ✓ |
| Rust | Low-level, borrow checker, good libs | Slower compilation, depends on Mozilla | ? |
| C++ | Low-level, a lot of libraries | Slow compilation, text macros, messy | |
| C | Low-level, simple | Essentially a higher assembly language | ? |
| Serialize | | | |
| Protobuf | Adopted, schema-based | Memory allocations | |
| msgpack | Adopted, schema-less | Verbose, overhead, memory allocations | |
| Cap’n Proto | Fewer mallocs, fast, opinionated | Depends on a small company, custom IDL | ✓ |
| FlatBuffers | Fewer mallocs, fast, by Google | Depends on Google, custom IDL | ? |
| Custom | Fewer mallocs, fast, can use bitstreams | Is DIY worth it? | ? |
| Network | | | |
| HTTP/2 JSON | Fast, adopted, accessible | JSON and HTTP overhead | |
| UDP/Aeron | Avoids TCP/IP overhead, low-latency | Limited libs, needs more effort | ✓ |
| Apache Kafka | Adopted and supported, high scale | DevOps hungry, trying to become a DB | |

- golang - simple language with good concurrency and performance, works well with code-generation (gofmt and fast compilation cycle).

- Lisp (Clojure or some flavor of Scheme) - for capturing domain logic and generating golang code.

- LMDB - embedded DB (B-Tree) designed for read-heavy operations. It is very simple and robust.

- Cap’n Proto/FlatBuffers - serialization format that avoids some memory allocations.

- Aeron/UDP with userspace networking - tech from finance and high-frequency trading. It allows skipping some of the latency and cost associated with the traditional use of the networking stack.

Ideally it would be nice to have a system that supports:

- 1000 write transactions per second on modern (non-virtualized) hardware with 2 CPU cores, 7 GB of RAM, and a decent SSD.

- 20k reads per second on the same hardware at the same time.

- divide numbers by 2-3 for the virtualized hardware.

Numbers will be adjusted later.

- import Golang Omni backend (based on BeingTheWorst and HPC);

- rewrite it to match the new design (swapping storage to LMDB);

- implement target domains against this library;

- implement Lisp/Scheme DSL to capture domains and counter excessive golang verbosity.

In order to see how the system looks and behaves on a more realistic domain, I’ll use a few target domains:

- Micro-service provider - if you are running your own AWS or GCP tailored for a specific business niche.

- Automated Factory - with robots, assembly lines and order fulfillment.

On September 19th of 2023 AMD finally got its act together and delivered a fast and affordable ML platform running on PCIe backplane (FPGA and ARM SoC, PCIe SSD, AMD-FX and GPU integrated). This came as a total surprise to everybody, but this hardware was a perfect fit for training deep networks (with long-term memory!) via evolutionary algorithms. It was called Apprentice-FX and came with open drivers and software, making it extremely easy to buy, install and start training.

New kinds of businesses started showing up shortly after. People would buy a few of these, capture some aspects of their own expertise in their own field and sell as cheap consulting services to everybody. Micro-transactions and stable BitcoinV3 helped here as well.

The new business model required a new kind of accounting software - the kind that could manage hundreds of thousands of open accounts and thousands of transactions per second.

Resource usage, accounts, profiles, subscriptions, invoices, billing periods, currencies, charges, deposits, balance, etc.

In 2027, further advancements in ML and manufacturing will finally pave the way to fully automated warehouses. Pioneered by Amazon (and quickly followed by the rest of the industry), these factories would be built mainly in the deserts, where the land and power are cheap. Except for China, where they would be built everywhere.

These factories would contain large underground warehouses and automated order fulfillment lines. Humans could order gadgets, clothes, equipment, customizing their orders with different upgrades, colors and accessories. The order would be immediately dispatched to the servicing factory, where a clever combination of logistics, automated manufacturing and transport system would produce a packaged order in a matter of minutes.

Rare and custom orders would need more time to back-order or 3D print.

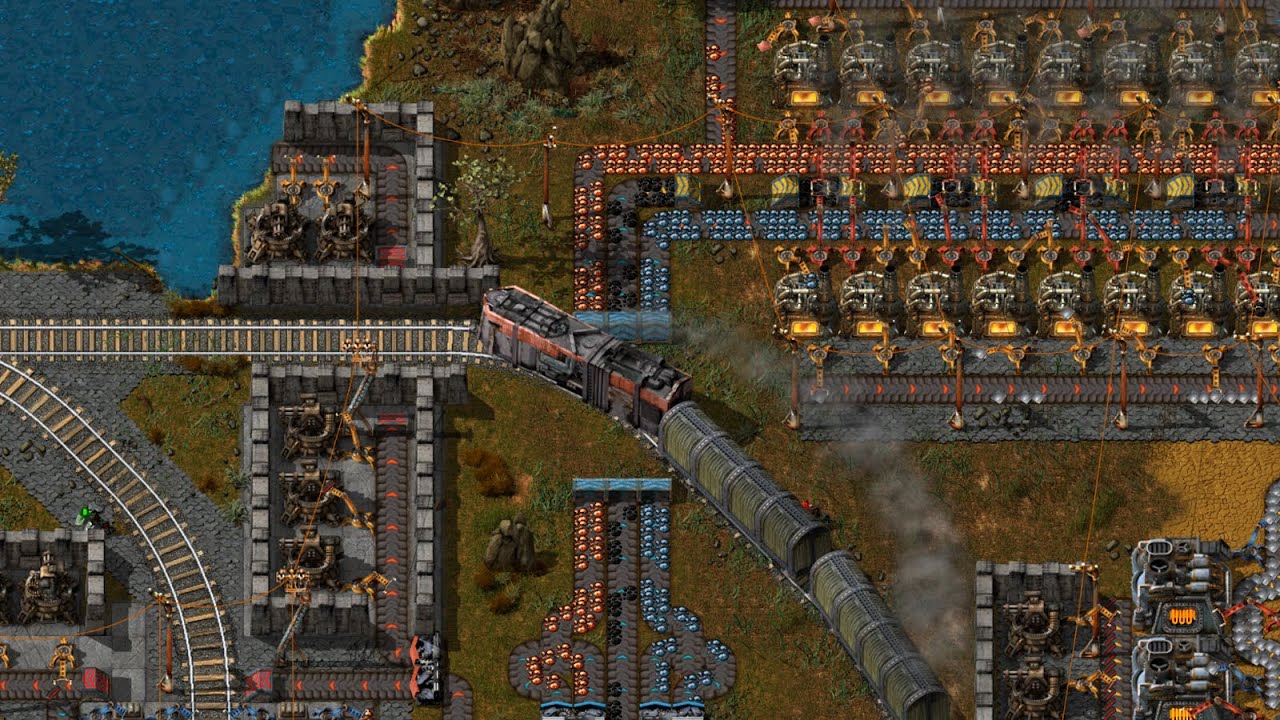

If you played Factorio, then you probably get the idea.

We need to build a software back-end capable of managing thousands of these factories.

An automated factory takes orders and runs them through internal pipelines, delivering a packaged product ready for shipping.

An order consists of one or more order items, which are usually shipped together. One order item is one finished product.

This item may either be located in some underground warehouse at the moment of purchase, or it may not even exist yet and require assembly, painting, manufacturing, 3D printing etc. These processes require some materials, equipment and logistic capacity.

In order to fulfill orders within the promised time frames, the Automated Factory:

- tracks goods, raw materials and equipment available for use at any given point of time;

- uses this information to estimate item availability and order fulfillment times before the checkout;

- manages re-supply (while taking into account vendor SLAs and lead times);

- optimizes use of automated manufacturing equipment and transport lines in order to reduce work in progress and increase factory throughput;

- reacts to any unexpected problems, broken equipment and lost goods (rodents and cockroaches are a frequent problem).

We want to simulate a cluster of nodes on a single thread, similar to how FoundationDB did it (see Testing Distributed Systems w/ Deterministic Simulation).

Linear-feedback shift register and Xorshift generators create pseudo-random number sequences of a good quality quickly. There is a good golang lib for that.
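For reference, a xorshift generator fits in a few lines. The sketch below hand-rolls Marsaglia's 64-bit variant (shifts 13, 7, 17) instead of using any particular library, and shows how a seed turns into a reproducible fault plan. `Chance` and `FaultPlan` are hypothetical helpers for the simulator.

```go
package main

import "fmt"

// Xorshift64 is Marsaglia's 64-bit xorshift generator (shifts 13, 7, 17).
// The state must never be zero, otherwise it stays zero forever.
type Xorshift64 struct{ state uint64 }

func NewXorshift64(seed uint64) *Xorshift64 {
	if seed == 0 {
		seed = 0x9E3779B97F4A7C15 // any non-zero constant works
	}
	return &Xorshift64{state: seed}
}

// Next advances the generator and returns the new state.
func (x *Xorshift64) Next() uint64 {
	s := x.state
	s ^= s << 13
	s ^= s >> 7
	s ^= s << 17
	x.state = s
	return s
}

// Chance returns true roughly once in every n calls (n must be > 0).
// The simulator can use it to decide when to drop a message or crash a node.
func (x *Xorshift64) Chance(n uint64) bool { return x.Next()%n == 0 }

// FaultPlan lists the simulation steps at which a fault is injected.
// The same seed always yields the same plan.
func FaultPlan(seed uint64, steps int, oneIn uint64) []int {
	rng := NewXorshift64(seed)
	var plan []int
	for i := 0; i < steps; i++ {
		if rng.Chance(oneIn) {
			plan = append(plan, i)
		}
	}
	return plan
}

func main() {
	fmt.Println(FaultPlan(42, 100, 10))
	fmt.Println(FaultPlan(42, 100, 10)) // identical: the run can be replayed
}
```

Logging only the seed of a failed simulation run is then enough to replay it exactly, as long as the rest of the simulation avoids other sources of non-determinism (wall-clock time, goroutine scheduling, map iteration order).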