diff --git a/assets/contributors.csv b/assets/contributors.csv

index 8bb5761d47..39019b28e2 100644

--- a/assets/contributors.csv

+++ b/assets/contributors.csv

@@ -48,3 +48,4 @@ Chen Zhang,Zilliz,,,,

Tianyu Li,Arm,,,,

Georgios Mermigkis,VectorCamp,gMerm,georgios-mermigkis,,https://vectorcamp.gr/

Ben Clark,Arm,,,,

+Han Yin,Arm,hanyin-arm,nacosiren,,

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/2-app-scaffolding.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/2-app-scaffolding.md

new file mode 100644

index 0000000000..f643771005

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/2-app-scaffolding.md

@@ -0,0 +1,197 @@

+---

+title: Scaffold a new Android project

+weight: 2

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+This learning path will teach you to architect an app following [modern Android architecture](https://developer.android.com/courses/pathways/android-architecture) design with a focus on the [UI layer](https://developer.android.com/topic/architecture/ui-layer).

+

+## Development environment setup

+

+Download and install the latest version of [Android Studio](https://developer.android.com/studio/) on your host machine.

+

+This learning path's instructions and screenshots are taken on macOS with Apple Silicon, but you may choose any of the supported hardware systems as described [here](https://developer.android.com/studio/install).

+

+Upon first installation, open Android Studio and proceed with the default or recommended settings. Accept license agreements and let Android Studio download all the required assets.

+

+Before you proceed to coding, here are some tips that might come in handy:

+

+{{% notice Tip %}}

+1. To navigate to a file, double-tap the `Shift` key, type the file name, select the correct result with the `Up` and `Down` arrow keys, then press `Enter`.

+

+2. Every time you copy and paste a code block from this learning path, make sure you **import the correct classes** and resolve any errors. Refer to [this doc](https://www.jetbrains.com/help/idea/creating-and-optimizing-imports.html) to learn more.

+{{% /notice %}}

+

+## Create a new Android project

+

+1. Navigate to **File > New > New Project...**.

+

+2. Select **Empty Views Activity** in the **Phone and Tablet** gallery as shown below, then click **Next**.

+

+

+3. Enter a project name of your choice and keep the default configurations as shown below. Make sure that **Language** is set to **Kotlin**, and that **Build configuration language** is set to **Kotlin DSL**.

+

+

+### Introduce CameraX dependencies

+

+[CameraX](https://developer.android.com/media/camera/camerax) is a Jetpack library built to make camera app development easier. It provides a consistent, easy-to-use API that works across the vast majority of Android devices, with great backward compatibility.

+

+1. Wait for Android Studio to sync the project with Gradle files. This may take up to several minutes.

+

+2. Once the project is synced, navigate to `libs.versions.toml` in your project's `gradle` directory as shown below. This file serves as the version catalog for all dependencies used in the project.

+

+

+

+{{% notice Info %}}

+

+For more information on version catalogs, please refer to [this doc](https://developer.android.com/build/migrate-to-catalogs).

+

+{{% /notice %}}

+

+3. Append the following line to the end of the `[versions]` section. This defines the version of the CameraX libraries we will be using.

+```toml

+camerax = "1.4.0"

+```

+

+4. Append the following lines to the end of the `[libraries]` section. This declares the group, name and version of the CameraX dependencies.

+

+```toml

+camera-core = { group = "androidx.camera", name = "camera-core", version.ref = "camerax" }

+camera-camera2 = { group = "androidx.camera", name = "camera-camera2", version.ref = "camerax" }

+camera-lifecycle = { group = "androidx.camera", name = "camera-lifecycle", version.ref = "camerax" }

+camera-view = { group = "androidx.camera", name = "camera-view", version.ref = "camerax" }

+```

+

+5. Navigate to `build.gradle.kts` in your project's `app` directory, then insert the following lines into the `dependencies` block. This introduces the above dependencies into the `app` subproject.

+

+```kotlin

+ implementation(libs.camera.core)

+ implementation(libs.camera.camera2)

+ implementation(libs.camera.lifecycle)

+ implementation(libs.camera.view)

+```

+

+## Enable view binding

+

+1. Within the above `build.gradle.kts` file, append the following lines to the end of the `android` block to enable the view binding feature.

+

+```kotlin

+ buildFeatures {

+ viewBinding = true

+ }

+```

+

+2. A notification should appear, as shown below. Click **"Sync Now"** to sync your project.

+

+

+

+{{% notice Tip %}}

+

+You may also click the __"Sync Project with Gradle Files"__ button in the toolbar or press the corresponding shortcut to start a sync.

+

+

+{{% /notice %}}

+

+3. Navigate to the `MainActivity.kt` source file and make the following changes. This inflates the layout file into a view binding object and stores it in a member variable within the view controller for easier access later.

+
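+The changes for this step can be sketched roughly as follows. This is a minimal sketch, not the exact code from this learning path: it assumes the `com.example.holisticselfiedemo` package and the `ActivityMainBinding` class that view binding generates from `activity_main.xml`, and it omits any other code that the project template places in `onCreate()`.
+
+```kotlin
+package com.example.holisticselfiedemo
+
+import android.os.Bundle
+import androidx.appcompat.app.AppCompatActivity
+import com.example.holisticselfiedemo.databinding.ActivityMainBinding
+
+class MainActivity : AppCompatActivity() {
+
+    // View binding object generated from activity_main.xml (assumed layout name)
+    private lateinit var viewBinding: ActivityMainBinding
+
+    override fun onCreate(savedInstanceState: Bundle?) {
+        super.onCreate(savedInstanceState)
+
+        // Inflate the layout into the binding and use its root as the content view
+        viewBinding = ActivityMainBinding.inflate(layoutInflater)
+        setContentView(viewBinding.root)
+    }
+}
+```
+
+Keeping the binding in a member variable is what later lets methods such as `setupCamera()` reach views like `viewBinding.viewFinder` without calling `findViewById()`.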

+

+

+## Configure CameraX preview

+

+1. **Replace** the placeholder "Hello World!" `TextView` within the layout file `activity_main.xml` with a camera preview view:

+

+```xml

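+    <androidx.camera.view.PreviewView
+        android:id="@+id/view_finder"
+        android:layout_width="match_parent"
+        android:layout_height="match_parent" />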

+```

+

+

+2. Add the following member variables to `MainActivity.kt` to store camera-related objects:

+

+```kotlin

+ // Camera

+ private var camera: Camera? = null

+ private var cameraProvider: ProcessCameraProvider? = null

+ private var preview: Preview? = null

+```

+

+3. Add two new private methods named `setupCamera()` and `bindCameraUseCases()` within `MainActivity.kt`:

+

+```kotlin

+ private fun setupCamera() {

+ viewBinding.viewFinder.post {

+ cameraProvider?.unbindAll()

+

+ ProcessCameraProvider.getInstance(baseContext).let {

+ it.addListener(

+ {

+ cameraProvider = it.get()

+

+ bindCameraUseCases()

+ },

+ Dispatchers.Main.asExecutor()

+ )

+ }

+ }

+ }

+

+ private fun bindCameraUseCases() {

+ // TODO: TO BE IMPLEMENTED

+ }

+```

+

+4. Implement the above `bindCameraUseCases()` method:

+

+```kotlin

+private fun bindCameraUseCases() {

+ val cameraProvider = cameraProvider

+ ?: throw IllegalStateException("Camera initialization failed.")

+

+ val cameraSelector =

+ CameraSelector.Builder().requireLensFacing(CameraSelector.LENS_FACING_FRONT).build()

+

+ // Only using the 4:3 ratio because this is the closest to MediaPipe models

+ val resolutionSelector =

+ ResolutionSelector.Builder()

+ .setAspectRatioStrategy(AspectRatioStrategy.RATIO_4_3_FALLBACK_AUTO_STRATEGY)

+ .build()

+ val targetRotation = viewBinding.viewFinder.display.rotation

+

+ // Preview usecase.

+ preview = Preview.Builder()

+ .setResolutionSelector(resolutionSelector)

+ .setTargetRotation(targetRotation)

+ .build()

+

+ // Must unbind the use-cases before rebinding them

+ cameraProvider.unbindAll()

+

+ try {

+ // A variable number of use-cases can be passed here -

+ // camera provides access to CameraControl & CameraInfo

+ camera = cameraProvider.bindToLifecycle(

+ this, cameraSelector, preview,

+ )

+

+ // Attach the viewfinder's surface provider to preview use case

+ preview?.surfaceProvider = viewBinding.viewFinder.surfaceProvider

+ } catch (exc: Exception) {

+ Log.e(TAG, "Use case binding failed", exc)

+ }

+ }

+```

+

+5. Add a [companion object](https://kotlinlang.org/docs/object-declarations.html#companion-objects) to `MainActivity.kt` and declare a `TAG` constant value for `Log` calls to work correctly. This companion object is a handy place to define all the constants and shared values accessible across the entire class.

+

+```kotlin

+ companion object {

+ private const val TAG = "MainActivity"

+ }

+```

+

+In the next chapter, we will build and run the app to make sure the camera works well.

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/3-camera-permission.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/3-camera-permission.md

new file mode 100644

index 0000000000..1214369767

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/3-camera-permission.md

@@ -0,0 +1,118 @@

+---

+title: Handle camera permission

+weight: 3

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+## Run the app on your device

+

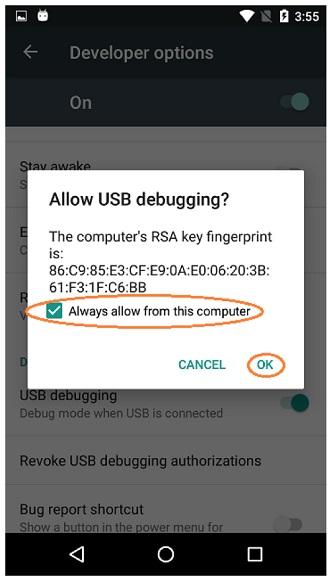

+1. Connect your Android device to your computer via a USB **data** cable. If this is your first time running and debugging Android apps, follow [this guide](https://developer.android.com/studio/run/device#setting-up) and double check this checklist:

+

+ 1. You have enabled **USB debugging** on your Android device following [this doc](https://developer.android.com/studio/debug/dev-options#Enable-debugging).

+

+ 2. You have confirmed by tapping "OK" on your Android device when an **"Allow USB debugging"** dialog pops up, and checked "Always allow from this computer".

+

+

+

+

+2. Make sure your device model name and SDK version correctly show up on the top right toolbar. Click the **"Run"** button to build and run, as described [here](https://developer.android.com/studio/run).

+

+3. After a while, you should see a success notification in Android Studio and the app showing up on your Android device.

+

+4. However, the app shows only a black screen and prints error messages in your [Logcat](https://developer.android.com/tools/logcat) that look like this:

+

+```

+2024-11-20 11:15:00.398 18782-18818 Camera2CameraImpl com.example.holisticselfiedemo E Camera reopening attempted for 10000ms without success.

+2024-11-20 11:30:13.560 667-707 BufferQueueProducer pid-667 E [SurfaceView - com.example.holisticselfiedemo/com.example.holisticselfiedemo.MainActivity#0](id:29b00000283,api:4,p:2657,c:667) queueBuffer: BufferQueue has been abandoned

+2024-11-20 11:36:13.100 20487-20499 isticselfiedem com.example.holisticselfiedemo E Failed to read message from agent control socket! Retrying: Bad file descriptor

+2024-11-20 11:43:03.408 2709-3807 PackageManager pid-2709 E Permission android.permission.CAMERA isn't requested by package com.example.holisticselfiedemo

+```

+

+5. Don't worry. This is expected behavior: we haven't yet configured this app's [permissions](https://developer.android.com/guide/topics/permissions/overview), so Android restricts the app's access to camera features for privacy reasons.

+

+## Request camera permission at runtime

+

+1. Navigate to `AndroidManifest.xml` in your `app` subproject's `src/main` path. Declare the camera hardware feature and permission by inserting the following lines into the `<manifest>` element. Make sure they are **outside** and **above** the `<application>` element.

+

+```xml

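+    <uses-feature android:name="android.hardware.camera" />
+    <uses-permission android:name="android.permission.CAMERA" />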

+```

+

+2. Navigate to `strings.xml` in your `app` subproject's `src/main/res/values` path. Insert the following lines of text resources, which will be used later.

+

+```xml

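+    <string name="permission_request_camera_message">Camera permission is required to recognize face and hands</string>
+    <string name="permission_request_camera_rationale">To grant Camera permission to this app, please go to system settings</string>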

+```

+

+3. Navigate to `MainActivity.kt` and add the following permission-related values to the companion object:

+

+```kotlin

+ // Permissions

+ private val PERMISSIONS_REQUIRED = arrayOf(Manifest.permission.CAMERA)

+ private const val REQUEST_CODE_CAMERA_PERMISSION = 233

+```

+

+4. Add a new method named `hasPermissions()` to check at runtime whether camera permission has been granted:

+

+```kotlin

+ private fun hasPermissions(context: Context) = PERMISSIONS_REQUIRED.all {

+ ContextCompat.checkSelfPermission(context, it) == PackageManager.PERMISSION_GRANTED

+ }

+```

+

+5. Wrap the `setupCamera()` call in `onCreate()` with a condition check that requests camera permission at runtime if it has not been granted yet.

+

+```kotlin

+ if (!hasPermissions(baseContext)) {

+ requestPermissions(

+ arrayOf(Manifest.permission.CAMERA),

+ REQUEST_CODE_CAMERA_PERMISSION

+ )

+ } else {

+ setupCamera()

+ }

+```

+

+6. Override the `onRequestPermissionsResult` method to handle permission request results:

+

+```kotlin

+ override fun onRequestPermissionsResult(

+ requestCode: Int,

+ permissions: Array<out String>,

+ grantResults: IntArray

+ ) {

+ when (requestCode) {

+ REQUEST_CODE_CAMERA_PERMISSION -> {

+ if (PackageManager.PERMISSION_GRANTED == grantResults.getOrNull(0)) {

+ setupCamera()

+ } else {

+ val messageResId =

+ if (shouldShowRequestPermissionRationale(Manifest.permission.CAMERA))

+ R.string.permission_request_camera_rationale

+ else

+ R.string.permission_request_camera_message

+ Toast.makeText(baseContext, getString(messageResId), Toast.LENGTH_LONG).show()

+ }

+ }

+ else -> super.onRequestPermissionsResult(requestCode, permissions, grantResults)

+ }

+ }

+```

+

+## Verify camera permission

+

+1. Rebuild and run the app. A dialog should now pop up requesting camera permission.

+

+2. Tap `Allow` or `While using the app` (depending on your Android OS version). You should then see your own face in the camera preview. Good job!

+

+{{% notice Tip %}}

+Sometimes you might need to restart the app to observe the permission change take effect.

+{{% /notice %}}

+

+In the next chapter, we will introduce MediaPipe vision solutions.

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/4-introduce-mediapipe.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/4-introduce-mediapipe.md

new file mode 100644

index 0000000000..0c743ef948

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/4-introduce-mediapipe.md

@@ -0,0 +1,434 @@

+---

+title: Integrate MediaPipe solutions

+weight: 4

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+[MediaPipe Solutions](https://ai.google.dev/edge/mediapipe/solutions/guide) provides a suite of libraries and tools for you to quickly apply artificial intelligence (AI) and machine learning (ML) techniques in your applications.

+

+MediaPipe Tasks provides the core programming interface of the MediaPipe Solutions suite, including a set of libraries for deploying innovative ML solutions onto devices with a minimum of code. It supports multiple platforms, including Android, Web / JavaScript, Python, etc.

+

+## Introduce MediaPipe dependencies

+

+1. Navigate to `libs.versions.toml` and append the following line to the end of the `[versions]` section. This defines the version of the MediaPipe library we will be using.

+

+```toml

+mediapipe-vision = "0.10.15"

+```

+

+{{% notice Note %}}

+Use this exact version. Newer versions are not recommended for this learning path because of bugs and unexpected behavior.

+{{% /notice %}}

+

+2. Append the following line to the end of the `[libraries]` section. This declares MediaPipe's vision dependency.

+

+```toml

+mediapipe-vision = { group = "com.google.mediapipe", name = "tasks-vision", version.ref = "mediapipe-vision" }

+```

+

+3. Navigate to `build.gradle.kts` in your project's `app` directory, then insert the following line into the `dependencies` block, ideally after the existing `implementation` lines and before the `testImplementation` lines.

+

+```kotlin

+implementation(libs.mediapipe.vision)

+```

+

+## Prepare model asset bundles

+

+In this app, we will be using MediaPipe's [Face Landmark Detection](https://ai.google.dev/edge/mediapipe/solutions/vision/face_landmarker) and [Gesture Recognizer](https://ai.google.dev/edge/mediapipe/solutions/vision/gesture_recognizer) solutions, which require their model asset bundle files to initialize.

+

+Choose one of the two options below that aligns best with your learning needs.

+

+### Basic approach: manual downloading

+

+Simply download the following two files, then move them into the default asset directory: `app/src/main/assets`.

+

+```

+https://storage.googleapis.com/mediapipe-models/face_landmarker/face_landmarker/float16/1/face_landmarker.task

+

+https://storage.googleapis.com/mediapipe-models/gesture_recognizer/gesture_recognizer/float16/1/gesture_recognizer.task

+```

+

+{{% notice Tip %}}

+You might need to create the `assets` directory if it does not exist.

+{{% /notice %}}

+

+### Advanced approach: configure prebuild download tasks

+

+Gradle doesn't come with a convenient [Task](https://docs.gradle.org/current/userguide/tutorial_using_tasks.html) type to manage downloads, so we will introduce the [gradle-download-task](https://github.com/michel-kraemer/gradle-download-task) plugin as a dependency.

+

+1. Navigate to `libs.versions.toml` again. Append `download = "5.6.0"` to the `[versions]` section, and `de-undercouch-download = { id = "de.undercouch.download", version.ref = "download" }` to the `[plugins]` section.

+

+2. Navigate to `build.gradle.kts` in your project's `app` directory again and append `alias(libs.plugins.de.undercouch.download)` to the `plugins` block. This enables the aforementioned _Download_ task plugin in this `app` subproject.

+

+3. Insert the following lines between the `plugins` block and the `android` block to define the constant values: the asset directory path and the URLs for both models.
+
+```kotlin
+val assetDir = "$projectDir/src/main/assets"
+val gestureTaskUrl = "https://storage.googleapis.com/mediapipe-models/gesture_recognizer/gesture_recognizer/float16/1/gesture_recognizer.task"
+val faceTaskUrl = "https://storage.googleapis.com/mediapipe-models/face_landmarker/face_landmarker/float16/1/face_landmarker.task"
+```
+
+4. Insert `import de.undercouch.gradle.tasks.download.Download` at **the top of this file**, then append the following code to **the end of this file**. This hooks two _Download_ tasks to be executed before `preBuild`:

+

+```kotlin

+tasks.register<Download>("downloadGestureTaskAsset") {

+ src(gestureTaskUrl)

+ dest("$assetDir/gesture_recognizer.task")

+ overwrite(false)

+}

+

+tasks.register<Download>("downloadFaceTaskAsset") {

+ src(faceTaskUrl)

+ dest("$assetDir/face_landmarker.task")

+ overwrite(false)

+}

+

+tasks.named("preBuild") {

+ dependsOn("downloadGestureTaskAsset", "downloadFaceTaskAsset")

+}

+```

+

+## Encapsulate MediaPipe vision tasks in a helper

+

+1. Sync project again.

+

+{{% notice Tip %}}

+Refer to [this section](2-app-scaffolding.md#enable-view-binding) if you need help.

+{{% /notice %}}

+

+2. You should now see both model asset bundles in your `assets` directory, as shown below:

+

+

+

+3. You are now ready to import MediaPipe's Face Landmark Detection and Gesture Recognizer into the project. The code below is already implemented for you, based on [MediaPipe's sample code](https://github.com/google-ai-edge/mediapipe-samples/tree/main/examples). Create a new file named `HolisticRecognizerHelper.kt` in the same source directory as `MainActivity.kt`, then copy and paste the code below into it.

+

+```kotlin

+package com.example.holisticselfiedemo

+

+/*

+ * Copyright 2022 The TensorFlow Authors. All Rights Reserved.

+ *

+ * Licensed under the Apache License, Version 2.0 (the "License");

+ * you may not use this file except in compliance with the License.

+ * You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+import android.content.Context

+import android.graphics.Bitmap

+import android.graphics.Matrix

+import android.os.SystemClock

+import android.util.Log

+import androidx.annotation.VisibleForTesting

+import androidx.camera.core.ImageProxy

+import com.google.mediapipe.framework.image.BitmapImageBuilder

+import com.google.mediapipe.framework.image.MPImage

+import com.google.mediapipe.tasks.core.BaseOptions

+import com.google.mediapipe.tasks.core.Delegate

+import com.google.mediapipe.tasks.vision.core.RunningMode

+import com.google.mediapipe.tasks.vision.facelandmarker.FaceLandmarker

+import com.google.mediapipe.tasks.vision.facelandmarker.FaceLandmarkerResult

+import com.google.mediapipe.tasks.vision.gesturerecognizer.GestureRecognizer

+import com.google.mediapipe.tasks.vision.gesturerecognizer.GestureRecognizerResult

+

+class HolisticRecognizerHelper(

+ private var currentDelegate: Int = DELEGATE_GPU,

+ private var runningMode: RunningMode = RunningMode.LIVE_STREAM

+) {

+ var listener: Listener? = null

+

+ private var faceLandmarker: FaceLandmarker? = null

+ private var gestureRecognizer: GestureRecognizer? = null

+

+ fun setup(context: Context) {

+ setupFaceLandmarker(context)

+ setupGestureRecognizer(context)

+ }

+

+ fun shutdown() {

+ faceLandmarker?.close()

+ faceLandmarker = null

+ gestureRecognizer?.close()

+ gestureRecognizer = null

+ }

+

+ // Return running status of the recognizer helper

+ val isClosed: Boolean

+ get() = gestureRecognizer == null && faceLandmarker == null

+

+ // Initialize the Face landmarker using current settings on the

+ // thread that is using it. CPU can be used with Landmarker

+ // that are created on the main thread and used on a background thread, but

+ // the GPU delegate needs to be used on the thread that initialized the Landmarker

+ private fun setupFaceLandmarker(context: Context) {

+ // Set general face landmarker options

+ val baseOptionBuilder = BaseOptions.builder()

+

+ // Use the specified hardware for running the model. Default to CPU

+ when (currentDelegate) {

+ DELEGATE_CPU -> {

+ baseOptionBuilder.setDelegate(Delegate.CPU)

+ }

+

+ DELEGATE_GPU -> {

+ baseOptionBuilder.setDelegate(Delegate.GPU)

+ }

+ }

+

+ baseOptionBuilder.setModelAssetPath(MP_FACE_LANDMARKER_TASK)

+

+ try {

+ val baseOptions = baseOptionBuilder.build()

+ // Create an option builder with base options and specific

+ // options used only for Face Landmarker.

+ val optionsBuilder =

+ FaceLandmarker.FaceLandmarkerOptions.builder()

+ .setBaseOptions(baseOptions)

+ .setMinFaceDetectionConfidence(DEFAULT_FACE_DETECTION_CONFIDENCE)

+ .setMinTrackingConfidence(DEFAULT_FACE_TRACKING_CONFIDENCE)

+ .setMinFacePresenceConfidence(DEFAULT_FACE_PRESENCE_CONFIDENCE)

+ .setNumFaces(FACES_COUNT)

+ .setOutputFaceBlendshapes(true)

+ .setRunningMode(runningMode)

+

+ // The ResultListener and ErrorListener are only used in LIVE_STREAM mode.

+ if (runningMode == RunningMode.LIVE_STREAM) {

+ optionsBuilder

+ .setResultListener(this::returnFaceLivestreamResult)

+ .setErrorListener(this::returnLivestreamError)

+ }

+

+ val options = optionsBuilder.build()

+ faceLandmarker =

+ FaceLandmarker.createFromOptions(context, options)

+ } catch (e: IllegalStateException) {

+ listener?.onFaceLandmarkerError(

+ "Face Landmarker failed to initialize. See error logs for " +

+ "details"

+ )

+ Log.e(

+ TAG, "MediaPipe failed to load the task with error: " + e

+ .message

+ )

+ } catch (e: RuntimeException) {

+ // This occurs if the model being used does not support GPU

+ listener?.onFaceLandmarkerError(

+ "Face Landmarker failed to initialize. See error logs for " +

+ "details", GPU_ERROR

+ )

+ Log.e(

+ TAG,

+ "Face Landmarker failed to load model with error: " + e.message

+ )

+ }

+ }

+

+ // Initialize the gesture recognizer using current settings on the

+ // thread that is using it. CPU can be used with recognizers

+ // that are created on the main thread and used on a background thread, but

+ // the GPU delegate needs to be used on the thread that initialized the recognizer

+ private fun setupGestureRecognizer(context: Context) {

+ // Set general recognition options, including number of used threads

+ val baseOptionBuilder = BaseOptions.builder()

+

+ // Use the specified hardware for running the model. Default to CPU

+ when (currentDelegate) {

+ DELEGATE_CPU -> {

+ baseOptionBuilder.setDelegate(Delegate.CPU)

+ }

+

+ DELEGATE_GPU -> {

+ baseOptionBuilder.setDelegate(Delegate.GPU)

+ }

+ }

+

+ baseOptionBuilder.setModelAssetPath(MP_RECOGNIZER_TASK)

+

+ try {

+ val baseOptions = baseOptionBuilder.build()

+ val optionsBuilder =

+ GestureRecognizer.GestureRecognizerOptions.builder()

+ .setBaseOptions(baseOptions)

+ .setMinHandDetectionConfidence(DEFAULT_HAND_DETECTION_CONFIDENCE)

+ .setMinTrackingConfidence(DEFAULT_HAND_TRACKING_CONFIDENCE)

+ .setMinHandPresenceConfidence(DEFAULT_HAND_PRESENCE_CONFIDENCE)

+ .setNumHands(HANDS_COUNT)

+ .setRunningMode(runningMode)

+

+ if (runningMode == RunningMode.LIVE_STREAM) {

+ optionsBuilder

+ .setResultListener(this::returnGestureLivestreamResult)

+ .setErrorListener(this::returnLivestreamError)

+ }

+ val options = optionsBuilder.build()

+ gestureRecognizer =

+ GestureRecognizer.createFromOptions(context, options)

+ } catch (e: IllegalStateException) {

+ listener?.onGestureError(

+ "Gesture recognizer failed to initialize. See error logs for " + "details"

+ )

+ Log.e(

+ TAG,

+ "MP Task Vision failed to load the task with error: " + e.message

+ )

+ } catch (e: RuntimeException) {

+ listener?.onGestureError(

+ "Gesture recognizer failed to initialize. See error logs for " + "details",

+ GPU_ERROR

+ )

+ Log.e(

+ TAG,

+ "MP Task Vision failed to load the task with error: " + e.message

+ )

+ }

+ }

+

+ // Convert the ImageProxy to MP Image and feed it to GestureRecognizer and FaceLandmarker.

+ fun recognizeLiveStream(

+ imageProxy: ImageProxy,

+ ) {

+ val frameTime = SystemClock.uptimeMillis()

+

+ // Copy out RGB bits from the frame to a bitmap buffer

+ val bitmapBuffer = Bitmap.createBitmap(

+ imageProxy.width, imageProxy.height, Bitmap.Config.ARGB_8888

+ )

+ imageProxy.use { bitmapBuffer.copyPixelsFromBuffer(imageProxy.planes[0].buffer) }

+ imageProxy.close()

+

+ val matrix = Matrix().apply {

+ // Rotate the frame received from the camera to be in the same direction as it'll be shown

+ postRotate(imageProxy.imageInfo.rotationDegrees.toFloat())

+

+ // flip image since we only support front camera

+ postScale(-1f, 1f, imageProxy.width.toFloat(), imageProxy.height.toFloat())

+ }

+

+ // Rotate bitmap to match what our model expects

+ val rotatedBitmap = Bitmap.createBitmap(

+ bitmapBuffer,

+ 0,

+ 0,

+ bitmapBuffer.width,

+ bitmapBuffer.height,

+ matrix,

+ true

+ )

+

+ // Convert the input Bitmap object to an MPImage object to run inference

+ val mpImage = BitmapImageBuilder(rotatedBitmap).build()

+

+ recognizeAsync(mpImage, frameTime)

+ }

+

+ // Run hand gesture recognition and face landmarker using MediaPipe Gesture Recognition API

+ @VisibleForTesting

+ fun recognizeAsync(mpImage: MPImage, frameTime: Long) {

+ // As we're using running mode LIVE_STREAM, the results will be returned in the
+ // returnFaceLivestreamResult and returnGestureLivestreamResult functions

+ faceLandmarker?.detectAsync(mpImage, frameTime)

+ gestureRecognizer?.recognizeAsync(mpImage, frameTime)

+ }

+

+ // Return the landmark result to this helper's caller

+ private fun returnFaceLivestreamResult(

+ result: FaceLandmarkerResult,

+ input: MPImage

+ ) {

+ val finishTimeMs = SystemClock.uptimeMillis()

+ val inferenceTime = finishTimeMs - result.timestampMs()

+

+ listener?.onFaceLandmarkerResults(

+ FaceResultBundle(

+ result,

+ inferenceTime,

+ input.height,

+ input.width

+ )

+ )

+ }

+

+ // Return the recognition result to the helper's caller

+ private fun returnGestureLivestreamResult(

+ result: GestureRecognizerResult, input: MPImage

+ ) {

+ val finishTimeMs = SystemClock.uptimeMillis()

+ val inferenceTime = finishTimeMs - result.timestampMs()

+

+ listener?.onGestureResults(

+ GestureResultBundle(

+ listOf(result), inferenceTime, input.height, input.width

+ )

+ )

+ }

+

+ // Return errors thrown during recognition to this Helper's caller

+ private fun returnLivestreamError(error: RuntimeException) {

+ listener?.onGestureError(

+ error.message ?: "An unknown error has occurred"

+ )

+ }

+

+ companion object {

+ val TAG = "HolisticRecognizerHelper ${this.hashCode()}"

+

+ const val DELEGATE_CPU = 0

+ const val DELEGATE_GPU = 1

+

+ private const val MP_FACE_LANDMARKER_TASK = "face_landmarker.task"

+ const val FACES_COUNT = 2

+ const val DEFAULT_FACE_DETECTION_CONFIDENCE = 0.5F

+ const val DEFAULT_FACE_TRACKING_CONFIDENCE = 0.5F

+ const val DEFAULT_FACE_PRESENCE_CONFIDENCE = 0.5F

+ const val DEFAULT_FACE_SHAPE_SCORE_THRESHOLD = 0.2F

+

+ private const val MP_RECOGNIZER_TASK = "gesture_recognizer.task"

+ const val HANDS_COUNT = FACES_COUNT * 2

+ const val DEFAULT_HAND_DETECTION_CONFIDENCE = 0.5F

+ const val DEFAULT_HAND_TRACKING_CONFIDENCE = 0.5F

+ const val DEFAULT_HAND_PRESENCE_CONFIDENCE = 0.5F

+

+

+ const val OTHER_ERROR = 0

+ const val GPU_ERROR = 1

+ }

+

+ interface Listener {

+ fun onFaceLandmarkerResults(resultBundle: FaceResultBundle)

+ fun onFaceLandmarkerError(error: String, errorCode: Int = OTHER_ERROR)

+

+ fun onGestureResults(resultBundle: GestureResultBundle)

+ fun onGestureError(error: String, errorCode: Int = OTHER_ERROR)

+ }

+}

+

+data class FaceResultBundle(

+ val result: FaceLandmarkerResult,

+ val inferenceTime: Long,

+ val inputImageHeight: Int,

+ val inputImageWidth: Int,

+)

+

+data class GestureResultBundle(

+ val results: List<GestureRecognizerResult>,

+ val inferenceTime: Long,

+ val inputImageHeight: Int,

+ val inputImageWidth: Int,

+)

+```

+

+{{% notice Info %}}

+In this learning path, the helper is configured with `FACES_COUNT = 2`, so the MediaPipe vision solutions recognize up to two people in the camera, each with at most two hands (`HANDS_COUNT = FACES_COUNT * 2`).
+
+If you'd like to experiment with a different number of people, simply change the `FACES_COUNT` constant to your desired value.

+{{% /notice %}}

+

+In the next chapter, we will connect the dots from this helper class to the UI layer via a ViewModel.

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/5-view-model-setup.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/5-view-model-setup.md

new file mode 100644

index 0000000000..b3b070f106

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/5-view-model-setup.md

@@ -0,0 +1,169 @@

+---

+title: Manage UI state with ViewModel

+weight: 5

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+The `ViewModel` class is a [business logic or screen level state holder](https://developer.android.com/topic/architecture/ui-layer/stateholders). It exposes state to the UI and encapsulates related business logic. Its principal advantage is that it caches state and persists it through configuration changes. This means that your UI doesn’t have to fetch data again when navigating between activities, or following configuration changes, such as when rotating the screen.

+

+## Introduce Jetpack Lifecycle dependency

+

+1. Navigate to `libs.versions.toml` and append the following line to the end of the `[versions]` section. This defines the version of the Jetpack Lifecycle libraries we will be using.

+

+```toml

+lifecycle = "2.8.7"

+```

+

+2. Insert the following line into the `[libraries]` section, ideally between `androidx-appcompat` and `material`. This declares the Jetpack Lifecycle ViewModel Kotlin extension.

+

+```toml

+androidx-lifecycle-viewmodel = { group = "androidx.lifecycle", name = "lifecycle-viewmodel-ktx", version.ref = "lifecycle" }

+```

+

+3. Navigate to `build.gradle.kts` in your project's `app` directory, then insert the following line into the `dependencies` block, ideally between `implementation(libs.androidx.constraintlayout)` and `implementation(libs.camera.core)`.

+

+```kotlin

+implementation(libs.androidx.lifecycle.viewmodel)

+```

+

+## Access the helper via a ViewModel

+

+1. Create a new file named `MainViewModel.kt` in the same directory as `MainActivity.kt` and the other source files, then copy the code below into it.

+

+```kotlin

+package com.example.holisticselfiedemo

+

+import android.content.Context

+import android.util.Log

+import androidx.camera.core.ImageProxy

+import androidx.lifecycle.ViewModel

+import androidx.lifecycle.viewModelScope

+import kotlinx.coroutines.launch

+

+class MainViewModel : ViewModel(), HolisticRecognizerHelper.Listener {

+

+ private val holisticRecognizerHelper = HolisticRecognizerHelper()

+

+ fun setupHelper(context: Context) {

+ viewModelScope.launch {

+ holisticRecognizerHelper.apply {

+ listener = this@MainViewModel

+ setup(context)

+ }

+ }

+ }

+

+ fun shutdownHelper() {

+ viewModelScope.launch {

+ holisticRecognizerHelper.apply {

+ listener = null

+ shutdown()

+ }

+ }

+ }

+

+ fun recognizeLiveStream(imageProxy: ImageProxy) {

+ holisticRecognizerHelper.recognizeLiveStream(

+ imageProxy = imageProxy,

+ )

+ }

+

+ override fun onFaceLandmarkerResults(resultBundle: FaceResultBundle) {

+ Log.i(TAG, "Face result: $resultBundle")

+ }

+

+ override fun onFaceLandmarkerError(error: String, errorCode: Int) {

+ Log.e(TAG, "Face landmarker error $errorCode: $error")

+ }

+

+ override fun onGestureResults(resultBundle: GestureResultBundle) {

+ Log.i(TAG, "Gesture result: $resultBundle")

+ }

+

+ override fun onGestureError(error: String, errorCode: Int) {

+ Log.e(TAG, "Gesture recognizer error $errorCode: $error")

+ }

+

+ companion object {

+ private const val TAG = "MainViewModel"

+ }

+}

+```

+

+{{% notice Info %}}

+You might have noticed that success and failure messages are logged with different APIs. For more information on log level guidelines, please refer to [this doc](https://source.android.com/docs/core/tests/debug/understanding-logging#log-level-guidelines).

+{{% /notice %}}

+

+2. Bind `MainViewModel` to `MainActivity` by inserting the following line into `MainActivity.kt`, above the `onCreate` method. Don't forget to import `viewModels` [extension function](https://kotlinlang.org/docs/extensions.html#extension-functions) via `import androidx.activity.viewModels`.

+

+```kotlin

+ private val viewModel: MainViewModel by viewModels()

+```

+

+3. Set up and shut down the helper's internal MediaPipe tasks when the app becomes [active and inactive](https://developer.android.com/guide/components/activities/activity-lifecycle#alc).

+

+```kotlin

+ private var isHelperReady = false

+

+ override fun onResume() {

+ super.onResume()

+ viewModel.setupHelper(baseContext)

+ isHelperReady = true

+ }

+

+ override fun onPause() {

+ super.onPause()

+ isHelperReady = false

+ viewModel.shutdownHelper()

+ }

+```

+

+## Feed camera frames into livestream recognition

+

+1. Add a new member variable named `imageAnalysis` to `MainActivity`, along with other camera related member variables:

+

+```kotlin

+private var imageAnalysis: ImageAnalysis? = null

+```

+

+2. Within `MainActivity`'s `bindCameraUseCases()` method, insert the following code after building `preview`, above `cameraProvider.unbindAll()`:

+

+```kotlin

+ // ImageAnalysis. Using RGBA 8888 to match how MediaPipe models work

+ imageAnalysis =

+ ImageAnalysis.Builder()

+ .setResolutionSelector(resolutionSelector)

+ .setTargetRotation(targetRotation)

+ .setBackpressureStrategy(ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST)

+ .setOutputImageFormat(ImageAnalysis.OUTPUT_IMAGE_FORMAT_RGBA_8888)

+ .build()

+ // The analyzer can then be assigned to the instance

+ .also {

+ it.setAnalyzer(

+ // Forcing a serial executor without parallelism

+ // to avoid packets sent to MediaPipe out-of-order

+ Dispatchers.Default.limitedParallelism(1).asExecutor()

+ ) { image ->

+ if (isHelperReady)

+ viewModel.recognizeLiveStream(image)

+ }

+ }

+```

+

+{{% notice Note %}}

+

+The `isHelperReady` flag is a lightweight mechanism that prevents camera frames from being sent to the helper once we have started shutting it down.

+

+{{% /notice %}}

+

+3. Append `imageAnalysis` along with other use cases to `camera`:

+

+```kotlin

+ camera = cameraProvider.bindToLifecycle(

+ this, cameraSelector, preview, imageAnalysis

+ )

+```

+

+4. Build and run the app again. You should now see `Face result: ...` and `Gesture result: ...` info-level messages in your [Logcat](https://developer.android.com/tools/logcat), which confirms that the MediaPipe tasks are functioning properly. Good job!

+

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/6-flow-data-to-view-1.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/6-flow-data-to-view-1.md

new file mode 100644

index 0000000000..76029594eb

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/6-flow-data-to-view-1.md

@@ -0,0 +1,369 @@

+---

+title: Flow data to view controller - Events

+weight: 6

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+[SharedFlow](https://developer.android.com/kotlin/flow/stateflow-and-sharedflow#sharedflow) and [StateFlow](https://developer.android.com/kotlin/flow/stateflow-and-sharedflow#stateflow) are [Kotlin Flow](https://developer.android.com/kotlin/flow) APIs that enable Flows to optimally emit state updates and emit values to multiple consumers.

+

+In this learning path, you will have the opportunity to experiment with both `SharedFlow` and `StateFlow`. This chapter will focus on SharedFlow while the next chapter will focus on StateFlow.

+

+`SharedFlow` is a general-purpose, hot flow that can emit values to multiple subscribers. It is highly configurable, allowing you to set the replay cache size, buffer capacity, etc.

+

+## Expose UI events in SharedFlow

+

+1. Navigate to `MainViewModel` and define a [sealed class](https://kotlinlang.org/docs/sealed-classes.html#declare-a-sealed-class-or-interface) named `UiEvent`, with two [direct subclasses](https://kotlinlang.org/docs/sealed-classes.html#inheritance) named `Face` and `Gesture`.

+

+```kotlin

+ sealed class UiEvent {

+ data class Face(

+ val face: FaceResultBundle

+ ) : UiEvent()

+

+ data class Gesture(

+ val gestures: GestureResultBundle,

+ ) : UiEvent()

+ }

+```

+

+2. Expose a `SharedFlow` named `uiEvents`:

+

+```kotlin

+ private val _uiEvents = MutableSharedFlow<UiEvent>(1)

+ val uiEvents: SharedFlow<UiEvent> = _uiEvents

+```

+

+{{% notice Info %}}

+This `SharedFlow` is initialized with a replay size of `1`. This retains the most recent value and ensures that new subscribers don't miss the latest event.

+{{% /notice %}}

+

+3. Replace the logging with value emissions in the listener callbacks:

+

+```kotlin

+ override fun onFaceLandmarkerResults(resultBundle: FaceResultBundle) {

+ _uiEvents.tryEmit(UiEvent.Face(resultBundle))

+ }

+

+ override fun onGestureResults(resultBundle: GestureResultBundle) {

+ _uiEvents.tryEmit(UiEvent.Gesture(resultBundle))

+ }

+```
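+
+To make the replay behavior concrete, here is a minimal, self-contained sketch that runs outside Android. `DemoEvent` and `latestForLateSubscriber` are hypothetical stand-ins introduced only for this illustration; the sketch assumes `kotlinx-coroutines-core` is on the classpath.
+
+```kotlin
+import kotlinx.coroutines.flow.MutableSharedFlow
+import kotlinx.coroutines.flow.first
+import kotlinx.coroutines.runBlocking
+
+// Hypothetical stand-in for the UiEvent sealed class (not part of the app).
+sealed class DemoEvent {
+    data class Face(val id: Int) : DemoEvent()
+    data class Gesture(val id: Int) : DemoEvent()
+}
+
+// Emits two events before anyone subscribes, then subscribes late.
+fun latestForLateSubscriber(): DemoEvent = runBlocking {
+    val events = MutableSharedFlow<DemoEvent>(replay = 1)
+
+    // With no subscribers, tryEmit succeeds and only the most recent
+    // value is kept in the replay cache.
+    events.tryEmit(DemoEvent.Face(1))
+    events.tryEmit(DemoEvent.Gesture(2))
+
+    // A late subscriber receives the replayed value first.
+    events.first()
+}
+
+fun main() {
+    println(latestForLateSubscriber()) // Gesture(id=2)
+}
+```
+
+Note that `tryEmit` never suspends. With the default buffer overflow strategy it returns `false` instead of suspending when a slow subscriber has filled the buffer, so an occasional result may be dropped. That is usually acceptable for per-frame overlay updates.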

+

+

+## Visualize face and gesture results

+

+To visualize the results of Face Landmark Detection and Gesture Recognition tasks, we have prepared the following code for you based on [MediaPipe's samples](https://github.com/google-ai-edge/mediapipe-samples/tree/main/examples).

+

+1. Create a new file named `FaceLandmarkerOverlayView.kt` and fill in the content below:

+

+```kotlin

+/*

+ * Copyright 2023 The TensorFlow Authors. All Rights Reserved.

+ *

+ * Licensed under the Apache License, Version 2.0 (the "License");

+ * you may not use this file except in compliance with the License.

+ * You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package com.example.holisticselfiedemo

+

+import android.content.Context

+import android.graphics.Canvas

+import android.graphics.Color

+import android.graphics.Paint

+import android.util.AttributeSet

+import android.view.View

+import com.google.mediapipe.tasks.vision.core.RunningMode

+import com.google.mediapipe.tasks.vision.facelandmarker.FaceLandmarker

+import com.google.mediapipe.tasks.vision.facelandmarker.FaceLandmarkerResult

+import kotlin.math.max

+import kotlin.math.min

+

+class FaceLandmarkerOverlayView(context: Context?, attrs: AttributeSet?) :

+ View(context, attrs) {

+

+ private var results: FaceLandmarkerResult? = null

+ private var linePaint = Paint()

+ private var pointPaint = Paint()

+

+ private var scaleFactor: Float = 1f

+ private var imageWidth: Int = 1

+ private var imageHeight: Int = 1

+

+ init {

+ initPaints()

+ }

+

+ fun clear() {

+ results = null

+ linePaint.reset()

+ pointPaint.reset()

+ invalidate()

+ initPaints()

+ }

+

+ private fun initPaints() {

+ linePaint.color = Color.BLUE

+ linePaint.strokeWidth = LANDMARK_STROKE_WIDTH

+ linePaint.style = Paint.Style.STROKE

+

+ pointPaint.color = Color.YELLOW

+ pointPaint.strokeWidth = LANDMARK_STROKE_WIDTH

+ pointPaint.style = Paint.Style.FILL

+ }

+

+ override fun draw(canvas: Canvas) {

+ super.draw(canvas)

+ if (results == null || results!!.faceLandmarks().isEmpty()) {

+ clear()

+ return

+ }

+

+ results?.let { faceLandmarkerResult ->

+

+ for(landmark in faceLandmarkerResult.faceLandmarks()) {

+ for(normalizedLandmark in landmark) {

+ canvas.drawPoint(normalizedLandmark.x() * imageWidth * scaleFactor, normalizedLandmark.y() * imageHeight * scaleFactor, pointPaint)

+ }

+

+ FaceLandmarker.FACE_LANDMARKS_CONNECTORS.forEach {

+ canvas.drawLine(

+ landmark[it.start()].x() * imageWidth * scaleFactor,

+ landmark[it.start()].y() * imageHeight * scaleFactor,

+ landmark[it.end()].x() * imageWidth * scaleFactor,

+ landmark[it.end()].y() * imageHeight * scaleFactor,

+ linePaint)

+ }

+ }

+ }

+ }

+

+ fun setResults(

+ faceLandmarkerResults: FaceLandmarkerResult,

+ imageHeight: Int,

+ imageWidth: Int,

+ runningMode: RunningMode = RunningMode.IMAGE

+ ) {

+ results = faceLandmarkerResults

+

+ this.imageHeight = imageHeight

+ this.imageWidth = imageWidth

+

+ scaleFactor = when (runningMode) {

+ RunningMode.IMAGE,

+ RunningMode.VIDEO -> {

+ min(width * 1f / imageWidth, height * 1f / imageHeight)

+ }

+ RunningMode.LIVE_STREAM -> {

+ // PreviewView is in FILL_START mode. So we need to scale up the

+ // landmarks to match with the size that the captured images will be

+ // displayed.

+ max(width * 1f / imageWidth, height * 1f / imageHeight)

+ }

+ }

+ invalidate()

+ }

+

+ companion object {

+ private const val LANDMARK_STROKE_WIDTH = 8F

+ }

+}

+```

+

+

+2. Create a new file named `GestureOverlayView.kt` and fill in the content below:

+

+```kotlin

+/*

+ * Copyright 2022 The TensorFlow Authors. All Rights Reserved.

+ *

+ * Licensed under the Apache License, Version 2.0 (the "License");

+ * you may not use this file except in compliance with the License.

+ * You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package com.example.holisticselfiedemo

+

+import android.content.Context

+import android.graphics.Canvas

+import android.graphics.Color

+import android.graphics.Paint

+import android.util.AttributeSet

+import android.view.View

+import com.google.mediapipe.tasks.vision.core.RunningMode

+import com.google.mediapipe.tasks.vision.gesturerecognizer.GestureRecognizerResult

+import com.google.mediapipe.tasks.vision.handlandmarker.HandLandmarker

+import kotlin.math.max

+import kotlin.math.min

+

+class GestureOverlayView(context: Context?, attrs: AttributeSet?) :

+ View(context, attrs) {

+

+ private var results: GestureRecognizerResult? = null

+ private var linePaint = Paint()

+ private var pointPaint = Paint()

+

+ private var scaleFactor: Float = 1f

+ private var imageWidth: Int = 1

+ private var imageHeight: Int = 1

+

+ init {

+ initPaints()

+ }

+

+ fun clear() {

+ results = null

+ linePaint.reset()

+ pointPaint.reset()

+ invalidate()

+ initPaints()

+ }

+

+ private fun initPaints() {

+ linePaint.color = Color.BLUE

+ linePaint.strokeWidth = LANDMARK_STROKE_WIDTH

+ linePaint.style = Paint.Style.STROKE

+

+ pointPaint.color = Color.YELLOW

+ pointPaint.strokeWidth = LANDMARK_STROKE_WIDTH

+ pointPaint.style = Paint.Style.FILL

+ }

+

+ override fun draw(canvas: Canvas) {

+ super.draw(canvas)

+ results?.let { gestureRecognizerResult ->

+ for (landmark in gestureRecognizerResult.landmarks()) {

+ for (normalizedLandmark in landmark) {

+ canvas.drawPoint(

+ normalizedLandmark.x() * imageWidth * scaleFactor,

+ normalizedLandmark.y() * imageHeight * scaleFactor,

+ pointPaint)

+ }

+

+ HandLandmarker.HAND_CONNECTIONS.forEach {

+ canvas.drawLine(

+ landmark[it.start()].x() * imageWidth * scaleFactor,

+ landmark[it.start()].y() * imageHeight * scaleFactor,

+ landmark[it.end()].x() * imageWidth * scaleFactor,

+ landmark[it.end()].y() * imageHeight * scaleFactor,

+ linePaint)

+ }

+ }

+ }

+ }

+

+ fun setResults(

+ gestureRecognizerResult: GestureRecognizerResult,

+ imageHeight: Int,

+ imageWidth: Int,

+ runningMode: RunningMode = RunningMode.IMAGE

+ ) {

+ results = gestureRecognizerResult

+

+ this.imageHeight = imageHeight

+ this.imageWidth = imageWidth

+

+ scaleFactor = when (runningMode) {

+ RunningMode.IMAGE,

+ RunningMode.VIDEO -> {

+ min(width * 1f / imageWidth, height * 1f / imageHeight)

+ }

+ RunningMode.LIVE_STREAM -> {

+ // PreviewView is in FILL_START mode. So we need to scale up the

+ // landmarks to match with the size that the captured images will be

+ // displayed.

+ max(width * 1f / imageWidth, height * 1f / imageHeight)

+ }

+ }

+ invalidate()

+ }

+

+ companion object {

+ private const val LANDMARK_STROKE_WIDTH = 8F

+ }

+}

+```
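+
+Both overlay views pick their scale factor with `min` or `max` depending on the running mode. The sketch below isolates that arithmetic in plain Kotlin. The function names and the view and image sizes are assumptions chosen for illustration, not values taken from the app.
+
+```kotlin
+import kotlin.math.max
+import kotlin.math.min
+
+// Scale that fits the whole image inside the view (used for IMAGE and VIDEO).
+fun fitScale(viewW: Int, viewH: Int, imageW: Int, imageH: Int): Float =
+    min(viewW * 1f / imageW, viewH * 1f / imageH)
+
+// Scale that fills the whole view and crops the overflow (used for LIVE_STREAM).
+fun fillScale(viewW: Int, viewH: Int, imageW: Int, imageH: Int): Float =
+    max(viewW * 1f / imageW, viewH * 1f / imageH)
+
+// Maps a normalized landmark coordinate in [0, 1] to view pixels.
+fun toViewPx(normalized: Float, imageSize: Int, scale: Float): Float =
+    normalized * imageSize * scale
+
+fun main() {
+    // Assumed sizes: a 480x640 analysis frame drawn on a 1080x1920 view.
+    val fit = fitScale(1080, 1920, 480, 640)   // min(2.25, 3.0)
+    val fill = fillScale(1080, 1920, 480, 640) // max(2.25, 3.0)
+    println("fit=$fit fill=$fill")             // fit=2.25 fill=3.0
+    println(toViewPx(0.5f, 480, fill))         // 720.0
+}
+```
+
+With the fill scale, a landmark at the horizontal center of the frame lands at x = 720 px, which is outside the center of a 1080 px wide view. This is expected when the preview is anchored at the start and the overflow is cropped.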

+

+## Update UI in the view controller

+

+1. Add the above two overlay views to `activity_main.xml` layout file:

+

+```xml

+

+

+

+```

+

+2. Collect the new SharedFlow `uiEvents` in `MainActivity` by appending the code below to the end of `onCreate` method, **below** `setupCamera()` method call.

+

+```kotlin

+ lifecycleScope.launch {

+ repeatOnLifecycle(Lifecycle.State.RESUMED) {

+ launch {

+ viewModel.uiEvents.collect { uiEvent ->

+ when (uiEvent) {

+ is MainViewModel.UiEvent.Face -> drawFaces(uiEvent.face)

+ is MainViewModel.UiEvent.Gesture -> drawGestures(uiEvent.gestures)

+ }

+ }

+ }

+ }

+ }

+```

+

+3. Implement `drawFaces` and `drawGestures`:

+

+```kotlin

+ private fun drawFaces(resultBundle: FaceResultBundle) {

+ // Pass necessary information to OverlayView for drawing on the canvas

+ viewBinding.overlayFace.setResults(

+ resultBundle.result,

+ resultBundle.inputImageHeight,

+ resultBundle.inputImageWidth,

+ RunningMode.LIVE_STREAM

+ )

+ // Force a redraw

+ viewBinding.overlayFace.invalidate()

+ }

+```

+

+```kotlin

+ private fun drawGestures(resultBundle: GestureResultBundle) {

+ // Pass necessary information to OverlayView for drawing on the canvas

+ viewBinding.overlayGesture.setResults(

+ resultBundle.results.first(),

+ resultBundle.inputImageHeight,

+ resultBundle.inputImageWidth,

+ RunningMode.LIVE_STREAM

+ )

+ // Force a redraw

+ viewBinding.overlayGesture.invalidate()

+ }

+```

+

+4. Build and run the app again. Now you should be seeing face and gesture overlays on top of the camera preview as shown below. Good job!

+

+

+

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/7-flow-data-to-view-2.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/7-flow-data-to-view-2.md

new file mode 100644

index 0000000000..ca61e998b7

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/7-flow-data-to-view-2.md

@@ -0,0 +1,146 @@

+---

+title: Flow data to view controller - States

+weight: 7

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+`StateFlow` is a subtype of `SharedFlow` that behaves like a shared flow with a fixed replay cache of one value. It provides a stricter API, ensuring that:

+1. It always has an initial value.

+2. It emits only the latest state.

+3. Its replay cache cannot be configured (it is always `1`).

+

+Therefore, `StateFlow` is a specialized type of `SharedFlow` that represents a state holder, always maintaining the latest value. It is optimized for use cases where you need to observe and react to state changes.
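+
+The three guarantees can be observed in a few lines of plain Kotlin. This is a minimal sketch assuming `kotlinx-coroutines-core` is available; `currentAndReplay` and `mutableReady` are hypothetical names used only here.
+
+```kotlin
+import kotlinx.coroutines.flow.MutableStateFlow
+import kotlinx.coroutines.flow.StateFlow
+
+// Returns the current value and the replay cache after a few updates.
+fun currentAndReplay(): Pair<Boolean, List<Boolean>> {
+    // An initial value is mandatory.
+    val mutableReady = MutableStateFlow(false)
+    val ready: StateFlow<Boolean> = mutableReady
+
+    mutableReady.value = true
+    // Assigning a value equal to the current one is not re-emitted to collectors.
+    mutableReady.value = true
+
+    // The replay cache always holds exactly the current value.
+    return ready.value to ready.replayCache
+}
+
+fun main() {
+    println(currentAndReplay()) // (true, [true])
+}
+```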

+

+## Expose UI states in StateFlow

+

+1. Expose two `StateFlow`s named `faceOk` and `gestureOk` in `MainViewModel`, indicating whether the subject's face and gesture are ready for a selfie.

+

+```kotlin

+ private val _faceOk = MutableStateFlow(false)

+ val faceOk: StateFlow<Boolean> = _faceOk

+

+ private val _gestureOk = MutableStateFlow(false)

+ val gestureOk: StateFlow<Boolean> = _gestureOk

+```

+

+2. Append the following constant values to `MainViewModel`'s companion object. In this demo app, we are only focusing on smiling faces and thumb-up gestures.

+

+```kotlin

+ private const val FACE_CATEGORY_MOUTH_SMILE = "mouthSmile"

+ private const val GESTURE_CATEGORY_THUMB_UP = "Thumb_Up"

+```

+

+3. Update `onFaceLandmarkerResults` and `onGestureResults` to check whether their results meet the conditions above.

+

+```kotlin

+ override fun onFaceLandmarkerResults(resultBundle: FaceResultBundle) {

+ val faceOk = resultBundle.result.faceBlendshapes().getOrNull()?.let { faceBlendShapes ->

+ faceBlendShapes.take(HolisticRecognizerHelper.FACES_COUNT).all { shapes ->

+ shapes.filter {

+ it.categoryName().contains(FACE_CATEGORY_MOUTH_SMILE)

+ }.all {

+ it.score() > HolisticRecognizerHelper.DEFAULT_FACE_SHAPE_SCORE_THRESHOLD

+ }

+ }

+ } ?: false

+

+ _faceOk.tryEmit(faceOk)

+ _uiEvents.tryEmit(UiEvent.Face(resultBundle))

+ }

+```

+

+```kotlin

+ override fun onGestureResults(resultBundle: GestureResultBundle) {

+ val gestureOk = resultBundle.results.first().gestures()

+ .take(HolisticRecognizerHelper.HANDS_COUNT)

+ .let { gestures ->

+ gestures.isNotEmpty() && gestures

+ .mapNotNull { it.firstOrNull() }

+ .all { GESTURE_CATEGORY_THUMB_UP == it.categoryName() }

+ }

+

+ _gestureOk.tryEmit(gestureOk)

+ _uiEvents.tryEmit(UiEvent.Gesture(resultBundle))

+ }

+```
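+
+The `gestures.isNotEmpty()` guard matters because Kotlin's `all` returns `true` for an empty collection. The sketch below reproduces the gesture check with plain lists. `DemoCategory` and `allThumbsUp` are hypothetical stand-ins, and the category names are used for illustration only.
+
+```kotlin
+// Hypothetical stand-in for a MediaPipe category: a name plus a confidence score.
+data class DemoCategory(val name: String, val score: Float)
+
+// Mirrors the gesture check: at least one hand must be present, and the
+// top category of every detected hand must be a thumb-up.
+fun allThumbsUp(hands: List<List<DemoCategory>>): Boolean =
+    hands.isNotEmpty() && hands
+        .mapNotNull { it.firstOrNull() }
+        .all { it.name == "Thumb_Up" }
+
+fun main() {
+    val thumb = DemoCategory("Thumb_Up", 0.9f)
+    val fist = DemoCategory("Closed_Fist", 0.8f)
+
+    // `all` is vacuously true on an empty list, hence the explicit guard.
+    println(emptyList<DemoCategory>().all { it.name == "Thumb_Up" }) // true
+    println(allThumbsUp(emptyList()))                                // false
+    println(allThumbsUp(listOf(listOf(thumb))))                      // true
+    println(allThumbsUp(listOf(listOf(thumb), listOf(fist))))        // false
+}
+```
+
+The same consideration may apply to the face check: if no blendshape category matches the filter, `all` also evaluates to `true`.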

+

+## Update UI in the view controller

+

+1. Navigate to the `strings.xml` file in your `app` subproject's `src/main/res/values` path, then append the following text resources:

+

+```xml

+ Face

+ Gesture

+```

+

+2. In the same directory, create a new resource file named `dimens.xml` if it does not already exist. This file defines layout-related dimension values:

+

+```xml

+

+

+ 12dp

+ 36dp

+

+```

+

+3. Navigate to `activity_main.xml` layout file and add the following code to the root `ConstraintLayout`, **below** the two overlay views which you just added in the previous chapter.

+

+```xml

+

+

+

+```

+

+4. Finally, navigate to `MainActivity.kt` and append the following code inside the `repeatOnLifecycle(Lifecycle.State.RESUMED)` block, **below** the `launch` block you added in the previous chapter. This makes sure that each of the **three** `launch` blocks runs in its own coroutine, concurrently and without blocking the others.

+

+```kotlin

+ launch {

+ viewModel.faceOk.collect {

+ viewBinding.faceReady.isChecked = it

+ }

+ }

+

+ launch {

+ viewModel.gestureOk.collect {

+ viewBinding.gestureReady.isChecked = it

+ }

+ }

+```

+

+5. Build and run the app again. You should now see two switches at the bottom of the screen as shown below, which turn on and off as you smile and show thumb-up gestures. Good job!

+

+

+

+## Recap on SharedFlow vs StateFlow

+

+This app uses `SharedFlow` for dispatching overlay views' UI events without mandating a specific stateful model, which avoids redundant computation. Meanwhile, it uses `StateFlow` for dispatching condition switches' UI states, which prevents duplicated emission and consequent UI updates.

+

+Here's a breakdown of the differences between `SharedFlow` and `StateFlow`:

+

+| | SharedFlow | StateFlow |

+| --- | --- | --- |

+| Type of Data | Transient events or actions | State or continuously changing data |

+| Initial Value | Not required | Required |

+| Replays to New Subscribers | Configurable with replay (e.g., 0, 1, or more) | Always emits the latest value |

+| Default Behavior | Emits only future values unless replay is set | Retains and emits only the current state |

+| Use Case Examples | Short-lived, one-off events that shouldn't persist as part of the state | Long-lived state that represents the current view's state |

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/8-mediate-flows.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/8-mediate-flows.md

new file mode 100644

index 0000000000..4798ccaddc

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/8-mediate-flows.md

@@ -0,0 +1,209 @@

+---

+title: Mediate flows to trigger photo capture

+weight: 8

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+Now you have two independent Flows indicating the conditions of face landmark detection and gesture recognition. The simplest multimodality strategy is to combine multiple source Flows into a single output Flow, which emits consolidated values as the [single source of truth](https://en.wikipedia.org/wiki/Single_source_of_truth) for its observers (collectors) to carry out corresponding actions.

+

+## Combine two Flows into a single Flow

+

+1. Navigate to `MainViewModel` and append the following constant values to its companion object.

+

+ i. The first constant defines how frequently we sample the conditions from each Flow.

+

+ ii. The second constant defines the debounce threshold of the stability check on whether to trigger a photo capture.

+

+```kotlin

+ private const val CONDITION_CHECK_SAMPLING_INTERVAL = 100L

+ private const val CONDITION_CHECK_STABILITY_THRESHOLD = 500L

+```

+

+2. Add a private member variable named `_bothOk`.

+

+```kotlin

+ private val _bothOk =

+ combine(

+ _gestureOk.sample(CONDITION_CHECK_SAMPLING_INTERVAL),

+ _faceOk.sample(CONDITION_CHECK_SAMPLING_INTERVAL),

+ ) { gestureOk, faceOk -> gestureOk && faceOk }

+ .stateIn(viewModelScope, SharingStarted.WhileSubscribed(), false)

+```

+

+{{% notice Note %}}

+Kotlin Flow's [`combine`](https://kotlinlang.org/api/kotlinx.coroutines/kotlinx-coroutines-core/kotlinx.coroutines.flow/combine.html) transformation is equivalent to ReactiveX's [`combineLatest`](https://reactivex.io/documentation/operators/combinelatest.html). It combines emissions from multiple observables, so that each time **any** observable emits, the combinator function is called with the latest values from all sources.

+

+You might need to add `@OptIn(FlowPreview::class)` annotation since `sample` is still in preview. For more information on similar transformations, please refer to [this blog](https://kt.academy/article/cc-flow-combine).

+

+{{% /notice %}}

+

+3. Expose a `SharedFlow` variable which emits a `Unit` whenever the face and gesture conditions are met and stay stable for a while, i.e. `500`ms as defined above. Again, add `@OptIn(FlowPreview::class)` if needed.

+

+```kotlin

+ val captureEvents: SharedFlow<Unit> = _bothOk

+ .debounce(CONDITION_CHECK_STABILITY_THRESHOLD)

+ .filter { it }

+ .map { }

+ .shareIn(viewModelScope, SharingStarted.WhileSubscribed())

+```

+

+If this code looks confusing to you, please see the explanations below for Kotlin beginners.

+

+{{% notice Info %}}

+

+###### Keyword "it"

+

+The operation `filter { it }` is simplified from `filter { bothOk -> bothOk == true }`.

+

+Since Kotlin lets you refer to the single parameter of a lambda implicitly as `it`, `{ bothOk -> bothOk == true }` is equivalent to `{ it == true }`, which in turn simplifies to `{ it }`.

+

+See [this doc](https://kotlinlang.org/docs/lambdas.html#it-implicit-name-of-a-single-parameter) for more details.

+

+{{% /notice %}}

+

+{{% notice Info %}}

+

+###### "Unit" type

+This `SharedFlow` has a generic type `Unit`, which doesn't contain any value. You may think of it as a "pulse" signal.

+

+The operation `map { }` simply maps each upstream `Boolean` value emitted from `_bothOk` to `Unit`, regardless of whether the value is true or false. It's simplified from `map { bothOk -> Unit }`, which becomes `map { Unit }` since the parameter is not used at all. Because an empty block already returns `Unit` implicitly, we don't need to return it explicitly.

+

+{{% /notice %}}

+

+If this still looks confusing, you may also opt to use `SharedFlow<Boolean>` and remove the `map { }` operation. Just note that when you collect this Flow, it doesn't matter whether the emitted `Boolean` values are true or false. In fact, they are always `true` due to the `filter` operation.
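+
+To see how `combine`, `debounce`, `filter { it }` and `map { }` interact, here is a minimal sketch that runs outside Android. It is a simplification under stated assumptions: it omits `sample`, `stateIn` and `shareIn`, and `countCapturePulses` together with the simulated timings is hypothetical.
+
+```kotlin
+import kotlinx.coroutines.FlowPreview
+import kotlinx.coroutines.delay
+import kotlinx.coroutines.flow.MutableStateFlow
+import kotlinx.coroutines.flow.combine
+import kotlinx.coroutines.flow.debounce
+import kotlinx.coroutines.flow.filter
+import kotlinx.coroutines.flow.map
+import kotlinx.coroutines.launch
+import kotlinx.coroutines.runBlocking
+
+// Counts how many Unit "pulses" reach the collector for a simulated input.
+@OptIn(FlowPreview::class)
+fun countCapturePulses(): Int = runBlocking {
+    val faceOk = MutableStateFlow(false)
+    val gestureOk = MutableStateFlow(false)
+
+    val pulses = combine(gestureOk, faceOk) { gesture, face -> gesture && face }
+        .debounce(500L)   // only pass a value that stayed unchanged for 500 ms
+        .filter { it }    // keep `true` only
+        .map { }          // turn each `true` into a Unit pulse
+
+    var count = 0
+    val collector = launch { pulses.collect { count += 1 } }
+
+    faceOk.value = true
+    gestureOk.value = true
+    delay(200)
+    gestureOk.value = false // the gesture flickers off before 500 ms elapse
+    delay(200)
+    gestureOk.value = true
+    delay(800)              // both conditions now stay stable long enough
+
+    collector.cancel()
+    count
+}
+
+fun main() {
+    println("pulses: ${countCapturePulses()}")
+}
+```
+
+Under these timings the collector should receive a single pulse: the first `true` is discarded by `debounce` because the gesture flickers within the 500 ms window, and only the later, stable `true` gets through.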

+

+## Configure ImageCapture use case

+

+1. Navigate to `MainActivity` and add an `ImageCapture` use case below the other camera-related member variables:

+

+```kotlin

+ private var imageCapture: ImageCapture? = null

+```

+

+2. Configure this `ImageCapture` in `bindCameraUseCases()` method:

+

+```kotlin

+ // Image Capture

+ imageCapture = ImageCapture.Builder()

+ .setCaptureMode(ImageCapture.CAPTURE_MODE_MINIMIZE_LATENCY)

+ .setTargetRotation(targetRotation)

+ .build()

+```

+

+3. Again, don't forget to append this use case to `bindToLifecycle`.

+

+```kotlin

+ camera = cameraProvider.bindToLifecycle(

+ this, cameraSelector, preview, imageAnalysis, imageCapture

+ )

+```

+

+## Execute photo capture with ImageCapture

+

+1. Append the following constant values to `MainActivity`'s companion object. They define the file name format and the [MIME type](https://en.wikipedia.org/wiki/Media_type).

+

+```kotlin

+ // Image capture

+ private const val FILENAME = "yyyy-MM-dd-HH-mm-ss-SSS"

+ private const val PHOTO_TYPE = "image/jpeg"

+```

+

+2. Implement the photo capture logic with a new method named `executeCapturePhoto()`:

+

+```kotlin

+ private fun executeCapturePhoto() {

+ imageCapture?.let { imageCapture ->

+ val name = SimpleDateFormat(FILENAME, Locale.US)

+ .format(System.currentTimeMillis())

+ val contentValues = ContentValues().apply {

+ put(MediaStore.MediaColumns.DISPLAY_NAME, name)

+ put(MediaStore.MediaColumns.MIME_TYPE, PHOTO_TYPE)

+ if (Build.VERSION.SDK_INT > Build.VERSION_CODES.P) {

+ val appName = resources.getString(R.string.app_name)

+ put(

+ MediaStore.Images.Media.RELATIVE_PATH,

+ "Pictures/${appName}"

+ )

+ }

+ }

+

+ val outputOptions = ImageCapture.OutputFileOptions

+ .Builder(

+ contentResolver,

+ MediaStore.Images.Media.EXTERNAL_CONTENT_URI,

+ contentValues

+ )

+ .build()

+

+ imageCapture.takePicture(outputOptions, Dispatchers.IO.asExecutor(),

+ object : ImageCapture.OnImageSavedCallback {

+ override fun onError(error: ImageCaptureException) {

+ Log.e(TAG, "Photo capture failed: ${error.message}", error)

+ }

+

+ override fun onImageSaved(outputFileResults: ImageCapture.OutputFileResults) {

+ val savedUri = outputFileResults.savedUri

+ Log.i(TAG, "Photo capture succeeded: $savedUri")

+ }

+ })

+ }

+ }

+```

+

+3. Append the following code to `repeatOnLifecycle(Lifecycle.State.RESUMED)` block in `onCreate` method.

+

+```kotlin

+ launch {

+ viewModel.captureEvents.collect {

+ executeCapturePhoto()

+ }

+ }

+```

+4. Now, even though the photo capture has already been implemented, it's still quite inconvenient for us to check out the logs afterwards to find out whether the photo capture has been successfully executed. Let's add a flash effect UI to explicitly show the users that a photo has been captured.

+

+## Add a flash effect upon capturing photo

+

+1. Navigate to `activity_main.xml` layout file and insert the following `View` element **between** the two overlay views and two `SwitchCompat` views. This is essentially just a white blank view covering the whole surface.

+

+```xml

+

+```

+

+2. Append the constant value below to the companion object of `MainActivity`, then add a private method named `showFlashEffect()` to animate the above `flashOverlay` view from hidden to shown in `100`ms and then again from shown to hidden in `100`ms.

+

+```kotlin

+ private const val IMAGE_CAPTURE_FLASH_DURATION = 100L

+```

+

+```kotlin

+ private fun showFlashEffect() {

+ viewBinding.flashOverlay.apply {

+ visibility = View.VISIBLE

+ alpha = 0f

+

+ // Fade in and out animation

+ animate()

+ .alpha(1f)

+ .setDuration(IMAGE_CAPTURE_FLASH_DURATION)

+ .withEndAction {

+ animate()

+ .alpha(0f)

+ .setDuration(IMAGE_CAPTURE_FLASH_DURATION)

+ .withEndAction {

+ visibility = View.GONE

+ }

+ }

+ }

+ }

+```

+

+3. Invoke `showFlashEffect()` in `executeCapturePhoto()`, **before** invoking `imageCapture.takePicture()`.

+

+4. Build and run the app. Try holding a smile while presenting thumb-up gestures. When both switches turn on and stay stable for approximately half a second, the screen should flash white, and a photo should be captured and show up in your album. This may take a few seconds depending on your Android device's hardware. Good job!

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/9-avoid-redundant-requests.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/9-avoid-redundant-requests.md

new file mode 100644

index 0000000000..99608ce139

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/9-avoid-redundant-requests.md

@@ -0,0 +1,96 @@

+---

+title: Avoid duplicated photo capture requests

+weight: 9

+

+### FIXED, DO NOT MODIFY

+layout: learningpathall

+---

+

+So far, we have implemented the core logic for mediating MediaPipe's face and gesture task results and executing photo captures. However, the view controller does not communicate its execution results back to the view model. This introduces risks such as photo capture failures, frequent or duplicate requests, and other potential issues.

+

+## Introduce camera readiness state

+

+It is a best practice to complete the data flow cycle by providing callbacks for the view controller's states. This ensures that the view model does not emit values in undesired states, such as when the camera is busy or unavailable.

+

+1. Navigate to `MainViewModel` and add a `MutableStateFlow` named `_isCameraReady` as a private member variable. This keeps track of whether the camera is busy or unavailable.

+

+```kotlin

+ private val _isCameraReady = MutableStateFlow(true)

+```

+

+2. Update `captureEvents` to combine `_bothOk` and `_isCameraReady`. Whenever a capture event is dispatched, the camera readiness state is set to `false`, which prevents further capture events from being dispatched until the camera is ready again.

+

+```kotlin

+ val captureEvents: SharedFlow<Unit> =

+ combine(_bothOk, _isCameraReady) { bothOk, cameraReady -> bothOk to cameraReady }

+ .debounce(CONDITION_CHECK_STABILITY_THRESHOLD)

+ .filter { (bothOk, cameraReady) -> bothOk && cameraReady }

+ .onEach { _isCameraReady.emit(false) }

+ .map {}

+ .shareIn(viewModelScope, SharingStarted.WhileSubscribed())

+```

+

+3. Add a photo capture callback named `onPhotoCaptureComplete()`, which restores the camera readiness state to `true` so that `captureEvents` resumes emitting once the conditions are met again.

+

+```kotlin

+ fun onPhotoCaptureComplete() {

+ viewModelScope.launch {

+ _isCameraReady.emit(true)

+ }

+ }

+```

+

+4. Navigate to `MainActivity` and invoke the `onPhotoCaptureComplete()` method inside the `onImageSaved` callback:

+

+```kotlin

+ override fun onImageSaved(outputFileResults: ImageCapture.OutputFileResults) {

+ val savedUri = outputFileResults.savedUri

+ Log.i(TAG, "Photo capture succeeded: $savedUri")

+

+ viewModel.onPhotoCaptureComplete()

+ }

+```

+

+

+## Introduce camera cooldown

+

+The duration of image capture can vary across Android devices due to hardware differences. Additionally, consecutive image captures place a heavy load on the CPU, GPU, camera, and flash memory buffer.

+

+To address this, implement a simple cooldown after each photo capture. This improves the user experience while conserving computing resources.

+

+1. Add the following constant to the companion object of `MainViewModel`. It defines a 3-second cooldown before the camera is marked as available again.

+

+```kotlin

+ private const val IMAGE_CAPTURE_DEFAULT_COUNTDOWN = 3000L

+```

+

+2. Add a `delay(IMAGE_CAPTURE_DEFAULT_COUNTDOWN)` before updating the `_isCameraReady` state.

+

+```kotlin

+ fun onPhotoCaptureComplete() {

+ viewModelScope.launch {

+ delay(IMAGE_CAPTURE_DEFAULT_COUNTDOWN)

+ _isCameraReady.emit(true)

+ }

+ }

+```

+

+{{% notice Info %}}

+You may need to import the `kotlinx.coroutines.delay` function.

+{{% /notice %}}

+

+3. Build and run the app again. Now you should notice that photo capture cannot be triggered as frequently as before. Good job!

+

+{{% notice Note %}}

+

+If you remove the `viewModel.onPhotoCaptureComplete()` call to simulate a failed photo capture, the camera never becomes available again, because nothing resets `_isCameraReady` to `true`.

+

+However, silently failing without notifying the user is not a good practice for app development. Error handling is omitted in this learning path only for the sake of simplicity.

+

+{{% /notice %}}

+

+## Completed sample code on GitHub

+

+If you run into any difficulties completing this learning path, feel free to check out the [completed sample code](https://github.com/hanyin-arm/sample-android-selfie-app-using-mediapipe-multimodality) and import it into Android Studio.

+

+If you discover a bug, encounter an issue, or have suggestions for improvement, we’d love to hear from you! Please feel free to [open an issue](https://github.com/hanyin-arm/sample-android-selfie-app-using-mediapipe-multimodality/issues/new) with detailed information.

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/_index.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/_index.md

new file mode 100644

index 0000000000..de1a558cca

--- /dev/null

+++ b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/_index.md

@@ -0,0 +1,46 @@

+---

+title: Build a Hands-Free Selfie app with Modern Android Development and MediaPipe Multimodal AI

+

+minutes_to_complete: 120

+

+who_is_this_for: This is an introductory topic for mobile application developers interested in learning how to build an Android selfie app with MediaPipe, Kotlin flows and CameraX, following the modern Android architecture design.

+

+

+learning_objectives:

+ - Architect a modern Android app with a focus on the UI layer.

+ - Leverage lifecycle-aware components within the MVVM architecture.

+ - Combine MediaPipe's face landmark detection and gesture recognition for a multimodal selfie solution.

+ - Use Jetpack CameraX to access camera features.

+ - Use Kotlin Flow APIs to handle multiple asynchronous data streams.

+

+prerequisites:

+ - A development machine compatible with [**Android Studio**](https://developer.android.com/studio).

+ - A recent **physical** Android device (with **front camera**) and a USB **data** cable.

+ - Familiarity with Android development concepts.

+ - Basic knowledge of modern Android architecture.

+ - Basic knowledge of the Kotlin programming language, such as [coroutines](https://kotlinlang.org/docs/coroutines-overview.html) and [flows](https://kotlinlang.org/docs/flow.html).

+

+author_primary: Han Yin

+

+### Tags

+skilllevels: Beginner

+subjects: ML

+armips:

+ - ARM Cortex-A

+ - ARM Cortex-X

+ - ARM Mali GPU

+tools_software_languages:

+ - mobile

+ - Android Studio

+ - Kotlin

+ - MediaPipe

+operatingsystems:

+ - Android

+

+

+### FIXED, DO NOT MODIFY

+# ================================================================================

+weight: 1 # _index.md always has weight of 1 to order correctly

+layout: "learningpathall" # All files under learning paths have this same wrapper

+learning_path_main_page: "yes" # This should be surfaced when looking for related content. Only set for _index.md of learning path content.

+---

diff --git a/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/_next-steps.md b/content/learning-paths/smartphones-and-mobile/build-android-selfie-app-using-mediapipe-multimodality/_next-steps.md

new file mode 100644

index 0000000000..7d66d152d0

--- /dev/null