@@ -112,13 +101,12 @@ XTuner is an efficient, flexible and full-featured toolkit for fine-tuning large

- InternLM2

- - InternLM

- - Llama

+ - Llama 3

- Llama 2

+ - Phi-3

- ChatGLM2

- ChatGLM3

- Qwen

- - Baichuan

- Baichuan2

- Mixtral 8x7B

- DeepSeek MoE

@@ -192,7 +180,7 @@ XTuner is an efficient, flexible and full-featured toolkit for fine-tuning large

pip install -e '.[all]'

```

-### Fine-tune [](https://colab.research.google.com/drive/1QAEZVBfQ7LZURkMUtaq0b-5nEQII9G9Z?usp=sharing)

+### Fine-tune

XTuner supports efficient fine-tuning (*e.g.*, QLoRA) of LLMs. Dataset preparation guides can be found in [dataset_prepare.md](./docs/en/user_guides/dataset_prepare.md).

@@ -235,7 +223,7 @@ XTuner supports efficient fine-tuning (*e.g.*, QLoRA) of LLMs. Dataset prepar

xtuner convert pth_to_hf ${CONFIG_NAME_OR_PATH} ${PTH} ${SAVE_PATH}

```

-### Chat [](https://colab.research.google.com/drive/144OuTVyT_GvFyDMtlSlTzcxYIfnRsklq?usp=sharing)

+### Chat

XTuner provides tools to chat with pretrained / fine-tuned LLMs.

@@ -295,6 +283,7 @@ We appreciate all contributions to XTuner. Please refer to [CONTRIBUTING.md](.gi

## 🎖️ Acknowledgement

- [Llama 2](https://github.com/facebookresearch/llama)

+- [DeepSpeed](https://github.com/microsoft/DeepSpeed)

- [QLoRA](https://github.com/artidoro/qlora)

- [LMDeploy](https://github.com/InternLM/lmdeploy)

- [LLaVA](https://github.com/haotian-liu/LLaVA)

diff --git a/README_zh-CN.md b/README_zh-CN.md

index dcc6649ff..c5037d28c 100644

--- a/README_zh-CN.md

+++ b/README_zh-CN.md

@@ -23,6 +23,20 @@

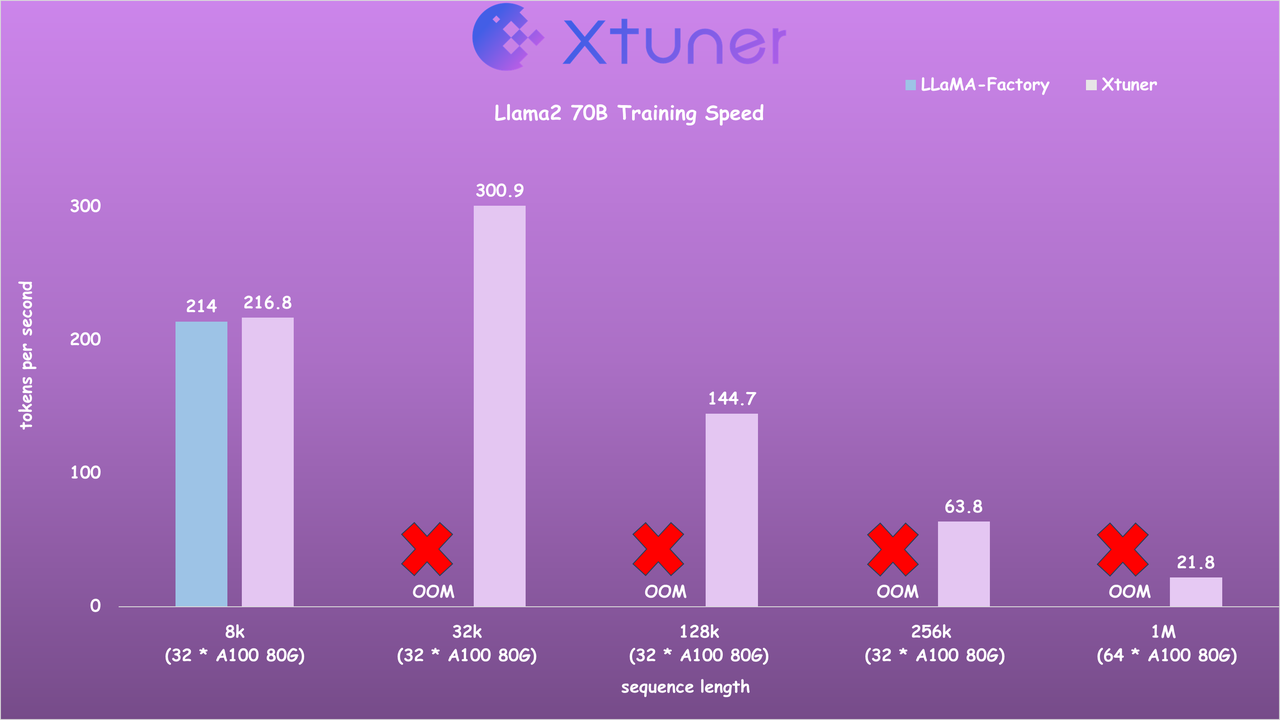

+## 🚀 Speed Benchmark

+

+- Training efficiency comparison of XTuner and LLaMA-Factory on Llama2-7B

+


- | Plugin-based Chat 🔥🔥🔥 |

## 🔥 Support List

@@ -112,13 +101,12 @@ XTuner is an efficient, flexible and full-featured lightweight toolkit for fine-tuning large models.

- InternLM2

- - InternLM

- - Llama

+ - Llama 3

- Llama 2

+ - Phi-3

- ChatGLM2

- ChatGLM3

- Qwen

- - Baichuan

- Baichuan2

- Mixtral 8x7B

- DeepSeek MoE

@@ -192,7 +180,7 @@ XTuner is an efficient, flexible and full-featured lightweight toolkit for fine-tuning large models.

pip install -e '.[all]'

```

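-### Fine-tune [](https://colab.research.google.com/drive/1QAEZVBfQ7LZURkMUtaq0b-5nEQII9G9Z?usp=sharing)

+### Fine-tune

XTuner supports fine-tuning large language models. For dataset preparation guides, see the [documentation](./docs/zh_cn/user_guides/dataset_prepare.md).

@@ -235,7 +223,7 @@ XTuner supports fine-tuning large language models. For dataset preparation guides, see the [documentation](.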

xtuner convert pth_to_hf ${CONFIG_NAME_OR_PATH} ${PTH} ${SAVE_PATH}

```

-### Chat [](https://colab.research.google.com/drive/144OuTVyT_GvFyDMtlSlTzcxYIfnRsklq?usp=sharing)

+### Chat

XTuner provides tools for chatting with large language models.

@@ -295,6 +283,7 @@ xtuner chat internlm/internlm2-chat-7b --visual-encoder openai/clip-vit-large-pa

## 🎖️ Acknowledgement

- [Llama 2](https://github.com/facebookresearch/llama)

+- [DeepSpeed](https://github.com/microsoft/DeepSpeed)

- [QLoRA](https://github.com/artidoro/qlora)

- [LMDeploy](https://github.com/InternLM/lmdeploy)

- [LLaVA](https://github.com/haotian-liu/LLaVA)

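Each hunk in the diff above opens with a unified-diff header of the form `@@ -OLD_START,OLD_COUNT +NEW_START,NEW_COUNT @@`, optionally followed by a line of surrounding context. For example, `@@ -112,13 +101,12 @@` says a 13-line span starting at line 112 of the old README became a 12-line span starting at line 101 of the new one. The sketch below is a minimal, hypothetical helper (`parse_hunk_header` is not part of XTuner or Git) for reading these headers; it assumes the standard unified format, where an omitted count means 1.

```python
import re

# Unified-diff hunk header: "@@ -OLD_START[,OLD_COUNT] +NEW_START[,NEW_COUNT] @@ [context]".
# When a count is omitted, it defaults to 1.
_HUNK_RE = re.compile(r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


def parse_hunk_header(line: str) -> tuple[int, int, int, int]:
    """Return (old_start, old_count, new_start, new_count) for a hunk header line."""
    m = _HUNK_RE.match(line)
    if m is None:
        raise ValueError(f"not a hunk header: {line!r}")
    old_start, old_count, new_start, new_count = m.groups()
    return (
        int(old_start),
        int(old_count) if old_count is not None else 1,
        int(new_start),
        int(new_count) if new_count is not None else 1,
    )


if __name__ == "__main__":
    header = "@@ -112,13 +101,12 @@ XTuner is an efficient, flexible and full-featured toolkit"
    old_start, old_count, new_start, new_count = parse_hunk_header(header)
    # Net change in length for this hunk: 12 - 13 = -1 line.
    print(old_start, old_count, new_start, new_count, new_count - old_count)
```

Applied to the `README_zh-CN.md` header `@@ -23,6 +23,20 @@`, the same helper returns `(23, 6, 23, 20)`, i.e. a net gain of 14 lines in that hunk.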