nvidia-docker 2.6.0-1 - not working on Ubuntu WSL2 #1496

Comments

|

Hi @levipereira |

|

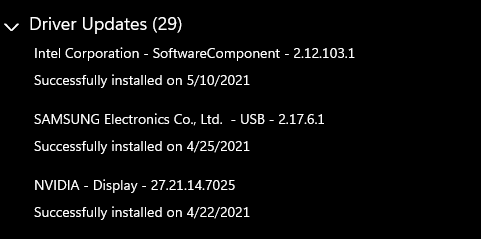

The error was reproduced on Windows 10 Nvidia Driver: |

I have tried upgrading only libnvidia-container1, and the same error is raised, as follows: |

|

@levipereira seems we have a similar use case :) I am also seeing this on driver 470.25 with:

$ apt list --installed libnvidia-container1

Listing... Done

libnvidia-container1/bionic,now 1.4.0-1 amd64 [installed,automatic]

$ nvidia-docker run --gpus all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

docker: Error response from daemon: OCI runtime create failed: container_linux.go:367: starting container process caused: process_linux.go:495: container init caused: Running hook #0:: error running hook: exit status 1, stdout: , stderr: nvidia-container-cli: initialization error: driver error: failed to process request: unknown.

ERRO[0000] error waiting for container: context canceled

Downgrading to:

$ sudo apt-get install nvidia-docker2:amd64=2.5.0-1 nvidia-container-runtime:amd64=3.4.0-1 nvidia-container-toolkit:amd64=1.4.2-1 libnvidia-container-tools:amd64=1.3.3-1 libnvidia-container1:amd64=1.3.3-1

...

$ nvidia-docker run --gpus all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

docker: Error response from daemon: OCI runtime create failed: container_linux.go:367: starting container process caused: process_linux.go:495: container init caused: Running hook #0:: error running hook: exit status 1, stdout: , stderr: nvidia-container-cli: requirement error: unsatisfied condition: cuda>=11.2, please update your driver to a newer version, or use an earlier cuda container: unknown.

ERRO[0000] error waiting for container: context canceled

@levipereira reported this issue earlier, and the current workaround is to run docker with:

$ nvidia-docker run --env NVIDIA_DISABLE_REQUIRE=1 --gpus all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

Run "nbody -benchmark [-numbodies=<numBodies>]" to measure performance.

-fullscreen (run n-body simulation in fullscreen mode)

-fp64 (use double precision floating point values for simulation)

-hostmem (stores simulation data in host memory)

-benchmark (run benchmark to measure performance)

-numbodies=<N> (number of bodies (>= 1) to run in simulation)

-device=<d> (where d=0,1,2.... for the CUDA device to use)

-numdevices=<i> (where i=(number of CUDA devices > 0) to use for simulation)

-compare (compares simulation results running once on the default GPU and once on the CPU)

-cpu (run n-body simulation on the CPU)

-tipsy=<file.bin> (load a tipsy model file for simulation)

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

> Windowed mode

> Simulation data stored in video memory

> Single precision floating point simulation

> 1 Devices used for simulation

GPU Device 0: "Pascal" with compute capability 6.1

> Compute 6.1 CUDA device: [NVIDIA GeForce GTX 1080]

20480 bodies, total time for 10 iterations: 14.627 ms

= 286.754 billion interactions per second

= 5735.088 single-precision GFLOP/s at 20 flops per interaction

Note that setting |
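For reference, the workaround command can also be assembled programmatically, for example from a test script. A minimal Python sketch, assuming Python 3.8+ (for `shlex.join`); `build_run_argv` is a hypothetical helper, and the `--rm` flag is borrowed from later commands in this thread. In this thread, NVIDIA_DISABLE_REQUIRE=1 got past the `cuda>=11.2` requirement error but did not help with the `driver error` failure.

```python
import shlex
from typing import List, Sequence

# Image and arguments used throughout this thread.
NBODY_IMAGE = "nvcr.io/nvidia/k8s/cuda-sample:nbody"
NBODY_ARGS = ["nbody", "-gpu", "-benchmark"]


def build_run_argv(image: str, args: Sequence[str], disable_require: bool = True,
                   launcher: str = "docker") -> List[str]:
    """Build the argv for `docker run --gpus all IMAGE ARGS...` (hypothetical helper).

    With disable_require=True, NVIDIA_DISABLE_REQUIRE=1 is passed into the
    container environment, which is the workaround quoted in this thread.
    """
    argv = [launcher, "run", "--rm"]
    if disable_require:
        argv += ["--env", "NVIDIA_DISABLE_REQUIRE=1"]
    argv += ["--gpus", "all", image]
    argv += list(args)
    return argv


if __name__ == "__main__":
    # Print a copy-pasteable command; pass the list to subprocess.run to execute it.
    print(shlex.join(build_run_argv(NBODY_IMAGE, NBODY_ARGS)))
```

Building an argument list rather than a single string avoids shell quoting issues if the image name or arguments ever contain special characters.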

|

Unfortunately, the primary maintainers of the nvidia container stack (myself included) do not have much visibility into WSL-specific issues like these. The WSL code is written and tested by a different group within NVIDIA. We just make sure that any support that does get added for WSL doesn’t interfere with the functionality we have on Linux. I’ve asked the WSL developers to comment here, so hopefully you will get a response soon. |

|

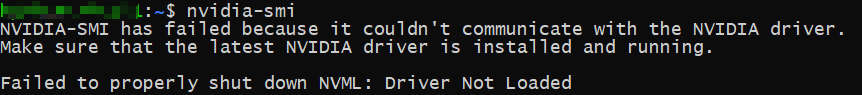

Hi everyone, unfortunately this is a known issue with NVML in our driver, and the fix will be released (very soon) in an upcoming R470 driver release. The new drivers can be downloaded from the WSL page when available: Also, there is another known issue with multi-GPU systems that will still be present in the new driver when it's released. This issue is still being debugged, so CUDA on WSL2 may not work on systems with multiple GPUs. |
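To tell apart the two `nvidia-container-cli` failures quoted in this thread, a small matcher over the hook's stderr may help. This is a sketch only: the substrings are copied from the errors above, while the hint strings are an editorial summary of the thread, not official NVIDIA diagnostics; `classify_hook_error` is a hypothetical name.

```python
from typing import Dict, Optional

# Substrings copied from the nvidia-container-cli errors quoted in this thread.
# The hints summarize the thread; they are not official NVIDIA diagnostics.
KNOWN_ERRORS: Dict[str, str] = {
    "initialization error: driver error: failed to process request":
        "driver/NVML problem (seen with nvidia-docker2 2.6.0-1 on WSL2; "
        "NVIDIA points to an upcoming R470 driver)",
    "requirement error: unsatisfied condition: cuda>=":
        "CUDA requirement check (thread workaround: --env NVIDIA_DISABLE_REQUIRE=1)",
}


def classify_hook_error(stderr: str) -> Optional[str]:
    """Return a hint for a known error substring, or None if nothing matches."""
    for needle, hint in KNOWN_ERRORS.items():
        if needle in stderr:
            return hint
    return None
```

Matching on stable substrings instead of the full message keeps the check working when the surrounding `container_linux.go` line numbers change between Docker releases.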

|

Is there a place to download an older version of the drivers? The page only allows you to download the latest version, which has this feature broken |

|

I'm also having this issue. Following for updates. Thank you! |

|

I ended up finding some potentially dubious third party mirror for an older version of the driver, which solves my issue for now. |

|

Same issue. Following the CUDA on WSL guide here. Both docker examples fail with the same issue. |

|

Same issue; however, I could only get 470.14 from the official download page. The workaround didn't work, but reverting to 465.42 seems to have fixed it. Please add a page for downloading previous versions; having to rely on some mega link is pretty lame. |

@dualvtable So any updates 📦 ? |

|

It seems like the WSL driver page is serving 470.14 at least at the time of this post: Although NVML is broken (verified by a failing |

|

@feynmanliang Unfortunately, this does not work for me.

libnvidia-container-tools/bionic,now 1.4.0-1 amd64 [installed,automatic]

libnvidia-container1/bionic,now 1.4.0-1 amd64 [installed,automatic]

nvidia-container-runtime/bionic,now 3.5.0-1 amd64 [installed,automatic]

nvidia-container-toolkit/bionic,now 1.5.0-1 amd64 [installed,automatic]

nvidia-docker2/bionic,now 2.6.0-1 all [installed]

I am running Windows Insider

Error message: docker: Error response from daemon: OCI runtime create failed: container_linux.go:367: starting container process caused: process_linux.go:495: container init caused: Running hook #0:: error running hook: exit status 1, stdout: , stderr: nvidia-container-cli: initialization error: driver error: failed to process request: unknown. |

Please downgrade nvidia-docker2 to 2.5:

nvidia-docker2/bionic,now 2.6.0-1 all [installed] |
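Before and after downgrading, it may help to check which versions `apt list --installed` actually reports. A minimal Python sketch, assuming the `name/suite,now VERSION ARCH [flags]` line format shown in this thread; `parse_apt_list` and `upstream_version` are hypothetical helpers, and only purely numeric dotted versions without a Debian epoch (e.g. `1:2.6.0-1`) are handled.

```python
import re
from typing import Dict, Tuple

# Matches `apt list --installed` lines such as
#   nvidia-docker2/bionic,now 2.6.0-1 all [installed]
#   libnvidia-container1/bionic,now 1.4.0-1 amd64 [installed,automatic]
APT_LINE = re.compile(r"^(?P<name>[^/\s]+)/\S+\s+(?P<version>\S+)\s+(?P<arch>\S+)")


def parse_apt_list(text: str) -> Dict[str, str]:
    """Map package name -> installed version; 'Listing...' and warning lines are skipped."""
    versions: Dict[str, str] = {}
    for line in text.splitlines():
        m = APT_LINE.match(line.strip())
        if m:
            versions[m.group("name")] = m.group("version")
    return versions


def upstream_version(deb_version: str) -> Tuple[int, ...]:
    """'2.6.0-1' -> (2, 6, 0): drop the Debian revision and compare numerically.

    Assumes a numeric dotted upstream version with no epoch.
    """
    upstream = deb_version.rsplit("-", 1)[0]
    return tuple(int(part) for part in upstream.split("."))
```

For example, `upstream_version(parse_apt_list(output)["nvidia-docker2"]) >= (2, 6, 0)` would indicate the 2.6 stack that commenters here report as failing.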

|

@levipereira That's what I did. Sorry if it wasn't clear that, in the above, I was showing my upgraded state. When I downgraded, the NVIDIA packages were as below (i.e., what results from cut-pasting the downgrade command):

nvidia-docker2:amd64=2.5.0-1

nvidia-container-runtime:amd64=3.4.0-1

nvidia-container-toolkit:amd64=1.4.2-1

libnvidia-container-tools:amd64=1.3.3-1

libnvidia-container1:amd64=1.3.3-1

Also, in case you meant to downgrade just nvidia-docker2:

nvidia-docker2/bionic,now 2.5.0-1 all [installed]

That again gave the same result as I pasted in my previous post. |
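The downgrade above can be scripted so the same pins are reused across machines. A sketch under assumptions: the version strings are the ones posted in this thread, `downgrade_commands` is a hypothetical helper, and the `apt-mark hold` step is an addition not discussed here; its purpose is to stop a later `apt-get upgrade` from pulling the 2.6.0 stack back in.

```python
from typing import Dict, List

# Versions posted in this thread for the pre-2.6.0 stack (amd64).
DOWNGRADE_PINS: Dict[str, str] = {
    "nvidia-docker2": "2.5.0-1",
    "nvidia-container-runtime": "3.4.0-1",
    "nvidia-container-toolkit": "1.4.2-1",
    "libnvidia-container-tools": "1.3.3-1",
    "libnvidia-container1": "1.3.3-1",
}


def downgrade_commands(pins: Dict[str, str], arch: str = "amd64") -> List[List[str]]:
    """Return two argv lists: install the pinned versions, then hold them.

    `pkg:arch=version` selects an exact version; --allow-downgrades lets the
    install proceed non-interactively, and `apt-mark hold` keeps a later
    `apt-get upgrade` from moving these packages forward again.
    """
    install = ["sudo", "apt-get", "install", "--allow-downgrades"]
    install += [f"{name}:{arch}={version}" for name, version in pins.items()]
    hold = ["sudo", "apt-mark", "hold", *pins]
    return [install, hold]
```

Passing each list to `subprocess.run(..., check=True)` would execute it; `sudo apt-mark unhold` with the same package names releases the pins once a fixed driver is installed.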

|

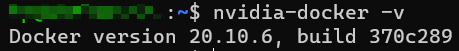

@rkcroc what's the output of |

-rwxr-xr-x 1 root root 71717000 Mar 29 15:10 /usr/bin/docker |

|

@rkcroc strange, I am on the same Windows build as you. I am unaffiliated with NVIDIA so we're both shooting in the dark here ;) Maybe it's driver versions? I am on 470.25, which I got via Windows update (the NVIDIA WSL page was serving me 470.14) |

|

@rkcroc I can't reproduce it with a brand new Ubuntu install. I have driver 470.25 too. |

|

@feynmanliang @onomatopellan Yes, you nailed it. For some reason Windows is not offering me the NVIDIA 470.25 driver update. |

|

Same issue, any update? |

|

@qiangxinglin can you post your output for |

By the way, I don't know whether this is a relevant issue: |

|

I also found something interesting: the stderr differs between Docker Desktop 3.3.1 (63152) and Docker Desktop 3.3.3 (64133). |

|

@qiangxinglin What's your Windows build (winver.exe)? And the output of |

|

@onomatopellan Oh my *** god! I rolled back to Docker Desktop 3.3.1 (63152), performed a reboot, and it works! |

|

@qiangxinglin Awesome. It was weird that it only worked for me. Well, that confirms it's an nvidia-docker bug that should be fixed in an upcoming update or driver. |

|

Guys, Docker Desktop 3.3.1(63152) is the cure! Do not forget to reboot after downgrade :) Do not update to the latest version until fixed! |
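Since these reports hinge on the exact Docker Desktop build, a tiny lookup can flag which one is installed. A minimal Python sketch; the version sets summarize comments in this thread (3.3.1 and 3.3.2 reported working, 3.3.3 reported broken) and are not an official compatibility list. The function names are hypothetical.

```python
import re
from typing import Tuple

# Summarized from comments in this thread; not an official compatibility list.
REPORTED_WORKING = {"3.3.1", "3.3.2"}
REPORTED_BROKEN = {"3.3.3"}

VERSION_RE = re.compile(r"\s*(\d+(?:\.\d+)*)\s*\((\d+)\)\s*")


def parse_docker_desktop(version: str) -> Tuple[str, int]:
    """Split '3.3.1(63152)' or '3.3.1 (63152)' into ('3.3.1', 63152)."""
    m = VERSION_RE.fullmatch(version)
    if m is None:
        raise ValueError(f"unrecognized Docker Desktop version: {version!r}")
    return m.group(1), int(m.group(2))


def thread_verdict(version: str) -> str:
    """Report what this thread says about a given Docker Desktop release."""
    release, _build = parse_docker_desktop(version)
    if release in REPORTED_BROKEN:
        return "reported broken"
    if release in REPORTED_WORKING:
        return "reported working"
    return "no report"
```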

More generally speaking: On the docs at https://docs.nvidia.com/cuda/wsl-user-guide/index.html it says "Note that NVIDIA Container Toolkit does not yet support Docker Desktop WSL 2 backend." -- is that advice now simply outdated, so we can expect nvidia docker to work with Docker Desktop v3.3.1? nvidia folks, maybe time to update that part of the docs? More specifically @qiangxinglin -- did you have to downgrade anything else except for Docker Desktop, for example nvidia-docker2 down to 2.5? |

|

Docker Desktop >=3.1.0 should support WSL 2 GPUs (https://docs.docker.com/docker-for-windows/wsl/#gpu-support) so the nvidia documentation is out of date. That said, there are quite a few users who prefer running |

|

@cpbotha No, only the Docker Desktop, but I find new issues, although |

|

I'm having a similar issue as well. Downgrading to Docker 3.3.1 or 3.3.2 worked for me; there's something wrong with the 3.3.3 release. Another thing is that |

|

@qiangxinglin I tried all the above things, but none of them worked. Please help me.

$ docker run --rm --gpus=all --env NVIDIA_DISABLE_REQUIRE=1 -it nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

docker: Error response from daemon: OCI runtime create failed: container_linux.go:367: starting container process caused: process_linux.go:495: container init caused: Running hook #0:: error running hook: exit status 1, stdout: , stderr: nvidia-container-cli: initialization error: driver error: failed to process request: unknown.

winver: version 2004 (OS Build 19041.985)

uname: Linux HLAI005021 5.4.72-microsoft-standard-WSL2 #1 SMP Wed Oct 28 23:40:43 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

Windows NVIDIA driver: 470.14

![image](https://user-images.githubusercontent.com/30144428/119467420-16223d80-bd78-11eb-93d0-81618dd18aea.png)

![image](https://user-images.githubusercontent.com/30144428/119468382-f93a3a00-bd78-11eb-8d1a-f47d36c392cf.png)

![image](https://user-images.githubusercontent.com/30144428/119468529-1d961680-bd79-11eb-9ca7-cbf4a3d3e44d.png)

![image](https://user-images.githubusercontent.com/30144428/119468988-9006f680-bd79-11eb-822b-ed4f7892fdfd.png) |

|

The reason is that you should subscribe to the Windows Insider Program with the 'dev' channel, then retry. Remember, the Windows build should be at least 20000. |

|

Do you mean that I need to update Windows? |

|

Yes, follow the CUDA on WSL instructions to upgrade your OS version (Google keyword: Windows Insider Program). Note that it's quite a big update, which may take more than 10 minutes. |

|

I have updated to the latest: Version 20H2 (OS build 19042.985), but it still doesn't work. Is this because my version has not been updated to 20000+? |

|

Yes, please make sure winver returns a version above 20000. |

|
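The build threshold discussed here can be checked against the `winver` text. A minimal Python sketch, assuming the build appears as a standalone five-digit number (e.g. `OS Build 19041.985` or `21354.1`); the 20000 cut-off is the advice given in this thread at the time, and a later comment reports that Windows 10 21H2, with a build in the 19000s, also works. Function names are hypothetical.

```python
import re

# Cut-off advised in this thread at the time (Windows Insider Dev channel builds).
# A later comment reports that Windows 10 21H2 (build in the 19000s) also works,
# so treat this as historical advice rather than a hard requirement.
MIN_BUILD_ADVISED = 20000

# A standalone five-digit number, e.g. '19041' in 'OS Build 19041.985'.
BUILD_RE = re.compile(r"\b(\d{5})\b")


def os_build(winver_text: str) -> int:
    """Extract the major OS build number from winver-style text."""
    m = BUILD_RE.search(winver_text)
    if m is None:
        raise ValueError(f"no five-digit OS build found in {winver_text!r}")
    return int(m.group(1))


def meets_thread_advice(winver_text: str) -> bool:
    """True if the build is at or above the threshold advised in this thread."""
    return os_build(winver_text) >= MIN_BUILD_ADVISED
```

With the values posted above, `Version 20H2 (OS build 19042.985)` yields 19042 and falls below the advised threshold, while `21354.1` passes.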

|

@qiangxinglin Can the Beta channel work? My update on the dev channel (version 21387) keeps failing. |

|

@Minxiangliu No, the dev channel is mandatory. If your dev channel update keeps failing, you should seek help on the Microsoft forums. |

|

@qiangxinglin The following is the result of my execution. Am I doing something wrong?

$ docker run --rm -it --env NVIDIA_DISABLE_REQUIRE=1 --gpus=all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

...

Error: only 0 Devices available, 1 requested. Exiting.

![image](https://user-images.githubusercontent.com/30144428/119805398-caa49680-bf13-11eb-83e1-e47ee70225fb.png)

![image](https://user-images.githubusercontent.com/30144428/119805432-d2fcd180-bf13-11eb-925d-d6692d2a545a.png)

winver: 21354.1 |

|

Update your driver to 470.14. |

|

Do I need to reinstall Ubuntu after I update the Nvidia driver? |

|

Actually, you do not need your WSL distro to access Docker for Windows, but you can enable WSL integration in Docker Desktop settings. Restarting Ubuntu is not needed. |

|

|

@qiangxinglin Unfortunately, an error occurred after the driver was updated to 470.14. |

|

@Minxiangliu If the screenshot is in WSL, then it's intended. There's no driver inside WSL but in your Windows. If your testing program works, then everything is done. |

|

@qiangxinglin I tried to install according to NVIDIA (https://docs.nvidia.com/cuda/wsl-user-guide/index.html#setting-containers) and got the following error:

![image](https://user-images.githubusercontent.com/30144428/119945588-3d6f4980-bfc8-11eb-852d-bd2d73474acf.png)

Could the cause of the error be my winver? My winver: 21354.1 |

|

The output is not the same as anything discussed above. Maybe you could open a new issue for this. I don't know either. |

|

OK... I updated Windows to the latest version and the problem was solved. Thank you very much for your assistance. |

|

Driver 470.76 was released yesterday, and it works well with |

|

Indeed Driver 470.76 fixed the problems I had with nvidia-docker:
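Driver versions should be compared numerically rather than as strings (as strings, `"470.9" > "470.76"` is true). A minimal Python sketch; the notes in `THREAD_REPORTS` summarize what commenters in this thread reported, and the 470.76 default is taken from the comments above, not from NVIDIA documentation. Helper names are hypothetical.

```python
from typing import Tuple

# Windows drivers mentioned in this thread and what commenters reported (not official).
THREAD_REPORTS = {
    "465.42": "one commenter reported that reverting to it fixed the problem",
    "470.14": "NVML reported broken; 'driver error' from nvidia-container-cli",
    "470.25": "worked for some commenters",
    "470.76": "reported to fix the nvidia-docker problems",
}


def driver_tuple(version: str) -> Tuple[int, ...]:
    """Turn '470.76' into (470, 76) so versions compare numerically."""
    return tuple(int(part) for part in version.strip().split("."))


def at_least(installed: str, minimum: str = "470.76") -> bool:
    """True if the installed driver is the same as or newer than `minimum`."""
    return driver_tuple(installed) >= driver_tuple(minimum)


def thread_report(version: str) -> str:
    """Look up what this thread said about a specific driver version."""
    return THREAD_REPORTS.get(version.strip(), "no report in this thread")
```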

|

This is the only solution that works for me! Thank you @qiangxinglin |

|

Note for anyone still having this problem: Windows 10 version 21H2 works and has a build number in the 19000s. I had to download the update here, as the standard Windows Update process would not update this far for some reason. |

1. Issue or feature description

After running apt-get upgrade, the packages below were updated to the latest version.

After upgrading the packages above, nvidia-docker stopped working.

2. Steps to reproduce the issue

3. Information to attach (optional if deemed irrelevant)
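The commands requested in this section can be collected in a single pass. A minimal Python sketch, assuming Python 3.7+ (`capture_output`, `text`); `collect` is a hypothetical helper, `dmesg` may require root, and on RPM-based systems `rpm -qa '*nvidia*'` would replace the `dpkg` entry. Arguments are passed without a shell, so the `*nvidia*` pattern reaches `dpkg` unexpanded, just as the quoted form does in a shell.

```python
import subprocess
from typing import Dict, List, Sequence

# Commands listed in this issue template (dpkg variant for Debian/Ubuntu).
DIAGNOSTICS: List[List[str]] = [
    ["nvidia-container-cli", "-k", "-d", "/dev/tty", "info"],
    ["uname", "-a"],
    ["dmesg"],
    ["nvidia-smi", "-a"],
    ["docker", "version"],
    ["dpkg", "-l", "*nvidia*"],
    ["nvidia-container-cli", "-V"],
]


def collect(commands: Sequence[Sequence[str]], timeout: float = 60.0) -> Dict[str, str]:
    """Run each command and return {command line: stdout + stderr}.

    Missing binaries and timeouts are recorded instead of aborting the run,
    since inside a WSL2 distro some of these tools may not be installed.
    """
    report: Dict[str, str] = {}
    for argv in commands:
        key = " ".join(argv)
        try:
            proc = subprocess.run(list(argv), capture_output=True, text=True, timeout=timeout)
            report[key] = proc.stdout + proc.stderr
        except FileNotFoundError:
            report[key] = "<command not found>"
        except subprocess.TimeoutExpired:
            report[key] = f"<timed out after {timeout}s>"
    return report
```

Printing each `$ command` followed by its output from `collect(DIAGNOSTICS)` gives a block that can be pasted into the issue.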

- nvidia-container-cli -k -d /dev/tty info
- uname -a
- dmesg
- nvidia-smi -a
- docker version
- dpkg -l '*nvidia*' or rpm -qa '*nvidia*'
- nvidia-container-cli -V

Workaround

Downgrade the packages below.
