diff --git a/.gitattributes b/.gitattributes

new file mode 100644

index 0000000..753b249

--- /dev/null

+++ b/.gitattributes

@@ -0,0 +1 @@

+*.ipynb merge=nbdev-merge

diff --git a/.gitconfig b/.gitconfig

new file mode 100644

index 0000000..9054574

--- /dev/null

+++ b/.gitconfig

@@ -0,0 +1,11 @@

+# Generated by nbdev_install_hooks

+#

+# If you need to disable this instrumentation do:

+# git config --local --unset include.path

+#

+# To restore:

+# git config --local include.path ../.gitconfig

+#

+[merge "nbdev-merge"]

+ name = resolve conflicts with nbdev_fix

+ driver = nbdev_merge %O %A %B %P

diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

new file mode 100644

index 0000000..38afe05

--- /dev/null

+++ b/.github/workflows/ci.yml

@@ -0,0 +1,43 @@

+name: CI

+

+on:

+ push:

+ branches: [main]

+ pull_request:

+ branches: [main]

+ workflow_dispatch:

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.ref }}

+ cancel-in-progress: true

+

+jobs:

+ run-tests:

+ strategy:

+ fail-fast: false

+ matrix:

+ python-version: ["3.10"] #["3.8", "3.9", "3.10", "3.11"]

+ os: [ubuntu-latest, windows-latest] #[macos-latest, windows-latest, ubuntu-latest]

+ runs-on: ${{ matrix.os }}

+ steps:

+ - name: Clone repo

+ uses: actions/checkout@v3

+

+ - name: Set up environment

+ uses: actions/setup-python@v4

+ with:

+ python-version: ${{ matrix.python-version }}

+

+ - name: setup R

+ uses: r-lib/actions/setup-r@v2

+ with:

+ r-version: "4.3.3"

+ windows-path-include-rtools: true # Whether to add Rtools to the PATH.

+ install-r: true # If “false” use the existing installation in the GitHub Action image

+ update-rtools: true # Update rtools40 compilers and libraries to the latest builds.

+

+ - name: Install the library

+ run: pip install ".[dev]"

+

+ - name: Run tests

+ run: nbdev_test --do_print --timing --n_workers 1

diff --git a/.github/workflows/python-package.yml b/.github/workflows/python-package.yml

index 1fd42a8..d579549 100644

--- a/.github/workflows/python-package.yml

+++ b/.github/workflows/python-package.yml

@@ -5,35 +5,34 @@ name: Python package

on:

push:

- branches: [ master ]

+ branches: [master]

pull_request:

- branches: [ master ]

+ branches: [master]

jobs:

build:

-

runs-on: ubuntu-latest

strategy:

matrix:

- python-version: [3.7, 3.8, 3.9]

+ python-version: ["3.8", "3.9", "3.10", "3.11"]

steps:

- - uses: actions/checkout@v2

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v2

- with:

- python-version: ${{ matrix.python-version }}

- - name: Install dependencies

- run: |

- python -m pip install --upgrade pip

- pip install flake8 pytest

- if [ -f requirements.txt ]; then pip install -r requirements.txt; fi

- - name: Lint with flake8

- run: |

- # stop the build if there are Python syntax errors or undefined names

- flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

- # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

- flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

- - name: Test with pytest

- run: |

- pytest

+ - uses: actions/checkout@v2

+ - name: Set up Python ${{ matrix.python-version }}

+ uses: actions/setup-python@v2

+ with:

+ python-version: ${{ matrix.python-version }}

+ - name: Install dependencies

+ run: |

+ python -m pip install --upgrade pip

+ pip install flake8 pytest

+ if [ -f requirements.txt ]; then pip install -r requirements.txt; fi

+ - name: Lint with flake8

+ run: |

+ # stop the build if there are Python syntax errors or undefined names

+ flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

+ # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

+ flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

+ - name: Test with pytest

+ run: |

+ pytest

diff --git a/.gitignore b/.gitignore

index d722dc0..183357a 100644

--- a/.gitignore

+++ b/.gitignore

@@ -134,4 +134,6 @@ Untitle*.ipynb

#files

*.csv

-*.ipynb

+

+

+_proc

\ No newline at end of file

diff --git a/MANIFEST.in b/MANIFEST.in

new file mode 100644

index 0000000..5c0e7ce

--- /dev/null

+++ b/MANIFEST.in

@@ -0,0 +1,5 @@

+include settings.ini

+include LICENSE

+include CONTRIBUTING.md

+include README.md

+recursive-exclude * __pycache__

diff --git a/README.md b/README.md

index 0520204..5faa87a 100644

--- a/README.md

+++ b/README.md

@@ -48,16 +48,16 @@ tsfeatures(panel, dict_freqs={'D': 7, 'W': 52})

## List of available features

-| Features |||

-|:--------|:------|:-------------|

-|acf_features|heterogeneity|series_length|

-|arch_stat|holt_parameters|sparsity|

-|count_entropy|hurst|stability|

-|crossing_points|hw_parameters|stl_features|

-|entropy|intervals|unitroot_kpss|

-|flat_spots|lumpiness|unitroot_pp|

-|frequency|nonlinearity||

-|guerrero|pacf_features||

+| Features | | |

+| :-------------- | :-------------- | :------------ |

+| acf_features | heterogeneity | series_length |

+| arch_stat | holt_parameters | sparsity |

+| count_entropy | hurst | stability |

+| crossing_points | hw_parameters | stl_features |

+| entropy | intervals | unitroot_kpss |

+| flat_spots | lumpiness | unitroot_pp |

+| frequency | nonlinearity | |

+| guerrero | pacf_features | |

See the docs for a description of the features. To use a particular feature included in the package you need to import it:

@@ -96,18 +96,18 @@ Observe that this function receives a list of strings instead of a list of funct

### Non-seasonal data (100 Daily M4 time series)

-| feature | diff | feature | diff | feature | diff | feature | diff |

-|:----------------|-------:|:----------------|-------:|:----------------|-------:|:----------------|-------:|

-| e_acf10 | 0 | e_acf1 | 0 | diff2_acf1 | 0 | alpha | 3.2 |

-| seasonal_period | 0 | spike | 0 | diff1_acf10 | 0 | arch_acf | 3.3 |

-| nperiods | 0 | curvature | 0 | x_acf1 | 0 | beta | 4.04 |

-| linearity | 0 | crossing_points | 0 | nonlinearity | 0 | garch_r2 | 4.74 |

-| hw_gamma | 0 | lumpiness | 0 | diff2x_pacf5 | 0 | hurst | 5.45 |

-| hw_beta | 0 | diff1x_pacf5 | 0 | unitroot_kpss | 0 | garch_acf | 5.53 |

-| hw_alpha | 0 | diff1_acf10 | 0 | x_pacf5 | 0 | entropy | 11.65 |

-| trend | 0 | arch_lm | 0 | x_acf10 | 0 |

-| flat_spots | 0 | diff1_acf1 | 0 | unitroot_pp | 0 |

-| series_length | 0 | stability | 0 | arch_r2 | 1.37 |

+| feature | diff | feature | diff | feature | diff | feature | diff |

+| :-------------- | ---: | :-------------- | ---: | :------------ | ---: | :-------- | ----: |

+| e_acf10 | 0 | e_acf1 | 0 | diff2_acf1 | 0 | alpha | 3.2 |

+| seasonal_period | 0 | spike | 0 | diff1_acf10 | 0 | arch_acf | 3.3 |

+| nperiods | 0 | curvature | 0 | x_acf1 | 0 | beta | 4.04 |

+| linearity | 0 | crossing_points | 0 | nonlinearity | 0 | garch_r2 | 4.74 |

+| hw_gamma | 0 | lumpiness | 0 | diff2x_pacf5 | 0 | hurst | 5.45 |

+| hw_beta | 0 | diff1x_pacf5 | 0 | unitroot_kpss | 0 | garch_acf | 5.53 |

+| hw_alpha | 0 | diff1_acf10 | 0 | x_pacf5 | 0 | entropy | 11.65 |

+| trend | 0 | arch_lm | 0 | x_acf10 | 0 |

+| flat_spots | 0 | diff1_acf1 | 0 | unitroot_pp | 0 |

+| series_length | 0 | stability | 0 | arch_r2 | 1.37 |

To replicate this results use:

@@ -118,20 +118,20 @@ python -m tsfeatures.compare_with_r --results_directory /some/path

### Sesonal data (100 Hourly M4 time series)

-| feature | diff | feature | diff | feature | diff | feature | diff |

-|:------------------|-------:|:-------------|-----:|:----------|--------:|:-----------|--------:|

-| series_length | 0 |seas_acf1 | 0 | trend | 2.28 | hurst | 26.02 |

-| flat_spots | 0 |x_acf1|0| arch_r2 | 2.29 | hw_beta | 32.39 |

-| nperiods | 0 |unitroot_kpss|0| alpha | 2.52 | trough | 35 |

-| crossing_points | 0 |nonlinearity|0| beta | 3.67 | peak | 69 |

-| seasonal_period | 0 |diff1_acf10|0| linearity | 3.97 |

-| lumpiness | 0 |x_acf10|0| curvature | 4.8 |

-| stability | 0 |seas_pacf|0| e_acf10 | 7.05 |

-| arch_lm | 0 |unitroot_pp|0| garch_r2 | 7.32 |

-| diff2_acf1 | 0 |spike|0| hw_gamma | 7.32 |

-| diff2_acf10 | 0 |seasonal_strength|0.79| hw_alpha | 7.47 |

-| diff1_acf1 | 0 |e_acf1|1.67| garch_acf | 7.53 |

-| diff2x_pacf5 | 0 |arch_acf|2.18| entropy | 9.45 |

+| feature | diff | feature | diff | feature | diff | feature | diff |

+| :-------------- | ---: | :---------------- | ---: | :-------- | ---: | :------ | ----: |

+| series_length | 0 | seas_acf1 | 0 | trend | 2.28 | hurst | 26.02 |

+| flat_spots | 0 | x_acf1 | 0 | arch_r2 | 2.29 | hw_beta | 32.39 |

+| nperiods | 0 | unitroot_kpss | 0 | alpha | 2.52 | trough | 35 |

+| crossing_points | 0 | nonlinearity | 0 | beta | 3.67 | peak | 69 |

+| seasonal_period | 0 | diff1_acf10 | 0 | linearity | 3.97 |

+| lumpiness | 0 | x_acf10 | 0 | curvature | 4.8 |

+| stability | 0 | seas_pacf | 0 | e_acf10 | 7.05 |

+| arch_lm | 0 | unitroot_pp | 0 | garch_r2 | 7.32 |

+| diff2_acf1 | 0 | spike | 0 | hw_gamma | 7.32 |

+| diff2_acf10 | 0 | seasonal_strength | 0.79 | hw_alpha | 7.47 |

+| diff1_acf1 | 0 | e_acf1 | 1.67 | garch_acf | 7.53 |

+| diff2x_pacf5 | 0 | arch_acf | 2.18 | entropy | 9.45 |

To replicate this results use:

diff --git a/nbs/.gitignore b/nbs/.gitignore

new file mode 100644

index 0000000..075b254

--- /dev/null

+++ b/nbs/.gitignore

@@ -0,0 +1 @@

+/.quarto/

diff --git a/nbs/_quarto.yml b/nbs/_quarto.yml

new file mode 100644

index 0000000..006b406

--- /dev/null

+++ b/nbs/_quarto.yml

@@ -0,0 +1,20 @@

+project:

+ type: website

+

+format:

+ html:

+ theme: cosmo

+ css: styles.css

+ toc: true

+

+website:

+ twitter-card: true

+ open-graph: true

+ repo-actions: [issue]

+ navbar:

+ background: primary

+ search: true

+ sidebar:

+ style: floating

+

+metadata-files: [nbdev.yml, sidebar.yml]

diff --git a/nbs/index.ipynb b/nbs/index.ipynb

new file mode 100644

index 0000000..5998625

--- /dev/null

+++ b/nbs/index.ipynb

@@ -0,0 +1,406 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "\n",

+ "# tsfeatures\n",

+ "\n",

+ "Calculates various features from time series data. Python implementation of the R package _[tsfeatures](https://github.com/robjhyndman/tsfeatures)_.\n",

+ "\n",

+ "# Installation\n",

+ "\n",

+ "You can install the *released* version of `tsfeatures` from the [Python package index](pypi.org) with:\n",

+ "\n",

+ "``` python\n",

+ "pip install tsfeatures\n",

+ "```\n",

+ "\n",

+ "# Usage\n",

+ "\n",

+ "The `tsfeatures` main function calculates by default the features used by Montero-Manso, Talagala, Hyndman and Athanasopoulos in [their implementation of the FFORMA model](https://htmlpreview.github.io/?https://github.com/robjhyndman/M4metalearning/blob/master/docs/M4_methodology.html#features).\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import tsfeatures\n",

+ "```\n",

+ "\n",

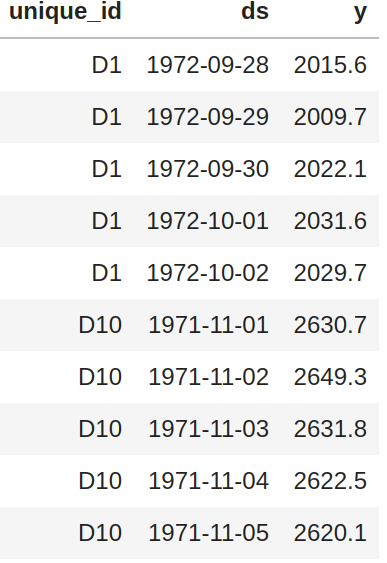

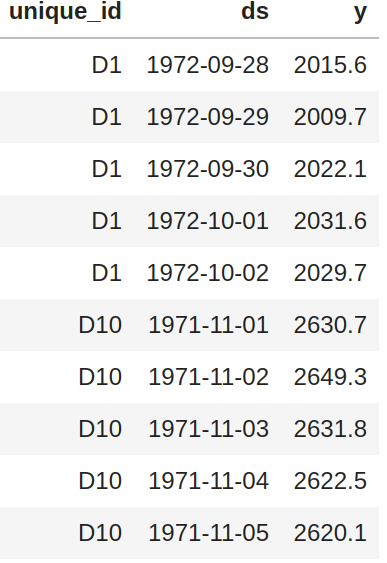

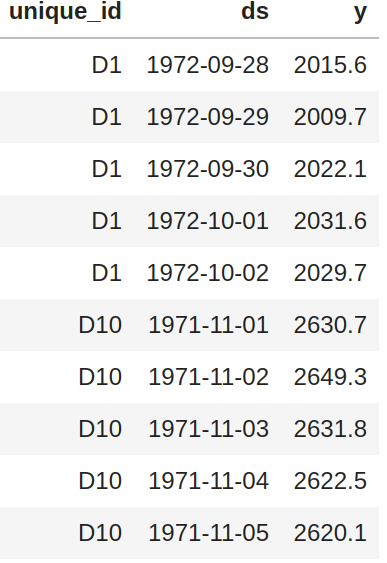

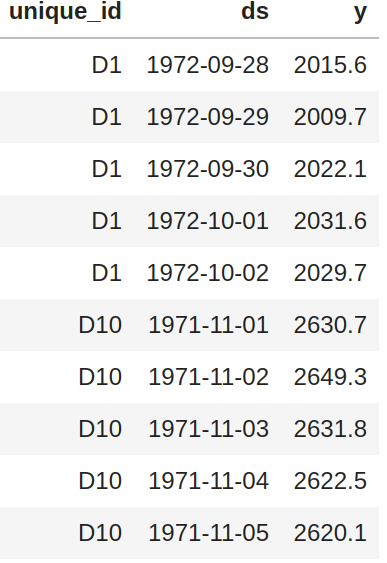

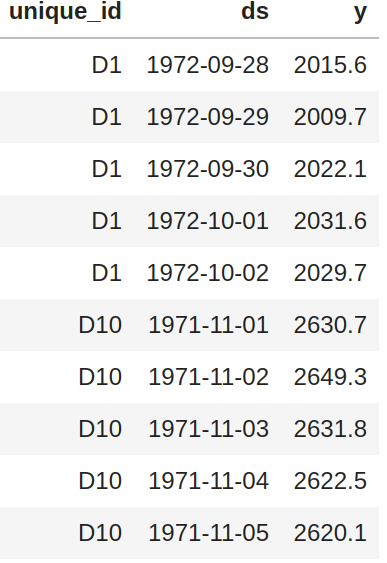

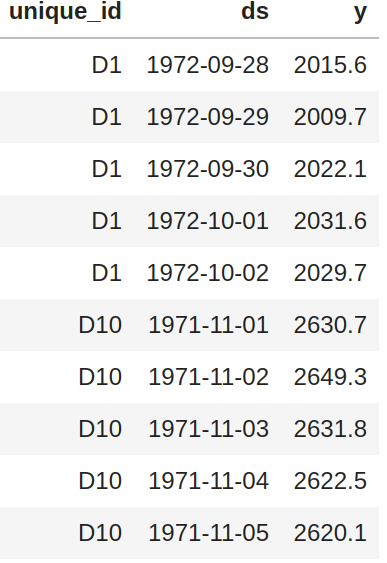

+ "This function receives a panel pandas df with columns `unique_id`, `ds`, `y` and optionally the frequency of the data.\n",

+ "\n",

+ " \n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, freq=7)\n",

+ "```\n",

+ "\n",

+ "By default (`freq=None`) the function will try to infer the frequency of each time series (using `infer_freq` from `pandas` on the `ds` column) and assign a seasonal period according to the built-in dictionary `FREQS`:\n",

+ "\n",

+ "```python\n",

+ "FREQS = {'H': 24, 'D': 1,\n",

+ " 'M': 12, 'Q': 4,\n",

+ " 'W':1, 'Y': 1}\n",

+ "```\n",

+ "\n",

+ "You can use your own dictionary using the `dict_freqs` argument:\n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, dict_freqs={'D': 7, 'W': 52})\n",

+ "```\n",

+ "\n",

+ "## List of available features\n",

+ "\n",

+ "| Features |||\n",

+ "|:--------|:------|:-------------|\n",

+ "|acf_features|heterogeneity|series_length|\n",

+ "|arch_stat|holt_parameters|sparsity|\n",

+ "|count_entropy|hurst|stability|\n",

+ "|crossing_points|hw_parameters|stl_features|\n",

+ "|entropy|intervals|unitroot_kpss|\n",

+ "|flat_spots|lumpiness|unitroot_pp|\n",

+ "|frequency|nonlinearity||\n",

+ "|guerrero|pacf_features||\n",

+ "\n",

+ "See the docs for a description of the features. To use a particular feature included in the package you need to import it:\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import acf_features\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features])\n",

+ "```\n",

+ "\n",

+ "You can also define your own function and use it together with the included features:\n",

+ "\n",

+ "```python\n",

+ "def number_zeros(x, freq):\n",

+ "\n",

+ " number = (x == 0).sum()\n",

+ " return {'number_zeros': number}\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features, number_zeros])\n",

+ "```\n",

+ "\n",

+ "`tsfeatures` can handle functions that receives a numpy array `x` and a frequency `freq` (this parameter is needed even if you don't use it) and returns a dictionary with the feature name as a key and its value.\n",

+ "\n",

+ "## R implementation\n",

+ "\n",

+ "You can use this package to call `tsfeatures` from R inside python (you need to have installed R, the packages `forecast` and `tsfeatures`; also the python package `rpy2`):\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures.tsfeatures_r import tsfeatures_r\n",

+ "\n",

+ "tsfeatures_r(panel, freq=7, features=[\"acf_features\"])\n",

+ "```\n",

+ "\n",

+ "Observe that this function receives a list of strings instead of a list of functions.\n",

+ "\n",

+ "## Comparison with the R implementation (sum of absolute differences)\n",

+ "\n",

+ "### Non-seasonal data (100 Daily M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:----------------|-------:|:----------------|-------:|:----------------|-------:|:----------------|-------:|\n",

+ "| e_acf10 | 0 | e_acf1 | 0 | diff2_acf1 | 0 | alpha | 3.2 |\n",

+ "| seasonal_period | 0 | spike | 0 | diff1_acf10 | 0 | arch_acf | 3.3 |\n",

+ "| nperiods | 0 | curvature | 0 | x_acf1 | 0 | beta | 4.04 |\n",

+ "| linearity | 0 | crossing_points | 0 | nonlinearity | 0 | garch_r2 | 4.74 |\n",

+ "| hw_gamma | 0 | lumpiness | 0 | diff2x_pacf5 | 0 | hurst | 5.45 |\n",

+ "| hw_beta | 0 | diff1x_pacf5 | 0 | unitroot_kpss | 0 | garch_acf | 5.53 |\n",

+ "| hw_alpha | 0 | diff1_acf10 | 0 | x_pacf5 | 0 | entropy | 11.65 |\n",

+ "| trend | 0 | arch_lm | 0 | x_acf10 | 0 |\n",

+ "| flat_spots | 0 | diff1_acf1 | 0 | unitroot_pp | 0 |\n",

+ "| series_length | 0 | stability | 0 | arch_r2 | 1.37 |\n",

+ "\n",

+ "To replicate this results use:\n",

+ "\n",

+ "``` console\n",

+ "python -m tsfeatures.compare_with_r --results_directory /some/path\n",

+ " --dataset_name Daily --num_obs 100\n",

+ "```\n",

+ "\n",

+ "### Sesonal data (100 Hourly M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:------------------|-------:|:-------------|-----:|:----------|--------:|:-----------|--------:|\n",

+ "| series_length | 0 |seas_acf1 | 0 | trend | 2.28 | hurst | 26.02 |\n",

+ "| flat_spots | 0 |x_acf1|0| arch_r2 | 2.29 | hw_beta | 32.39 |\n",

+ "| nperiods | 0 |unitroot_kpss|0| alpha | 2.52 | trough | 35 |\n",

+ "| crossing_points | 0 |nonlinearity|0| beta | 3.67 | peak | 69 |\n",

+ "| seasonal_period | 0 |diff1_acf10|0| linearity | 3.97 |\n",

+ "| lumpiness | 0 |x_acf10|0| curvature | 4.8 |\n",

+ "| stability | 0 |seas_pacf|0| e_acf10 | 7.05 |\n",

+ "| arch_lm | 0 |unitroot_pp|0| garch_r2 | 7.32 |\n",

+ "| diff2_acf1 | 0 |spike|0| hw_gamma | 7.32 |\n",

+ "| diff2_acf10 | 0 |seasonal_strength|0.79| hw_alpha | 7.47 |\n",

+ "| diff1_acf1 | 0 |e_acf1|1.67| garch_acf | 7.53 |\n",

+ "| diff2x_pacf5 | 0 |arch_acf|2.18| entropy | 9.45 |\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "[](https://github.com/Nixtla/mlforecast/actions/workflows/ci.yaml)\n",

+ "[](https://pypi.org/project/mlforecast/)\n",

+ "[](https://pypi.org/project/mlforecast/)\n",

+ "[](https://anaconda.org/conda-forge/mlforecast)\n",

+ "[](https://github.com/Nixtla/mlforecast/blob/main/LICENSE)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# | hide\n",

+ "import nbdev\n",

+ "\n",

+ "nbdev.nbdev_export()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "\n",

+ "# tsfeatures\n",

+ "\n",

+ "Calculates various features from time series data. Python implementation of the R package _[tsfeatures](https://github.com/robjhyndman/tsfeatures)_.\n",

+ "\n",

+ "# Installation\n",

+ "\n",

+ "You can install the *released* version of `tsfeatures` from the [Python package index](pypi.org) with:\n",

+ "\n",

+ "``` python\n",

+ "pip install tsfeatures\n",

+ "```\n",

+ "\n",

+ "# Usage\n",

+ "\n",

+ "The `tsfeatures` main function calculates by default the features used by Montero-Manso, Talagala, Hyndman and Athanasopoulos in [their implementation of the FFORMA model](https://htmlpreview.github.io/?https://github.com/robjhyndman/M4metalearning/blob/master/docs/M4_methodology.html#features).\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import tsfeatures\n",

+ "```\n",

+ "\n",

+ "This function receives a panel pandas df with columns `unique_id`, `ds`, `y` and optionally the frequency of the data.\n",

+ "\n",

+ " \n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, freq=7)\n",

+ "```\n",

+ "\n",

+ "By default (`freq=None`) the function will try to infer the frequency of each time series (using `infer_freq` from `pandas` on the `ds` column) and assign a seasonal period according to the built-in dictionary `FREQS`:\n",

+ "\n",

+ "```python\n",

+ "FREQS = {'H': 24, 'D': 1,\n",

+ " 'M': 12, 'Q': 4,\n",

+ " 'W':1, 'Y': 1}\n",

+ "```\n",

+ "\n",

+ "You can use your own dictionary using the `dict_freqs` argument:\n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, dict_freqs={'D': 7, 'W': 52})\n",

+ "```\n",

+ "\n",

+ "## List of available features\n",

+ "\n",

+ "| Features |||\n",

+ "|:--------|:------|:-------------|\n",

+ "|acf_features|heterogeneity|series_length|\n",

+ "|arch_stat|holt_parameters|sparsity|\n",

+ "|count_entropy|hurst|stability|\n",

+ "|crossing_points|hw_parameters|stl_features|\n",

+ "|entropy|intervals|unitroot_kpss|\n",

+ "|flat_spots|lumpiness|unitroot_pp|\n",

+ "|frequency|nonlinearity||\n",

+ "|guerrero|pacf_features||\n",

+ "\n",

+ "See the docs for a description of the features. To use a particular feature included in the package you need to import it:\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import acf_features\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features])\n",

+ "```\n",

+ "\n",

+ "You can also define your own function and use it together with the included features:\n",

+ "\n",

+ "```python\n",

+ "def number_zeros(x, freq):\n",

+ "\n",

+ " number = (x == 0).sum()\n",

+ " return {'number_zeros': number}\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features, number_zeros])\n",

+ "```\n",

+ "\n",

+ "`tsfeatures` can handle functions that receives a numpy array `x` and a frequency `freq` (this parameter is needed even if you don't use it) and returns a dictionary with the feature name as a key and its value.\n",

+ "\n",

+ "## R implementation\n",

+ "\n",

+ "You can use this package to call `tsfeatures` from R inside python (you need to have installed R, the packages `forecast` and `tsfeatures`; also the python package `rpy2`):\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures.tsfeatures_r import tsfeatures_r\n",

+ "\n",

+ "tsfeatures_r(panel, freq=7, features=[\"acf_features\"])\n",

+ "```\n",

+ "\n",

+ "Observe that this function receives a list of strings instead of a list of functions.\n",

+ "\n",

+ "## Comparison with the R implementation (sum of absolute differences)\n",

+ "\n",

+ "### Non-seasonal data (100 Daily M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:----------------|-------:|:----------------|-------:|:----------------|-------:|:----------------|-------:|\n",

+ "| e_acf10 | 0 | e_acf1 | 0 | diff2_acf1 | 0 | alpha | 3.2 |\n",

+ "| seasonal_period | 0 | spike | 0 | diff1_acf10 | 0 | arch_acf | 3.3 |\n",

+ "| nperiods | 0 | curvature | 0 | x_acf1 | 0 | beta | 4.04 |\n",

+ "| linearity | 0 | crossing_points | 0 | nonlinearity | 0 | garch_r2 | 4.74 |\n",

+ "| hw_gamma | 0 | lumpiness | 0 | diff2x_pacf5 | 0 | hurst | 5.45 |\n",

+ "| hw_beta | 0 | diff1x_pacf5 | 0 | unitroot_kpss | 0 | garch_acf | 5.53 |\n",

+ "| hw_alpha | 0 | diff1_acf10 | 0 | x_pacf5 | 0 | entropy | 11.65 |\n",

+ "| trend | 0 | arch_lm | 0 | x_acf10 | 0 |\n",

+ "| flat_spots | 0 | diff1_acf1 | 0 | unitroot_pp | 0 |\n",

+ "| series_length | 0 | stability | 0 | arch_r2 | 1.37 |\n",

+ "\n",

+ "To replicate this results use:\n",

+ "\n",

+ "``` console\n",

+ "python -m tsfeatures.compare_with_r --results_directory /some/path\n",

+ " --dataset_name Daily --num_obs 100\n",

+ "```\n",

+ "\n",

+ "### Sesonal data (100 Hourly M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:------------------|-------:|:-------------|-----:|:----------|--------:|:-----------|--------:|\n",

+ "| series_length | 0 |seas_acf1 | 0 | trend | 2.28 | hurst | 26.02 |\n",

+ "| flat_spots | 0 |x_acf1|0| arch_r2 | 2.29 | hw_beta | 32.39 |\n",

+ "| nperiods | 0 |unitroot_kpss|0| alpha | 2.52 | trough | 35 |\n",

+ "| crossing_points | 0 |nonlinearity|0| beta | 3.67 | peak | 69 |\n",

+ "| seasonal_period | 0 |diff1_acf10|0| linearity | 3.97 |\n",

+ "| lumpiness | 0 |x_acf10|0| curvature | 4.8 |\n",

+ "| stability | 0 |seas_pacf|0| e_acf10 | 7.05 |\n",

+ "| arch_lm | 0 |unitroot_pp|0| garch_r2 | 7.32 |\n",

+ "| diff2_acf1 | 0 |spike|0| hw_gamma | 7.32 |\n",

+ "| diff2_acf10 | 0 |seasonal_strength|0.79| hw_alpha | 7.47 |\n",

+ "| diff1_acf1 | 0 |e_acf1|1.67| garch_acf | 7.53 |\n",

+ "| diff2x_pacf5 | 0 |arch_acf|2.18| entropy | 9.45 |\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "[](https://github.com/Nixtla/mlforecast/actions/workflows/ci.yaml)\n",

+ "[](https://pypi.org/project/mlforecast/)\n",

+ "[](https://pypi.org/project/mlforecast/)\n",

+ "[](https://anaconda.org/conda-forge/mlforecast)\n",

+ "[](https://github.com/Nixtla/mlforecast/blob/main/LICENSE)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# | hide\n",

+ "import nbdev\n",

+ "\n",

+ "nbdev.nbdev_export()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "\n",

+ "# tsfeatures\n",

+ "\n",

+ "Calculates various features from time series data. Python implementation of the R package _[tsfeatures](https://github.com/robjhyndman/tsfeatures)_.\n",

+ "\n",

+ "# Installation\n",

+ "\n",

+ "You can install the *released* version of `tsfeatures` from the [Python package index](pypi.org) with:\n",

+ "\n",

+ "``` python\n",

+ "pip install tsfeatures\n",

+ "```\n",

+ "\n",

+ "# Usage\n",

+ "\n",

+ "The `tsfeatures` main function calculates by default the features used by Montero-Manso, Talagala, Hyndman and Athanasopoulos in [their implementation of the FFORMA model](https://htmlpreview.github.io/?https://github.com/robjhyndman/M4metalearning/blob/master/docs/M4_methodology.html#features).\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import tsfeatures\n",

+ "```\n",

+ "\n",

+ "This function receives a panel pandas df with columns `unique_id`, `ds`, `y` and optionally the frequency of the data.\n",

+ "\n",

+ " \n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, freq=7)\n",

+ "```\n",

+ "\n",

+ "By default (`freq=None`) the function will try to infer the frequency of each time series (using `infer_freq` from `pandas` on the `ds` column) and assign a seasonal period according to the built-in dictionary `FREQS`:\n",

+ "\n",

+ "```python\n",

+ "FREQS = {'H': 24, 'D': 1,\n",

+ " 'M': 12, 'Q': 4,\n",

+ " 'W':1, 'Y': 1}\n",

+ "```\n",

+ "\n",

+ "You can use your own dictionary using the `dict_freqs` argument:\n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, dict_freqs={'D': 7, 'W': 52})\n",

+ "```\n",

+ "\n",

+ "## List of available features\n",

+ "\n",

+ "| Features |||\n",

+ "|:--------|:------|:-------------|\n",

+ "|acf_features|heterogeneity|series_length|\n",

+ "|arch_stat|holt_parameters|sparsity|\n",

+ "|count_entropy|hurst|stability|\n",

+ "|crossing_points|hw_parameters|stl_features|\n",

+ "|entropy|intervals|unitroot_kpss|\n",

+ "|flat_spots|lumpiness|unitroot_pp|\n",

+ "|frequency|nonlinearity||\n",

+ "|guerrero|pacf_features||\n",

+ "\n",

+ "See the docs for a description of the features. To use a particular feature included in the package you need to import it:\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import acf_features\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features])\n",

+ "```\n",

+ "\n",

+ "You can also define your own function and use it together with the included features:\n",

+ "\n",

+ "```python\n",

+ "def number_zeros(x, freq):\n",

+ "\n",

+ " number = (x == 0).sum()\n",

+ " return {'number_zeros': number}\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features, number_zeros])\n",

+ "```\n",

+ "\n",

+ "`tsfeatures` can handle functions that receives a numpy array `x` and a frequency `freq` (this parameter is needed even if you don't use it) and returns a dictionary with the feature name as a key and its value.\n",

+ "\n",

+ "## R implementation\n",

+ "\n",

+ "You can use this package to call `tsfeatures` from R inside python (you need to have installed R, the packages `forecast` and `tsfeatures`; also the python package `rpy2`):\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures.tsfeatures_r import tsfeatures_r\n",

+ "\n",

+ "tsfeatures_r(panel, freq=7, features=[\"acf_features\"])\n",

+ "```\n",

+ "\n",

+ "Observe that this function receives a list of strings instead of a list of functions.\n",

+ "\n",

+ "## Comparison with the R implementation (sum of absolute differences)\n",

+ "\n",

+ "### Non-seasonal data (100 Daily M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:----------------|-------:|:----------------|-------:|:----------------|-------:|:----------------|-------:|\n",

+ "| e_acf10 | 0 | e_acf1 | 0 | diff2_acf1 | 0 | alpha | 3.2 |\n",

+ "| seasonal_period | 0 | spike | 0 | diff1_acf10 | 0 | arch_acf | 3.3 |\n",

+ "| nperiods | 0 | curvature | 0 | x_acf1 | 0 | beta | 4.04 |\n",

+ "| linearity | 0 | crossing_points | 0 | nonlinearity | 0 | garch_r2 | 4.74 |\n",

+ "| hw_gamma | 0 | lumpiness | 0 | diff2x_pacf5 | 0 | hurst | 5.45 |\n",

+ "| hw_beta | 0 | diff1x_pacf5 | 0 | unitroot_kpss | 0 | garch_acf | 5.53 |\n",

+ "| hw_alpha | 0 | diff1_acf10 | 0 | x_pacf5 | 0 | entropy | 11.65 |\n",

+ "| trend | 0 | arch_lm | 0 | x_acf10 | 0 |\n",

+ "| flat_spots | 0 | diff1_acf1 | 0 | unitroot_pp | 0 |\n",

+ "| series_length | 0 | stability | 0 | arch_r2 | 1.37 |\n",

+ "\n",

+ "To replicate this results use:\n",

+ "\n",

+ "``` console\n",

+ "python -m tsfeatures.compare_with_r --results_directory /some/path\n",

+ " --dataset_name Daily --num_obs 100\n",

+ "```\n",

+ "\n",

+ "### Sesonal data (100 Hourly M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:------------------|-------:|:-------------|-----:|:----------|--------:|:-----------|--------:|\n",

+ "| series_length | 0 |seas_acf1 | 0 | trend | 2.28 | hurst | 26.02 |\n",

+ "| flat_spots | 0 |x_acf1|0| arch_r2 | 2.29 | hw_beta | 32.39 |\n",

+ "| nperiods | 0 |unitroot_kpss|0| alpha | 2.52 | trough | 35 |\n",

+ "| crossing_points | 0 |nonlinearity|0| beta | 3.67 | peak | 69 |\n",

+ "| seasonal_period | 0 |diff1_acf10|0| linearity | 3.97 |\n",

+ "| lumpiness | 0 |x_acf10|0| curvature | 4.8 |\n",

+ "| stability | 0 |seas_pacf|0| e_acf10 | 7.05 |\n",

+ "| arch_lm | 0 |unitroot_pp|0| garch_r2 | 7.32 |\n",

+ "| diff2_acf1 | 0 |spike|0| hw_gamma | 7.32 |\n",

+ "| diff2_acf10 | 0 |seasonal_strength|0.79| hw_alpha | 7.47 |\n",

+ "| diff1_acf1 | 0 |e_acf1|1.67| garch_acf | 7.53 |\n",

+ "| diff2x_pacf5 | 0 |arch_acf|2.18| entropy | 9.45 |\n",

+ "\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "[](https://github.com/FedericoGarza/tsfeatures/tree/master)\n",

+ "[](https://pypi.python.org/pypi/tsfeatures/)\n",

+ "[](https://pepy.tech/project/tsfeatures)\n",

+ "[](https://www.python.org/downloads/release/python-370+/)\n",

+ "[](https://github.com/FedericoGarza/tsfeatures/blob/master/LICENSE)\n",

+ "\n",

+ "# tsfeatures\n",

+ "\n",

+ "Calculates various features from time series data. Python implementation of the R package _[tsfeatures](https://github.com/robjhyndman/tsfeatures)_.\n",

+ "\n",

+ "# Installation\n",

+ "\n",

+ "You can install the *released* version of `tsfeatures` from the [Python package index](pypi.org) with:\n",

+ "\n",

+ "``` python\n",

+ "pip install tsfeatures\n",

+ "```\n",

+ "\n",

+ "# Usage\n",

+ "\n",

+ "The `tsfeatures` main function calculates by default the features used by Montero-Manso, Talagala, Hyndman and Athanasopoulos in [their implementation of the FFORMA model](https://htmlpreview.github.io/?https://github.com/robjhyndman/M4metalearning/blob/master/docs/M4_methodology.html#features).\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import tsfeatures\n",

+ "```\n",

+ "\n",

+ "This function receives a panel pandas df with columns `unique_id`, `ds`, `y` and optionally the frequency of the data.\n",

+ "\n",

+ " \n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, freq=7)\n",

+ "```\n",

+ "\n",

+ "By default (`freq=None`) the function will try to infer the frequency of each time series (using `infer_freq` from `pandas` on the `ds` column) and assign a seasonal period according to the built-in dictionary `FREQS`:\n",

+ "\n",

+ "```python\n",

+ "FREQS = {'H': 24, 'D': 1,\n",

+ " 'M': 12, 'Q': 4,\n",

+ " 'W':1, 'Y': 1}\n",

+ "```\n",

+ "\n",

+ "You can use your own dictionary using the `dict_freqs` argument:\n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, dict_freqs={'D': 7, 'W': 52})\n",

+ "```\n",

+ "\n",

+ "## List of available features\n",

+ "\n",

+ "| Features |||\n",

+ "|:--------|:------|:-------------|\n",

+ "|acf_features|heterogeneity|series_length|\n",

+ "|arch_stat|holt_parameters|sparsity|\n",

+ "|count_entropy|hurst|stability|\n",

+ "|crossing_points|hw_parameters|stl_features|\n",

+ "|entropy|intervals|unitroot_kpss|\n",

+ "|flat_spots|lumpiness|unitroot_pp|\n",

+ "|frequency|nonlinearity||\n",

+ "|guerrero|pacf_features||\n",

+ "\n",

+ "See the docs for a description of the features. To use a particular feature included in the package you need to import it:\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import acf_features\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features])\n",

+ "```\n",

+ "\n",

+ "You can also define your own function and use it together with the included features:\n",

+ "\n",

+ "```python\n",

+ "def number_zeros(x, freq):\n",

+ "\n",

+ " number = (x == 0).sum()\n",

+ " return {'number_zeros': number}\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features, number_zeros])\n",

+ "```\n",

+ "\n",

+ "`tsfeatures` can handle functions that receives a numpy array `x` and a frequency `freq` (this parameter is needed even if you don't use it) and returns a dictionary with the feature name as a key and its value.\n",

+ "\n",

+ "## R implementation\n",

+ "\n",

+ "You can use this package to call the R implementation of `tsfeatures` from Python. This requires R with the packages `forecast` and `tsfeatures` installed, plus the Python package `rpy2`:\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures.tsfeatures_r import tsfeatures_r\n",

+ "\n",

+ "tsfeatures_r(panel, freq=7, features=[\"acf_features\"])\n",

+ "```\n",

+ "\n",

+ "Observe that this function receives a list of strings instead of a list of functions.\n",

+ "\n",

+ "## Comparison with the R implementation (sum of absolute differences)\n",

+ "\n",

+ "### Non-seasonal data (100 Daily M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:----------------|-------:|:----------------|-------:|:----------------|-------:|:----------------|-------:|\n",

+ "| e_acf10 | 0 | e_acf1 | 0 | diff2_acf1 | 0 | alpha | 3.2 |\n",

+ "| seasonal_period | 0 | spike | 0 | diff1_acf10 | 0 | arch_acf | 3.3 |\n",

+ "| nperiods | 0 | curvature | 0 | x_acf1 | 0 | beta | 4.04 |\n",

+ "| linearity | 0 | crossing_points | 0 | nonlinearity | 0 | garch_r2 | 4.74 |\n",

+ "| hw_gamma | 0 | lumpiness | 0 | diff2x_pacf5 | 0 | hurst | 5.45 |\n",

+ "| hw_beta | 0 | diff1x_pacf5 | 0 | unitroot_kpss | 0 | garch_acf | 5.53 |\n",

+ "| hw_alpha | 0 | diff1_acf10 | 0 | x_pacf5 | 0 | entropy | 11.65 |\n",

+ "| trend | 0 | arch_lm | 0 | x_acf10 | 0 |\n",

+ "| flat_spots | 0 | diff1_acf1 | 0 | unitroot_pp | 0 |\n",

+ "| series_length | 0 | stability | 0 | arch_r2 | 1.37 |\n",

+ "\n",

+ "To replicate these results, use:\n",

+ "\n",

+ "``` console\n",

+ "python -m tsfeatures.compare_with_r --results_directory /some/path \\\n",

+ " --dataset_name Daily --num_obs 100\n",

+ "```\n",

+ "\n",

+ "### Seasonal data (100 Hourly M4 time series)\n",

+ "\n",

+ "| feature | diff | feature | diff | feature | diff | feature | diff |\n",

+ "|:------------------|-------:|:-------------|-----:|:----------|--------:|:-----------|--------:|\n",

+ "| series_length | 0 |seas_acf1 | 0 | trend | 2.28 | hurst | 26.02 |\n",

+ "| flat_spots | 0 |x_acf1|0| arch_r2 | 2.29 | hw_beta | 32.39 |\n",

+ "| nperiods | 0 |unitroot_kpss|0| alpha | 2.52 | trough | 35 |\n",

+ "| crossing_points | 0 |nonlinearity|0| beta | 3.67 | peak | 69 |\n",

+ "| seasonal_period | 0 |diff1_acf10|0| linearity | 3.97 |\n",

+ "| lumpiness | 0 |x_acf10|0| curvature | 4.8 |\n",

+ "| stability | 0 |seas_pacf|0| e_acf10 | 7.05 |\n",

+ "| arch_lm | 0 |unitroot_pp|0| garch_r2 | 7.32 |\n",

+ "| diff2_acf1 | 0 |spike|0| hw_gamma | 7.32 |\n",

+ "| diff2_acf10 | 0 |seasonal_strength|0.79| hw_alpha | 7.47 |\n",

+ "| diff1_acf1 | 0 |e_acf1|1.67| garch_acf | 7.53 |\n",

+ "| diff2x_pacf5 | 0 |arch_acf|2.18| entropy | 9.45 |\n",

+ "\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "[](https://github.com/FedericoGarza/tsfeatures/tree/master)\n",

+ "[](https://pypi.python.org/pypi/tsfeatures/)\n",

+ "[](https://pepy.tech/project/tsfeatures)\n",

+ "[](https://www.python.org/downloads/release/python-370+/)\n",

+ "[](https://github.com/FedericoGarza/tsfeatures/blob/master/LICENSE)\n",

+ "\n",

+ "# tsfeatures\n",

+ "\n",

+ "Calculates various features from time series data. Python implementation of the R package _[tsfeatures](https://github.com/robjhyndman/tsfeatures)_.\n",

+ "\n",

+ "# Installation\n",

+ "\n",

+ "You can install the *released* version of `tsfeatures` from the [Python package index](https://pypi.org) with:\n",

+ "\n",

+ "``` console\n",

+ "pip install tsfeatures\n",

+ "```\n",

+ "\n",

+ "# Usage\n",

+ "\n",

+ "The `tsfeatures` main function calculates by default the features used by Montero-Manso, Talagala, Hyndman and Athanasopoulos in [their implementation of the FFORMA model](https://htmlpreview.github.io/?https://github.com/robjhyndman/M4metalearning/blob/master/docs/M4_methodology.html#features).\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import tsfeatures\n",

+ "```\n",

+ "\n",

+ "This function receives a panel pandas df with columns `unique_id`, `ds`, `y` and optionally the frequency of the data.\n",

+ "\n",

+ " \n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, freq=7)\n",

+ "```\n",

+ "\n",

+ "By default (`freq=None`) the function will try to infer the frequency of each time series (using `infer_freq` from `pandas` on the `ds` column) and assign a seasonal period according to the built-in dictionary `FREQS`:\n",

+ "\n",

+ "```python\n",

+ "FREQS = {'H': 24, 'D': 1,\n",

+ " 'M': 12, 'Q': 4,\n",

+ " 'W':1, 'Y': 1}\n",

+ "```\n",

+ "\n",

+ "You can use your own dictionary using the `dict_freqs` argument:\n",

+ "\n",

+ "```python\n",

+ "tsfeatures(panel, dict_freqs={'D': 7, 'W': 52})\n",

+ "```\n",

+ "\n",

+ "## List of available features\n",

+ "\n",

+ "| Features |||\n",

+ "|:--------|:------|:-------------|\n",

+ "|acf_features|heterogeneity|series_length|\n",

+ "|arch_stat|holt_parameters|sparsity|\n",

+ "|count_entropy|hurst|stability|\n",

+ "|crossing_points|hw_parameters|stl_features|\n",

+ "|entropy|intervals|unitroot_kpss|\n",

+ "|flat_spots|lumpiness|unitroot_pp|\n",

+ "|frequency|nonlinearity||\n",

+ "|guerrero|pacf_features||\n",

+ "\n",

+ "See the docs for a description of the features. To use a particular feature included in the package you need to import it:\n",

+ "\n",

+ "```python\n",

+ "from tsfeatures import acf_features\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features])\n",

+ "```\n",

+ "\n",

+ "You can also define your own function and use it together with the included features:\n",

+ "\n",

+ "```python\n",

+ "def number_zeros(x, freq):\n",

+ "\n",

+ " number = (x == 0).sum()\n",

+ " return {'number_zeros': number}\n",

+ "\n",

+ "tsfeatures(panel, freq=7, features=[acf_features, number_zeros])\n",

+ "```\n",

+ "\n",

+ "`tsfeatures` can handle functions that receive a numpy array `x` and a frequency `freq` (this parameter is required even if you don't use it) and return a dictionary mapping each feature name to its value.\n",

+ "\n",

+ " \n",

+ "\n",

+ "# Authors\n",

+ "\n",

+ "* **Federico Garza** - [FedericoGarza](https://github.com/FedericoGarza)\n",

+ "* **Kin Gutierrez** - [kdgutier](https://github.com/kdgutier)\n",

+ "* **Cristian Challu** - [cristianchallu](https://github.com/cristianchallu)\n",

+ "* **Jose Moralez** - [jose-moralez](https://github.com/jose-moralez)\n",

+ "* **Ricardo Olivares** - [rolivaresar](https://github.com/rolivaresar)\n",

+ "* **Max Mergenthaler** - [mergenthaler](https://github.com/mergenthaler)\n"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "python3",

+ "language": "python",

+ "name": "python3"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 4

+}
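
The README cells above state that `tsfeatures` accepts any function that takes the series `x` and a frequency `freq` and returns a dictionary. A minimal, dependency-free sketch of that contract follows. It is an illustration under assumptions: `apply_features` and `series_range` are hypothetical names, not part of the package, and the series is a plain list rather than a numpy array.

```python
from collections import ChainMap


def number_zeros(x, freq):
    """Count the zeros in the series; `freq` is accepted but unused."""
    return {"number_zeros": sum(1 for value in x if value == 0)}


def series_range(x, freq):
    """Hypothetical second feature: spread between the max and the min."""
    return {"series_range": max(x) - min(x)}


def apply_features(x, freq, features):
    """Hypothetical helper: call each feature function and merge the dicts."""
    return dict(ChainMap(*(func(x, freq) for func in features)))


result = apply_features([0, 3, 0, 5], freq=7, features=[number_zeros, series_range])
print(result)
```

Because every feature returns a dictionary, adding a feature only means appending another function to the list, which is why `freq` must stay in the signature even when a feature ignores it.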

diff --git a/nbs/m4_data.ipynb b/nbs/m4_data.ipynb

new file mode 100644

index 0000000..03089b2

--- /dev/null

+++ b/nbs/m4_data.ipynb

@@ -0,0 +1,292 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "# features\n",

+ "\n",

+ "> Fill in a module description here\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |default_exp m4_data"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "The autoreload extension is already loaded. To reload it, use:\n",

+ " %reload_ext autoreload\n"

+ ]

+ }

+ ],

+ "source": [

+ "%load_ext autoreload\n",

+ "%autoreload 2"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "import os\n",

+ "import urllib.request\n",

+ "\n",

+ "import pandas as pd"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "\n",

+ "seas_dict = {\n",

+ " \"Hourly\": {\"seasonality\": 24, \"input_size\": 24, \"output_size\": 48, \"freq\": \"H\"},\n",

+ " \"Daily\": {\"seasonality\": 7, \"input_size\": 7, \"output_size\": 14, \"freq\": \"D\"},\n",

+ " \"Weekly\": {\"seasonality\": 52, \"input_size\": 52, \"output_size\": 13, \"freq\": \"W\"},\n",

+ " \"Monthly\": {\"seasonality\": 12, \"input_size\": 12, \"output_size\": 18, \"freq\": \"M\"},\n",

+ " \"Quarterly\": {\"seasonality\": 4, \"input_size\": 4, \"output_size\": 8, \"freq\": \"Q\"},\n",

+ " \"Yearly\": {\"seasonality\": 1, \"input_size\": 4, \"output_size\": 6, \"freq\": \"D\"},\n",

+ "}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "\n",

+ "SOURCE_URL = (\n",

+ " \"https://raw.githubusercontent.com/Mcompetitions/M4-methods/master/Dataset/\"\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "\n",

+ "\n",

+ "def maybe_download(filename, directory):\n",

+ " \"\"\"Download the data from M4's website, unless it's already here.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " filename: str\n",

+ " Filename of M4 data relative to the dataset root, in the form Type/Frequency-type.csv. Example: Train/Daily-train.csv\n",

+ " directory: str\n",

+ " Custom directory where data will be downloaded.\n",

+ " \"\"\"\n",

+ " data_directory = directory + \"/m4\"\n",

+ " train_directory = data_directory + \"/Train/\"\n",

+ " test_directory = data_directory + \"/Test/\"\n",

+ "\n",

+ " os.makedirs(data_directory, exist_ok=True)\n",

+ " os.makedirs(train_directory, exist_ok=True)\n",

+ " os.makedirs(test_directory, exist_ok=True)\n",

+ "\n",

+ " filepath = os.path.join(data_directory, filename)\n",

+ " if not os.path.exists(filepath):\n",

+ " filepath, _ = urllib.request.urlretrieve(SOURCE_URL + filename, filepath)\n",

+ "\n",

+ " size = os.path.getsize(filepath)\n",

+ " print(\"Successfully downloaded\", filename, size, \"bytes.\")\n",

+ "\n",

+ " return filepath"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "\n",

+ "\n",

+ "def m4_parser(dataset_name, directory, num_obs=1000000):\n",

+ " \"\"\"Transform M4 data into a panel.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " dataset_name: str\n",

+ " Frequency of the data. Example: 'Yearly'.\n",

+ " directory: str\n",

+ " Custom directory where data will be saved.\n",

+ " num_obs: int\n",

+ " Number of time series to return.\n",

+ " \"\"\"\n",

+ " data_directory = directory + \"/m4\"\n",

+ " train_directory = data_directory + \"/Train/\"\n",

+ " test_directory = data_directory + \"/Test/\"\n",

+ " freq = seas_dict[dataset_name][\"freq\"]\n",

+ "\n",

+ " m4_info = pd.read_csv(data_directory + \"/M4-info.csv\", usecols=[\"M4id\", \"category\"])\n",

+ " m4_info = m4_info[m4_info[\"M4id\"].str.startswith(dataset_name[0])].reset_index(\n",

+ " drop=True\n",

+ " )\n",

+ "\n",

+ " # Train data\n",

+ " train_path = \"{}{}-train.csv\".format(train_directory, dataset_name)\n",

+ "\n",

+ " train_df = pd.read_csv(train_path, nrows=num_obs)\n",

+ " train_df = train_df.rename(columns={\"V1\": \"unique_id\"})\n",

+ "\n",

+ " train_df = pd.wide_to_long(\n",

+ " train_df, stubnames=[\"V\"], i=\"unique_id\", j=\"ds\"\n",

+ " ).reset_index()\n",

+ " train_df = train_df.rename(columns={\"V\": \"y\"})\n",

+ " train_df = train_df.dropna()\n",

+ " train_df[\"split\"] = \"train\"\n",

+ " train_df[\"ds\"] = train_df[\"ds\"] - 1\n",

+ " # Get len of series per unique_id\n",

+ " len_series = train_df.groupby(\"unique_id\").agg({\"ds\": \"max\"}).reset_index()\n",

+ " len_series.columns = [\"unique_id\", \"len_serie\"]\n",

+ "\n",

+ " # Test data\n",

+ " test_path = \"{}{}-test.csv\".format(test_directory, dataset_name)\n",

+ "\n",

+ " test_df = pd.read_csv(test_path, nrows=num_obs)\n",

+ " test_df = test_df.rename(columns={\"V1\": \"unique_id\"})\n",

+ "\n",

+ " test_df = pd.wide_to_long(\n",

+ " test_df, stubnames=[\"V\"], i=\"unique_id\", j=\"ds\"\n",

+ " ).reset_index()\n",

+ " test_df = test_df.rename(columns={\"V\": \"y\"})\n",

+ " test_df = test_df.dropna()\n",

+ " test_df[\"split\"] = \"test\"\n",

+ " test_df = test_df.merge(len_series, on=\"unique_id\")\n",

+ " test_df[\"ds\"] = test_df[\"ds\"] + test_df[\"len_serie\"] - 1\n",

+ " test_df = test_df[[\"unique_id\", \"ds\", \"y\", \"split\"]]\n",

+ "\n",

+ " df = pd.concat((train_df, test_df))\n",

+ " df = df.sort_values(by=[\"unique_id\", \"ds\"]).reset_index(drop=True)\n",

+ "\n",

+ " # Create column with dates with freq of dataset\n",

+ " len_series = df.groupby(\"unique_id\").agg({\"ds\": \"max\"}).reset_index()\n",

+ " dates = []\n",

+ " for i in range(len(len_series)):\n",

+ " len_serie = len_series.iloc[i, 1]\n",

+ " ranges = pd.date_range(start=\"1970/01/01\", periods=len_serie, freq=freq)\n",

+ " dates += list(ranges)\n",

+ " df.loc[:, \"ds\"] = dates\n",

+ "\n",

+ " df = df.merge(m4_info, left_on=[\"unique_id\"], right_on=[\"M4id\"])\n",

+ " df.drop(columns=[\"M4id\"], inplace=True)\n",

+ " df = df.rename(columns={\"category\": \"x\"})\n",

+ "\n",

+ " X_train_df = df[df[\"split\"] == \"train\"].filter(items=[\"unique_id\", \"ds\", \"x\"])\n",

+ " y_train_df = df[df[\"split\"] == \"train\"].filter(items=[\"unique_id\", \"ds\", \"y\"])\n",

+ " X_test_df = df[df[\"split\"] == \"test\"].filter(items=[\"unique_id\", \"ds\", \"x\"])\n",

+ " y_test_df = df[df[\"split\"] == \"test\"].filter(items=[\"unique_id\", \"ds\", \"y\"])\n",

+ "\n",

+ " X_train_df = X_train_df.reset_index(drop=True)\n",

+ " y_train_df = y_train_df.reset_index(drop=True)\n",

+ " X_test_df = X_test_df.reset_index(drop=True)\n",

+ " y_test_df = y_test_df.reset_index(drop=True)\n",

+ "\n",

+ " return X_train_df, y_train_df, X_test_df, y_test_df"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "\n",

+ "\n",

+ "def prepare_m4_data(dataset_name, directory, num_obs):\n",

+ " \"\"\"Pipeline that downloads the M4 time series (if needed) and transforms\n",

+ " them into train and test panels.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " dataset_name: str\n",

+ " Frequency of the data. Example: 'Yearly'.\n",

+ " directory: str\n",

+ " Custom directory where data will be saved.\n",

+ " num_obs: int\n",

+ " Number of time series to return.\n",

+ " \"\"\"\n",

+ " m4info_filename = maybe_download(\"M4-info.csv\", directory)\n",

+ "\n",

+ " dailytrain_filename = maybe_download(\"Train/Daily-train.csv\", directory)\n",

+ " hourlytrain_filename = maybe_download(\"Train/Hourly-train.csv\", directory)\n",

+ " monthlytrain_filename = maybe_download(\"Train/Monthly-train.csv\", directory)\n",

+ " quarterlytrain_filename = maybe_download(\"Train/Quarterly-train.csv\", directory)\n",

+ " weeklytrain_filename = maybe_download(\"Train/Weekly-train.csv\", directory)\n",

+ " yearlytrain_filename = maybe_download(\"Train/Yearly-train.csv\", directory)\n",

+ "\n",

+ " dailytest_filename = maybe_download(\"Test/Daily-test.csv\", directory)\n",

+ " hourlytest_filename = maybe_download(\"Test/Hourly-test.csv\", directory)\n",

+ " monthlytest_filename = maybe_download(\"Test/Monthly-test.csv\", directory)\n",

+ " quarterlytest_filename = maybe_download(\"Test/Quarterly-test.csv\", directory)\n",

+ " weeklytest_filename = maybe_download(\"Test/Weekly-test.csv\", directory)\n",

+ " yearlytest_filename = maybe_download(\"Test/Yearly-test.csv\", directory)\n",

+ " print(\"\\n\")\n",

+ "\n",

+ " X_train_df, y_train_df, X_test_df, y_test_df = m4_parser(\n",

+ " dataset_name, directory, num_obs\n",

+ " )\n",

+ "\n",

+ " return X_train_df, y_train_df, X_test_df, y_test_df"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |hide\n",

+ "from nbdev.showdoc import *"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |hide\n",

+ "import nbdev\n",

+ "\n",

+ "nbdev.nbdev_export()"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "python3",

+ "language": "python",

+ "name": "python3"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 4

+}
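
`m4_parser` above reshapes the wide M4 files (one row per series, columns `V1`, `V2`, ...) into a long panel and offsets the test timestamps so that they continue after the training data. The sketch below mirrors that logic with plain Python dictionaries instead of `pd.wide_to_long`. The sample rows and the helper name `wide_to_long_rows` are made up for illustration and are not part of the module.

```python
def wide_to_long_rows(rows, offset_by_id=None):
    """Turn wide rows {'V1': id, 'V2': y1, 'V3': y2, ...} into (id, ds, y) tuples.

    Missing values (None) are dropped, similar to `dropna` in the parser.
    `ds` starts at 1 for training data; for test data it is shifted by the
    length of the matching training series.
    """
    offset_by_id = offset_by_id or {}
    long_rows = []
    for row in rows:
        uid = row["V1"]
        for col, y in row.items():
            if col == "V1" or y is None:
                continue
            # Column 'V2' holds the first observation, so subtract 1.
            ds = int(col[1:]) - 1 + offset_by_id.get(uid, 0)
            long_rows.append((uid, ds, y))
    return long_rows


train = [
    {"V1": "D1", "V2": 10.0, "V3": 11.0, "V4": 12.0},
    {"V1": "D2", "V2": 5.0, "V3": 6.0, "V4": None},
]
test = [{"V1": "D1", "V2": 13.0}, {"V1": "D2", "V2": 7.0}]

train_long = wide_to_long_rows(train)

# Length of each training series = largest ds seen for that id.
lengths = {}
for uid, ds, _ in train_long:
    lengths[uid] = max(lengths.get(uid, 0), ds)

test_long = wide_to_long_rows(test, offset_by_id=lengths)
print(train_long)
print(test_long)
```

Shifting by the per-series training length is what lets series of different lengths share one test file while keeping each `ds` index contiguous.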

diff --git a/nbs/nbdev.yml b/nbs/nbdev.yml

new file mode 100644

index 0000000..9085f8f

--- /dev/null

+++ b/nbs/nbdev.yml

@@ -0,0 +1,9 @@

+project:

+ output-dir: _docs

+

+website:

+ title: "tsfeatures"

+ site-url: "https://Nixtla.github.io/tsfeatures/"

+ description: "Calculates various features from time series data. Python implementation of the R package tsfeatures."

+ repo-branch: main

+ repo-url: "https://github.com/Nixtla/tsfeatures"

diff --git a/nbs/styles.css b/nbs/styles.css

new file mode 100644

index 0000000..66ccc49

--- /dev/null

+++ b/nbs/styles.css

@@ -0,0 +1,37 @@

+.cell {

+ margin-bottom: 1rem;

+}

+

+.cell > .sourceCode {

+ margin-bottom: 0;

+}

+

+.cell-output > pre {

+ margin-bottom: 0;

+}

+

+.cell-output > pre, .cell-output > .sourceCode > pre, .cell-output-stdout > pre {

+ margin-left: 0.8rem;

+ margin-top: 0;

+ background: none;

+ border-left: 2px solid lightsalmon;

+ border-top-left-radius: 0;

+ border-top-right-radius: 0;

+}

+

+.cell-output > .sourceCode {

+ border: none;

+}

+

+.cell-output > .sourceCode {

+ background: none;

+ margin-top: 0;

+}

+

+div.description {

+ padding-left: 2px;

+ padding-top: 5px;

+ font-style: italic;

+ font-size: 135%;

+ opacity: 70%;

+}

diff --git a/nbs/tsfeatures.ipynb b/nbs/tsfeatures.ipynb

new file mode 100644

index 0000000..d4b3371

--- /dev/null

+++ b/nbs/tsfeatures.ipynb

@@ -0,0 +1,1753 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "# features\n",

+ "\n",

+ "> Fill in a module description here\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |default_exp tsfeatures"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "%load_ext autoreload\n",

+ "%autoreload 2"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "import os\n",

+ "import warnings\n",

+ "from collections import ChainMap\n",

+ "from functools import partial\n",

+ "from multiprocessing import Pool\n",

+ "from typing import Callable, List, Optional\n",

+ "\n",

+ "import pandas as pd"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "warnings.warn = lambda *a, **kw: False"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "os.environ[\"MKL_NUM_THREADS\"] = \"1\"\n",

+ "os.environ[\"NUMEXPR_NUM_THREADS\"] = \"1\"\n",

+ "os.environ[\"OMP_NUM_THREADS\"] = \"1\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "from itertools import groupby\n",

+ "from math import e # maybe change with numpy e\n",

+ "from typing import Dict\n",

+ "\n",

+ "import numpy as np\n",

+ "import pandas as pd\n",

+ "from antropy import spectral_entropy\n",

+ "from arch import arch_model\n",

+ "from scipy.optimize import minimize_scalar\n",

+ "from sklearn.linear_model import LinearRegression\n",

+ "from statsmodels.api import OLS, add_constant\n",

+ "from statsmodels.tsa.ar_model import AR\n",

+ "from statsmodels.tsa.holtwinters import ExponentialSmoothing\n",

+ "from statsmodels.tsa.seasonal import STL\n",

+ "from statsmodels.tsa.stattools import acf, kpss, pacf\n",

+ "from supersmoother import SuperSmoother\n",

+ "\n",

+ "from tsfeatures.utils import *"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from math import isclose\n",

+ "\n",

+ "from fastcore.test import *\n",

+ "\n",

+ "from tsfeatures.m4_data import *\n",

+ "from tsfeatures.utils import USAccDeaths, WWWusage"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def acf_features(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Calculates autocorrelation function features.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'x_acf1': First autocorrelation coefficient.\n",

+ " 'x_acf10': Sum of squares of first 10 autocorrelation coefficients.\n",

+ " 'diff1_acf1': First autocorrelation coefficient of differenced series.\n",

+ " 'diff1_acf10': Sum of squares of first 10 autocorrelation coefficients\n",

+ " of differenced series.\n",

+ " 'diff2_acf1': First autocorrelation coefficient of twice-differenced series.\n",

+ " 'diff2_acf10': Sum of squares of first 10 autocorrelation coefficients of\n",

+ " twice-differenced series.\n",

+ "\n",

+ " Only for seasonal data (freq > 1).\n",

+ " 'seas_acf1': Autocorrelation coefficient at the first seasonal lag.\n",

+ " \"\"\"\n",

+ " m = freq\n",

+ " size_x = len(x)\n",

+ "\n",

+ " acfx = acf(x, nlags=max(m, 10), fft=False)\n",

+ " if size_x > 10:\n",

+ " acfdiff1x = acf(np.diff(x, n=1), nlags=10, fft=False)\n",

+ " else:\n",

+ " acfdiff1x = [np.nan] * 2\n",

+ "\n",

+ " if size_x > 11:\n",

+ " acfdiff2x = acf(np.diff(x, n=2), nlags=10, fft=False)\n",

+ " else:\n",

+ " acfdiff2x = [np.nan] * 2\n",

+ " # first autocorrelation coefficient\n",

+ "\n",

+ " try:\n",

+ " acf_1 = acfx[1]\n",

+ " except:\n",

+ " acf_1 = np.nan\n",

+ "\n",

+ " # sum of squares of first 10 autocorrelation coefficients\n",

+ " sum_of_sq_acf10 = np.sum((acfx[1:11]) ** 2) if size_x > 10 else np.nan\n",

+ " # first autocorrelation coefficient of differenced series\n",

+ " diff1_acf1 = acfdiff1x[1]\n",

+ " # sum of squares of first 10 autocorrelation coefficients of differenced series\n",

+ " diff1_acf10 = np.sum((acfdiff1x[1:11]) ** 2) if size_x > 10 else np.nan\n",

+ " # first autocorrelation coefficient of twice-differenced series\n",

+ " diff2_acf1 = acfdiff2x[1]\n",

+ " # sum of squares of first 10 autocorrelation coefficients of twice-differenced series\n",

+ " diff2_acf10 = np.sum((acfdiff2x[1:11]) ** 2) if size_x > 11 else np.nan\n",

+ "\n",

+ " output = {\n",

+ " \"x_acf1\": acf_1,\n",

+ " \"x_acf10\": sum_of_sq_acf10,\n",

+ " \"diff1_acf1\": diff1_acf1,\n",

+ " \"diff1_acf10\": diff1_acf10,\n",

+ " \"diff2_acf1\": diff2_acf1,\n",

+ " \"diff2_acf10\": diff2_acf10,\n",

+ " }\n",

+ "\n",

+ " if m > 1:\n",

+ " output[\"seas_acf1\"] = acfx[m] if len(acfx) > m else np.nan\n",

+ "\n",

+ " return output"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def test_acf_features_seasonal():\n",

+ " z = acf_features(USAccDeaths, 12)\n",

+ " assert isclose(len(z), 7)\n",

+ " assert isclose(z[\"x_acf1\"], 0.70, abs_tol=0.01)\n",

+ " assert isclose(z[\"x_acf10\"], 1.20, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff1_acf1\"], 0.023, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff1_acf10\"], 0.27, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff2_acf1\"], -0.48, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff2_acf10\"], 0.74, abs_tol=0.01)\n",

+ " assert isclose(z[\"seas_acf1\"], 0.62, abs_tol=0.01)\n",

+ "\n",

+ "\n",

+ "test_acf_features_seasonal()\n",

+ "\n",

+ "\n",

+ "def test_acf_features_non_seasonal():\n",

+ " z = acf_features(WWWusage, 1)\n",

+ " assert isclose(len(z), 6)\n",

+ " assert isclose(z[\"x_acf1\"], 0.96, abs_tol=0.01)\n",

+ " assert isclose(z[\"x_acf10\"], 4.19, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff1_acf1\"], 0.79, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff1_acf10\"], 1.40, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff2_acf1\"], 0.17, abs_tol=0.01)\n",

+ " assert isclose(z[\"diff2_acf10\"], 0.33, abs_tol=0.01)\n",

+ "\n",

+ "\n",

+ "test_acf_features_non_seasonal()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def arch_stat(\n",

+ " x: np.array, freq: int = 1, lags: int = 12, demean: bool = True\n",

+ ") -> Dict[str, float]:\n",

+ " \"\"\"Arch model features.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'arch_lm': R^2 value of an autoregressive model of order lags applied to x**2.\n",

+ " \"\"\"\n",

+ " if len(x) <= lags + 1:\n",

+ " return {\"arch_lm\": np.nan}\n",

+ " if demean:\n",

+ " x = x - np.mean(x)\n",

+ "\n",

+ " size_x = len(x)\n",

+ " mat = embed(x**2, lags + 1)\n",

+ " X = mat[:, 1:]\n",

+ " y = np.vstack(mat[:, 0])\n",

+ "\n",

+ " try:\n",

+ " r_squared = LinearRegression().fit(X, y).score(X, y)\n",

+ " except:\n",

+ " r_squared = np.nan\n",

+ "\n",

+ " return {\"arch_lm\": r_squared}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def test_arch_stat_seasonal():\n",

+ " z = arch_stat(USAccDeaths, 12)\n",

+ " test_close(len(z), 1)\n",

+ " test_close(z[\"arch_lm\"], 0.54, eps=0.01)\n",

+ "\n",

+ "\n",

+ "test_arch_stat_seasonal()\n",

+ "\n",

+ "\n",

+ "def test_arch_stat_non_seasonal():\n",

+ " z = arch_stat(WWWusage, 12)\n",

+ " test_close(len(z), 1)\n",

+ " test_close(z[\"arch_lm\"], 0.98, eps=0.01)\n",

+ "\n",

+ "\n",

+ "test_arch_stat_non_seasonal()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def count_entropy(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Count entropy.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'count_entropy': Entropy using only positive data.\n",

+ " \"\"\"\n",

+ " entropy = x[x > 0] * np.log(x[x > 0])\n",

+ " entropy = -entropy.sum()\n",

+ "\n",

+ " return {\"count_entropy\": entropy}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def crossing_points(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Crossing points.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'crossing_points': Number of times that x crosses the median.\n",

+ " \"\"\"\n",

+ " midline = np.median(x)\n",

+ " ab = x <= midline\n",

+ " lenx = len(x)\n",

+ " p1 = ab[: (lenx - 1)]\n",

+ " p2 = ab[1:]\n",

+ " cross = (p1 & (~p2)) | (p2 & (~p1))\n",

+ "\n",

+ " return {\"crossing_points\": cross.sum()}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def entropy(x: np.array, freq: int = 1, base: float = e) -> Dict[str, float]:\n",

+ " \"\"\"Calculates spectral entropy.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'entropy': Normalized spectral entropy of x, computed with spectral_entropy.\n",

+ " \"\"\"\n",

+ " try:\n",

+ " with np.errstate(divide=\"ignore\"):\n",

+ " entropy = spectral_entropy(x, 1, normalize=True)\n",

+ " except:\n",

+ " entropy = np.nan\n",

+ "\n",

+ " return {\"entropy\": entropy}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def flat_spots(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Flat spots.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'flat_spots': Number of flat spots in x.\n",

+ " \"\"\"\n",

+ " try:\n",

+ " cutx = pd.cut(x, bins=10, include_lowest=True, labels=False) + 1\n",

+ " except:\n",

+ " return {\"flat_spots\": np.nan}\n",

+ "\n",

+ " rlex = np.array([sum(1 for i in g) for k, g in groupby(cutx)]).max()\n",

+ " return {\"flat_spots\": rlex}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def frequency(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Frequency.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'frequency': Wrapper of freq.\n",

+ " \"\"\"\n",

+ "\n",

+ " return {\"frequency\": freq}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def guerrero(\n",

+ " x: np.array, freq: int = 1, lower: int = -1, upper: int = 2\n",

+ ") -> Dict[str, float]:\n",

+ " \"\"\"Applies Guerrero's (1993) method to select the lambda which minimises the\n",

+ " coefficient of variation for subseries of x.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series.\n",

+ " lower: float\n",

+ " The lower bound for lambda.\n",

+ " upper: float\n",

+ " The upper bound for lambda.\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'guerrero': Minimum coefficient of variation for subseries of x.\n",

+ "\n",

+ " References\n",

+ " ----------\n",

+ " [1] Guerrero, V.M. (1993) Time-series analysis supported by power transformations.\n",

+ " Journal of Forecasting, 12, 37–48.\n",

+ " \"\"\"\n",

+ " func_to_min = lambda lambda_par: lambda_coef_var(lambda_par, x=x, period=freq)\n",

+ "\n",

+ " min_ = minimize_scalar(func_to_min, bounds=[lower, upper])\n",

+ " min_ = min_[\"fun\"]\n",

+ "\n",

+ " return {\"guerrero\": min_}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def heterogeneity(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Heterogeneity.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'arch_acf': Sum of squares of the first 12 autocorrelations of the\n",

+ " residuals of the AR model applied to x\n",

+ " 'garch_acf': Sum of squares of the first 12 autocorrelations of the\n",

+ " residuals of the GARCH model applied to x\n",

+ " 'arch_r2': Function arch_stat applied to the residuals of the\n",

+ " AR model applied to x.\n",

+ " 'garch_r2': Function arch_stat applied to the residuals of the GARCH\n",

+ " model applied to x.\n",

+ " \"\"\"\n",

+ " m = freq\n",

+ "\n",

+ " size_x = len(x)\n",

+ " order_ar = min(size_x - 1, np.floor(10 * np.log10(size_x)))\n",

+ " order_ar = int(order_ar)\n",

+ "\n",

+ " try:\n",

+ " x_whitened = AR(x).fit(maxlag=order_ar, ic=\"aic\", trend=\"c\").resid\n",

+ " except Exception:\n",

+ " try:\n",

+ " x_whitened = AR(x).fit(maxlag=order_ar, ic=\"aic\", trend=\"nc\").resid\n",

+ " except Exception:\n",

+ " output = {\n",

+ " \"arch_acf\": np.nan,\n",

+ " \"garch_acf\": np.nan,\n",

+ " \"arch_r2\": np.nan,\n",

+ " \"garch_r2\": np.nan,\n",

+ " }\n",

+ "\n",

+ " return output\n",

+ " # arch and box test\n",

+ " x_archtest = arch_stat(x_whitened, m)[\"arch_lm\"]\n",

+ " LBstat = (acf(x_whitened**2, nlags=12, fft=False)[1:] ** 2).sum()\n",

+ " # Fit garch model\n",

+ " garch_fit = arch_model(x_whitened, vol=\"GARCH\", rescale=False).fit(disp=\"off\")\n",

+ " # compare arch test before and after fitting garch\n",

+ " garch_fit_std = garch_fit.resid\n",

+ " x_garch_archtest = arch_stat(garch_fit_std, m)[\"arch_lm\"]\n",

+ " # compare Box test of squared residuals before and after fitting GARCH\n",

+ " LBstat2 = (acf(garch_fit_std**2, nlags=12, fft=False)[1:] ** 2).sum()\n",

+ "\n",

+ " output = {\n",

+ " \"arch_acf\": LBstat,\n",

+ " \"garch_acf\": LBstat2,\n",

+ " \"arch_r2\": x_archtest,\n",

+ " \"garch_r2\": x_garch_archtest,\n",

+ " }\n",

+ "\n",

+ " return output"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def holt_parameters(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Fitted parameters of a Holt model.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'alpha': Level parameter of the Holt model.\n",

+ " 'beta': Trend parameter of the Holt model.\n",

+ " \"\"\"\n",

+ " try:\n",

+ " fit = ExponentialSmoothing(x, trend=\"add\", seasonal=None).fit()\n",

+ " params = {\n",

+ " \"alpha\": fit.params[\"smoothing_level\"],\n",

+ " \"beta\": fit.params[\"smoothing_trend\"],\n",

+ " }\n",

+ " except Exception:\n",

+ " params = {\"alpha\": np.nan, \"beta\": np.nan}\n",

+ "\n",

+ " return params"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def hurst(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Hurst index.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'hurst': Hurst exponent.\n",

+ " \"\"\"\n",

+ " try:\n",

+ " hurst_index = hurst_exponent(x)\n",

+ " except Exception:\n",

+ " hurst_index = np.nan\n",

+ "\n",

+ " return {\"hurst\": hurst_index}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def hw_parameters(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Fitted parameters of a Holt-Winters model.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'hw_alpha': Level parameter of the HW model.\n",

+ " 'hw_beta': Trend parameter of the HW model.\n",

+ " 'hw_gamma': Seasonal parameter of the HW model.\n",

+ " \"\"\"\n",

+ " try:\n",

+ " fit = ExponentialSmoothing(\n",

+ " x, seasonal_periods=freq, trend=\"add\", seasonal=\"add\"\n",

+ " ).fit()\n",

+ " params = {\n",

+ " \"hw_alpha\": fit.params[\"smoothing_level\"],\n",

+ " \"hw_beta\": fit.params[\"smoothing_trend\"],\n",

+ " \"hw_gamma\": fit.params[\"smoothing_seasonal\"],\n",

+ " }\n",

+ " except Exception:\n",

+ " params = {\"hw_alpha\": np.nan, \"hw_beta\": np.nan, \"hw_gamma\": np.nan}\n",

+ "\n",

+ " return params"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",

+ "def intervals(x: np.array, freq: int = 1) -> Dict[str, float]:\n",

+ " \"\"\"Intervals with demand.\n",

+ "\n",

+ " Parameters\n",

+ " ----------\n",

+ " x: numpy array\n",

+ " The time series.\n",

+ " freq: int\n",

+ " Frequency of the time series\n",

+ "\n",

+ " Returns\n",

+ " -------\n",

+ " dict\n",

+ " 'intervals_mean': Mean of intervals with positive values.\n",

+ " 'intervals_sd': SD of intervals with positive values.\n",

+ " \"\"\"\n",

+ " x = np.where(x > 0, 1, x) # binarise a copy so the caller's array is not mutated\n",

+ "\n",

+ " y = [sum(val) for keys, val in groupby(x, key=lambda k: k != 0) if keys != 0]\n",

+ " y = np.array(y)\n",

+ "\n",

+ " return {\"intervals_mean\": np.mean(y), \"intervals_sd\": np.std(y, ddof=1)}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# |export\n",