New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

m(o)ve over smvs #921

m(o)ve over smvs #921

Conversation

|

Ok @pierotofy and @dakotabenjamin -- this will require some heavy testing. mve seems to have lower memory requirements but increased processing time over smvs. I think for the additional fidelity, the processing time is worth the tradeoff (especially in light of reduced memory usage, but we should test on bigger datasets to be sure. And if we need to have them all run side-by-side, I am open to that too. We could have low, medium, and high fidelity 3D data with opensfm, smvs, and mve point clouds respectively. But, for the sake of simplicity, this pull request is an outright replacement of smvs. Also, I pass no parameters for mve -- the results are quite nice with defaults. We may want to add parameters later, but I decided to keep this simple: given the quality of the improvement, there seemed sense to not over-complicate. |

Improve aspect ratio for many users

Literally string literals

|

I think less is more, so if quality is improved and memory usage is lowered, even though processing time is increased, I think it's a win and smvs can be placed aside for the time being. Is it ready to be tested then? I'd love to start running some tests. |

scripts/mve.py

Outdated

|

|

||

| # config | ||

| config = [ | ||

| "-t%s" % self.params.threads, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We should respect the --max_concurrency parameter when invoking dmrecon?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah yes, this reminds me of the other hitch -- I am not sure dmrecon respects concurrency values. It'd have to be set with OMP: https://github.com/simonfuhrmann/mve/blob/master/apps/dmrecon/dmrecon.cc#L285

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting, I don't have much experience with OpenMP. Maybe calling dmrecon by setting the OMP_NUM_THREADS environment variable could limit them. https://www.ibm.com/support/knowledgecenter/SSGH2K_13.1.3/com.ibm.xlc1313.aix.doc/compiler_ref/ruomprun4.html

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I tried that and manually set it to 5 but it used all cores anyway... . But it is c++, there's a good chance I was doing something wrong.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is one I would need help on -- I'm a bit over my head.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Might just be able to port the approach that smvs uses, if their approaches to concurrency are similar enough.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If the user selects mve as the processing option, and also specifies --max-concurrency, then a warning ("Thread limiting is not available for this option and will run on all threads, are you sure you want to continue? [Y/n]

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If we can't limit the threads a warning is fine, but I'd caution with the Y/n prompt, could cause nodeodm to get stuck indefinitely.

|

It is ready to be tested. It should just install and run. |

|

I will make some time for this in the next few days. |

|

👍 |

I am pretty sure I did something wrong here. It usually works as I have it configured, but sometimes failed (I'm sorry I can't be more specific).: Line 121 in 434b810

|

|

Testing in progress, will post updates when I get results. |

|

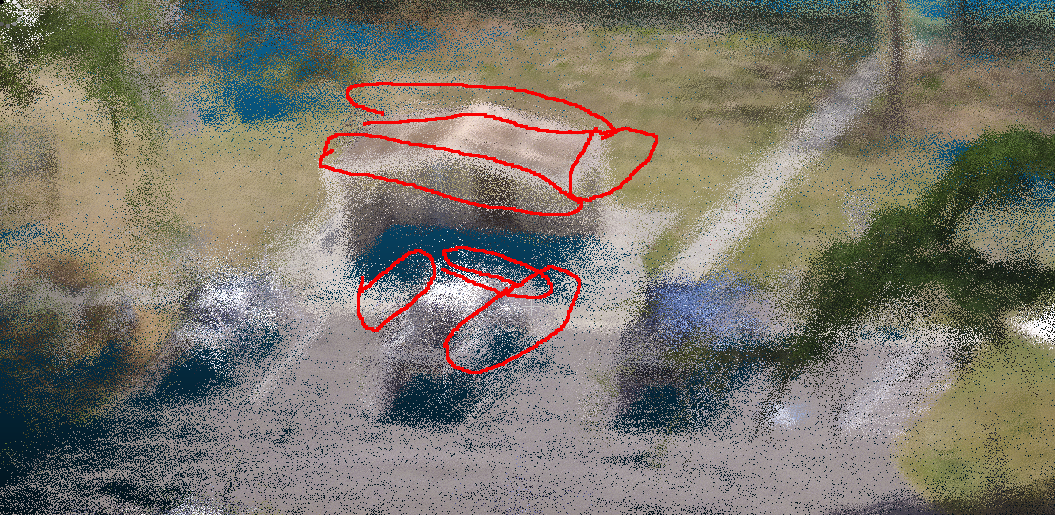

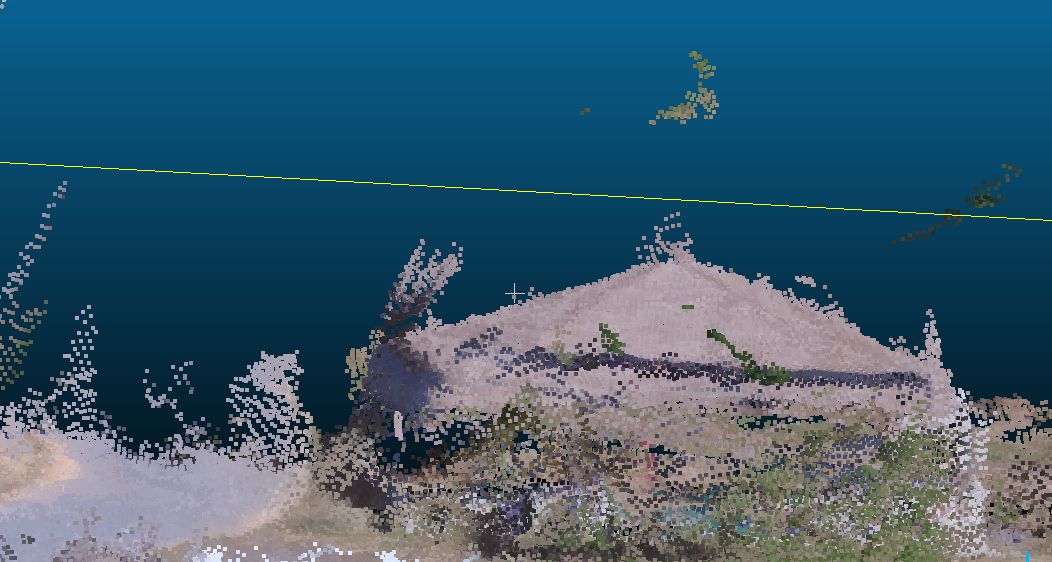

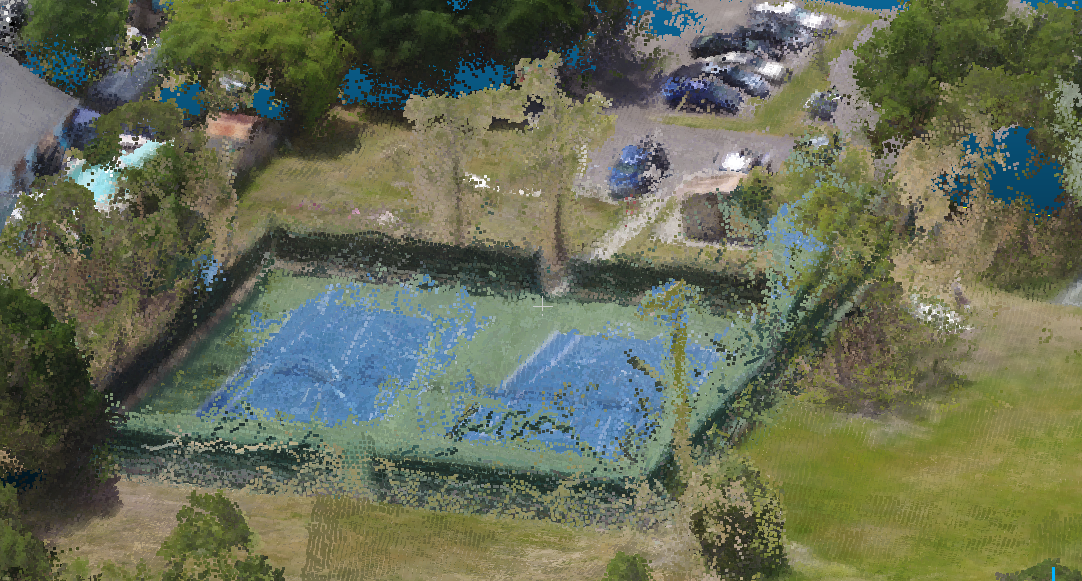

My findings so far:

I'll look for flags that could help us solve one or more of these problems. |

|

The flag we should always pass: Should also pass

https://github.com/simonfuhrmann/mve/wiki/MVE-Users-Guide#multi-view-stereo All options for reference: |

opendm/context.py

Outdated

| makescene_path = os.path.join(superbuild_path, 'src', 'elibs', 'mve', 'apps', 'makescene', 'makescene') #TODO: don't install in source | ||

| smvs_path = os.path.join(superbuild_path, 'src', 'elibs', 'smvs', 'app', 'smvsrecon') | ||

| mve_path = os.path.join(superbuild_path, 'src', 'elibs', 'mve', 'apps', 'dmrecon', 'dmrecon') | ||

| mve_path_pc = os.path.join(superbuild_path, 'src', 'elibs', 'mve', 'apps', 'scene2pset', 'scene2pset -F2') |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

-F2 should not be hard coded as it references the chosen scale value (depends on --max-pixels). If a dataset has really large input images (or really small images), this will fail. I think the value can be looked up by looking at the contents of the .mve folders.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Another alternative is to calculate a scale value ourselves instead of letting MVE sort it out and pass it to both scene2pset (via -F) and to dmrecon (via -s).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe this is why you noticed a few failures during your tests.

|

As far as |

|

scene2pset parameters: To export confidence values, pass |

|

Overall I think things that could be added/improved:

Finding how to respect |

|

Cool. I’ll dive in. This is an awesome review. |

|

Fixes #970 |

|

@smathermather @indiajohnson-cm this can be tested and pending further issues I think it can be merged into master as-is. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Seg fault did not happen again after more testing. This might be tied to some sort of racing condition, but for the time being I think it's OK to move forward.

@smathermather OK to increase version number and merge in your opinion?

|

Implemented #972 |

Yes to both! Tests are looking good on my end. |

This matches what I was seeing too -- confidence thresholds greater than 0.5 needed for best results. |

smvs added back in by Piero

|

Hmm, I dismissed @dakotabenjamin's review based on a myth I think... . @pierotofy -- smvs is still gone from this pull request, correct? |

|

Yes SMVS is gone. |

|

A sibling docs update that I meant to do as a pull request but did on the active repo (yipe!): Anyway, docs should reflect these changes and more. Let me know if I missed anything. |

m(o)ve over smvs Former-commit-id: 57ca7d9

m(o)ve over smvs Former-commit-id: 57ca7d9

There are one big limitation: I have replaced smvs with mve, but perhaps it should be optioned instead.