Disk snapshots disappearing #2687

Comments


In 5.4, there was a problem: the next snapshot ID was always calculated as maximum+1 over the current list of snapshots, which led to snapshot IDs being reused (if some of them were deleted from the tail and OpenNebula was restarted in the meantime). As part of the fix #2189 we have started using a persistent value from On database upgrade, the one/src/onedb/local/5.4.1_to_5.5.80.rb Lines 236 to 243 in 48664d4

Just a quick note, I might be wrong.
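To make the difference between the two allocation schemes concrete, here is a minimal Python sketch. It is not OpenNebula's code: the function `next_id_max_plus_one`, the class `SnapshotList`, and the field `next_id` are hypothetical names chosen for illustration, and recomputing the ID from the list stands in for what the comment above describes as happening after a restart.

```python
def next_id_max_plus_one(snapshot_ids):
    """Old scheme as described above: highest ID currently in the list, plus one."""
    return max(snapshot_ids, default=-1) + 1


class SnapshotList:
    """Hypothetical model of the fixed scheme: a counter stored with the list."""

    def __init__(self):
        self.ids = []
        self.next_id = 0  # assumed to be persisted, so it survives restarts

    def create(self):
        new_id = self.next_id
        self.ids.append(new_id)
        self.next_id += 1  # only ever grows, even when snapshots are deleted
        return new_id

    def delete(self, snap_id):
        self.ids.remove(snap_id)


# Old scheme: delete the tail snapshot, then recompute the next ID from the list.
old_ids = [0, 1, 2]
old_ids.remove(2)
reused_id = next_id_max_plus_one(old_ids)  # ID 2 is handed out a second time

# Persistent counter: the same sequence of operations yields a fresh ID.
snaps = SnapshotList()
for _ in range(3):
    snaps.create()
snaps.delete(2)
fresh_id = snaps.create()

print(reused_id, fresh_id)
```

In this model, a counter that starts too low for a list that already contains snapshots would hand out IDs that are already taken, which resembles the symptom reported in this issue.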


We have:


Description

Sometime after upgrading to One 5.6, my VM disk snapshots on Ceph storage started to misbehave.

Disk snapshots previously taken with One 5.4 are overwritten by new snapshots; at the very least, the snapshot index starts from zero again. Sometimes there are also duplicate snapshot indexes.

Snapshots taken with One 5.2 disappear completely from Sunstone if I try to take a new snapshot with One 5.6.

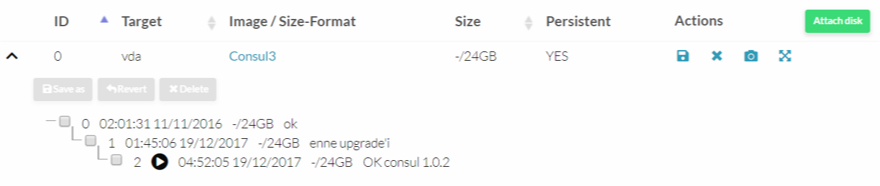

Before taking a new disk snapshot:

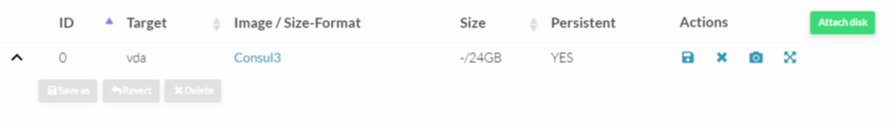

And after:

Also I'm unable to take any new disk snapshots on that disk image.

Listing the RBD disk snapshots on the Ceph cluster shows that the snapshots are still present.

The VM log also shows that One is trying to overwrite snapshots:

To Reproduce

Steps to reproduce the behavior.

Expected behavior

Proper snapshots are taken

Details

Additional context

Ceph version 10.2.11

Progress Status
