
$ git push --set-upstream origin your-branch-for-syncing

```

-

-### Style guide

-

-For documentation strings, 🧨 Diffusers follows the [google style](https://google.github.io/styleguide/pyguide.html).

diff --git a/Makefile b/Makefile

index 94af6d2f1272..8583f59d4110 100644

--- a/Makefile

+++ b/Makefile

@@ -9,8 +9,9 @@ modified_only_fixup:

$(eval modified_py_files := $(shell python utils/get_modified_files.py $(check_dirs)))

@if test -n "$(modified_py_files)"; then \

echo "Checking/fixing $(modified_py_files)"; \

- black $(modified_py_files); \

- ruff $(modified_py_files); \

+ black --preview $(modified_py_files); \

+ isort $(modified_py_files); \

+ flake8 $(modified_py_files); \

else \

echo "No library .py files were modified"; \

fi

@@ -40,8 +41,9 @@ repo-consistency:

# this target runs checks on all files

quality:

- black --check $(check_dirs)

- ruff $(check_dirs)

+ black --check --preview $(check_dirs)

+ isort --check-only $(check_dirs)

+ flake8 $(check_dirs)

doc-builder style src/diffusers docs/source --max_len 119 --check_only --path_to_docs docs/source

python utils/check_doc_toc.py

@@ -55,8 +57,8 @@ extra_style_checks:

# this target runs checks on all files and potentially modifies some of them

style:

- black $(check_dirs)

- ruff $(check_dirs) --fix

+ black --preview $(check_dirs)

+ isort $(check_dirs)

${MAKE} autogenerate_code

${MAKE} extra_style_checks

diff --git a/PHILOSOPHY.md b/PHILOSOPHY.md

deleted file mode 100644

index fbad5948e17e..000000000000

--- a/PHILOSOPHY.md

+++ /dev/null

@@ -1,110 +0,0 @@

-

-

-# Philosophy

-

-🧨 Diffusers provides **state-of-the-art** pretrained diffusion models across multiple modalities.

-Its purpose is to serve as a **modular toolbox** for both inference and training.

-

-We aim to build a library that stands the test of time, and we therefore take API design very seriously.

-

-In a nutshell, Diffusers is built to be a natural extension of PyTorch. Therefore, most of our design choices are based on [PyTorch's Design Principles](https://pytorch.org/docs/stable/community/design.html#pytorch-design-philosophy). Let's go over the most important ones:

-

-## Usability over Performance

-

-- While Diffusers has many built-in performance-enhancing features (see [Memory and Speed](https://huggingface.co/docs/diffusers/optimization/fp16)), models are always loaded with the highest precision and lowest optimization by default. Therefore, diffusion pipelines are instantiated on CPU with float32 precision unless the user specifies otherwise. This ensures usability across different platforms and accelerators and means that no complex installations are required to run the library.

-- Diffusers aims to be a **lightweight** package and therefore has very few required dependencies, but many soft dependencies that can improve performance (such as `accelerate`, `safetensors`, `onnx`, etc.). We strive to keep the library as lightweight as possible so that other packages can add it as a dependency without much concern.

-- Diffusers prefers simple, self-explanatory code over condensed, magic code. This means that shorthand code syntax such as lambda functions and advanced PyTorch operators is often not desired.

-

-## Simple over easy

-

-As PyTorch states, **explicit is better than implicit** and **simple is better than complex**. This design philosophy is reflected in multiple parts of the library:

-- We follow PyTorch's API with methods like [`DiffusionPipeline.to`](https://huggingface.co/docs/diffusers/main/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.to) to let the user handle device management.

-- Raising concise error messages is preferred to silently correcting erroneous input. Diffusers aims to teach the user, rather than to make the library as easy to use as possible.

-- Complex model vs. scheduler logic is exposed instead of magically handled inside. Schedulers/Samplers are separated from diffusion models with minimal dependencies on each other. This forces the user to write the unrolled denoising loop. However, the separation allows for easier debugging and gives the user more control over adapting the denoising process or switching out diffusion models or schedulers.

-- Separately trained components of the diffusion pipeline, *e.g.* the text encoder, the UNet, and the variational autoencoder, each have their own model class. This forces the user to handle the interaction between the different model components, and the serialization format separates the model components into different files. However, this allows for easier debugging and customization. DreamBooth or textual inversion training

-is very simple thanks to diffusers' ability to separate single components of the diffusion pipeline.

-

-## Tweakable, contributor-friendly over abstraction

-

-For large parts of the library, Diffusers adopts an important design principle of the [Transformers library](https://github.com/huggingface/transformers), which is to prefer copy-pasted code over hasty abstractions. This design principle is very opinionated and stands in stark contrast to popular design principles such as [Don't repeat yourself (DRY)](https://en.wikipedia.org/wiki/Don%27t_repeat_yourself).

-In short, just like Transformers does for modeling files, diffusers prefers to keep an extremely low level of abstraction and very self-contained code for pipelines and schedulers.

-Functions, long code blocks, and even classes can be copied across multiple files which at first can look like a bad, sloppy design choice that makes the library unmaintainable.

-**However**, this design has proven to be extremely successful for Transformers and makes a lot of sense for community-driven, open-source machine learning libraries because:

-- Machine Learning is an extremely fast-moving field in which paradigms, model architectures, and algorithms are changing rapidly, which therefore makes it very difficult to define long-lasting code abstractions.

-- Machine Learning practitioners like to be able to quickly tweak existing code for ideation and research and therefore prefer self-contained code over code that contains many abstractions.

-- Open-source libraries rely on community contributions and therefore must build a library that is easy to contribute to. The more abstract the code, the more dependencies, the harder to read, and the harder to contribute to. Contributors simply stop contributing to very abstract libraries out of fear of breaking vital functionality. If contributing to a library cannot break other fundamental code, not only is it more inviting for potential new contributors, but it is also easier to review and contribute to multiple parts in parallel.

-

-At Hugging Face, we call this design the **single-file policy** which means that almost all of the code of a certain class should be written in a single, self-contained file. To read more about the philosophy, you can have a look

-at [this blog post](https://huggingface.co/blog/transformers-design-philosophy).

-

-In diffusers, we follow this philosophy for both pipelines and schedulers, but only partly for diffusion models. The reason we don't follow this design fully for diffusion models is that almost all diffusion pipelines, such

-as [DDPM](https://huggingface.co/docs/diffusers/v0.12.0/en/api/pipelines/ddpm), [Stable Diffusion](https://huggingface.co/docs/diffusers/v0.12.0/en/api/pipelines/stable_diffusion/overview#stable-diffusion-pipelines), [UnCLIP (Dalle-2)](https://huggingface.co/docs/diffusers/v0.12.0/en/api/pipelines/unclip#overview) and [Imagen](https://imagen.research.google/) all rely on the same diffusion model, the [UNet](https://huggingface.co/docs/diffusers/api/models#diffusers.UNet2DConditionModel).

-

-Great, you should now have a general understanding of why 🧨 Diffusers is designed the way it is 🤗.

-We try to apply these design principles consistently across the library. Nevertheless, there are some minor exceptions to the philosophy or some unlucky design choices. If you have feedback regarding the design, we would ❤️ to hear it [directly on GitHub](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

-

-## Design Philosophy in Details

-

-Now, let's look a bit into the nitty-gritty details of the design philosophy. Diffusers essentially consists of three major classes: [pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines), [models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models), and [schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers).

-Let's walk through the design decisions for each class in more detail.

-

-### Pipelines

-

-Pipelines are designed to be easy to use (and therefore do not follow [*Simple over easy*](#simple-over-easy) 100%), are not feature complete, and should loosely be seen as examples of how to use [models](#models) and [schedulers](#schedulers) for inference.

-

-The following design principles are followed:

-- Pipelines follow the single-file policy. All pipelines can be found in individual directories under `src/diffusers/pipelines`. One pipeline folder corresponds to one diffusion paper/project/release. Multiple pipeline files can be gathered in one pipeline folder, as is done for [`src/diffusers/pipelines/stable_diffusion`](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines/stable_diffusion). If pipelines share similar functionality, one can make use of the [`# Copied from` mechanism](https://github.com/huggingface/diffusers/blob/125d783076e5bd9785beb05367a2d2566843a271/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion_img2img.py#L251).

-- Pipelines all inherit from [`DiffusionPipeline`].

-- Every pipeline consists of different model and scheduler components, which are documented in the [`model_index.json` file](https://huggingface.co/runwayml/stable-diffusion-v1-5/blob/main/model_index.json), are accessible under the same name as attributes of the pipeline, and can be shared between pipelines with the [`DiffusionPipeline.components`](https://huggingface.co/docs/diffusers/main/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.components) property.

-- Every pipeline should be loadable via the [`DiffusionPipeline.from_pretrained`](https://huggingface.co/docs/diffusers/main/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.from_pretrained) function.

-- Pipelines should be used **only** for inference.

-- Pipelines should be very readable, self-explanatory, and easy to tweak.

-- Pipelines should be designed to build on top of each other and be easy to integrate into higher-level APIs.

-- Pipelines are **not** intended to be feature-complete user interfaces. For feature-complete user interfaces, have a look at [InvokeAI](https://github.com/invoke-ai/InvokeAI), [Diffuzers](https://github.com/abhishekkrthakur/diffuzers), and [lama-cleaner](https://github.com/Sanster/lama-cleaner).

-- Every pipeline should have one and only one way to run it via a `__call__` method. The naming of the `__call__` arguments should be shared across all pipelines.

-- Pipelines should be named after the task they are intended to solve.

-- In almost all cases, novel diffusion pipelines shall be implemented in a new pipeline folder/file.

-

-### Models

-

-Models are designed as configurable toolboxes that are natural extensions of [PyTorch's Module class](https://pytorch.org/docs/stable/generated/torch.nn.Module.html). They only partly follow the **single-file policy**.

-

-The following design principles are followed:

-- Models correspond to **a type of model architecture**. *E.g.* the [`UNet2DConditionModel`] class is used for all UNet variations that expect 2D image inputs and are conditioned on some context.

-- All models can be found in [`src/diffusers/models`](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models) and every model architecture shall be defined in its own file, e.g. [`unet_2d_condition.py`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/unet_2d_condition.py), [`transformer_2d.py`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/transformer_2d.py), etc.

-- Models **do not** follow the single-file policy and should make use of smaller model building blocks, such as [`attention.py`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention.py), [`resnet.py`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/resnet.py), [`embeddings.py`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/embeddings.py), etc. **Note**: This is in stark contrast to Transformers' modeling files and shows that models do not really follow the single-file policy.

-- Models intend to expose complexity, just like PyTorch's module does, and give clear error messages.

-- Models all inherit from `ModelMixin` and `ConfigMixin`.

-- Models can be optimized for performance when it doesn’t demand major code changes, keeps backward compatibility, and gives significant memory or compute gain.

-- Models should by default have the highest precision and lowest performance setting.

-- To integrate new model checkpoints whose general architecture can be classified as an architecture that already exists in Diffusers, the existing model architecture shall be adapted to make it work with the new checkpoint. One should only create a new file if the model architecture is fundamentally different.

-- Models should be designed to be easily extendable to future changes. This can be achieved by limiting public function arguments, configuration arguments, and "foreseeing" future changes, *e.g.* it is usually better to add `string` "...type" arguments that can easily be extended to new future types instead of boolean `is_..._type` arguments. Only the minimum amount of changes shall be made to existing architectures to make a new model checkpoint work.

-- The model design is a difficult trade-off between keeping code readable and concise and supporting many model checkpoints. For most parts of the modeling code, classes shall be adapted for new model checkpoints, while there are some exceptions where it is preferred to add new classes to make sure the code is kept concise and

-readable long-term, such as [UNet blocks](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/unet_2d_blocks.py) and [Attention processors](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/cross_attention.py).
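The guideline above about preferring string "...type" arguments over boolean `is_..._type` flags can be illustrated with a small sketch. The class names and the `build_down_block` helper below are hypothetical, loosely in the spirit of the string block names that UNet configurations list, and are not the diffusers API.

```python
class DownBlock:
    """Hypothetical building block without attention."""


class CrossAttnDownBlock:
    """Hypothetical building block with cross-attention."""


# Hypothetical registry keyed by a string "type" argument. Supporting a new block type
# later only means adding an entry here, instead of adding another boolean flag
# (e.g. `is_cross_attn=True`) to every constructor that builds blocks.
DOWN_BLOCK_TYPES = {
    "DownBlock": DownBlock,
    "CrossAttnDownBlock": CrossAttnDownBlock,
}


def build_down_block(down_block_type: str):
    """Instantiate a block from its string type, failing loudly on unknown types."""
    if down_block_type not in DOWN_BLOCK_TYPES:
        raise ValueError(
            f"Unknown down_block_type {down_block_type!r}; "
            f"expected one of {sorted(DOWN_BLOCK_TYPES)}"
        )
    return DOWN_BLOCK_TYPES[down_block_type]()


# A configuration can then describe an architecture as a plain list of strings.
blocks = [build_down_block(t) for t in ("CrossAttnDownBlock", "DownBlock")]
```

A checkpoint that needs a third block type only adds one registry entry, and existing configurations keep working unchanged.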

-

-### Schedulers

-

-Schedulers are responsible for guiding the denoising process for inference as well as for defining a noise schedule for training. They are designed as individual classes with loadable configuration files and strongly follow the **single-file policy**.

-

-The following design principles are followed:

-- All schedulers are found in [`src/diffusers/schedulers`](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers).

-- Schedulers are **not** allowed to import from large utils files and shall be kept very self-contained.

-- One scheduler Python file corresponds to one scheduler algorithm (as might be defined in a paper).

-- If schedulers share similar functionalities, we can make use of the `#Copied from` mechanism.

-- Schedulers all inherit from `SchedulerMixin` and `ConfigMixin`.

-- Schedulers can be easily swapped out with the [`ConfigMixin.from_config`](https://huggingface.co/docs/diffusers/main/en/api/configuration#diffusers.ConfigMixin.from_config) method as explained in detail [here](./using-diffusers/schedulers.mdx).

-- Every scheduler has to have a `set_timesteps` and a `step` function. `set_timesteps(...)` has to be called before every denoising process, *i.e.* before `step(...)` is called.

-- Every scheduler exposes the timesteps to be "looped over" via a `timesteps` attribute, which is an array of the timesteps at which the model will be called.

-- The `step(...)` function takes a predicted model output and the "current" sample (`x_t`) and returns the "previous", slightly more denoised sample (`x_{t-1}`).

-- Given the complexity of diffusion schedulers, the `step` function does not expose all the complexity and can be a bit of a "black box".

-- In almost all cases, novel schedulers shall be implemented in a new scheduling file.
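The scheduler contract listed above (fix the number of inference steps, expose `timesteps`, and map `x_t` to `x_{t-1}` in `step`) can be sketched with a toy scheduler. It names the setup method `set_timesteps`, as diffusers schedulers do, and implements a deterministic DDIM-style update (eta = 0) over an assumed linear beta schedule in NumPy. `ToyDDIMScheduler`, `ToySchedulerOutput`, and `oracle_model` are hypothetical; this is not the diffusers implementation.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ToySchedulerOutput:
    # Mirrors the `.prev_sample` field read from scheduler outputs in denoising loops.
    prev_sample: np.ndarray


class ToyDDIMScheduler:
    """Hypothetical deterministic DDIM-style scheduler (eta = 0), for illustration only."""

    def __init__(self, num_train_timesteps=1000, beta_start=1e-4, beta_end=0.02):
        self.num_train_timesteps = num_train_timesteps
        betas = np.linspace(beta_start, beta_end, num_train_timesteps)  # assumed linear schedule
        # alphas_cumprod[t] is the cumulative product of (1 - beta) up to step t.
        self.alphas_cumprod = np.cumprod(1.0 - betas)
        self.timesteps = None

    def set_timesteps(self, num_inference_steps):
        # Must run before the loop: pick evenly spaced training timesteps, noisiest first.
        self.step_ratio = self.num_train_timesteps // num_inference_steps
        self.timesteps = (np.arange(num_inference_steps) * self.step_ratio)[::-1]

    def step(self, model_output, timestep, sample):
        # model_output is the predicted noise; sample is x_t; returns x_{t-1}.
        prev_timestep = timestep - self.step_ratio
        alpha_t = self.alphas_cumprod[timestep]
        alpha_prev = self.alphas_cumprod[prev_timestep] if prev_timestep >= 0 else 1.0
        # Estimate the clean sample, then re-noise it to the previous noise level.
        pred_x0 = (sample - np.sqrt(1.0 - alpha_t) * model_output) / np.sqrt(alpha_t)
        prev_sample = np.sqrt(alpha_prev) * pred_x0 + np.sqrt(1.0 - alpha_prev) * model_output
        return ToySchedulerOutput(prev_sample=prev_sample)


rng = np.random.default_rng(0)
x0 = rng.standard_normal((2, 4))
noise = rng.standard_normal((2, 4))


def oracle_model(sample, timestep):
    # Hypothetical "perfect" model: always predicts the noise that was actually added.
    return noise


scheduler = ToyDDIMScheduler()
scheduler.set_timesteps(50)
alpha_start = scheduler.alphas_cumprod[scheduler.timesteps[0]]
sample = np.sqrt(alpha_start) * x0 + np.sqrt(1.0 - alpha_start) * noise

# The unrolled denoising loop that the user writes around the scheduler.
for t in scheduler.timesteps:
    sample = scheduler.step(oracle_model(sample, t), t, sample).prev_sample

print(np.allclose(sample, x0))  # True
```

Because the hypothetical `oracle_model` returns exactly the noise that was added, the loop walks back to `x0`; a trained model only approximates that noise.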

diff --git a/README.md b/README.md

index 76d7df79c813..fc384c9f8fb2 100644

--- a/README.md

+++ b/README.md

@@ -15,150 +15,528 @@

-🤗 Diffusers is the go-to library for state-of-the-art pretrained diffusion models for generating images, audio, and even 3D structures of molecules. Whether you're looking for a simple inference solution or training your own diffusion models, 🤗 Diffusers is a modular toolbox that supports both. Our library is designed with a focus on [usability over performance](https://huggingface.co/docs/diffusers/conceptual/philosophy#usability-over-performance), [simple over easy](https://huggingface.co/docs/diffusers/conceptual/philosophy#simple-over-easy), and [customizability over abstractions](https://huggingface.co/docs/diffusers/conceptual/philosophy#tweakable-contributorfriendly-over-abstraction).

+🤗 Diffusers provides pretrained diffusion models across multiple modalities, such as vision and audio, and serves

+as a modular toolbox for inference and training of diffusion models.

-🤗 Diffusers offers three core components:

+More precisely, 🤗 Diffusers offers:

-- State-of-the-art [diffusion pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview) that can be run in inference with just a few lines of code.

-- Interchangeable noise [schedulers](https://huggingface.co/docs/diffusers/api/schedulers/overview) for different diffusion speeds and output quality.

-- Pretrained [models](https://huggingface.co/docs/diffusers/api/models) that can be used as building blocks, and combined with schedulers, for creating your own end-to-end diffusion systems.

+- State-of-the-art diffusion pipelines that can be run in inference with just a couple of lines of code (see [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines)). Check [this overview](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines/README.md#pipelines-summary) to see all supported pipelines and their corresponding official papers.

+- Various noise schedulers that can be used interchangeably for the preferred speed vs. quality trade-off in inference (see [src/diffusers/schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers)).

+- Multiple types of models, such as UNet, can be used as building blocks in an end-to-end diffusion system (see [src/diffusers/models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models)).

+- Training examples to show how to train the most popular diffusion model tasks (see [examples](https://github.com/huggingface/diffusers/tree/main/examples), *e.g.* [unconditional-image-generation](https://github.com/huggingface/diffusers/tree/main/examples/unconditional_image_generation)).

## Installation

-We recommend installing 🤗 Diffusers in a virtual environment from PyPi or Conda. For more details about installing [PyTorch](https://pytorch.org/get-started/locally/) and [Flax](https://flax.readthedocs.io/en/latest/installation.html), please refer to their official documentation.

+### For PyTorch

-### PyTorch

-

-With `pip` (official package):

+**With `pip`** (official package)

```bash

pip install --upgrade diffusers[torch]

```

-With `conda` (maintained by the community):

+**With `conda`** (maintained by the community)

```sh

conda install -c conda-forge diffusers

```

-### Flax

+### For Flax

-With `pip` (official package):

+**With `pip`**

```bash

pip install --upgrade diffusers[flax]

```

-### Apple Silicon (M1/M2) support

+**Apple Silicon (M1/M2) support**

+

+Please refer to [the documentation](https://huggingface.co/docs/diffusers/optimization/mps).

+

+## Contributing

+

+We ❤️ contributions from the open-source community!

+If you want to contribute to this library, please check out our [Contribution guide](https://github.com/huggingface/diffusers/blob/main/CONTRIBUTING.md).

+You can look out for [issues](https://github.com/huggingface/diffusers/issues) you'd like to tackle to contribute to the library.

+- See [Good first issues](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) for general opportunities to contribute

+- See [New model/pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) to contribute exciting new diffusion models / diffusion pipelines

+- See [New scheduler](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) to contribute new schedulers

-Please refer to the [How to use Stable Diffusion in Apple Silicon](https://huggingface.co/docs/diffusers/optimization/mps) guide.

+Also, say 👋 in our public Discord channel. We discuss the hottest trends about diffusion models, help each other with contributions, personal projects or

+just hang out ☕.

## Quickstart

-Generating outputs is super easy with 🤗 Diffusers. To generate an image from text, use the `from_pretrained` method to load any pretrained diffusion model (browse the [Hub](https://huggingface.co/models?library=diffusers&sort=downloads) for 4000+ checkpoints):

+To get started, we recommend taking a look at two notebooks:

+

+- The [Getting started with Diffusers](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/diffusers_intro.ipynb) notebook, which showcases an end-to-end example of usage for diffusion models, schedulers and pipelines.

+ Take a look at this notebook to learn how to use the pipeline abstraction, which takes care of everything (model, scheduler, noise handling) for you, and also to understand each independent building block in the library.

+- The [Training a diffusers model](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/training_example.ipynb) notebook summarizes diffusion model training methods. This notebook takes a step-by-step approach to training your

+ diffusion models on an image dataset, with explanatory graphics.

+

+## Stable Diffusion is fully compatible with `diffusers`!

+

+Stable Diffusion is a text-to-image latent diffusion model created by the researchers and engineers from [CompVis](https://github.com/CompVis), [Stability AI](https://stability.ai/), [LAION](https://laion.ai/) and [RunwayML](https://runwayml.com/). It's trained on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database. This model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts. With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 4GB VRAM.

+See the [model card](https://huggingface.co/CompVis/stable-diffusion) for more information.

+

+

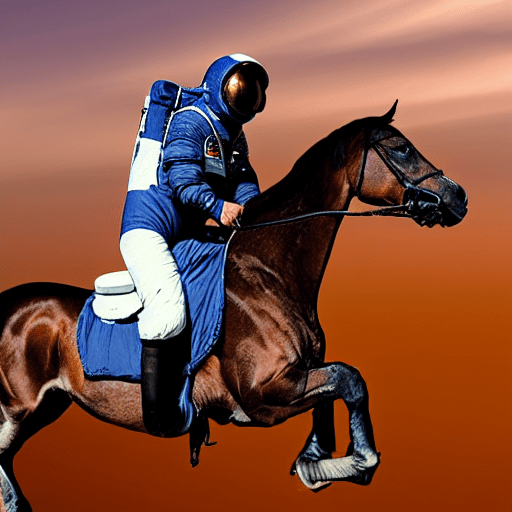

+### Text-to-Image generation with Stable Diffusion

+

+First, install the required packages:

+

+```bash

+pip install --upgrade diffusers transformers accelerate

+```

+

+We recommend running the model in [half precision (`fp16`)](https://pytorch.org/blog/accelerating-training-on-nvidia-gpus-with-pytorch-automatic-mixed-precision/), as it almost always gives the same results as full

+precision while being roughly twice as fast and requiring half the amount of GPU memory.

```python

-from diffusers import DiffusionPipeline

+import torch

+from diffusers import StableDiffusionPipeline

+

+pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

+pipe = pipe.to("cuda")

+

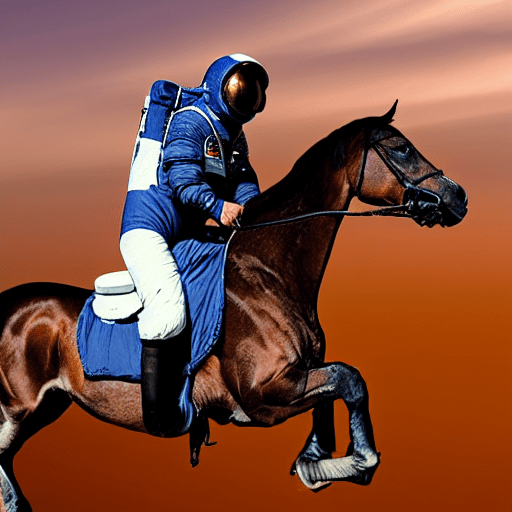

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+```
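To put the half-precision memory claim into numbers, the sketch below estimates the memory needed for the weights alone, using the parameter counts quoted above (860M UNet, 123M text encoder). It deliberately ignores the VAE, activations, and framework overhead, so real GPU usage is higher; treat it as back-of-the-envelope arithmetic, and `weight_memory_gb` as a hypothetical helper.

```python
import numpy as np

# Parameter counts quoted in the Stable Diffusion description above.
UNET_PARAMS = 860_000_000
TEXT_ENCODER_PARAMS = 123_000_000


def weight_memory_gb(num_params, dtype):
    # Bytes needed to store the weights only, converted to gigabytes (1 GB = 1e9 bytes).
    return num_params * np.dtype(dtype).itemsize / 1e9


total_params = UNET_PARAMS + TEXT_ENCODER_PARAMS
fp32_gb = weight_memory_gb(total_params, np.float32)  # 4 bytes per parameter
fp16_gb = weight_memory_gb(total_params, np.float16)  # 2 bytes per parameter
print(f"fp32: {fp32_gb:.2f} GB, fp16: {fp16_gb:.2f} GB")  # fp32: 3.93 GB, fp16: 1.97 GB
```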

+

+#### Running the model locally

+

+You can also simply download the model folder and pass the path to the local folder to the `StableDiffusionPipeline`.

+

+```bash

+git lfs install

+git clone https://huggingface.co/runwayml/stable-diffusion-v1-5

+```

+

+Assuming the folder is stored locally under `./stable-diffusion-v1-5`, you can run Stable Diffusion

+as follows:

+

+```python

+from diffusers import StableDiffusionPipeline

+

+pipe = StableDiffusionPipeline.from_pretrained("./stable-diffusion-v1-5")

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+```

+

+If you are limited by GPU memory, you might want to consider chunking the attention computation in addition

+to using `fp16`.

+The following snippet should use less than 4GB of VRAM.

+

+```python

+import torch

+from diffusers import StableDiffusionPipeline

+

+pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+pipe.enable_attention_slicing()

+image = pipe(prompt).images[0]

+```
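The idea behind `enable_attention_slicing()` is to compute attention in several smaller steps instead of materializing every attention score matrix at once. The NumPy sketch below illustrates that idea by slicing over the leading (batch × heads) axis; `attention` and `sliced_attention` are hypothetical helpers, not the library's implementation. Both produce the same output, but the sliced version only holds `slice_size` score matrices in memory at a time, trading some speed for lower peak memory.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    # q, k: (batch_heads, seq_len, head_dim); v: (batch_heads, seq_len, v_dim).
    # Materializes the full (batch_heads, seq_len, seq_len) score tensor at once.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def sliced_attention(q, k, v, slice_size):
    # Same computation, but only `slice_size` score matrices exist at any one time.
    out = np.empty(q.shape[:-1] + (v.shape[-1],), dtype=np.result_type(q, v))
    for start in range(0, q.shape[0], slice_size):
        end = start + slice_size
        out[start:end] = attention(q[start:end], k[start:end], v[start:end])
    return out


rng = np.random.default_rng(0)
# Hypothetical sizes: 2 images x 4 heads, 64 tokens, 40 channels per head.
q, k, v = (rng.standard_normal((8, 64, 40)) for _ in range(3))
full = attention(q, k, v)
sliced = sliced_attention(q, k, v, slice_size=2)
print(np.allclose(full, sliced))  # True
```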

+

+If you wish to use a different scheduler (e.g. DDIM, LMS, PNDM/PLMS), you can create it from the current scheduler's configuration

+and assign it to the pipeline's `scheduler` attribute.

+

+```python

+from diffusers import LMSDiscreteScheduler

+

+pipe.scheduler = LMSDiscreteScheduler.from_config(pipe.scheduler.config)

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+

+image.save("astronaut_rides_horse.png")

+```
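Swapping schedulers this way works because a scheduler is built from a configuration: the new scheduler picks up the settings it understands (such as the training noise schedule) from the old one's config. The sketch below illustrates that pattern with hypothetical toy classes; it is not diffusers' `ConfigMixin`, which does considerably more, and the beta values are only illustrative.

```python
import inspect


class ToySchedulerBase:
    """Hypothetical config-driven scheduler base; not diffusers' ConfigMixin."""

    @classmethod
    def from_config(cls, config):
        # Keep only the keys that this class's __init__ accepts; drop the rest.
        accepted = inspect.signature(cls.__init__).parameters
        return cls(**{key: value for key, value in config.items() if key in accepted})


class ToyPNDMScheduler(ToySchedulerBase):
    def __init__(self, num_train_timesteps=1000, beta_start=0.0001, beta_end=0.02, skip_prk_steps=False):
        self.config = {
            "num_train_timesteps": num_train_timesteps,
            "beta_start": beta_start,
            "beta_end": beta_end,
            "skip_prk_steps": skip_prk_steps,
        }


class ToyLMSScheduler(ToySchedulerBase):
    def __init__(self, num_train_timesteps=1000, beta_start=0.0001, beta_end=0.02):
        self.config = {
            "num_train_timesteps": num_train_timesteps,
            "beta_start": beta_start,
            "beta_end": beta_end,
        }


# The swapped-in scheduler inherits the noise schedule; the PNDM-only option is ignored.
old_scheduler = ToyPNDMScheduler(beta_start=0.00085, beta_end=0.012, skip_prk_steps=True)
new_scheduler = ToyLMSScheduler.from_config(old_scheduler.config)
print(new_scheduler.config)
# {'num_train_timesteps': 1000, 'beta_start': 0.00085, 'beta_end': 0.012}
```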

+

+If you want to run Stable Diffusion on CPU or you want to have maximum precision on GPU,

+please run the model in the default *full-precision* setting:

+

+```python

+from diffusers import StableDiffusionPipeline

+

+pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")

-pipeline = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")

-pipeline.to("cuda")

-pipeline("An image of a squirrel in Picasso style").images[0]

+# remove the following line if you run on CPU

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+

+image.save("astronaut_rides_horse.png")

```

-You can also dig into the models and schedulers toolbox to build your own diffusion system:

+### JAX/Flax

+

+Diffusers offers a JAX/Flax implementation of Stable Diffusion for very fast inference. JAX shines especially on TPU hardware because each TPU server has 8 accelerators working in parallel, but it runs great on GPUs too.

+

+Running the pipeline with the default PNDMScheduler:

+

+```python

+import jax

+import numpy as np

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+

+from diffusers import FlaxStableDiffusionPipeline

+

+pipeline, params = FlaxStableDiffusionPipeline.from_pretrained(

+ "runwayml/stable-diffusion-v1-5", revision="flax", dtype=jax.numpy.bfloat16

+)

+

+prompt = "a photo of an astronaut riding a horse on mars"

+

+prng_seed = jax.random.PRNGKey(0)

+num_inference_steps = 50

+

+num_samples = jax.device_count()

+prompt = num_samples * [prompt]

+prompt_ids = pipeline.prepare_inputs(prompt)

+

+# shard inputs and rng

+params = replicate(params)

+prng_seed = jax.random.split(prng_seed, jax.device_count())

+prompt_ids = shard(prompt_ids)

+

+images = pipeline(prompt_ids, params, prng_seed, num_inference_steps, jit=True).images

+images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))

+```
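The `replicate`/`shard` step above lays out data for data-parallel execution: parameters are copied to every device, while each input array's leading batch axis is split into `(num_devices, per_device_batch, ...)`. The NumPy sketch below mimics that reshape and the final reshape that folds the device axis back into one batch before `numpy_to_pil`. `shard_like_flax` is a hypothetical helper, not a Flax function, and the device count of 8 and token length of 77 are assumptions for illustration.

```python
import numpy as np


def shard_like_flax(x, num_devices):
    # Split the leading batch axis into (num_devices, per_device_batch, ...).
    return x.reshape((num_devices, -1) + x.shape[1:])


num_devices = 8  # assumption: one TPU host with 8 accelerators
num_samples = num_devices  # one prompt per device, as in the example above
seq_len = 77  # assumption: tokenized prompt length

prompt_ids = np.zeros((num_samples, seq_len), dtype=np.int32)
sharded_ids = shard_like_flax(prompt_ids, num_devices)
print(sharded_ids.shape)  # (8, 1, 77)

# Outputs come back with the same leading (device, per-device batch) axes.
images = np.zeros((num_devices, 1, 512, 512, 3), dtype=np.float32)
flat_images = images.reshape((num_samples,) + images.shape[-3:])
print(flat_images.shape)  # (8, 512, 512, 3)
```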

+

+**Note**:

+If you are limited by TPU memory, make sure to load the `FlaxStableDiffusionPipeline` in `bfloat16` precision instead of the default `float32` precision, as done above with `dtype=jax.numpy.bfloat16`. You can also load weights that are already stored in `bfloat16` by telling diffusers to use the "bf16" branch:

+

+```python

+import jax

+import numpy as np

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+

+from diffusers import FlaxStableDiffusionPipeline

+

+pipeline, params = FlaxStableDiffusionPipeline.from_pretrained(

+ "runwayml/stable-diffusion-v1-5", revision="bf16", dtype=jax.numpy.bfloat16

+)

+

+prompt = "a photo of an astronaut riding a horse on mars"

+

+prng_seed = jax.random.PRNGKey(0)

+num_inference_steps = 50

+num_samples = jax.device_count()

+prompt = num_samples * [prompt]

+prompt_ids = pipeline.prepare_inputs(prompt)

+

+# shard inputs and rng

+params = replicate(params)

+prng_seed = jax.random.split(prng_seed, jax.device_count())

+prompt_ids = shard(prompt_ids)

+

+images = pipeline(prompt_ids, params, prng_seed, num_inference_steps, jit=True).images

+images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))

+```

+

+Diffusers also has an image-to-image generation pipeline for Flax/JAX:

```python

-from diffusers import DDPMScheduler, UNet2DModel

+import jax

+import numpy as np

+import jax.numpy as jnp

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+import requests

+from io import BytesIO

from PIL import Image

-import torch

+from diffusers import FlaxStableDiffusionImg2ImgPipeline

+

+def create_key(seed=0):

+    return jax.random.PRNGKey(seed)

+rng = create_key(0)

+

+url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

+response = requests.get(url)

+init_img = Image.open(BytesIO(response.content)).convert("RGB")

+init_img = init_img.resize((768, 512))

+

+prompts = "A fantasy landscape, trending on artstation"

+

+pipeline, params = FlaxStableDiffusionImg2ImgPipeline.from_pretrained(

+ "CompVis/stable-diffusion-v1-4", revision="flax",

+ dtype=jnp.bfloat16,

+)

+

+num_samples = jax.device_count()

+rng = jax.random.split(rng, jax.device_count())

+prompt_ids, processed_image = pipeline.prepare_inputs(prompt=[prompts]*num_samples, image = [init_img]*num_samples)

+p_params = replicate(params)

+prompt_ids = shard(prompt_ids)

+processed_image = shard(processed_image)

+

+output = pipeline(

+ prompt_ids=prompt_ids,

+ image=processed_image,

+ params=p_params,

+ prng_seed=rng,

+ strength=0.75,

+ num_inference_steps=50,

+ jit=True,

+ height=512,

+ width=768).images

+

+output_images = pipeline.numpy_to_pil(np.asarray(output.reshape((num_samples,) + output.shape[-3:])))

+```
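The `strength` argument controls how much of the denoising schedule is applied to the input image. The sketch below shows the rule commonly used by Stable Diffusion image-to-image pipelines, assuming the pipeline runs `int(num_inference_steps * strength)` of the steps (capped at `num_inference_steps`) and skips the earliest, noisiest ones. `denoising_steps` is a hypothetical helper; check the pipeline source for the exact behavior.

```python
def denoising_steps(num_inference_steps: int, strength: float) -> int:
    # Number of scheduler steps actually run when starting from a noised input image.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # The first `t_start` (noisiest) timesteps of the schedule are skipped.
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


print(denoising_steps(50, 0.75))  # 37
print(denoising_steps(50, 1.0))  # 50: every step runs, starting from the noisiest timestep
```

With `strength=0.75` and `num_inference_steps=50` as in the example above, roughly three quarters of the schedule is applied, so lower values stay closer to the input sketch.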

+

+Diffusers also has a text-guided inpainting pipeline for Flax/JAX:

+

+```python

+import jax

import numpy as np

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+import PIL

+import requests

+from io import BytesIO

-scheduler = DDPMScheduler.from_pretrained("google/ddpm-cat-256")

-model = UNet2DModel.from_pretrained("google/ddpm-cat-256").to("cuda")

-scheduler.set_timesteps(50)

-

-sample_size = model.config.sample_size

-noise = torch.randn((1, 3, sample_size, sample_size)).to("cuda")

-input = noise

-

-for t in scheduler.timesteps:

-    with torch.no_grad():

-        noisy_residual = model(input, t).sample

-    prev_noisy_sample = scheduler.step(noisy_residual, t, input).prev_sample

-    input = prev_noisy_sample

-

-image = (input / 2 + 0.5).clamp(0, 1)

-image = image.cpu().permute(0, 2, 3, 1).numpy()[0]

-image = Image.fromarray((image * 255).round().astype("uint8"))

-image

-```

-

-Check out the [Quickstart](https://huggingface.co/docs/diffusers/quicktour) to launch your diffusion journey today!

-

-## How to navigate the documentation

-

-| **Documentation** | **What can I learn?** |

-|---------------------------------------------------------------------|-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| Tutorial | A basic crash course for learning how to use the library's most important features like using models and schedulers to build your own diffusion system, and training your own diffusion model. |

-| Loading | Guides for how to load and configure all the components (pipelines, models, and schedulers) of the library, as well as how to use different schedulers. |

-| Pipelines for inference | Guides for how to use pipelines for different inference tasks, batched generation, controlling generated outputs and randomness, and how to contribute a pipeline to the library. |

-| Optimization | Guides for how to optimize your diffusion model to run faster and consume less memory. |

-| [Training](https://huggingface.co/docs/diffusers/training/overview) | Guides for how to train a diffusion model for different tasks with different training techniques. |

-

-## Supported pipelines

-

-| Pipeline | Paper | Tasks |

-|---|---|:---:|

-| [alt_diffusion](./api/pipelines/alt_diffusion) | [**AltDiffusion**](https://arxiv.org/abs/2211.06679) | Image-to-Image Text-Guided Generation |

-| [audio_diffusion](./api/pipelines/audio_diffusion) | [**Audio Diffusion**](https://github.com/teticio/audio-diffusion.git) | Unconditional Audio Generation |

-| [controlnet](./api/pipelines/stable_diffusion/controlnet) | [**ControlNet with Stable Diffusion**](https://arxiv.org/abs/2302.05543) | Image-to-Image Text-Guided Generation |

-| [cycle_diffusion](./api/pipelines/cycle_diffusion) | [**Cycle Diffusion**](https://arxiv.org/abs/2210.05559) | Image-to-Image Text-Guided Generation |

-| [dance_diffusion](./api/pipelines/dance_diffusion) | [**Dance Diffusion**](https://github.com/williamberman/diffusers.git) | Unconditional Audio Generation |

-| [ddpm](./api/pipelines/ddpm) | [**Denoising Diffusion Probabilistic Models**](https://arxiv.org/abs/2006.11239) | Unconditional Image Generation |

-| [ddim](./api/pipelines/ddim) | [**Denoising Diffusion Implicit Models**](https://arxiv.org/abs/2010.02502) | Unconditional Image Generation |

-| [latent_diffusion](./api/pipelines/latent_diffusion) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)| Text-to-Image Generation |

-| [latent_diffusion](./api/pipelines/latent_diffusion) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)| Super Resolution Image-to-Image |

-| [latent_diffusion_uncond](./api/pipelines/latent_diffusion_uncond) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752) | Unconditional Image Generation |

-| [paint_by_example](./api/pipelines/paint_by_example) | [**Paint by Example: Exemplar-based Image Editing with Diffusion Models**](https://arxiv.org/abs/2211.13227) | Image-Guided Image Inpainting |

-| [pndm](./api/pipelines/pndm) | [**Pseudo Numerical Methods for Diffusion Models on Manifolds**](https://arxiv.org/abs/2202.09778) | Unconditional Image Generation |

-| [score_sde_ve](./api/pipelines/score_sde_ve) | [**Score-Based Generative Modeling through Stochastic Differential Equations**](https://openreview.net/forum?id=PxTIG12RRHS) | Unconditional Image Generation |

-| [score_sde_vp](./api/pipelines/score_sde_vp) | [**Score-Based Generative Modeling through Stochastic Differential Equations**](https://openreview.net/forum?id=PxTIG12RRHS) | Unconditional Image Generation |

-| [semantic_stable_diffusion](./api/pipelines/semantic_stable_diffusion) | [**Semantic Guidance**](https://arxiv.org/abs/2301.12247) | Text-Guided Generation |

-| [stable_diffusion_text2img](./api/pipelines/stable_diffusion/text2img) | [**Stable Diffusion**](https://stability.ai/blog/stable-diffusion-public-release) | Text-to-Image Generation |

-| [stable_diffusion_img2img](./api/pipelines/stable_diffusion/img2img) | [**Stable Diffusion**](https://stability.ai/blog/stable-diffusion-public-release) | Image-to-Image Text-Guided Generation |

-| [stable_diffusion_inpaint](./api/pipelines/stable_diffusion/inpaint) | [**Stable Diffusion**](https://stability.ai/blog/stable-diffusion-public-release) | Text-Guided Image Inpainting |

-| [stable_diffusion_panorama](./api/pipelines/stable_diffusion/panorama) | [**MultiDiffusion**](https://multidiffusion.github.io/) | Text-to-Panorama Generation |

-| [stable_diffusion_pix2pix](./api/pipelines/stable_diffusion/pix2pix) | [**InstructPix2Pix**](https://github.com/timothybrooks/instruct-pix2pix) | Text-Guided Image Editing|

-| [stable_diffusion_pix2pix_zero](./api/pipelines/stable_diffusion/pix2pix_zero) | [**Zero-shot Image-to-Image Translation**](https://pix2pixzero.github.io/) | Text-Guided Image Editing |

-| [stable_diffusion_attend_and_excite](./api/pipelines/stable_diffusion/attend_and_excite) | [**Attend and Excite for Stable Diffusion**](https://attendandexcite.github.io/Attend-and-Excite/) | Text-to-Image Generation |

-| [stable_diffusion_self_attention_guidance](./api/pipelines/stable_diffusion/self_attention_guidance) | [**Self-Attention Guidance**](https://ku-cvlab.github.io/Self-Attention-Guidance) | Text-to-Image Generation |

-| [stable_diffusion_image_variation](./stable_diffusion/image_variation) | [**Stable Diffusion Image Variations**](https://github.com/LambdaLabsML/lambda-diffusers#stable-diffusion-image-variations) | Image-to-Image Generation |

-| [stable_diffusion_latent_upscale](./stable_diffusion/latent_upscale) | [**Stable Diffusion Latent Upscaler**](https://twitter.com/StabilityAI/status/1590531958815064065) | Text-Guided Super Resolution Image-to-Image |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Stable Diffusion 2**](https://stability.ai/blog/stable-diffusion-v2-release) | Text-to-Image Generation |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Stable Diffusion 2**](https://stability.ai/blog/stable-diffusion-v2-release) | Text-Guided Image Inpainting |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Depth-Conditional Stable Diffusion**](https://github.com/Stability-AI/stablediffusion#depth-conditional-stable-diffusion) | Depth-to-Image Generation |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Stable Diffusion 2**](https://stability.ai/blog/stable-diffusion-v2-release) | Text-Guided Super Resolution Image-to-Image |

-| [stable_diffusion_safe](./api/pipelines/stable_diffusion_safe) | [**Safe Stable Diffusion**](https://arxiv.org/abs/2211.05105) | Text-Guided Generation |

-| [stable_unclip](./stable_unclip) | **Stable unCLIP** | Text-to-Image Generation |

-| [stable_unclip](./stable_unclip) | **Stable unCLIP** | Image-to-Image Text-Guided Generation |

-| [stochastic_karras_ve](./api/pipelines/stochastic_karras_ve) | [**Elucidating the Design Space of Diffusion-Based Generative Models**](https://arxiv.org/abs/2206.00364) | Unconditional Image Generation |

-| [unclip](./api/pipelines/unclip) | [Hierarchical Text-Conditional Image Generation with CLIP Latents](https://arxiv.org/abs/2204.06125) | Text-to-Image Generation |

-| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Text-to-Image Generation |

-| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Image Variations Generation |

-| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Dual Image and Text Guided Generation |

-| [vq_diffusion](./api/pipelines/vq_diffusion) | [Vector Quantized Diffusion Model for Text-to-Image Synthesis](https://arxiv.org/abs/2111.14822) | Text-to-Image Generation |

-

-## Contribution

-We ❤️ contributions from the open-source community!

-If you want to contribute to this library, please check out our [Contribution guide](https://github.com/huggingface/diffusers/blob/main/CONTRIBUTING.md).

-You can look out for [issues](https://github.com/huggingface/diffusers/issues) you'd like to tackle to contribute to the library.

-- See [Good first issues](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) for general opportunities to contribute

-- See [New model/pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) to contribute exciting new diffusion models / diffusion pipelines

-- See [New scheduler](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22)

+from diffusers import FlaxStableDiffusionInpaintPipeline

-Also, say 👋 in our public Discord channel. We discuss the hottest trends about diffusion models, help each other with contributions, personal projects or

+just hang out ☕.

## Quickstart

-Generating outputs is super easy with 🤗 Diffusers. To generate an image from text, use the `from_pretrained` method to load any pretrained diffusion model (browse the [Hub](https://huggingface.co/models?library=diffusers&sort=downloads) for 4000+ checkpoints):

+To get started, we recommend taking a look at two notebooks:

+

+- The [Getting started with Diffusers](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/diffusers_intro.ipynb) notebook, which showcases an end-to-end example of usage for diffusion models, schedulers and pipelines.

+ Take a look at this notebook to learn how to use the pipeline abstraction, which takes care of everything (model, scheduler, noise handling) for you, and also to understand each independent building block in the library.

+- The [Training a diffusers model](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/training_example.ipynb) notebook summarizes diffusion model training methods. It takes a step-by-step approach to training your
+ diffusion models on an image dataset, with explanatory graphics.

+

+## Stable Diffusion is fully compatible with `diffusers`!

+

+Stable Diffusion is a text-to-image latent diffusion model created by the researchers and engineers from [CompVis](https://github.com/CompVis), [Stability AI](https://stability.ai/), [LAION](https://laion.ai/) and [RunwayML](https://runwayml.com/). It's trained on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database. This model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts. With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 4GB VRAM.

+See the [model card](https://huggingface.co/CompVis/stable-diffusion) for more information.

+

+

+### Text-to-Image generation with Stable Diffusion

+

+First, install the required packages:

+

+```bash

+pip install --upgrade diffusers transformers accelerate

+```

+

+We recommend using the model in [half-precision (`fp16`)](https://pytorch.org/blog/accelerating-training-on-nvidia-gpus-with-pytorch-automatic-mixed-precision/) as it almost always gives the same results as full
+precision while being roughly twice as fast and requiring half as much GPU memory.

```python

-from diffusers import DiffusionPipeline

+import torch

+from diffusers import StableDiffusionPipeline

+

+pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+```
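As a rough sanity check on the fp16 recommendation, the snippet below does back-of-the-envelope arithmetic with the parameter counts quoted above (860M UNet, 123M text encoder). It is a sketch, not a measurement: it counts weight storage only and ignores the VAE, activations, and framework overhead, so real VRAM usage will be higher.

```python
UNET_PARAMS = 860_000_000          # figure quoted in the Stable Diffusion overview above
TEXT_ENCODER_PARAMS = 123_000_000  # figure quoted in the Stable Diffusion overview above
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_gib(num_params: int, dtype: str) -> float:
    """Storage for the weights alone, in GiB (excludes VAE, activations, and runtime overhead)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


total = UNET_PARAMS + TEXT_ENCODER_PARAMS
for dtype in ("float32", "float16"):
    print(f"{dtype}: {weight_gib(total, dtype):.2f} GiB")
```

Halving the bytes per parameter halves the weight footprint; the speedup depends on the GPU's half-precision support.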

+

+#### Running the model locally

+

+You can also simply download the model folder and pass the path to the local folder to the `StableDiffusionPipeline`.

+

+```bash

+git lfs install

+git clone https://huggingface.co/runwayml/stable-diffusion-v1-5

+```

+

+Assuming the folder is stored locally under `./stable-diffusion-v1-5`, you can run Stable Diffusion
+as follows:

+

+```python
+from diffusers import StableDiffusionPipeline
+

+pipe = StableDiffusionPipeline.from_pretrained("./stable-diffusion-v1-5")

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+```

+

+If you are limited by GPU memory, you can also compute attention in chunks (attention slicing) in addition
+to using `fp16`.
+The following snippet should keep VRAM usage below 4GB.

+

+```python
+import torch
+from diffusers import StableDiffusionPipeline
+

+pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+pipe.enable_attention_slicing()

+image = pipe(prompt).images[0]

+```
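To illustrate what attention slicing trades off, here is a NumPy sketch of the idea (not the diffusers implementation): instead of materializing the attention scores for every batch-and-head slice at once, it processes `slice_size` of them at a time, so peak memory for the score matrices drops while the output stays the same. The shapes are arbitrary toy values.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention over inputs shaped (batch*heads, tokens, dim)."""
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])  # (batch*heads, tokens, tokens)
    return softmax(scores) @ v


def sliced_attention(q, k, v, slice_size: int) -> np.ndarray:
    """Same result as attention(), but only `slice_size` score matrices exist at a time."""
    out = np.empty(q.shape[:2] + (v.shape[-1],), dtype=q.dtype)
    for start in range(0, q.shape[0], slice_size):
        end = start + slice_size
        out[start:end] = attention(q[start:end], k[start:end], v[start:end])
    return out


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((8, 64, 40)) for _ in range(3))
full = attention(q, k, v)
sliced = sliced_attention(q, k, v, slice_size=2)
print(np.allclose(full, sliced))  # True: slicing trades speed for memory, not accuracy
```

The loop runs more, smaller matrix multiplications, which is why attention slicing is usually a little slower.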

+

+If you wish to use a different scheduler (e.g. DDIM, LMS, or PNDM/PLMS), you can create it from the current scheduler's
+configuration and assign it to the pipeline's `scheduler` attribute:

+

+```python

+from diffusers import LMSDiscreteScheduler

+

+pipe.scheduler = LMSDiscreteScheduler.from_config(pipe.scheduler.config)

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+

+image.save("astronaut_rides_horse.png")

+```
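As we understand it, `from_config(pipe.scheduler.config)` works across scheduler types because schedulers share hyperparameters such as the number of training timesteps and the beta schedule, which should match how the model was trained. The sketch below mimics that idea with a hypothetical `ToyScheduler` class (not part of diffusers): it keeps only the config keys its constructor accepts and ignores the rest. The beta values are example numbers, not ones read from a real checkpoint.

```python
import inspect


class ToyScheduler:
    """Hypothetical stand-in for a scheduler; not a diffusers class."""

    def __init__(self, num_train_timesteps=1000, beta_start=0.0001, beta_end=0.02):
        self.num_train_timesteps = num_train_timesteps
        self.beta_start = beta_start
        self.beta_end = beta_end

    @classmethod
    def from_config(cls, config: dict):
        # Keep only the keys this class's __init__ accepts; silently drop the rest.
        accepted = inspect.signature(cls.__init__).parameters
        kwargs = {key: value for key, value in config.items() if key in accepted and key != "self"}
        return cls(**kwargs)


# A config saved by a *different* scheduler, including a key ToyScheduler does not use.
other_scheduler_config = {
    "num_train_timesteps": 1000,
    "beta_start": 0.00085,
    "beta_end": 0.012,
    "skip_prk_steps": True,
}
sched = ToyScheduler.from_config(other_scheduler_config)
print(sched.beta_start, sched.beta_end)
```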

+

+If you want to run Stable Diffusion on CPU or you want to have maximum precision on GPU,

+please run the model in the default *full-precision* setting:

+

+```python

+from diffusers import StableDiffusionPipeline

+

+pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")

-pipeline = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")

-pipeline.to("cuda")

-pipeline("An image of a squirrel in Picasso style").images[0]

+# skip the following line if you run on CPU

+pipe = pipe.to("cuda")

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+

+image.save("astronaut_rides_horse.png")

```

-You can also dig into the models and schedulers toolbox to build your own diffusion system:

+### JAX/Flax

+

+Diffusers offers a JAX / Flax implementation of Stable Diffusion for very fast inference. JAX shines especially on TPU hardware because each TPU server has 8 accelerators working in parallel, but it runs great on GPUs too.

+

+Running the pipeline with the default PNDMScheduler:

+

+```python

+import jax

+import numpy as np

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+

+from diffusers import FlaxStableDiffusionPipeline

+

+pipeline, params = FlaxStableDiffusionPipeline.from_pretrained(

+ "runwayml/stable-diffusion-v1-5", revision="flax", dtype=jax.numpy.bfloat16

+)

+

+prompt = "a photo of an astronaut riding a horse on mars"

+

+prng_seed = jax.random.PRNGKey(0)

+num_inference_steps = 50

+

+num_samples = jax.device_count()

+prompt = num_samples * [prompt]

+prompt_ids = pipeline.prepare_inputs(prompt)

+

+# shard inputs and rng

+params = replicate(params)

+prng_seed = jax.random.split(prng_seed, jax.device_count())

+prompt_ids = shard(prompt_ids)

+

+images = pipeline(prompt_ids, params, prng_seed, num_inference_steps, jit=True).images

+images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))

+```
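`shard` and the final `reshape` are bookkeeping on the leading batch axis. The NumPy sketch below reproduces that bookkeeping with a hypothetical `shard_like` helper, assuming 8 devices (the accelerator count of a TPU server mentioned above), one prompt per device, and a token sequence length of 77. Unlike `flax.training.common_utils.shard` followed by a pmapped call, it does not place any data on devices.

```python
import numpy as np


def shard_like(x: np.ndarray, num_devices: int) -> np.ndarray:
    """Hypothetical helper: split the leading batch axis into (num_devices, per_device, ...)."""
    return x.reshape((num_devices, -1) + x.shape[1:])


num_devices = 8            # assumption: one TPU server with 8 accelerators
num_samples = num_devices  # one prompt per device, as in the example above
seq_len = 77               # assumption: CLIP tokenizer max length

prompt_ids = np.zeros((num_samples, seq_len), dtype=np.int32)
sharded = shard_like(prompt_ids, num_devices)
print(sharded.shape)  # (8, 1, 77)

# The pmapped pipeline returns images shaped (devices, per_device, H, W, C);
# the example flattens them back to (num_samples, H, W, C) before numpy_to_pil.
images = np.zeros((num_devices, 1, 512, 512, 3), dtype=np.float32)
flat = images.reshape((num_samples,) + images.shape[-3:])
print(flat.shape)  # (8, 512, 512, 3)
```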

+

+**Note**:

+If you are limited by TPU memory, load the `FlaxStableDiffusionPipeline` weights directly in `bfloat16` precision instead of the default `float32` precision. You can do so by telling diffusers to load the weights from the "bf16" branch:

+

+```python

+import jax

+import numpy as np

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+

+from diffusers import FlaxStableDiffusionPipeline

+

+pipeline, params = FlaxStableDiffusionPipeline.from_pretrained(

+ "runwayml/stable-diffusion-v1-5", revision="bf16", dtype=jax.numpy.bfloat16

+)

+

+prompt = "a photo of an astronaut riding a horse on mars"

+

+prng_seed = jax.random.PRNGKey(0)

+num_inference_steps = 50

+num_samples = jax.device_count()

+prompt = num_samples * [prompt]

+prompt_ids = pipeline.prepare_inputs(prompt)

+

+# shard inputs and rng

+params = replicate(params)

+prng_seed = jax.random.split(prng_seed, jax.device_count())

+prompt_ids = shard(prompt_ids)

+

+images = pipeline(prompt_ids, params, prng_seed, num_inference_steps, jit=True).images

+images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))

+```
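One reason `bfloat16` is the usual half-precision choice on TPU is that it keeps all 8 exponent bits of `float32` and gives up mantissa precision instead, so it covers the same numeric range while still halving memory. The standard-library sketch below makes this concrete; its `to_bfloat16` helper is hypothetical and truncates, whereas real converters typically round to nearest even.

```python
import math
import struct


def float32_bits(x: float) -> int:
    """IEEE-754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16(x: float) -> float:
    """Hypothetical helper: keep the sign, all 8 exponent bits and the top 7 mantissa bits.

    Truncates the low 16 bits; real converters usually round to nearest even.
    """
    bits = float32_bits(x) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# Largest finite float16 value: bit pattern 0x7BFF.
FLOAT16_MAX = struct.unpack("<e", struct.pack("<H", 0x7BFF))[0]

print(FLOAT16_MAX)                       # 65504.0: float16 overflows above this
print(math.isfinite(to_bfloat16(1e38)))  # True: bfloat16 keeps float32's exponent range
print(to_bfloat16(1.001))                # 1.0: the small fractional part is lost
```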

+

+Diffusers also has an image-to-image generation pipeline with Flax/JAX:

```python

-from diffusers import DDPMScheduler, UNet2DModel

+import jax

+import numpy as np

+import jax.numpy as jnp

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+import requests

+from io import BytesIO

from PIL import Image

-import torch

+from diffusers import FlaxStableDiffusionImg2ImgPipeline

+

+def create_key(seed=0):

+ return jax.random.PRNGKey(seed)

+rng = create_key(0)

+

+url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

+response = requests.get(url)

+init_img = Image.open(BytesIO(response.content)).convert("RGB")

+init_img = init_img.resize((768, 512))

+

+prompts = "A fantasy landscape, trending on artstation"

+

+pipeline, params = FlaxStableDiffusionImg2ImgPipeline.from_pretrained(

+ "CompVis/stable-diffusion-v1-4", revision="flax",

+ dtype=jnp.bfloat16,

+)

+

+num_samples = jax.device_count()

+rng = jax.random.split(rng, jax.device_count())

+prompt_ids, processed_image = pipeline.prepare_inputs(prompt=[prompts]*num_samples, image = [init_img]*num_samples)

+p_params = replicate(params)

+prompt_ids = shard(prompt_ids)

+processed_image = shard(processed_image)

+

+output = pipeline(

+ prompt_ids=prompt_ids,

+ image=processed_image,

+ params=p_params,

+ prng_seed=rng,

+ strength=0.75,

+ num_inference_steps=50,

+ jit=True,

+ height=512,

+ width=768).images

+

+output_images = pipeline.numpy_to_pil(np.asarray(output.reshape((num_samples,) + output.shape[-3:])))

+```

+

+Diffusers also has a text-guided inpainting pipeline with Flax/JAX:

+

+```python

+import jax

import numpy as np

+from flax.jax_utils import replicate

+from flax.training.common_utils import shard

+import PIL

+import requests

+from io import BytesIO

-scheduler = DDPMScheduler.from_pretrained("google/ddpm-cat-256")

-model = UNet2DModel.from_pretrained("google/ddpm-cat-256").to("cuda")

-scheduler.set_timesteps(50)

-

-sample_size = model.config.sample_size

-noise = torch.randn((1, 3, sample_size, sample_size)).to("cuda")

-input = noise

-

-for t in scheduler.timesteps:
-    with torch.no_grad():
-        noisy_residual = model(input, t).sample
-    prev_noisy_sample = scheduler.step(noisy_residual, t, input).prev_sample
-    input = prev_noisy_sample

-

-image = (input / 2 + 0.5).clamp(0, 1)

-image = image.cpu().permute(0, 2, 3, 1).numpy()[0]

-image = Image.fromarray((image * 255).round().astype("uint8"))

-image

-```

-

-Check out the [Quickstart](https://huggingface.co/docs/diffusers/quicktour) to launch your diffusion journey today!

-

-## How to navigate the documentation

-

-| **Documentation** | **What can I learn?** |

-|---------------------------------------------------------------------|-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| Tutorial | A basic crash course for learning how to use the library's most important features like using models and schedulers to build your own diffusion system, and training your own diffusion model. |

-| Loading | Guides for how to load and configure all the components (pipelines, models, and schedulers) of the library, as well as how to use different schedulers. |

-| Pipelines for inference | Guides for how to use pipelines for different inference tasks, batched generation, controlling generated outputs and randomness, and how to contribute a pipeline to the library. |

-| Optimization | Guides for how to optimize your diffusion model to run faster and consume less memory. |

-| [Training](https://huggingface.co/docs/diffusers/training/overview) | Guides for how to train a diffusion model for different tasks with different training techniques. |

-

-## Supported pipelines

-

-| Pipeline | Paper | Tasks |

-|---|---|:---:|

-| [alt_diffusion](./api/pipelines/alt_diffusion) | [**AltDiffusion**](https://arxiv.org/abs/2211.06679) | Image-to-Image Text-Guided Generation |

-| [audio_diffusion](./api/pipelines/audio_diffusion) | [**Audio Diffusion**](https://github.com/teticio/audio-diffusion.git) | Unconditional Audio Generation |

-| [controlnet](./api/pipelines/stable_diffusion/controlnet) | [**ControlNet with Stable Diffusion**](https://arxiv.org/abs/2302.05543) | Image-to-Image Text-Guided Generation |

-| [cycle_diffusion](./api/pipelines/cycle_diffusion) | [**Cycle Diffusion**](https://arxiv.org/abs/2210.05559) | Image-to-Image Text-Guided Generation |

-| [dance_diffusion](./api/pipelines/dance_diffusion) | [**Dance Diffusion**](https://github.com/williamberman/diffusers.git) | Unconditional Audio Generation |

-| [ddpm](./api/pipelines/ddpm) | [**Denoising Diffusion Probabilistic Models**](https://arxiv.org/abs/2006.11239) | Unconditional Image Generation |

-| [ddim](./api/pipelines/ddim) | [**Denoising Diffusion Implicit Models**](https://arxiv.org/abs/2010.02502) | Unconditional Image Generation |

-| [latent_diffusion](./api/pipelines/latent_diffusion) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)| Text-to-Image Generation |

-| [latent_diffusion](./api/pipelines/latent_diffusion) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)| Super Resolution Image-to-Image |

-| [latent_diffusion_uncond](./api/pipelines/latent_diffusion_uncond) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752) | Unconditional Image Generation |

-| [paint_by_example](./api/pipelines/paint_by_example) | [**Paint by Example: Exemplar-based Image Editing with Diffusion Models**](https://arxiv.org/abs/2211.13227) | Image-Guided Image Inpainting |

-| [pndm](./api/pipelines/pndm) | [**Pseudo Numerical Methods for Diffusion Models on Manifolds**](https://arxiv.org/abs/2202.09778) | Unconditional Image Generation |

-| [score_sde_ve](./api/pipelines/score_sde_ve) | [**Score-Based Generative Modeling through Stochastic Differential Equations**](https://openreview.net/forum?id=PxTIG12RRHS) | Unconditional Image Generation |

-| [score_sde_vp](./api/pipelines/score_sde_vp) | [**Score-Based Generative Modeling through Stochastic Differential Equations**](https://openreview.net/forum?id=PxTIG12RRHS) | Unconditional Image Generation |

-| [semantic_stable_diffusion](./api/pipelines/semantic_stable_diffusion) | [**Semantic Guidance**](https://arxiv.org/abs/2301.12247) | Text-Guided Generation |

-| [stable_diffusion_text2img](./api/pipelines/stable_diffusion/text2img) | [**Stable Diffusion**](https://stability.ai/blog/stable-diffusion-public-release) | Text-to-Image Generation |

-| [stable_diffusion_img2img](./api/pipelines/stable_diffusion/img2img) | [**Stable Diffusion**](https://stability.ai/blog/stable-diffusion-public-release) | Image-to-Image Text-Guided Generation |

-| [stable_diffusion_inpaint](./api/pipelines/stable_diffusion/inpaint) | [**Stable Diffusion**](https://stability.ai/blog/stable-diffusion-public-release) | Text-Guided Image Inpainting |

-| [stable_diffusion_panorama](./api/pipelines/stable_diffusion/panorama) | [**MultiDiffusion**](https://multidiffusion.github.io/) | Text-to-Panorama Generation |

-| [stable_diffusion_pix2pix](./api/pipelines/stable_diffusion/pix2pix) | [**InstructPix2Pix**](https://github.com/timothybrooks/instruct-pix2pix) | Text-Guided Image Editing|

-| [stable_diffusion_pix2pix_zero](./api/pipelines/stable_diffusion/pix2pix_zero) | [**Zero-shot Image-to-Image Translation**](https://pix2pixzero.github.io/) | Text-Guided Image Editing |

-| [stable_diffusion_attend_and_excite](./api/pipelines/stable_diffusion/attend_and_excite) | [**Attend and Excite for Stable Diffusion**](https://attendandexcite.github.io/Attend-and-Excite/) | Text-to-Image Generation |

-| [stable_diffusion_self_attention_guidance](./api/pipelines/stable_diffusion/self_attention_guidance) | [**Self-Attention Guidance**](https://ku-cvlab.github.io/Self-Attention-Guidance) | Text-to-Image Generation |

-| [stable_diffusion_image_variation](./stable_diffusion/image_variation) | [**Stable Diffusion Image Variations**](https://github.com/LambdaLabsML/lambda-diffusers#stable-diffusion-image-variations) | Image-to-Image Generation |

-| [stable_diffusion_latent_upscale](./stable_diffusion/latent_upscale) | [**Stable Diffusion Latent Upscaler**](https://twitter.com/StabilityAI/status/1590531958815064065) | Text-Guided Super Resolution Image-to-Image |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Stable Diffusion 2**](https://stability.ai/blog/stable-diffusion-v2-release) | Text-to-Image Generation |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Stable Diffusion 2**](https://stability.ai/blog/stable-diffusion-v2-release) | Text-Guided Image Inpainting |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Depth-Conditional Stable Diffusion**](https://github.com/Stability-AI/stablediffusion#depth-conditional-stable-diffusion) | Depth-to-Image Generation |

-| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [**Stable Diffusion 2**](https://stability.ai/blog/stable-diffusion-v2-release) | Text-Guided Super Resolution Image-to-Image |

-| [stable_diffusion_safe](./api/pipelines/stable_diffusion_safe) | [**Safe Stable Diffusion**](https://arxiv.org/abs/2211.05105) | Text-Guided Generation |

-| [stable_unclip](./stable_unclip) | **Stable unCLIP** | Text-to-Image Generation |

-| [stable_unclip](./stable_unclip) | **Stable unCLIP** | Image-to-Image Text-Guided Generation |

-| [stochastic_karras_ve](./api/pipelines/stochastic_karras_ve) | [**Elucidating the Design Space of Diffusion-Based Generative Models**](https://arxiv.org/abs/2206.00364) | Unconditional Image Generation |

-| [unclip](./api/pipelines/unclip) | [Hierarchical Text-Conditional Image Generation with CLIP Latents](https://arxiv.org/abs/2204.06125) | Text-to-Image Generation |

-| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Text-to-Image Generation |

-| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Image Variations Generation |

-| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Dual Image and Text Guided Generation |

-| [vq_diffusion](./api/pipelines/vq_diffusion) | [Vector Quantized Diffusion Model for Text-to-Image Synthesis](https://arxiv.org/abs/2111.14822) | Text-to-Image Generation |

-

-## Contribution

-We ❤️ contributions from the open-source community!

-If you want to contribute to this library, please check out our [Contribution guide](https://github.com/huggingface/diffusers/blob/main/CONTRIBUTING.md).

-You can look out for [issues](https://github.com/huggingface/diffusers/issues) you'd like to tackle to contribute to the library.

-- See [Good first issues](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) for general opportunities to contribute

-- See [New model/pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) to contribute exciting new diffusion models / diffusion pipelines

-- See [New scheduler](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22)

+from diffusers import FlaxStableDiffusionInpaintPipeline

-Also, say 👋 in our public Discord channel. We discuss the hottest trends about diffusion models, help each other with contributions, personal projects or

-just hang out ☕.

+def download_image(url):

+ response = requests.get(url)

+ return PIL.Image.open(BytesIO(response.content)).convert("RGB")

+img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

+mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

+

+init_image = download_image(img_url).resize((512, 512))

+mask_image = download_image(mask_url).resize((512, 512))

+

+pipeline, params = FlaxStableDiffusionInpaintPipeline.from_pretrained("xvjiarui/stable-diffusion-2-inpainting")

+

+prompt = "Face of a yellow cat, high resolution, sitting on a park bench"

+prng_seed = jax.random.PRNGKey(0)

+num_inference_steps = 50

+

+num_samples = jax.device_count()

+prompt = num_samples * [prompt]

+init_image = num_samples * [init_image]

+mask_image = num_samples * [mask_image]

+prompt_ids, processed_masked_images, processed_masks = pipeline.prepare_inputs(prompt, init_image, mask_image)

+

+

+# shard inputs and rng

+params = replicate(params)

+prng_seed = jax.random.split(prng_seed, jax.device_count())

+prompt_ids = shard(prompt_ids)

+processed_masked_images = shard(processed_masked_images)

+processed_masks = shard(processed_masks)

+

+images = pipeline(prompt_ids, processed_masks, processed_masked_images, params, prng_seed, num_inference_steps, jit=True).images

+images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))

+```

+

+### Image-to-Image text-guided generation with Stable Diffusion

+

+The `StableDiffusionImg2ImgPipeline` lets you pass a text prompt and an initial image to condition the generation of new images.

+

+```python

+import requests

+import torch

+from PIL import Image

+from io import BytesIO

+

+from diffusers import StableDiffusionImg2ImgPipeline

+

+# load the pipeline

+device = "cuda"

+model_id_or_path = "runwayml/stable-diffusion-v1-5"

+pipe = StableDiffusionImg2ImgPipeline.from_pretrained(model_id_or_path, torch_dtype=torch.float16)

+

+# or download via git clone https://huggingface.co/runwayml/stable-diffusion-v1-5

+# and pass `model_id_or_path="./stable-diffusion-v1-5"`.

+pipe = pipe.to(device)

+

+# let's download an initial image

+url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

+

+response = requests.get(url)

+init_image = Image.open(BytesIO(response.content)).convert("RGB")

+init_image = init_image.resize((768, 512))

+

+prompt = "A fantasy landscape, trending on artstation"

+

+images = pipe(prompt=prompt, image=init_image, strength=0.75, guidance_scale=7.5).images

+

+images[0].save("fantasy_landscape.png")

+```

+You can also run this example on [Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/image_2_image_using_diffusers.ipynb).
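The `strength` argument controls how far the initial image is pushed toward noise before denoising starts, and therefore how many of the denoising steps actually run. The helper below is a sketch of that mapping as we understand it from the pipeline's timestep selection; `img2img_steps` is a hypothetical name, and the exact rounding may differ between diffusers versions. With the default of 50 steps and `strength=0.75`, it runs 37 steps.

```python
def img2img_steps(num_inference_steps: int, strength: float) -> int:
    """Hypothetical helper: number of denoising steps actually run for a given strength."""
    # The init image is noised up to the point `strength` of the way through the schedule,
    # so only that final share of the steps is executed.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


print(img2img_steps(50, 0.75))  # 37
print(img2img_steps(50, 1.0))   # 50: the init image is essentially replaced by noise
```

Lower `strength` values keep the output closer to the initial image because fewer denoising steps are applied to it.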

+

+### In-painting using Stable Diffusion

+

+The `StableDiffusionInpaintPipeline` lets you edit specific parts of an image by providing a mask and a text prompt.

+

+```python

+import PIL

+import requests

+import torch

+from io import BytesIO

+

+from diffusers import StableDiffusionInpaintPipeline

+

+def download_image(url):

+ response = requests.get(url)

+ return PIL.Image.open(BytesIO(response.content)).convert("RGB")

+

+img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

+mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

+

+init_image = download_image(img_url).resize((512, 512))

+mask_image = download_image(mask_url).resize((512, 512))

+

+pipe = StableDiffusionInpaintPipeline.from_pretrained("runwayml/stable-diffusion-inpainting", torch_dtype=torch.float16)

+pipe = pipe.to("cuda")

+

+prompt = "Face of a yellow cat, high resolution, sitting on a park bench"

+image = pipe(prompt=prompt, image=init_image, mask_image=mask_image).images[0]

+```
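The inpainting pipeline repaints the white areas of `mask_image` and keeps the black ones. The sketch below illustrates, on a tiny grayscale "image" stored as nested lists, the kind of preprocessing this convention implies: scaling the image to [-1, 1], binarizing the mask at 0.5, and blanking the pixels that will be repainted. It is a simplified illustration under those assumptions, not the pipeline's actual code, which works on RGB tensors and then encodes the masked image into latents. `sketch_inpaint_inputs` is a hypothetical name.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def sketch_inpaint_inputs(image: Matrix, mask: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (binary_mask, masked_image) for 0-255 single-channel inputs."""
    # Scale the image from [0, 255] to [-1, 1].
    img = [[p / 127.5 - 1.0 for p in row] for row in image]
    # Scale the mask to [0, 1] and binarize it: 1.0 = repaint, 0.0 = keep.
    bin_mask = [[1.0 if p / 255.0 >= 0.5 else 0.0 for p in row] for row in mask]
    # Keep pixels outside the mask; set pixels to be repainted to 0.0 (mid-gray in [-1, 1]).
    masked = [
        [v if m < 0.5 else 0.0 for v, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(img, bin_mask)
    ]
    return bin_mask, masked


image = [[0, 255], [255, 0]]
mask = [[255, 0], [0, 200]]
print(sketch_inpaint_inputs(image, mask))
```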

+

+### Tweak prompts reusing seeds and latents

+

+You can generate your own latents to reproduce results, or reuse the latents of a result you liked while tweaking the prompt.

+Please have a look at [Reusing seeds for deterministic generation](https://huggingface.co/docs/diffusers/main/en/using-diffusers/reusing_seeds).
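Reproducibility comes down to drawing the initial latent noise from a random generator with a fixed seed; in PyTorch this is typically a seeded `torch.Generator`. The sketch below shows the same idea with Python's standard `random` module, so its numbers will not match what PyTorch produces. It assumes Stable Diffusion v1's latent layout (4 channels at 1/8 of the pixel resolution); `latent_shape` and `seeded_noise` are hypothetical helpers.

```python
import random
from typing import List, Tuple


def latent_shape(batch_size: int, height: int, width: int,
                 channels: int = 4, vae_scale_factor: int = 8) -> Tuple[int, int, int, int]:
    # Stable Diffusion v1 latents: 4 channels, spatially downsampled 8x by the VAE.
    if height % vae_scale_factor or width % vae_scale_factor:
        raise ValueError("height and width must be divisible by the VAE scale factor")
    return (batch_size, channels, height // vae_scale_factor, width // vae_scale_factor)


def seeded_noise(shape: Tuple[int, ...], seed: int) -> List[float]:
    # A dedicated RNG seeded once yields the same Gaussian samples on every run,
    # so the same latents (and, with the same prompt and settings, the same image) come back.
    rng = random.Random(seed)
    count = 1
    for dim in shape:
        count *= dim
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


shape = latent_shape(1, 512, 512)
print(shape)  # (1, 4, 64, 64)
print(seeded_noise(shape, seed=1024) == seeded_noise(shape, seed=1024))  # True
```

Keeping the latents fixed and changing only the prompt is what lets you tweak a result you liked.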

+

+## Fine-Tuning Stable Diffusion

+

+Fine-tuning techniques make it possible to adapt Stable Diffusion to your own dataset, or add new subjects to it. These are some of the techniques supported in `diffusers`:

+

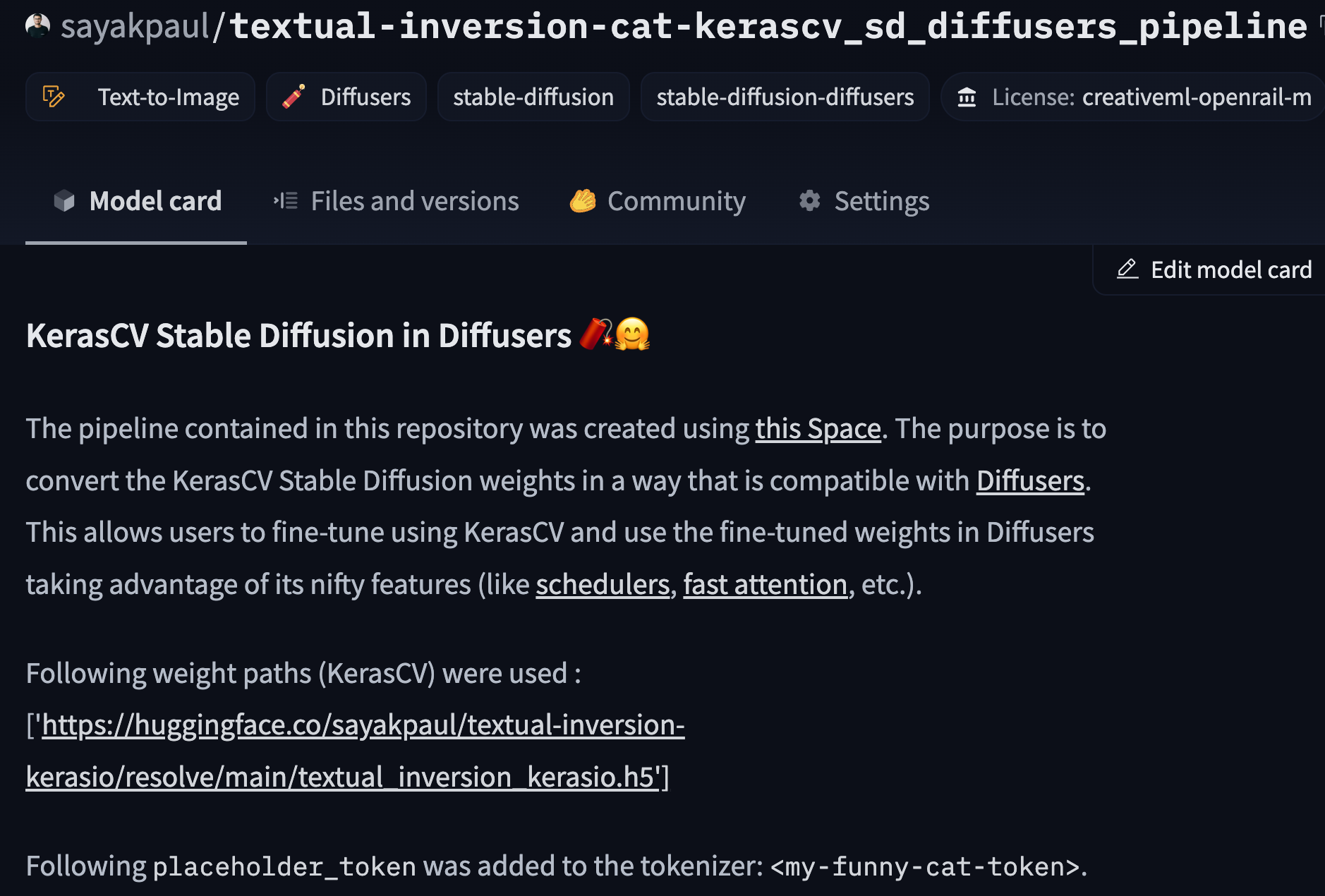

+Textual Inversion is a technique for capturing novel concepts from a small number of example images in a way that can later be used to control text-to-image pipelines. It does so by learning new 'words' in the embedding space of the pipeline's text encoder. These special words can then be used within text prompts to achieve very fine-grained control of the resulting images.

+

+- Textual Inversion. Capture novel concepts from a small set of sample images, and associate them with new "words" in the embedding space of the text encoder. Please refer to [our training examples](https://github.com/huggingface/diffusers/tree/main/examples/textual_inversion) or [documentation](https://huggingface.co/docs/diffusers/training/text_inversion) to try it yourself.

+

+- DreamBooth. Another technique to capture new concepts in Stable Diffusion. This method fine-tunes the UNet (and, optionally, also the text encoder) of the pipeline to achieve impressive results. Please refer to [our training example](https://github.com/huggingface/diffusers/tree/main/examples/dreambooth) and [training report](https://huggingface.co/blog/dreambooth) for additional details and training recommendations.

+

+- Full Stable Diffusion fine-tuning. If you have a larger dataset with a specific look or style, you can fine-tune Stable Diffusion so that it outputs images following those examples. This was the approach taken to create [a Pokémon Stable Diffusion model](https://huggingface.co/justinpinkney/pokemon-stable-diffusion) (by Justin Pinkney / Lambda Labs) and [a Japanese-specific version of Stable Diffusion](https://huggingface.co/spaces/rinna/japanese-stable-diffusion) (by [Rinna Co.](https://github.com/rinnakk/japanese-stable-diffusion/)), among others. You can start at [our text-to-image fine-tuning example](https://github.com/huggingface/diffusers/tree/main/examples/text_to_image) and go from there.
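Textual Inversion keeps the whole pipeline frozen except for the embedding of one new placeholder token. The toy sketch below shows that bookkeeping with plain Python lists: a placeholder token is added to a vocabulary, its embedding is initialized from an existing word (the training example exposes these choices as `--placeholder_token` and `--initializer_token`), and a gradient step updates only that new row. The function names, the two-dimensional embeddings, and the gradient values are made up for illustration; the real script trains the CLIP text encoder's embedding matrix in PyTorch.

```python
from typing import Dict, List


def add_placeholder_token(vocab: Dict[str, int], embeddings: List[List[float]],
                          placeholder: str, initializer: str) -> int:
    """Register `placeholder` as a new token whose embedding starts as a copy of `initializer`."""
    if placeholder in vocab:
        raise ValueError(f"{placeholder!r} is already in the vocabulary")
    new_id = len(embeddings)
    vocab[placeholder] = new_id
    # Copy the row so later updates do not modify the initializer's embedding.
    embeddings.append(list(embeddings[vocab[initializer]]))
    return new_id


def sgd_step_on_new_token(embeddings: List[List[float]], grads: List[List[float]],
                          trainable_id: int, lr: float) -> None:
    """Apply a gradient step to the new token's row only; every other row stays frozen."""
    row = embeddings[trainable_id]
    for i, g in enumerate(grads[trainable_id]):
        row[i] -= lr * g


vocab = {"cat": 0, "toy": 1}
embeddings = [[0.1, 0.2], [0.3, 0.4]]
new_id = add_placeholder_token(vocab, embeddings, "<my-toy>", "toy")
grads = [[1.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
sgd_step_on_new_token(embeddings, grads, new_id, lr=0.1)
print(embeddings)
```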

+

+

+## Stable Diffusion Community Pipelines

+

+The release of Stable Diffusion as an open-source model has fostered a lot of interesting ideas and experimentation.

+Our [Community Examples folder](https://github.com/huggingface/diffusers/tree/main/examples/community) contains many ideas worth exploring, like interpolating to create animated videos, using CLIP Guidance for additional prompt fidelity, term weighting, and much more! [Take a look](https://huggingface.co/docs/diffusers/using-diffusers/custom_pipeline_overview) and [contribute your own](https://huggingface.co/docs/diffusers/using-diffusers/contribute_pipeline).

+

+## Other Examples

+

+There are many ways to try running Diffusers! Here we outline code-focused tools (primarily using `DiffusionPipeline`s and Google Colab) and interactive web-tools.

+

+### Running Code

+

+If you want to run the code yourself 💻, you can try out:

+- [Text-to-Image Latent Diffusion](https://huggingface.co/CompVis/ldm-text2im-large-256)

+```python

+# !pip install diffusers["torch"] transformers

+from diffusers import DiffusionPipeline

+

+device = "cuda"

+model_id = "CompVis/ldm-text2im-large-256"

+

+# load model and scheduler

+ldm = DiffusionPipeline.from_pretrained(model_id)

+ldm = ldm.to(device)

+

+# run pipeline in inference (sample random noise and denoise)

+prompt = "A painting of a squirrel eating a burger"

+image = ldm([prompt], num_inference_steps=50, eta=0.3, guidance_scale=6).images[0]

+

+# save image

+image.save("squirrel.png")

+```
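The `guidance_scale=6` argument controls classifier-free guidance: at each denoising step the model predicts the noise both with and without the prompt, and the text-to-image pipelines combine the two predictions as `uncond + guidance_scale * (text - uncond)`. The sketch below applies that combination to plain Python lists standing in for the noise tensors; the function name is hypothetical.

```python
from typing import List


def classifier_free_guidance(noise_uncond: List[float], noise_text: List[float],
                             guidance_scale: float) -> List[float]:
    # Push the prediction away from the unconditional estimate and toward the
    # text-conditioned one; guidance_scale = 1.0 returns the text-conditioned estimate.
    return [u + guidance_scale * (t - u) for u, t in zip(noise_uncond, noise_text)]


uncond = [0.0, 1.0]
text = [1.0, 1.5]
print(classifier_free_guidance(uncond, text, guidance_scale=6.0))  # [6.0, 4.0]
```

Larger scales follow the prompt more closely, usually at some cost in image diversity.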

+- [Unconditional Diffusion with discrete scheduler](https://huggingface.co/google/ddpm-celebahq-256)

+```python

+# !pip install diffusers["torch"]

+from diffusers import DDPMPipeline, DDIMPipeline, PNDMPipeline

+

+model_id = "google/ddpm-celebahq-256"

+device = "cuda"

+

+# load model and scheduler

+ddpm = DDPMPipeline.from_pretrained(model_id) # you can replace DDPMPipeline with DDIMPipeline or PNDMPipeline for faster inference

+ddpm.to(device)

+

+# run pipeline in inference (sample random noise and denoise)

+image = ddpm().images[0]

+

+# save image

+image.save("ddpm_generated_image.png")

+```
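The comment in the example suggests swapping in `DDIMPipeline` or `PNDMPipeline` for faster inference. DDPM sampling visits every training timestep (assumed here to be 1000, the value used in the DDPM paper), while a DDIM-style sampler can follow a strided subset of them. The sketch below computes such a strided schedule in plain Python, assuming evenly spaced timesteps with no offset; `leading_timesteps` is a hypothetical helper, and real scheduler configurations may add an offset or use a different spacing.

```python
from typing import List


def leading_timesteps(num_train_timesteps: int, num_inference_steps: int) -> List[int]:
    # Evenly spaced timesteps, visited from noisiest to cleanest.
    step_ratio = num_train_timesteps // num_inference_steps
    return [i * step_ratio for i in range(num_inference_steps)][::-1]


ddpm_steps = leading_timesteps(1000, 1000)  # DDPM-style: every training timestep
ddim_steps = leading_timesteps(1000, 50)    # DDIM-style: 50 strided timesteps
print(len(ddpm_steps), len(ddim_steps))  # 1000 50
print(ddim_steps[:3], ddim_steps[-1])    # [980, 960, 940] 0
```

Running 50 network evaluations instead of 1000 is where the speed-up comes from.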

+- [Unconditional Latent Diffusion](https://huggingface.co/CompVis/ldm-celebahq-256)

+- [Unconditional Diffusion with continuous scheduler](https://huggingface.co/google/ncsnpp-ffhq-1024)

+

+**Other Image Notebooks**:

+* [image-to-image generation with Stable Diffusion](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/image_2_image_using_diffusers.ipynb)

+* [tweak images via repeated Stable Diffusion seeds](https://colab.research.google.com/github/pcuenca/diffusers-examples/blob/main/notebooks/stable-diffusion-seeds.ipynb)

+

+**Diffusers for Other Modalities**:

+* [Molecule conformation generation](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/geodiff_molecule_conformation.ipynb)

+* [Model-based reinforcement learning](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/reinforcement_learning_with_diffusers.ipynb)

+

+### Web Demos

+If you just want to play around with some web demos, you can try out the following 🚀 Spaces:

+| Model | Hugging Face Spaces |

+|-------------------------------- |------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| Text-to-Image Latent Diffusion | [CompVis/text2img-latent-diffusion](https://huggingface.co/spaces/CompVis/text2img-latent-diffusion) |

+| Faces generator | [CompVis/celeba-latent-diffusion](https://huggingface.co/spaces/CompVis/celeba-latent-diffusion) |

+| DDPM with different schedulers | [fusing/celeba-diffusion](https://huggingface.co/spaces/fusing/celeba-diffusion) |

+| Conditional generation from sketch | [huggingface/diffuse-the-rest](https://huggingface.co/spaces/huggingface/diffuse-the-rest) |

+| Composable diffusion | [Shuang59/Composable-Diffusion](https://huggingface.co/spaces/Shuang59/Composable-Diffusion) |

+

+## Definitions

+

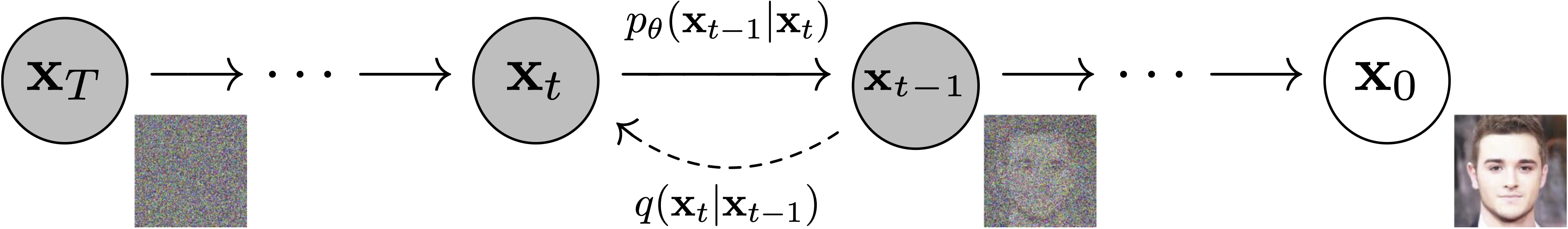

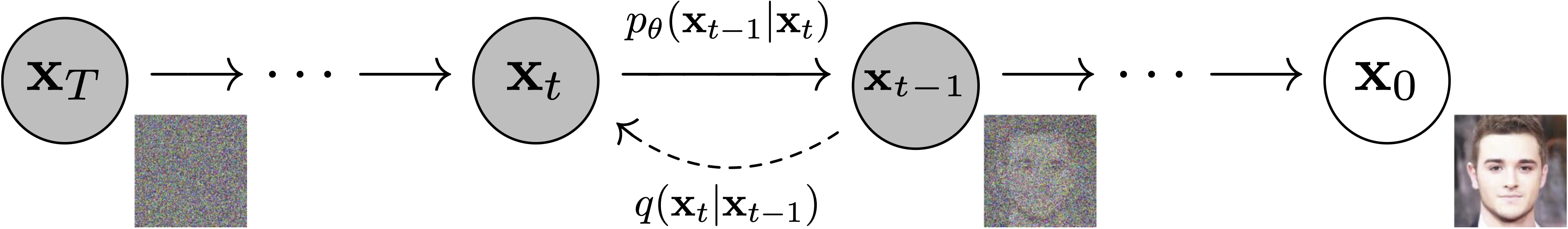

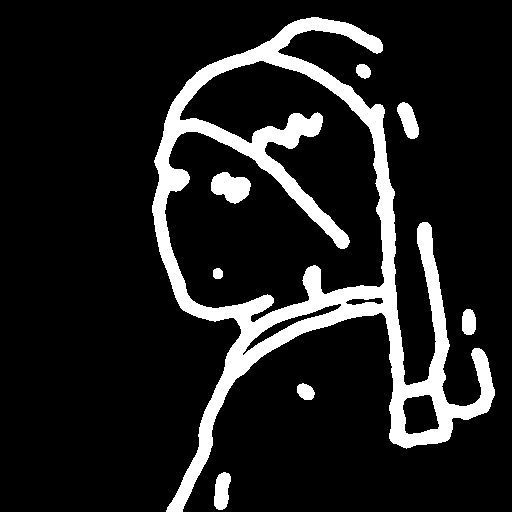

+**Models**: Neural network that models $p_\theta(\mathbf{x}_{t-1}|\mathbf{x}_t)$ (see image below) and is trained end-to-end to *denoise* a noisy input to an image.

+*Examples*: UNet, Conditioned UNet, 3D UNet, Transformer UNet
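A model in this sense is trained to denoise. A common way to build its training examples, following the DDPM formulation, is to noise a clean sample in closed form, $\mathbf{x}_t = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1-\bar\alpha_t}\,\boldsymbol\epsilon$, and train the network to predict $\boldsymbol\epsilon$ from $(\mathbf{x}_t, t)$. The plain-Python sketch below assumes that epsilon-prediction setup with the DDPM paper's linear beta schedule (1e-4 to 0.02 over 1000 steps) and uses lists in place of image tensors; the function names are hypothetical.

```python
import math
import random
from typing import List, Tuple


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    # alpha_bar_t = product over s <= t of (1 - beta_s), for a linear beta schedule.
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def make_training_pair(x0: List[float], t: int, alpha_bars: List[float],
                       rng: random.Random) -> Tuple[List[float], List[float]]:
    # Sample x_t ~ q(x_t | x_0) in closed form; the model learns to predict `noise` from (x_t, t).
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bars[t]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return x_t, noise


alpha_bars = linear_alpha_bars()
rng = random.Random(0)
x_t, target = make_training_pair([0.5, -0.5, 1.0], t=500, alpha_bars=alpha_bars, rng=rng)
print(x_t, target)
```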

+

+

. We discuss the hottest trends about diffusion models, help each other with contributions, personal projects or

-just hang out ☕.

+def download_image(url):

+ response = requests.get(url)

+ return PIL.Image.open(BytesIO(response.content)).convert("RGB")

+img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

+mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

+

+init_image = download_image(img_url).resize((512, 512))

+mask_image = download_image(mask_url).resize((512, 512))

+

+pipeline, params = FlaxStableDiffusionInpaintPipeline.from_pretrained("xvjiarui/stable-diffusion-2-inpainting")

+

+prompt = "Face of a yellow cat, high resolution, sitting on a park bench"

+prng_seed = jax.random.PRNGKey(0)

+num_inference_steps = 50

+

+num_samples = jax.device_count()

+prompt = num_samples * [prompt]

+init_image = num_samples * [init_image]

+mask_image = num_samples * [mask_image]

+prompt_ids, processed_masked_images, processed_masks = pipeline.prepare_inputs(prompt, init_image, mask_image)

+

+

+# shard inputs and rng

+params = replicate(params)

+prng_seed = jax.random.split(prng_seed, jax.device_count())

+prompt_ids = shard(prompt_ids)

+processed_masked_images = shard(processed_masked_images)

+processed_masks = shard(processed_masks)

+

+images = pipeline(prompt_ids, processed_masks, processed_masked_images, params, prng_seed, num_inference_steps, jit=True).images

+images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))

+```
