diff --git a/README.md b/README.md

index 125b4b71..d8f2e5bc 100644

--- a/README.md

+++ b/README.md

@@ -2,7 +2,11 @@

## Open Source & Open Telemetry(OTEL) Observability for LLM applications

-

+

+

+

+

+[](https://railway.app/template/yZGbfC?referralCode=MA2S9H)

---

@@ -10,7 +14,6 @@ Langtrace is an open source observability software which lets you capture, debug

-

## Open Telemetry Support

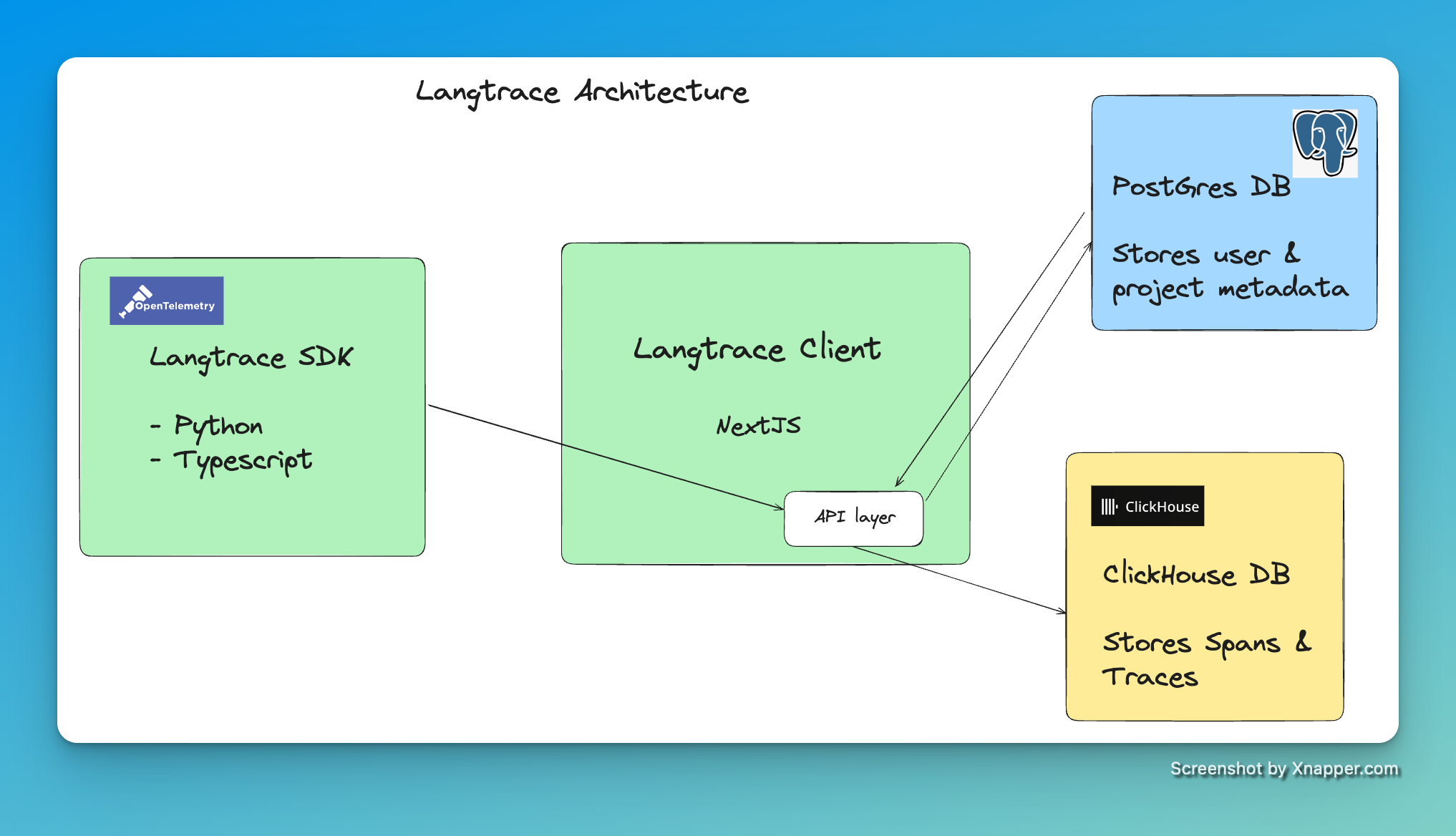

The traces generated by Langtrace adhere to [Open Telemetry Standards(OTEL)](https://opentelemetry.io/docs/concepts/signals/traces/). We are developing [semantic conventions](https://opentelemetry.io/docs/concepts/semantic-conventions/) for the traces generated by this project. You can checkout the current definitions in [this repository](https://github.com/Scale3-Labs/langtrace-trace-attributes/tree/main/schemas). Note: This is an ongoing development and we encourage you to get involved and welcome your feedback.

@@ -73,6 +76,9 @@ To run the Langtrace locally, you have to run three services:

- Postgres database

- Clickhouse database

+> [!IMPORTANT]

+> Check out the [documentation](https://docs.langtrace.ai/hosting/overview) for the available deployment options and configurations.

+

Requirements:

- Docker

@@ -94,7 +100,7 @@ The application will be available at `http://localhost:3000`.

> if you wish to build the docker image locally and use it, run the docker compose up command with the `--build` flag.

> [!TIP]

-> to manually pull the docker image from docker hub, run the following command:

+> To manually pull the Docker image from [Docker Hub](https://hub.docker.com/r/scale3labs/langtrace-client/tags), run the following command:

>

> ```bash

> docker pull scale3labs/langtrace-client:latest

@@ -193,6 +199,7 @@ Either you **update the docker compose version** OR **remove the depends_on prop

If clickhouse server is not starting, it is likely that the port 8123 is already in use. You can change the port in the docker-compose file.

+

Install the langtrace SDK in your application by following the same instructions under the Langtrace Cloud section above for sending traces to your self hosted setup.

---

@@ -228,7 +235,6 @@ Langtrace automatically captures traces from the following vendors:

-

---

## Feature Requests and Issues

diff --git a/app/(protected)/project/[project_id]/datasets/page.tsx b/app/(protected)/project/[project_id]/datasets/page.tsx

index ca6b389b..2cc177a3 100644

--- a/app/(protected)/project/[project_id]/datasets/page.tsx

+++ b/app/(protected)/project/[project_id]/datasets/page.tsx

@@ -1,4 +1,4 @@

-import Parent from "@/components/project/dataset/parent";

+import DataSet from "@/components/project/dataset/data-set";

import { authOptions } from "@/lib/auth/options";

import { Metadata } from "next";

import { getServerSession } from "next-auth";

@@ -18,7 +18,7 @@ export default async function Page() {

return (

<>

-

+

);

}

diff --git a/app/(protected)/project/[project_id]/datasets/promptset/[promptset_id]/page.tsx b/app/(protected)/project/[project_id]/datasets/promptset/[promptset_id]/page.tsx

deleted file mode 100644

index 5390ee95..00000000

--- a/app/(protected)/project/[project_id]/datasets/promptset/[promptset_id]/page.tsx

+++ /dev/null

@@ -1,183 +0,0 @@

-"use client";

-

-import { CreatePrompt } from "@/components/project/dataset/create-data";

-import { EditPrompt } from "@/components/project/dataset/edit-data";

-import { Spinner } from "@/components/shared/spinner";

-import { Button } from "@/components/ui/button";

-import { Separator } from "@/components/ui/separator";

-import { Skeleton } from "@/components/ui/skeleton";

-import { PAGE_SIZE } from "@/lib/constants";

-import { Prompt } from "@prisma/client";

-import { ChevronLeft } from "lucide-react";

-import { useParams } from "next/navigation";

-import { useState } from "react";

-import { useBottomScrollListener } from "react-bottom-scroll-listener";

-import { useQuery } from "react-query";

-import { toast } from "sonner";

-

-export default function Promptset() {

- const promptset_id = useParams()?.promptset_id as string;

- const [page, setPage] = useState(1);

- const [totalPages, setTotalPages] = useState(1);

- const [showLoader, setShowLoader] = useState(false);

- const [currentData, setCurrentData] = useState([]);

-

- useBottomScrollListener(() => {

- if (fetchPromptset.isRefetching) {

- return;

- }

- if (page <= totalPages) {

- setShowLoader(true);

- fetchPromptset.refetch();

- }

- });

-

- const fetchPromptset = useQuery({

- queryKey: [promptset_id],

- queryFn: async () => {

- const response = await fetch(

- `/api/promptset?promptset_id=${promptset_id}&page=${page}&pageSize=${PAGE_SIZE}`

- );

- if (!response.ok) {

- const error = await response.json();

- throw new Error(error?.message || "Failed to fetch promptset");

- }

- const result = await response.json();

- return result;

- },

- onSuccess: (data) => {

- // Get the newly fetched data and metadata

- const newData: Prompt[] = data?.promptsets?.Prompt || [];

- const metadata = data?.metadata || {};

-

- // Update the total pages and current page number

- setTotalPages(parseInt(metadata?.total_pages) || 1);

- if (parseInt(metadata?.page) <= parseInt(metadata?.total_pages)) {

- setPage(parseInt(metadata?.page) + 1);

- }

-

- // Merge the new data with the existing data

- if (currentData.length > 0) {

- const updatedData = [...currentData, ...newData];

-

- // Remove duplicates

- const uniqueData = updatedData.filter(

- (v: any, i: number, a: any) =>

- a.findIndex((t: any) => t.id === v.id) === i

- );

-

- setCurrentData(uniqueData);

- } else {

- setCurrentData(newData);

- }

- setShowLoader(false);

- },

- onError: (error) => {

- setShowLoader(false);

- toast.error("Failed to fetch promptset", {

- description: error instanceof Error ? error.message : String(error),

- });

- },

- });

-

- if (fetchPromptset.isLoading || !fetchPromptset.data || !currentData) {

- return ;

- } else {

- return (

-

+ Start by creating the first version of your prompt. Once created,

+ you can test it in the playground with different models and model

+ settings and continue to iterate and add more versions to the

+ prompt.

+

+ );

+}
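
Reviewer note on the deleted `promptset/[promptset_id]/page.tsx` above: its `onSuccess` handler appended each newly fetched page to `currentData` and then dropped duplicates with a `filter` + `findIndex` pass, which rescans the array for every element. If the same pattern is needed elsewhere, a minimal sketch of a linear-time alternative using a `Set` could look like the following. The helper name `mergeUniqueById` and the `HasId` shape are hypothetical; only the `id` field comes from the diff (`t.id === v.id`). Unlike the original, this sketch also de-duplicates when the existing list is empty.

```typescript
// Hypothetical record shape: only `id` is taken from the diff; its type is an assumption.
interface HasId {
  id: string | number;
}

// Merge a newly fetched page into the rows already held in state.
// Keeps the first occurrence of each id and preserves the original order.
function mergeUniqueById<T extends HasId>(current: T[], incoming: T[]): T[] {
  const seen = new Set<string | number>();
  const merged: T[] = [];
  for (const item of [...current, ...incoming]) {
    if (!seen.has(item.id)) {
      seen.add(item.id);
      merged.push(item);
    }
  }
  return merged;
}
```

In a React component this could be called from a functional state update, for example `setCurrentData((prev) => mergeUniqueById(prev, newData))`, so the merge always works on the latest state instead of a value captured by the closure.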

diff --git a/app/(protected)/project/[project_id]/prompts/page-client.tsx b/app/(protected)/project/[project_id]/prompts/page-client.tsx

index 94815450..8b4eafcf 100644

--- a/app/(protected)/project/[project_id]/prompts/page-client.tsx

+++ b/app/(protected)/project/[project_id]/prompts/page-client.tsx

@@ -1,6 +1,5 @@

"use client";

-import { AddtoPromptset } from "@/components/shared/add-to-promptset";

import { Spinner } from "@/components/shared/spinner";

import { Button } from "@/components/ui/button";

import { Checkbox } from "@/components/ui/checkbox";

@@ -8,7 +7,6 @@ import { Separator } from "@/components/ui/separator";

import { Skeleton } from "@/components/ui/skeleton";

import { PAGE_SIZE } from "@/lib/constants";

import { extractSystemPromptFromLlmInputs } from "@/lib/utils";

-import { CheckCircledIcon } from "@radix-ui/react-icons";

import { ChevronDown, ChevronRight, RabbitIcon } from "lucide-react";

import { useParams } from "next/navigation";

import { useState } from "react";

@@ -42,7 +40,7 @@ export default function PageClient({ email }: { email: string }) {

queryKey: [`fetch-prompts-${projectId}-query`],

queryFn: async () => {

const response = await fetch(

- `/api/prompt?projectId=${projectId}&page=${page}&pageSize=${PAGE_SIZE}`

+ `/api/span-prompt?projectId=${projectId}&page=${page}&pageSize=${PAGE_SIZE}`

);

if (!response.ok) {

const error = await response.json();

@@ -130,9 +128,6 @@ export default function PageClient({ email }: { email: string }) {

return (

-

-

-

These prompts are automatically captured from your traces. The

accuracy of these prompts are calculated based on the evaluation done

@@ -145,7 +140,6 @@ export default function PageClient({ email }: { email: string }) {

- Get started by creating your first prompt set.

+ Get started by creating your first prompt registry.

- Prompt Sets help you categorize and manage a set of prompts. Say

- you would like to group the prompts that give an accuracy of 90%

- of more. You can use the eval tab to add new records to any of

- the prompt sets.

+ A prompt registry is a collection of versions of a single

+ prompt. You can create a prompt registry, add a prompt, and

+ keep updating and versioning that prompt. You can also fetch

+ the prompt through the API and use it in your

+ application.

+ {" "}

+ Note: We do not store your API keys. We use the browser store to

+ save them ONLY for the session. Clearing the browser cache will remove

+ the keys.

+