-

-

Notifications

You must be signed in to change notification settings - Fork 104

ChatGPT attempts new questions from the questions channel #820

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

…iting on time per user. Include modal when no option is included for formatting question to ChatGPT.

- Variable name clean up - Command now only uses modal - Remove optional s from string

- CACHE_DURATION -> COMMAND_COOLDOWN - Unwind if statement

… allow for correct path to indicate to users that something went wrong.

…question. So far, all the system does is read the title and attempt to answer the question. More refinements to come.

…ould come from and if it's asked at all. Code has an error on line 143 of HelpSystemHelper.java.

|

Mind sharing some screenshots of the flow? Much easier to look at than starring at the code only. Also, you gotta resolve that merge conflict :) Seems you worked from the wrong branch and now you have the other unrelated changes in this branch as well, which causes the merge conflict. You have to discard those changes and correctly rebase on |

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Show resolved

Hide resolved

This comment was marked as outdated.

This comment was marked as outdated.

This comment was marked as outdated.

This comment was marked as outdated.

This comment was marked as duplicate.

This comment was marked as duplicate.

…sage if possible OR use the title and a shortened version of the message. Appending tags still possible. - Increase to ChatGptService timeout time. Otherwise, responses would just fail for even slightly complex questions. Also change temperature, which should create more strictly technical answers. - Tags are only appended if the question is still less than the maximum length. - Also included info log test to determine what the final question asked is. - Changed thrown error to mentioning slash command to users instead of silently failing. - Minor string edits.

- Create method to return information on /chatgpt, used if no useful response or error from AI. - Improve substring clipping to include space for question construction.

… checking if string contains desired sequence.

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

- Remove need for question mark in title requirement.

Zabuzard

left a comment

Zabuzard

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

mind sharing up2date pictures of the conversation and the error cases? ideally put them into the description of the PR.

not sure if u did that, but i wanted to have no message at all (just silence) if chatgpt has an issue - did u do that? or does it still respond with some fallback "there was an error blablabla"

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

- Pull out question preparation code into own function - Use orElseThrow() instead of get() - Use toLowerCase(Locale.US) - Update StringBuilder variable name to questionBuilder. - Remove potentially erroneous comment

I updated the description to show these paths. This shows what messages are sent and you can see if you like it or not. |

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

| .flatMap(threadChannel::sendMessage) | ||

| .flatMap(embed -> threadChannel | ||

| .sendMessageEmbeds(HelpSystemHelper.embedWith(aiResponse))); | ||

| } catch (NoSuchElementException e) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

never use exceptions for logic that u could also check with simple if-else. in particular here, the Optional API has a very rich orElse API, make use of it.

answer.orElseGet(() -> {

logger.warn(...);

return useChatGptFallbackMessage(...);

});There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

While I appreciate what the Optional API can do, I think how I have this setup will require some reworking to fix. I use useChatGptFallBackMessage to create a RestAction<Message>. This is required since inside that function it used mentionGuildSlashCommand which creates a RestAction<String>. From looking at the RestAction documentation, this is no way to get the content of the message out (beyond reflection or blocking the thread which is not desired from other code I wrote). If I were able to get it out as a String then the other method would have to be rewritten. For now, I have replaced it with a simple check if the optiona is empty and then logging and returning the fallback message. Let me know if there is some other way I should be doing this.

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

| private Optional<String> prepareChatGptQuestion(ThreadChannel threadChannel, | ||

| String questionFirstMessage) { | ||

| String questionTitle = threadChannel.getName(); | ||

| StringBuilder questionBuilder = new StringBuilder(MAX_QUESTION_LENGTH); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

i suppose questions are rarely max length, are they? try to go for something that is more common in practice, maybe max / 2?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You would be surprised just how fast 200 characters gets filled up. Just taking some sample questions that are on the forum right now they all are around 200 or exceed 200 characters (MAX_LENGTH as defined of now). If the person includes almost any code (and with Java being verbose) the characters are destroyed. It might even be good if we carve out code in the future and just try to get to the human language bits of the question.

The other bit is that I add the tags to the question if a question is too short thus pushing up question lengths.

| if (questionTitle.length() + questionFirstMessage.length() > MAX_QUESTION_LENGTH) { | ||

| // - 1 for the space that will be added afterward. | ||

| questionFirstMessage = questionFirstMessage.substring(0, | ||

| (MAX_QUESTION_LENGTH - 1) - questionTitle.length()); | ||

| } | ||

|

|

||

| questionBuilder.append(questionTitle).append(" ").append(questionFirstMessage); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

i think it would be easier to read if the order would be changed here. like:

questionBuilder.append(title).append(" ");

String shortQuestion = question.substring(0, questionBuilder.length());

questionBuilder.append(shortQuestion);Then the comment is also not needed anymore, since its understandable.

U have to see how that if fits into the mix though.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

questionBuilder.append(questionTitle).append(" ");

if (originalQuestion.length() > MAX_QUESTION_LENGTH - questionBuilder.length()) {

originalQuestion = originalQuestion.substring(0, MAX_QUESTION_LENGTH - questionBuilder.length());

}

questionBuilder.append(originalQuestion);

This is what I got after some refactoring. More or less readable?

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

| private RestAction<Message> createMessages(ThreadChannel threadChannel) { | ||

| return sendHelperHeadsUp(threadChannel).flatMap(Message::pin) | ||

| .flatMap(any -> helper.sendExplanationMessage(threadChannel)); | ||

| .flatMap(any -> helper.sendExplanationMessage(threadChannel)) | ||

| .flatMap(any -> threadChannel.retrieveMessageById(threadChannel.getIdLong()) | ||

| .flatMap(message -> helper.constructChatGptAttempt(threadChannel, | ||

| message.getContentRaw()))); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

in the past, this helper method returned the explanation message, now it returns the chat gpt message.

please double check all its current users to make sure thats okay with them

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It still returns the explanation message. It only has one usage which is in HelpThreadCreatedListener:

private void handleHelpThreadCreated(ThreadChannel threadChannel) {

helper.writeHelpThreadToDatabase(threadChannel.getOwnerIdLong(), threadChannel);

Runnable createMessages = () -> {

try {

createMessages(threadChannel).queue();

} catch (Exception e) {

logger.error(

"Unknown error while creating messages after help-thread ({}) creation",

threadChannel.getId(), e);

}

};

// The creation is delayed, because otherwise it could be too fast and be executed

// after Discord created the thread, but before Discord send OPs initial message.

// Sending messages at that moment is not allowed.

SERVICE.schedule(createMessages, 5, TimeUnit.SECONDS);

}

This is called by another function onChannelCreate which checks to ensure that what is being created is a help thread.

Is this what you mean? I think this works since we want to send this along with each new helper thread created. The messages created also appears to be the ping to the helpers and the explanation messages. Seems like a good place to extend the functionality. But I can appreciate that it might need a new place to live. But it seems like it would still be part of the queue() mentioned in the above code. Let me know what you think.

- Change from 'member' to 'human' - Refactor 'questionFirstMessage' to 'originalQuestion' - Refactor code for creating question to improve readability in case of adding question text versus remaining characters for question. - Include new tagBuilder StringBuilder to decrease insert calls down to one. - Remove throw block which housed logic.

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Show resolved

Hide resolved

- Limit length of question string moved to creation of question string - Remove test for bad AI answer (produces false negatives)

application/src/main/java/org/togetherjava/tjbot/features/help/HelpSystemHelper.java

Outdated

Show resolved

Hide resolved

- Remove * imports

|

Kudos, SonarCloud Quality Gate passed! |

|

|

||

| String question = questionOptional.orElseThrow(); | ||

| logger.debug("The final question sent to chatGPT: {}", question); | ||

| chatGPTAnswer = chatGptService.ask(question); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What if the response is over the character limit? ChatGPT often gives really big responses, so I can't imagine this to not happen.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yup, that's already an issue with /chatgpt that is on master. It silently crashes if it's over the character limit.

And just cutting the answer to fit the limit is a bad solution, obviously. What's the point of getting 80% of the answer, if it's a code example or a step-by-step guide? Code won't work, guide is incomplete and useless.

So one solution is to prompt it to fit the answer within limits, and test it to make sure it works well. With a hard cutoff if a limit is reached.

Another would be to post lengthier answers as a file.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What if we simply ask ChatGPT to limit the answer to a certain limit. (within 4000 characters)

ChatGPT can still exceed that limit, so we'd still require a fallback.

But it should work for most cases, and we don't cut the answer of or anything.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, that's one of my proposed solutions.

We either tell it to fit the answer withing the limit (limit - 500 for optimal results), with a fallback. Or we let it post the full answer as a file.

I like both of them. First one is short and neat, no files. My only worry is that the quality of the answers might lack. For example, can you fit a coherent guide how to install and setup something, with examples, within the limit.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Tested few examples, it seems embed length is enough for most guides with examples.

My worry with embeds is that they are too narrow for code, it might ruin the formatting. Since code would be needed in almost every answer, it's something to consider.

And 2000 char limit or regular messages is likely not enough for bigger answers. Close, but not quite there.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Tested few examples, it seems embed length is enough for most guides with examples.

My worry with embeds is that they are too narrow for code, it might ruin the formatting. Since code would be needed in almost every answer, it's something to consider.And 2000 char limit or regular messages is likely not enough for bigger answers. Close, but not quite there.

Thus, we should fit answers in a file?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Try embeds first, for cosistency. Post a picture of a more complicated answer with code examples.

Files are the last restort, since they are kinda ugly and weird UI wise. You know, getting a file in response to a bot command.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

A file is fine for PC, you have good UX then, not as good as just a message but it's fine.

On phone, yeah uh, have fun opening notepad on your phone to read it.

- Increase of timeout time to two minutes. - Initialize and send setup message to AI per query to restrict answers to limit. ChatGptServiceTest - Include new testing suite. - Include tests to check response time and functioning of error detection. - Test for length of response from AI to be less than 2000 characters. ChatGptCommand - Creation of local variables for better flexibility and readability. HelpSystemHelper - Rename variable to better describe use.

- Refactoring to accommodate above change in other classes amd methods. - Refactor HelpThreadCreatedListener to make creating AI response its own method. - Refactor HelpSystemHelper.constructChatGptAttempt() to place all required messages from AI response into embeds when answering a question.

…y to only break up by new lines and try not to be in code when doing so. - Change messages sent from ChatGPT from embeds into plain messages.

|

This pull request is stale because it has been open 30 days with no activity. Remove stale label, comment or add the valid label or this will be closed in 5 days. |

- Add ChatGptServiceTest.java for testing AI responses of different length - Add response.txt which contains test AI responses (just one for now). - Refactor ChatGptService to not include response parsing/break up. Moved to new util class AIResponseParser

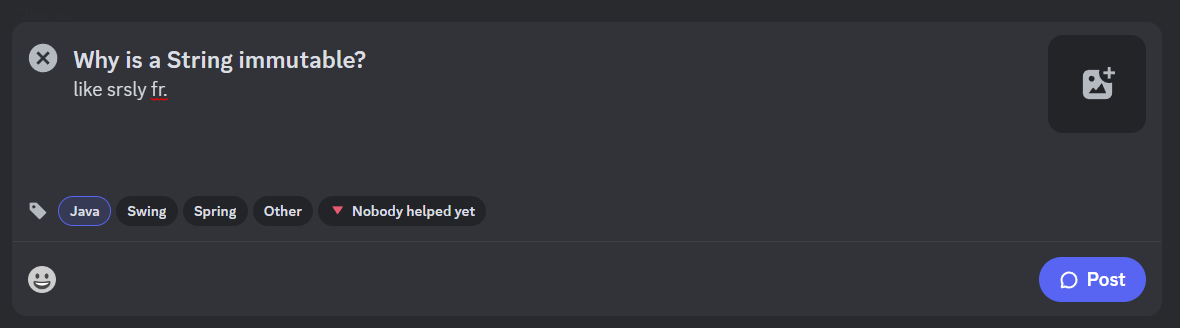

Code execution from user perspective/birds-eye view:

ROSY PATH

Step 1) Enter your question into the questions forum. The question is formed from the title and question message. The title is always include and the message takes up the remaining space we have set for messages.

Additionally, the tags selected by the user are appended to the front of the question to provide more context to ChatGPT. From just a quick look at questions in the forum on the main discord, people ask questions without proper context, much like how titles doesn't include anything about Java since its implied by the asking in this discord. However, users generally seem good at adding the tags for the question.

Step 2) If a suitable question is found, it is sent to ChatGPT. Once the response returns it is checked to see if it was a bad answer. The answer is sent as an embed of an explanation message: it explains that the next message is AI generated, someone should be long soon, and you can use /chatgpt to ask the AI more questions. All this is sent AFTER the original help thread messages (format your code like this, provide details, close the thread when done).

ERROR PATH

Should at any point an error be produced, then all is returned is a message about how you have use /chatgpt to ask questions while you wait for a human. It is like the normal path except for an answer from ChatGPT.

REASONS FOR ERRORS