-

Notifications

You must be signed in to change notification settings - Fork 86

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Added max_batches as a public parameter #1320

Conversation

Codecov Report

@@ Coverage Diff @@

## main #1320 +/- ##

==========================================

+ Coverage 99.95% 99.95% +0.01%

==========================================

Files 213 213

Lines 13560 13575 +15

==========================================

+ Hits 13553 13568 +15

Misses 7 7

Continue to review full report at Codecov.

|

|

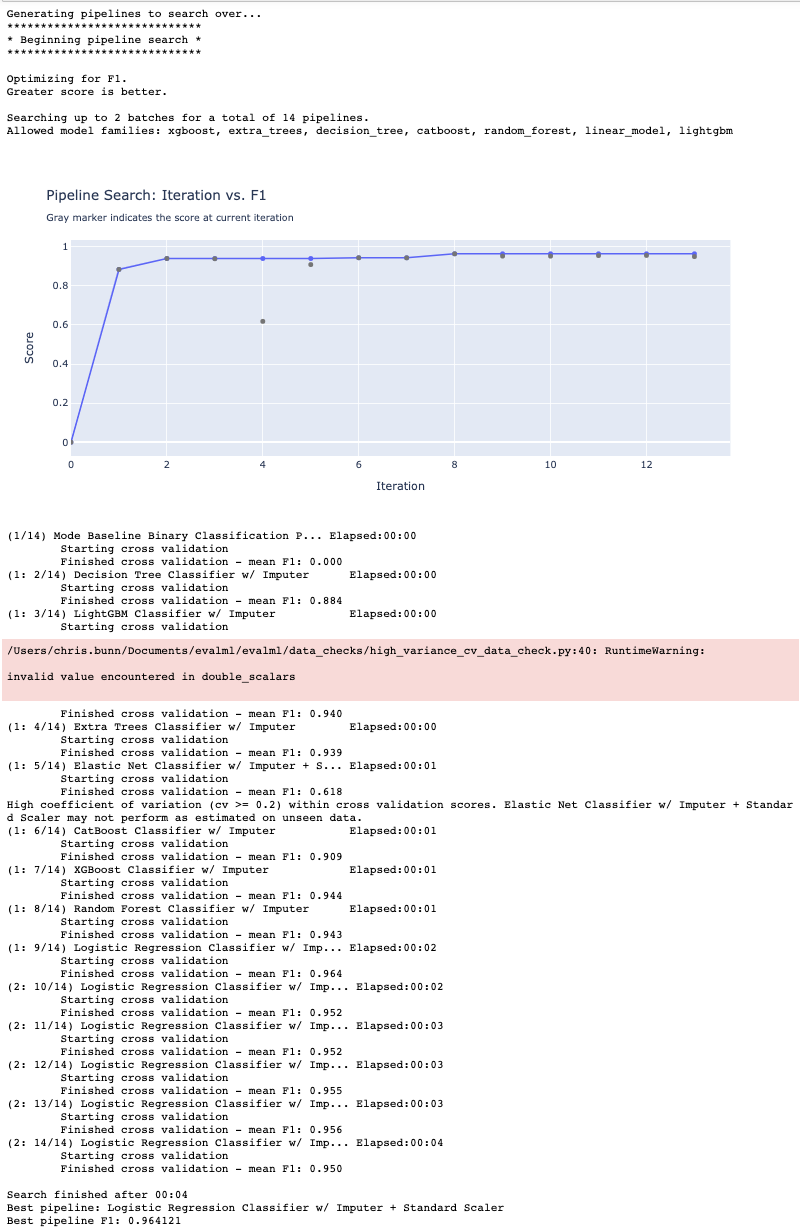

See screenshot above for changes to the progress output. I updated the docstrings, but any suggestions for additional documentation edits are very welcome. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@christopherbunn Looks great!

In terms of formatting the progress - maybe we can do something like Batch 1: Iteration 5/14 to make it self explanatory? I think it might be a good idea to display the batch number regardless of whether the user specified max_batches. Whatever we decide, the formatting for the baseline pipeline iteration should be the same as the other iterations. It looks different in the screenshot you posted.

I'm curious what others think. I think what you have now is great too.

| if check_output: | ||

| assert f"Searching up to {max_batches} batches for a total of {n_automl_pipelines} pipelines." in caplog.text | ||

| for i in range(1, max_batches): | ||

| assert f"({i}: " in caplog.text |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍

@freddyaboulton I think that's a good idea. I was worried that It would look a bit off formatting-wise but just prototyping it a bit and it doesn't look as bad as I thought it would.

My reasoning for not having the same formatting is that I think the baseline is not officially considered as part of any batches. That being said, maybe we can mark it as a "Baseline" pipeline or something like that. Alternatively, it could be "Batch 0"? The formatting would look a bit more consistent but I think it could potentially be confusing as well Taking both of these things into account, the new output could look like this: |

|

@christopherbunn I think the output looks good! I am ok with referring to the baseline as |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@christopherbunn looks good! I left a few comments.

evalml/automl/automl_search.py

Outdated

| @@ -86,6 +86,7 @@ def __init__(self, | |||

| tuner_class=None, | |||

| verbose=True, | |||

| optimize_thresholds=False, | |||

| max_batches=None, | |||

| _max_batches=None): | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@christopherbunn because we named _max_batches as a "private" input arg, let's delete it in this PR in favor of max_batches. This is why we kept it private, to test it out before renaming to make it public!

docs/source/release_notes.rst

Outdated

| @@ -33,9 +34,9 @@ Release Notes | |||

| .. warning:: | |||

|

|

|||

| **Breaking Changes** | |||

| * ``__max_batches`` will be deprecated in favor of ``max_batches`` as it is now public :pr:`1320` | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please update to:

Make

max_batchesargument toAutoMLSearch.searchpublic

docs/source/release_notes.rst

Outdated

| @@ -11,6 +11,7 @@ Release Notes | |||

| * Added percent-better-than-baseline for all objectives to automl.results :pr:`1244` | |||

| * Added ``HighVarianceCVDataCheck`` and replaced synonymous warning in ``AutoMLSearch`` :pr:`1254` | |||

| * Added `PCA Transformer` component for dimensionality reduction :pr:`1270` | |||

| * Added ``max_batches`` parameter to `AutoMLSearch` :pr:`1320` | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We added this previously, so can you change this to

Make

max_batchesargument toAutoMLSearch.searchpublic

evalml/automl/automl_search.py

Outdated

| if _max_batches: | ||

| if not max_batches: | ||

| max_batches = _max_batches | ||

| logger.warning("`_max_batches` will be deprecated in the next release. Use `max_batches` instead.") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

RE comments above, let's delete this, and _max_batches, entirely, in favor of max_batches

| self.max_batches = max_batches | ||

| # This is the default value for IterativeAlgorithm - setting this explicitly makes sure that | ||

| # the behavior of max_batches does not break if IterativeAlgorithm is changed. | ||

| self._pipelines_per_batch = 5 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we add _pipelines_per_batch as an automl search parameter as well? I do think we should keep that one private while we're getting parallel support in place, and then once we're confident we wanna keep it we can consider making it public. But it'll be nice to have external control over this for perf testing purposes. Ok to do this separately, or file a separate issue.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure. I'll file this as a separate issue.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks!

| @@ -435,7 +443,9 @@ def search(self, X, y, data_checks="auto", feature_types=None, show_iteration_pl | |||

| logger.info("Optimizing for %s. " % self.objective.name) | |||

| logger.info("{} score is better.\n".format('Greater' if self.objective.greater_is_better else 'Lower')) | |||

|

|

|||

| if self.max_iterations is not None: | |||

| if self.max_batches is not None: | |||

| logger.info(f"Searching up to {self.max_batches} batches for a total of {self.max_iterations} pipelines. ") | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍

| if self.max_batches: | ||

| update_pipeline(logger, desc, len(self._results['pipeline_results']) + 1, self.max_iterations, self._start, self.current_batch) | ||

| else: | ||

| update_pipeline(logger, desc, len(self._results['pipeline_results']) + 1, self.max_iterations, self._start) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please get the current batch number from AutoMLAlgorithm instead:

self._automl_algorithm.batch_number

evalml/utils/logger.py

Outdated

| status_update_format = "({current_batch}: {current_iteration}/{max_iterations}) {pipeline_name} Elapsed:{time_elapsed}" | ||

| else: | ||

| status_update_format = "({current_iteration}/{max_iterations}) {pipeline_name} Elapsed:{time_elapsed}" | ||

| format_params = {'current_batch': current_batch, 'max_iterations': max_iterations, 'current_iteration': current_iteration} | ||

| else: | ||

| status_update_format = "{pipeline_name} Elapsed: {time_elapsed}" | ||

| format_params = {} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Let's clean this method up:

elapsed_time = time_elapsed(start_time)

if current_batch is not None:

logger.info(f"({current_batch}: {current_iteration}/{max_iterations}) {pipeline_name} Elapsed:{elapsed_time}")

elif max_iterations is not None:

logger.info(f"{current_iteration}/{max_iterations}) {pipeline_name} Elapsed:{elapsed_time}")

else:

logger.info(f"{pipeline_name} Elapsed: {elapsed_time}")fdcad09

to

6b85481

Compare

6b85481

to

af33f1a

Compare

Ah I see, that makes sense. In that case, I'll count the baseline pipeline as Batch 1. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM! Just left one question

evalml/automl/automl_search.py

Outdated

| @@ -591,7 +596,7 @@ def _add_baseline_pipelines(self, X, y): | |||

| desc = desc.ljust(self._MAX_NAME_LEN) | |||

|

|

|||

| update_pipeline(logger, desc, len(self._results['pipeline_results']) + 1, self.max_iterations, | |||

| self._start) | |||

| self._start, '1') | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why is '1' passed in as a string instead of an int?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hmm yeah I think its fine to pass as an int or a string, right? Its the current batch number, going into a format string in update_pipeline

Actually, @christopherbunn, can we please use self._automl_algorithm.batch_number here instead? The automl algorithm is responsible for keeping track of the batch number. In this case it'll start at 0

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@christopherbunn great!

I left a couple comments about updating the batch number so it starts at 1 instead of 0 in the display output, and always using self._automl_algorithm.batch_number to get the batch number. I also left a suggestion for a test to check the log output is correct.

Otherwise, looks good!

| @@ -489,7 +491,10 @@ def search(self, X, y, data_checks="auto", feature_types=None, show_iteration_pl | |||

| desc = desc[:self._MAX_NAME_LEN - 3] + "..." | |||

| desc = desc.ljust(self._MAX_NAME_LEN) | |||

|

|

|||

| update_pipeline(logger, desc, len(self._results['pipeline_results']) + 1, self.max_iterations, self._start) | |||

| if self.max_batches: | |||

| update_pipeline(logger, desc, len(self._results['pipeline_results']) + 1, self.max_iterations, self._start, self._automl_algorithm.batch_number) | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@christopherbunn I see we're passing this batch number directly to the output. Does it start at 0, or at 1? RE someone else's comment, I think the batch should start at 1 in the log output.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Aha, I read further. Here's what I suggest:

- Everywhere we call

update_pipeline, pass the batch number in usingself._automl_algorithm.batch_number. This will be 0 for the first batch - In

update_pipeline, update the format string to print{current_batch+1}instead of{current_batch}

Sound good?

We should also add unit test coverage which checks that the right batch number prints out. My suggestion:

- Set

max_iterations = len(get_estimators(self.problem_type))+1 + _pipelines_per_batch*2to run 3 batches - Mock pipeline fit/score to avoid long runtime

- Run search, and using the

caplogfixture, assert thatBatch 1appearslen(get_estimators(self.problem_type)) + 1times, andBatch 2andBatch 3each appear_pipelines_per_batchtimes.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The test case sounds good, I'll add that in.

In update_pipeline, update the format string to print {current_batch+1} instead of {current_batch}

@dsherry because the pipeline is counted as its own separate batch, the number of batches would actually be off by one since batch_number is incremented by 1 when next_batch() is called. Since next_batch() is called for the first batch before the output is seen, Batch 1 actually shows up as Batch 2 with {current_batch+1}.

I could:

- Keep

{current_batch+1}and move where we increment batch number to when the results are reported back - Have the baseline pipeline show as batch 0 and use

self._automl_algorithm.batch_number - Override the output for the baseline (and not use

self._automl_algorithm.batch_number) and show it as either batch 1, "baseline", or "base"

Imo 1 has the potential to get messy since we're modifying IterativeAlgorithm so I want to avoid that. I'm leaning towards 2 although 3 seems fine with me as well. If we wanted "base" or "baseline" for 3, then I'll add another case for update_pipeline()

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah got it. Thanks.

I'll throw one more option on the pile:

2b. Label the baseline pipeline as batch 1 instead of batch 0. Use self._automl_algorithm.batch_number everywhere else, meaning the 1st batch shows up as batch 1, etc.

I think I agree with what you had before for the baseline in _add_baseline_pipelines, and we should keep the baseline pipeline displayed as "batch 1" along with the others.

But really, either 2 or 2b seem fine to me.

Sound good?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

2b works for me, I'll manually set the baseline as batch 1 then. Thanks!

evalml/automl/automl_search.py

Outdated

| @@ -591,7 +596,7 @@ def _add_baseline_pipelines(self, X, y): | |||

| desc = desc.ljust(self._MAX_NAME_LEN) | |||

|

|

|||

| update_pipeline(logger, desc, len(self._results['pipeline_results']) + 1, self.max_iterations, | |||

| self._start) | |||

| self._start, '1') | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hmm yeah I think its fine to pass as an int or a string, right? Its the current batch number, going into a format string in update_pipeline

Actually, @christopherbunn, can we please use self._automl_algorithm.batch_number here instead? The automl algorithm is responsible for keeping track of the batch number. In this case it'll start at 0

422c794

to

ac28961

Compare

ac28961

to

62a05aa

Compare

Updated search progress look (for

max_batches=2)Resolves #1294