diff --git a/.github/workflows/github-actions.yml b/.github/workflows/github-actions.yml

index 506fe2484..3062edddb 100644

--- a/.github/workflows/github-actions.yml

+++ b/.github/workflows/github-actions.yml

@@ -38,7 +38,7 @@ jobs:

# If you want to matrix build , you can append the following list.

matrix:

go_version:

- - 1.16

+ - 1.18

os:

- ubuntu-latest

diff --git a/.github/workflows/release.yml b/.github/workflows/release.yml

index b71067d33..091ac3268 100644

--- a/.github/workflows/release.yml

+++ b/.github/workflows/release.yml

@@ -20,7 +20,7 @@

name: Release

on:

release:

- types: [created]

+ types: [ created ]

jobs:

releases-matrix:

@@ -29,9 +29,9 @@ jobs:

strategy:

matrix:

# build and publish in parallel: linux/386, linux/amd64, windows/386, windows/amd64, darwin/amd64

- goos: [linux, windows]

- goarch: ["386", amd64, arm]

- exclude:

+ goos: [ linux, windows ]

+ goarch: [ "386", amd64, arm ]

+ exclude:

- goarch: "arm"

goos: windows

@@ -42,6 +42,6 @@ jobs:

github_token: ${{ secrets.GITHUB_TOKEN }}

goos: ${{ matrix.goos }}

goarch: ${{ matrix.goarch }}

- goversion: "https://golang.org/dl/go1.16.15.linux-amd64.tar.gz"

+ goversion: "https://go.dev/dl/go1.18.3.linux-amd64.tar.gz"

project_path: "./cmd/arana"

binary_name: "arana"

diff --git a/.github/workflows/reviewdog.yml b/.github/workflows/reviewdog.yml

index 39ac5fbff..6a7425b32 100644

--- a/.github/workflows/reviewdog.yml

+++ b/.github/workflows/reviewdog.yml

@@ -30,4 +30,5 @@ jobs:

- name: golangci-lint

uses: reviewdog/action-golangci-lint@v2

with:

- go_version: "1.16"

+ go_version: "1.18"

+ golangci_lint_version: "v1.46.2" # pin the version; the action uses the latest by default

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 2845a2862..503044c5a 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -19,6 +19,6 @@

# See https://pre-commit.com/hooks.html for more hooks

repos:

- repo: http://github.com/golangci/golangci-lint

- rev: v1.42.1

+ rev: v1.46.2

hooks:

- id: golangci-lint

diff --git a/Dockerfile b/Dockerfile

index 2b20788aa..f4dc11141 100644

--- a/Dockerfile

+++ b/Dockerfile

@@ -1,5 +1,22 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

# builder layer

-FROM golang:1.16-alpine AS builder

+FROM golang:1.18-alpine AS builder

RUN apk add --no-cache upx

diff --git a/Makefile b/Makefile

index 2cd0ab391..c67702973 100644

--- a/Makefile

+++ b/Makefile

@@ -38,6 +38,9 @@ docker-build:

integration-test:

@go clean -testcache

go test -tags integration -v ./test/...

+ go test -tags integration-db_tbl -v ./integration_test/scene/db_tbl/...

+ go test -tags integration-db -v ./integration_test/scene/db/...

+ go test -tags integration-tbl -v ./integration_test/scene/tbl/...

clean:

@rm -rf coverage.txt

@@ -50,4 +53,4 @@ prepareLic:

.PHONY: license

license: prepareLic

- $(GO_LICENSE_CHECKER) -v -a -r -i vendor $(LICENSE_DIR)/license.txt . go && [[ -z `git status -s` ]]

\ No newline at end of file

+ $(GO_LICENSE_CHECKER) -v -a -r -i vendor $(LICENSE_DIR)/license.txt . go && [[ -z `git status -s` ]]

diff --git a/README.md b/README.md

index a91e6d5cd..e8898d9ca 100644

--- a/README.md

+++ b/README.md

@@ -1,48 +1,87 @@

-# arana

-[](https://github.com/arana-db/arana/blob/master/LICENSE)

+# Arana

+

+

+

+

+

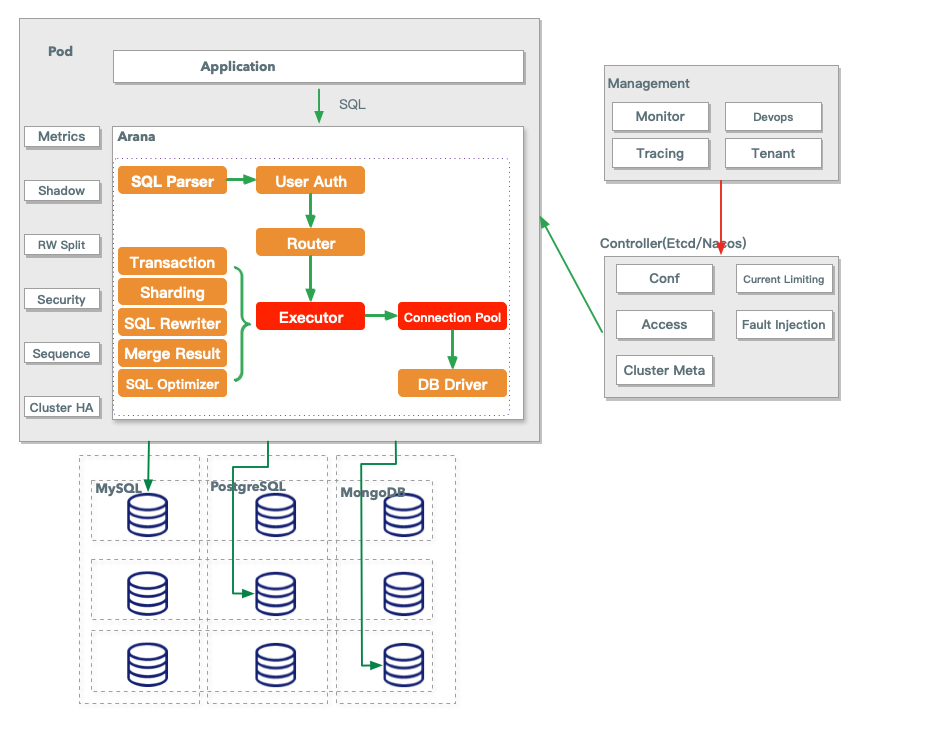

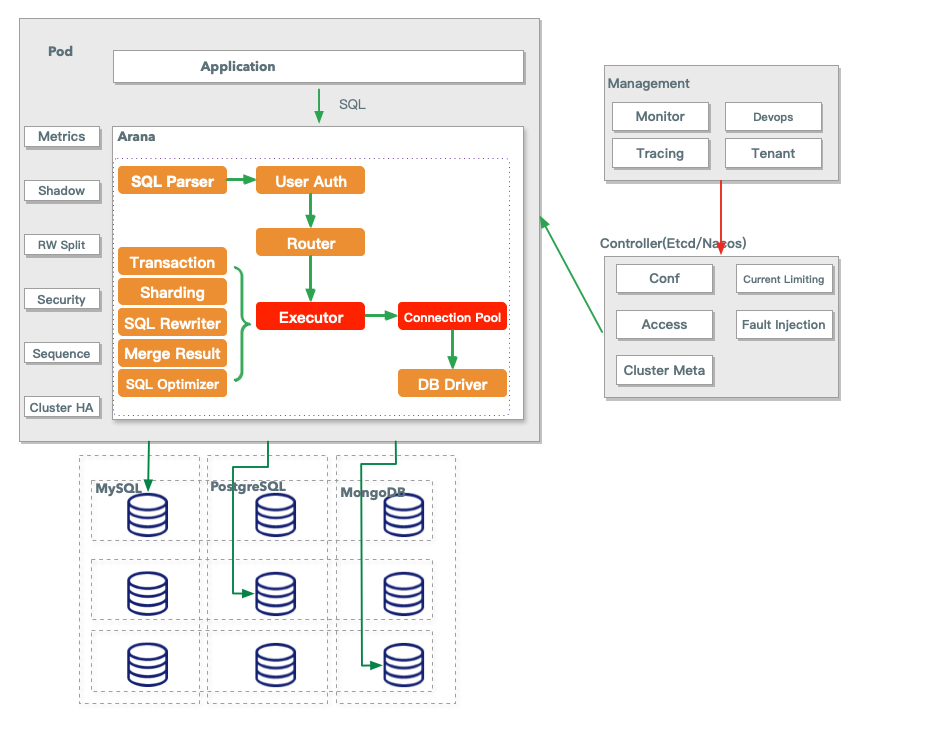

## Features

-| feature | complete |

-| -- | -- |

-| single db proxy | √ |

-| read write splitting | × |

-| tracing | × |

-| metrics | × |

-| sql audit | × |

-| sharding | × |

-| multi tenant | × |

+| **Feature** | **Complete** |

+|:-----------------------:|:------------:|

+| Single DB Proxy | √ |

+| Read Write Splitting | √ |

+| Sharding | √ |

+| Multi Tenant | √ |

+| Distributed Primary Key | WIP |

+| Distributed Transaction | WIP |

+| Shadow Table | WIP |

+| Database Mesh | WIP |

+| Tracing / Metrics | WIP |

+| SQL Audit | Roadmap |

+| Data Encryption / Decryption | Roadmap |

## Getting started

+Please refer to the [Getting Started](https://github.com/arana-db/arana/discussions/172) guide.

+

```

arana start -c ${configFilePath}

```

### Prerequisites

-+ MySQL server 5.7+

++ Go 1.18+

++ MySQL Server 5.7+

## Design and implementation

## Roadmap

## Built With

-- [tidb](https://github.com/pingcap/tidb) - The sql parser used

+

+- [TiDB](https://github.com/pingcap/tidb) - The SQL parser used

## Contact

+Arana Chinese community meeting: **every Saturday at 9:00 PM (GMT+8)**

+

+

+

## Features

-| feature | complete |

-| -- | -- |

-| single db proxy | √ |

-| read write splitting | × |

-| tracing | × |

-| metrics | × |

-| sql audit | × |

-| sharding | × |

-| multi tenant | × |

+| **Feature** | **Complete** |

+|:-----------------------:|:------------:|

+| Single DB Proxy | √ |

+| Read Write Splitting | √ |

+| Sharding | √ |

+| Multi Tenant | √ |

+| Distributed Primary Key | WIP |

+| Distributed Transaction | WIP |

+| Shadow Table | WIP |

+| Database Mesh | WIP |

+| Tracing / Metrics | WIP |

+| SQL Audit | Roadmap |

+| Data Encryption / Decryption | Roadmap |

## Getting started

+Please refer to the [Getting Started](https://github.com/arana-db/arana/discussions/172) guide.

+

```

arana start -c ${configFilePath}

```

### Prerequisites

-+ MySQL server 5.7+

++ Go 1.18+

++ MySQL Server 5.7+

## Design and implementation

## Roadmap

## Built With

-- [tidb](https://github.com/pingcap/tidb) - The sql parser used

+

+- [TiDB](https://github.com/pingcap/tidb) - The SQL parser used

## Contact

+Arana Chinese community meeting: **every Saturday at 9:00 PM (GMT+8)**

+

+ +

## Contributing

+Thanks for helping improve the project, we are happy to have you! A contributing guide is available to help you get involved in the Arana project.

+

## License

Arana software is licensed under the Apache License Version 2.0. See the [LICENSE](https://github.com/arana-db/arana/blob/master/LICENSE) file for details.

diff --git a/README_CN.md b/README_CN.md

index ca05c93ba..a957e4604 100644

--- a/README_CN.md

+++ b/README_CN.md

@@ -2,7 +2,7 @@

[](https://github.com/arana-db/arana/blob/master/LICENSE)

[](https://codecov.io/gh/arana-db/arana)

-

+

## 简介 | [English](https://github.com/arana-db/arana/blob/master/README.md)

diff --git a/README_ZH.md b/README_ZH.md

index 96d9c4684..ca91e1874 100644

--- a/README_ZH.md

+++ b/README_ZH.md

@@ -2,7 +2,7 @@

[](https://github.com/arana-db/arana/blob/master/LICENSE)

[](https://codecov.io/gh/arana-db/arana)

-

+

## 简介

diff --git a/cmd/main.go b/cmd/main.go

index e0da5f65c..ad35125d5 100644

--- a/cmd/main.go

+++ b/cmd/main.go

@@ -27,9 +27,7 @@ import (

_ "github.com/arana-db/arana/cmd/tools"

)

-var (

- Version = "0.1.0"

-)

+var Version = "0.1.0"

func main() {

rootCommand := &cobra.Command{

diff --git a/cmd/start/start.go b/cmd/start/start.go

index f6ee9de5f..28d232147 100644

--- a/cmd/start/start.go

+++ b/cmd/start/start.go

@@ -49,7 +49,7 @@ const slogan = `

/ _ | / _ \/ _ | / |/ / _ |

/ __ |/ , _/ __ |/ / __ |

/_/ |_/_/|_/_/ |_/_/|_/_/ |_|

-High performance, powerful DB Mesh.

+Arana, a high-performance and powerful DB Mesh sidecar.

_____________________________________________

`

diff --git a/conf/config.yaml b/conf/config.yaml

index 21721426d..5caeab8b1 100644

--- a/conf/config.yaml

+++ b/conf/config.yaml

@@ -40,40 +40,76 @@ data:

type: mysql

sql_max_limit: -1

tenant: arana

- conn_props:

- capacity: 10

- max_capacity: 20

- idle_timeout: 60

groups:

- name: employees_0000

nodes:

- - name: arana-node-1

+ - name: node0

host: arana-mysql

port: 3306

username: root

password: "123456"

- database: employees

+ database: employees_0000

weight: r10w10

- labels:

- zone: shanghai

- conn_props:

- readTimeout: "1s"

- writeTimeout: "1s"

- parseTime: true

- loc: Local

- charset: utf8mb4,utf8

-

+ parameters:

+ maxAllowedPacket: 256M

+ - name: node0_r_0

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0000_r

+ weight: r0w0

+ parameters:

+ maxAllowedPacket: 256M

+ - name: employees_0001

+ nodes:

+ - name: node1

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0001

+ weight: r10w10

+ parameters:

+ maxAllowedPacket: 256M

+ - name: employees_0002

+ nodes:

+ - name: node2

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0002

+ weight: r10w10

+ parameters:

+ maxAllowedPacket: 256M

+ - name: employees_0003

+ nodes:

+ - name: node3

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0003

+ weight: r10w10

+ parameters:

+ maxAllowedPacket: 256M

sharding_rule:

tables:

- name: employees.student

allow_full_scan: true

db_rules:

+ - column: uid

+ type: scriptExpr

+ expr: parseInt($value % 32 / 8)

tbl_rules:

- column: uid

+ type: scriptExpr

expr: $value % 32

+ step: 32

topology:

- db_pattern: employees_0000

- tbl_pattern: student_${0000...0031}

+ db_pattern: employees_${0000..0003}

+ tbl_pattern: student_${0000..0031}

attributes:

sqlMaxLimit: -1

diff --git a/docker-compose.yaml b/docker-compose.yaml

index c66cf692d..7a1548fc1 100644

--- a/docker-compose.yaml

+++ b/docker-compose.yaml

@@ -18,7 +18,7 @@

version: "3"

services:

mysql:

- image: mysql:8.0

+ image: mysql:5.7

container_name: arana-mysql

networks:

- local

@@ -41,7 +41,7 @@ services:

arana:

build: .

container_name: arana

- image: aranadb/arana:latest

+ image: aranadb/arana:master

networks:

- local

ports:

diff --git a/docs/pics/arana-architecture.png b/docs/pics/arana-architecture.png

new file mode 100644

index 000000000..775fa8dcb

Binary files /dev/null and b/docs/pics/arana-architecture.png differ

diff --git a/docs/pics/arana-blue.png b/docs/pics/arana-blue.png

new file mode 100644

index 000000000..90d31b8c4

Binary files /dev/null and b/docs/pics/arana-blue.png differ

diff --git a/docs/pics/arana-db-blue.png b/docs/pics/arana-db-blue.png

new file mode 100644

index 000000000..93f3d4014

Binary files /dev/null and b/docs/pics/arana-db-blue.png differ

diff --git a/docs/pics/arana-db-v0.2.sketch b/docs/pics/arana-db-v0.2.sketch

new file mode 100644

index 000000000..a97994827

Binary files /dev/null and b/docs/pics/arana-db-v0.2.sketch differ

diff --git a/docs/pics/arana-main.png b/docs/pics/arana-main.png

new file mode 100644

index 000000000..559c90dd4

Binary files /dev/null and b/docs/pics/arana-main.png differ

diff --git a/docs/pics/dingtalk-group.jpeg b/docs/pics/dingtalk-group.jpeg

new file mode 100644

index 000000000..638128143

Binary files /dev/null and b/docs/pics/dingtalk-group.jpeg differ

diff --git a/go.mod b/go.mod

index 7d19bd53a..23d592959 100644

--- a/go.mod

+++ b/go.mod

@@ -1,16 +1,15 @@

module github.com/arana-db/arana

-go 1.16

+go 1.18

require (

- github.com/arana-db/parser v0.2.1

+ github.com/arana-db/parser v0.2.3

github.com/bwmarrin/snowflake v0.3.0

github.com/cespare/xxhash/v2 v2.1.2

github.com/dop251/goja v0.0.0-20220422102209-3faab1d8f20e

github.com/dubbogo/gost v1.12.3

github.com/go-playground/validator/v10 v10.10.1

github.com/go-sql-driver/mysql v1.6.0

- github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect

github.com/golang/mock v1.5.0

github.com/hashicorp/golang-lru v0.5.4

github.com/lestrrat-go/strftime v1.0.5

@@ -19,21 +18,105 @@ require (

github.com/olekukonko/tablewriter v0.0.5

github.com/pkg/errors v0.9.1

github.com/prometheus/client_golang v1.11.0

- github.com/prometheus/common v0.28.0 // indirect

github.com/spf13/cobra v1.2.1

- github.com/stretchr/testify v1.7.0

+ github.com/stretchr/testify v1.7.1

github.com/testcontainers/testcontainers-go v0.12.0

github.com/tidwall/gjson v1.14.0

go.etcd.io/etcd/api/v3 v3.5.1

go.etcd.io/etcd/client/v3 v3.5.0

go.etcd.io/etcd/server/v3 v3.5.0-alpha.0

+ go.opentelemetry.io/otel v1.7.0

+ go.opentelemetry.io/otel/trace v1.7.0

go.uber.org/atomic v1.9.0

- go.uber.org/multierr v1.7.0

go.uber.org/zap v1.19.1

- golang.org/x/crypto v0.0.0-20220427172511-eb4f295cb31f // indirect

+ golang.org/x/exp v0.0.0-20220613132600-b0d781184e0d

golang.org/x/sync v0.0.0-20210220032951-036812b2e83c

- golang.org/x/sys v0.0.0-20220429233432-b5fbb4746d32 // indirect

+ gopkg.in/yaml.v3 v3.0.0-20210107192922-496545a6307b

+)

+

+require (

+ github.com/Azure/go-ansiterm v0.0.0-20170929234023-d6e3b3328b78 // indirect

+ github.com/Microsoft/go-winio v0.4.17-0.20210211115548-6eac466e5fa3 // indirect

+ github.com/Microsoft/hcsshim v0.8.16 // indirect

+ github.com/aliyun/alibaba-cloud-sdk-go v1.61.18 // indirect

+ github.com/beorn7/perks v1.0.1 // indirect

+ github.com/buger/jsonparser v1.1.1 // indirect

+ github.com/cenkalti/backoff v2.2.1+incompatible // indirect

+ github.com/containerd/cgroups v0.0.0-20210114181951-8a68de567b68 // indirect

+ github.com/containerd/containerd v1.5.0-beta.4 // indirect

+ github.com/coreos/go-semver v0.3.0 // indirect

+ github.com/coreos/go-systemd/v22 v22.3.2 // indirect

+ github.com/davecgh/go-spew v1.1.1 // indirect

+ github.com/dlclark/regexp2 v1.4.1-0.20201116162257-a2a8dda75c91 // indirect

+ github.com/docker/distribution v2.7.1+incompatible // indirect

+ github.com/docker/docker v20.10.11+incompatible // indirect

+ github.com/docker/go-connections v0.4.0 // indirect

+ github.com/docker/go-units v0.4.0 // indirect

+ github.com/dustin/go-humanize v1.0.0 // indirect

+ github.com/form3tech-oss/jwt-go v3.2.2+incompatible // indirect

+ github.com/go-errors/errors v1.0.1 // indirect

+ github.com/go-logr/logr v1.2.3 // indirect

+ github.com/go-logr/stdr v1.2.2 // indirect

+ github.com/go-playground/locales v0.14.0 // indirect

+ github.com/go-playground/universal-translator v0.18.0 // indirect

+ github.com/go-sourcemap/sourcemap v2.1.3+incompatible // indirect

+ github.com/gogo/protobuf v1.3.2 // indirect

+ github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect

+ github.com/golang/protobuf v1.5.2 // indirect

+ github.com/google/btree v1.0.0 // indirect

+ github.com/google/uuid v1.3.0 // indirect

+ github.com/gopherjs/gopherjs v0.0.0-20190910122728-9d188e94fb99 // indirect

+ github.com/gorilla/websocket v1.4.2 // indirect

+ github.com/grpc-ecosystem/go-grpc-middleware v1.2.2 // indirect

+ github.com/grpc-ecosystem/go-grpc-prometheus v1.2.0 // indirect

+ github.com/grpc-ecosystem/grpc-gateway v1.16.0 // indirect

+ github.com/inconshreveable/mousetrap v1.0.0 // indirect

+ github.com/jmespath/go-jmespath v0.4.0 // indirect

+ github.com/jonboulle/clockwork v0.2.2 // indirect

+ github.com/json-iterator/go v1.1.11 // indirect

+ github.com/leodido/go-urn v1.2.1 // indirect

+ github.com/magiconair/properties v1.8.5 // indirect

+ github.com/mattn/go-runewidth v0.0.9 // indirect

+ github.com/matttproud/golang_protobuf_extensions v1.0.2-0.20181231171920-c182affec369 // indirect

+ github.com/moby/sys/mount v0.2.0 // indirect

+ github.com/moby/sys/mountinfo v0.5.0 // indirect

+ github.com/moby/term v0.0.0-20201216013528-df9cb8a40635 // indirect

+ github.com/modern-go/concurrent v0.0.0-20180306012644-bacd9c7ef1dd // indirect

+ github.com/modern-go/reflect2 v1.0.1 // indirect

+ github.com/morikuni/aec v0.0.0-20170113033406-39771216ff4c // indirect

+ github.com/opencontainers/go-digest v1.0.0 // indirect

+ github.com/opencontainers/image-spec v1.0.1 // indirect

+ github.com/opencontainers/runc v1.0.2 // indirect

+ github.com/pingcap/errors v0.11.5-0.20210425183316-da1aaba5fb63 // indirect

+ github.com/pingcap/log v0.0.0-20210625125904-98ed8e2eb1c7 // indirect

+ github.com/pmezard/go-difflib v1.0.0 // indirect

+ github.com/prometheus/client_model v0.2.0 // indirect

+ github.com/prometheus/common v0.28.0 // indirect

+ github.com/prometheus/procfs v0.6.0 // indirect

+ github.com/sirupsen/logrus v1.8.1 // indirect

+ github.com/soheilhy/cmux v0.1.5-0.20210205191134-5ec6847320e5 // indirect

+ github.com/spf13/pflag v1.0.5 // indirect

+ github.com/tidwall/match v1.1.1 // indirect

+ github.com/tidwall/pretty v1.2.0 // indirect

+ github.com/tmc/grpc-websocket-proxy v0.0.0-20201229170055-e5319fda7802 // indirect

+ github.com/xiang90/probing v0.0.0-20190116061207-43a291ad63a2 // indirect

+ go.etcd.io/bbolt v1.3.5 // indirect

+ go.etcd.io/etcd/client/pkg/v3 v3.5.0 // indirect

+ go.etcd.io/etcd/client/v2 v2.305.0 // indirect

+ go.etcd.io/etcd/pkg/v3 v3.5.0-alpha.0 // indirect

+ go.etcd.io/etcd/raft/v3 v3.5.0-alpha.0 // indirect

+ go.opencensus.io v0.23.0 // indirect

+ go.uber.org/multierr v1.6.0 // indirect

+ golang.org/x/crypto v0.0.0-20211215153901-e495a2d5b3d3 // indirect

+ golang.org/x/net v0.0.0-20211112202133-69e39bad7dc2 // indirect

+ golang.org/x/sys v0.0.0-20220128215802-99c3d69c2c27 // indirect

+ golang.org/x/text v0.3.7 // indirect

+ golang.org/x/time v0.0.0-20201208040808-7e3f01d25324 // indirect

google.golang.org/genproto v0.0.0-20211104193956-4c6863e31247 // indirect

google.golang.org/grpc v1.42.0 // indirect

- gopkg.in/yaml.v3 v3.0.0-20210107192922-496545a6307b

+ google.golang.org/protobuf v1.27.1 // indirect

+ gopkg.in/ini.v1 v1.62.0 // indirect

+ gopkg.in/natefinch/lumberjack.v2 v2.0.0 // indirect

+ gopkg.in/yaml.v2 v2.4.0 // indirect

+ sigs.k8s.io/yaml v1.2.0 // indirect

)

diff --git a/go.sum b/go.sum

index a2d502ace..c3cc775e1 100644

--- a/go.sum

+++ b/go.sum

@@ -94,8 +94,8 @@ github.com/aliyun/alibaba-cloud-sdk-go v1.61.18/go.mod h1:v8ESoHo4SyHmuB4b1tJqDH

github.com/antihax/optional v1.0.0/go.mod h1:uupD/76wgC+ih3iEmQUL+0Ugr19nfwCT1kdvxnR2qWY=

github.com/apache/thrift v0.12.0/go.mod h1:cp2SuWMxlEZw2r+iP2GNCdIi4C1qmUzdZFSVb+bacwQ=

github.com/apache/thrift v0.13.0/go.mod h1:cp2SuWMxlEZw2r+iP2GNCdIi4C1qmUzdZFSVb+bacwQ=

-github.com/arana-db/parser v0.2.1 h1:a885V+OIABmqYHYbLP2QWZbn+/TE0mZJd8dafWY7F6Y=

-github.com/arana-db/parser v0.2.1/go.mod h1:y4hxIPieC5T26aoNd44XiWXNunC03kUQW0CI3NKaYTk=

+github.com/arana-db/parser v0.2.3 h1:zLZcx0/oidlHnw/GZYE78NuvwQkHUv2Xtrm2IwyZasA=

+github.com/arana-db/parser v0.2.3/go.mod h1:/XA29bplweWSEAjgoM557ZCzhBilSawUlHcZFjOeDAc=

github.com/armon/circbuf v0.0.0-20150827004946-bbbad097214e/go.mod h1:3U/XgcO3hCbHZ8TKRvWD2dDTCfh9M9ya+I9JpbB7O8o=

github.com/armon/go-metrics v0.0.0-20180917152333-f0300d1749da/go.mod h1:Q73ZrmVTwzkszR9V5SSuryQ31EELlFMUz1kKyl939pY=

github.com/armon/go-radix v0.0.0-20180808171621-7fddfc383310/go.mod h1:ufUuZ+zHj4x4TnLV4JWEpy2hxWSpsRywHrMgIH9cCH8=

@@ -136,7 +136,6 @@ github.com/cenkalti/backoff v2.2.1+incompatible/go.mod h1:90ReRw6GdpyfrHakVjL/QH

github.com/census-instrumentation/opencensus-proto v0.2.1/go.mod h1:f6KPmirojxKA12rnyqOA5BBL4O983OfeGPqjHWSTneU=

github.com/certifi/gocertifi v0.0.0-20191021191039-0944d244cd40 h1:xvUo53O5MRZhVMJAxWCJcS5HHrqAiAG9SJ1LpMu6aAI=

github.com/certifi/gocertifi v0.0.0-20191021191039-0944d244cd40/go.mod h1:sGbDF6GwGcLpkNXPUTkMRoywsNa/ol15pxFe6ERfguA=

-github.com/cespare/xxhash v1.1.0 h1:a6HrQnmkObjyL+Gs60czilIUGqrzKutQD6XZog3p+ko=

github.com/cespare/xxhash v1.1.0/go.mod h1:XrSqR1VqqWfGrhpAt58auRo0WTKS1nRRg3ghfAqPWnc=

github.com/cespare/xxhash/v2 v2.1.1/go.mod h1:VGX0DQ3Q6kWi7AoAeZDth3/j3BFtOZR5XLFGgcrjCOs=

github.com/cespare/xxhash/v2 v2.1.2 h1:YRXhKfTDauu4ajMg1TPgFO5jnlC2HCbmLXMcTG5cbYE=

@@ -244,7 +243,6 @@ github.com/coreos/go-semver v0.3.0 h1:wkHLiw0WNATZnSG7epLsujiMCgPAc9xhjJ4tgnAxmf

github.com/coreos/go-semver v0.3.0/go.mod h1:nnelYz7RCh+5ahJtPPxZlU+153eP4D4r3EedlOD2RNk=

github.com/coreos/go-systemd v0.0.0-20161114122254-48702e0da86b/go.mod h1:F5haX7vjVVG0kc13fIWeqUViNPyEJxv/OmvnBo0Yme4=

github.com/coreos/go-systemd v0.0.0-20180511133405-39ca1b05acc7/go.mod h1:F5haX7vjVVG0kc13fIWeqUViNPyEJxv/OmvnBo0Yme4=

-github.com/coreos/go-systemd v0.0.0-20190321100706-95778dfbb74e h1:Wf6HqHfScWJN9/ZjdUKyjop4mf3Qdd+1TvvltAvM3m8=

github.com/coreos/go-systemd v0.0.0-20190321100706-95778dfbb74e/go.mod h1:F5haX7vjVVG0kc13fIWeqUViNPyEJxv/OmvnBo0Yme4=

github.com/coreos/go-systemd/v22 v22.0.0/go.mod h1:xO0FLkIi5MaZafQlIrOotqXZ90ih+1atmu1JpKERPPk=

github.com/coreos/go-systemd/v22 v22.1.0/go.mod h1:xO0FLkIi5MaZafQlIrOotqXZ90ih+1atmu1JpKERPPk=

@@ -352,6 +350,11 @@ github.com/go-logfmt/logfmt v0.4.0/go.mod h1:3RMwSq7FuexP4Kalkev3ejPJsZTpXXBr9+V

github.com/go-logfmt/logfmt v0.5.0/go.mod h1:wCYkCAKZfumFQihp8CzCvQ3paCTfi41vtzG1KdI/P7A=

github.com/go-logr/logr v0.1.0/go.mod h1:ixOQHD9gLJUVQQ2ZOR7zLEifBX6tGkNJF4QyIY7sIas=

github.com/go-logr/logr v0.2.0/go.mod h1:z6/tIYblkpsD+a4lm/fGIIU9mZ+XfAiaFtq7xTgseGU=

+github.com/go-logr/logr v1.2.2/go.mod h1:jdQByPbusPIv2/zmleS9BjJVeZ6kBagPoEUsqbVz/1A=

+github.com/go-logr/logr v1.2.3 h1:2DntVwHkVopvECVRSlL5PSo9eG+cAkDCuckLubN+rq0=

+github.com/go-logr/logr v1.2.3/go.mod h1:jdQByPbusPIv2/zmleS9BjJVeZ6kBagPoEUsqbVz/1A=

+github.com/go-logr/stdr v1.2.2 h1:hSWxHoqTgW2S2qGc0LTAI563KZ5YKYRhT3MFKZMbjag=

+github.com/go-logr/stdr v1.2.2/go.mod h1:mMo/vtBO5dYbehREoey6XUKy/eSumjCCveDpRre4VKE=

github.com/go-ole/go-ole v1.2.6/go.mod h1:pprOEPIfldk/42T2oK7lQ4v4JSDwmV0As9GaiUsvbm0=

github.com/go-openapi/jsonpointer v0.19.2/go.mod h1:3akKfEdA7DF1sugOqz1dVQHBcuDBPKZGEoHC/NkiQRg=

github.com/go-openapi/jsonpointer v0.19.3/go.mod h1:Pl9vOtqEWErmShwVjC8pYs9cog34VGT37dQOVbmoatg=

@@ -444,8 +447,8 @@ github.com/google/go-cmp v0.5.3/go.mod h1:v8dTdLbMG2kIc/vJvl+f65V22dbkXbowE6jgT/

github.com/google/go-cmp v0.5.4/go.mod h1:v8dTdLbMG2kIc/vJvl+f65V22dbkXbowE6jgT/gNBxE=

github.com/google/go-cmp v0.5.5/go.mod h1:v8dTdLbMG2kIc/vJvl+f65V22dbkXbowE6jgT/gNBxE=

github.com/google/go-cmp v0.5.6/go.mod h1:v8dTdLbMG2kIc/vJvl+f65V22dbkXbowE6jgT/gNBxE=

-github.com/google/go-cmp v0.5.7 h1:81/ik6ipDQS2aGcBfIN5dHDB36BwrStyeAQquSYCV4o=

github.com/google/go-cmp v0.5.7/go.mod h1:n+brtR0CgQNWTVd5ZUFpTBC8YFBDLK/h/bpaJ8/DtOE=

+github.com/google/go-cmp v0.5.8 h1:e6P7q2lk1O+qJJb4BtCQXlK8vWEO8V1ZeuEdJNOqZyg=

github.com/google/gofuzz v1.0.0/go.mod h1:dBl0BpW6vV/+mYPU4Po3pmUjxk6FQPldtuIdl/M65Eg=

github.com/google/gofuzz v1.1.0/go.mod h1:dBl0BpW6vV/+mYPU4Po3pmUjxk6FQPldtuIdl/M65Eg=

github.com/google/martian v2.1.0+incompatible/go.mod h1:9I4somxYTbIHy5NJKHRl3wXiIaQGbYVAs8BPL6v8lEs=

@@ -471,8 +474,9 @@ github.com/google/uuid v1.3.0/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+

github.com/googleapis/gax-go/v2 v2.0.4/go.mod h1:0Wqv26UfaUD9n4G6kQubkQ+KchISgw+vpHVxEJEs9eg=

github.com/googleapis/gax-go/v2 v2.0.5/go.mod h1:DWXyrwAJ9X0FpwwEdw+IPEYBICEFu5mhpdKc/us6bOk=

github.com/googleapis/gnostic v0.4.1/go.mod h1:LRhVm6pbyptWbWbuZ38d1eyptfvIytN3ir6b65WBswg=

-github.com/gopherjs/gopherjs v0.0.0-20181017120253-0766667cb4d1 h1:EGx4pi6eqNxGaHF6qqu48+N2wcFQ5qg5FXgOdqsJ5d8=

github.com/gopherjs/gopherjs v0.0.0-20181017120253-0766667cb4d1/go.mod h1:wJfORRmW1u3UXTncJ5qlYoELFm8eSnnEO6hX4iZ3EWY=

+github.com/gopherjs/gopherjs v0.0.0-20190910122728-9d188e94fb99 h1:twflg0XRTjwKpxb/jFExr4HGq6on2dEOmnL6FV+fgPw=

+github.com/gopherjs/gopherjs v0.0.0-20190910122728-9d188e94fb99/go.mod h1:wJfORRmW1u3UXTncJ5qlYoELFm8eSnnEO6hX4iZ3EWY=

github.com/gorilla/context v1.1.1/go.mod h1:kBGZzfjB9CEq2AlWe17Uuf7NDRt0dE0s8S51q0aT7Yg=

github.com/gorilla/handlers v0.0.0-20150720190736-60c7bfde3e33/go.mod h1:Qkdc/uu4tH4g6mTK6auzZ766c4CA0Ng8+o/OAirnOIQ=

github.com/gorilla/mux v1.6.2/go.mod h1:1lud6UwP+6orDFRuTfBEV8e9/aOM/c4fVVCaMa2zaAs=

@@ -536,8 +540,11 @@ github.com/j-keck/arping v0.0.0-20160618110441-2cf9dc699c56/go.mod h1:ymszkNOg6t

github.com/jehiah/go-strftime v0.0.0-20171201141054-1d33003b3869/go.mod h1:cJ6Cj7dQo+O6GJNiMx+Pa94qKj+TG8ONdKHgMNIyyag=

github.com/jmespath/go-jmespath v0.0.0-20160202185014-0b12d6b521d8/go.mod h1:Nht3zPeWKUH0NzdCt2Blrr5ys8VGpn0CEB0cQHVjt7k=

github.com/jmespath/go-jmespath v0.0.0-20160803190731-bd40a432e4c7/go.mod h1:Nht3zPeWKUH0NzdCt2Blrr5ys8VGpn0CEB0cQHVjt7k=

-github.com/jmespath/go-jmespath v0.0.0-20180206201540-c2b33e8439af h1:pmfjZENx5imkbgOkpRUYLnmbU7UEFbjtDA2hxJ1ichM=

github.com/jmespath/go-jmespath v0.0.0-20180206201540-c2b33e8439af/go.mod h1:Nht3zPeWKUH0NzdCt2Blrr5ys8VGpn0CEB0cQHVjt7k=

+github.com/jmespath/go-jmespath v0.4.0 h1:BEgLn5cpjn8UN1mAw4NjwDrS35OdebyEtFe+9YPoQUg=

+github.com/jmespath/go-jmespath v0.4.0/go.mod h1:T8mJZnbsbmF+m6zOOFylbeCJqk5+pHWvzYPziyZiYoo=

+github.com/jmespath/go-jmespath/internal/testify v1.5.1 h1:shLQSRRSCCPj3f2gpwzGwWFoC7ycTf1rcQZHOlsJ6N8=

+github.com/jmespath/go-jmespath/internal/testify v1.5.1/go.mod h1:L3OGu8Wl2/fWfCI6z80xFu9LTZmf1ZRjMHUOPmWr69U=

github.com/jonboulle/clockwork v0.1.0/go.mod h1:Ii8DK3G1RaLaWxj9trq07+26W01tbo22gdxWY5EU2bo=

github.com/jonboulle/clockwork v0.2.2 h1:UOGuzwb1PwsrDAObMuhUnj0p5ULPj8V/xJ7Kx9qUBdQ=

github.com/jonboulle/clockwork v0.2.2/go.mod h1:Pkfl5aHPm1nk2H9h0bjmnJD/BcgbGXUBGnn1kMkgxc8=

@@ -647,7 +654,6 @@ github.com/munnerz/goautoneg v0.0.0-20191010083416-a7dc8b61c822/go.mod h1:+n7T8m

github.com/mwitkow/go-conntrack v0.0.0-20161129095857-cc309e4a2223/go.mod h1:qRWi+5nqEBWmkhHvq77mSJWrCKwh8bxhgT7d/eI7P4U=

github.com/mwitkow/go-conntrack v0.0.0-20190716064945-2f068394615f/go.mod h1:qRWi+5nqEBWmkhHvq77mSJWrCKwh8bxhgT7d/eI7P4U=

github.com/mxk/go-flowrate v0.0.0-20140419014527-cca7078d478f/go.mod h1:ZdcZmHo+o7JKHSa8/e818NopupXU1YMK5fe1lsApnBw=

-github.com/nacos-group/nacos-sdk-go v1.0.8 h1:8pEm05Cdav9sQgJSv5kyvlgfz0SzFUUGI3pWX6SiSnM=

github.com/nacos-group/nacos-sdk-go v1.0.8/go.mod h1:hlAPn3UdzlxIlSILAyOXKxjFSvDJ9oLzTJ9hLAK1KzA=

github.com/nacos-group/nacos-sdk-go/v2 v2.0.1 h1:jEZjqdCDSt6ZFtl628UUwON21GxwJ+lEN/PDamQOzgU=

github.com/nacos-group/nacos-sdk-go/v2 v2.0.1/go.mod h1:SlhyCAv961LcZ198XpKfPEQqlJWt2HkL1fDLas0uy/w=

@@ -860,8 +866,9 @@ github.com/stretchr/testify v1.3.0/go.mod h1:M5WIy9Dh21IEIfnGCwXGc5bZfKNJtfHm1UV

github.com/stretchr/testify v1.4.0/go.mod h1:j7eGeouHqKxXV5pUuKE4zz7dFj8WfuZ+81PSLYec5m4=

github.com/stretchr/testify v1.5.1/go.mod h1:5W2xD1RspED5o8YsWQXVCued0rvSQ+mT+I5cxcmMvtA=

github.com/stretchr/testify v1.6.1/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

-github.com/stretchr/testify v1.7.0 h1:nwc3DEeHmmLAfoZucVR881uASk0Mfjw8xYJ99tb5CcY=

github.com/stretchr/testify v1.7.0/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

+github.com/stretchr/testify v1.7.1 h1:5TQK59W5E3v0r2duFAb7P95B6hEeOyEnHRa8MjYSMTY=

+github.com/stretchr/testify v1.7.1/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

github.com/subosito/gotenv v1.2.0/go.mod h1:N0PQaV/YGNqwC0u51sEeR/aUtSLEXKX9iv69rRypqCw=

github.com/syndtr/gocapability v0.0.0-20170704070218-db04d3cc01c8/go.mod h1:hkRG7XYTFWNJGYcbNJQlaLq0fg1yr4J4t/NcTQtrfww=

github.com/syndtr/gocapability v0.0.0-20180916011248-d98352740cb2/go.mod h1:hkRG7XYTFWNJGYcbNJQlaLq0fg1yr4J4t/NcTQtrfww=

@@ -915,7 +922,6 @@ go.etcd.io/bbolt v1.3.3/go.mod h1:IbVyRI1SCnLcuJnV2u8VeU0CEYM7e686BmAb1XKL+uU=

go.etcd.io/bbolt v1.3.5 h1:XAzx9gjCb0Rxj7EoqcClPD1d5ZBxZJk0jbuoPHenBt0=

go.etcd.io/bbolt v1.3.5/go.mod h1:G5EMThwa9y8QZGBClrRx5EY+Yw9kAhnjy3bSjsnlVTQ=

go.etcd.io/etcd v0.0.0-20191023171146-3cf2f69b5738/go.mod h1:dnLIgRNXwCJa5e+c6mIZCrds/GIG4ncV9HhK5PX7jPg=

-go.etcd.io/etcd v0.5.0-alpha.5.0.20200910180754-dd1b699fc489 h1:1JFLBqwIgdyHN1ZtgjTBwO+blA6gVOmZurpiMEsETKo=

go.etcd.io/etcd v0.5.0-alpha.5.0.20200910180754-dd1b699fc489/go.mod h1:yVHk9ub3CSBatqGNg7GRmsnfLWtoW60w4eDYfh7vHDg=

go.etcd.io/etcd/api/v3 v3.5.0-alpha.0/go.mod h1:mPcW6aZJukV6Aa81LSKpBjQXTWlXB5r74ymPoSWa3Sw=

go.etcd.io/etcd/api/v3 v3.5.0/go.mod h1:cbVKeC6lCfl7j/8jBhAK6aIYO9XOjdptoxU/nLQcPvs=

@@ -946,6 +952,10 @@ go.opencensus.io v0.22.4/go.mod h1:yxeiOL68Rb0Xd1ddK5vPZ/oVn4vY4Ynel7k9FzqtOIw=

go.opencensus.io v0.22.5/go.mod h1:5pWMHQbX5EPX2/62yrJeAkowc+lfs/XD7Uxpq3pI6kk=

go.opencensus.io v0.23.0 h1:gqCw0LfLxScz8irSi8exQc7fyQ0fKQU/qnC/X8+V/1M=

go.opencensus.io v0.23.0/go.mod h1:XItmlyltB5F7CS4xOC1DcqMoFqwtC6OG2xF7mCv7P7E=

+go.opentelemetry.io/otel v1.7.0 h1:Z2lA3Tdch0iDcrhJXDIlC94XE+bxok1F9B+4Lz/lGsM=

+go.opentelemetry.io/otel v1.7.0/go.mod h1:5BdUoMIz5WEs0vt0CUEMtSSaTSHBBVwrhnz7+nrD5xk=

+go.opentelemetry.io/otel/trace v1.7.0 h1:O37Iogk1lEkMRXewVtZ1BBTVn5JEp8GrJvP92bJqC6o=

+go.opentelemetry.io/otel/trace v1.7.0/go.mod h1:fzLSB9nqR2eXzxPXb2JW9IKE+ScyXA48yyE4TNvoHqU=

go.opentelemetry.io/proto/otlp v0.7.0/go.mod h1:PqfVotwruBrMGOCsRd/89rSnXhoiJIqeYNgFYFoEGnI=

go.uber.org/atomic v1.3.2/go.mod h1:gD2HeocX3+yG+ygLZcrzQJaqmWj9AIm7n08wl/qW/PE=

go.uber.org/atomic v1.4.0/go.mod h1:gD2HeocX3+yG+ygLZcrzQJaqmWj9AIm7n08wl/qW/PE=

@@ -960,9 +970,8 @@ go.uber.org/goleak v1.1.11-0.20210813005559-691160354723/go.mod h1:cwTWslyiVhfpK

go.uber.org/multierr v1.1.0/go.mod h1:wR5kodmAFQ0UK8QlbwjlSNy0Z68gJhDJUG5sjR94q/0=

go.uber.org/multierr v1.3.0/go.mod h1:VgVr7evmIr6uPjLBxg28wmKNXyqE9akIJ5XnfpiKl+4=

go.uber.org/multierr v1.5.0/go.mod h1:FeouvMocqHpRaaGuG9EjoKcStLC43Zu/fmqdUMPcKYU=

+go.uber.org/multierr v1.6.0 h1:y6IPFStTAIT5Ytl7/XYmHvzXQ7S3g/IeZW9hyZ5thw4=

go.uber.org/multierr v1.6.0/go.mod h1:cdWPpRnG4AhwMwsgIHip0KRBQjJy5kYEpYjJxpXp9iU=

-go.uber.org/multierr v1.7.0 h1:zaiO/rmgFjbmCXdSYJWQcdvOCsthmdaHfr3Gm2Kx4Ec=

-go.uber.org/multierr v1.7.0/go.mod h1:7EAYxJLBy9rStEaz58O2t4Uvip6FSURkq8/ppBp95ak=

go.uber.org/tools v0.0.0-20190618225709-2cfd321de3ee/go.mod h1:vJERXedbb3MVM5f9Ejo0C68/HhF8uaILCdgjnY+goOA=

go.uber.org/zap v1.9.1/go.mod h1:vwi/ZaCAaUcBkycHslxD9B2zi4UTXhF60s6SWpuDF0Q=

go.uber.org/zap v1.10.0/go.mod h1:vwi/ZaCAaUcBkycHslxD9B2zi4UTXhF60s6SWpuDF0Q=

@@ -987,9 +996,8 @@ golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8U

golang.org/x/crypto v0.0.0-20200622213623-75b288015ac9/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=

golang.org/x/crypto v0.0.0-20200728195943-123391ffb6de/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=

golang.org/x/crypto v0.0.0-20201002170205-7f63de1d35b0/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=

+golang.org/x/crypto v0.0.0-20211215153901-e495a2d5b3d3 h1:0es+/5331RGQPcXlMfP+WrnIIS6dNnNRe0WB02W0F4M=

golang.org/x/crypto v0.0.0-20211215153901-e495a2d5b3d3/go.mod h1:IxCIyHEi3zRg3s0A5j5BB6A9Jmi73HwBIUl50j+osU4=

-golang.org/x/crypto v0.0.0-20220427172511-eb4f295cb31f h1:OeJjE6G4dgCY4PIXvIRQbE8+RX+uXZyGhUy/ksMGJoc=

-golang.org/x/crypto v0.0.0-20220427172511-eb4f295cb31f/go.mod h1:IxCIyHEi3zRg3s0A5j5BB6A9Jmi73HwBIUl50j+osU4=

golang.org/x/exp v0.0.0-20181106170214-d68db9428509/go.mod h1:CJ0aWSM057203Lf6IL+f9T1iT9GByDxfZKAQTCR3kQA=

golang.org/x/exp v0.0.0-20190121172915-509febef88a4/go.mod h1:CJ0aWSM057203Lf6IL+f9T1iT9GByDxfZKAQTCR3kQA=

golang.org/x/exp v0.0.0-20190306152737-a1d7652674e8/go.mod h1:CJ0aWSM057203Lf6IL+f9T1iT9GByDxfZKAQTCR3kQA=

@@ -1002,6 +1010,8 @@ golang.org/x/exp v0.0.0-20200119233911-0405dc783f0a/go.mod h1:2RIsYlXP63K8oxa1u0

golang.org/x/exp v0.0.0-20200207192155-f17229e696bd/go.mod h1:J/WKrq2StrnmMY6+EHIKF9dgMWnmCNThgcyBT1FY9mM=

golang.org/x/exp v0.0.0-20200224162631-6cc2880d07d6/go.mod h1:3jZMyOhIsHpP37uCMkUooju7aAi5cS1Q23tOzKc+0MU=

golang.org/x/exp v0.0.0-20200331195152-e8c3332aa8e5/go.mod h1:4M0jN8W1tt0AVLNr8HDosyJCDCDuyL9N9+3m7wDWgKw=

+golang.org/x/exp v0.0.0-20220613132600-b0d781184e0d h1:vtUKgx8dahOomfFzLREU8nSv25YHnTgLBn4rDnWZdU0=

+golang.org/x/exp v0.0.0-20220613132600-b0d781184e0d/go.mod h1:Kr81I6Kryrl9sr8s2FK3vxD90NdsKWRuOIl2O4CvYbA=

golang.org/x/image v0.0.0-20190227222117-0694c2d4d067/go.mod h1:kZ7UVZpmo3dzQBMxlp+ypCbDeSB+sBbTgSJuh5dn5js=

golang.org/x/image v0.0.0-20190802002840-cff245a6509b/go.mod h1:FeLwcggjj3mMvU+oOTbSwawSJRM1uh48EjtB4UJZlP0=

golang.org/x/lint v0.0.0-20181026193005-c67002cb31c3/go.mod h1:UVdnD1Gm6xHRNCYTkRU2/jEulfH38KcIWyp/GAMgvoE=

@@ -1199,9 +1209,8 @@ golang.org/x/sys v0.0.0-20210816074244-15123e1e1f71/go.mod h1:oPkhp1MJrh7nUepCBc

golang.org/x/sys v0.0.0-20211025201205-69cdffdb9359/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/sys v0.0.0-20211109184856-51b60fd695b3/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/sys v0.0.0-20220111092808-5a964db01320/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.0.0-20220128215802-99c3d69c2c27 h1:XDXtA5hveEEV8JB2l7nhMTp3t3cHp9ZpwcdjqyEWLlo=

golang.org/x/sys v0.0.0-20220128215802-99c3d69c2c27/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

-golang.org/x/sys v0.0.0-20220429233432-b5fbb4746d32 h1:Js08h5hqB5xyWR789+QqueR6sDE8mk+YvpETZ+F6X9Y=

-golang.org/x/sys v0.0.0-20220429233432-b5fbb4746d32/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=

golang.org/x/text v0.0.0-20170915032832-14c0d48ead0c/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

golang.org/x/text v0.3.0/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

@@ -1285,7 +1294,6 @@ golang.org/x/tools v0.1.5/go.mod h1:o0xws9oXOQQZyjljx8fwUC0k7L1pTE6eaCbjGeHmOkk=

golang.org/x/xerrors v0.0.0-20190717185122-a985d3407aa7/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

golang.org/x/xerrors v0.0.0-20191011141410-1b5146add898/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

golang.org/x/xerrors v0.0.0-20191204190536-9bdfabe68543/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

-golang.org/x/xerrors v0.0.0-20200804184101-5ec99f83aff1 h1:go1bK/D/BFZV2I8cIQd1NKEZ+0owSTG1fDTci4IqFcE=

golang.org/x/xerrors v0.0.0-20200804184101-5ec99f83aff1/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

google.golang.org/api v0.0.0-20160322025152-9bf6e6e569ff/go.mod h1:4mhQ8q/RsB7i+udVvVy5NUi08OU8ZlA0gRVgrF7VFY0=

google.golang.org/api v0.3.1/go.mod h1:6wY9I6uQWHQ8EM57III9mq/AjF+i8G65rmVagqKMtkk=

diff --git a/integration_test/config/db/config.yaml b/integration_test/config/db/config.yaml

new file mode 100644

index 000000000..d9eaf3d96

--- /dev/null

+++ b/integration_test/config/db/config.yaml

@@ -0,0 +1,98 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: ConfigMap

+apiVersion: "1.0"

+metadata:

+ name: arana-config

+data:

+ listeners:

+ - protocol_type: mysql

+ server_version: 5.7.0

+ socket_address:

+ address: 0.0.0.0

+ port: 13306

+

+ tenants:

+ - name: arana

+ users:

+ - username: arana

+ password: "123456"

+ - username: dksl

+ password: "123456"

+

+ clusters:

+ - name: employees

+ type: mysql

+ sql_max_limit: -1

+ tenant: arana

+ groups:

+ - name: employees_0000

+ nodes:

+ - name: node0

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0000

+ weight: r10w10

+ - name: employees_0001

+ nodes:

+ - name: node1

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0001

+ weight: r10w10

+ - name: employees_0002

+ nodes:

+ - name: node2

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0002

+ weight: r10w10

+ - name: employees_0003

+ nodes:

+ - name: node3

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0003

+ weight: r10w10

+

+ sharding_rule:

+ tables:

+ - name: employees.student

+ allow_full_scan: true

+ db_rules:

+ - column: uid

+ type: scriptExpr

+ expr: parseInt($value % 32 / 8)

+ step: 32

+ tbl_rules:

+ - column: uid

+ type: scriptExpr

+ expr: parseInt(0)

+ topology:

+ db_pattern: employees_${0000...0003}

+ tbl_pattern: student_0000

+ attributes:

+ sqlMaxLimit: -1
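The `db` scene shards by database only. The db rule `parseInt($value % 32 / 8)` picks one of `employees_0000` to `employees_0003`, and the table rule `parseInt(0)` always picks `student_0000`. Below is a minimal Go sketch of that arithmetic. It assumes `$value` is bound to the unsigned `uid` column and that `parseInt` truncates toward zero, as JavaScript's does for non-negative numbers, which makes it equal to Go integer division here. `routeDB` is a hypothetical name, not an arana API.

```go
package main

import "fmt"

// routeDB mirrors the db rule parseInt($value % 32 / 8) for a non-negative uid.
// Assumption: parseInt truncates toward zero, so Go integer division matches it.
func routeDB(uid uint64) uint64 {
	return (uid % 32) / 8
}

func main() {
	for _, uid := range []uint64{0, 7, 8, 31, 32, 45} {
		// The table rule parseInt(0) always selects student_0000 in this scene.
		fmt.Printf("uid=%d -> employees_%04d.student_0000\n", uid, routeDB(uid))
	}
}
```

Because the remainder mod 32 is taken first, uids whose remainder is 0 to 7 land in `employees_0000`, 8 to 15 in `employees_0001`, and so on, with the cycle repeating every 32 ids.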

diff --git a/integration_test/config/db/data.yaml b/integration_test/config/db/data.yaml

new file mode 100644

index 000000000..112cb3c18

--- /dev/null

+++ b/integration_test/config/db/data.yaml

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: DataSet

+metadata:

+ tables:

+ - name: "order"

+ columns:

+ - name: "name"

+ type: "string"

+ - name: "value"

+ type: "string"

+data:

+ - name: "order"

+ value:

+ - ["test", "test1"]

diff --git a/integration_test/config/db/expected.yaml b/integration_test/config/db/expected.yaml

new file mode 100644

index 000000000..e16b99a92

--- /dev/null

+++ b/integration_test/config/db/expected.yaml

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: expected

+metadata:

+ tables:

+ - name: "order"

+ columns:

+ - name: "name"

+ type: "string"

+ - name: "value"

+ type: "string"

+data:

+ - name: "order"

+ value:

+ - ["test", "test1"]

diff --git a/integration_test/config/db_tbl/config.yaml b/integration_test/config/db_tbl/config.yaml

new file mode 100644

index 000000000..4b421d229

--- /dev/null

+++ b/integration_test/config/db_tbl/config.yaml

@@ -0,0 +1,104 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: ConfigMap

+apiVersion: "1.0"

+metadata:

+ name: arana-config

+data:

+ listeners:

+ - protocol_type: mysql

+ server_version: 5.7.0

+ socket_address:

+ address: 0.0.0.0

+ port: 13306

+

+ tenants:

+ - name: arana

+ users:

+ - username: arana

+ password: "123456"

+ - username: dksl

+ password: "123456"

+

+ clusters:

+ - name: employees

+ type: mysql

+ sql_max_limit: -1

+ tenant: arana

+ groups:

+ - name: employees_0000

+ nodes:

+ - name: node0

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0000

+ weight: r10w10

+ - name: node0_r_0

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0000_r

+ weight: r0w0

+ - name: employees_0001

+ nodes:

+ - name: node1

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0001

+ weight: r10w10

+ - name: employees_0002

+ nodes:

+ - name: node2

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0002

+ weight: r10w10

+ - name: employees_0003

+ nodes:

+ - name: node3

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0003

+ weight: r10w10

+

+ sharding_rule:

+ tables:

+ - name: employees.student

+ allow_full_scan: true

+ db_rules:

+ - column: uid

+ type: scriptExpr

+ expr: parseInt($value % 32 / 8)

+ tbl_rules:

+ - column: uid

+ type: scriptExpr

+ expr: $value % 32

+ topology:

+ db_pattern: employees_${0000..0003}

+ tbl_pattern: student_${0000..0031}

+ attributes:

+ sqlMaxLimit: -1
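In the `db_tbl` scene both rules depend on `uid`. The db rule is the same as in the `db` scene, and the table rule `$value % 32` selects one of `student_0000` to `student_0031`. The sketch below computes both indexes together. It assumes `$value` is the unsigned `uid` and that `parseInt` truncates toward zero. `shard` and `route` are hypothetical names used only for illustration.

```go
package main

import "fmt"

// shard is the physical location a uid is routed to (hypothetical type).
type shard struct {
	DB, Table uint64
}

// route applies the db rule parseInt($value % 32 / 8) and the table rule
// $value % 32, assuming parseInt truncates toward zero for non-negative input.
func route(uid uint64) shard {
	return shard{DB: (uid % 32) / 8, Table: uid % 32}
}

// String renders the shard with the zero-padded names used in the topology.
func (s shard) String() string {
	return fmt.Sprintf("employees_%04d.student_%04d", s.DB, s.Table)
}

func main() {
	for _, uid := range []uint64{1, 8, 45, 63} {
		fmt.Printf("uid=%d -> %s\n", uid, route(uid))
	}
}
```

Since the database index equals the table index divided by 8, each database only ever receives eight of the 32 table indexes: table `t` always goes to database `t/8`.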

diff --git a/integration_test/config/db_tbl/data.yaml b/integration_test/config/db_tbl/data.yaml

new file mode 100644

index 000000000..112cb3c18

--- /dev/null

+++ b/integration_test/config/db_tbl/data.yaml

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: DataSet

+metadata:

+ tables:

+ - name: "order"

+ columns:

+ - name: "name"

+ type: "string"

+ - name: "value"

+ type: "string"

+data:

+ - name: "order"

+ value:

+ - ["test", "test1"]

diff --git a/integration_test/config/db_tbl/expected.yaml b/integration_test/config/db_tbl/expected.yaml

new file mode 100644

index 000000000..542d37822

--- /dev/null

+++ b/integration_test/config/db_tbl/expected.yaml

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: expected

+metadata:

+ tables:

+ - name: "sequence"

+ columns:

+ - name: "name"

+ type: "string"

+ - name: "value"

+ type: "string"

+data:

+ - name: "sequence"

+ value:

+ - ["1", "2"]

diff --git a/integration_test/config/tbl/config.yaml b/integration_test/config/tbl/config.yaml

new file mode 100644

index 000000000..3eb3564bb

--- /dev/null

+++ b/integration_test/config/tbl/config.yaml

@@ -0,0 +1,70 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: ConfigMap

+apiVersion: "1.0"

+metadata:

+ name: arana-config

+data:

+ listeners:

+ - protocol_type: mysql

+ server_version: 5.7.0

+ socket_address:

+ address: 0.0.0.0

+ port: 13306

+

+ tenants:

+ - name: arana

+ users:

+ - username: arana

+ password: "123456"

+ - username: dksl

+ password: "123456"

+

+ clusters:

+ - name: employees

+ type: mysql

+ sql_max_limit: -1

+ tenant: arana

+ groups:

+ - name: employees_0000

+ nodes:

+ - name: node0

+ host: arana-mysql

+ port: 3306

+ username: root

+ password: "123456"

+ database: employees_0000

+ weight: r10w10

+

+ sharding_rule:

+ tables:

+ - name: employees.student

+ allow_full_scan: true

+ db_rules:

+ - column: uid

+ type: scriptExpr

+ expr: parseInt(0)

+ tbl_rules:

+ - column: uid

+ type: scriptExpr

+ expr: $value % 32

+ topology:

+ db_pattern: employees_0000

+ tbl_pattern: student_${0000..0031}

+ attributes:

+ sqlMaxLimit: -1
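The `tbl` scene keeps a single database, because `parseInt(0)` always yields `employees_0000`. It spreads rows across 32 tables with `$value % 32`. The topology entries are patterns rather than names. The sketch below expands one, assuming the `${begin..end}` range is inclusive and zero-padded to the width of its lower bound. `expandPattern` is hypothetical and is not arana's parser. It accepts two or more dots because the `db` config in this change writes `${0000...0003}` while this one writes `${0000..0031}`.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// rangeRe matches a "${begin..end}" placeholder. Accepting two or more dots
// is an assumption: the configs in this change use both ".." and "...".
var rangeRe = regexp.MustCompile(`\$\{(\d+)\.{2,}(\d+)\}`)

// expandPattern is a hypothetical helper that lists the physical names a
// topology pattern denotes (inclusive bounds, padded to the lower bound's width).
func expandPattern(p string) ([]string, error) {
	m := rangeRe.FindStringSubmatchIndex(p)
	if m == nil {
		return []string{p}, nil // fixed name such as "employees_0000"
	}
	lo, hi := p[m[2]:m[3]], p[m[4]:m[5]]
	begin, err := strconv.Atoi(lo)
	if err != nil {
		return nil, err
	}
	end, err := strconv.Atoi(hi)
	if err != nil {
		return nil, err
	}
	if end < begin {
		return nil, fmt.Errorf("empty range %s..%s", lo, hi)
	}
	names := make([]string, 0, end-begin+1)
	for i := begin; i <= end; i++ {
		names = append(names, fmt.Sprintf("%s%0*d%s", p[:m[0]], len(lo), i, p[m[1]:]))
	}
	return names, nil
}

func main() {
	tables, err := expandPattern("student_${0000..0031}")
	if err != nil {
		panic(err)
	}
	uid := uint64(45)
	// db rule parseInt(0) always picks employees_0000;
	// tbl rule $value % 32 indexes into the expanded table list.
	fmt.Println(len(tables), "employees_0000."+tables[uid%32])
}
```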

diff --git a/integration_test/config/tbl/data.yaml b/integration_test/config/tbl/data.yaml

new file mode 100644

index 000000000..112cb3c18

--- /dev/null

+++ b/integration_test/config/tbl/data.yaml

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: DataSet

+metadata:

+ tables:

+ - name: "order"

+ columns:

+ - name: "name"

+ type: "string"

+ - name: "value"

+ type: "string"

+data:

+ - name: "order"

+ value:

+ - ["test", "test1"]

diff --git a/integration_test/config/tbl/expected.yaml b/integration_test/config/tbl/expected.yaml

new file mode 100644

index 000000000..e16b99a92

--- /dev/null

+++ b/integration_test/config/tbl/expected.yaml

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+kind: expected

+metadata:

+ tables:

+ - name: "order"

+ columns:

+ - name: "name"

+ type: "string"

+ - name: "value"

+ type: "string"

+data:

+ - name: "order"

+ value:

+ - ["test", "test1"]

diff --git a/integration_test/scene/db/integration_test.go b/integration_test/scene/db/integration_test.go

new file mode 100644

index 000000000..c7188fcba

--- /dev/null

+++ b/integration_test/scene/db/integration_test.go

@@ -0,0 +1,115 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package test

+

+import (

+ "strings"

+ "testing"

+)

+

+import (

+ _ "github.com/go-sql-driver/mysql"

+

+ "github.com/stretchr/testify/assert"

+ "github.com/stretchr/testify/suite"

+)

+

+import (

+ "github.com/arana-db/arana/test"

+)

+

+type IntegrationSuite struct {

+ *test.MySuite

+}

+

+func TestSuite(t *testing.T) {

+ su := test.NewMySuite(

+ test.WithMySQLServerAuth("root", "123456"),

+ test.WithMySQLDatabase("employees"),

+ test.WithConfig("../integration_test/config/db/config.yaml"),

+ test.WithScriptPath("../integration_test/scripts/db"),

+ test.WithTestCasePath("../../testcase/casetest.yaml"),

+ // test.WithDevMode(), // NOTICE: UNCOMMENT IF YOU WANT TO DEBUG A LOCAL ARANA SERVER!!!

+ )

+ suite.Run(t, &IntegrationSuite{su})

+}

+

+func (s *IntegrationSuite) TestDBScene() {

+ var (

+ db = s.DB()

+ t = s.T()

+ )

+ tx, err := db.Begin()

+ assert.NoError(t, err, "should begin a new tx")

+

+ cases := s.TestCases()

+ for _, sqlCase := range cases.ExecCases {

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "db" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ // Execute sql

+ result, err := tx.Exec(sqlCase.SQL, args...)

+ assert.NoError(t, err, "exec should succeed")

+ err = sqlCase.ExpectedResult.CompareRow(result)

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+

+ for _, sqlCase := range cases.QueryRowCases {

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "db" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ result := tx.QueryRow(sqlCase.SQL, args...)

+ err = sqlCase.ExpectedResult.CompareRow(result)

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+

+ for _, sqlCase := range cases.QueryRowsCases {

+ s.LoadExpectedDataSetPath(sqlCase.ExpectedResult.Value)

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "db" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ result, err := db.Query(sqlCase.SQL, args...)

+ assert.NoError(t, err, err)

+ err = sqlCase.ExpectedResult.CompareRows(result, s.ExpectedDataset())

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+}

diff --git a/integration_test/scene/db_tbl/integration_test.go b/integration_test/scene/db_tbl/integration_test.go

new file mode 100644

index 000000000..e7878729a

--- /dev/null

+++ b/integration_test/scene/db_tbl/integration_test.go

@@ -0,0 +1,96 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package test

+

+import (

+ "strings"

+ "testing"

+)

+

+import (

+ _ "github.com/go-sql-driver/mysql" // register mysql

+

+ "github.com/stretchr/testify/assert"

+ "github.com/stretchr/testify/suite"

+)

+

+import (

+ "github.com/arana-db/arana/test"

+)

+

+type IntegrationSuite struct {

+ *test.MySuite

+}

+

+func TestSuite(t *testing.T) {

+ su := test.NewMySuite(

+ test.WithMySQLServerAuth("root", "123456"),

+ test.WithMySQLDatabase("employees"),

+ test.WithConfig("../integration_test/config/db_tbl/config.yaml"),

+ test.WithScriptPath("../integration_test/scripts/db_tbl"),

+ test.WithTestCasePath("../../testcase/casetest.yaml"),

+ // test.WithDevMode(), // NOTICE: UNCOMMENT IF YOU WANT TO DEBUG A LOCAL ARANA SERVER!!!

+ )

+ suite.Run(t, &IntegrationSuite{su})

+}

+

+func (s *IntegrationSuite) TestDBTBLScene() {

+ var (

+ db = s.DB()

+ t = s.T()

+ )

+ tx, err := db.Begin()

+ assert.NoError(t, err, "should begin a new tx")

+

+ cases := s.TestCases()

+ for _, sqlCase := range cases.ExecCases {

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "db_tbl" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ // Execute sql

+ result, err := tx.Exec(sqlCase.SQL, args...)

+ assert.NoError(t, err, "exec should succeed")

+ err = sqlCase.ExpectedResult.CompareRow(result)

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+

+ for _, sqlCase := range cases.QueryRowCases {

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "db_tbl" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ result := tx.QueryRow(sqlCase.SQL, args...)

+ err = sqlCase.ExpectedResult.CompareRow(result)

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+}

diff --git a/integration_test/scene/tbl/integration_test.go b/integration_test/scene/tbl/integration_test.go

new file mode 100644

index 000000000..cc95a5853

--- /dev/null

+++ b/integration_test/scene/tbl/integration_test.go

@@ -0,0 +1,97 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package test

+

+import (

+ "strings"

+ "testing"

+)

+

+import (

+ _ "github.com/go-sql-driver/mysql" // register mysql

+

+ "github.com/stretchr/testify/assert"

+ "github.com/stretchr/testify/suite"

+)

+

+import (

+ "github.com/arana-db/arana/test"

+)

+

+type IntegrationSuite struct {

+ *test.MySuite

+}

+

+func TestSuite(t *testing.T) {

+ su := test.NewMySuite(

+ test.WithMySQLServerAuth("root", "123456"),

+ test.WithMySQLDatabase("employees"),

+ test.WithConfig("../integration_test/config/tbl/config.yaml"),

+ test.WithScriptPath("../integration_test/scripts/tbl"),

+ test.WithTestCasePath("../../testcase/casetest.yaml"),

+ // test.WithDevMode(), // NOTICE: UNCOMMENT IF YOU WANT TO DEBUG A LOCAL ARANA SERVER!!!

+ )

+ suite.Run(t, &IntegrationSuite{su})

+}

+

+func (s *IntegrationSuite) TestTBLScene() {

+ var (

+ db = s.DB()

+ t = s.T()

+ )

+ tx, err := db.Begin()

+ assert.NoError(t, err, "should begin a new tx")

+

+ cases := s.TestCases()

+ for _, sqlCase := range cases.ExecCases {

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "tbl" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ // Execute sql

+ result, err := tx.Exec(sqlCase.SQL, args...)

+ assert.NoError(t, err, "exec should succeed")

+ err = sqlCase.ExpectedResult.CompareRow(result)

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+

+ for _, sqlCase := range cases.QueryRowCases {

+ for _, sense := range sqlCase.Sense {

+ if strings.TrimSpace(sense) == "tbl" {

+ params := strings.Split(sqlCase.Parameters, ",")

+ args := make([]interface{}, 0, len(params))

+ for _, param := range params {

+ k, _ := test.GetValueByType(param)

+ args = append(args, k)

+ }

+

+ result := tx.QueryRow(sqlCase.SQL, args...)

+ err = sqlCase.ExpectedResult.CompareRow(result)

+ assert.NoError(t, err, err)

+ }

+ }

+ }

+

+}

diff --git a/integration_test/scripts/db/init.sql b/integration_test/scripts/db/init.sql

new file mode 100644

index 000000000..744c67674

--- /dev/null

+++ b/integration_test/scripts/db/init.sql

@@ -0,0 +1,113 @@

+--

+-- Licensed to the Apache Software Foundation (ASF) under one or more

+-- contributor license agreements. See the NOTICE file distributed with

+-- this work for additional information regarding copyright ownership.

+-- The ASF licenses this file to You under the Apache License, Version 2.0

+-- (the "License"); you may not use this file except in compliance with

+-- the License. You may obtain a copy of the License at

+--

+-- http://www.apache.org/licenses/LICENSE-2.0

+--

+-- Unless required by applicable law or agreed to in writing, software

+-- distributed under the License is distributed on an "AS IS" BASIS,

+-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+-- See the License for the specific language governing permissions and

+-- limitations under the License.

+--

+

+-- Sample employee database

+-- See changelog table for details

+-- Copyright (C) 2007,2008, MySQL AB

+--

+-- Original data created by Fusheng Wang and Carlo Zaniolo

+-- http://www.cs.aau.dk/TimeCenter/software.htm

+-- http://www.cs.aau.dk/TimeCenter/Data/employeeTemporalDataSet.zip

+--

+-- Current schema by Giuseppe Maxia

+-- Data conversion from XML to relational by Patrick Crews

+--

+-- This work is licensed under the

+-- Creative Commons Attribution-Share Alike 3.0 Unported License.

+-- To view a copy of this license, visit

+-- http://creativecommons.org/licenses/by-sa/3.0/ or send a letter to

+-- Creative Commons, 171 Second Street, Suite 300, San Francisco,

+-- California, 94105, USA.

+--

+-- DISCLAIMER

+-- To the best of our knowledge, this data is fabricated, and

+-- it does not correspond to real people.

+-- Any similarity to existing people is purely coincidental.

+--

+

+CREATE DATABASE IF NOT EXISTS employees_0000 CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;

+

+USE employees_0000;

+

+SELECT 'CREATING DATABASE STRUCTURE' as 'INFO';

+

+DROP TABLE IF EXISTS dept_emp,

+ dept_manager,

+ titles,

+ salaries,

+ employees,

+ departments;

+

+/*!50503 set default_storage_engine = InnoDB */;

+/*!50503 select CONCAT('storage engine: ', @@default_storage_engine) as INFO */;

+

+CREATE TABLE employees (

+ emp_no INT NOT NULL,

+ birth_date DATE NOT NULL,

+ first_name VARCHAR(14) NOT NULL,

+ last_name VARCHAR(16) NOT NULL,

+ gender ENUM ('M','F') NOT NULL,

+ hire_date DATE NOT NULL,

+ PRIMARY KEY (emp_no)

+);

+

+CREATE TABLE departments (

+ dept_no CHAR(4) NOT NULL,

+ dept_name VARCHAR(40) NOT NULL,

+ PRIMARY KEY (dept_no),

+ UNIQUE KEY (dept_name)

+);

+

+CREATE TABLE dept_manager (

+ emp_no INT NOT NULL,

+ dept_no CHAR(4) NOT NULL,

+ from_date DATE NOT NULL,

+ to_date DATE NOT NULL,

+ FOREIGN KEY (emp_no) REFERENCES employees (emp_no) ON DELETE CASCADE,

+ FOREIGN KEY (dept_no) REFERENCES departments (dept_no) ON DELETE CASCADE,

+ PRIMARY KEY (emp_no,dept_no)

+);

+

+CREATE TABLE dept_emp (

+ emp_no INT NOT NULL,

+ dept_no CHAR(4) NOT NULL,

+ from_date DATE NOT NULL,

+ to_date DATE NOT NULL,

+ FOREIGN KEY (emp_no) REFERENCES employees (emp_no) ON DELETE CASCADE,

+ FOREIGN KEY (dept_no) REFERENCES departments (dept_no) ON DELETE CASCADE,

+ PRIMARY KEY (emp_no,dept_no)

+);

+

+CREATE TABLE titles (

+ emp_no INT NOT NULL,

+ title VARCHAR(50) NOT NULL,

+ from_date DATE NOT NULL,

+ to_date DATE,

+ FOREIGN KEY (emp_no) REFERENCES employees (emp_no) ON DELETE CASCADE,

+ PRIMARY KEY (emp_no,title, from_date)

+)

+;

+

+CREATE TABLE salaries (

+ emp_no INT NOT NULL,

+ salary INT NOT NULL,

+ from_date DATE NOT NULL,

+ to_date DATE NOT NULL,

+ FOREIGN KEY (emp_no) REFERENCES employees (emp_no) ON DELETE CASCADE,

+ PRIMARY KEY (emp_no, from_date)

+)

+;

diff --git a/integration_test/scripts/db/sequence.sql b/integration_test/scripts/db/sequence.sql
new file mode 100644
index 000000000..0df0add1c
--- /dev/null
+++ b/integration_test/scripts/db/sequence.sql
@@ -0,0 +1,31 @@
+--
+-- Licensed to the Apache Software Foundation (ASF) under one or more
+-- contributor license agreements. See the NOTICE file distributed with
+-- this work for additional information regarding copyright ownership.
+-- The ASF licenses this file to You under the Apache License, Version 2.0
+-- (the "License"); you may not use this file except in compliance with
+-- the License. You may obtain a copy of the License at
+--
+-- http://www.apache.org/licenses/LICENSE-2.0
+--
+-- Unless required by applicable law or agreed to in writing, software
+-- distributed under the License is distributed on an "AS IS" BASIS,
+-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+-- See the License for the specific language governing permissions and
+-- limitations under the License.
+--
+
+CREATE DATABASE IF NOT EXISTS employees_0000 CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
+
+CREATE TABLE IF NOT EXISTS `employees_0000`.`sequence`
+(
+ `id` BIGINT UNSIGNED NOT NULL AUTO_INCREMENT,
+ `name` VARCHAR(64) NOT NULL,
+ `value` BIGINT NOT NULL,
+ `step` INT NOT NULL DEFAULT 10000,
+ `created_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
+ `modified_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
+ PRIMARY KEY (`id`),
+ UNIQUE KEY `uk_name` (`name`)
+) ENGINE = InnoDB
+ DEFAULT CHARSET = utf8mb4;
diff --git a/integration_test/scripts/db/sharding.sql b/integration_test/scripts/db/sharding.sql
new file mode 100644
index 000000000..1f22d4036
--- /dev/null
+++ b/integration_test/scripts/db/sharding.sql
@@ -0,0 +1,83 @@
+--
+-- Licensed to the Apache Software Foundation (ASF) under one or more
+-- contributor license agreements. See the NOTICE file distributed with
+-- this work for additional information regarding copyright ownership.
+-- The ASF licenses this file to You under the Apache License, Version 2.0
+-- (the "License"); you may not use this file except in compliance with
+-- the License. You may obtain a copy of the License at
+--
+-- http://www.apache.org/licenses/LICENSE-2.0
+--
+-- Unless required by applicable law or agreed to in writing, software
+-- distributed under the License is distributed on an "AS IS" BASIS,
+-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+-- See the License for the specific language governing permissions and
+-- limitations under the License.
+--
+
+CREATE DATABASE IF NOT EXISTS employees_0000 CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
+CREATE DATABASE IF NOT EXISTS employees_0001 CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
+CREATE DATABASE IF NOT EXISTS employees_0002 CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
+CREATE DATABASE IF NOT EXISTS employees_0003 CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
+
+CREATE TABLE IF NOT EXISTS `employees_0000`.`student_0000`
+(
+ `id` BIGINT(20) UNSIGNED NOT NULL AUTO_INCREMENT,
+ `uid` BIGINT(20) UNSIGNED NOT NULL,
+ `name` VARCHAR(255) NOT NULL,
+ `score` DECIMAL(6,2) DEFAULT '0',
+ `nickname` VARCHAR(255) DEFAULT NULL,
+ `gender` TINYINT(4) NULL,
+ `birth_year` SMALLINT(5) UNSIGNED DEFAULT '0',
+ `created_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
+ `modified_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
+ PRIMARY KEY (`id`),
+ UNIQUE KEY `uk_uid` (`uid`)
+) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
+
+CREATE TABLE IF NOT EXISTS `employees_0001`.`student_0000`
+(
+ `id` BIGINT(20) UNSIGNED NOT NULL AUTO_INCREMENT,
+ `uid` BIGINT(20) UNSIGNED NOT NULL,
+ `name` VARCHAR(255) NOT NULL,
+ `score` DECIMAL(6,2) DEFAULT '0',
+ `nickname` VARCHAR(255) DEFAULT NULL,
+ `gender` TINYINT(4) NULL,
+ `birth_year` SMALLINT(5) UNSIGNED DEFAULT '0',
+ `created_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
+ `modified_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
+ PRIMARY KEY (`id`),
+ UNIQUE KEY `uk_uid` (`uid`)
+) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
+
+CREATE TABLE IF NOT EXISTS `employees_0002`.`student_0000`
+(
+ `id` BIGINT(20) UNSIGNED NOT NULL AUTO_INCREMENT,
+ `uid` BIGINT(20) UNSIGNED NOT NULL,
+ `name` VARCHAR(255) NOT NULL,
+ `score` DECIMAL(6,2) DEFAULT '0',
+ `nickname` VARCHAR(255) DEFAULT NULL,
+ `gender` TINYINT(4) NULL,
+ `birth_year` SMALLINT(5) UNSIGNED DEFAULT '0',
+ `created_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
+ `modified_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
+ PRIMARY KEY (`id`),
+ UNIQUE KEY `uk_uid` (`uid`)
+) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
+
+CREATE TABLE IF NOT EXISTS `employees_0003`.`student_0000`
+(
+ `id` BIGINT(20) UNSIGNED NOT NULL AUTO_INCREMENT,
+ `uid` BIGINT(20) UNSIGNED NOT NULL,
+ `name` VARCHAR(255) NOT NULL,
+ `score` DECIMAL(6,2) DEFAULT '0',
+ `nickname` VARCHAR(255) DEFAULT NULL,
+ `gender` TINYINT(4) NULL,
+ `birth_year` SMALLINT(5) UNSIGNED DEFAULT '0',
+ `created_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
+ `modified_at` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
+ PRIMARY KEY (`id`),
+ UNIQUE KEY `uk_uid` (`uid`)
+) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
+
+INSERT INTO employees_0000.student_0000 VALUES (1, 1, 'scott', 95, 'nc_scott', 0, 16, NOW(), NOW());
diff --git a/integration_test/scripts/db_tbl/init.sql b/integration_test/scripts/db_tbl/init.sql
new file mode 100644
index 000000000..744c67674
--- /dev/null
+++ b/integration_test/scripts/db_tbl/init.sql
@@ -0,0 +1,113 @@
+--
+-- Licensed to the Apache Software Foundation (ASF) under one or more
+-- contributor license agreements. See the NOTICE file distributed with
+-- this work for additional information regarding copyright ownership.
+-- The ASF licenses this file to You under the Apache License, Version 2.0
+-- (the "License"); you may not use this file except in compliance with
+-- the License. You may obtain a copy of the License at
+--
+-- http://www.apache.org/licenses/LICENSE-2.0
+--
+-- Unless required by applicable law or agreed to in writing, software
+-- distributed under the License is distributed on an "AS IS" BASIS,
+-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+-- See the License for the specific language governing permissions and
+-- limitations under the License.
+--
+
+-- Sample employee database
+-- See changelog table for details
+-- Copyright (C) 2007,2008, MySQL AB
+--
+-- Original data created by Fusheng Wang and Carlo Zaniolo
+-- http://www.cs.aau.dk/TimeCenter/software.htm
+-- http://www.cs.aau.dk/TimeCenter/Data/employeeTemporalDataSet.zip
+--
+-- Current schema by Giuseppe Maxia
+-- Data conversion from XML to relational by Patrick Crews
+--
+-- This work is licensed under the
+-- Creative Commons Attribution-Share Alike 3.0 Unported License.
+-- To view a copy of this license, visit
+-- http://creativecommons.org/licenses/by-sa/3.0/ or send a letter to
+-- Creative Commons, 171 Second Street, Suite 300, San Francisco,
+-- California, 94105, USA.
+--
+-- DISCLAIMER
+-- To the best of our knowledge, this data is fabricated, and