grpc server gets stuck under stress test #112

Comments

|

Thanks for reporting. I've not yet been able to reproduce this. Just to make sure I got everything right:

|

1. Yes: the CPU model is 5318Y, with 2 physical CPUs, 48 cores, and 96 logical processors. Additionally, I found that the problem cannot be reproduced after I comment out all stdout print code in this test example, even without core binding. I'm confused by this behavior.

|

Can you try to replace |

Indeed. After setting GOMAXPROCS to 26 or 27, I can no longer reproduce it.

|

Thanks. Meanwhile I've been able to reproduce the bug. A first fix will be included in the next EGo release. |

|

@thomasten I also encountered the same issue using the same test case as @zcc35357949, also without using numactl for binding. In case we are hitting the same problem, I have uploaded the stack trace files generated by pstack. I hope they help.

|

@thomasten There are two stack trace files, as their names indicate: one for the egohost process and one for the ego run process.

|

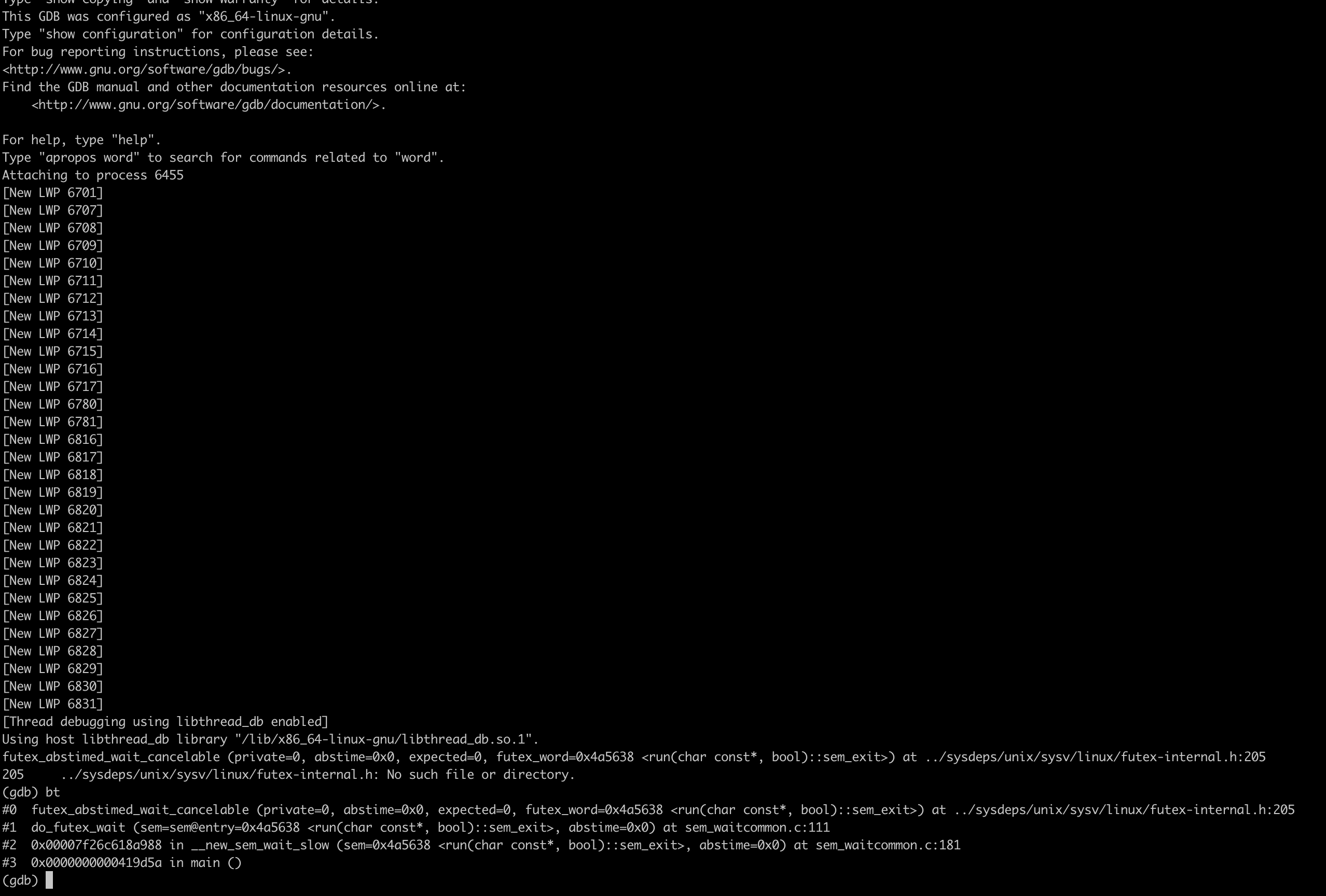

@thomasten gdb stacks for |

|

Thanks @Glenrun. How many cores does your machine have? |

|

@thomasten I have 56 physical cores and 112 logical cores. It is not easy to reproduce in my environment with |

|

@thomasten what is the next release date? |

Thanks for doing this, it's helpful.

Kind of. Previously, GOMAXPROCS was limited to 2, so the problem couldn't occur.

Maybe next week |

|

@thomasten Nice, thanks for the reply.

|

@thomasten we are waiting for the new release, is there any update? |

|

EGo v0.4.1 is out including the fix. Sorry for the delay. |

EGo v0.4.1 (3a78efd) |

|

@zcc35357949 sorry to hear that. Can you archive the directory of your sample project and attach it to this issue? |

|

helloworld.zip |

I ran the above benchmark command on the same test machine. The problem still occurs.

|

Thanks. Does it work if you remove GOMAXPROCS from enclave.json? |

After removing GOMAXPROCS, the problem occurs less often, but it still exists.

|

ok, we'll investigate |

|

@thomasten I also reproduced this issue on v0.4.1. I attached the pstack trace captured when it occurred.

Hi, @thomasten , is there any progress? |

|

Yes, I think I found the root cause and have a fix that seems to work regardless of the GOMAXPROCS value. But this needs some more testing. |

@thomasten Thanks for the update. We are waiting for the new release.

|

v0.4.2 contains the fix. Please try it out. |

@thomasten The issue now seems to be fixed, thanks!

|

We consider this to be fixed. Feel free to reopen if there are still problems. |

Issue description

CPU:Intel 5318Y

kernel: 5.12.19

os: debian 10

EGO:v0.4.0 (ecc1a70)

Grpc server which starts with

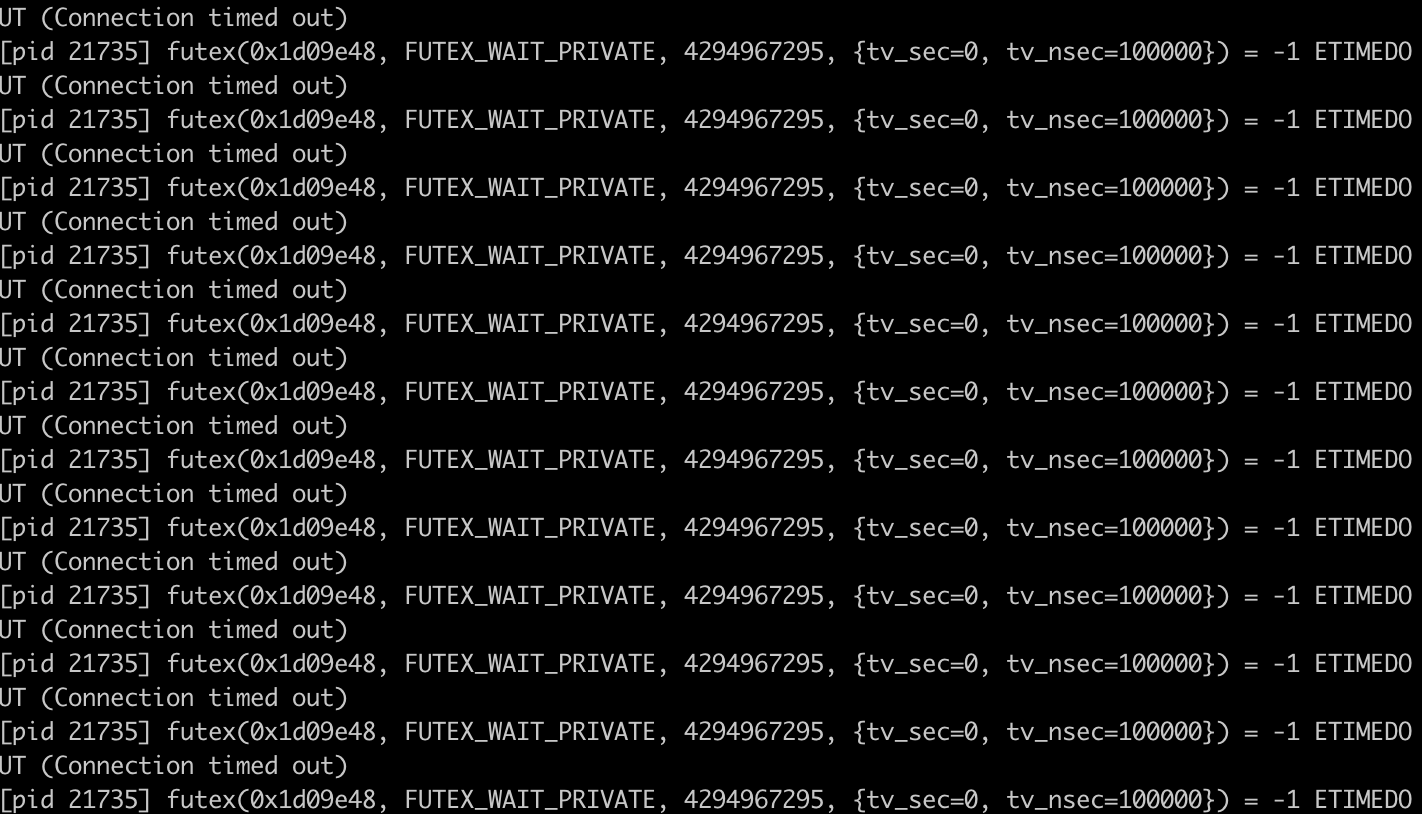

ego runwill become stuck and can not deal with any requests after low stress test.straceresult:htopresult:I try to use numactl to bind server pid in serveral cpus, and the problem can not be reproduced any more.

To reproduce

sample code:

Steps to reproduce the behavior:

ghz -c 5000 -n 50000 --insecure --proto helloworld.proto --import-paths=/mnt/storage09/ego/samples/test/platform/vendor/googleapis,/mnt/storage09/ego/samples/test/platform/vendor/ --call helloworld.Greeter/SayHello -d '{"name":"xxx"}' 127.0.0.1:50051

Expected behavior

Additional info / screenshot
