-

Notifications

You must be signed in to change notification settings - Fork 37

Update plugin interface to use deploy method and handle ssh; add Sentry support #134

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

This is a questionable improvement over the previous state. While it does use the recommended method for logging within Celery (get_task_logger), it requires setting the logger name to a string ("cloudlaunch") so Django logging setup recognizes the logger. Otherwise, if using __name__, we'd have to define a custom Django logger for each plugin. As a consequence, the log formatter needed to be updated to show full file path so we see what module a record is coming from.

Lastly, all this was done to try and get Exceptions raised within a task to register with the Django loggers without needing to log the error message before raising the exception. However, this continues not to work and instead requires an explicit log message.

|

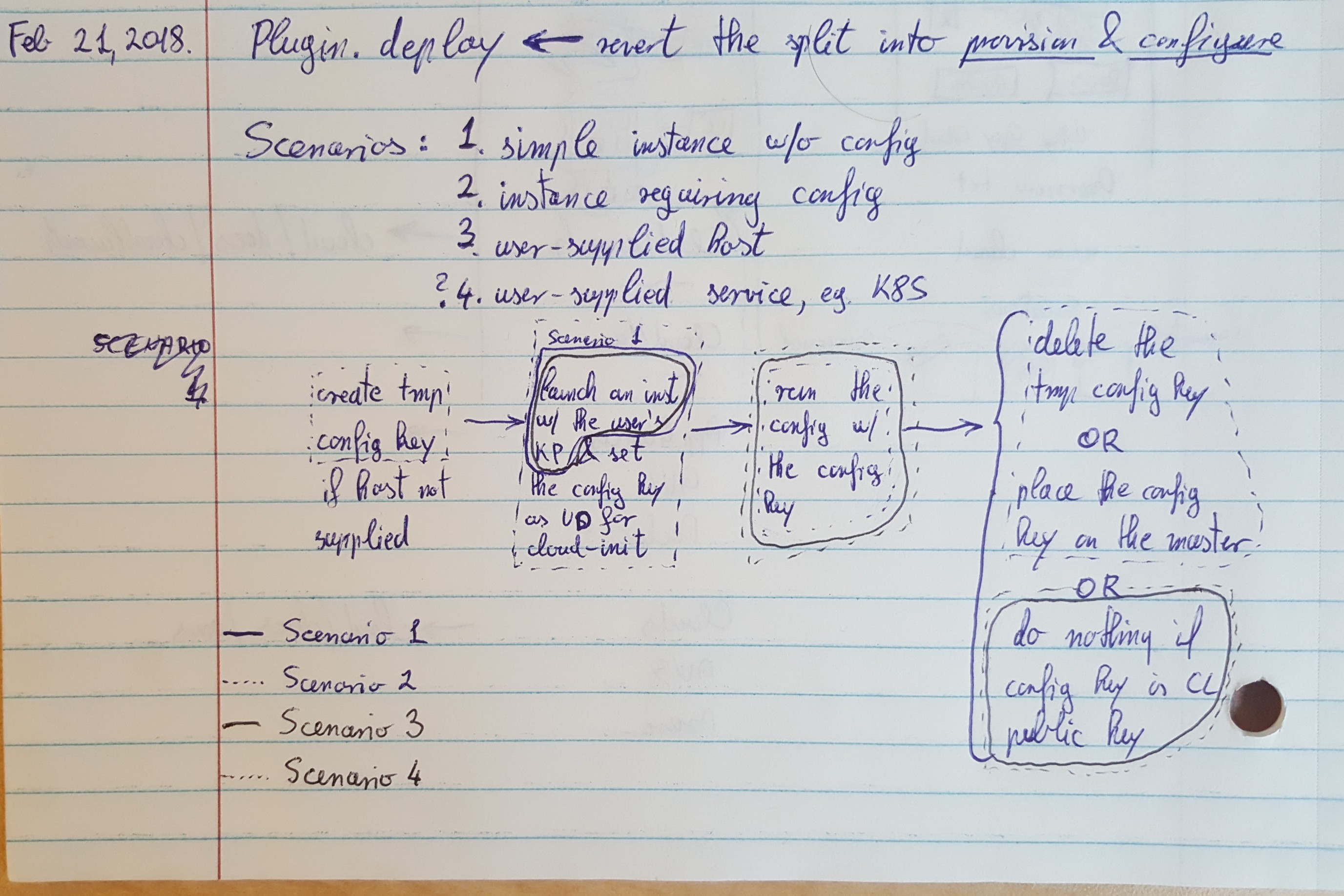

After a bit of discussion, there's an undesirable side-effect here with the key pair management. In order for CloudLaunch to be able to configure a launched instance it needs an ssh key, which it creates before launching and uses it to perform the config steps. Ideally, this key is deleted at the end of the configuration process so the server no longer has access to the instance. However, because only one key pair can be associated with an instance, this key will also be presented to the user for download and access to the instance, meaning that it cannot be deleted. It will also mean that each launch would require a new/different key. One thing that can be done instead is for the configuration step to place a user's key on the instance before deleting the temporary one. However, this requires CloudLaunch to have user's public key, which is not something that can be obtained from the cloud providers. To get around this, CloudLaunch will store public keys as part of the user profile. As a result, when listing key pairs on CloudLaunch, a user will get a listing of key pairs from their profile vs. the provider. CloudLaunch can then create a temporary key to use for the configuration step and replace it with the user's key at the end. If no public key exists, CloudLaunch will store the first key it creates. |

This code was written before we changed & finalized the plans on how to deal with instance config via plugins so rather than just deleting it, this commit can be used as a reference if we ever decide to reintroduce a notion of user profile for CloudLaunch and the associated public keys.

|

Another pivot in this process due to complications related to key management. Namely, needing to swap from a CloudLaunch configuration key and a user ssh login key in a robust way across different plugin deployment models seemed like it was going to cause issues with reliability. So we've decided to revert the initial split of the |

|

@nuwang can you take a look and see if this looks ok to you as well? It's working and pretty much ready for merge. Ideally, we'd generalize the Ansible execution into a util class for other plugins to use and allow other tools to be used instead of Ansible but for the current effort, this should be sufficient. Some docs about the |

| * ``cloud_config``: A dict containing cloud | ||

| infrastructure specific | ||

| configuration for this app | ||

| * ``cloud_user_data``: An object returned by |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Perhaps validated_config instead of user_data?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Given we have app_config and provider_config as the top level parameters in this method, I guess this should then be called validated_app_config but why is the top level app_config not validated then, which doesn't seem ideal. Further, all this field is ever used for is instance user data. We're also hoping to get away from using instance user data for anything but passing the config ssh key so I feel it's perhaps ok to just leave it as is.

nuwang

left a comment

nuwang

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

| CloudLaunch's public key | ||

| but this value should not | ||

| be supplied. | ||

| * ``ssh_private_key``: Public portion of an ssh |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should be "private portion"

| key. This should not be | ||

| supplied by a user and | ||

| is intended only for | ||

| internal use. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

An example of a dict structure may be useful.

| def launch_app(self, provider, task, name, cloud_config, | ||

| app_config, user_data): | ||

| """Initiate the app launch process.""" | ||

| def _create_ssh_key(self): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This function is available in cloudbridge.cloud.base.helpers. Can we refactor to use that instead?

| log.info("Adding a cloud-init config public ssh key to user data") | ||

| user_data += """ | ||

| #cloud-config | ||

| ssh_authorized_keys: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is it allowed to have multiple ssh_authorized_keys sections in user_data? Just wondering in case user_data already contains user supplied keys, but probably not something we need to necessarily address right now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Agree. Given we've never used this in the past let's just see how things go.

| First clone an playbook repo if not already available, configure the | ||

| Ansible inventory and run the playbook. | ||

| First clone an playbook from the supplied repo if not already |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

typo: "clone a playbook"

| 'w') as f: | ||

| f.writelines(pk) | ||

| # Create an inventory file | ||

| i, _ = urllib.request.urlretrieve(inventory) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Use requests instead of urllib?

| while (not self._check_ssh(host, pk=ssh_private_key, user=user) or | ||

| timeout > 200): | ||

| log.info("Waiting for ssh on {0}...".format(inst.name)) | ||

| log.info("Waiting for ssh on %s...", host) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Using the retry decorator from the retrying package could simplify this? e.g.

https://github.com/gvlproject/cloudbridge/blob/8d705b05d107fd25370de534bbdc1bb64ac42e7b/cloudbridge/cloud/providers/aws/resources.py#L354

From PR #134

This is useful for Travis at least

No description provided.