Control an avatar synced to a Unity AR Foundation body-tracking controlled robot

Body tracking with ARKit works very well, as does Unity's integration of it into AR Foundation. However, the rig that Apple provides, and the version Unity includes in its sample project, both have complexities that make them challenging to work with.

Initially I planned to replace the sample controlled robot model, but that proved difficult. The ARKit rig is unlike the rigs in common usage (7 spine bones, 4 neck bones, different orientations, etc.). The Unity version has no avatar associated with it, so you cannot use some of the built-in HumanBones and retargeting functionality that is normally available. My attempts to rig my own version failed for various reasons.

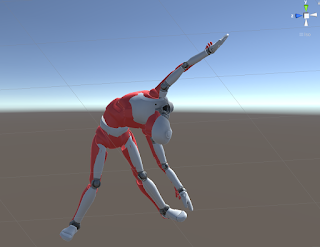

My solution was to keep the controlled robot and pair its movements to a second avatar. If desired, the avatar can be overlaid on the robot's position and the robot hidden. Here I'm connecting the armature from the recently updated 3rd Person Unity Starter Assets to the AR Foundation samples ControlledRobot asset.

The primary requirement is to map the avatar bones to the controlled robot bones; the code then synchronizes the respective joint rotations. I'm sure there are more elegant solutions, but I thought I would post my version on GitHub in case it helps anyone else attempting this. Feel free to make suggestions or point me towards better solutions that may be out there.
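To give a rough idea of the approach, here is a minimal sketch of bone mapping and rotation syncing. It is not the exact code from my repository: the class name `AvatarRotationSync`, the `BonePair` struct, and the idea of capturing a per-bone offset at startup are assumptions made for illustration. It assumes the target avatar is a humanoid rig with an `Animator`, that the robot bone transforms are assigned by hand in the Inspector, and that both rigs are in a comparable rest pose when `Start` runs.

```csharp
using System;
using UnityEngine;

// Hypothetical helper: copies joint rotations from the tracked robot
// onto a humanoid avatar, one mapped bone pair at a time.
public class AvatarRotationSync : MonoBehaviour
{
    [Serializable]
    public struct BonePair
    {
        public Transform robotBone;       // joint on the controlled robot
        public HumanBodyBones avatarBone; // matching humanoid bone on the avatar
    }

    [SerializeField] Animator avatarAnimator; // Animator on the humanoid avatar
    // List pairs parent-first (hips, spine, chest, ...): a parent's world
    // rotation must be set before its children's.
    [SerializeField] BonePair[] bonePairs;

    Transform[] avatarBones;
    Quaternion[] offsets;

    void Start()
    {
        avatarBones = new Transform[bonePairs.Length];
        offsets = new Quaternion[bonePairs.Length];

        for (int i = 0; i < bonePairs.Length; i++)
        {
            // Returns null if the avatar is not humanoid or lacks this bone.
            avatarBones[i] = avatarAnimator.GetBoneTransform(bonePairs[i].avatarBone);
            if (avatarBones[i] == null || bonePairs[i].robotBone == null) continue;

            // Assumption: both rigs start in a comparable rest pose, so this
            // offset absorbs the difference in bone orientations.
            offsets[i] = Quaternion.Inverse(bonePairs[i].robotBone.rotation)
                         * avatarBones[i].rotation;
        }
    }

    // LateUpdate runs after the Animator, so these rotations are not
    // overwritten by animation in the same frame.
    void LateUpdate()
    {
        for (int i = 0; i < bonePairs.Length; i++)
        {
            if (avatarBones[i] == null || bonePairs[i].robotBone == null) continue;
            avatarBones[i].rotation = bonePairs[i].robotBone.rotation * offsets[i];
        }
    }
}
```

With the offset defined this way, the avatar bone keeps its own starting orientation when the robot is in its starting pose, and then follows any rotation the robot joint makes from there. Because the ARKit rig has extra spine and neck bones, several robot joints will have no humanoid counterpart; in this sketch they are simply left unmapped.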

There are still issues with initial positions and offsets, especially when running live on an iOS device, and models lose tracking and jitter every now and then, so this is still a work in progress.

- Apple documentation: https://developer.apple.com/documentation/arkit/content_anchors/rigging_a_model_for_motion_capture
- AR Foundation Sample Project: https://github.com/Unity-Technologies/arfoundation-samples