diff --git a/.clang-format b/.clang-format

new file mode 100644

index 0000000000..17de768cbe

--- /dev/null

+++ b/.clang-format

@@ -0,0 +1,7 @@

+---

+BasedOnStyle: LLVM

+---

+Language: Cpp

+# Force pointers to the type for C++.

+DerivePointerAlignment: false

+PointerAlignment: Left

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000..503569b57a

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,5 @@

+*.pyc

+.idea

+horovod.egg-info

+dist

+build

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000..f70e1e0f41

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,249 @@

+ Horovod

+ Copyright 2017 Uber Technologies, Inc.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+

+ Horovod includes:

+

+ FlatBuffers

+ Copyright (c) 2014 Google Inc.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+

+ baidu-research/tensorflow-allreduce

+ Copyright (c) 2015, The TensorFlow Authors.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+

+

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright [yyyy] [name of copyright owner]

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

\ No newline at end of file

diff --git a/MANIFEST.in b/MANIFEST.in

new file mode 100644

index 0000000000..e24a4c600f

--- /dev/null

+++ b/MANIFEST.in

@@ -0,0 +1,2 @@

+recursive-include * *.h *.cc

+include README.md

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000..2f003bf6f8

--- /dev/null

+++ b/README.md

@@ -0,0 +1,289 @@

+# Horovod

+

+Horovod is a distributed training framework for TensorFlow. The goal of Horovod is to make distributed Deep Learning

+fast and easy to use.

+

+# Install

+

+To install Horovod:

+

+1. Install [Open MPI](https://www.open-mpi.org/).

+

+2. Install the `horovod` pip package.

+

+```bash

+$ pip install horovod

+```

+

+This basic installation is good for laptops and for getting to know Horovod.

+If you're installing Horovod on a server with GPUs, read the [Horovod on GPU](#gpu) section.

+

+# Concepts

+

+Horovod core principles are based on [MPI](http://mpi-forum.org/) concepts such as *size*, *rank*,

+*local rank*, *allreduce*, *allgather* and *broadcast*. These are best explained by example. Say we launched

+a training script on 4 servers, each having 4 GPUs. If we launched one copy of the script per GPU:

+

+1. *Size* would be the number of processes, in this case 16.

+

+2. *Rank* would be the unique process ID from 0 to 15 (*size* - 1).

+

+3. *Local rank* would be the unique process ID within the server from 0 to 3.

+

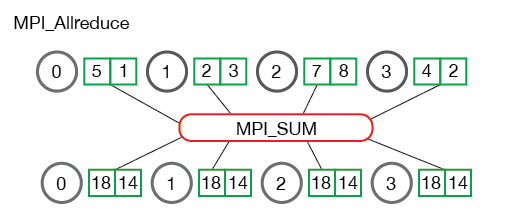

+4. *Allreduce* is an operation that aggregates data among multiple processes and distributes

+ results back to them. *Allreduce* is used to average dense tensors. Here's an illustration from the

+ [MPI Tutorial](http://mpitutorial.com/tutorials/mpi-reduce-and-allreduce/):

+

+

+

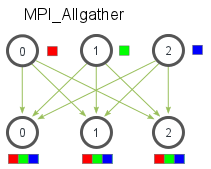

+5. *Allgather* is an operation that gathers data from all processes on every process. *Allgather* is used to collect

+ values of sparse tensors. Here's an illustration from the [MPI Tutorial](http://mpitutorial.com/tutorials/mpi-scatter-gather-and-allgather/):

+

+

+

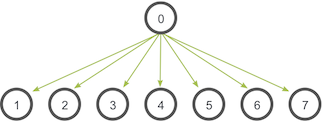

+6. *Broadcast* is an operation that broadcasts data from one process, identified by root rank, onto every other process.

+ Here's an illustration from the [MPI Tutorial](http://mpitutorial.com/tutorials/mpi-broadcast-and-collective-communication/):

+

+

+

+# Usage

+

+To use Horovod, make the following additions to your program:

+

+1. Run `hvd.init()`.

+

+2. Pin a server GPU to be used by this process using `config.gpu_options.visible_device_list`.

+ With the typical setup of one GPU per process, this can be set to *local rank*.

+

+3. Wrap optimizer in `hvd.DistributedOptimizer`. The distributed optimizer delegates gradient computation

+ to the original optimizer, averages gradients using *allreduce* or *allgather*, and then applies those averaged

+ gradients.

+

+4. Add `hvd.BroadcastGlobalVariablesHook(0)` to broadcast initial variable states from rank 0 to all other

+ processes. Alternatively, if you're not using `MonitoredTrainingSession`, you can simply execute the

+ `hvd.broadcast_global_variables` op after global variables have been initialized.

+

+Example (full MNIST training example is available [here](examples/tensorflow_mnist.py)):

+

+```python

+import tensorflow as tf

+import horovod.tensorflow as hvd

+

+

+# Initialize Horovod

+hvd.init()

+

+# Pin GPU to be used to process local rank (one GPU per process)

+config = tf.ConfigProto()

+config.gpu_options.visible_device_list = str(hvd.local_rank())

+

+# Build model...

+loss = ...

+opt = tf.train.AdagradOptimizer(0.01)

+

+# Add Horovod Distributed Optimizer

+opt = hvd.DistributedOptimizer(opt)

+

+# Add hook to broadcast variables from rank 0 to all other processes during

+# initialization.

+hooks = [hvd.BroadcastGlobalVariablesHook(0)]

+

+# Make training operation

+train_op = opt.minimize(loss)

+

+# The MonitoredTrainingSession takes care of session initialization,

+# restoring from a checkpoint, saving to a checkpoint, and closing when done

+# or an error occurs.

+with tf.train.MonitoredTrainingSession(checkpoint_dir="/tmp/train_logs",

+ config=config,

+ hooks=hooks) as mon_sess:

+ while not mon_sess.should_stop():

+ # Perform synchronous training.

+ mon_sess.run(train_op)

+```

+

+To run on a machine with 4 GPUs:

+

+```bash

+$ mpirun -np 4 python train.py

+```

+

+## Horovod on GPU

+

+To use Horovod on GPU, read the options below and see which one applies to you best.

+

+### Have GPUs?

+

+In most situations, using NCCL 2 will significantly improve performance over the CPU version. NCCL 2 provides the *allreduce*

+operation optimized for NVIDIA GPUs and a variety of networking devices, such as InfiniBand or RoCE.

+

+1. Install [NCCL 2](https://developer.nvidia.com/nccl).

+

+2. Install [Open MPI](https://www.open-mpi.org/).

+

+3. Install the `horovod` pip package.

+

+```bash

+$ HOROVOD_GPU_ALLREDUCE=NCCL pip install horovod

+```

+

+**Note**: Some networks with a high computation to communication ratio benefit from doing allreduce on CPU, even if a

+GPU version is available. Inception V3 is an example of such network. To force allreduce to happen on CPU, pass

+`device_dense='/cpu:0'` to `hvd.DistributedOptimizer`:

+

+```python

+opt = hvd.DistributedOptimizer(opt, device_dense='/cpu:0')

+```

+

+### Advanced: Have GPUs and networking with GPUDirect?

+

+[GPUDirect](https://developer.nvidia.com/gpudirect) allows GPUs to transfer memory among each other without CPU

+involvement, which significantly reduces latency and load on CPU. NCCL 2 is able to use GPUDirect automatically for

+*allreduce* operation if it detects it.

+

+Additionally, Horovod uses *allgather* and *broadcast* operations from MPI. They are used for averaging sparse tensors

+that are typically used for embeddings, and for broadcasting initial state. To speed these operations up with GPUDirect,

+make sure your MPI implementation supports CUDA and add `HOROVOD_GPU_ALLGATHER=MPI HOROVOD_GPU_BROADCAST=MPI` to the pip

+command.

+

+1. Install [NCCL 2](https://developer.nvidia.com/nccl).

+

+2. Install [Open MPI](https://www.open-mpi.org/).

+

+3. Install the `horovod` pip package.

+

+```bash

+$ HOROVOD_GPU_ALLREDUCE=NCCL HOROVOD_GPU_ALLGATHER=MPI HOROVOD_GPU_BROADCAST=MPI pip install horovod

+```

+

+**Note**: Allgather allocates an output tensor which is proportionate to the number of processes participating in the

+training. If you find yourself running out of GPU memory, you can force allreduce to happen on CPU by passing

+`device_sparse='/cpu:0'` to `hvd.DistributedOptimizer`:

+

+```python

+opt = hvd.DistributedOptimizer(opt, device_sparse='/cpu:0')

+```

+

+### Advanced: Have MPI optimized for your network?

+

+If you happen to have network hardware not supported by NCCL 2 or your MPI vendor's implementation on GPU is faster,

+you can also use the pure MPI version of *allreduce*, *allgather* and *broadcast* on GPU.

+

+1. Make sure your MPI implementation is installed.

+

+2. Install the `horovod` pip package.

+

+```bash

+$ HOROVOD_GPU_ALLREDUCE=MPI HOROVOD_GPU_ALLGATHER=MPI HOROVOD_GPU_BROADCAST=MPI pip install horovod

+```

+

+## Inference

+

+What about inference? Inference may be done outside of the Python script that was used to train the model. If you do this, it

+will not have references to the Horovod library.

+

+To run inference on a checkpoint generated by the Horovod-enabled training script you should optimize the graph and only

+keep operations necessary for a forward pass through network. The [Optimize for Inference](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/tools/optimize_for_inference.py)

+script from the TensorFlow repository will do that for you.

+

+If you want to convert your checkpoint to [Frozen Graph](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/tools/freeze_graph.py),

+you should do so after doing the optimization described above, otherwise the [Freeze Graph](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/tools/freeze_graph.py)

+script will fail to load Horovod op:

+

+```

+ValueError: No op named HorovodAllreduce in defined operations.

+```

+

+## Troubleshooting

+

+### Import TensorFlow failed during installation

+

+1. Is TensorFlow installed?

+

+If you see the error message below, it means that TensorFlow is not installed. Please install TensorFlow before installing

+Horovod.

+

+```

+error: import tensorflow failed, is it installed?

+

+Traceback (most recent call last):

+ File "/tmp/pip-OfE_YX-build/setup.py", line 29, in fully_define_extension

+ import tensorflow as tf

+ImportError: No module named tensorflow

+```

+

+2. Are the CUDA libraries available?

+

+If you see the error message below, it means that TensorFlow cannot be loaded. If you're installing Horovod into a container

+on a machine without GPUs, you may use CUDA stub drivers to work around the issue.

+

+```

+error: import tensorflow failed, is it installed?

+

+Traceback (most recent call last):

+ File "/tmp/pip-41aCq9-build/setup.py", line 29, in fully_define_extension

+ import tensorflow as tf

+ File "/usr/local/lib/python2.7/dist-packages/tensorflow/__init__.py", line 24, in

+ from tensorflow.python import *

+ File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/__init__.py", line 49, in

+ from tensorflow.python import pywrap_tensorflow

+ File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/pywrap_tensorflow.py", line 52, in

+ raise ImportError(msg)

+ImportError: Traceback (most recent call last):

+ File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/pywrap_tensorflow.py", line 41, in

+ from tensorflow.python.pywrap_tensorflow_internal import *

+ File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 28, in

+ _pywrap_tensorflow_internal = swig_import_helper()

+ File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 24, in swig_import_helper

+ _mod = imp.load_module('_pywrap_tensorflow_internal', fp, pathname, description)

+ImportError: libcuda.so.1: cannot open shared object file: No such file or directory

+```

+

+To use CUDA stub drivers:

+

+```bash

+# temporary add stub drivers to ld.so.cache

+$ ldconfig /usr/local/cuda/lib64/stubs

+

+# install Horovod, add other HOROVOD_* environment variables as necessary

+$ pip install horovod

+

+# revert to standard libraries

+$ ldconfig

+```

+

+### Running out of memory

+

+If you notice that your program is running out of GPU memory and multiple processes

+are being placed on the same GPU, it's likely that your program (or its dependencies)

+create a `tf.Session` that does not use the `config` that pins specific GPU.

+

+If possible, track down the part of program that uses these additional `tf.Session`s and pass

+the same configuration.

+

+Alternatively, you can place following snippet in the beginning of your program to ask TensorFlow

+to minimize the amount of memory it will pre-allocate on each GPU:

+

+```python

+small_cfg = tf.ConfigProto()

+small_cfg.gpu_options.allow_growth = True

+with tf.Session(config=small_cfg):

+ pass

+```

+

+As a last resort, you can **replace** setting `config.gpu_options.visible_device_list`

+with different code:

+

+```python

+# Pin GPU to be used

+import os

+os.environ['CUDA_VISIBLE_DEVICES'] = str(hvd.local_rank())

+```

+

+**Note**: Setting `CUDA_VISIBLE_DEVICES` is incompatible with `config.gpu_options.visible_device_list`.

+

+Setting `CUDA_VISIBLE_DEVICES` has additional disadvantage for GPU version - CUDA will not be able to use IPC, which

+will likely cause NCCL and MPI and to fail. In order to disable IPC in NCCL and MPI and allow it to fallback to shared

+memory, use:

+* `export NCCL_P2P_DISABLE=1` for NCCL.

+* `--mca btl_smcuda_use_cuda_ipc 0` flag for OpenMPI and similar flags for other vendors.

diff --git a/examples/tensorflow_mnist.py b/examples/tensorflow_mnist.py

new file mode 100644

index 0000000000..63795bf16a

--- /dev/null

+++ b/examples/tensorflow_mnist.py

@@ -0,0 +1,110 @@

+# Copyright 2017 Uber Technologies, Inc. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

+#!/usr/bin/env python

+

+import tensorflow as tf

+import horovod.tensorflow as hvd

+layers = tf.contrib.layers

+learn = tf.contrib.learn

+

+tf.logging.set_verbosity(tf.logging.INFO)

+

+

+def conv_model(feature, target, mode):

+ """2-layer convolution model."""

+ # Convert the target to a one-hot tensor of shape (batch_size, 10) and

+ # with a on-value of 1 for each one-hot vector of length 10.

+ target = tf.one_hot(tf.cast(target, tf.int32), 10, 1, 0)

+

+ # Reshape feature to 4d tensor with 2nd and 3rd dimensions being

+ # image width and height final dimension being the number of color channels.

+ feature = tf.reshape(feature, [-1, 28, 28, 1])

+

+ # First conv layer will compute 32 features for each 5x5 patch

+ with tf.variable_scope('conv_layer1'):

+ h_conv1 = layers.conv2d(

+ feature, 32, kernel_size=[5, 5], activation_fn=tf.nn.relu)

+ h_pool1 = tf.nn.max_pool(

+ h_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

+

+ # Second conv layer will compute 64 features for each 5x5 patch.

+ with tf.variable_scope('conv_layer2'):

+ h_conv2 = layers.conv2d(

+ h_pool1, 64, kernel_size=[5, 5], activation_fn=tf.nn.relu)

+ h_pool2 = tf.nn.max_pool(

+ h_conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

+ # reshape tensor into a batch of vectors

+ h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])

+

+ # Densely connected layer with 1024 neurons.

+ h_fc1 = layers.dropout(

+ layers.fully_connected(

+ h_pool2_flat, 1024, activation_fn=tf.nn.relu),

+ keep_prob=0.5,

+ is_training=mode == tf.contrib.learn.ModeKeys.TRAIN)

+

+ # Compute logits (1 per class) and compute loss.

+ logits = layers.fully_connected(h_fc1, 10, activation_fn=None)

+ loss = tf.losses.softmax_cross_entropy(target, logits)

+

+ return tf.argmax(logits, 1), loss

+

+

+def main(_):

+ # Initialize Horovod.

+ hvd.init()

+

+ # Download and load MNIST dataset.

+ mnist = learn.datasets.mnist.read_data_sets('MNIST-data-%d' % hvd.rank())

+

+ # Build model...

+ with tf.name_scope('input'):

+ image = tf.placeholder(tf.float32, [None, 784], name='image')

+ label = tf.placeholder(tf.float32, [None], name='label')

+ predict, loss = conv_model(image, label, tf.contrib.learn.ModeKeys.TRAIN)

+

+ opt = tf.train.RMSPropOptimizer(0.01)

+

+ # Add Horovod Distributed Optimizer.

+ opt = hvd.DistributedOptimizer(opt)

+

+ global_step = tf.contrib.framework.get_or_create_global_step()

+ train_op = opt.minimize(loss, global_step=global_step)

+

+ # BroadcastGlobalVariablesHook broadcasts variables from rank 0 to all other

+ # processes during initialization.

+ hooks = [hvd.BroadcastGlobalVariablesHook(0),

+ tf.train.StopAtStepHook(last_step=100),

+ tf.train.LoggingTensorHook(tensors={'step': global_step, 'loss': loss},

+ every_n_iter=10),

+ ]

+

+ # Pin GPU to be used to process local rank (one GPU per process)

+ config = tf.ConfigProto()

+ config.gpu_options.allow_growth = True

+ config.gpu_options.visible_device_list = str(hvd.local_rank())

+

+ # The MonitoredTrainingSession takes care of session initialization,

+ # restoring from a checkpoint, saving to a checkpoint, and closing when done

+ # or an error occurs.

+ with tf.train.SingularMonitoredSession(hooks=hooks, config=config) as mon_sess:

+ while not mon_sess.should_stop():

+ # Run a training step synchronously.

+ image_, label_ = mnist.train.next_batch(100)

+ mon_sess.run(train_op, feed_dict={image: image_, label: label_})

+

+

+if __name__ == "__main__":

+ tf.app.run()

diff --git a/examples/tensorflow_word2vec.py b/examples/tensorflow_word2vec.py

new file mode 100644

index 0000000000..bd4f2f9efa

--- /dev/null

+++ b/examples/tensorflow_word2vec.py

@@ -0,0 +1,245 @@

+# Copyright 2015 The TensorFlow Authors. All Rights Reserved.

+# Modifications copyright (C) 2017 Uber Technologies, Inc.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

+"""Basic word2vec example."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+import math

+import os

+import random

+import zipfile

+

+import numpy as np

+from six.moves import urllib

+from six.moves import xrange # pylint: disable=redefined-builtin

+import tensorflow as tf

+import horovod.tensorflow as hvd

+

+# Initialize Horovod.

+hvd.init()

+

+

+# Step 1: Download the data.

+url = 'http://mattmahoney.net/dc/text8.zip'

+

+

+def maybe_download(filename, expected_bytes):

+ """Download a file if not present, and make sure it's the right size."""

+ if not os.path.exists(filename):

+ filename, _ = urllib.request.urlretrieve(url, filename)

+ statinfo = os.stat(filename)

+ if statinfo.st_size == expected_bytes:

+ print('Found and verified', filename)

+ else:

+ print(statinfo.st_size)

+ raise Exception(

+ 'Failed to verify ' + url + '. Can you get to it with a browser?')

+ return filename

+

+filename = maybe_download('text8-%d.zip' % hvd.rank(), 31344016)

+

+

+# Read the data into a list of strings.

+def read_data(filename):

+ """Extract the first file enclosed in a zip file as a list of words."""

+ with zipfile.ZipFile(filename) as f:

+ data = tf.compat.as_str(f.read(f.namelist()[0])).split()

+ return data

+

+vocabulary = read_data(filename)

+print('Data size', len(vocabulary))

+

+# Step 2: Build the dictionary and replace rare words with UNK token.

+vocabulary_size = 50000

+

+

+def build_dataset(words, n_words):

+ """Process raw inputs into a dataset."""

+ count = [['UNK', -1]]

+ count.extend(collections.Counter(words).most_common(n_words - 1))

+ dictionary = dict()

+ for word, _ in count:

+ dictionary[word] = len(dictionary)

+ data = list()

+ unk_count = 0

+ for word in words:

+ if word in dictionary:

+ index = dictionary[word]

+ else:

+ index = 0 # dictionary['UNK']

+ unk_count += 1

+ data.append(index)

+ count[0][1] = unk_count

+ reversed_dictionary = dict(zip(dictionary.values(), dictionary.keys()))

+ return data, count, dictionary, reversed_dictionary

+

+data, count, dictionary, reverse_dictionary = build_dataset(vocabulary,

+ vocabulary_size)

+del vocabulary # Hint to reduce memory.

+print('Most common words (+UNK)', count[:5])

+print('Sample data', data[:10], [reverse_dictionary[i] for i in data[:10]])

+

+

+# Step 3: Function to generate a training batch for the skip-gram model.

+def generate_batch(batch_size, num_skips, skip_window):

+ assert num_skips <= 2 * skip_window

+ # Adjust batch_size to match num_skips

+ batch_size = batch_size // num_skips * num_skips

+ span = 2 * skip_window + 1 # [ skip_window target skip_window ]

+ # Backtrack a little bit to avoid skipping words in the end of a batch

+ data_index = random.randint(0, len(data) - span - 1)

+ batch = np.ndarray(shape=(batch_size), dtype=np.int32)

+ labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)

+ buffer = collections.deque(maxlen=span)

+ for _ in range(span):

+ buffer.append(data[data_index])

+ data_index = (data_index + 1) % len(data)

+ for i in range(batch_size // num_skips):

+ target = skip_window # target label at the center of the buffer

+ targets_to_avoid = [skip_window]

+ for j in range(num_skips):

+ while target in targets_to_avoid:

+ target = random.randint(0, span - 1)

+ targets_to_avoid.append(target)

+ batch[i * num_skips + j] = buffer[skip_window]

+ labels[i * num_skips + j, 0] = buffer[target]

+ buffer.append(data[data_index])

+ data_index = (data_index + 1) % len(data)

+ return batch, labels

+

+batch, labels = generate_batch(batch_size=8, num_skips=2, skip_window=1)

+for i in range(8):

+ print(batch[i], reverse_dictionary[batch[i]],

+ '->', labels[i, 0], reverse_dictionary[labels[i, 0]])

+

+# Step 4: Build and train a skip-gram model.

+

+max_batch_size = 128

+embedding_size = 128 # Dimension of the embedding vector.

+skip_window = 1 # How many words to consider left and right.

+num_skips = 2 # How many times to reuse an input to generate a label.

+

+# We pick a random validation set to sample nearest neighbors. Here we limit the

+# validation samples to the words that have a low numeric ID, which by

+# construction are also the most frequent.

+valid_size = 16 # Random set of words to evaluate similarity on.

+valid_window = 100 # Only pick dev samples in the head of the distribution.

+valid_examples = np.random.choice(valid_window, valid_size, replace=False)

+num_sampled = 64 # Number of negative examples to sample.

+

+graph = tf.Graph()

+

+with graph.as_default():

+

+ # Input data.

+ train_inputs = tf.placeholder(tf.int32, shape=[None])

+ train_labels = tf.placeholder(tf.int32, shape=[None, 1])

+ valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

+

+ # Look up embeddings for inputs.

+ embeddings = tf.Variable(

+ tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))

+ embed = tf.nn.embedding_lookup(embeddings, train_inputs)

+

+ # Construct the variables for the NCE loss

+ nce_weights = tf.Variable(

+ tf.truncated_normal([vocabulary_size, embedding_size],

+ stddev=1.0 / math.sqrt(embedding_size)))

+ nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

+

+ # Compute the average NCE loss for the batch.

+ # tf.nce_loss automatically draws a new sample of the negative labels each

+ # time we evaluate the loss.

+ loss = tf.reduce_mean(

+ tf.nn.nce_loss(weights=nce_weights,

+ biases=nce_biases,

+ labels=train_labels,

+ inputs=embed,

+ num_sampled=num_sampled,

+ num_classes=vocabulary_size))

+

+ # Construct the SGD optimizer using a learning rate of 1.0.

+ optimizer = tf.train.GradientDescentOptimizer(1.0)

+

+ # Add Horovod Distributed Optimizer.

+ optimizer = hvd.DistributedOptimizer(optimizer)

+

+ train_op = optimizer.minimize(loss)

+

+ # Compute the cosine similarity between minibatch examples and all embeddings.

+ norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keep_dims=True))

+ normalized_embeddings = embeddings / norm

+ valid_embeddings = tf.nn.embedding_lookup(

+ normalized_embeddings, valid_dataset)

+ similarity = tf.matmul(

+ valid_embeddings, normalized_embeddings, transpose_b=True)

+

+ # Add variable initializer.

+ init = tf.global_variables_initializer()

+

+ # Broadcast variables from rank 0 to all other processes.

+ bcast = hvd.broadcast_global_variables(0)

+

+# Step 5: Begin training.

+num_steps = 100001

+

+# Pin GPU to be used to process local rank (one GPU per process)

+config = tf.ConfigProto()

+config.gpu_options.allow_growth = True

+config.gpu_options.visible_device_list = str(hvd.local_rank())

+

+with tf.Session(graph=graph, config=config) as session:

+ # We must initialize all variables before we use them.

+ init.run()

+ bcast.run()

+ print('Initialized')

+

+ average_loss = 0

+ for step in xrange(num_steps):

+ # simulate various sentence length by randomization

+ batch_size = random.randint(max_batch_size // 2, max_batch_size)

+ batch_inputs, batch_labels = generate_batch(

+ batch_size, num_skips, skip_window)

+ feed_dict = {train_inputs: batch_inputs, train_labels: batch_labels}

+

+ # We perform one update step by evaluating the optimizer op (including it

+ # in the list of returned values for session.run()

+ _, loss_val = session.run([train_op, loss], feed_dict=feed_dict)

+ average_loss += loss_val

+

+ if step % 2000 == 0:

+ if step > 0:

+ average_loss /= 2000

+ # The average loss is an estimate of the loss over the last 2000 batches.

+ print('Average loss at step ', step, ': ', average_loss)

+ average_loss = 0

+

+ # Note that this is expensive (~20% slowdown if computed every 500 steps)

+ if step % 10000 == 0:

+ sim = similarity.eval()

+ for i in xrange(valid_size):

+ valid_word = reverse_dictionary[valid_examples[i]]

+ top_k = 8 # number of nearest neighbors

+ nearest = (-sim[i, :]).argsort()[1:top_k + 1]

+ log_str = 'Nearest to %s:' % valid_word

+ for k in xrange(top_k):

+ close_word = reverse_dictionary[nearest[k]]

+ log_str = '%s %s,' % (log_str, close_word)

+ print(log_str)

+ final_embeddings = normalized_embeddings.eval()

diff --git a/horovod/__init__.py b/horovod/__init__.py

new file mode 100644

index 0000000000..e69de29bb2

diff --git a/horovod/tensorflow/__init__.py b/horovod/tensorflow/__init__.py

new file mode 100644

index 0000000000..f859e01b95

--- /dev/null

+++ b/horovod/tensorflow/__init__.py

@@ -0,0 +1,214 @@

+# Copyright 2016 The TensorFlow Authors. All Rights Reserved.

+# Modifications copyright (C) 2017 Uber Technologies, Inc.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

+# pylint: disable=g-short-docstring-punctuation

+"""## Communicating Between Processes with MPI

+

+TensorFlow natively provides inter-device communication through send and

+receive ops and inter-node communication through Distributed TensorFlow, based

+on the same send and receive abstractions. On HPC clusters where Infiniband or

+other high-speed node interconnects are available, these can end up being

+insufficient for synchronous data-parallel training (without asynchronous

+gradient descent). This module implements a variety of MPI ops which can take

+advantage of hardware-specific MPI libraries for efficient communication.

+"""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import tensorflow as tf

+

+from horovod.tensorflow.mpi_ops import size

+from horovod.tensorflow.mpi_ops import rank

+from horovod.tensorflow.mpi_ops import local_rank

+from horovod.tensorflow.mpi_ops import allgather

+from horovod.tensorflow.mpi_ops import broadcast

+from horovod.tensorflow.mpi_ops import _allreduce

+from horovod.tensorflow.mpi_ops import init

+

+

+def allreduce(tensor, average=True, device_dense='', device_sparse=''):

+ """Perform an allreduce on a tf.Tensor or tf.IndexedSlices.

+

+ Arguments:

+ tensor: tf.Tensor, tf.Variable, or tf.IndexedSlices to reduce.

+ The shape of the input must be identical across all ranks.

+ average: If True, computes the average over all ranks.

+ Otherwise, computes the sum over all ranks.

+ device_dense: Device to be used for dense tensors. Uses GPU by default

+ if Horovod was build with HOROVOD_GPU_ALLREDUCE.

+ device_sparse: Device to be used for sparse tensors. Uses GPU by default

+ if Horovod was build with HOROVOD_GPU_ALLGATHER.

+

+ This function performs a bandwidth-optimal ring allreduce on the input

+ tensor. If the input is an tf.IndexedSlices, the function instead does an

+ allgather on the values and the indices, effectively doing an allreduce on

+ the represented tensor.

+ """

+ if isinstance(tensor, tf.IndexedSlices):

+ with tf.device(device_sparse):

+ # For IndexedSlices, do two allgathers intead of an allreduce.

+ horovod_size = tf.cast(size(), tensor.values.dtype)

+ values = allgather(tensor.values)

+ indices = allgather(tensor.indices)

+

+ # To make this operation into an average, divide all gathered values by

+ # the Horovod size.

+ new_values = tf.div(values, horovod_size) if average else values

+ return tf.IndexedSlices(new_values, indices,

+ dense_shape=tensor.dense_shape)

+ else:

+ with tf.device(device_dense):

+ horovod_size = tf.cast(size(), tensor.dtype)

+ summed_tensor = _allreduce(tensor)

+ new_tensor = (tf.div(summed_tensor, horovod_size)

+ if average else summed_tensor)

+ return new_tensor

+

+

+def broadcast_global_variables(root_rank):

+ """Broadcasts all global variables from root rank to all other processes.

+

+ Arguments:

+ root_rank: rank of the process from which global variables will be broadcasted

+ to all other processes.

+ """

+ return tf.group(*[tf.assign(var, broadcast(var, root_rank))

+ for var in tf.global_variables()])

+

+

+class BroadcastGlobalVariablesHook(tf.train.SessionRunHook):

+ """SessionRunHook that will broadcast all global variables from root rank

+ to all other processes during initialization."""

+

+ def __init__(self, root_rank, device=''):

+ """Construct a new BroadcastGlobalVariablesHook that will broadcast all

+ global variables from root rank to all other processes during initialization.

+

+ Args:

+ root_rank:

+ Rank that will send data, other ranks will receive data.

+ device:

+ Device to be used for broadcasting. Uses GPU by default

+ if Horovod was build with HOROVOD_GPU_BROADCAST.

+ """

+ self.root_rank = root_rank

+ self.bcast_op = None

+ self.device = device

+

+ def begin(self):

+ if not self.bcast_op:

+ with tf.device(self.device):

+ self.bcast_op = broadcast_global_variables(self.root_rank)

+

+ def after_create_session(self, session, coord):

+ session.run(self.bcast_op)

+

+

+class DistributedOptimizer(tf.train.Optimizer):

+ """An optimizer that wraps another tf.Optimizer, using an allreduce to

+ average gradient values before applying gradients to model weights."""

+

+ def __init__(self, optimizer, name=None, use_locking=False, device_dense='',

+ device_sparse=''):

+ """Construct a new DistributedOptimizer, which uses another optimizer

+ under the hood for computing single-process gradient values and

+ applying gradient updates after the gradient values have been averaged

+ across all the Horovod ranks.

+

+ Args:

+ optimizer:

+ Optimizer to use for computing gradients and applying updates.

+ name:

+ Optional name prefix for the operations created when applying

+ gradients. Defaults to "Distributed" followed by the provided

+ optimizer type.

+ use_locking:

+ Whether to use locking when updating variables.

+ See Optimizer.__init__ for more info.

+ device_dense:

+ Device to be used for dense tensors. Uses GPU by default

+ if Horovod was build with HOROVOD_GPU_ALLREDUCE.

+ device_sparse:

+ Device to be used for sparse tensors. Uses GPU by default

+ if Horovod was build with HOROVOD_GPU_ALLGATHER.

+ """

+ if name is None:

+ name = "Distributed{}".format(type(optimizer).__name__)

+

+ self._optimizer = optimizer

+ self._device_dense = device_dense

+ self._device_sparse = device_sparse

+ super(DistributedOptimizer, self).__init__(

+ name=name, use_locking=use_locking)

+

+ def compute_gradients(self, *args, **kwargs):

+ """Compute gradients of all trainable variables.

+

+ See Optimizer.compute_gradients() for more info.

+

+ In DistributedOptimizer, compute_gradients() is overriden to also

+ allreduce the gradients before returning them.

+ """

+ gradients = (super(DistributedOptimizer, self)

+ .compute_gradients(*args, **kwargs))

+ if size() > 1:

+ with tf.name_scope(self._name + "_Allreduce"):

+ return [(allreduce(gradient, device_dense=self._device_dense,

+ device_sparse=self._device_sparse), var)

+ for (gradient, var) in gradients]

+ else:

+ return gradients

+

+ def _apply_dense(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._apply_dense(*args, **kwargs)

+

+ def _resource_apply_dense(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._resource_apply_dense(*args, **kwargs)

+

+ def _resource_apply_sparse_duplicate_indices(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._resource_apply_sparse_duplicate_indices(*args, **kwargs)

+

+ def _resource_apply_sparse(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._resource_apply_sparse(*args, **kwargs)

+

+ def _apply_sparse_duplicate_indices(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._apply_sparse_duplicate_indices(*args, **kwargs)

+

+ def _apply_sparse(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._apply_sparse(*args, **kwargs)

+

+ def _prepare(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._prepare(*args, **kwargs)

+

+ def _create_slots(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._create_slots(*args, **kwargs)

+

+ def _valid_dtypes(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._valid_dtypes(*args, **kwargs)

+

+ def _finish(self, *args, **kwargs):

+ """Calls this same method on the underlying optimizer."""

+ return self._optimizer._finish(*args, **kwargs)

diff --git a/horovod/tensorflow/hash_vector.h b/horovod/tensorflow/hash_vector.h

new file mode 100644

index 0000000000..b2d9f30565

--- /dev/null

+++ b/horovod/tensorflow/hash_vector.h

@@ -0,0 +1,38 @@

+// Copyright 2017 Uber Technologies, Inc. All Rights Reserved.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+// =============================================================================

+

+#ifndef HOROVOD_HASH_VECTOR_H

+#define HOROVOD_HASH_VECTOR_H

+

+#include

+

+namespace std {

+

+template struct hash> {

+ typedef std::vector argument_type;

+ typedef std::size_t result_type;

+

+ result_type operator()(argument_type const& in) const {

+ size_t size = in.size();

+ size_t seed = 0;

+ for (size_t i = 0; i < size; i++)

+ seed ^= std::hash()(in[i]) + 0x9e3779b9 + (seed << 6) + (seed >> 2);

+ return seed;

+ }

+};

+

+} // namespace std

+

+#endif //HOROVOD_HASH_VECTOR_H

diff --git a/horovod/tensorflow/mpi_message.cc b/horovod/tensorflow/mpi_message.cc

new file mode 100644

index 0000000000..1a8d4d12b6

--- /dev/null

+++ b/horovod/tensorflow/mpi_message.cc

@@ -0,0 +1,246 @@

+// Copyright 2016 The TensorFlow Authors. All Rights Reserved.

+// Modifications copyright (C) 2017 Uber Technologies, Inc.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+// =============================================================================

+

+#include "mpi_message.h"

+#include "wire/mpi_message_generated.h"

+#include

+

+namespace horovod {

+namespace tensorflow {

+

+const std::string& MPIDataType_Name(MPIDataType value) {

+ switch (value) {

+ case TF_MPI_UINT8:

+ static const std::string uint8("uint8");

+ return uint8;

+ case TF_MPI_INT8:

+ static const std::string int8("int8");

+ return int8;

+ case TF_MPI_UINT16:

+ static const std::string uint16("uint16");

+ return uint16;

+ case TF_MPI_INT16:

+ static const std::string int16("int16");

+ return int16;

+ case TF_MPI_INT32:

+ static const std::string int32("int32");

+ return int32;

+ case TF_MPI_INT64:

+ static const std::string int64("int64");

+ return int64;

+ case TF_MPI_FLOAT32:

+ static const std::string float32("float32");

+ return float32;

+ case TF_MPI_FLOAT64:

+ static const std::string float64("float64");

+ return float64;

+ default:

+ static const std::string unknown("");

+ return unknown;

+ }

+}

+

+const std::string& MPIRequest::RequestType_Name(RequestType value) {

+ switch (value) {

+ case RequestType::ALLREDUCE:

+ static const std::string allreduce("ALLREDUCE");

+ return allreduce;

+ case RequestType::ALLGATHER:

+ static const std::string allgather("ALLGATHER");

+ return allgather;

+ case RequestType::BROADCAST:

+ static const std::string broadcast("BROADCAST");

+ return broadcast;

+ default:

+ static const std::string unknown("");

+ return unknown;

+ }

+}

+

+int32_t MPIRequest::request_rank() const { return request_rank_; }

+

+void MPIRequest::set_request_rank(int32_t value) { request_rank_ = value; }

+

+MPIRequest::RequestType MPIRequest::request_type() const {

+ return request_type_;

+}

+

+void MPIRequest::set_request_type(RequestType value) { request_type_ = value; }

+

+MPIDataType MPIRequest::tensor_type() const { return tensor_type_; }

+

+void MPIRequest::set_tensor_type(MPIDataType value) { tensor_type_ = value; }

+

+const std::string& MPIRequest::tensor_name() const { return tensor_name_; }

+

+void MPIRequest::set_tensor_name(const std::string& value) {

+ tensor_name_ = value;

+}

+

+int32_t MPIRequest::root_rank() const { return root_rank_; }

+

+void MPIRequest::set_root_rank(int32_t value) { root_rank_ = value; }

+

+int32_t MPIRequest::device() const { return device_; }

+

+void MPIRequest::set_device(int32_t value) { device_ = value; }

+

+const std::vector& MPIRequest::tensor_shape() const {

+ return tensor_shape_;

+}

+

+void MPIRequest::set_tensor_shape(const std::vector& value) {

+ tensor_shape_ = value;

+}

+

+void MPIRequest::add_tensor_shape(int64_t value) {

+ tensor_shape_.push_back(value);

+}

+

+void MPIRequest::ParseFromString(MPIRequest& request,

+ const std::string& input) {

+ auto obj = flatbuffers::GetRoot((uint8_t*)input.c_str());

+ request.set_request_rank(obj->request_rank());

+ request.set_request_type((MPIRequest::RequestType)obj->request_type());

+ request.set_tensor_type((MPIDataType)obj->tensor_type());

+ request.set_tensor_name(obj->tensor_name()->str());

+ request.set_root_rank(obj->root_rank());

+ request.set_device(obj->device());

+ request.set_tensor_shape(std::vector(obj->tensor_shape()->begin(),

+ obj->tensor_shape()->end()));

+}

+

+void MPIRequest::SerializeToString(MPIRequest& request, std::string& output) {

+ flatbuffers::FlatBufferBuilder builder(1024);

+ wire::MPIRequestBuilder request_builder(builder);

+ request_builder.add_request_rank(request.request_rank());

+ request_builder.add_request_type(

+ (wire::MPIRequestType)request.request_type());

+ request_builder.add_tensor_type((wire::MPIDataType)request.tensor_type());

+ request_builder.add_tensor_name(builder.CreateString(request.tensor_name()));

+ request_builder.add_root_rank(request.root_rank());

+ request_builder.add_device(request.device());

+ request_builder.add_tensor_shape(

+ builder.CreateVector(request.tensor_shape()));

+ auto obj = request_builder.Finish();

+ builder.Finish(obj);

+

+ uint8_t* buf = builder.GetBufferPointer();

+ auto size = builder.GetSize();

+ output = std::string((char*)buf, size);

+}

+

+const std::string& MPIResponse::ResponseType_Name(ResponseType value) {

+ switch (value) {

+ case ResponseType::ALLREDUCE:

+ static const std::string allreduce("ALLREDUCE");

+ return allreduce;

+ case ResponseType::ALLGATHER:

+ static const std::string allgather("ALLGATHER");

+ return allgather;

+ case ResponseType::BROADCAST:

+ static const std::string broadcast("BROADCAST");

+ return broadcast;

+ case ResponseType::ERROR:

+ static const std::string error("ERROR");

+ return error;

+ case ResponseType::DONE:

+ static const std::string done("DONE");

+ return done;

+ case ResponseType::SHUTDOWN:

+ static const std::string shutdown("SHUTDOWN");

+ return shutdown;

+ default:

+ static const std::string unknown("");

+ return unknown;

+ }

+}

+

+MPIResponse::ResponseType MPIResponse::response_type() const {

+ return response_type_;

+}

+

+void MPIResponse::set_response_type(ResponseType value) {

+ response_type_ = value;

+}

+

+const std::string& MPIResponse::tensor_name() const { return tensor_name_; }

+

+void MPIResponse::set_tensor_name(const std::string& value) {

+ tensor_name_ = value;

+}

+

+const std::string& MPIResponse::error_message() const { return error_message_; }

+

+void MPIResponse::set_error_message(const std::string& value) {

+ error_message_ = value;

+}

+

+const std::vector& MPIResponse::devices() const { return devices_; }

+

+void MPIResponse::set_devices(const std::vector& value) {

+ devices_ = value;

+}

+

+void MPIResponse::add_devices(int32_t value) { devices_.push_back(value); }

+

+const std::vector& MPIResponse::tensor_sizes() const {

+ return tensor_sizes_;

+}

+

+void MPIResponse::set_tensor_sizes(const std::vector& value) {

+ tensor_sizes_ = value;

+}

+

+void MPIResponse::add_tensor_sizes(int64_t value) {

+ tensor_sizes_.push_back(value);

+}

+

+void MPIResponse::ParseFromString(MPIResponse& response,

+ const std::string& input) {

+ auto obj = flatbuffers::GetRoot((uint8_t*)input.c_str());

+ response.set_response_type((MPIResponse::ResponseType)obj->response_type());

+ response.set_tensor_name(obj->tensor_name()->str());

+ response.set_error_message(obj->error_message()->str());

+ response.set_devices(

+ std::vector(obj->devices()->begin(), obj->devices()->end()));

+ response.set_tensor_sizes(std::vector(obj->tensor_sizes()->begin(),

+ obj->tensor_sizes()->end()));

+}

+

+void MPIResponse::SerializeToString(MPIResponse& response,

+ std::string& output) {

+ flatbuffers::FlatBufferBuilder builder(1024);

+ wire::MPIResponseBuilder response_builder(builder);

+ response_builder.add_response_type(

+ (wire::MPIResponseType)response.response_type());

+ response_builder.add_tensor_name(

+ builder.CreateString(response.tensor_name()));

+ response_builder.add_error_message(

+ builder.CreateString(response.error_message()));

+ response_builder.add_devices(builder.CreateVector(response.devices()));

+ response_builder.add_tensor_sizes(

+ builder.CreateVector(response.tensor_sizes()));

+ auto obj = response_builder.Finish();

+ builder.Finish(obj);

+

+ uint8_t* buf = builder.GetBufferPointer();

+ auto size = builder.GetSize();

+ output = std::string((char*)buf, size);

+}

+

+} // namespace tensorflow

+} // namespace horovod

diff --git a/horovod/tensorflow/mpi_message.h b/horovod/tensorflow/mpi_message.h

new file mode 100644

index 0000000000..6da559219a

--- /dev/null

+++ b/horovod/tensorflow/mpi_message.h

@@ -0,0 +1,142 @@

+// Copyright 2016 The TensorFlow Authors. All Rights Reserved.

+// Modifications copyright (C) 2017 Uber Technologies, Inc.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+// =============================================================================

+

+#ifndef HOROVOD_MPI_MESSAGE_H

+#define HOROVOD_MPI_MESSAGE_H

+

+#include

+#include

+

+namespace horovod {

+namespace tensorflow {

+

+enum MPIDataType {

+ TF_MPI_UINT8 = 0,

+ TF_MPI_INT8 = 1,

+ TF_MPI_UINT16 = 2,

+ TF_MPI_INT16 = 3,

+ TF_MPI_INT32 = 4,

+ TF_MPI_INT64 = 5,

+ TF_MPI_FLOAT32 = 6,

+ TF_MPI_FLOAT64 = 7

+};

+

+const std::string& MPIDataType_Name(MPIDataType value);

+

+// An MPIRequest is a message sent from a rank greater than zero to the

+// coordinator (rank zero), informing the coordinator of an operation that

+// the rank wants to do and the tensor that it wants to apply the operation to.

+class MPIRequest {

+public:

+ enum RequestType { ALLREDUCE = 0, ALLGATHER = 1, BROADCAST = 2 };

+

+ static const std::string& RequestType_Name(RequestType value);

+

+ // The request rank is necessary to create a consistent ordering of results,

+ // for example in the allgather where the order of outputs should be sorted

+ // by rank.

+ int32_t request_rank() const;

+ void set_request_rank(int32_t value);

+

+ RequestType request_type() const;

+ void set_request_type(RequestType value);

+

+ MPIDataType tensor_type() const;

+ void set_tensor_type(MPIDataType value);

+

+ const std::string& tensor_name() const;

+ void set_tensor_name(const std::string& value);

+

+ int32_t root_rank() const;

+ void set_root_rank(int32_t value);

+

+ int32_t device() const;

+ void set_device(int32_t value);

+

+ const std::vector& tensor_shape() const;

+ void set_tensor_shape(const std::vector& value);

+ void add_tensor_shape(int64_t value);

+

+ static void ParseFromString(MPIRequest& request, const std::string& input);

+ static void SerializeToString(MPIRequest& request, std::string& output);

+

+private:

+ int32_t request_rank_;

+ RequestType request_type_;

+ MPIDataType tensor_type_;

+ int32_t root_rank_;

+ int32_t device_;

+ std::string tensor_name_;

+ std::vector tensor_shape_;

+};

+

+// An MPIResponse is a message sent from the coordinator (rank zero) to a rank

+// greater than zero, informing the rank of an operation should be performed

+// now. If the operation requested would result in an error (for example, due

+// to a type or shape mismatch), then the MPIResponse can contain an error and

+// an error message instead. Finally, an MPIResponse can be a DONE message (if

+// there are no more tensors to reduce on this tick of the background loop) or

+// SHUTDOWN if all MPI processes should shut down.

+class MPIResponse {

+public:

+ enum ResponseType {

+ ALLREDUCE = 0,

+ ALLGATHER = 1,

+ BROADCAST = 2,

+ ERROR = 3,

+ DONE = 4,

+ SHUTDOWN = 5

+ };

+

+ static const std::string& ResponseType_Name(ResponseType value);

+

+ ResponseType response_type() const;

+ void set_response_type(ResponseType value);

+

+ // Empty if the type is DONE or SHUTDOWN.

+ const std::string& tensor_name() const;

+ void set_tensor_name(const std::string& value);

+

+ // Empty unless response_type is ERROR.

+ const std::string& error_message() const;

+ void set_error_message(const std::string& value);

+

+ const std::vector& devices() const;

+ void set_devices(const std::vector& value);

+ void add_devices(int32_t value);

+

+ // Empty unless response_type is ALLGATHER.

+ // These tensor sizes are the dimension zero sizes of all the input matrices,

+ // indexed by the rank.

+ const std::vector& tensor_sizes() const;

+ void set_tensor_sizes(const std::vector& value);

+ void add_tensor_sizes(int64_t value);

+

+ static void ParseFromString(MPIResponse& response, const std::string& input);

+ static void SerializeToString(MPIResponse& response, std::string& output);

+

+private:

+ ResponseType response_type_;

+ std::string tensor_name_;

+ std::string error_message_;

+ std::vector devices_;

+ std::vector tensor_sizes_;

+};

+

+} // namespace tensorflow

+} // namespace horovod

+

+#endif // HOROVOD_MPI_MESSAGE_H

diff --git a/horovod/tensorflow/mpi_ops.cc b/horovod/tensorflow/mpi_ops.cc

new file mode 100644

index 0000000000..27ee4b94b9

--- /dev/null

+++ b/horovod/tensorflow/mpi_ops.cc

@@ -0,0 +1,1451 @@

+// Copyright 2016 The TensorFlow Authors. All Rights Reserved.

+// Modifications copyright (C) 2017 Uber Technologies, Inc.

+//

+// Licensed under the Apache License, Version 2.0 (the "License");

+// you may not use this file except in compliance with the License.

+// You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+// =============================================================================

+

+#include

+#include

+#include

+

+#include "tensorflow/core/framework/op.h"

+#include "tensorflow/core/framework/op_kernel.h"

+#include "tensorflow/core/framework/shape_inference.h"

+

+#define EIGEN_USE_THREADS

+

+#if HAVE_CUDA

+#include "tensorflow/stream_executor/stream.h"

+#include

+#endif

+

+#if HAVE_NCCL

+#include

+#endif

+

+#define OMPI_SKIP_MPICXX

+#include "mpi.h"

+#include "mpi_message.h"

+#include "hash_vector.h"

+

+/*

+ * Allreduce, Allgather and Broadcast Ops for TensorFlow.

+ *

+ * TensorFlow natively provides inter-device communication through send and

+ * receive ops and inter-node communication through Distributed TensorFlow,

+ * based on the same send and receive abstractions. These end up being

+ * insufficient for synchronous data-parallel training on HPC clusters where

+ * Infiniband or other high-speed interconnects are available. This module

+ * implements MPI ops for allgather, allreduce and broadcast, which do

+ * optimized gathers, reductions and broadcasts and can take advantage of

+ * hardware-optimized communication libraries through the MPI implementation.

+ *

+ * The primary logic of the allreduce, allgather and broadcast are in MPI and

+ * NCCL implementations. The background thread which facilitates MPI operations

+ * is run in BackgroundThreadLoop(). The provided ops are:

+ * – HorovodAllreduce:

+ * Perform an allreduce on a Tensor, returning the sum

+ * across all MPI processes in the global communicator.

+ * – HorovodAllgather:

+ * Perform an allgather on a Tensor, returning the concatenation of

+ * the tensor on the first dimension across all MPI processes in the

+ * global communicator.

+ * - HorovodBroadcast:

+ * Perform a broadcast on a Tensor, broadcasting Tensor

+ * value from root rank to all other ranks.

+ *

+ * Additionally, this library provides C APIs to initialize Horovod and query

+ * rank, local rank and world size. These are used in Python directly through

+ * ctypes.

+ */

+

+using namespace tensorflow;

+

+namespace horovod {

+namespace tensorflow {

+

+namespace {

+

+// Device ID used for CPU.

+#define CPU_DEVICE_ID -1

+

+// Use void pointer for ready event if CUDA is not present to avoid linking

+// error.

+#if HAVE_CUDA

+#define GPU_EVENT_IF_CUDA perftools::gputools::Event*

+#else

+#define GPU_EVENT_IF_CUDA void*

+#endif

+

+// A callback to call after the MPI communication completes. Since the

+// allreduce and allgather ops are asynchronous, this callback is what resumes

+// computation after the reduction is completed.

+typedef std::function StatusCallback;

+

+// Table storing Tensors to be reduced, keyed by unique name.

+// This table contains everything necessary to do the reduction.

+typedef struct {

+ // Operation context.

+ OpKernelContext* context;

+ // Input tensor.

+ Tensor tensor;

+ // Pre-allocated output tensor.

+ Tensor* output;

+ // Root rank for broadcast operation.

+ int root_rank;

+ // Event indicating that data is ready.

+ GPU_EVENT_IF_CUDA ready_event;

+ // GPU to do reduction on, or CPU_DEVICE_ID in case of CPU.

+ int device;

+ // A callback to call with the status.

+ StatusCallback callback;

+} TensorTableEntry;

+typedef std::unordered_map TensorTable;

+

+// Table for storing Tensor metadata on rank zero. This is used for error

+// checking, stall checking and size calculations, as well as determining

+// when a reduction is ready to be done (when all nodes are ready to do it).

+typedef std::unordered_map<

+ std::string,

+ std::tuple, std::chrono::system_clock::time_point>>

+ MessageTable;

+

+// The global state required for the MPI ops.

+//

+// MPI is a library that stores a lot of global per-program state and often

+// requires running on a single thread. As a result, we have to have a single

+// background thread responsible for all MPI operations, and communicate with

+// that background thread through global state.

+struct HorovodGlobalState {

+ // An atomic boolean which is set to true when background thread is started.

+ // This ensures that only one background thread is spawned.

+ std::atomic_flag initialize_flag = ATOMIC_FLAG_INIT;

+

+ // A mutex that needs to be used whenever MPI operations are done.

+ std::mutex mutex;

+

+ // Tensors waiting to be allreduced or allgathered.

+ TensorTable tensor_table;

+

+ // Queue of MPI requests waiting to be sent to the coordinator node.

+ std::queue message_queue;

+

+ // Background thread running MPI communication.

+ std::thread background_thread;

+

+ // Whether the background thread should shutdown.

+ bool shut_down = false;

+

+ // Only exists on the coordinator node (rank zero). Maintains a count of

+ // how many nodes are ready to allreduce every tensor (keyed by tensor

+ // name) and time point when tensor started allreduce op.

+ std::unique_ptr message_table;

+

+ // Time point when coordinator last checked for stalled tensors.

+ std::chrono::system_clock::time_point last_stall_check;

+

+ // Whether MPI_Init has been completed on the background thread.

+ bool initialization_done = false;

+

+ // The MPI rank, local rank, and size.

+ int rank = 0;

+ int local_rank = 0;

+ int size = 1;

+

+// The CUDA stream used for data transfers and within-allreduce operations.

+// A naive implementation would use the TensorFlow StreamExecutor CUDA

+// stream. However, the allreduce and allgather require doing memory copies

+// and kernel executions (for accumulation of values on the GPU). However,

+// the subsequent operations must wait for those operations to complete,

+// otherwise MPI (which uses its own stream internally) will begin the data

+// transfers before the CUDA calls are complete. In order to wait for those

+// CUDA operations, if we were using the TensorFlow stream, we would have to

+// synchronize that stream; however, other TensorFlow threads may be

+// submitting more work to that stream, so synchronizing on it can cause the

+// allreduce to be delayed, waiting for compute totally unrelated to it in

+// other parts of the graph. Overlaying memory transfers and compute during

+// backpropagation is crucial for good performance, so we cannot use the

+// TensorFlow stream, and must use our own stream.

+#if HAVE_NCCL

+ std::unordered_map streams;

+ std::unordered_map, ncclComm_t> nccl_comms;

+#endif

+

+ ~HorovodGlobalState() {