diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index a7f938fa662f..ab42a9a1b00e 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -58,6 +58,8 @@

- sections:

- local: using-diffusers/textual_inversion_inference

title: Textual inversion

+ - local: using-diffusers/ip_adapter

+ title: IP-Adapter

- local: training/distributed_inference

title: Distributed inference with multiple GPUs

- local: using-diffusers/reusing_seeds

diff --git a/docs/source/en/api/loaders/ip_adapter.md b/docs/source/en/api/loaders/ip_adapter.md

index 18da05122fc4..8805092d92fc 100644

--- a/docs/source/en/api/loaders/ip_adapter.md

+++ b/docs/source/en/api/loaders/ip_adapter.md

@@ -12,11 +12,11 @@ specific language governing permissions and limitations under the License.

# IP-Adapter

-[IP-Adapter](https://hf.co/papers/2308.06721) is a lightweight adapter that enables prompting a diffusion model with an image. This method decouples the cross-attention layers of the image and text features. The image features are generated from an image encoder. Files generated from IP-Adapter are only ~100MBs.

+[IP-Adapter](https://hf.co/papers/2308.06721) is a lightweight adapter that enables prompting a diffusion model with an image. This method decouples the cross-attention layers of the image and text features. The image features are generated from an image encoder.

-Learn how to load an IP-Adapter checkpoint and image in the [IP-Adapter](../../using-diffusers/loading_adapters#ip-adapter) loading guide.

+Learn how to load an IP-Adapter checkpoint and image in the IP-Adapter [loading](../../using-diffusers/loading_adapters#ip-adapter) guide, and you can see how to use it in the [usage](../../using-diffusers/ip_adapter) guide.

diff --git a/docs/source/en/using-diffusers/ip_adapter.md b/docs/source/en/using-diffusers/ip_adapter.md

new file mode 100644

index 000000000000..b37ef15fc6af

--- /dev/null

+++ b/docs/source/en/using-diffusers/ip_adapter.md

@@ -0,0 +1,467 @@

+

+

+# IP-Adapter

+

+[IP-Adapter](https://hf.co/papers/2308.06721) is an image prompt adapter that can be plugged into diffusion models to enable image prompting without any changes to the underlying model. Furthermore, this adapter can be reused with other models finetuned from the same base model and it can be combined with other adapters like [ControlNet](../using-diffusers/controlnet). The key idea behind IP-Adapter is the *decoupled cross-attention* mechanism which adds a separate cross-attention layer just for image features instead of using the same cross-attention layer for both text and image features. This allows the model to learn more image-specific features.

+

+> [!TIP]

+> Learn how to load an IP-Adapter in the [Load adapters](../using-diffusers/loading_adapters#ip-adapter) guide, and make sure you check out the [IP-Adapter Plus](../using-diffusers/loading_adapters#ip-adapter-plus) section which requires manually loading the image encoder.

+

+This guide will walk you through using IP-Adapter for various tasks and use cases.

+

+## General tasks

+

+Let's take a look at how to use IP-Adapter's image prompting capabilities with the [`StableDiffusionXLPipeline`] for tasks like text-to-image, image-to-image, and inpainting. We also encourage you to try out other pipelines such as Stable Diffusion, LCM-LoRA, ControlNet, T2I-Adapter, or AnimateDiff!

+

+In all the following examples, you'll see the [`~loaders.IPAdapterMixin.set_ip_adapter_scale`] method. This method controls the amount of text or image conditioning to apply to the model. A value of `1.0` means the model is only conditioned on the image prompt. Lowering this value encourages the model to produce more diverse images, but they may not be as aligned with the image prompt. Typically, a value of `0.5` achieves a good balance between the two prompt types and produces good results.

+

+

+

+

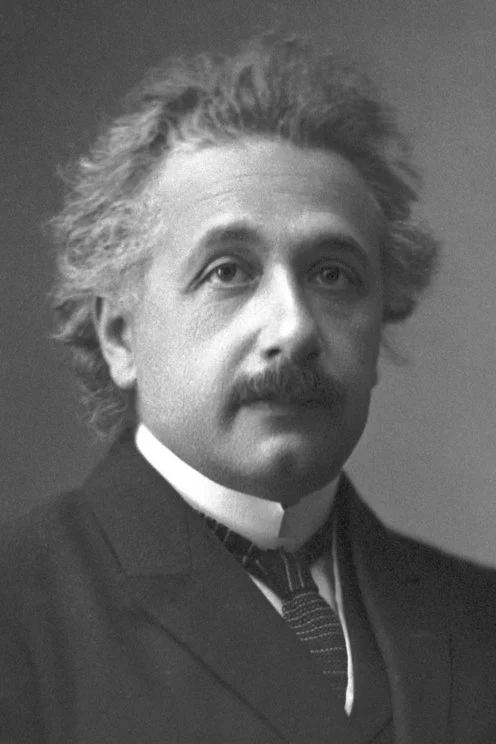

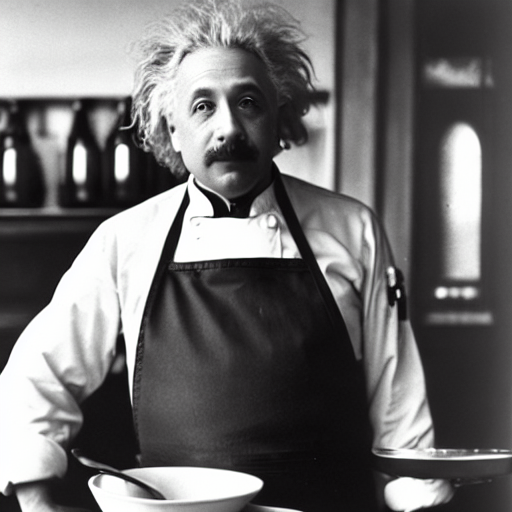

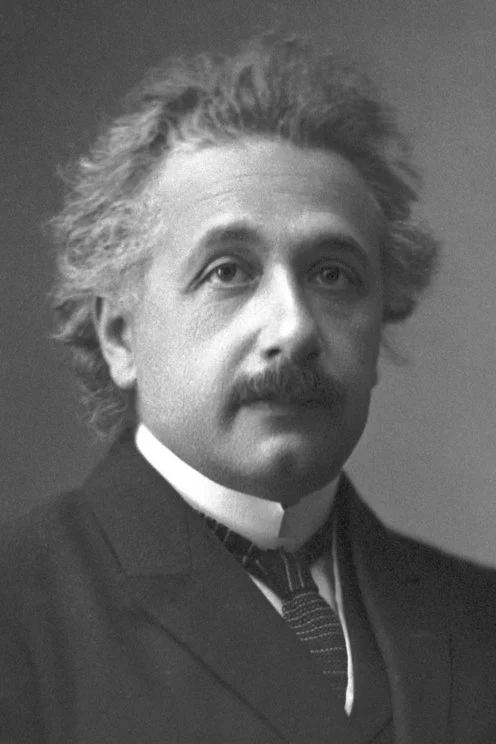

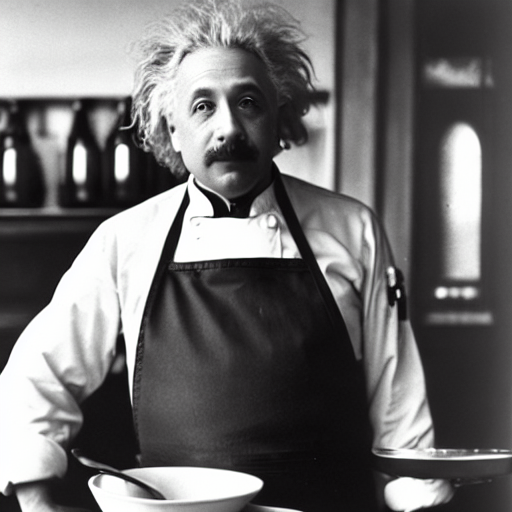

+Crafting the precise text prompt to generate the image you want can be difficult because it may not always capture what you'd like to express. Adding an image alongside the text prompt helps the model better understand what it should generate and can lead to more accurate results.

+

+Load a Stable Diffusion XL (SDXL) model and insert an IP-Adapter into the model with the [`~loaders.IPAdapterMixin.load_ip_adapter`] method. Use the `subfolder` parameter to load the SDXL model weights.

+

+```py

+from diffusers import AutoPipelineForText2Image

+from diffusers.utils import load_image

+import torch

+

+pipeline = AutoPipelineForText2Image.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16).to("cuda")

+pipeline.load_ip_adapter("h94/IP-Adapter", subfolder="sdxl_models", weight_name="ip-adapter_sdxl.bin")

+pipeline.set_ip_adapter_scale(0.6)

+```

+

+Create a text prompt and load an image prompt before passing them to the pipeline to generate an image.

+

+```py

+image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/ip_adapter_diner.png")

+generator = torch.Generator(device="cpu").manual_seed(0)

+images = pipeline(

+ prompt="a polar bear sitting in a chair drinking a milkshake",

+ ip_adapter_image=image,

+ negative_prompt="deformed, ugly, wrong proportion, low res, bad anatomy, worst quality, low quality",

+ num_inference_steps=100,

+ generator=generator,

+).images

+images[0]

+```

+

+

+

+

+

IP-Adapter image

+

+

+

+

generated image

+

+

+

+

+

original image

+

+

+

+

IP-Adapter image

+

+

+

+

generated image

+

+

+

+

+

original image

+

+

+

+

IP-Adapter image

+

+

+

+

generated image

+

+

+

+

+

IP-Adapter image

+

+

+

+

generated video

+

+

+

+

+

IP-Adapter image

+

+

+

+

generated image

+

+

+

+

+

IP-Adapter image of face

+

+

+

+

IP-Adapter style images

+

+

+

+

+

+

+

+

+

IP-Adapter image

+

+

+

+

depth map

+

+

+

+

-

-

-You can use the [`~loaders.IPAdapterMixin.set_ip_adapter_scale`] method to adjust the text prompt and image prompt condition ratio. If you're only using the image prompt, you should set the scale to `1.0`. You can lower the scale to get more generation diversity, but it'll be less aligned with the prompt.

-`scale=0.5` can achieve good results in most cases when you use both text and image prompts.

-

-

-IP-Adapter also works great with Image-to-Image and Inpainting pipelines. See below examples of how you can use it with Image-to-Image and Inpaint.

-

-

-

-

-```py

-from diffusers import AutoPipelineForImage2Image

-import torch

-from diffusers.utils import load_image

-

-pipeline = AutoPipelineForImage2Image.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16).to("cuda")

-

-image = load_image("https://huggingface.co/datasets/YiYiXu/testing-images/resolve/main/vermeer.jpg")

-ip_image = load_image("https://huggingface.co/datasets/YiYiXu/testing-images/resolve/main/river.png")

+### IP-Adapter Plus

-pipeline.load_ip_adapter("h94/IP-Adapter", subfolder="models", weight_name="ip-adapter_sd15.bin")

-generator = torch.Generator(device="cpu").manual_seed(33)

-images = pipeline(

- prompt='best quality, high quality',

- image = image,

- ip_adapter_image=ip_image,

- num_inference_steps=50,

- generator=generator,

- strength=0.6,

-).images

-images[0]

-```

+IP-Adapter relies on an image encoder to generate image features. If the IP-Adapter repository contains a `image_encoder` subfolder, the image encoder is automatically loaded and registed to the pipeline. Otherwise, you'll need to explicitly load the image encoder with a [`~transformers.CLIPVisionModelWithProjection`] model and pass it to the pipeline.

-

-

+This is the case for *IP-Adapter Plus* checkpoints which use the ViT-H image encoder.

```py

-from diffusers import AutoPipelineForInpaint

-import torch

-from diffusers.utils import load_image

-

-pipeline = AutoPipelineForInpaint.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float).to("cuda")

-

-image = load_image("https://huggingface.co/datasets/YiYiXu/testing-images/resolve/main/inpaint_image.png")

-mask = load_image("https://huggingface.co/datasets/YiYiXu/testing-images/resolve/main/mask.png")

-ip_image = load_image("https://huggingface.co/datasets/YiYiXu/testing-images/resolve/main/girl.png")

-

-image = image.resize((512, 768))

-mask = mask.resize((512, 768))

-

-pipeline.load_ip_adapter("h94/IP-Adapter", subfolder="models", weight_name="ip-adapter_sd15.bin")

-

-generator = torch.Generator(device="cpu").manual_seed(33)

-images = pipeline(

- prompt='best quality, high quality',

- image = image,

- mask_image = mask,

- ip_adapter_image=ip_image,

- negative_prompt="monochrome, lowres, bad anatomy, worst quality, low quality",

- num_inference_steps=50,

- generator=generator,

- strength=0.5,

-).images

-images[0]

-```

-

-

-

-

-IP-Adapters can also be used with [SDXL](../api/pipelines/stable_diffusion/stable_diffusion_xl.md)

-

-```python

-from diffusers import AutoPipelineForText2Image

-from diffusers.utils import load_image

-import torch

-

-pipeline = AutoPipelineForText2Image.from_pretrained(

- "stabilityai/stable-diffusion-xl-base-1.0",

- torch_dtype=torch.float16

-).to("cuda")

-

-image = load_image("https://huggingface.co/datasets/sayakpaul/sample-datasets/resolve/main/watercolor_painting.jpeg")

-

-pipeline.load_ip_adapter("h94/IP-Adapter", subfolder="sdxl_models", weight_name="ip-adapter_sdxl.bin")

-

-generator = torch.Generator(device="cpu").manual_seed(33)

-image = pipeline(

- prompt="best quality, high quality",

- ip_adapter_image=image,

- negative_prompt="monochrome, lowres, bad anatomy, worst quality, low quality",

- num_inference_steps=25,

- generator=generator,

-).images[0]

-image.save("sdxl_t2i.png")

-```

-

-

-

-

-

input image

-

-

-

-

adapted image

-

-

-

-

-

input image

-

-

-

-

output image

-

-

-

-

style input image

-

-

-

-

face input image

-

-

-

-

output image

-

-

-

-

-

input image

-

-

-

-

adapted image

-

-

+

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+ +

+ +

+  +

+  +

+

-

-  -

-  -

-  -

-