diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 2f4651ba3417..828a79f63cfd 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -29,10 +29,8 @@

title: Load community pipelines and components

- local: using-diffusers/schedulers

title: Load schedulers and models

- - local: using-diffusers/using_safetensors

- title: Load safetensors

- local: using-diffusers/other-formats

- title: Load different Stable Diffusion formats

+ title: Model files and layouts

- local: using-diffusers/loading_adapters

title: Load adapters

- local: using-diffusers/push_to_hub

diff --git a/docs/source/en/using-diffusers/other-formats.md b/docs/source/en/using-diffusers/other-formats.md

index 13efe7854ddc..c0f2a76b459d 100644

--- a/docs/source/en/using-diffusers/other-formats.md

+++ b/docs/source/en/using-diffusers/other-formats.md

@@ -10,167 +10,260 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

specific language governing permissions and limitations under the License.

-->

-# Load different Stable Diffusion formats

+# Model files and layouts

[[open-in-colab]]

-Stable Diffusion models are available in different formats depending on the framework they're trained and saved with, and where you download them from. Converting these formats for use in 🤗 Diffusers allows you to use all the features supported by the library, such as [using different schedulers](schedulers) for inference, [building your custom pipeline](write_own_pipeline), and a variety of techniques and methods for [optimizing inference speed](../optimization/opt_overview).

+Diffusion models are saved in various file types and organized in different layouts. Diffusers stores model weights as safetensors files in the *Diffusers-multifolder* layout, and it also supports loading files (like safetensors and ckpt files) from the *single-file* layout, which is commonly used in the diffusion ecosystem.

-

+Each layout has its own benefits and use cases, and this guide will show you how to load the different files and layouts, and how to convert them.

-We highly recommend using the `.safetensors` format because it is more secure than traditional pickled files which are vulnerable and can be exploited to execute any code on your machine (learn more in the [Load safetensors](using_safetensors) guide).

+## Files

-

+PyTorch model weights are typically saved with Python's [pickle](https://docs.python.org/3/library/pickle.html) utility as ckpt or bin files. However, pickle is not secure: a pickled file can contain malicious code that is executed as soon as the file is loaded. This vulnerability is a serious concern given the popularity of model sharing. To address this security issue, the [Safetensors](https://hf.co/docs/safetensors) library was developed as a secure alternative to pickle, and it saves models as safetensors files.
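To make the pickle risk concrete, here is a minimal, harmless sketch using only the standard library. The `Payload` class is hypothetical and not part of Diffusers. It shows that unpickling calls whatever callable the file's author chose, and a benign `eval("6 * 7")` stands in for what could be an arbitrary shell command.

```python
import pickle


class Payload:
    """Hypothetical object whose unpickling runs a callable picked by the file's author."""

    def __reduce__(self):
        # On load, pickle calls the returned callable with the given arguments.
        # A harmless expression is used here; an attacker could run anything.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())  # bytes that a malicious "checkpoint" could contain
result = pickle.loads(blob)     # loading executes eval("6 * 7") instead of rebuilding Payload
print(result)                   # prints 42
```

Note that `pickle.loads` never returns a `Payload` instance here. It returns the result of the injected call, which is why loading untrusted pickled weights is unsafe.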

-This guide will show you how to convert other Stable Diffusion formats to be compatible with 🤗 Diffusers.

+### safetensors

-## PyTorch .ckpt

+> [!TIP]

+> Learn more about the design decisions and why safetensors files are preferred for saving and loading model weights in the [Safetensors audited as really safe and becoming the default](https://blog.eleuther.ai/safetensors-security-audit/) blog post.

-The checkpoint - or `.ckpt` - format is commonly used to store and save models. The `.ckpt` file contains the entire model and is typically several GBs in size. While you can load and use a `.ckpt` file directly with the [`~StableDiffusionPipeline.from_single_file`] method, it is generally better to convert the `.ckpt` file to 🤗 Diffusers so both formats are available.

+[Safetensors](https://hf.co/docs/safetensors) is a safe and fast file format for securely storing and loading tensors. Safetensors restricts the header size to limit certain types of attacks, supports lazy loading (useful for distributed setups), and has generally faster loading speeds.
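The header and lazy-loading points are easier to see with the file layout in hand. A safetensors file starts with an 8-byte little-endian header size, followed by a JSON header that records each tensor's dtype, shape, and byte offsets, followed by the raw tensor bytes. The sketch below uses only the standard library to write and read a toy file in that layout. The helper names (`write_safetensors`, `read_header`, `read_tensor_bytes`) are hypothetical and are not the Safetensors library API, which should be used for real files.

```python
import json
import struct
import tempfile
from pathlib import Path


def write_safetensors(path, tensors):
    """Write {name: (dtype, shape, raw_bytes)} using the safetensors file layout."""
    header, buffer = {}, b""
    for name, (dtype, shape, raw) in tensors.items():
        # Offsets are relative to the start of the data section, not the file.
        header[name] = {
            "dtype": dtype,
            "shape": shape,
            "data_offsets": [len(buffer), len(buffer) + len(raw)],
        }
        buffer += raw
    header_bytes = json.dumps(header).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header_bytes)))  # 8-byte header size
        f.write(header_bytes)                          # JSON header
        f.write(buffer)                                # raw tensor data


def read_header(path):
    """Read only the header; no tensor data is touched."""
    with open(path, "rb") as f:
        (size,) = struct.unpack("<Q", f.read(8))
        return size, json.loads(f.read(size))


def read_tensor_bytes(path, name):
    """Lazily read a single tensor by seeking straight to its byte range."""
    size, header = read_header(path)
    begin, end = header[name]["data_offsets"]
    with open(path, "rb") as f:
        f.seek(8 + size + begin)
        return f.read(end - begin)


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "toy.safetensors"
    write_safetensors(path, {
        "weight": ("F32", [2, 2], struct.pack("<4f", 0.0, 1.0, 2.0, 3.0)),
        "bias": ("F32", [2], struct.pack("<2f", 1.0, 2.0)),
    })
    header_size, header = read_header(path)
    bias = struct.unpack("<2f", read_tensor_bytes(path, "bias"))

print(header["bias"], bias)
```

Because the header is plain JSON with explicit offsets, a reader can inspect a file or load one tensor without executing any code and without reading the rest of the data.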

-There are two options for converting a `.ckpt` file: use a Space to convert the checkpoint or convert the `.ckpt` file with a script.

+Make sure you have the [Safetensors](https://hf.co/docs/safetensors) library installed.

-### Convert with a Space

+```py

+!pip install safetensors

+```

-The easiest and most convenient way to convert a `.ckpt` file is to use the [SD to Diffusers](https://huggingface.co/spaces/diffusers/sd-to-diffusers) Space. You can follow the instructions on the Space to convert the `.ckpt` file.

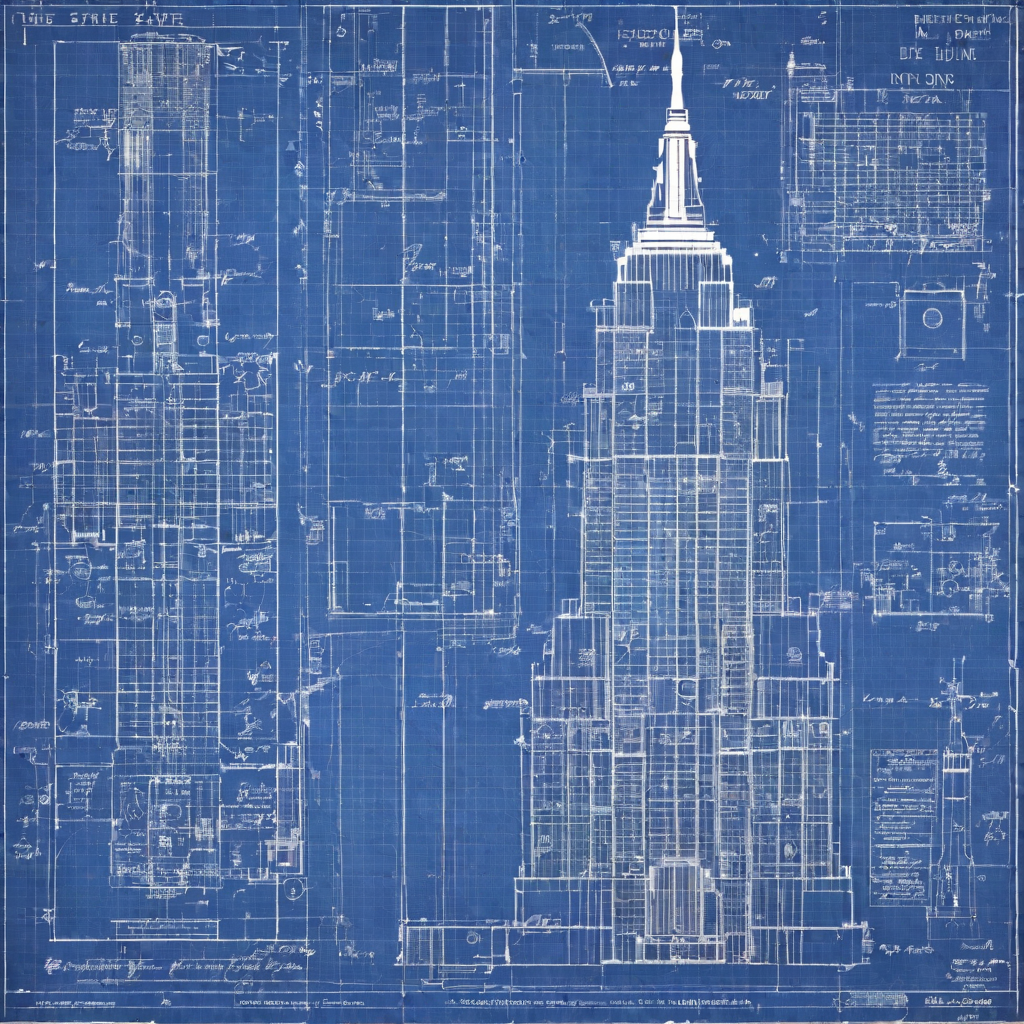

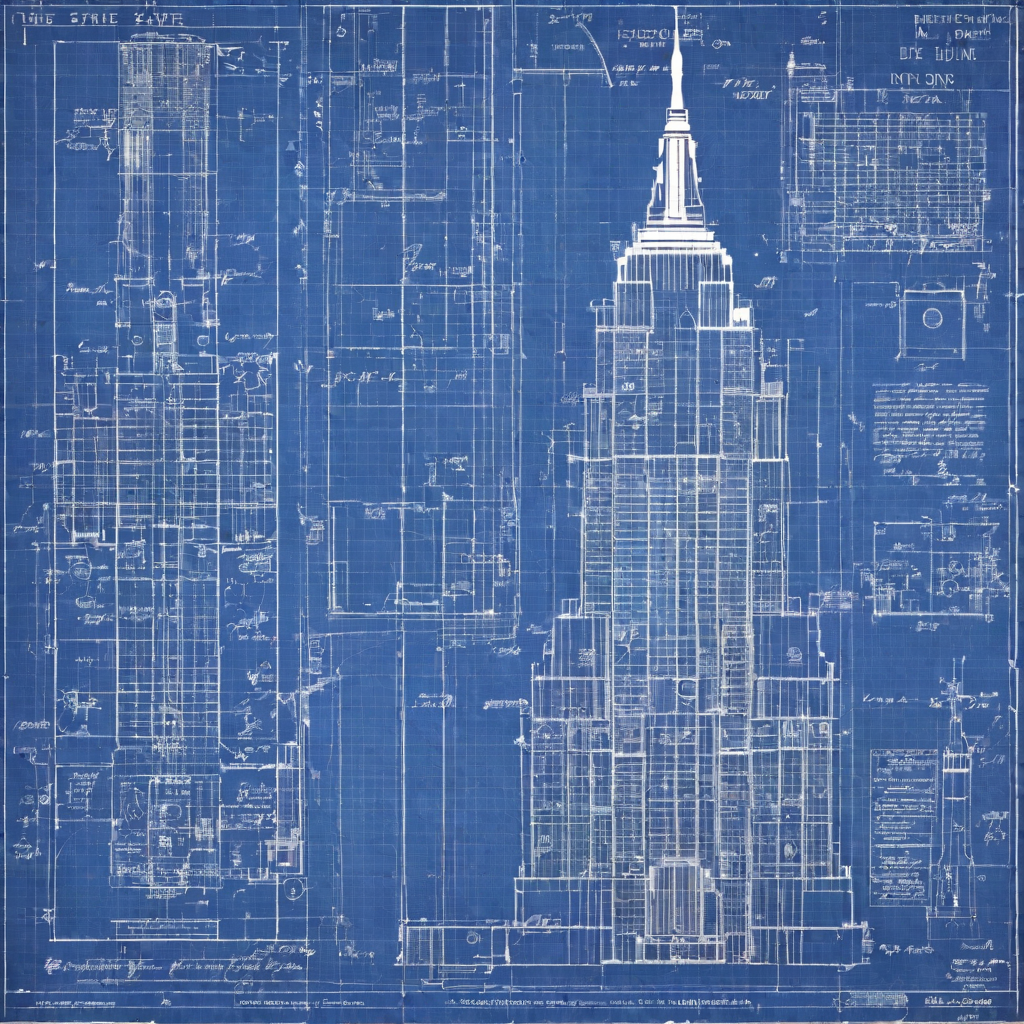

+Safetensors stores weights in a safetensors file. Diffusers loads safetensors files by default if they're available and the Safetensors library is installed. There are two ways safetensors files can be organized:

-This approach works well for basic models, but it may struggle with more customized models. You'll know the Space failed if it returns an empty pull request or error. In this case, you can try converting the `.ckpt` file with a script.

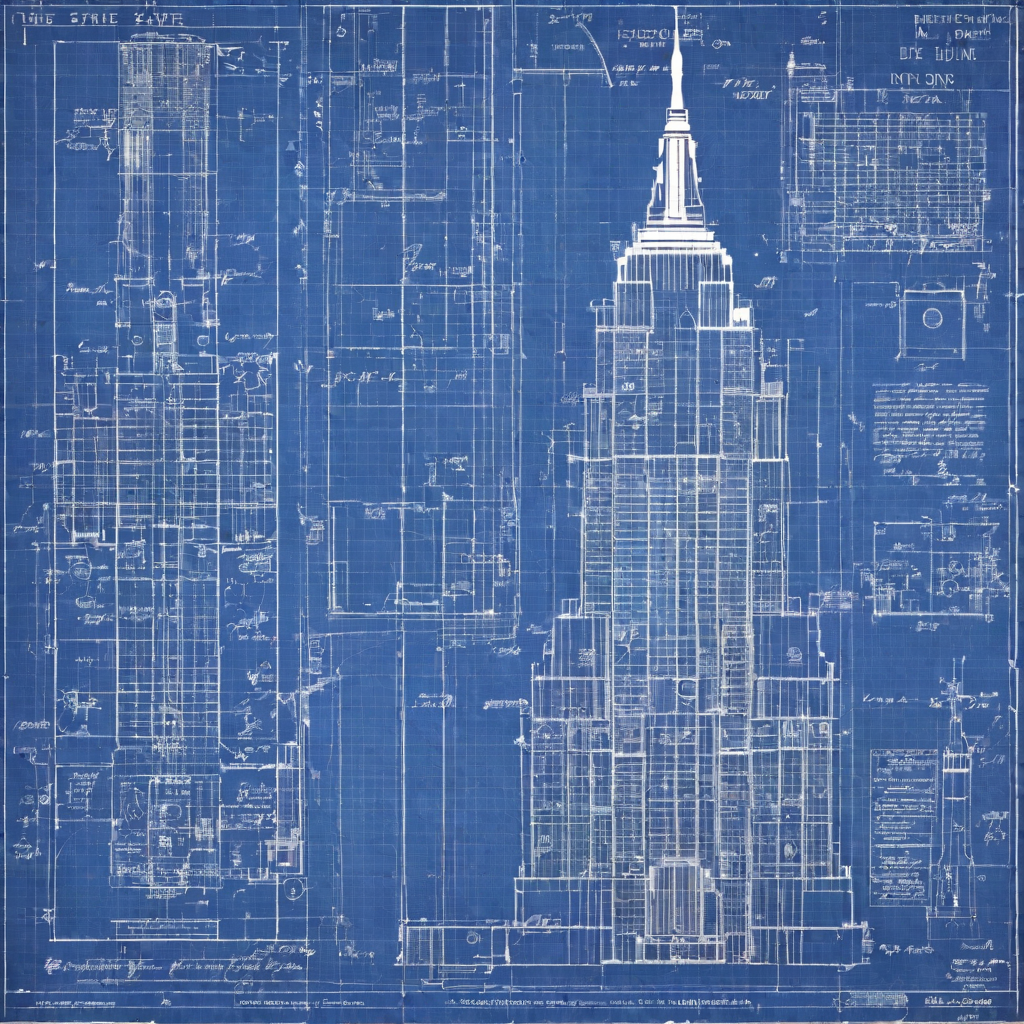

+1. Diffusers-multifolder layout: there may be several separate safetensors files, one for each pipeline component (text encoder, UNet, VAE), organized in subfolders (check out the [runwayml/stable-diffusion-v1-5](https://hf.co/runwayml/stable-diffusion-v1-5/tree/main) repository as an example)

+2. single-file layout: all the model weights may be saved in a single file (check out the [WarriorMama777/OrangeMixs](https://hf.co/WarriorMama777/OrangeMixs/tree/main/Models/AbyssOrangeMix) repository as an example)
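As a rough illustration of the two layouts, the sketch below guesses which one a local path follows. It assumes a multifolder checkpoint is a directory containing `model_index.json` (the pipeline index file found in Diffusers repositories) and that a single-file checkpoint is one `.safetensors` or `.ckpt` file. The `guess_layout` helper is hypothetical and not part of Diffusers.

```python
import tempfile
from pathlib import Path

# File extensions treated as single-file checkpoints in this sketch.
SINGLE_FILE_SUFFIXES = {".safetensors", ".ckpt"}


def guess_layout(path):
    """Return 'multifolder', 'single-file', or 'unknown' for a local path."""
    path = Path(path)
    if path.is_dir() and (path / "model_index.json").is_file():
        return "multifolder"
    if path.is_file() and path.suffix in SINGLE_FILE_SUFFIXES:
        return "single-file"
    return "unknown"


with tempfile.TemporaryDirectory() as tmp:
    # Toy multifolder checkpoint: an index file plus one subfolder per component.
    multi = Path(tmp) / "stable-diffusion"
    (multi / "unet").mkdir(parents=True)
    (multi / "model_index.json").write_text("{}")
    (multi / "unet" / "diffusion_pytorch_model.safetensors").write_bytes(b"")

    # Toy single-file checkpoint: everything in one file.
    single = Path(tmp) / "AbyssOrangeMix.safetensors"
    single.write_bytes(b"")

    multi_layout = guess_layout(multi)
    single_layout = guess_layout(single)
    other_layout = guess_layout(Path(tmp) / "missing")

print(multi_layout, single_layout, other_layout)
```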

-### Convert with a script

+

+

-🤗 Diffusers provides a [conversion script](https://github.com/huggingface/diffusers/blob/main/scripts/convert_original_stable_diffusion_to_diffusers.py) for converting `.ckpt` files. This approach is more reliable than the Space above.

+Use the [`~DiffusionPipeline.from_pretrained`] method to load a model with safetensors files stored in multiple folders.

-Before you start, make sure you have a local clone of 🤗 Diffusers to run the script and log in to your Hugging Face account so you can open pull requests and push your converted model to the Hub.

+```py

+from diffusers import DiffusionPipeline

-```bash

-huggingface-cli login

+pipeline = DiffusionPipeline.from_pretrained(

+ "runwayml/stable-diffusion-v1-5",

+ use_safetensors=True

+)

```

-To use the script:

+

+

-1. Git clone the repository containing the `.ckpt` file you want to convert. For this example, let's convert this [TemporalNet](https://huggingface.co/CiaraRowles/TemporalNet) `.ckpt` file:

+Use the [`~loaders.FromSingleFileMixin.from_single_file`] method to load a model with all the weights stored in a single safetensors file.

-```bash

-git lfs install

-git clone https://huggingface.co/CiaraRowles/TemporalNet

+```py

+from diffusers import StableDiffusionPipeline

+

+pipeline = StableDiffusionPipeline.from_single_file(

+ "https://huggingface.co/WarriorMama777/OrangeMixs/blob/main/Models/AbyssOrangeMix/AbyssOrangeMix.safetensors"

+)

```

-2. Open a pull request on the repository where you're converting the checkpoint from:

+

+

-```bash

-cd TemporalNet && git fetch origin refs/pr/13:pr/13

-git checkout pr/13

-```

+#### LoRA files

-3. There are several input arguments to configure in the conversion script, but the most important ones are:

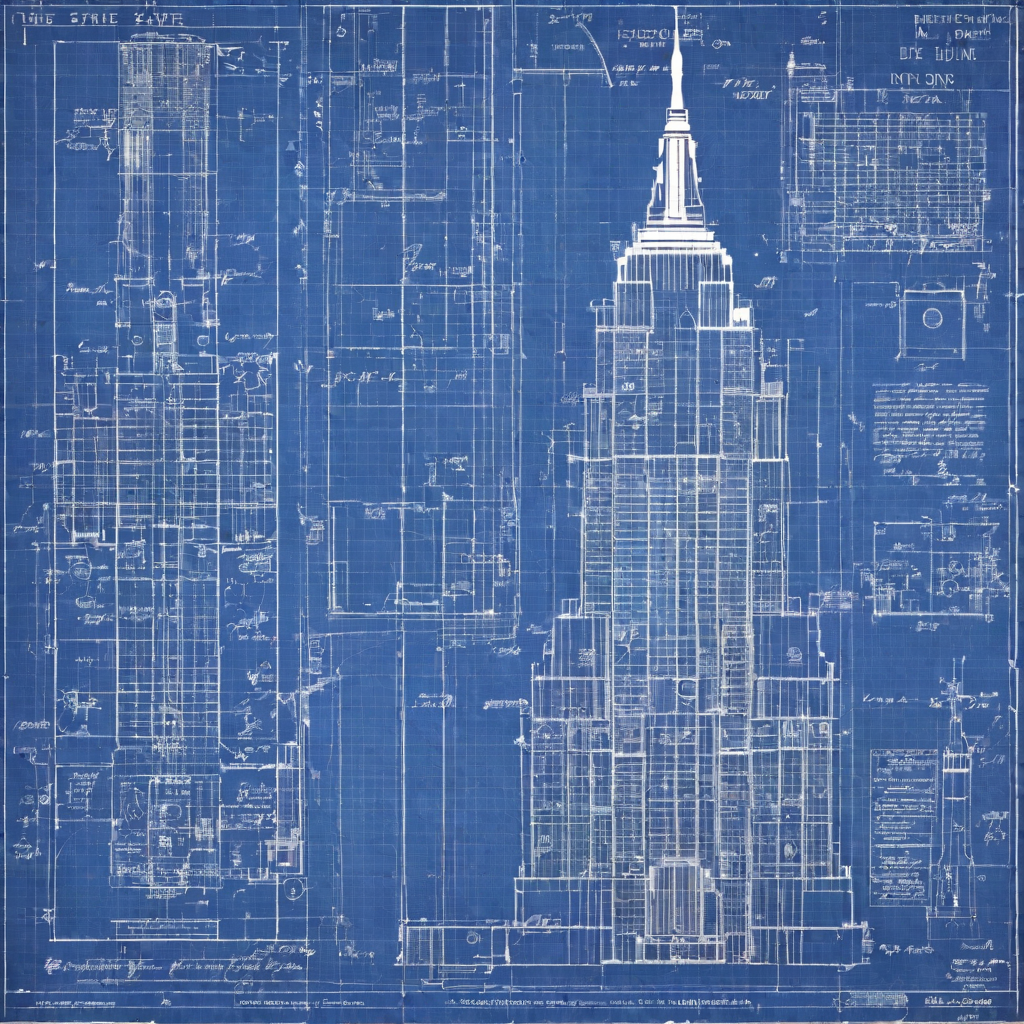

+[LoRA](https://hf.co/docs/peft/conceptual_guides/adapter#low-rank-adaptation-lora) adapters are lightweight, fast, and easy to train, which makes them especially popular for generating images in a certain way or style. These adapters are commonly stored in a safetensors file, and are widely shared on model sharing platforms like [civitai](https://civitai.com/).
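To give a sense of why these adapter files are so small, the sketch below counts parameters under the usual low-rank formulation, where the update to a `d_out x d_in` weight matrix is stored as the product of a `d_out x r` matrix and an `r x d_in` matrix. The layer size and rank are illustrative assumptions, not values taken from any particular model.

```python
def full_update_params(d_out, d_in):
    """Parameters needed to store a full update for one weight matrix."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Parameters needed for the two low-rank factors (d_out x r and r x d_in)."""
    return rank * (d_out + d_in)


# Illustrative numbers only: a square 768 x 768 projection with rank 4.
full = full_update_params(768, 768)
lora = lora_params(768, 768, rank=4)
ratio = full // lora
print(full, lora, ratio)  # prints 589824 6144 96
```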

- - `checkpoint_path`: the path to the `.ckpt` file to convert.

- - `original_config_file`: a YAML file defining the configuration of the original architecture. If you can't find this file, try searching for the YAML file in the GitHub repository where you found the `.ckpt` file.

- - `dump_path`: the path to the converted model.

+LoRAs are loaded into a base model with the [`~loaders.LoraLoaderMixin.load_lora_weights`] method.

- For example, you can take the `cldm_v15.yaml` file from the [ControlNet](https://github.com/lllyasviel/ControlNet/tree/main/models) repository because the TemporalNet model is a Stable Diffusion v1.5 and ControlNet model.

+```py

+from diffusers import StableDiffusionXLPipeline

+import torch

-4. Now you can run the script to convert the `.ckpt` file:

+# base model

+pipeline = StableDiffusionXLPipeline.from_pretrained(

+ "Lykon/dreamshaper-xl-1-0", torch_dtype=torch.float16, variant="fp16"

+).to("cuda")

-```bash

-python ../diffusers/scripts/convert_original_stable_diffusion_to_diffusers.py --checkpoint_path temporalnetv3.ckpt --original_config_file cldm_v15.yaml --dump_path ./ --controlnet

-```

+# download LoRA weights

+!wget https://civitai.com/api/download/models/168776 -O blueprintify.safetensors

-5. Once the conversion is done, upload your converted model and test out the resulting [pull request](https://huggingface.co/CiaraRowles/TemporalNet/discussions/13)!

+# load LoRA weights

+pipeline.load_lora_weights(".", weight_name="blueprintify.safetensors")

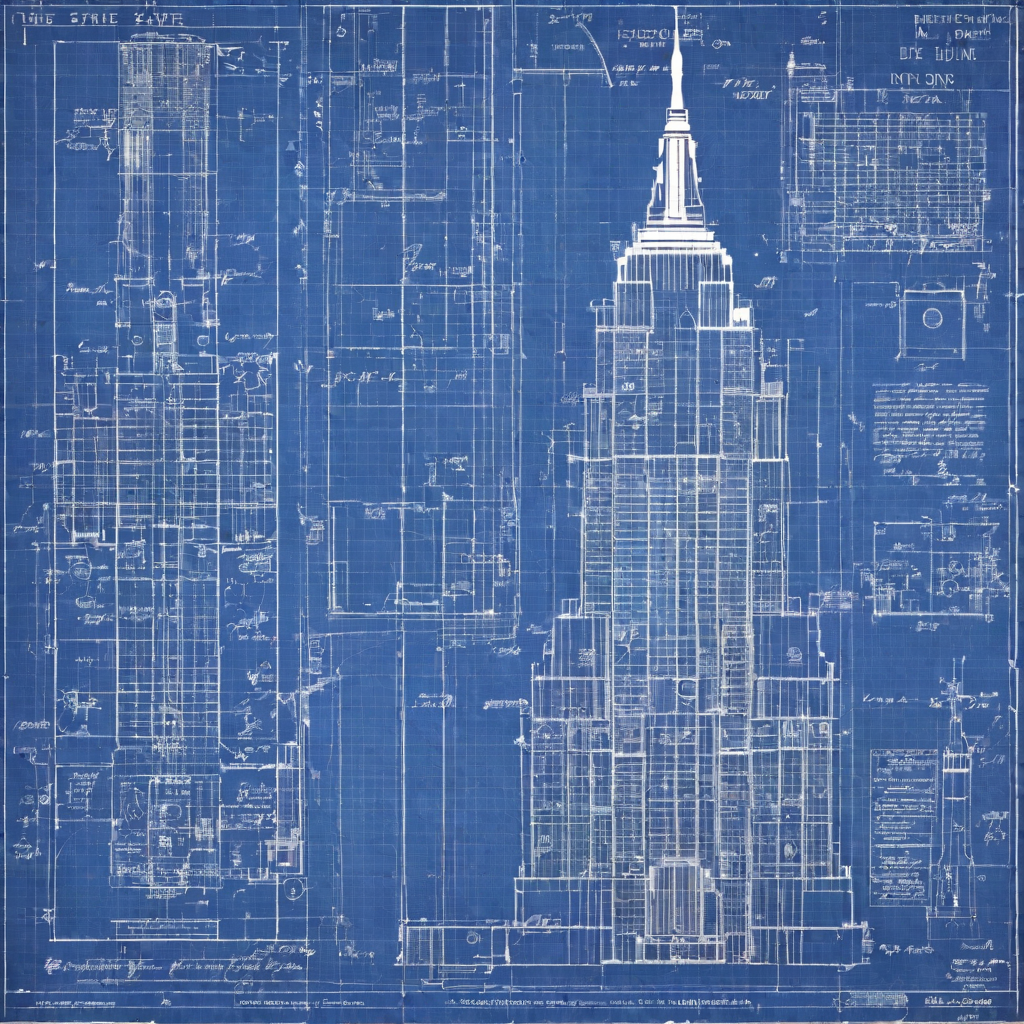

+prompt = "bl3uprint, a highly detailed blueprint of the empire state building, explaining how to build all parts, many txt, blueprint grid backdrop"

+negative_prompt = "lowres, cropped, worst quality, low quality, normal quality, artifacts, signature, watermark, username, blurry, more than one bridge, bad architecture"

-```bash

-git push origin pr/13:refs/pr/13

+image = pipeline(

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ generator=torch.manual_seed(0),

+).images[0]

+image

```

-## Keras .pb or .h5

-

-

+

+

+

+

+

+

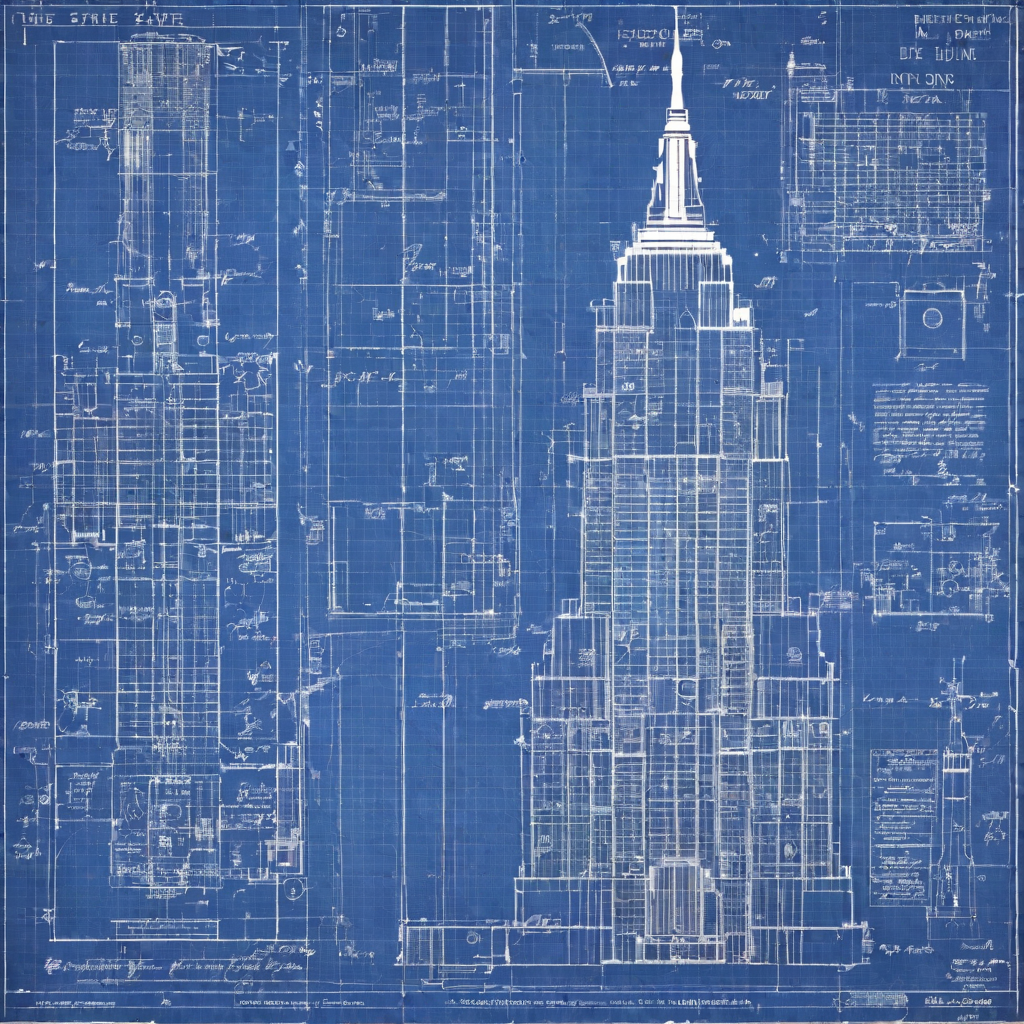

multifolder layout

+

+

+

+

UNet subfolder

+

+

+

+

-

-

+

+ +

+ +

+ +

+  +

+  +

+ -

-