Ratelimit:All requests are responded with 429 when redis down #12115

Comments

|

@fnp927: Thanks for reporting this! |

|

@gargnupur This was reported in the Slack channel as well. When Redis failures occur, we send back a default quota result, which has 0 granted quota. It looks like that was intentional. I'm not sure what the correct behavior is, but it is probably better to send back an error and let the policy fail_open/fail_closed setting take over. |
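The difference between the two behaviors discussed here can be sketched roughly as follows. This is a simplified model, not the Mixer or proxy code (Mixer is written in Go and the proxy in C++; Python is used only for brevity). The names `QuotaResult`, `alloc_default_on_failure`, `alloc_error_on_failure`, and `http_status` are hypothetical, and the 503 returned in the fail-closed case is an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Code(Enum):
    # Standard gRPC status codes.
    OK = 0
    RESOURCE_EXHAUSTED = 8
    UNAVAILABLE = 14


@dataclass
class QuotaResult:
    # Hypothetical, simplified stand-in for a quota result.
    granted_amount: int = 0
    status: Code = Code.OK


def alloc_default_on_failure(backend_up: bool, requested: int) -> QuotaResult:
    """Reported behavior: a backend failure yields the default result (0 granted)."""
    if not backend_up:
        return QuotaResult()
    # The toy backend always grants the request when it is reachable.
    return QuotaResult(granted_amount=requested)


def alloc_error_on_failure(backend_up: bool, requested: int) -> QuotaResult:
    """Proposed behavior: a backend failure is reported as an error status."""
    if not backend_up:
        return QuotaResult(status=Code.UNAVAILABLE)
    return QuotaResult(granted_amount=requested)


def http_status(result: QuotaResult, requested: int, fail_open: bool) -> int:
    """Client-side decision; the fail-open policy only applies to errors."""
    if result.status is not Code.OK:
        return 200 if fail_open else 503  # 503 is an assumption
    return 200 if result.granted_amount >= requested else 429


# Backend down, fail-open enabled:
print(http_status(alloc_default_on_failure(False, 1), 1, fail_open=True))
print(http_status(alloc_error_on_failure(False, 1), 1, fail_open=True))
```

In this model the zero-grant default is indistinguishable from a genuinely exhausted quota, so the fail-open policy never gets a chance to apply.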

|

Thanks, Doug, for the info. Where do we define the policy fail_open/fail_closed setting? Are you referring to the network_fail_open policy here: https://sourcegraph.com/github.com/istio/proxy@ce051fe8e813e19c87cabae5fb12643d4c063edb/-/blob/include/istio/mixerclient/options.h#L42:8 ? |

|

@gargnupur Yes, and that setting is populated from https://github.com/istio/api/blob/master/mesh/v1alpha1/config.proto#L61 and pod annotations. Propagating an error to the client seems reasonable to me. |

* Add a status field in QuotaResult. This is so that we can return a status when we are not able to fetch quota, and the check call can fail based on the fail-open policy set by the user. Issue: istio/istio#12115
* Fix build

|

@gargnupur what's left here? |

|

Reopening PR #12507 and using the status field added in PR istio/api@657d9f2. Will try to get to these next week. |

* Add a Status field in CheckResponse Quota Result. This is the QuotaResult that is used by grpcServer in istio.io/istio. We can set the status when we are not able to fetch quota, so that the check call can fail in the proxy based on the fail-open policy set by the user. Ref: istio/istio#12115
* Run make proto-commit

|

@kyessenov @douglas-reid could one of you guide #15390 into master and then cherrypick into the release-1.3 branch? |

|

I've approved that PR, but I'm not 100% certain that that is the only bit needed. I think we'll want to check the proxy code to be sure that the quota status returns are being handled appropriately. |

|

@duderino after some basic manual testing with the PR changes, I'm convinced that we need a change to: https://github.com/istio/proxy/blob/master/src/istio/mixerclient/client_impl.cc#L286-L288 as well. |

|

@douglas-reid how should that code change? |

|

@duderino istio/proxy#2401 is my attempt at getting it right. Curious for your thoughts. |

|

@douglas-reid lgtm. Looks like a nice addition to 1.3. Please close this issue if there is no more work remaining. |

|

I believe this can be closed now. |

|

@kyessenov @douglas-reid @gargnupur We are still seeing this issue on istio 1.3.5 after a bit of stagnant traffic. Steps to reproduce:

The |

|

It seems the proxy sometimes uses its cache and makes a "RESOURCE_EXHAUSTED:Quota is exhausted" decision locally, instead of making the remote call, retrieving the UNAVAILABLE status from the Mixer server, and failing open. |
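To make the suspected failure mode concrete, here is a toy model of a client-side quota cache that prefetches tokens. It is not the proxy's actual quota_cache implementation. The class `PrefetchCache`, its methods, and the 503 for fail-closed are all assumptions made for illustration.

```python
class PrefetchCache:
    """Toy model of a client-side quota cache with token prefetch."""

    def __init__(self, fetch, fail_open):
        self.fetch = fetch          # callable returning (status, granted_tokens)
        self.fail_open = fail_open
        self.tokens = 0

    def _consume(self):
        # Spend one local token if available, otherwise report exhaustion.
        if self.tokens > 0:
            self.tokens -= 1
            return 200
        return 429

    def check_cached_only(self):
        """Suspected behavior: a failed refill is ignored, so zero local
        tokens look exactly like an exhausted quota."""
        if self.tokens == 0:
            status, granted = self.fetch()
            if status == "OK":
                self.tokens = granted
        return self._consume()

    def check_with_status(self):
        """Alternative: a failed refill is surfaced and the fail-open
        policy decides the outcome."""
        if self.tokens == 0:
            status, granted = self.fetch()
            if status != "OK":
                return 200 if self.fail_open else 503  # 503 is an assumption
            self.tokens = granted
        return self._consume()


def redis_down():
    # Simulated backend outage.
    return ("UNAVAILABLE", 0)


cache = PrefetchCache(redis_down, fail_open=True)
print(cache.check_cached_only())   # decided from the empty cache
print(cache.check_with_status())   # decided by the fail-open policy
```

If the real cache behaves like `check_cached_only`, fixing only the server-side status would not be enough, which would match the reports that disabling the quota cache makes the 429s disappear.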

Some of the requests still get a 429 response when Redis restarts. Thanks for the previous work; I've already updated my Mixer to a build that includes the fix for this bug. |

|

I'm also facing the same issue with Istio 1.3.8. When Redis is up, quotas work properly. If I delete Redis, all requests get a 429 response. Mixer logs show the following:

|

|

Same issue with Istio 1.3.0, just as @idouba said. It may be caused by the cache check and prefetch. Please help check the quota_cache. |

|

I'm also facing the same issue with Istio 1.3.0. As @fnp927 @wsdlll @idouba said above, when I restart Redis, all requests during the restart get a 429 response. After disabling the quota cache, all requests during the restart get a 200 response. Is there any other way to solve this problem besides setting disable_quota_cache=true? |

|

As mentioned above, after setting disable_quota_cache=true, all requests during a Redis restart get a 200 response, but the performance drop is serious: more than 50%.

PS: the test command is as follows @douglas-reid @gargnupur any help appreciated |

|

@PeterPanHaiHai: Apologies for the late reply. |

|

Closing due to no response

Describe the bug

I applied the Redis rate limit function on my cluster and used a shell script to visit it 10 times every second. The script only prints the response status code. As the picture below shows, it works well (200 means pass and 429 means too many requests).

However, when Redis is down, all requests get a 429 response (“too many requests”).
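For readers who want to reproduce the observation, a probing loop of the kind described above might look like the following. This is not the reporter's original shell script, which is not included here. The function names are hypothetical, and the target URL has to be supplied by the caller.

```python
import time
import urllib.error
import urllib.request


def fetch_status(url, timeout=2.0):
    """Return the HTTP status code for a single GET request."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib raises for 4xx/5xx responses; the code (e.g. 429) is on the error.
        return err.code


def probe(url, count=10, interval=0.1, fetch=fetch_status):
    """Send `count` requests spaced `interval` seconds apart and
    return the list of status codes (0.1 s is roughly 10 per second)."""
    codes = []
    for _ in range(count):
        codes.append(fetch(url))
        time.sleep(interval)
    return codes
```

Calling `probe(url)` with the address of the rate-limited service and printing the result gives one status code per request. The `fetch` parameter exists only so the loop can be exercised without a live service.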

Is this design reasonable? From my point of view, the rate limit function should not be applied to the system when part of it encounters errors.

Expected behavior

Rate limiting should not be enforced when something goes wrong.

Version

Istio version : 1.0.0

Environment

Istio 1.0.0

Some configurations

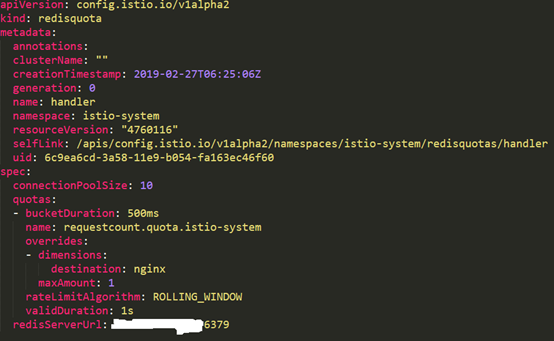

Redis quota:

quotaSpecBinding:

shell scripts

Thanks
