diff --git a/.github/workflows/checkbot.yml b/.github/workflows/checkbot.yml

index 1df8637e4..eff890ea2 100644

--- a/.github/workflows/checkbot.yml

+++ b/.github/workflows/checkbot.yml

@@ -5,9 +5,26 @@ on:

jobs:

# check that CML container builds properly

build-container:

- if: contains(github.event.comment.body, '/tests')

+ if: startsWith(github.event.comment.body, '/tests')

runs-on: [ubuntu-18.04]

steps:

+ - name: React Seen

+ uses: actions/github-script@v2

+ with:

+ script: |

+ const perm = await github.repos.getCollaboratorPermissionLevel({

+ owner: context.repo.owner, repo: context.repo.repo,

+ username: context.payload.comment.user.login})

+ if (!["admin", "write"].includes(perm.data.permission)){

+ await github.reactions.createForIssueComment({

+ owner: context.repo.owner, repo: context.repo.repo,

+ comment_id: context.payload.comment.id, content: "laugh"})

+ throw new Error("Permission denied for user " + context.payload.comment.user.login)

+ }

+ await github.reactions.createForIssueComment({

+ owner: context.repo.owner, repo: context.repo.repo,

+ comment_id: context.payload.comment.id, content: "eyes"})

+ github-token: ${{ secrets.TEST_GITHUB_TOKEN }}

- uses: actions/checkout@v2

- name: Build & Publish test image

run: |

@@ -110,9 +127,26 @@ jobs:

run: |

nvidia-smi

chatbot:

- if: contains(github.event.comment.body, '/cml-')

+ if: startsWith(github.event.comment.body, '/cml-')

runs-on: [ubuntu-latest]

steps:

+ - name: React Seen

+ uses: actions/github-script@v2

+ with:

+ script: |

+ const perm = await github.repos.getCollaboratorPermissionLevel({

+ owner: context.repo.owner, repo: context.repo.repo,

+ username: context.payload.comment.user.login})

+ if (!["admin", "write"].includes(perm.data.permission)){

+ await github.reactions.createForIssueComment({

+ owner: context.repo.owner, repo: context.repo.repo,

+ comment_id: context.payload.comment.id, content: "laugh"})

+ throw new Error("Permission denied for user " + context.payload.comment.user.login)

+ }

+ await github.reactions.createForIssueComment({

+ owner: context.repo.owner, repo: context.repo.repo,

+ comment_id: context.payload.comment.id, content: "eyes"})

+ github-token: ${{ secrets.TEST_GITHUB_TOKEN }}

- uses: actions/checkout@v2

- name: chatops

id: chatops

@@ -133,4 +167,4 @@ jobs:

run: |

npm ci

sudo npm link

- ${{steps.chatops.outputs.COMMAND}}

+ # ${{steps.chatops.outputs.COMMAND}}
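The "React Seen" step added to both chat-ops jobs gates them on the commenter's repository permission: users with `admin` or `write` get an "eyes" reaction, everyone else gets "laugh" and the step fails. A minimal standalone sketch of that logic follows. `reactSeen` is a hypothetical name, and `github`/`context` are assumed to have the shapes that `actions/github-script@v2` injects; only the two Octokit calls already used in the workflow are relied on.

```javascript
// Hypothetical standalone version of the "React Seen" permission gate.
// `github` is assumed to expose the two Octokit REST calls used in the
// workflow; `context` mirrors the issue_comment event payload shape.
const ALLOWED_PERMISSIONS = ['admin', 'write'];

async function reactSeen(github, context) {
  const { owner, repo } = context.repo;
  const { comment } = context.payload;

  const perm = await github.repos.getCollaboratorPermissionLevel({
    owner,
    repo,
    username: comment.user.login
  });
  const allowed = ALLOWED_PERMISSIONS.includes(perm.data.permission);

  // Await the reaction so it is posted before a denial fails the step.
  await github.reactions.createForIssueComment({
    owner,
    repo,
    comment_id: comment.id,
    content: allowed ? 'eyes' : 'laugh'
  });

  if (!allowed) {
    throw new Error(`Permission denied for user ${comment.user.login}`);
  }
}
```

Passing a stub client in place of `github` makes the gate testable without calling the GitHub API.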

diff --git a/README.md b/README.md

index 366d8b725..85dbe0867 100644

--- a/README.md

+++ b/README.md

@@ -1,5 +1,5 @@

-  +

+

[](https://github.com/iterative/setup-cml)

@@ -11,10 +11,10 @@ projects. Use it to automate parts of your development workflow, including model

training and evaluation, comparing ML experiments across your project history,

and monitoring changing datasets.

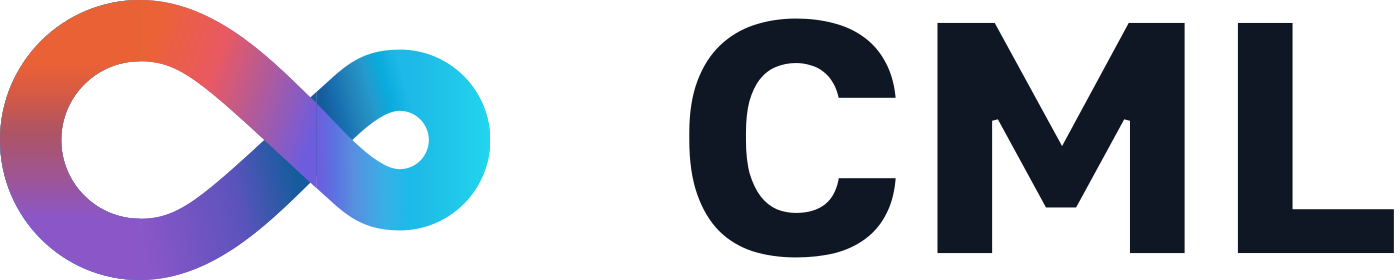

- _On every pull request, CML helps you

-automatically train and evaluate models, then generates a visual report with

-results and metrics. Above, an example report for a

-[neural style transfer model](https://github.com/iterative/cml_cloud_case)._

+ _On

+every pull request, CML helps you automatically train and evaluate models, then

+generates a visual report with results and metrics. Above, an example report for

+a [neural style transfer model](https://github.com/iterative/cml_cloud_case)._

We built CML with these principles in mind:

@@ -149,7 +149,7 @@ cml-publish graph.png --md >> report.md

> [you will need to create a Personal Access Token](https://github.com/iterative/cml/wiki/CML-with-GitLab#variables)

> for this example to work.

-

+

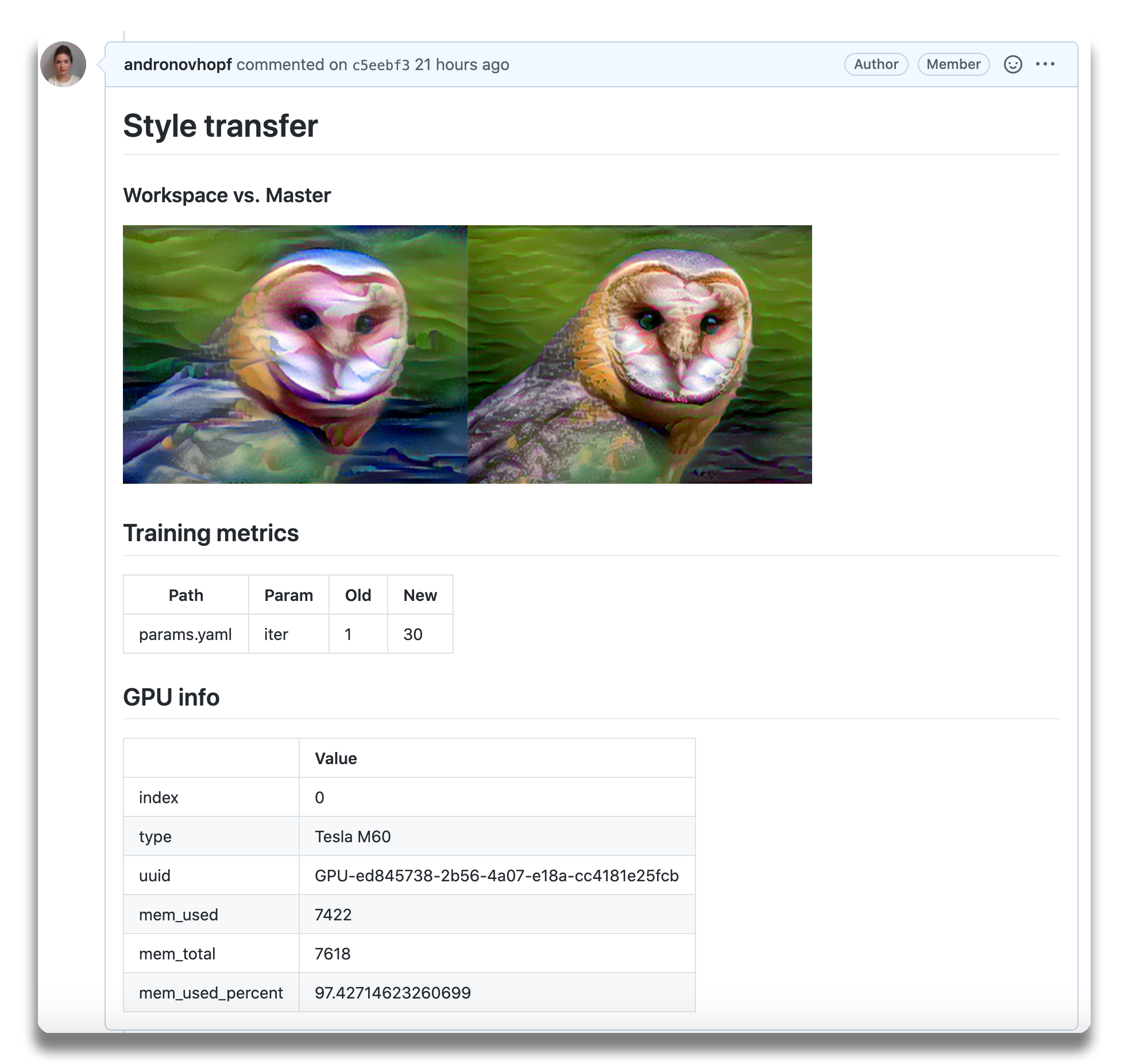

> :warning: The following steps can all be done in the GitHub browser interface.

> However, to follow along with the commands, we recommend cloning your fork to

@@ -197,13 +197,13 @@ git push origin experiment

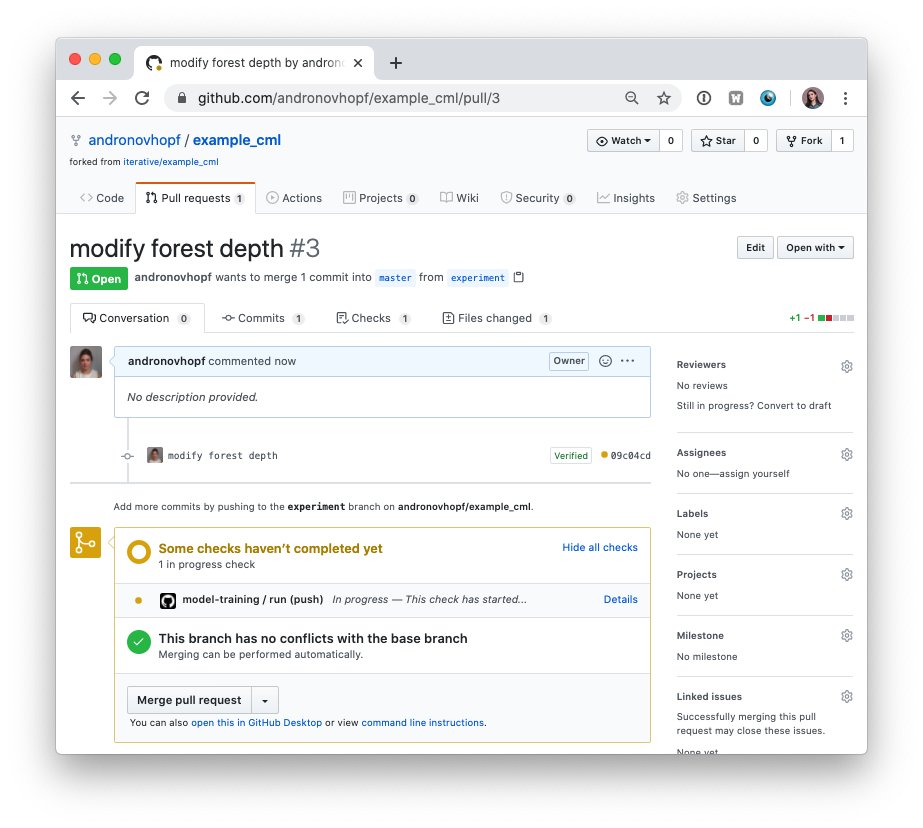

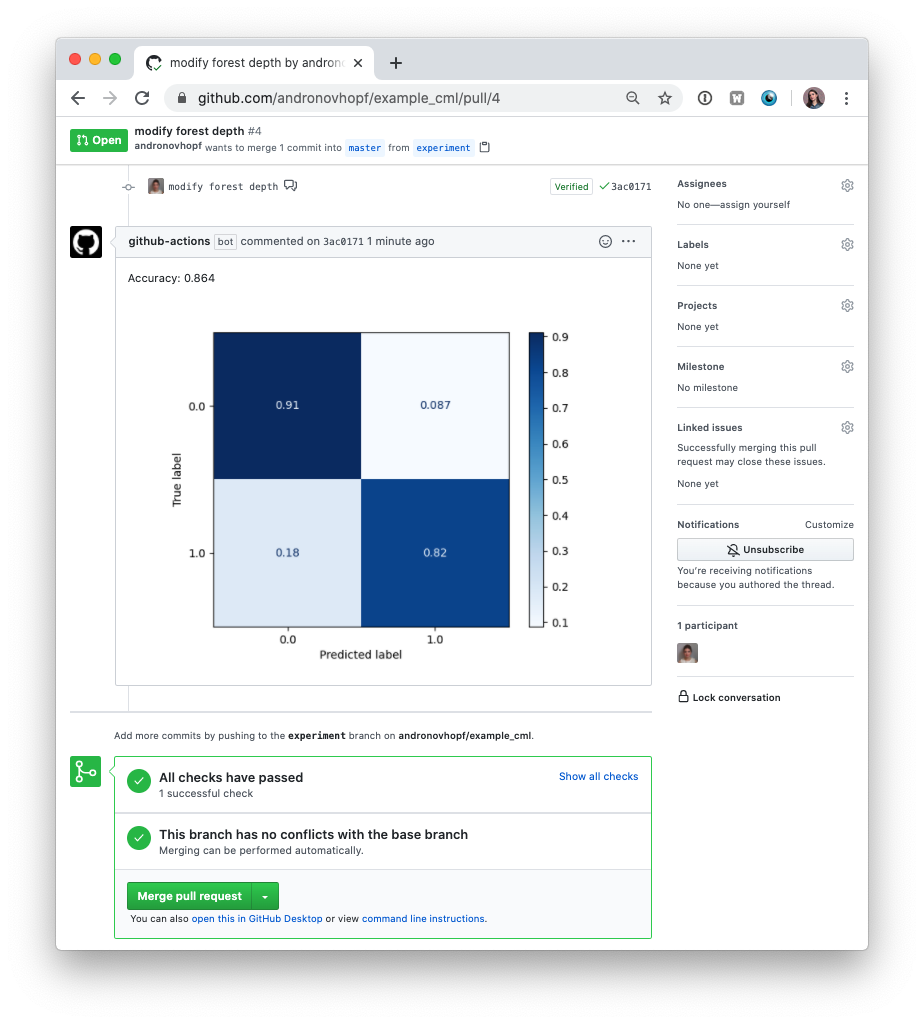

5. In GitHub, open up a Pull Request to compare the `experiment` branch to

`master`.

-

+

Shortly, you should see a comment from `github-actions` appear in the Pull

Request with your CML report. This is a result of the `cml-send-comment`

function in your workflow.

-

+

This is the outline of the CML workflow:

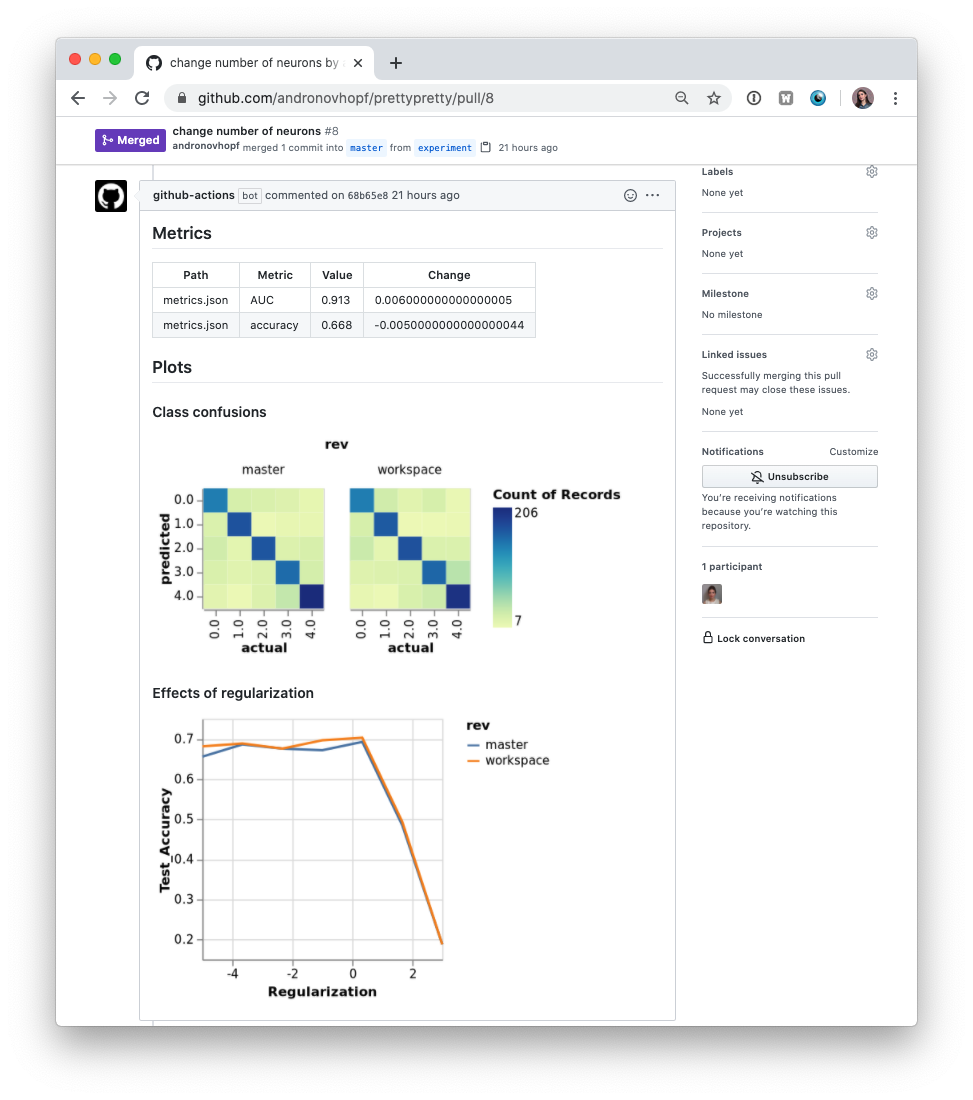

@@ -223,7 +223,7 @@ downloaded from external sources. [DVC](https://dvc.org) is a common way to

bring data to your CML runner. DVC also lets you visualize how metrics differ

between commits to make reports like this:

-

+

The `.github/workflows/cml.yaml` file used to create this report is:

diff --git a/bin/cml-pr.js b/bin/cml-pr.js

index 065c85729..2584947e4 100755

--- a/bin/cml-pr.js

+++ b/bin/cml-pr.js

@@ -4,7 +4,6 @@ const print = console.log;

console.log = console.error;

const yargs = require('yargs');

-const decamelize = require('decamelize-keys');

const CML = require('../src/cml').default;

const { GIT_REMOTE, GIT_USER_NAME, GIT_USER_EMAIL } = require('../src/cml');

@@ -12,37 +11,34 @@ const { GIT_REMOTE, GIT_USER_NAME, GIT_USER_EMAIL } = require('../src/cml');

const run = async (opts) => {

const globs = opts._.length ? opts._ : undefined;

const cml = new CML(opts);

- print((await cml.pr_create({ ...opts, globs })) || '');

+ print((await cml.prCreate({ ...opts, globs })) || '');

};

-const opts = decamelize(

- yargs

- .strict()

- .usage('Usage: $0 ... ')

- .describe('md', 'Output in markdown format [](url).')

- .boolean('md')

- .default('remote', GIT_REMOTE)

- .describe('remote', 'Sets git remote.')

- .default('user-email', GIT_USER_EMAIL)

- .describe('user-email', 'Sets git user email.')

- .default('user-name', GIT_USER_NAME)

- .describe('user-name', 'Sets git user name.')

- .default('repo')

- .describe(

- 'repo',

- 'Specifies the repo to be used. If not specified is extracted from the CI ENV.'

- )

- .default('token')

- .describe(

- 'token',

- 'Personal access token to be used. If not specified in extracted from ENV REPO_TOKEN.'

- )

- .default('driver')

- .choices('driver', ['github', 'gitlab'])

- .describe('driver', 'If not specify it infers it from the ENV.')

- .help('h').argv

-);

-

+const opts = yargs

+ .strict()

+ .usage('Usage: $0 ... ')

+ .describe('md', 'Output in markdown format [](url).')

+ .boolean('md')

+ .default('remote', GIT_REMOTE)

+ .describe('remote', 'Sets git remote.')

+ .default('user-email', GIT_USER_EMAIL)

+ .describe('user-email', 'Sets git user email.')

+ .default('user-name', GIT_USER_NAME)

+ .describe('user-name', 'Sets git user name.')

+ .default('repo')

+ .describe(

+ 'repo',

+ 'Specifies the repo to be used. If not specified, it is extracted from the CI ENV.'

+ )

+ .default('token')

+ .describe(

+ 'token',

+ 'Personal access token to be used. If not specified, it is extracted from ENV REPO_TOKEN.'

+ )

+ .default('driver')

+ .choices('driver', ['github', 'gitlab'])

+ .describe('driver', 'If not specified, it is inferred from the ENV.')

+ .help('h').argv;

run(opts).catch((e) => {

console.error(e);

process.exit(1);
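This change drops `decamelize-keys` and switches call sites to camelCase (`prCreate`, `userEmail`, `rmWatermark`, ...). That works because yargs, by default, exposes each hyphenated option under its camelCase name as well, so the parsed object can be passed straight to `CML`. The simplified sketch below imitates that expansion; `camelCase` and `expandCamelCase` are hypothetical helpers, not yargs internals, and ignore edge cases the real parser handles.

```javascript
// Simplified imitation of yargs' default camel-case expansion:
// `--user-email` becomes reachable as both argv['user-email'] and argv.userEmail.
function camelCase(key) {
  return key.replace(/-([a-z0-9])/g, (_, ch) => ch.toUpperCase());
}

function expandCamelCase(argv) {
  const expanded = { ...argv };
  for (const [key, value] of Object.entries(argv)) {
    if (key.includes('-')) expanded[camelCase(key)] = value;
  }
  return expanded;
}

const argv = expandCamelCase({ 'user-email': 'bot@example.com', remote: 'origin' });
console.log(argv.userEmail); // bot@example.com
```

Because both spellings stay present, code that still reads `opts['user-email']` keeps working alongside the new camelCase reads.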

diff --git a/bin/cml-publish.js b/bin/cml-publish.js

index 970c263f5..a244f1138 100755

--- a/bin/cml-publish.js

+++ b/bin/cml-publish.js

@@ -4,9 +4,8 @@ const print = console.log;

console.log = console.error;

const fs = require('fs').promises;

-const pipe_args = require('../src/pipe-args');

+const pipeArgs = require('../src/pipe-args');

const yargs = require('yargs');

-const decamelize = require('decamelize-keys');

const CML = require('../src/cml').default;

@@ -28,53 +27,51 @@ const run = async (opts) => {

else await fs.writeFile(file, output);

};

-pipe_args.load('binary');

-const data = pipe_args.piped_arg();

-const opts = decamelize(

- yargs

- .strict()

- .usage(`Usage: $0 `)

- .describe('md', 'Output in markdown format [title || name](url).')

- .boolean('md')

- .describe('md', 'Output in markdown format [title || name](url).')

- .default('title')

- .describe('title', 'Markdown title [title](url) or .')

- .alias('title', 't')

- .boolean('native')

- .describe(

- 'native',

- "Uses driver's native capabilities to upload assets instead of CML's storage. Currently only available for GitLab CI."

- )

- .alias('native', 'gitlab-uploads')

- .boolean('rm-watermark')

- .describe('rm-watermark', 'Avoid CML watermark.')

- .default('mime-type')

- .describe(

- 'mime-type',

- 'Specifies the mime-type. If not set guess it from the content.'

- )

- .default('file')

- .describe(

- 'file',

- 'Append the output to the given file. Create it if does not exist.'

- )

- .alias('file', 'f')

- .default('repo')

- .describe(

- 'repo',

- 'Specifies the repo to be used. If not specified is extracted from the CI ENV.'

- )

- .default('token')

- .describe(

- 'token',

- 'Personal access token to be used. If not specified, extracted from ENV REPO_TOKEN, GITLAB_TOKEN, GITHUB_TOKEN, or BITBUCKET_TOKEN.'

- )

- .default('driver')

- .choices('driver', ['github', 'gitlab'])

- .describe('driver', 'If not specify it infers it from the ENV.')

- .help('h')

- .demand(data ? 0 : 1).argv

-);

+pipeArgs.load('binary');

+const data = pipeArgs.pipedArg();

+const opts = yargs

+ .strict()

+ .usage(`Usage: $0 `)

+ .describe('md', 'Output in markdown format [title || name](url).')

+ .boolean('md')


+ .default('title')

+ .describe('title', 'Markdown title [title](url) or .')

+ .alias('title', 't')

+ .boolean('native')

+ .describe(

+ 'native',

+ "Uses driver's native capabilities to upload assets instead of CML's storage. Currently only available for GitLab CI."

+ )

+ .alias('native', 'gitlab-uploads')

+ .boolean('rm-watermark')

+ .describe('rm-watermark', 'Omit the CML watermark.')

+ .default('mime-type')

+ .describe(

+ 'mime-type',

+ 'Specifies the MIME type. If not set, it is guessed from the content.'

+ )

+ .default('file')

+ .describe(

+ 'file',

+ 'Append the output to the given file. Create it if it does not exist.'

+ )

+ .alias('file', 'f')

+ .default('repo')

+ .describe(

+ 'repo',

+ 'Specifies the repo to be used. If not specified, it is extracted from the CI ENV.'

+ )

+ .default('token')

+ .describe(

+ 'token',

+ 'Personal access token to be used. If not specified, it is extracted from ENV REPO_TOKEN, GITLAB_TOKEN, GITHUB_TOKEN, or BITBUCKET_TOKEN.'

+ )

+ .default('driver')

+ .choices('driver', ['github', 'gitlab'])

+ .describe('driver', 'If not specified, it is inferred from the ENV.')

+ .help('h')

+ .demand(data ? 0 : 1).argv;

run({ ...opts, data }).catch((e) => {

console.error(e);
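In `cml-publish`, `.demand(data ? 0 : 1)` makes the positional file argument optional only when something was piped in. The sketch below restates that rule as a plain function. `pickInput` is hypothetical, it assumes piped data takes precedence over a path, and it does not reproduce how `../src/pipe-args` actually reads stdin.

```javascript
// Hypothetical restatement of the input rule behind `.demand(data ? 0 : 1)`:
// piped bytes are used if present; otherwise one file path is required.
function pickInput({ piped, positionals }) {
  if (piped && piped.length > 0) {
    return { source: 'stdin', data: piped };
  }
  if (positionals.length < 1) {
    throw new Error('Expected a file path or piped data');
  }
  return { source: 'file', path: positionals[0] };
}

console.log(pickInput({ piped: undefined, positionals: ['graph.png'] }));
// { source: 'file', path: 'graph.png' }
```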

diff --git a/bin/cml-runner.js b/bin/cml-runner.js

index 6886709bf..89edae82c 100755

--- a/bin/cml-runner.js

+++ b/bin/cml-runner.js

@@ -5,7 +5,6 @@ const { homedir } = require('os');

const fs = require('fs').promises;

const yargs = require('yargs');

-const decamelize = require('decamelize-keys');

const { exec, randid, sleep } = require('../src/utils');

const tf = require('../src/terraform');

@@ -41,17 +40,17 @@ const shutdown = async (opts) => {

let { error, cloud } = opts;

const { name, workdir = '' } = opts;

- const tf_path = workdir;

+ const tfPath = workdir;

console.log(

JSON.stringify({ level: error ? 'error' : 'info', status: 'terminated' })

);

if (error) console.error(error);

- const unregister_runner = async () => {

+ const unregisterRunner = async () => {

try {

console.log('Unregistering runner...');

- await cml.unregister_runner({ name });

+ await cml.unregisterRunner({ name });

console.log('\tSuccess');

} catch (err) {

console.error('\tFailed');

@@ -59,7 +58,7 @@ const shutdown = async (opts) => {

}

};

- const shutdown_docker_machine = async () => {

+ const shutdownDockerMachine = async () => {

console.log('docker-machine destroy...');

console.log(

'Docker machine is deprecated and this will be removed!! Check how to deploy using our tf provider.'

@@ -72,25 +71,25 @@ const shutdown = async (opts) => {

}

};

- const shutdown_tf = async () => {

- const { tf_resource } = opts;

+ const shutdownTf = async () => {

+ const { tfResource } = opts;

- if (!tf_resource) {

+ if (!tfResource) {

console.log(`\tNo TF resource found`);

return;

}

try {

- await tf.destroy({ dir: tf_path });

+ await tf.destroy({ dir: tfPath });

} catch (err) {

console.error(`\tFailed Terraform destroy: ${err.message}`);

error = err;

}

};

- const destroy_terraform = async () => {

+ const destroyTerraform = async () => {

try {

- console.log(await tf.destroy({ dir: tf_path }));

+ console.log(await tf.destroy({ dir: tfPath }));

} catch (err) {

console.error(`\tFailed destroying terraform: ${err.message}`);

error = err;

@@ -98,90 +97,90 @@ const shutdown = async (opts) => {

};

if (cloud) {

- await destroy_terraform();

+ await destroyTerraform();

} else {

- RUNNER_LAUNCHED && (await unregister_runner());

+ RUNNER_LAUNCHED && (await unregisterRunner());

console.log(

`\tDestroy scheduled: ${RUNNER_DESTROY_DELAY} seconds remaining.`

);

await sleep(RUNNER_DESTROY_DELAY);

- DOCKER_MACHINE && (await shutdown_docker_machine());

- await shutdown_tf();

+ DOCKER_MACHINE && (await shutdownDockerMachine());

+ await shutdownTf();

}

process.exit(error ? 1 : 0);

};

-const run_cloud = async (opts) => {

- const { cloud_ssh_private_visible } = opts;

+const runCloud = async (opts) => {

+ const { cloudSshPrivateVisible } = opts;

- const run_terraform = async (opts) => {

+ const runTerraform = async (opts) => {

console.log('Terraform apply...');

const { token, repo, driver } = cml;

const {

labels,

- idle_timeout,

+ idleTimeout,

name,

single,

cloud,

- cloud_region: region,

- cloud_type: type,

- cloud_gpu: gpu,

- cloud_hdd_size: hdd_size,

- cloud_ssh_private: ssh_private,

- cloud_spot: spot,

- cloud_spot_price: spot_price,

- cloud_startup_script: startup_script,

- tf_file,

+ cloudRegion: region,

+ cloudType: type,

+ cloudGpu: gpu,

+ cloudHddSize: hddSize,

+ cloudSshPrivate: sshPrivate,

+ cloudSpot: spot,

+ cloudSpotPrice: spotPrice,

+ cloudStartupScript: startupScript,

+ tfFile,

workdir

} = opts;

- const tf_path = workdir;

- const tf_main_path = join(tf_path, 'main.tf');

+ const tfPath = workdir;

+ const tfMainPath = join(tfPath, 'main.tf');

let tpl;

- if (tf_file) {

- tpl = await fs.writeFile(tf_main_path, await fs.readFile(tf_file));

+ if (tfFile) {

+ tpl = await fs.writeFile(tfMainPath, await fs.readFile(tfFile));

} else {

if (gpu === 'tesla')

console.log(

'GPU model "tesla" has been deprecated; please use "v100" instead.'

);

- tpl = tf.iterative_cml_runner_tpl({

+ tpl = tf.iterativeCmlRunnerTpl({

repo,

token,

driver,

labels,

- idle_timeout,

+ idleTimeout,

name,

single,

cloud,

region,

type,

gpu: gpu === 'tesla' ? 'v100' : gpu,

- hdd_size,

- ssh_private,

+ hddSize,

+ sshPrivate,

spot,

- spot_price,

- startup_script

+ spotPrice,

+ startupScript

});

}

- await fs.writeFile(tf_main_path, tpl);

- await tf.init({ dir: tf_path });

- await tf.apply({ dir: tf_path });

+ await fs.writeFile(tfMainPath, tpl);

+ await tf.init({ dir: tfPath });

+ await tf.apply({ dir: tfPath });

- const tfstate_path = join(tf_path, 'terraform.tfstate');

- const tfstate = await tf.load_tfstate({ path: tfstate_path });

+ const tfStatePath = join(tfPath, 'terraform.tfstate');

+ const tfstate = await tf.loadTfState({ path: tfStatePath });

return tfstate;

};

console.log('Deploying cloud runner plan...');

- const tfstate = await run_terraform(opts);

+ const tfstate = await runTerraform(opts);

const { resources } = tfstate;

for (let i = 0; i < resources.length; i++) {

const resource = resources[i];

@@ -192,30 +191,31 @@ const run_cloud = async (opts) => {

for (let j = 0; j < instances.length; j++) {

const instance = instances[j];

- if (!cloud_ssh_private_visible) {

- instance.attributes.ssh_private = '[MASKED]';

+ if (!cloudSshPrivateVisible) {

+ instance.attributes.ssh_private = '[MASKED]';

}

+ instance.attributes.token = '[MASKED]';

console.log(JSON.stringify(instance));

}

}

}

};

-const run_local = async (opts) => {

+const runLocal = async (opts) => {

console.log(`Launching ${cml.driver} runner`);

- const { workdir, name, labels, single, idle_timeout } = opts;

+ const { workdir, name, labels, single, idleTimeout } = opts;

- const proc = await cml.start_runner({

+ const proc = await cml.startRunner({

workdir,

name,

labels,

single,

- idle_timeout

+ idleTimeout

});

- const data_handler = (data) => {

- const log = cml.parse_runner_log({ data });

+ const dataHandler = (data) => {

+ const log = cml.parseRunnerLog({ data });

log && console.log(JSON.stringify(log));

if (log && log.status === 'job_started') {

@@ -225,16 +225,16 @@ const run_local = async (opts) => {

RUNNER_JOBS_RUNNING.pop();

}

};

- proc.stderr.on('data', data_handler);

- proc.stdout.on('data', data_handler);

+ proc.stderr.on('data', dataHandler);

+ proc.stdout.on('data', dataHandler);

proc.on('uncaughtException', () => shutdown(opts));

proc.on('SIGINT', () => shutdown(opts));

proc.on('SIGTERM', () => shutdown(opts));

proc.on('SIGQUIT', () => shutdown(opts));

- if (parseInt(idle_timeout) !== 0) {

+ if (parseInt(idleTimeout) !== 0) {

const watcher = setInterval(() => {

- RUNNER_TIMEOUT_TIMER >= idle_timeout &&

+ RUNNER_TIMEOUT_TIMER >= idleTimeout &&

shutdown(opts) &&

clearInterval(watcher);

@@ -260,36 +260,35 @@ const run = async (opts) => {

labels,

name,

reuse,

- tf_resource

+ tfResource

} = opts;

cml = new CML({ driver, repo, token });

- await tf.check_min_version();

+ await tf.checkMinVersion();

// prepare tf

- if (tf_resource) {

- const tf_path = workdir;

- const { tf_resource } = opts;

-

- await fs.mkdir(tf_path, { recursive: true });

- const tf_main_path = join(tf_path, 'main.tf');

- const tpl = tf.iterative_provider_tpl();

- await fs.writeFile(tf_main_path, tpl);

- await tf.init({ dir: tf_path });

- await tf.apply({ dir: tf_path });

- const path = join(tf_path, 'terraform.tfstate');

- const tfstate = await tf.load_tfstate({ path });

+ if (tfResource) {

+ const tfPath = workdir;

+

+ await fs.mkdir(tfPath, { recursive: true });

+ const tfMainPath = join(tfPath, 'main.tf');

+ const tpl = tf.iterativeProviderTpl();

+ await fs.writeFile(tfMainPath, tpl);

+ await tf.init({ dir: tfPath });

+ await tf.apply({ dir: tfPath });

+ const path = join(tfPath, 'terraform.tfstate');

+ const tfstate = await tf.loadTfState({ path });

tfstate.resources = [

- JSON.parse(Buffer.from(tf_resource, 'base64').toString('utf-8'))

+ JSON.parse(Buffer.from(tfResource, 'base64').toString('utf-8'))

];

- await tf.save_tfstate({ tfstate, path });

+ await tf.saveTfState({ tfstate, path });

}

// if (name !== NAME) {

- await cml.repo_token_check();

+ await cml.repoTokenCheck();

- if (await cml.runner_by_name({ name })) {

+ if (await cml.runnerByName({ name })) {

if (!reuse)

throw new Error(

`Runner name ${name} is already in use. Please change the name or terminate the other runner.`

@@ -298,7 +297,7 @@ const run = async (opts) => {

process.exit(0);

}

- if (reuse && (await cml.runners_by_labels({ labels })).length > 0) {

+ if (reuse && (await cml.runnersByLabels({ labels })).length > 0) {

console.log(`Reusing existing runners with the ${labels} labels...`);

process.exit(0);

}

@@ -308,98 +307,97 @@ const run = async (opts) => {

await fs.mkdir(workdir, { recursive: true });

} catch (err) {}

- if (cloud) await run_cloud(opts);

- else await run_local(opts);

+ if (cloud) await runCloud(opts);

+ else await runLocal(opts);

};

-const opts = decamelize(

- yargs

- .strict()

- .usage(`Usage: $0`)

- .default('labels', RUNNER_LABELS)

- .describe(

- 'labels',

- 'One or more user-defined labels for this runner (delimited with commas)'

- )

- .default('idle-timeout', RUNNER_IDLE_TIMEOUT)

- .describe(

- 'idle-timeout',

- 'Time in seconds for the runner to be waiting for jobs before shutting down. Setting it to 0 disables automatic shutdown'

- )

- .default('name', RUNNER_NAME)

- .describe('name', 'Name displayed in the repository once registered')

-

- .boolean('single')

- .default('single', RUNNER_SINGLE)

- .describe('single', 'Exit after running a single job')

- .boolean('reuse')

- .default('reuse', RUNNER_REUSE)

- .describe(

- 'reuse',

- "Don't launch a new runner if an existing one has the same name or overlapping labels"

- )

-

- .default('driver', RUNNER_DRIVER)

- .describe(

- 'driver',

- 'Platform where the repository is hosted. If not specified, it will be inferred from the environment'

- )

- .choices('driver', ['github', 'gitlab'])

- .default('repo', RUNNER_REPO)

- .describe(

- 'repo',

- 'Repository to be used for registering the runner. If not specified, it will be inferred from the environment'

- )

- .default('token', REPO_TOKEN)

- .describe(

- 'token',

- 'Personal access token to register a self-hosted runner on the repository. If not specified, it will be inferred from the environment'

- )

- .default('cloud')

- .describe('cloud', 'Cloud to deploy the runner')

- .choices('cloud', ['aws', 'azure', 'kubernetes'])

- .default('cloud-region', 'us-west')

- .describe(

- 'cloud-region',

- 'Region where the instance is deployed. Choices: [us-east, us-west, eu-west, eu-north]. Also accepts native cloud regions'

- )

- .default('cloud-type')

- .describe(

- 'cloud-type',

- 'Instance type. Choices: [m, l, xl]. Also supports native types like i.e. t2.micro'

- )

- .default('cloud-gpu')

- .describe('cloud-gpu', 'GPU type.')

- .choices('cloud-gpu', ['nogpu', 'k80', 'v100', 'tesla'])

- .coerce('cloud-gpu-type', (val) => (val === 'nogpu' ? null : val))

- .default('cloud-hdd-size')

- .describe('cloud-hdd-size', 'HDD size in GB')

- .default('cloud-ssh-private', '')

- .describe(

- 'cloud-ssh-private',

- 'Custom private RSA SSH key. If not provided an automatically generated throwaway key will be used'

- )

- .boolean('cloud-ssh-private-visible')

- .describe(

- 'cloud-ssh-private-visible',

- 'Show the private SSH key in the output with the rest of the instance properties (not recommended)'

- )

- .boolean('cloud-spot')

- .describe('cloud-spot', 'Request a spot instance')

- .default('cloud-spot-price', '-1')

- .describe(

- 'cloud-spot-price',

- 'Maximum spot instance bidding price in USD. Defaults to the current spot bidding price'

- )

- .default('cloud-startup-script', '')

- .describe(

- 'cloud-startup-script',

- 'Run the provided Base64-encoded Linux shell script during the instance initialization'

- )

- .default('tf_resource')

- .hide('tf_resource')

- .help('h').argv

-);

+const opts = yargs

+ .strict()

+ .usage(`Usage: $0`)

+ .default('labels', RUNNER_LABELS)

+ .describe(

+ 'labels',

+ 'One or more user-defined labels for this runner (delimited with commas)'

+ )

+ .default('idle-timeout', RUNNER_IDLE_TIMEOUT)

+ .describe(

+ 'idle-timeout',

+ 'Time in seconds the runner waits for jobs before shutting down. Setting it to 0 disables automatic shutdown'

+ )

+ .default('name', RUNNER_NAME)

+ .describe('name', 'Name displayed in the repository once registered')

+

+ .boolean('single')

+ .default('single', RUNNER_SINGLE)

+ .describe('single', 'Exit after running a single job')

+ .boolean('reuse')

+ .default('reuse', RUNNER_REUSE)

+ .describe(

+ 'reuse',

+ "Don't launch a new runner if an existing one has the same name or overlapping labels"

+ )

+

+ .default('driver', RUNNER_DRIVER)

+ .describe(

+ 'driver',

+ 'Platform where the repository is hosted. If not specified, it will be inferred from the environment'

+ )

+ .choices('driver', ['github', 'gitlab'])

+ .default('repo', RUNNER_REPO)

+ .describe(

+ 'repo',

+ 'Repository to be used for registering the runner. If not specified, it will be inferred from the environment'

+ )

+ .default('token', REPO_TOKEN)

+ .describe(

+ 'token',

+ 'Personal access token to register a self-hosted runner on the repository. If not specified, it will be inferred from the environment'

+ )

+ .default('cloud')

+ .describe('cloud', 'Cloud to deploy the runner')

+ .choices('cloud', ['aws', 'azure', 'kubernetes'])

+ .default('cloud-region', 'us-west')

+ .describe(

+ 'cloud-region',

+ 'Region where the instance is deployed. Choices: [us-east, us-west, eu-west, eu-north]. Also accepts native cloud regions'

+ )

+ .default('cloud-type')

+ .describe(

+ 'cloud-type',

+ 'Instance type. Choices: [m, l, xl]. Also supports native types, e.g. t2.micro'

+ )

+ .default('cloud-gpu')

+ .describe('cloud-gpu', 'GPU type.')

+ .choices('cloud-gpu', ['nogpu', 'k80', 'v100', 'tesla'])

+ .coerce('cloud-gpu-type', (val) => (val === 'nogpu' ? null : val))

+ .default('cloud-hdd-size')

+ .describe('cloud-hdd-size', 'HDD size in GB')

+ .default('cloud-ssh-private', '')

+ .describe(

+ 'cloud-ssh-private',

+ 'Custom private RSA SSH key. If not provided, an automatically generated throwaway key will be used'

+ )

+ .boolean('cloud-ssh-private-visible')

+ .describe(

+ 'cloud-ssh-private-visible',

+ 'Show the private SSH key in the output with the rest of the instance properties (not recommended)'

+ )

+ .boolean('cloud-spot')

+ .describe('cloud-spot', 'Request a spot instance')

+ .default('cloud-spot-price', '-1')

+ .describe(

+ 'cloud-spot-price',

+ 'Maximum spot instance bidding price in USD. Defaults to the current spot bidding price'

+ )

+ .default('cloud-startup-script', '')

+ .describe(

+ 'cloud-startup-script',

+ 'Run the provided Base64-encoded Linux shell script during the instance initialization'

+ )

+ .default('tf-resource')

+ .hide('tf-resource')

+ .alias('tf-resource', 'tf_resource')

+ .help('h').argv;

run(opts).catch((error) => {

shutdown({ ...opts, error });
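Before each Terraform instance is printed, `runCloud` masks its secrets. The sketch below isolates that step. It assumes the attribute keys in `terraform.tfstate` keep the provider's snake_case spelling (`ssh_private`, `token`), since the camelCase rename in this diff only applies to JavaScript identifiers, not to keys written by Terraform. `maskInstance` is a hypothetical helper that returns a copy rather than mutating the parsed state as the CLI does.

```javascript
// Hypothetical helper: mask secret attributes of one tfstate instance.
// Keys are assumed to be snake_case, as written by the Terraform provider.
function maskInstance(instance, { sshPrivateVisible = false } = {}) {
  const attributes = { ...instance.attributes };
  if (!sshPrivateVisible) attributes.ssh_private = '[MASKED]';
  attributes.token = '[MASKED]';
  return { ...instance, attributes };
}

// Made-up instance for illustration only.
const instance = {
  attributes: { name: 'cml-runner', ssh_private: 'PRIVATE KEY', token: 'TOKEN' }
};
console.log(JSON.stringify(maskInstance(instance)));
```

Returning a copy keeps the loaded tfstate intact in case it is reused after logging; the in-place mutation in the CLI is fine when the object is discarded right after printing.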

diff --git a/bin/cml-send-comment.js b/bin/cml-send-comment.js

index 2335dd3e4..6ee5d1034 100755

--- a/bin/cml-send-comment.js

+++ b/bin/cml-send-comment.js

@@ -4,7 +4,6 @@ console.log = console.error;

const fs = require('fs').promises;

const yargs = require('yargs');

-const decamelize = require('decamelize-keys');

const CML = require('../src/cml').default;

@@ -12,40 +11,38 @@ const run = async (opts) => {

const path = opts._[0];

const report = await fs.readFile(path, 'utf-8');

const cml = new CML(opts);

- await cml.comment_create({ ...opts, report });

+ await cml.commentCreate({ ...opts, report });

};

-const opts = decamelize(

- yargs

- .strict()

- .usage('Usage: $0 ')

- .default('commit-sha')

- .describe(

- 'commit-sha',

- 'Commit SHA linked to this comment. Defaults to HEAD.'

- )

- .alias('commit-sha', 'head-sha')

- .boolean('rm-watermark')

- .describe(

- 'rm-watermark',

- 'Avoid watermark. CML needs a watermark to be able to distinguish CML reports from other comments in order to provide extra functionality.'

- )

- .default('repo')

- .describe(

- 'repo',

- 'Specifies the repo to be used. If not specified is extracted from the CI ENV.'

- )

- .default('token')

- .describe(

- 'token',

- 'Personal access token to be used. If not specified in extracted from ENV REPO_TOKEN.'

- )

- .default('driver')

- .choices('driver', ['github', 'gitlab'])

- .describe('driver', 'If not specify it infers it from the ENV.')

- .help('h')

- .demand(1).argv

-);

+const opts = yargs

+ .strict()

+ .usage('Usage: $0 ')

+ .default('commit-sha')

+ .describe(

+ 'commit-sha',

+ 'Commit SHA linked to this comment. Defaults to HEAD.'

+ )

+ .alias('commit-sha', 'head-sha')

+ .boolean('rm-watermark')

+ .describe(

+ 'rm-watermark',

+ 'Omit the watermark. CML uses the watermark to distinguish CML reports from other comments in order to provide extra functionality.'

+ )

+ .default('repo')

+ .describe(

+ 'repo',

+ 'Specifies the repo to be used. If not specified, it is extracted from the CI ENV.'

+ )

+ .default('token')

+ .describe(

+ 'token',

+ 'Personal access token to be used. If not specified, it is extracted from ENV REPO_TOKEN.'

+ )

+ .default('driver')

+ .choices('driver', ['github', 'gitlab'])

+ .describe('driver', 'If not specified, it is inferred from the ENV.')

+ .help('h')

+ .demand(1).argv;

run(opts).catch((e) => {

console.error(e);

diff --git a/bin/cml-send-github-check.js b/bin/cml-send-github-check.js

index 731f24559..db50148ed 100755

--- a/bin/cml-send-github-check.js

+++ b/bin/cml-send-github-check.js

@@ -4,7 +4,6 @@ console.log = console.error;

const fs = require('fs').promises;

const yargs = require('yargs');

-const decamelize = require('decamelize-keys');

const CML = require('../src/cml').default;

const CHECK_TITLE = 'CML Report';

@@ -13,46 +12,40 @@ const run = async (opts) => {

const path = opts._[0];

const report = await fs.readFile(path, 'utf-8');

const cml = new CML({ ...opts });

- await cml.check_create({ ...opts, report });

+ await cml.checkCreate({ ...opts, report });

};

-const opts = decamelize(

- yargs

- .strict()

- .usage('Usage: $0 ')

- .describe(

- 'commit-sha',

- 'Commit SHA linked to this comment. Defaults to HEAD.'

- )

- .alias('commit-sha', 'head-sha')

- .default(

- 'conclusion',

- 'success',

- 'Sets the conclusion status of the check.'

- )

- .choices('conclusion', [

- 'success',

- 'failure',

- 'neutral',

- 'cancelled',

- 'skipped',

- 'timed_out'

- ])

- .default('title', CHECK_TITLE)

- .describe('title', 'Sets title of the check.')

- .default('repo')

- .describe(

- 'repo',

- 'Specifies the repo to be used. If not specified is extracted from the CI ENV.'

- )

- .default('token')

- .describe(

- 'token',

- 'Personal access token to be used. If not specified in extracted from ENV REPO_TOKEN.'

- )

- .help('h')

- .demand(1).argv

-);

+const opts = yargs

+ .strict()

+ .usage('Usage: $0 ')

+ .describe(

+ 'commit-sha',

+ 'Commit SHA linked to this check. Defaults to HEAD.'

+ )

+ .alias('commit-sha', 'head-sha')

+ .default('conclusion', 'success')

+ .describe('conclusion', 'Sets the conclusion status of the check.')

+ .choices('conclusion', [

+ 'success',

+ 'failure',

+ 'neutral',

+ 'cancelled',

+ 'skipped',

+ 'timed_out'

+ ])

+ .default('title', CHECK_TITLE)

+ .describe('title', 'Sets title of the check.')

+ .default('repo')

+ .describe(

+ 'repo',

+ 'Specifies the repo to be used. If not specified, it is extracted from the CI ENV.'

+ )

+ .default('token')

+ .describe(

+ 'token',

+ 'Personal access token to be used. If not specified, it is extracted from ENV REPO_TOKEN.'

+ )

+ .help('h')

+ .demand(1).argv;

run(opts).catch((e) => {

console.error(e);
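`cml-send-github-check` restricts `--conclusion` to six values and defaults it to `success`, which yargs enforces through `.choices`. A minimal sketch of the same validation as a plain function follows. `normalizeConclusion` is hypothetical, and the list is copied from the diff; the GitHub Checks API accepts a few more conclusions (for example `action_required`) that this CLI does not expose.

```javascript
// Allowed values copied from the `.choices('conclusion', [...])` call.
const CONCLUSIONS = ['success', 'failure', 'neutral', 'cancelled', 'skipped', 'timed_out'];

// Hypothetical validator: fall back to the default, reject anything else.
function normalizeConclusion(value) {
  if (value === undefined) return 'success';
  if (!CONCLUSIONS.includes(value)) {
    throw new Error(`Invalid conclusion "${value}"; expected one of: ${CONCLUSIONS.join(', ')}`);
  }
  return value;
}

console.log(normalizeConclusion()); // success
```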

diff --git a/bin/cml-tensorboard-dev.js b/bin/cml-tensorboard-dev.js

index d374858a6..236b6df5f 100755

--- a/bin/cml-tensorboard-dev.js

+++ b/bin/cml-tensorboard-dev.js

@@ -9,11 +9,11 @@ const { spawn } = require('child_process');

const { homedir } = require('os');

const tempy = require('tempy');

-const { exec, watermark_uri } = require('../src/utils');

+const { exec, watermarkUri } = require('../src/utils');

const { TB_CREDENTIALS } = process.env;

-const close_fd = (fd) => {

+const closeFd = (fd) => {

try {

fd.close();

} catch (err) {

@@ -30,7 +30,7 @@ const run = async (opts) => {

name,

description,

title,

- 'rm-watermark': rm_watermark

+ rmWatermark

} = opts;

// set credentials

@@ -40,21 +40,21 @@ const run = async (opts) => {

// launch tensorboard on background

const help = await exec('tensorboard dev upload -h');

- const extra_params_found =

+ const extraParamsFound =

(name || description) && help.indexOf('--description') >= 0;

- const extra_params = extra_params_found

+ const extraParams = extraParamsFound

? `--name "${name}" --description "${description}"`

: '';

- const command = `tensorboard dev upload --logdir ${logdir} ${extra_params}`;

- const stdout_path = tempy.file({ extension: 'log' });

- const stdout_fd = await fs.open(stdout_path, 'a');

- const stderr_path = tempy.file({ extension: 'log' });

- const stderr_fd = await fs.open(stderr_path, 'a');

+ const command = `tensorboard dev upload --logdir ${logdir} ${extraParams}`;

+ const stdoutPath = tempy.file({ extension: 'log' });

+ const stdoutFd = await fs.open(stdoutPath, 'a');

+ const stderrPath = tempy.file({ extension: 'log' });

+ const stderrFd = await fs.open(stderrPath, 'a');

const proc = spawn(command, [], {

detached: true,

shell: true,

- stdio: ['ignore', stdout_fd, stderr_fd]

+ stdio: ['ignore', stdoutFd, stderrFd]

});

proc.unref();

@@ -64,29 +64,29 @@ const run = async (opts) => {

// reads stdout every 5 secs to find the tb uri

setInterval(async () => {

- const stdout_data = await fs.readFile(stdout_path, 'utf8');

+ const stdoutData = await fs.readFile(stdoutPath, 'utf8');

const regex = /(https?:\/\/[^\s]+)/;

- const matches = stdout_data.match(regex);

+ const matches = stdoutData.match(regex);

if (matches.length) {

let output = matches[0];

- if (!rm_watermark) output = watermark_uri({ uri: output, type: 'tb' });

+ if (!rmWatermark) output = watermarkUri({ uri: output, type: 'tb' });

if (md) output = `[${title || name}](${output})`;

if (!file) print(output);

else await fs.appendFile(file, output);

- close_fd(stdout_fd) && close_fd(stderr_fd);

+ closeFd(stdoutFd) && closeFd(stderrFd);

process.exit(0);

}

}, 1 * 5 * 1000);

// waits 1 min before dies

setTimeout(async () => {

- close_fd(stdout_fd) && close_fd(stderr_fd);

- console.error(await fs.readFile(stderr_path, 'utf8'));

+ closeFd(stdoutFd) && closeFd(stderrFd);

+ console.error(await fs.readFile(stderrPath, 'utf8'));

throw new Error('Tensorboard took too long! Canceled.');

}, 1 * 60 * 1000);

};
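The polling loop above checks `matches.length`, but `String.prototype.match` returns `null` when the pattern is not found. On any poll that runs before TensorBoard has printed its URL, that check would throw a `TypeError` instead of waiting for the next tick. Below is a minimal null-safe sketch of the extraction step. `findFirstUri` is a hypothetical helper name and is not part of CML.

```javascript
// Hypothetical helper (not part of CML): return the first http(s) URL found
// in a log string, or null when the log does not contain one yet.
const findFirstUri = (text) => {
  const matches = (text || '').match(/(https?:\/\/[^\s]+)/);
  // String.prototype.match returns null on no match, so guard before indexing.
  return matches ? matches[0] : null;
};
```

Inside the interval callback, a `null` result can then simply mean "keep polling" until the one-minute timeout fires.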

diff --git a/bin/cml-tensorboard-dev.test.js b/bin/cml-tensorboard-dev.test.js

index 104255489..08f8a3c00 100644

--- a/bin/cml-tensorboard-dev.test.js

+++ b/bin/cml-tensorboard-dev.test.js

@@ -1,18 +1,18 @@

jest.setTimeout(200000);

-const { exec, is_proc_running, sleep } = require('../src/utils');

+const { exec, isProcRunning, sleep } = require('../src/utils');

const CREDENTIALS =

'{"refresh_token": "1//03FiVnGk2xhnNCgYIARAAGAMSNwF-L9IrPH8FOOVWEYUihFDToqxyLArxfnbKFmxEfhzys_KYVVzBisYlAy225w4HaX3ais5TV_Q", "token_uri": "https://oauth2.googleapis.com/token", "client_id": "373649185512-8v619h5kft38l4456nm2dj4ubeqsrvh6.apps.googleusercontent.com", "client_secret": "pOyAuU2yq2arsM98Bw5hwYtr", "scopes": ["openid", "https://www.googleapis.com/auth/userinfo.email"], "type": "authorized_user"}';

-const is_tb_running = async () => {

+const isTbRunning = async () => {

await sleep(2);

- const is_running = await is_proc_running({ name: 'tensorboard' });

+ const isRunning = await isProcRunning({ name: 'tensorboard' });

- return is_running;

+ return isRunning;

};

-const rm_tb_dev_experiment = async (tb_output) => {

- const id = /experiment\/([a-zA-Z0-9]{22})/.exec(tb_output)[1];

+const rmTbDevExperiment = async (tbOutput) => {

+ const id = /experiment\/([a-zA-Z0-9]{22})/.exec(tbOutput)[1];

await exec(`tensorboard dev delete --experiment_id ${id}`);

};

@@ -53,10 +53,10 @@ describe('CML e2e', () => {

--logdir logs --name '${name}' --description '${desc}'`

);

- const is_running = await is_tb_running();

- await rm_tb_dev_experiment(output);

+ const isRunning = await isTbRunning();

+ await rmTbDevExperiment(output);

- expect(is_running).toBe(true);

+ expect(isRunning).toBe(true);

expect(output.startsWith(`[${title}](https://`)).toBe(true);

expect(output.includes('cml=tb')).toBe(true);

});

diff --git a/imgs/GitLab_CML_report.png b/imgs/GitLab_CML_report.png

deleted file mode 100644

index 2ddc09ae4..000000000

Binary files a/imgs/GitLab_CML_report.png and /dev/null differ

diff --git a/imgs/action_in_progress.png b/imgs/action_in_progress.png

deleted file mode 100644

index 450bd89e3..000000000

Binary files a/imgs/action_in_progress.png and /dev/null differ

diff --git a/imgs/bitbucket_cloud_pr.png b/imgs/bitbucket_cloud_pr.png

deleted file mode 100644

index 3142e901a..000000000

Binary files a/imgs/bitbucket_cloud_pr.png and /dev/null differ

diff --git a/imgs/cloud_case_snapshot.png b/imgs/cloud_case_snapshot.png

deleted file mode 100644

index f6d864849..000000000

Binary files a/imgs/cloud_case_snapshot.png and /dev/null differ

diff --git a/imgs/cml_first_report.png b/imgs/cml_first_report.png

deleted file mode 100644

index 06c3c7a93..000000000

Binary files a/imgs/cml_first_report.png and /dev/null differ

diff --git a/imgs/dark_logo.png b/imgs/dark_logo.png

deleted file mode 100644

index bebb284c1..000000000

Binary files a/imgs/dark_logo.png and /dev/null differ

diff --git a/imgs/dvc_cml_long_report.png b/imgs/dvc_cml_long_report.png

deleted file mode 100644

index 750c04c67..000000000

Binary files a/imgs/dvc_cml_long_report.png and /dev/null differ

diff --git a/imgs/dvc_confusions.png b/imgs/dvc_confusions.png

deleted file mode 100644

index cd7803eab..000000000

Binary files a/imgs/dvc_confusions.png and /dev/null differ

diff --git a/imgs/dvc_diff.png b/imgs/dvc_diff.png

deleted file mode 100644

index 5e973b667..000000000

Binary files a/imgs/dvc_diff.png and /dev/null differ

diff --git a/imgs/dvc_metric.png b/imgs/dvc_metric.png

deleted file mode 100644

index 4b7ddc747..000000000

Binary files a/imgs/dvc_metric.png and /dev/null differ

diff --git a/imgs/fork_project.png b/imgs/fork_project.png

deleted file mode 100644

index 8d3f36f30..000000000

Binary files a/imgs/fork_project.png and /dev/null differ

diff --git a/imgs/github_cloud_case_lessshadow.png b/imgs/github_cloud_case_lessshadow.png

deleted file mode 100644

index faaf1ae03..000000000

Binary files a/imgs/github_cloud_case_lessshadow.png and /dev/null differ

diff --git a/imgs/logo_v2.png b/imgs/logo_v2.png

deleted file mode 100644

index c406c2ad0..000000000

Binary files a/imgs/logo_v2.png and /dev/null differ

diff --git a/imgs/make_pr.png b/imgs/make_pr.png

deleted file mode 100644

index 8933b1829..000000000

Binary files a/imgs/make_pr.png and /dev/null differ

diff --git a/imgs/title_strip.png b/imgs/title_strip.png

deleted file mode 100644

index 8cedaf239..000000000

Binary files a/imgs/title_strip.png and /dev/null differ

diff --git a/imgs/title_strip_trim.png b/imgs/title_strip_trim.png

deleted file mode 100644

index 871dbc2e5..000000000

Binary files a/imgs/title_strip_trim.png and /dev/null differ

diff --git a/package-lock.json b/package-lock.json

index d41574702..f00bd5512 100644

--- a/package-lock.json

+++ b/package-lock.json

@@ -1,6 +1,6 @@

{

"name": "@dvcorg/cml",

- "version": "0.4.3",

+ "version": "0.4.4",

"lockfileVersion": 1,

"requires": true,

"dependencies": {

@@ -1758,15 +1758,6 @@

"resolved": "https://registry.npmjs.org/decamelize/-/decamelize-1.2.0.tgz",

"integrity": "sha1-9lNNFRSCabIDUue+4m9QH5oZEpA="

},

- "decamelize-keys": {

- "version": "1.1.0",

- "resolved": "https://registry.npmjs.org/decamelize-keys/-/decamelize-keys-1.1.0.tgz",

- "integrity": "sha1-0XGoeTMlKAfrPLYdwcFEXQeN8tk=",

- "requires": {

- "decamelize": "^1.1.0",

- "map-obj": "^1.0.0"

- }

- },

"decode-uri-component": {

"version": "0.2.0",

"resolved": "https://registry.npmjs.org/decode-uri-component/-/decode-uri-component-0.2.0.tgz",

@@ -5619,11 +5610,6 @@

"integrity": "sha1-wyq9C9ZSXZsFFkW7TyasXcmKDb8=",

"dev": true

},

- "map-obj": {

- "version": "1.0.1",

- "resolved": "https://registry.npmjs.org/map-obj/-/map-obj-1.0.1.tgz",

- "integrity": "sha1-2TPOuSBdgr3PSIb2dCvcK03qFG0="

- },

"map-visit": {

"version": "1.0.0",

"resolved": "https://registry.npmjs.org/map-visit/-/map-visit-1.0.0.tgz",

diff --git a/package.json b/package.json

index 5b8879485..8762dc117 100644

--- a/package.json

+++ b/package.json

@@ -1,6 +1,6 @@

{

"name": "@dvcorg/cml",

- "version": "0.4.3",

+ "version": "0.4.4",

"author": {

"name": "DVC",

"url": "http://cml.dev"

@@ -60,7 +60,6 @@

"dependencies": {

"@actions/core": "^1.2.5",

"@actions/github": "^4.0.0",

- "decamelize-keys": "^1.1.0",

"form-data": "^3.0.0",

"fs-extra": "^9.0.1",

"git-url-parse": "^11.4.0",

@@ -91,6 +90,6 @@

"lint-staged": "^10.0.8",

"prettier": "^2.1.1"

},

- "description": "

",

+ "description": "

",

"homepage": "https://github.com/iterative/cml#readme"

}

diff --git a/src/cml.js b/src/cml.js

index ed08d7c25..b7df63add 100644

--- a/src/cml.js

+++ b/src/cml.js

@@ -1,13 +1,13 @@

const { execSync } = require('child_process');

-const git_url_parse = require('git-url-parse');

-const strip_auth = require('strip-url-auth');

+const gitUrlParse = require('git-url-parse');

+const stripAuth = require('strip-url-auth');

const globby = require('globby');

const git = require('simple-git/promise')('./');

const Gitlab = require('./drivers/gitlab');

const Github = require('./drivers/github');

const BitBucketCloud = require('./drivers/bitbucket_cloud');

-const { upload, exec, watermark_uri } = require('./utils');

+const { upload, exec, watermarkUri } = require('./utils');

const {

GITHUB_REPOSITORY,

@@ -23,32 +23,32 @@ const GITHUB = 'github';

const GITLAB = 'gitlab';

const BB = 'bitbucket';

-const uri_no_trailing_slash = (uri) => {

+const uriNoTrailingSlash = (uri) => {

return uri.endsWith('/') ? uri.substr(0, uri.length - 1) : uri;

};

-const git_remote_url = (opts = {}) => {

+const gitRemoteUrl = (opts = {}) => {

const { remote = GIT_REMOTE } = opts;

const url = execSync(`git config --get remote.${remote}.url`).toString(

'utf8'

);

- return strip_auth(git_url_parse(url).toString('https').replace('.git', ''));

+ return stripAuth(gitUrlParse(url).toString('https'));

};

-const infer_token = () => {

+const inferToken = () => {

const {

REPO_TOKEN,

- repo_token,

+ repo_token: repoToken,

GITHUB_TOKEN,

GITLAB_TOKEN,

BITBUCKET_TOKEN

} = process.env;

return (

- REPO_TOKEN || repo_token || GITHUB_TOKEN || GITLAB_TOKEN || BITBUCKET_TOKEN

+ REPO_TOKEN || repoToken || GITHUB_TOKEN || GITLAB_TOKEN || BITBUCKET_TOKEN

);

};

-const infer_driver = (opts = {}) => {

+const inferDriver = (opts = {}) => {

const { repo } = opts;

if (repo && repo.includes('github.com')) return GITHUB;

if (repo && repo.includes('gitlab.com')) return GITLAB;

@@ -59,7 +59,7 @@ const infer_driver = (opts = {}) => {

if (BITBUCKET_REPO_UUID) return BB;

};

-const get_driver = (opts) => {

+const getDriver = (opts) => {

const { driver, repo, token } = opts;

if (!driver) throw new Error('driver not set');

@@ -74,58 +74,61 @@ class CML {

constructor(opts = {}) {

const { driver, repo, token } = opts;

- this.repo = uri_no_trailing_slash(repo || git_remote_url());

- this.token = token || infer_token();

- this.driver = driver || infer_driver({ repo: this.repo });

+ this.repo = uriNoTrailingSlash(repo || gitRemoteUrl()).replace(

+ /\.git$/,

+ ''

+ );

+ this.token = token || inferToken();

+ this.driver = driver || inferDriver({ repo: this.repo });

}

- async head_sha() {

- const { sha } = get_driver(this);

+ async headSha() {

+ const { sha } = getDriver(this);

return sha || (await exec(`git rev-parse HEAD`));

}

async branch() {

- const { branch } = get_driver(this);

+ const { branch } = getDriver(this);

return branch || (await exec(`git branch --show-current`));

}

- async comment_create(opts = {}) {

+ async commentCreate(opts = {}) {

const {

- report: user_report,

- commit_sha = await this.head_sha(),

- rm_watermark

+ report: userReport,

+ commitSha = await this.headSha(),

+ rmWatermark

} = opts;

- const watermark = rm_watermark

+ const watermark = rmWatermark

? ''

: ' \n\n ';

- const report = `${user_report}${watermark}`;

+ const report = `${userReport}${watermark}`;

- return await get_driver(this).comment_create({

+ return await getDriver(this).commentCreate({

...opts,

report,

- commit_sha

+ commitSha

});

}

- async check_create(opts = {}) {

- const { head_sha = await this.head_sha() } = opts;

+ async checkCreate(opts = {}) {

+ const { headSha = await this.headSha() } = opts;

- return await get_driver(this).check_create({ ...opts, head_sha });

+ return await getDriver(this).checkCreate({ ...opts, headSha });

}

async publish(opts = {}) {

- const { title = '', md, native, gitlab_uploads, rm_watermark } = opts;

+ const { title = '', md, native, gitlabUploads, rmWatermark } = opts;

let mime, uri;

- if (native || gitlab_uploads) {

- ({ mime, uri } = await get_driver(this).upload(opts));

+ if (native || gitlabUploads) {

+ ({ mime, uri } = await getDriver(this).upload(opts));

} else {

({ mime, uri } = await upload(opts));

}

- if (!rm_watermark) {

+ if (!rmWatermark) {

const [, type] = mime.split('/');

- uri = watermark_uri({ uri, type });

+ uri = watermarkUri({ uri, type });

}

if (md && mime.match('(image|video)/.*'))

@@ -136,11 +139,11 @@ class CML {

return uri;

}

- async runner_token() {

- return await get_driver(this).runner_token();

+ async runnerToken() {

+ return await getDriver(this).runnerToken();

}

- parse_runner_log(opts = {}) {

+ parseRunnerLog(opts = {}) {

let { data } = opts;

if (!data) return;

@@ -199,47 +202,47 @@ class CML {

}

}

- async start_runner(opts = {}) {

- return await get_driver(this).start_runner(opts);

+ async startRunner(opts = {}) {

+ return await getDriver(this).startRunner(opts);

}

- async register_runner(opts = {}) {

- return await get_driver(this).register_runner(opts);

+ async registerRunner(opts = {}) {

+ return await getDriver(this).registerRunner(opts);

}

- async unregister_runner(opts = {}) {

- return await get_driver(this).unregister_runner(opts);

+ async unregisterRunner(opts = {}) {

+ return await getDriver(this).unregisterRunner(opts);

}

- async runner_by_name(opts = {}) {

- return await get_driver(this).runner_by_name(opts);

+ async runnerByName(opts = {}) {

+ return await getDriver(this).runnerByName(opts);

}

- async runners_by_labels(opts = {}) {

- return await get_driver(this).runners_by_labels(opts);

+ async runnersByLabels(opts = {}) {

+ return await getDriver(this).runnersByLabels(opts);

}

- async repo_token_check() {

+ async repoTokenCheck() {

try {

- await this.runner_token();

+ await this.runnerToken();

} catch (err) {

- throw new Error(

- 'REPO_TOKEN does not have enough permissions to access workflow API'

- );

+ if (err.message === 'Bad credentials')

+ err.message += ', REPO_TOKEN should be a personal access token';

+ throw err;

}

}

- async pr_create(opts = {}) {

- const driver = get_driver(this);

+ async prCreate(opts = {}) {

+ const driver = getDriver(this);

const {

remote = GIT_REMOTE,

- user_email = GIT_USER_EMAIL,

- user_name = GIT_USER_NAME,

+ userEmail = GIT_USER_EMAIL,

+ userName = GIT_USER_NAME,

globs = ['dvc.lock', '.gitignore'],

md

} = opts;

- const render_pr = (url) => {

+ const renderPr = (url) => {

if (md)

return `[CML's ${

this.driver === GITLAB ? 'Merge' : 'Pull'

@@ -261,35 +264,35 @@ class CML {

return;

}

- const sha = await this.head_sha();

- const sha_short = sha.substr(0, 8);

+ const sha = await this.headSha();

+ const shaShort = sha.substr(0, 8);

const target = await this.branch();

- const source = `${target}-cml-pr-${sha_short}`;

+ const source = `${target}-cml-pr-${shaShort}`;

- const branch_exists = (

+ const branchExists = (

await exec(

`git ls-remote $(git config --get remote.${remote}.url) ${source}`

)

).includes(source);

- if (branch_exists) {

+ if (branchExists) {

const prs = await driver.prs();

const { url } =

prs.find(

(pr) => source.endsWith(pr.source) && target.endsWith(pr.target)

) || {};

- if (url) return render_pr(url);

+ if (url) return renderPr(url);

} else {

- await exec(`git config --local user.email "${user_email}"`);

- await exec(`git config --local user.name "${user_name}"`);

+ await exec(`git config --local user.email "${userEmail}"`);

+ await exec(`git config --local user.name "${userName}"`);

if (CI) {

if (this.driver === GITLAB) {

const repo = new URL(this.repo);

repo.password = this.token;

- repo.username = driver.user_name;

+ repo.username = driver.userName;

await exec(`git remote rm ${remote}`);

await exec(`git remote add ${remote} "${repo.toString()}.git"`);

@@ -299,26 +302,26 @@ class CML {

await exec(`git checkout -B ${target} ${sha}`);

await exec(`git checkout -b ${source}`);

await exec(`git add ${paths.join(' ')}`);

- await exec(`git commit -m "CML PR for ${sha_short} [skip ci]"`);

+ await exec(`git commit -m "CML PR for ${shaShort} [skip ci]"`);

await exec(`git push --set-upstream ${remote} ${source}`);

}

- const title = `CML PR for ${target} ${sha_short}`;

+ const title = `CML PR for ${target} ${shaShort}`;

const description = `

Automated commits for ${this.repo}/commit/${sha} created by CML.

`;

- const url = await driver.pr_create({

+ const url = await driver.prCreate({

source,

target,

title,

description

});

- return render_pr(url);

+ return renderPr(url);

}

- log_error(e) {

+ logError(e) {

console.error(e.message);

}

}
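The constructor change above moves `.git` stripping out of `gitRemoteUrl` and anchors it with `/\.git$/`. The old `.replace('.git', '')` removed the first `.git` substring anywhere in the URL, which corrupts repositories whose names merely contain `.git`, such as GitHub Pages repos. The sketch below compares the two behaviours. `normalizeRepo` and `oldNormalize` are hypothetical names used only for illustration.

```javascript
// Same trailing-slash helper as in src/cml.js.
const uriNoTrailingSlash = (uri) =>
  uri.endsWith('/') ? uri.substr(0, uri.length - 1) : uri;

// Hypothetical name: the two steps the new CML constructor applies to the repo URL,
// i.e. drop one trailing slash, then drop a ".git" suffix anchored at the end.
const normalizeRepo = (uri) => uriNoTrailingSlash(uri).replace(/\.git$/, '');

// Hypothetical name: the previous behaviour, which removed the first ".git"
// substring wherever it appeared in the URL.
const oldNormalize = (uri) => uri.replace('.git', '');
```

For example, `https://github.com/org/org.github.io` is left intact by `normalizeRepo`, while `oldNormalize` turns it into `https://github.com/org/orghub.io`.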

diff --git a/src/cml.test.js b/src/cml.test.js

index 5a6396921..b307b4923 100644

--- a/src/cml.test.js

+++ b/src/cml.test.js

@@ -60,21 +60,21 @@ describe('Github tests', () => {

test('Comment should succeed with a valid sha', async () => {

const report = '## Test comment';

- await new CML({ repo: REPO }).comment_create({ report, commit_sha: SHA });

+ await new CML({ repo: REPO }).commentCreate({ report, commitSha: SHA });

});

test('Comment should fail with a invalid sha', async () => {

- let catched_err;

+ let caughtErr;

try {

const report = '## Test comment';

- const commit_sha = 'invalid_sha';

+ const commitSha = 'invalid_sha';

- await new CML({ repo: REPO }).comment_create({ report, commit_sha });

+ await new CML({ repo: REPO }).commentCreate({ report, commitSha });

} catch (err) {

- catched_err = err.message;

+ caughtErr = err.message;

}

- expect(catched_err).toBe('No commit found for SHA: invalid_sha');

+ expect(caughtErr).toBe('No commit found for SHA: invalid_sha');

});

});

@@ -108,7 +108,7 @@ describe('Gitlab tests', () => {

const output = await new CML({ repo: REPO }).publish({

path,

- gitlab_uploads: true

+ gitlabUploads: true

});

expect(output.startsWith('https://')).toBe(true);

@@ -123,7 +123,7 @@ describe('Gitlab tests', () => {

path,

md: true,

title,

- gitlab_uploads: true

+ gitlabUploads: true

});

expect(output.startsWith(').toBe(true);

@@ -139,7 +139,7 @@ describe('Gitlab tests', () => {

path,

md: true,

title,

- gitlab_uploads: true

+ gitlabUploads: true

});

expect(output.startsWith(`[${title}](https://`)).toBe(true);

@@ -164,7 +164,7 @@ describe('Gitlab tests', () => {

});

test('Publish should fail with an invalid driver', async () => {

- let catched_err;

+ let caughtErr;

try {

const path = `${__dirname}/../assets/logo.pdf`;

await new CML({ repo: REPO, driver: 'invalid' }).publish({

@@ -173,28 +173,28 @@ describe('Gitlab tests', () => {

native: true

});

} catch (err) {

- catched_err = err.message;

+ caughtErr = err.message;

}

- expect(catched_err).not.toBeUndefined();

+ expect(caughtErr).not.toBeUndefined();

});

test('Comment should succeed with a valid sha', async () => {

const report = '## Test comment';

- await new CML({ repo: REPO }).comment_create({ report, commit_sha: SHA });

+ await new CML({ repo: REPO }).commentCreate({ report, commitSha: SHA });

});

test('Comment should fail with a invalid sha', async () => {

- let catched_err;

+ let caughtErr;

try {

const report = '## Test comment';

- const commit_sha = 'invalid_sha';

+ const commitSha = 'invalid_sha';

- await new CML({ repo: REPO }).comment_create({ report, commit_sha });

+ await new CML({ repo: REPO }).commentCreate({ report, commitSha });

} catch (err) {

- catched_err = err.message;

+ caughtErr = err.message;

}

- expect(catched_err).toBe('Not Found');

+ expect(caughtErr).toBe('Not Found');

});

});

diff --git a/src/drivers/bitbucket_cloud.js b/src/drivers/bitbucket_cloud.js

index 62ea008c6..1f6c097da 100644

--- a/src/drivers/bitbucket_cloud.js

+++ b/src/drivers/bitbucket_cloud.js

@@ -16,41 +16,41 @@ class BitBucketCloud {

const { protocol, host, pathname } = new URL(this.repo);

this.repo_origin = `${protocol}//${host}`;

this.api = 'https://api.bitbucket.org/2.0';

- this.project_path = encodeURIComponent(pathname.substring(1));

+ this.projectPath = encodeURIComponent(pathname.substring(1));

}

}

- async comment_create(opts = {}) {

- const { project_path } = this;

- const { commit_sha, report } = opts;

+ async commentCreate(opts = {}) {

+ const { projectPath } = this;

+ const { commitSha, report } = opts;

// Make a comment in the commit

- const commit_endpoint = `/repositories/${project_path}/commit/${commit_sha}/comments/`;

- const commit_body = JSON.stringify({ content: { raw: report } });

- const commit_output = await this.request({

- endpoint: commit_endpoint,

+ const commitEndpoint = `/repositories/${projectPath}/commit/${commitSha}/comments/`;

+ const commitBody = JSON.stringify({ content: { raw: report } });

+ const commitOutput = await this.request({

+ endpoint: commitEndpoint,

method: 'POST',

- body: commit_body

+ body: commitBody

});

// Check for a corresponding PR. If it exists, also put the comment there.

- const get_pr_endpt = `/repositories/${project_path}/commit/${commit_sha}/pullrequests`;

- const { values: prs } = await this.request({ endpoint: get_pr_endpt });

+ const getPrEndpt = `/repositories/${projectPath}/commit/${commitSha}/pullrequests`;

+ const { values: prs } = await this.request({ endpoint: getPrEndpt });

if (prs && prs.length) {

for (const pr of prs) {

try {

// Append a watermark to the report with a link to the commit

- const commit_link = commit_sha.substr(0, 7);

- const long_report = `${commit_link} \n${report}`;

- const pr_body = JSON.stringify({ content: { raw: long_report } });

+ const commitLink = commitSha.substr(0, 7);

+ const longReport = `${commitLink} \n${report}`;

+ const prBody = JSON.stringify({ content: { raw: longReport } });

// Write a comment on the PR

- const pr_endpoint = `/repositories/${project_path}/pullrequests/${pr.id}/comments`;

+ const prEndpoint = `/repositories/${projectPath}/pullrequests/${pr.id}/comments`;

await this.request({

- endpoint: pr_endpoint,

+ endpoint: prEndpoint,

method: 'POST',

- body: pr_body

+ body: prBody

});

} catch (err) {

console.debug(err.message);

@@ -58,10 +58,10 @@ class BitBucketCloud {

}

}

- return commit_output;

+ return commitOutput;

}

- async check_create() {

+ async checkCreate() {

throw new Error('BitBucket Cloud does not support check!');

}

@@ -69,28 +69,28 @@ class BitBucketCloud {

throw new Error('BitBucket Cloud does not support upload!');

}

- async runner_token() {

- throw new Error('BitBucket Cloud does not support runner_token!');

+ async runnerToken() {

+ throw new Error('BitBucket Cloud does not support runnerToken!');

}

- async register_runner(opts = {}) {

- throw new Error('BitBucket Cloud does not support register_runner!');

+ async registerRunner(opts = {}) {

+ throw new Error('BitBucket Cloud does not support registerRunner!');

}

- async unregister_runner(opts = {}) {

- throw new Error('BitBucket Cloud does not support unregister_runner!');

+ async unregisterRunner(opts = {}) {

+ throw new Error('BitBucket Cloud does not support unregisterRunner!');

}

- async runner_by_name(opts = {}) {

- throw new Error('BitBucket Cloud does not support runner_by_name!');

+ async runnerByName(opts = {}) {

+ throw new Error('BitBucket Cloud does not support runnerByName!');

}

- async runners_by_labels(opts = {}) {

+ async runnersByLabels(opts = {}) {

- throw new Error('BitBucket Cloud does not support runner_by_labels!');

+ throw new Error('BitBucket Cloud does not support runnersByLabels!');

}

- async pr_create(opts = {}) {

- const { project_path } = this;

+ async prCreate(opts = {}) {

+ const { projectPath } = this;

const { source, target, title, description } = opts;

const body = JSON.stringify({

@@ -107,7 +107,7 @@ class BitBucketCloud {

}

}

});

- const endpoint = `/repositories/${project_path}/pullrequests/`;

+ const endpoint = `/repositories/${projectPath}/pullrequests/`;

const {

links: {

html: { href }

@@ -122,10 +122,10 @@ class BitBucketCloud {

}

async prs(opts = {}) {

- const { project_path } = this;

+ const { projectPath } = this;

const { state = 'OPEN' } = opts;

- const endpoint = `/repositories/${project_path}/pullrequests?state=${state}`;

+ const endpoint = `/repositories/${projectPath}/pullrequests?state=${state}`;

const { values: prs } = await this.request({ endpoint });

return prs.map((pr) => {

@@ -178,9 +178,9 @@ class BitBucketCloud {

return BITBUCKET_BRANCH;

}

- get user_email() {}

+ get userEmail() {}

- get user_name() {}

+ get userName() {}

}

module.exports = BitBucketCloud;

diff --git a/src/drivers/bitbucket_cloud.test.js b/src/drivers/bitbucket_cloud.test.js

index 98fc8062a..f619ea876 100644

--- a/src/drivers/bitbucket_cloud.test.js

+++ b/src/drivers/bitbucket_cloud.test.js

@@ -13,12 +13,12 @@ describe('Non Enviromental tests', () => {

});

test('Comment', async () => {

const report = '## Test comment';

- const commit_sha = SHA;

+ const commitSha = SHA;

- await client.comment_create({ report, commit_sha });

+ await client.commentCreate({ report, commitSha });

});

test('Check', async () => {

- await expect(client.check_create()).rejects.toThrow(

+ await expect(client.checkCreate()).rejects.toThrow(

'BitBucket Cloud does not support check!'

);

});

@@ -29,8 +29,8 @@ describe('Non Enviromental tests', () => {

);

});

test('Runner token', async () => {

- await expect(client.runner_token()).rejects.toThrow(

- 'BitBucket Cloud does not support runner_token!'

+ await expect(client.runnerToken()).rejects.toThrow(

+ 'BitBucket Cloud does not support runnerToken!'

);

});

});

diff --git a/src/drivers/github.js b/src/drivers/github.js

index a77434400..140d52614 100644

--- a/src/drivers/github.js

+++ b/src/drivers/github.js

@@ -18,13 +18,13 @@ const {

GITHUB_EVENT_NAME

} = process.env;

-const branch_name = (branch) => {

+const branchName = (branch) => {

if (!branch) return;

return branch.replace(/refs\/(head|tag)s\//, '');

};

-const owner_repo = (opts) => {

+const ownerRepo = (opts) => {

let owner, repo;

const { uri } = opts;

@@ -41,16 +41,16 @@ const owner_repo = (opts) => {

const octokit = (token, repo) => {

if (!token) throw new Error('token not found');

- const octokit_options = {};

+ const octokitOptions = {};

if (!repo.includes('github.com')) {

// GitHub Enterprise, use the: repo URL host + '/api/v3' - as baseURL

// as per: https://developer.github.com/enterprise/v3/enterprise-admin/#endpoint-urls

const { host } = new url.URL(repo);

- octokit_options.baseUrl = `https://${host}/api/v3`;

+ octokitOptions.baseUrl = `https://${host}/api/v3`;

}

- return github.getOctokit(token, octokit_options);

+ return github.getOctokit(token, octokitOptions);

};

class Github {

@@ -64,30 +64,30 @@ class Github {

this.token = token;

}

- owner_repo(opts = {}) {

+ ownerRepo(opts = {}) {

const { uri = this.repo } = opts;

- return owner_repo({ uri });

+ return ownerRepo({ uri });

}

- async comment_create(opts = {}) {

- const { report: body, commit_sha } = opts;

+ async commentCreate(opts = {}) {

+ const { report: body, commitSha } = opts;

- const { url: commit_url } = await octokit(

+ const { url: commitUrl } = await octokit(

this.token,

this.repo

).repos.createCommitComment({

- ...owner_repo({ uri: this.repo }),

+ ...ownerRepo({ uri: this.repo }),

body,

- commit_sha

+ commit_sha: commitSha

});

- return commit_url;

+ return commitUrl;

}

- async check_create(opts = {}) {

+ async checkCreate(opts = {}) {

const {

report,

- head_sha,

+ headSha,

title = CHECK_TITLE,

started_at = new Date(),

completed_at = new Date(),

@@ -97,8 +97,8 @@ class Github {

const name = title;

return await octokit(this.token, this.repo).checks.create({

- ...owner_repo({ uri: this.repo }),

- head_sha,

+ ...ownerRepo({ uri: this.repo }),

+ head_sha: headSha,

started_at,

completed_at,

conclusion,

@@ -112,8 +112,8 @@ class Github {

throw new Error('Github does not support publish!');

}

- async runner_token() {

- const { owner, repo } = owner_repo({ uri: this.repo });

+ async runnerToken() {

+ const { owner, repo } = ownerRepo({ uri: this.repo });

const { actions } = octokit(this.token, this.repo);

if (typeof repo !== 'undefined') {

@@ -136,38 +136,38 @@ class Github {

return token;

}

- async register_runner() {

- throw new Error('Github does not support register_runner!');

+ async registerRunner() {

+ throw new Error('Github does not support registerRunner!');

}

- async unregister_runner(opts) {

+ async unregisterRunner(opts) {

const { name } = opts;

- const { owner, repo } = owner_repo({ uri: this.repo });

+ const { owner, repo } = ownerRepo({ uri: this.repo });

const { actions } = octokit(this.token, this.repo);

- const { id: runner_id } = await this.runner_by_name({ name });

+ const { id: runnerId } = await this.runnerByName({ name });

if (typeof repo !== 'undefined') {

await actions.deleteSelfHostedRunnerFromRepo({

owner,

repo,

- runner_id

+ runner_id: runnerId

});

} else {

await actions.deleteSelfHostedRunnerFromOrg({

org: owner,

- runner_id

+ runner_id: runnerId

});

}

}

- async start_runner(opts) {

+ async startRunner(opts) {

const { workdir, single, name, labels } = opts;

try {

- const runner_cfg = resolve(workdir, '.runner');

+ const runnerCfg = resolve(workdir, '.runner');

try {

- await fs.unlink(runner_cfg);

+ await fs.unlink(runnerCfg);

} catch (e) {

const arch = process.platform === 'darwin' ? 'osx-x64' : 'linux-x64';

const ver = '2.274.2';

@@ -182,7 +182,7 @@ class Github {

`${resolve(

workdir,

'config.sh'

- )} --token "${await this.runner_token()}" --url "${

+ )} --token "${await this.runnerToken()}" --url "${

this.repo

}" --name "${name}" --labels "${labels}" --work "${resolve(

workdir,

@@ -198,8 +198,8 @@ class Github {

}

}

- async get_runners(opts = {}) {

- const { owner, repo } = owner_repo({ uri: this.repo });

+ async getRunners(opts = {}) {

+ const { owner, repo } = ownerRepo({ uri: this.repo });

const { actions } = octokit(this.token, this.repo);

let runners = [];

@@ -223,16 +223,16 @@ class Github {

return runners;

}

- async runner_by_name(opts = {}) {

+ async runnerByName(opts = {}) {

const { name } = opts;

- const runners = await this.get_runners(opts);

+ const runners = await this.getRunners(opts);

const runner = runners.filter((runner) => runner.name === name)[0];

if (runner) return { id: runner.id, name: runner.name };

}

- async runners_by_labels(opts = {}) {

+ async runnersByLabels(opts = {}) {

const { labels } = opts;

- const runners = await this.get_runners(opts);

+ const runners = await this.getRunners(opts);

return runners

.filter((runner) =>

labels

@@ -244,13 +244,13 @@ class Github {

.map((runner) => ({ id: runner.id, name: runner.name }));

}

- async pr_create(opts = {}) {

+ async prCreate(opts = {}) {

const { source: head, target: base, title, description: body } = opts;

- const { owner, repo } = owner_repo({ uri: this.repo });

+ const { owner, repo } = ownerRepo({ uri: this.repo });

const { pulls } = octokit(this.token, this.repo);

const {

- data: { html_url }

+ data: { html_url: htmlUrl }

} = await pulls.create({

owner,

repo,

@@ -260,12 +260,12 @@ class Github {

body

});

- return html_url;

+ return htmlUrl;

}

async prs(opts = {}) {

const { state = 'open' } = opts;

- const { owner, repo } = owner_repo({ uri: this.repo });

+ const { owner, repo } = ownerRepo({ uri: this.repo });

const { pulls } = octokit(this.token, this.repo);

const { data: prs } = await pulls.list({

@@ -282,8 +282,8 @@ class Github {

} = pr;

return {

url,

- source: branch_name(source),

- target: branch_name(target)

+ source: branchName(source),

+ target: branchName(target)

};

});

}

@@ -296,14 +296,14 @@ class Github {

}

get branch() {

- return branch_name(GITHUB_REF);

+ return branchName(GITHUB_REF);

}

- get user_email() {

+ get userEmail() {

return 'action@github.com';

}

- get user_name() {

+ get userName() {

return 'GitHub Action';

}

}

diff --git a/src/drivers/github.test.js b/src/drivers/github.test.js

index 87e426885..6a9cf68f0 100644

--- a/src/drivers/github.test.js

+++ b/src/drivers/github.test.js

@@ -15,7 +15,7 @@ describe('Non Enviromental tests', () => {

expect(client.repo).toBe(REPO);

expect(client.token).toBe(TOKEN);

- const { owner, repo } = client.owner_repo();

+ const { owner, repo } = client.ownerRepo();

const parts = REPO.split('/');

expect(owner).toBe(parts[parts.length - 2]);

expect(repo).toBe(parts[parts.length - 1]);
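The renamed test checks that `ownerRepo()` returns the last two path segments of the repository URL as `owner` and `repo`. The hypothetical parser below satisfies the same assertions, assuming the repository is given as a URL like `https://github.com/<owner>/<repo>`.

```javascript
// Hypothetical parser; the real ownerRepo() lives in github.js and is not shown here.
function parseOwnerRepo(uri) {
  // Drop empty segments so a trailing slash does not produce an empty repo name.
  const parts = new URL(uri).pathname.split('/').filter(Boolean);
  return { owner: parts[parts.length - 2], repo: parts[parts.length - 1] };
}

const { owner, repo } = parseOwnerRepo('https://github.com/octo-org/octo-repo');
console.log(owner, repo); // octo-org octo-repo
```

The test itself uses `REPO.split('/')`. Parsing through `URL` ignores a trailing slash, and for a URL without one the two approaches agree.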

@@ -23,9 +23,9 @@ describe('Non Enviromental tests', () => {

test('Comment', async () => {

const report = '## Test comment';

- const commit_sha = SHA;

+ const commitSha = SHA;

- await client.comment_create({ report, commit_sha });

+ await client.commentCreate({ report, commitSha });

});
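Because `commentCreate` now destructures `commitSha` from its options object, every caller has to rename the key as well, as this test does. An old-style call still runs, but it silently passes `undefined`. The sketch below uses a hypothetical `commentTarget` function to show this failure mode and one way to catch it early. The guard is only an illustration. Nothing in this diff adds it.

```javascript
// Hypothetical function using the same option-destructuring style as commentCreate().
function commentTarget(opts = {}) {
  const { commitSha, report } = opts;
  // Illustrative guard: fail loudly instead of building a URL containing "undefined".
  if (!commitSha) throw new Error('commitSha is required');
  return { commitSha, report };
}

// New-style call works.
console.log(commentTarget({ commitSha: 'abc123', report: '## Test comment' }).commitSha); // abc123

// Old-style call: the snake_case key is simply ignored by the destructuring.
try {
  commentTarget({ commit_sha: 'abc123', report: '## Test comment' });
} catch (err) {
  console.log(err.message); // commitSha is required
}
```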

test('Publish', async () => {

@@ -35,7 +35,7 @@ describe('Non Enviromental tests', () => {

});

test('Runner token', async () => {

- const output = await client.runner_token();

+ const output = await client.runnerToken();

expect(output.length).toBe(29);

});

});

diff --git a/src/drivers/gitlab.js b/src/drivers/gitlab.js

index 0715a86e8..85a9dd677 100644

--- a/src/drivers/gitlab.js

+++ b/src/drivers/gitlab.js

@@ -6,7 +6,7 @@ const fs = require('fs').promises;

const fse = require('fs-extra');

const { resolve } = require('path');

-const { fetch_upload_data, download, exec } = require('../utils');

+const { fetchUploadData, download, exec } = require('../utils');

const {

IN_DOCKER,

@@ -27,20 +27,20 @@ class Gitlab {

this.repo = repo;

}

- async project_path() {

- const repo_base = await this.repo_base();

- const project_path = encodeURIComponent(

- this.repo.replace(repo_base, '').substr(1)

+ async projectPath() {

+ const repoBase = await this.repoBase();

+ const projectPath = encodeURIComponent(

+ this.repo.replace(repoBase, '').substr(1)

);

- return project_path;

+ return projectPath;

}
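`projectPath()` removes the detected API base from the repository URL, drops the leading slash, and URL-encodes what remains. GitLab's REST API accepts a URL-encoded `namespace/project` path in place of a numeric project ID. The synchronous sketch below takes the base as an argument instead of detecting it. `projectPathFrom` is a hypothetical name.

```javascript
// Hypothetical synchronous version of projectPath(); the base is passed in, not probed.
function projectPathFrom(repo, repoBase) {
  // 'https://gitlab.com/group/sub/project' minus 'https://gitlab.com' -> '/group/sub/project'
  const path = repo.replace(repoBase, '').slice(1);
  // GitLab expects the namespace/project path URL-encoded ("/" -> "%2F").
  return encodeURIComponent(path);
}

console.log(projectPathFrom('https://gitlab.com/group/sub/project', 'https://gitlab.com'));
// group%2Fsub%2Fproject
```

`slice(1)` behaves like the diff's `substr(1)` here. `substr` is a legacy method.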

- async repo_base() {

- if (this._detected_base) return this._detected_base;

+ async repoBase() {

+ if (this.detectedBase) return this.detectedBase;

const { origin, pathname } = new URL(this.repo);

- const possible_bases = await Promise.all(

+ const possibleBases = await Promise.all(

pathname

.split('/')

.filter(Boolean)

@@ -53,21 +53,28 @@ class Gitlab {

.version

)

return path;

- } catch (error) {}

+ } catch (error) {

+ return error;

+ }

})

);

- this._detected_base = possible_bases.find(Boolean);

- if (!this._detected_base) throw new Error('GitLab API not found');

+ this.detectedBase = possibleBases.find(

+ (base) => !(base instanceof Error)

+ );

+ if (!this.detectedBase) {

+ if (possibleBases.length) throw possibleBases[0];

+ throw new Error('Invalid repository address');

+ }

- return this._detected_base;

+ return this.detectedBase;

}
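`repoBase()` probes every candidate base in parallel, keeps the first one that answered, and otherwise rethrows the first failure. Part of how candidates are built and probed falls between hunks, so the sketch makes two assumptions. First, each candidate is the origin plus a leading prefix of the path. Second, the probe is an injected async function (hypothetical `probe`), whereas the real code inspects a `version` field in an API response. Failures are detected with `instanceof Error`, which also matches subclasses such as `TypeError`. A `constructor !== Error` comparison would not match them.

```javascript
// Hypothetical sketch of repoBase(); `probe` stands in for the real GitLab API check.
function candidateBases(repoUrl) {
  const { origin, pathname } = new URL(repoUrl);
  const parts = pathname.split('/').filter(Boolean);
  // Assumption: candidates are the origin plus each leading prefix of the path.
  return parts.map((_, i) => [origin, ...parts.slice(0, i)].join('/'));
}

async function detectBase(repoUrl, probe) {
  const results = await Promise.all(
    candidateBases(repoUrl).map(async (base) => {
      try {
        await probe(base); // expected to throw when no GitLab API answers at `base`
        return base;
      } catch (error) {
        return error; // keep the failure so it can be rethrown below
      }
    })
  );
  // instanceof also matches Error subclasses (TypeError, fetch errors, ...).
  const detected = results.find((result) => !(result instanceof Error));
  if (!detected) {
    if (results.length) throw results[0];
    throw new Error('Invalid repository address');
  }
  return detected;
}

// Fake probe: only https://example.com/gitlab hosts the API.
const probe = async (base) => {
  if (base !== 'https://example.com/gitlab') throw new TypeError('not GitLab');
};

detectBase('https://example.com/gitlab/group/project', probe).then(console.log);
// https://example.com/gitlab
```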

- async comment_create(opts = {}) {

- const { commit_sha, report } = opts;

+ async commentCreate(opts = {}) {

+ const { commitSha, report } = opts;

- const project_path = await this.project_path();

- const endpoint = `/projects/${project_path}/repository/commits/${commit_sha}/comments`;

+ const projectPath = await this.projectPath();

+ const endpoint = `/projects/${projectPath}/repository/commits/${commitSha}/comments`;

const body = new URLSearchParams();

body.append('note', report);
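`commentCreate()` posts to GitLab's commit-comments endpoint with a form-encoded `note` field. The sketch below only assembles the endpoint and body and makes no network call. `buildCommitComment` is a hypothetical name, and the project path and SHA are placeholders.

```javascript
// Hypothetical helper that assembles what commentCreate() sends; nothing is sent here.
function buildCommitComment({ projectPath, commitSha, report }) {
  const endpoint = `/projects/${projectPath}/repository/commits/${commitSha}/comments`;
  const body = new URLSearchParams();
  body.append('note', report); // serialized as application/x-www-form-urlencoded
  return { endpoint, body };
}

const { endpoint, body } = buildCommitComment({
  projectPath: encodeURIComponent('group/project'),
  commitSha: 'abc123',
  report: '## Test comment'
});
console.log(endpoint); // /projects/group%2Fproject/repository/commits/abc123/comments
console.log(body.toString()); // note=%23%23+Test+comment
```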

@@ -76,16 +83,16 @@ class Gitlab {

return output;

}

- async check_create() {

+ async checkCreate() {

throw new Error('Gitlab does not support check!');

}

async upload(opts = {}) {

const { repo } = this;

- const project_path = await this.project_path();

- const endpoint = `/projects/${project_path}/uploads`;

- const { size, mime, data } = await fetch_upload_data(opts);

+ const projectPath = await this.projectPath();

+ const endpoint = `/projects/${projectPath}/uploads`;

+ const { size, mime, data } = await fetchUploadData(opts);

const body = new FormData();

body.append('file', data);

@@ -94,19 +101,19 @@ class Gitlab {

return { uri: `${repo}${url}`, mime, size };

}

- async runner_token() {

- const project_path = await this.project_path();

- const endpoint = `/projects/${project_path}`;

+ async runnerToken() {

+ const projectPath = await this.projectPath();

+ const endpoint = `/projects/${projectPath}`;

- const { runners_token } = await this.request({ endpoint });

+ const { runners_token: runnersToken } = await this.request({ endpoint });

- return runners_token;

+ return runnersToken;

}

- async register_runner(opts = {}) {

+ async registerRunner(opts = {}) {

const { tags, name } = opts;

- const token = await this.runner_token();

+ const token = await this.runnerToken();

const endpoint = `/runners`;