diff --git a/src/css/custom.css b/src/css/custom.css

index 1df207d36..a3dcdd48d 100644

--- a/src/css/custom.css

+++ b/src/css/custom.css

@@ -1465,11 +1465,11 @@ article.markdown p {

/* Heading hierarchy - clear visual distinction */

article.markdown h1 {

margin-top: 0;

- margin-bottom: 1rem;

+ margin-bottom: 0.9rem;

font-size: 2.5rem;

font-weight: 800;

letter-spacing: -0.03em;

- line-height: 1.2;

+ line-height: 1.4;

color: #111827;

}

diff --git a/src/theme/Heading/styles.module.css b/src/theme/Heading/styles.module.css

index ba959ca0e..6c7043c19 100644

--- a/src/theme/Heading/styles.module.css

+++ b/src/theme/Heading/styles.module.css

@@ -4,7 +4,7 @@ the browser does not scroll that anchor behind the navbar

See https://twitter.com/JoshWComeau/status/1332015868725891076

*/

.anchorWithStickyNavbar {

- scroll-margin-top: calc(var(--ifm-navbar-height) + 0.5rem);

+ scroll-margin-top: calc(var(--ifm-navbar-height) + 0.2rem);

}

.anchorWithHideOnScrollNavbar {

diff --git a/static/img/custom-function.png b/static/img/custom-function.png

new file mode 100644

index 000000000..f747d03be

Binary files /dev/null and b/static/img/custom-function.png differ

diff --git a/static/img/global-functions.png b/static/img/global-functions.png

new file mode 100644

index 000000000..ad98ac8aa

Binary files /dev/null and b/static/img/global-functions.png differ

diff --git a/static/img/global-vars.png b/static/img/global-vars.png

new file mode 100644

index 000000000..6d2225f05

Binary files /dev/null and b/static/img/global-vars.png differ

diff --git a/static/img/multi-assertion.png b/static/img/multi-assertion.png

new file mode 100644

index 000000000..9dc739f9c

Binary files /dev/null and b/static/img/multi-assertion.png differ

diff --git a/static/img/schema-assertion.png b/static/img/schema-assertion.png

new file mode 100644

index 000000000..cfc3d765a

Binary files /dev/null and b/static/img/schema-assertion.png differ

diff --git a/static/img/var-extraction.png b/static/img/var-extraction.png

new file mode 100644

index 000000000..c8e2079c8

Binary files /dev/null and b/static/img/var-extraction.png differ

diff --git a/static/img/var-injection.png b/static/img/var-injection.png

new file mode 100644

index 000000000..7b15c7eb7

Binary files /dev/null and b/static/img/var-injection.png differ

diff --git a/tailwind.config.js b/tailwind.config.js

index 252cdaf25..9703b5dc6 100644

--- a/tailwind.config.js

+++ b/tailwind.config.js

@@ -28,6 +28,7 @@ module.exports = {

color: "var(--ifm-color)",

h1: {

color: "var(--ifm-color)",

+ lineHeight: "1.5",

},

h2: {

color: "var(--ifm-color)",

@@ -98,6 +99,13 @@ module.exports = {

},

},

},

+ lg: {

+ css: {

+ h1: {

+ lineHeight: "1.2",

+ },

+ },

+ },

},

transitionDelay: {

3000: "3000ms",

diff --git a/versioned_docs/version-3.0.0/running-keploy/generate-api-tests-using-ai.md b/versioned_docs/version-3.0.0/running-keploy/generate-api-tests-using-ai.md

index abc6d846c..359c777a5 100644

--- a/versioned_docs/version-3.0.0/running-keploy/generate-api-tests-using-ai.md

+++ b/versioned_docs/version-3.0.0/running-keploy/generate-api-tests-using-ai.md

@@ -17,13 +17,15 @@ This guide will help you generate automated API tests using Keploy's AI-based te

- Go to the [Keploy Console](https://app.keploy.io/api-testing/generate)

- Upload your API input (schema, collection, or curl)

-- 👊 Hit **Generate Tests** and Keploy will parse your input, hit the API, and generate validated test flows with response-based assertions.

+- 👊 Hit **Generate Tests**. Keploy's engine sends live requests to your target environment and captures the full execution context, including the API response and the resulting

+ dependency interactions (DB queries, internal gRPC calls), to create a deterministic 'Digital Twin' of each transaction.

- If private endpoints are detected, follow the agent setup prompt

- Review and edit your generated tests

## ⚠️ Private Endpoint API Warning

-If your API is not publicly accessible, Keploy will show a warning when attempting to reach those endpoints. **To resolve this**, install the Keploy Agent proxy locally

+If your API is not publicly accessible, Keploy will show a warning when attempting to reach those endpoints. **To resolve this**, install the Keploy Agent proxy locally.

+The Agent acts as a local interceptor that uses eBPF to record your service's interactions with its dependencies (databases, APIs), so tests can be replayed without recreating that environment.

### Keploy Agent Installation

@@ -33,7 +35,8 @@ If your API is not publicly accessible, Keploy will show a warning when attempti

| **Linux** | - Run the following in your terminal:

`curl -L -O https://keploy-enterprise.s3.us-west-2.amazonaws.com/releases/latest/keploy-agent-linux-amd64.tar.gz`

`tar -xzf keploy-agent-linux-amd64.tar.gz`

`./keploy-agent`

|

| **Windows** | - [Download](https://keploy-enterprise.s3.us-west-2.amazonaws.com/releases/latest/Keploy+Agent-windows_amd64.zip)

Extract the zip file and Launch the Keploy Agent.

|

-> ✅ Once installed and running, return to the Keploy Console and hit **Generate API Tests**. The agent will proxy your local API to allow secure test generation.

+> ✅ Once installed and running, return to the Keploy Console and hit **Generate API Tests**. The Keploy Agent creates a secure bridge to your local or private

+ environment, enabling our engine to interact with your service as if it were in production and to capture the underlying dependency calls (DB, third-party APIs) without code changes.

## BEST Practices for BEST Test Output

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-add-suite.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-add-suite.md

new file mode 100644

index 000000000..3402aec8b

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-add-suite.md

@@ -0,0 +1,67 @@

+---

+id: api-testing-add-suite

+title: Adding New Test Suites

+description: Guide to adding new test suites using "Add New"

+sidebar_label: Adding New Suite

+tags:

+ - api-testing

+ - test-organization

+ - test-suite

+ - test-management

+---

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

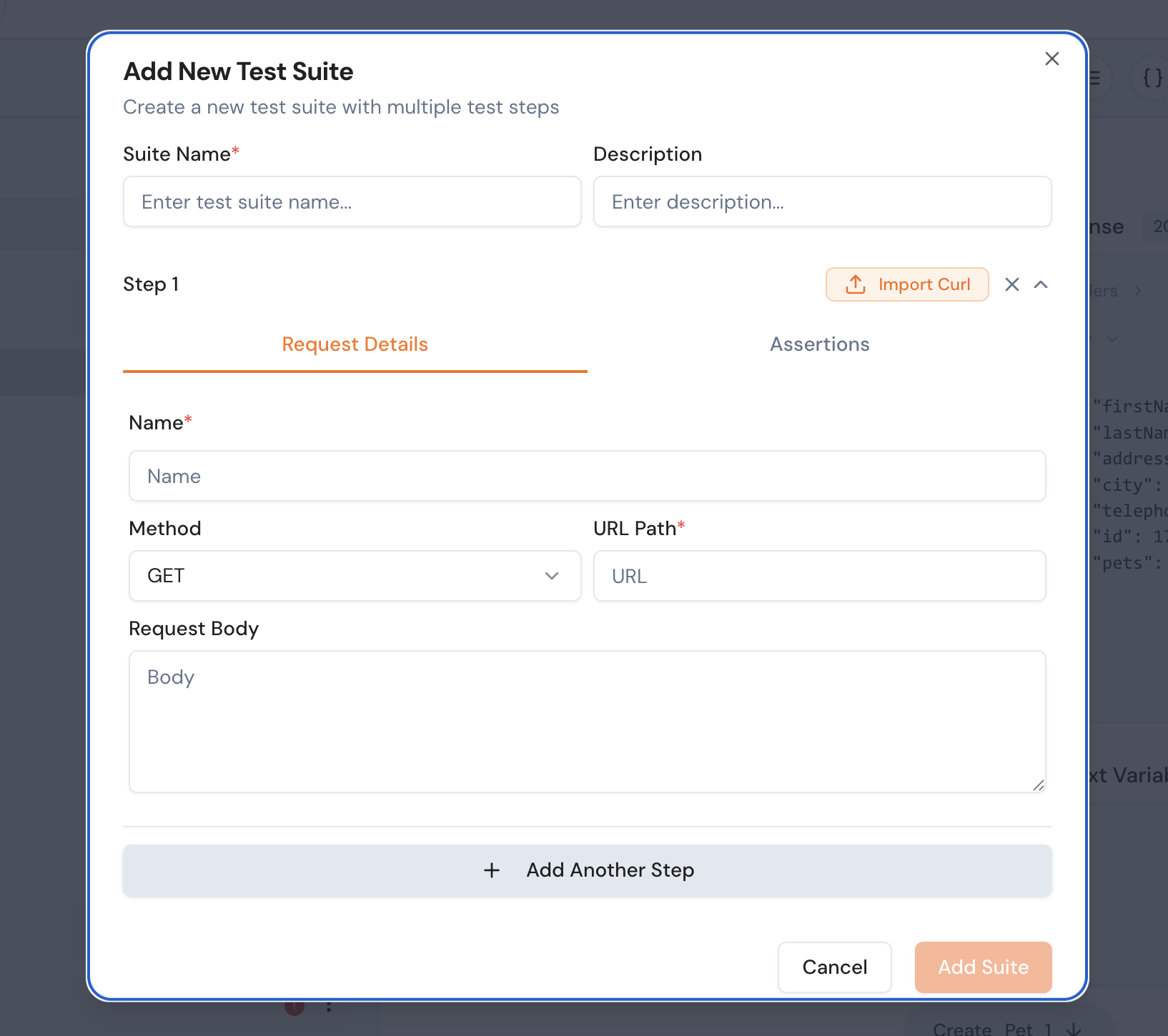

+In this guide, we will walk through the process of adding a test suite in Keploy. Users can either manually provide the details for the test suite or directly import a curl command to create one.

+

+## Steps to Add a Test Suite

+

+1. **Click on the Plus Button**

+ - Navigate to the test suite section in the Keploy interface.

+ - Click on the `+` button to add a new test suite.

+

+2. **Provide Test Suite Details**

+ - Fill in the following fields:

+ - **Name**: Enter a unique name for the test suite.

+ - **Details**: Provide a brief description of the test suite.

+ - **Request**: Specify the request details, such as the HTTP method, URL, headers, and body.

+ - **Assertions**: Define the assertions to run against the test suite.

+

+

+3. **Import a Curl Command (Optional)**

+ - If you have a curl command, you can directly import it to create the test suite.

+ - Paste the curl command in the provided input field.

+ - Keploy will automatically parse the curl command and populate the test suite details.

+

+4. **Save the Test Suite**

+ - Once all the details are filled in, click on the `Save` button to create the test suite.

+

+## Example

+

+### Manual Entry

+```json

+{

+ "name": "User Authentication",

+ "details": "Tests the login functionality.",

+ "request": {

+ "method": "POST",

+ "url": "https://api.example.com/login",

+ "headers": {

+ "Content-Type": "application/json"

+ },

+ "body": {

+ "username": "test_user",

+ "password": "secure_password"

+ }

+ }

+}

+```

+

+### Importing a Curl Command

+```bash

+curl -X POST https://api.example.com/login \

+ -H "Content-Type: application/json" \

+ -d '{"username": "test_user", "password": "secure_password"}'

+```
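To make the curl import step more concrete, here is a minimal sketch of how a command like the one above could be mapped onto the suite's method, URL, headers, and body. `parseCurl` is a hypothetical helper written for illustration. It is not Keploy's importer, and it handles only a bare URL and the `-X`, `-H`, and `-d` flags.

```javascript
// Hypothetical helper for illustration only: this is NOT Keploy's curl importer.
// It understands a bare URL plus the -X/--request, -H/--header and -d/--data flags.
function parseCurl(command) {
  // Join lines continued with a trailing backslash into a single line.
  const flat = command.replace(/\\\r?\n/g, " ");

  // Tokenize, keeping single- and double-quoted arguments intact.
  const tokens = [];
  const re = /'([^']*)'|"((?:[^"\\]|\\.)*)"|(\S+)/g;
  let m;
  while ((m = re.exec(flat)) !== null) {
    tokens.push(m[1] ?? m[2] ?? m[3]);
  }

  const request = { method: null, url: null, headers: {}, body: null };
  // tokens[0] is "curl", so start scanning at index 1.
  for (let i = 1; i < tokens.length; i++) {
    const t = tokens[i];
    if (t === "-X" || t === "--request") {
      request.method = tokens[++i].toUpperCase();
    } else if (t === "-H" || t === "--header") {
      const header = tokens[++i];
      const sep = header.indexOf(":");
      request.headers[header.slice(0, sep).trim()] = header.slice(sep + 1).trim();
    } else if (t === "-d" || t === "--data") {
      const raw = tokens[++i];
      try {
        request.body = JSON.parse(raw); // structured body when it is valid JSON
      } catch {
        request.body = raw; // otherwise keep the raw string
      }
    } else if (!t.startsWith("-") && request.url === null) {
      request.url = t; // first non-flag argument is the URL
    }
  }
  // curl defaults to GET, or to POST when a body is supplied with -d.
  if (request.method === null) {
    request.method = request.body === null ? "GET" : "POST";
  }
  return request;
}
```

The default-method rule mirrors curl's own behavior. Without `-X`, curl sends GET, or POST when `-d` supplies a body.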

+

+By following these steps, you can easily create and manage test suites in Keploy.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-adding-labels.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-adding-labels.md

new file mode 100644

index 000000000..de7c2a91c

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-adding-labels.md

@@ -0,0 +1,134 @@

+---

+id: api-testing-adding-labels

+title: Adding Labels to Test Suites

+description: Guide to creating and assigning labels to test suites individually or in bulk

+sidebar_label: Adding Labels

+tags:

+ - api-testing

+ - test-organization

+ - labels

+ - test-management

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

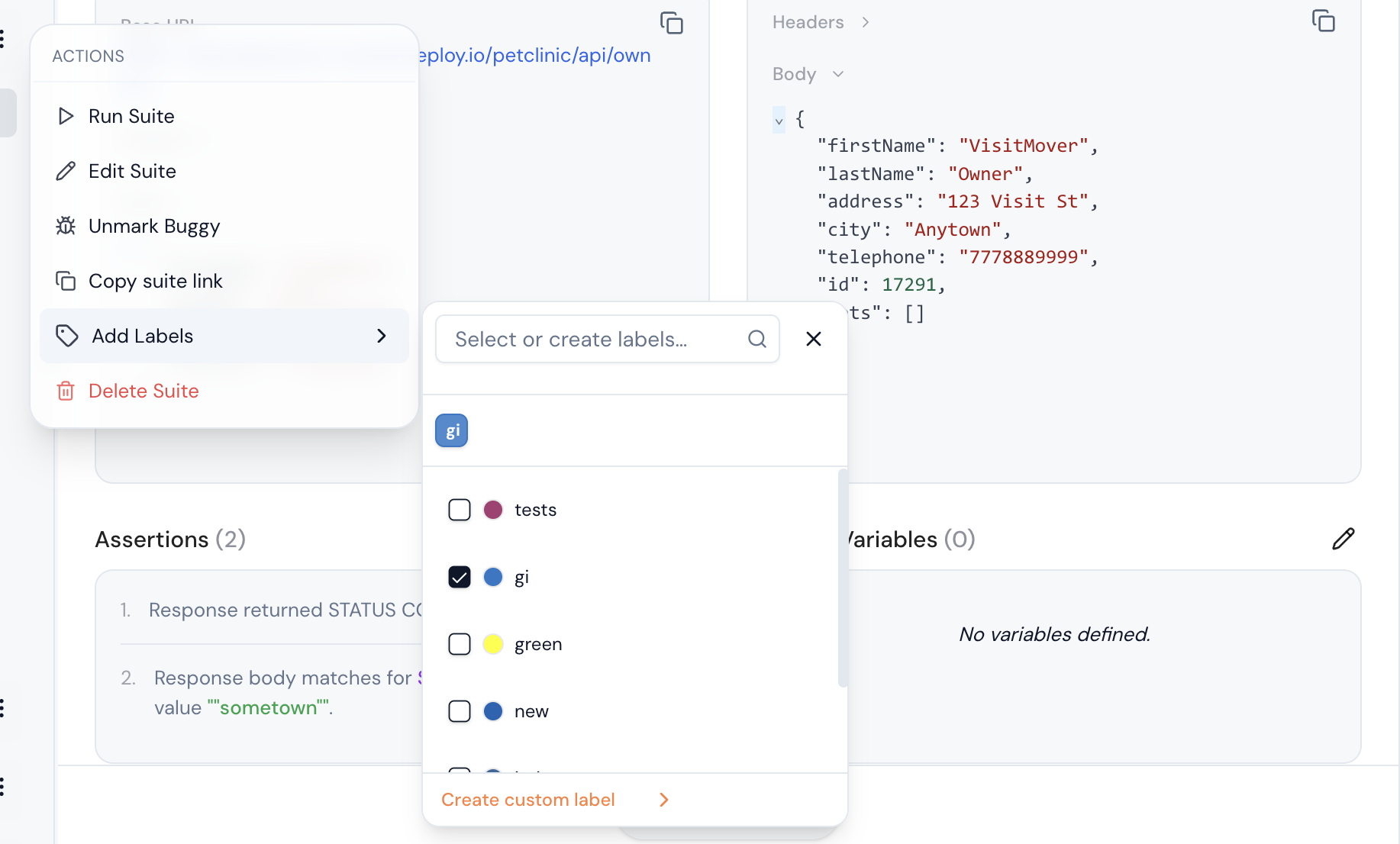

+Labels help you organize, categorize, and filter your test suites effectively. Keploy provides flexible labeling options that allow you to add labels to individual test suites or multiple suites at once.

+

+## Overview

+

+The labeling system includes:

+

+- **Individual Labeling**: Add labels to specific test suites

+- **Bulk Labeling**: Apply labels to multiple suites simultaneously

+- **Label Management**: Create, edit, and delete custom labels

+- **Filtering**: Use labels to filter and organize your test collection

+

+## Adding Labels to Individual Test Suites

+

+### Method 1: Using the Three Dots Menu

+

+1. **Navigate to Test Suites**

+ - Go to your Test Suites Dashboard

+

+2. **Access Suite Options**

+ - Locate the test suite you want to label

+ - Click the **three dots (⋮)** menu next to the test suite name

+

+3. **Select Add Label Option**

+ - From the dropdown menu, click **"Add Labels"**

+

+

+

+## Label Assignment Interface

+

+When you open the label assignment dialog, you'll see:

+

+### Existing Labels Section

+```

+🏷️ Available Labels

+├── 📊 Priority

+│ ├── high-priority (P0)

+│ ├── medium-priority (P1)

+│ └── low-priority (P2)

+```

+

+### Assigning Existing Labels

+

+1. **Browse Categories**

+ - Expand label categories to see available options

+ - Use the search box to find specific labels quickly

+

+2. **Select Labels**

+ - Click on labels to select them

+ - Selected labels will be highlighted or marked with a checkmark ✓

+ - You can select multiple labels from different categories

+

+3. **Apply Labels**

+ - Review your selections in the "Selected Labels" preview

+ - Click **"Apply Labels"** to assign them to the test suite

+

+## Creating New Labels

+

+### Creating During Assignment

+

+1. **Open Label Dialog**

+ - Follow the steps above to open the label assignment interface

+

+2. **Create New Label**

+ - Click **"Create Custom Label"**

+ - Enter label details in the creation form

+

+3. **Label Creation Form**

+ - For example:

+ ```

+ Label Name: [smoke-critical]

+ Color: [🔴] (color picker)

+ ```

+

+## Bulk Label Assignment

+

+### Using Checkbox Selection

+

+1. **Select Multiple Suites**

+ - Navigate to the Test Suites list

+ - Use checkboxes to select multiple test suites

+ - After selecting suites, click the **"Add Labels"** or **"Create Custom Labels"** button

+

+## Label Management Best Practices

+

+### Naming Conventions

+

+1. **Use Consistent Formatting**

+ ```

+ ✅ Good Examples:

+ - team-frontend

+ - priority-high

+ - env-production

+ - type-smoke-test

+

+ ❌ Avoid:

+ - TeamFrontend

+ - HIGH_PRIORITY

+ - prod env

+ - smoke test type

+ ```

+

+2. **Category-Based Organization**

+ ```

+ Priority: critical, high, medium, low

+ Environment: production, staging, development

+ Type: smoke, regression, integration, e2e

+ Team: frontend, backend, qa, devops

+ Status: active, deprecated, experimental

+ ```
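To check label names before you create them, you can express the convention above as a single pattern: lowercase letters and digits, separated by single hyphens. The sketch below is a hypothetical client-side helper, not part of Keploy. `normalizeLabel` fixes only formatting (case, spaces, underscores) and does not reorder words, so `HIGH_PRIORITY` becomes `high-priority` rather than `priority-high`.

```javascript
// Hypothetical helper, not a Keploy feature: enforces the lowercase,
// hyphen-separated naming convention described above.
const LABEL_PATTERN = /^[a-z0-9]+(?:-[a-z0-9]+)*$/;

function isValidLabel(name) {
  return LABEL_PATTERN.test(name);
}

// Suggest a convention-compliant form of a label name.
function normalizeLabel(name) {
  return name
    .replace(/([a-z0-9])([A-Z])/g, "$1-$2") // split camelCase / PascalCase words
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // spaces, underscores, etc. become one hyphen
    .replace(/^-+|-+$/g, ""); // trim leading/trailing hyphens
}
```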

+

+## Related Features

+

+- **[Test Suite Management](./api-testing-edit-suites.md)**: Edit and organize test suites

+- **[Selective Test Execution](./api-testing-running-selective.md)**: Run tests using label filters

+- **[Test Reports](./api-testing-sharing-reports.md)**: Generate reports filtered by labels

+- **[Custom Assertions](./api-testing-custom-assertions.md)**: Create assertions for labeled suites

+

+Labels are a powerful organizational tool that helps you keep large test collections in order and enables efficient test management workflows.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-assertion-tree.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-assertion-tree.md

new file mode 100644

index 000000000..a89527c4b

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-assertion-tree.md

@@ -0,0 +1,115 @@

+---

+id: api-testing-assertion-tree

+title: Assertion Tree

+sidebar_label: Assertion Tree

+description: Visualize and manage your entire test flow in a structured tree format

+tags:

+ - API testing

+ - test visualization

+ - assertion tree

+ - test flow

+ - automation

+keywords:

+ - test suite visualization

+ - assertion tree

+ - API flow

+ - test step editor

+ - visual test builder

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

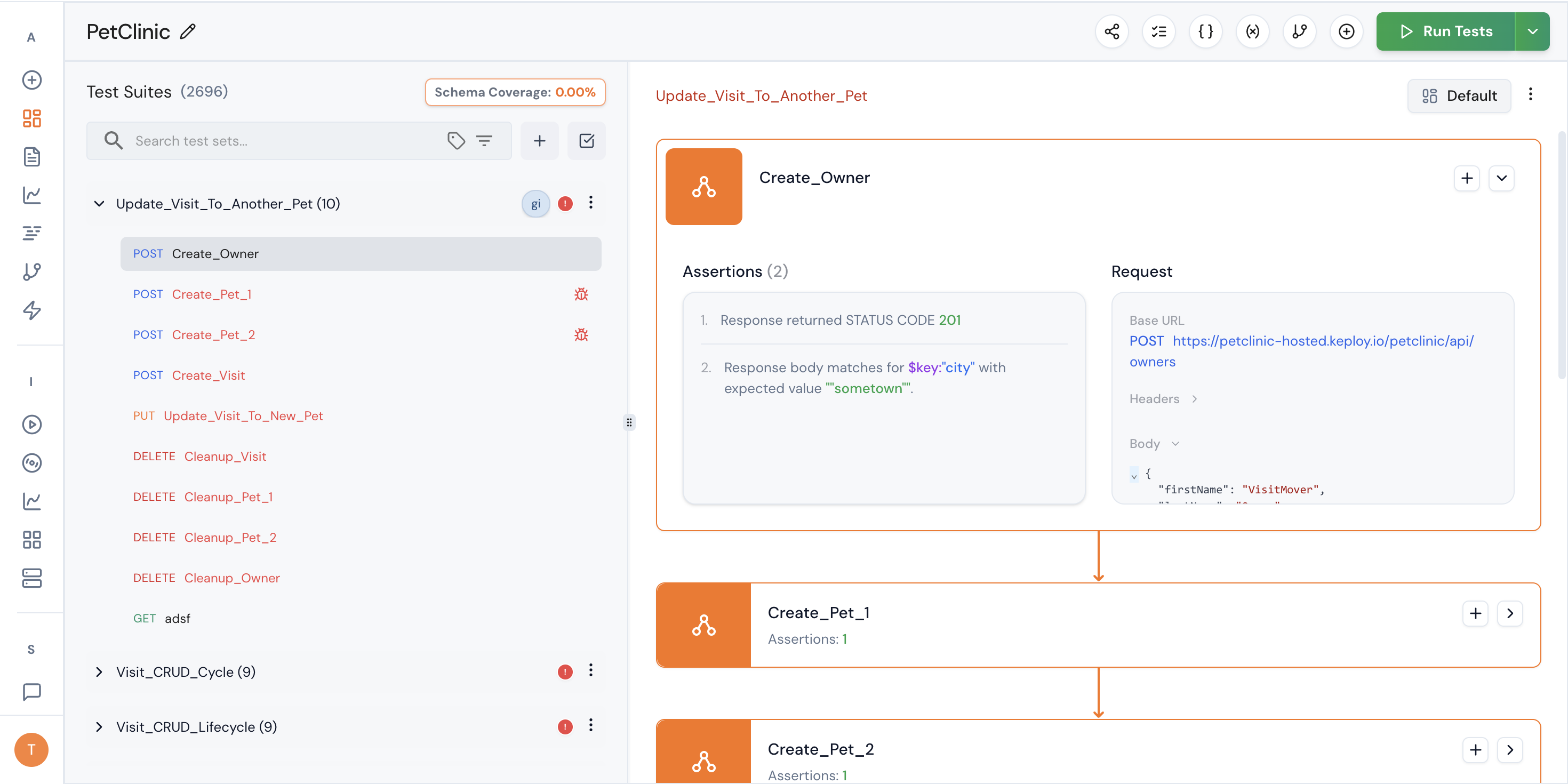

+## Assertion Tree

+

+The **Assertion Tree** allows you to visualize and manage your entire test suite in a structured, hierarchical format.

+

+Instead of viewing tests as isolated steps, the Assertion Tree gives you a complete flow-level perspective — including requests, responses, and assertions — in one interactive interface.

+

+---

+

+## How to Access the Assertion Tree

+

+1. Navigate to an individual **Test Suite**

+2. Click on the **"Visualize"** button

+3. The system renders the full test suite in a **tree format**

+

+---

+

+

+## What You Can See

+

+The Assertion Tree provides a visual representation of:

+

+- All test steps in execution order

+- Request details for each step

+- Attached assertions

+

+Each node in the tree represents a test step and contains:

+

+- Request configuration

+- Associated assertions

+- Execution dependencies

+

+This makes it easier to understand how your test suite behaves as a complete workflow.

+

+## What You Can Do

+

+The Assertion Tree is fully interactive. You can:

+

+### 1. View Complete Flow

+Understand the entire API workflow from start to finish without switching between screens.

+

+### 2. Inspect Assertions Inline

+Quickly see which assertions are attached to each step, including:

+

+- Status code validations

+- JSON validations

+- Header validations

+- Schema validations

+- Custom function validations

+

+### 3. Add a New Step in the Flow

+

+You can insert a new test step directly within the tree.

+

+This allows you to:

+

+- Expand an existing workflow

+- Add conditional validation steps

+- Introduce additional API calls

+- Build multi-step integration flows

+

+The new step becomes part of the structured execution sequence.

+

+## Why Use the Assertion Tree?

+

+The Assertion Tree is particularly useful when:

+

+- Your test suite contains multiple API calls

+- You are testing end-to-end workflows

+- Business logic spans multiple requests

+- You need clarity on how validations are structured

+- You want a visual representation instead of linear editing

+

+It transforms test management from a flat list into a structured execution graph.

+

+## Typical Use Cases

+

+- Authentication → Resource Creation → Validation → Cleanup flows

+- Multi-step payment processing validations

+- E-commerce checkout journeys

+- Webhook-triggered event testing

+- Integration testing across services

+

+## Best Practices

+

+- Use the tree view to design full workflows before adding assertions

+- Keep each step focused on a single responsibility

+- Attach assertions at the correct step level

+- Review flow dependencies to avoid unintended execution order

+- Use visualization to debug failing multi-step tests faster
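To illustrate the practice of reviewing flow dependencies, the sketch below models a flow as an ordered list of steps. Each step names the steps it depends on, and the function reports any step that runs before one of its dependencies. The `{ name, dependsOn }` shape and `findOrderingProblems` are assumptions made for illustration. They are not Keploy's internal representation of the Assertion Tree.

```javascript
// Hypothetical flow model: each step lists the steps whose output it needs
// (for example, an auth token). This is NOT Keploy's internal format.
function findOrderingProblems(steps) {
  const seen = new Set(); // names of steps that have already run
  const problems = [];
  for (const step of steps) {
    for (const dep of step.dependsOn ?? []) {
      if (!seen.has(dep)) {
        problems.push(`${step.name} depends on ${dep}, which has not run yet`);
      }
    }
    seen.add(step.name);
  }
  return problems;
}
```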

+

+

+The Assertion Tree enables you to design, inspect, and extend complex API workflows with clarity and precision — all from a single visual interface.

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-buggy-suites.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-buggy-suites.md

new file mode 100644

index 000000000..31f3289fe

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-buggy-suites.md

@@ -0,0 +1,137 @@

+---

+id: api-testing-buggy-suites

+title: Buggy Test Suites

+description: Guide to viewing and debugging failed test suites generated by Keploy

+sidebar_label: Buggy Suites

+tags:

+ - api-testing

+ - debugging

+ - test-failures

+ - troubleshooting

+---

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+When Keploy generates tests, some test cases might fail due to various reasons such as endpoint issues, data mismatches, or API changes. The buggy test suites page helps you identify, understand, and fix these failing tests.

+

+## Viewing Buggy Test Suites

+

+Test suites that contain failing test cases are marked with a red exclamation icon in the list of generated test suites. Each buggy suite displays:

+

+- **Suite Name**: The name of the test suite containing failed tests

+- **Test Steps**: Steps in the suite

+- **Failure Reason**: Reason why the test suite is buggy

+

+## Understanding Failure Reasons

+

+For each buggy test suite, you can view a detailed explanation of why its tests are marked as buggy. Common failure reasons include:

+

+### 1. Endpoint Not Found (404 Errors)

+

+**Example Failure Reason:**

+```

+The response returned a 404 status code for the 'Create Owner' step, indicating the endpoint '/owners' was not found. This contradicts the documented cURL examples and schema, which show that this endpoint should exist and return a 201 status code upon successful creation.

+```

+

+**What this means:**

+- The API endpoint that was working during recording is no longer available

+- The endpoint URL might have changed

+- The API server might be down or misconfigured

+

+**How to fix:**

+1. Verify the endpoint URL is correct

+2. Check if the API server is running

+3. Review API documentation for any endpoint changes

+4. Update the test suite if the endpoint has moved

+

+### 2. Schema Validation Failures

+

+**Example Failure Reason:**

+```

+Response schema validation failed. Expected property 'id' of type 'number' but received 'string'. The API response structure has changed from the recorded version.

+```

+

+**What this means:**

+- The API response format has changed since recording

+- Data types don't match the expected schema

+- New required fields might have been added
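A type check like the one behind the example message above can be sketched in a few lines. This is an illustrative helper with assumed names (`checkFieldTypes`, and an `expectedTypes` map from field name to type name). It is not Keploy's schema validator. It extends `typeof` with `array` and `null` so that those cases are reported separately.

```javascript
// Illustrative sketch, not Keploy's validator: compare each expected field
// type against the value the API actually returned.
function checkFieldTypes(expectedTypes, body) {
  const failures = [];
  for (const [field, expected] of Object.entries(expectedTypes)) {
    if (!(field in body)) {
      failures.push(`Missing required property '${field}'`);
      continue;
    }
    const value = body[field];
    // typeof reports "object" for arrays and null, so distinguish them explicitly.
    const actual = value === null ? "null" : Array.isArray(value) ? "array" : typeof value;
    if (actual !== expected) {
      failures.push(`Expected property '${field}' of type '${expected}' but received '${actual}'`);
    }
  }
  return failures;
}
```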

+

+### 3. Authentication Issues

+

+**Example Failure Reason:**

+```

+Authentication failed with 401 Unauthorized. The API key or token used during recording may have expired or been revoked.

+```

+

+**What this means:**

+- API credentials have expired or changed

+- Authentication method has been updated

+- Permission levels may have changed

+

+## Assertion Failures

+

+The buggy suites page provides detailed assertion failure information to help you understand exactly what went wrong:

+

+### Response Status Assertions

+```yaml

+Expected: 201 Created

+Actual: 404 Not Found

+Assertion: status_code_equals

+Message: The endpoint returned an unexpected status code

+```

+

+### Response Body Assertions

+```yaml

+Expected: {"id": 123, "name": "John Doe", "email": "john@example.com"}

+Actual: {"error": "User not found", "code": 404}

+Assertion: json_body_equals

+Message: Response body structure completely different from expected

+```

+

+## Debugging Actions

+

+For each buggy test case, you can take several debugging actions:

+

+### 1. View Full Test Details

+Click on any failed test to see:

+- Complete request details (URL, headers, body)

+- Full response details (status, headers, body)

+- All assertion results with expected vs actual values

+

+### 2. Compare with Recorded Version

+View the side-by-side comparison between:

+- **Original Recording**: The request/response captured during recording

+- **Current Execution**: The actual request/response during test execution

+- **Differences Highlighted**: Visual indicators showing what changed
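The side-by-side comparison can be approximated with a shallow diff of the top-level keys in the recorded and current response bodies. `diffBodies` is a hypothetical sketch, not Keploy's comparison engine. Nested values are compared with `JSON.stringify`, so two objects with the same content but a different key order count as changed.

```javascript
// Illustrative only: shallow, top-level diff of two JSON response bodies.
function diffBodies(recorded, current) {
  const keys = new Set([...Object.keys(recorded), ...Object.keys(current)]);
  const changes = [];
  for (const key of keys) {
    if (!(key in current)) {
      changes.push({ key, type: "removed", recorded: recorded[key] });
    } else if (!(key in recorded)) {
      changes.push({ key, type: "added", current: current[key] });
    } else if (JSON.stringify(recorded[key]) !== JSON.stringify(current[key])) {
      // Order-sensitive for nested objects; good enough for a quick comparison.
      changes.push({ key, type: "changed", recorded: recorded[key], current: current[key] });
    }
  }
  return changes;
}
```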

+

+### 3. Manual Test Execution

+Test the endpoint manually to verify:

+```bash

+# Example manual cURL test

+curl -X POST \

+ 'https://api.example.com/owners' \

+ -H 'Content-Type: application/json' \

+ -H 'Authorization: Bearer your-token' \

+ -d '{

+ "name": "John Doe",

+ "email": "john@example.com"

+ }'

+```

+

+### 4. Update Test Expectations

+If the API behavior has legitimately changed:

+1. **Re-record the test**: Capture new expected behavior

+2. **Update assertions**: Modify expected values to match new API

+3. **Add new test cases**: Cover additional scenarios if needed

+

+## Getting Help

+

+If you're unable to resolve buggy test suites:

+

+1. **Check Documentation**: Review API documentation for recent changes

+2. **Contact Support**: Reach out to the development team for API-related issues

+3. **Community Forums**: Ask questions in Keploy community channels

+4. **Share Test Details**: Provide complete test execution logs when seeking help

+

+Remember, buggy test suites often indicate real issues with your API or environment. Use them as an early warning system to maintain API quality and reliability.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-bulk-assertions.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-bulk-assertions.md

new file mode 100644

index 000000000..11300bd9f

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-bulk-assertions.md

@@ -0,0 +1,85 @@

+---

+id: api-testing-bulk-assertions

+title: Bulk Assertions and Schema Validation

+description: Guide to performing bulk assertions across multiple endpoints, methods, and status codes

+sidebar_label: Bulk Assertions

+tags:

+ - api-testing

+ - bulk-assertions

+ - schema-assertions

+ - test-validation

+ - test-management

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+This guide explains how to perform bulk assertions in Keploy, allowing you to validate multiple test cases across different endpoints, HTTP methods, and status codes simultaneously.

+

+## What are Schema Bulk Assertions?

+

+Schema assertions validate the structure and format of API responses. When applied in bulk, they let you pick specific fields from the response body and assert them across every matching test case at once.

+

+## How to Perform Bulk Assertions

+

+### 1. Filter Your Test Suites

+

+First, use the filtering options to narrow down the tests you want to assert:

+- **Filter by Endpoint**: Select specific API endpoints

+- **Filter by HTTP Method**: Choose methods like GET, POST, PUT, DELETE, etc.

+- **Filter by Status Code**: Filter by response status codes (2xx, 4xx, 5xx, etc.)

+- **Filter by Test Suite**: Select specific test suite collections

+

+### 2. Choose Assertion Fields

+

+Select the configure schema assertions option.

+From the response body, you can then choose which fields to assert:

+- **Response Body Fields**: Select individual fields from the JSON response

+- **Data Types**: Ensure fields have correct types (string, number, boolean, etc.)

+- **Required Fields**: Verify that mandatory fields are present

+

+### 3. Apply Schema Assertions

+

+Schema assertions validate the structure of your API responses:

+- **Field Presence**: Ensure required fields exist in the response

+- **Data Type Validation**: Verify that fields have the correct data type

+- **Format Validation**: Check formats like email, URL, date, etc.

+

+### 4. Save and Execute

+

+- Review the selected assertions

+- Apply the assertions to all selected test cases

+- Execute the tests to validate against the defined schema

+

+## Example Use Cases

+

+### Example: Asserting User Endpoints

+```

+Filter by:

+- Endpoint: /api/v1/users/*

+- HTTP Method: GET

+- Status Code: 200

+

+Bulk Assert:

+- Response contains: id, name, email

+- Data types: id (number), name (string), email (string)

+- Email format validation

+```
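The filter-then-assert workflow in this example can be sketched as follows. The test-case shape (`endpoint`, `method`, `status`, `body`), the trailing-`*` endpoint matching, and the loose email regex are all assumptions made for illustration. Keploy applies bulk assertions through its UI, not through this code.

```javascript
// Hypothetical sketch; the test-case shape { endpoint, method, status, body }
// is an assumption, not Keploy's data model.
function matchesEndpoint(pattern, endpoint) {
  // A trailing "*" matches any suffix, e.g. /api/v1/users/* matches /api/v1/users/42.
  return pattern.endsWith("*")
    ? endpoint.startsWith(pattern.slice(0, -1))
    : endpoint === pattern;
}

// Deliberately loose email check: something@something.tld with no spaces.
const EMAIL_RE = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

function checkField(value, type) {
  if (type === "email") return typeof value === "string" && EMAIL_RE.test(value);
  return typeof value === type;
}

function bulkAssert(testCases, filter, schema) {
  return testCases
    .filter(
      (tc) =>
        matchesEndpoint(filter.endpoint, tc.endpoint) &&
        tc.method === filter.method &&
        tc.status === filter.status
    )
    .map((tc) => {
      // Collect one error message per field that fails its type/format check.
      const errors = Object.entries(schema)
        .filter(([field, type]) => !checkField(tc.body[field], type))
        .map(([field, type]) => `${field}: expected ${type}, got ${JSON.stringify(tc.body[field])}`);
      return { endpoint: tc.endpoint, passed: errors.length === 0, errors };
    });
}
```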

+

+

+## Benefits of Bulk Assertions

+

+- **Time Efficiency**: Apply assertions to multiple tests simultaneously

+- **Consistency**: Ensure uniform validation across similar endpoints

+- **Maintainability**: Update assertions for multiple tests at once

+

+## Tips for Effective Schema Assertions

+

+- **Keep schemas DRY**: Reuse common schema patterns across different endpoints

+- **Test edge cases**: Include assertions for empty arrays, null values, and optional fields

+- **Validate error responses**: Ensure error messages follow a consistent schema

+- **Use realistic data**: Test with production-like data for accurate validation

+- **Regular updates**: Update schemas when API contracts change

+

+By leveraging bulk assertions and schema validation, you can ensure comprehensive API testing while minimizing manual effort and maintaining high test coverage across your application.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-chrome-extension.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-chrome-extension.md

index 587c8e4ec..64c8f8dcb 100644

--- a/versioned_docs/version-4.0.0/running-keploy/api-testing-chrome-extension.md

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-chrome-extension.md

@@ -15,7 +15,7 @@ Install the extension, hit **Record API Calls**, exercise your web app, then pre

## What the API Test Recorder does

-**Browser-side traffic capture** — Sniffs XHR / fetch calls as you click around.

+**Browser-side traffic capture** — Captures XHR/fetch traffic, including headers, cookies, and authentication context, so the recorded flows are ready for authenticated replay.

**Instant replay formats** — Export the captured calls as cURL, JSON, or native Keploy YAML.

@@ -43,7 +43,7 @@ Install the extension, hit **Record API Calls**, exercise your web app, then pre

4. Watch the live counters:

- **Captured calls** – total XHR/fetch requests intercepted.

- **Complete req/resp** – pairs where both request _and_ response were fully captured.

-5. If the count of req/res is lower than expected, hit **Debug** to repair missing pairs.

+5. Keploy automatically synchronizes asynchronous background requests. Use the **Debug** feature to repair any pending request/response pairs before generating your suite.

Example :

```

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-custom-assertions.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-custom-assertions.md

new file mode 100644

index 000000000..7a934d0ec

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-custom-assertions.md

@@ -0,0 +1,129 @@

+---

+id: api-testing-custom-assertions

+title: Custom Assertions

+sidebar_label: Custom Assertions

+description: Define powerful validation rules for your API tests in Keploy

+tags:

+ - API testing

+ - assertions

+ - validation

+ - schema validation

+ - automation

+keywords:

+ - status code validation

+ - JSON assertions

+ - header validation

+ - schema validation

+ - custom functions

+

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+Custom assertions allow you to precisely validate API responses beyond basic status checks.

+

+Keploy supports the following assertion categories:

+

+| Scenario | Recommended Assertion |

+|----------|----------------------|

+| Exact status code validation | Status Code |

+| Accept any success response | Status Code Class |

+| Partial JSON validation | JSON Contains |

+| Strict field validation | JSON Equal |

+| Response structure consistency | Schema |

+| Dynamic value comparison | Custom Function |

+| Validate only important fields | Selected Fields |

+| Security header enforcement | Header Exists / Header Equal |

+

+

+## Validating Selected Fields

+

+### Selected Fields

+Allows you to validate only specific parts of a response instead of the entire body.

+

+Useful when:

+- Response includes dynamic metadata

+- You want to ignore volatile fields (timestamps, request IDs, etc.)

+- Only certain business-critical fields matter
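As a rough illustration of selected-field validation, this sketch compares only the listed dot-separated paths of two response bodies and ignores everything else. `getPath` and `compareSelectedFields` are hypothetical names. Values are compared with `JSON.stringify`, which is adequate for plain JSON data.

```javascript
// Sketch only: read a value at a dot path such as "meta.requestId" or "items.0.price".
function getPath(obj, path) {
  return path.split(".").reduce((acc, key) => (acc == null ? undefined : acc[key]), obj);
}

// Compare just the chosen paths, ignoring volatile fields like timestamps.
function compareSelectedFields(expected, actual, paths) {
  const mismatches = paths.filter(
    (p) => JSON.stringify(getPath(expected, p)) !== JSON.stringify(getPath(actual, p))
  );
  return { passed: mismatches.length === 0, mismatches };
}
```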

+

+## Custom Functions (Advanced Validation)

+

+For complex validation logic, Keploy supports custom functions inside assertions.

+

+Custom functions allow you to:

+- Write JavaScript expressions

+- Perform conditional validation

+- Compare multiple fields

+- Validate dynamic calculations

+- Enforce business rules

+

+### Example Use Cases

+- Validate `totalAmount = sum(lineItems)`

+- Ensure timestamp is within last 5 minutes

+- Compare response field with environment variable

+- Validate custom encryption or hashing logic
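As an example of the "timestamp within last 5 minutes" use case, the function below uses the same `{ passed, message }` return shape as the order-validation functions in this guide. The extra `now` parameter is an addition that makes the check deterministic to test. The assumption that `response.body` is a JSON string comes from those examples, not from a documented Keploy signature.

```javascript
// Hypothetical custom function; `now` (ms since epoch) is added for testability.
function validateTimestampFreshness(response, now = Date.now()) {
  const data = JSON.parse(response.body);
  const ts = Date.parse(data.timestamp);
  const FIVE_MINUTES_MS = 5 * 60 * 1000;

  if (Number.isNaN(ts)) {
    return { passed: false, message: `Unparseable timestamp: ${data.timestamp}` };
  }

  const age = now - ts; // negative means the timestamp is in the future
  return {
    passed: age >= 0 && age <= FIVE_MINUTES_MS,
    message: `Timestamp is ${Math.round(age / 1000)}s old (limit 300s)`,
  };
}
```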

+

+### Example: E-commerce Order Validation

+

+Consider an e-commerce API that returns order details. You want to validate that the total amount equals the sum of all line items plus tax.

+

+**API Response:**

+```json

+{

+ "orderId": "ORD-12345",

+ "items": [

+ { "name": "Laptop", "price": 1200.00, "quantity": 1 },

+ { "name": "Mouse", "price": 25.50, "quantity": 2 }

+ ],

+ "subtotal": 1251.00,

+ "tax": 125.10,

+ "total": 1376.10,

+ "timestamp": "2026-02-11T10:30:00Z"

+}

+```

+

+**Custom Function for Total Validation:**

+```javascript

+// Validate that total = subtotal + tax

+function validateOrderTotal(response) {

+ const data = JSON.parse(response.body);

+ const expectedTotal = data.subtotal + data.tax;

+ const actualTotal = data.total;

+

+ return {

+ passed: Math.abs(expectedTotal - actualTotal) < 0.01, // Handle floating point precision

+ message: `Expected total ${expectedTotal}, but got ${actualTotal}`

+ };

+}

+

+// Validate that subtotal matches sum of line items

+function validateSubtotal(response) {

+ const data = JSON.parse(response.body);

+ const calculatedSubtotal = data.items.reduce((sum, item) => {

+ return sum + (item.price * item.quantity);

+ }, 0);

+

+ return {

+ passed: Math.abs(calculatedSubtotal - data.subtotal) < 0.01,

+ message: `Calculated subtotal ${calculatedSubtotal}, but API returned ${data.subtotal}`

+ };

+}

+```

+

+**Usage in Keploy:**

+1. Navigate to your test step editor

+2. Add a new assertion

+3. Select "Custom Function" as assertion type

+4. Paste your custom function code

+5. The function will execute during test runs and validate your business logic

+

+## Best Practices

+

+- **Prefer Schema validation** for dynamic APIs

+- **Use JSON Equal** only when strict comparison is necessary

+- **Avoid over-validating** volatile fields

+- **Use Custom Functions** for business logic validation

+- **Combine multiple assertions** for stronger test reliability

+- **Keep assertions focused and readable**

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-edit-assertions.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-edit-assertions.md

new file mode 100644

index 000000000..429de14d2

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-edit-assertions.md

@@ -0,0 +1,73 @@

+---

+id: api-testing-edit-assertions

+title: Editing Test Suites and Custom Assertions

+description: Guide to editing test suites with custom variables and assertion functions

+sidebar_label: Edit Assertions

+tags:

+ - api-testing

+ - edit-assertions

+ - custom-variables

+ - custom-functions

+ - test-management

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

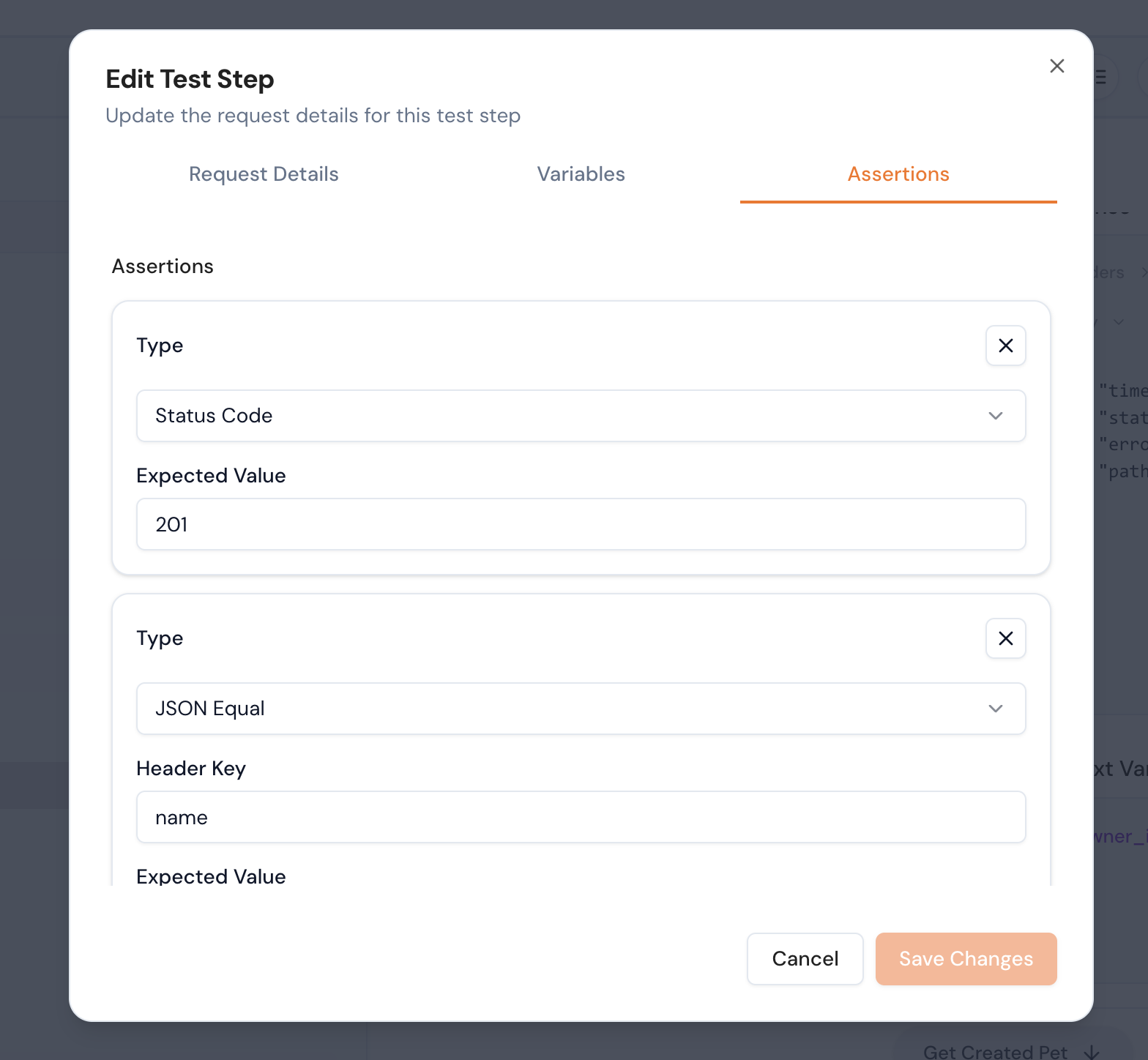

+This guide explains how to edit test suites in Keploy, including adding custom variables to URLs and request bodies, and creating custom assertion functions for advanced test validation.

+

+## Overview

+

+Editing a test suite allows you to:

+

+- Modify API request details (URL, headers, body, method)

+- Create and manage **global** and **local** variables

+- Update or replace existing assertions

+- Add **custom assertion functions**

+- Write reusable validation logic for requests and responses

+

+This gives you fine-grained control over how your APIs are validated.

+

+

+## Accessing Test Suite Edit Mode

+

+### Step 1: Navigate to Test Suites

+

+1. Go to **Test Suites** and open the suite you want to edit

+2. Select **"Edit Test Step"**

+3. The Test Step Editor will open

+

+

+

+## Editing the Request

+

+Inside the Test Step Editor, you can modify:

+

+```yaml

+Method: PUT

+URL: https://api.example.com/users/{{user_id}}

+

+Headers:

+ Authorization: Bearer {{auth_token}}

+

+Body:

+ email: "{{email}}"

+```

+

+You can:

+

+- Change HTTP method

+- Update endpoint path

+- Modify headers

+- Edit JSON payload

+- Inject variables into any field
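The templating logic Keploy uses to resolve `{{variable}}` placeholders isn't described here. As a rough illustration of the idea, the sketch below substitutes the placeholders from the example above (URL, header, and body) using a plain object of variables. `resolveVariables` and the sample values are hypothetical, and unknown placeholders are deliberately left untouched so they are easy to spot.

```javascript
// Hypothetical sketch: resolve {{name}} placeholders against a variable map.
// This only illustrates the idea; it is not Keploy's implementation.
function resolveVariables(template, variables) {
  // Match {{ name }} with optional whitespace around the name.
  return template.replace(/\{\{\s*([\w.-]+)\s*\}\}/g, (match, name) => {
    // Leave unknown placeholders as-is.
    return Object.prototype.hasOwnProperty.call(variables, name)
      ? String(variables[name])
      : match;
  });
}

// Sample values (hypothetical).
const variables = { user_id: 42, auth_token: "abc123", email: "user@example.com" };

const request = {
  url: resolveVariables("https://api.example.com/users/{{user_id}}", variables),
  headers: { Authorization: resolveVariables("Bearer {{auth_token}}", variables) },
  body: { email: resolveVariables("{{email}}", variables) },
};

console.log(request.url); // https://api.example.com/users/42
```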

+

+## Editing Existing Assertions

+When adding or editing an assertion, you can choose from these assertion categories:

+- Status Code Assertion

+- Header Assertion

+- Body / JSON Path Assertion

+- Schema Assertion
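To make the first three categories concrete, here is a minimal sketch of what each check evaluates. The response shape (`status`, `headers`, `body`), the dotted-path syntax, and all helper names are assumptions for illustration, not Keploy's assertion engine.

```javascript
// Hypothetical response shape used for illustration.
const response = {
  status: 200,
  headers: { "content-type": "application/json; charset=utf-8" },
  body: { user: { id: 123, email: "user@example.com", roles: ["admin"] } },
};

// Status code assertion: exact match on the HTTP status.
function assertStatus(res, expected) {
  return res.status === expected;
}

// Header assertion: HTTP header names are case-insensitive.
function assertHeader(res, name, pattern) {
  const key = Object.keys(res.headers).find(
    (k) => k.toLowerCase() === name.toLowerCase()
  );
  return key !== undefined && pattern.test(res.headers[key]);
}

// Body / JSON path assertion: walk a simple dotted path such as "user.roles.0".
function getPath(obj, path) {
  return path
    .split(".")
    .reduce((value, key) => (value == null ? undefined : value[key]), obj);
}

function assertJsonPath(res, path, expected) {
  return getPath(res.body, path) === expected;
}

console.log(assertStatus(response, 200)); // true
console.log(assertHeader(response, "Content-Type", /^application\/json/)); // true
console.log(assertJsonPath(response, "user.roles.0", "admin")); // true
```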

+

+

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-edit-suites.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-edit-suites.md

new file mode 100644

index 000000000..bafc361f8

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-edit-suites.md

@@ -0,0 +1,85 @@

+---

+id: api-testing-edit-suites

+title: Edit Test Suites

+sidebar_label: Edit Test Suites

+description: Editing test suites for API tests

+tags:

+ - API testing

+ - webhooks

+ - integration

+ - custom validation

+ - policy enforcement

+keywords:

+ - webhook

+ - API testing

+ - PreExecute

+ - PostExecute

+ - external validation

+ - custom logic

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+This guide will help you edit test suites in Keploy to customize your API testing workflow.

+

+## Editing Test Suite Details

+

+To modify test suite settings like name and description:

+

+1. Navigate to your test suite in the Keploy dashboard

+2. Click the **three dots (⋯)** menu on the test suite you want to modify

+3. Select **"Edit Suite"** from the dropdown menu

+4. Update the suite name, description, and other details as needed

+5. Save your changes

+

+## Editing Individual Test Steps

+

+To modify specific test requests and responses:

+

+1. Go to the individual test step within your test suite

+2. Click on **"Edit Step"** to open the test editor

+3. You can now modify:

+ - Request URL and query parameters
+ - Request headers
+ - HTTP method
+ - Request body (payload)

+

+### Adding and Editing Assertions

+

+Assertions help validate your API responses. To add or edit assertions:

+

+1. In the test step editor, navigate to the assertions section

+2. Add new assertions or modify existing ones

+3. You can validate:

+ - Response status codes

+ - Response body content

+ - Response headers

+

+### Custom Functions in Assertions

+

+Keploy supports custom functions for advanced assertion logic. You can:

+

+- Create custom validation functions

+- Use JavaScript expressions for complex validations

+- Reference external validation logic

+

+For detailed information on custom functions, see the [custom functions reference](#).
+

+### Creating and Using Variables

+

+Variables allow you to create reusable values across your test suite:

+

+1. **URL Base Path Variables**: Define base URLs that can be reused across multiple tests

+2. **Environment Variables**: Set different values for different testing environments

+3. **Dynamic Variables**: Create variables that change during test execution
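Dynamic variables are often used to chain steps: a value captured from one response, such as an auth token, is reused in a later request. The sketch below models that flow with a plain object as the variable store. `extractVariable`, the dotted-path syntax, and the response fields are hypothetical and do not reflect Keploy's extraction syntax.

```javascript
// Hypothetical in-memory variable store shared across test steps.
const store = {};

// Read a value from a JSON response body by dotted path and save it under `name`.
function extractVariable(store, name, body, path) {
  const value = path
    .split(".")
    .reduce((current, key) => (current == null ? undefined : current[key]), body);
  if (value === undefined) {
    throw new Error(`Path "${path}" not found in response body`);
  }
  store[name] = value;
  return value;
}

// Step 1: a login response returns a token (sample data).
const loginBody = { data: { token: "eyJhbGciOi...", expiresIn: 3600 } };
extractVariable(store, "auth_token", loginBody, "data.token");

// Step 2: the next request reuses the extracted value.
const nextRequestHeaders = { Authorization: `Bearer ${store.auth_token}` };
console.log(nextRequestHeaders.Authorization); // Bearer eyJhbGciOi...
```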

+

+## How to Create Variables

+

+For detailed usage of variables, refer here:

+

+## Best Practices

+

+- **Use descriptive names**: Give your test suites and individual tests clear, descriptive names that explain their purpose

+- **Group related tests**: Organize tests logically within suites (e.g., user authentication, payment processing)

+- **Keep suites focused**: Each test suite should test a specific feature or workflow

+- **Test multiple aspects**: Include assertions for status codes, response structure, and business logic

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-filter-suites.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-filter-suites.md

new file mode 100644

index 000000000..3cea7f7df

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-filter-suites.md

@@ -0,0 +1,78 @@

+---

+id: api-testing-filter-suites

+title: Using Filtering in Test Suites

+description: Guide to add filters for test suites

+sidebar_label: Filter Test Suites

+tags:

+ - api-testing

+ - filter-suites

+ - test-suite

+ - test-management

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+This guide explains how to filter test suites in Keploy to quickly find and manage your API tests. You can apply various filters to narrow down your test suites based on different criteria.

+

+## Available Filter Options

+

+Keploy provides multiple filtering options to help you efficiently locate and organize your test suites:

+

+### 1. Filter by Test Suite

+- Filter test suites by their name or identifier

+- Quickly locate specific test suites from a large collection

+- Use search functionality to find test suites by partial name matching

+

+### 2. Filter by Status Code

+- Filter tests based on HTTP response status codes

+- Common status code filters:

+ - **2xx Success**: 200 OK, 201 Created, 204 No Content, etc.

+ - **3xx Redirection**: 301 Moved Permanently, 302 Found, 304 Not Modified, etc.

+ - **4xx Client Errors**: 400 Bad Request, 401 Unauthorized, 404 Not Found, etc.

+ - **5xx Server Errors**: 500 Internal Server Error, 502 Bad Gateway, 503 Service Unavailable, etc.

+- Useful for identifying failing tests or specific response patterns
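Status code filters group responses by the first digit of the code. A minimal sketch of that grouping, assuming a hypothetical result shape with a numeric `status` field (the helper names are illustrative too):

```javascript
// Map an HTTP status code to its class label, e.g. 404 -> "4xx".
function statusClass(code) {
  if (!Number.isInteger(code) || code < 100 || code > 599) {
    return "unknown";
  }
  return `${Math.floor(code / 100)}xx`;
}

// Keep only results whose status falls into one of the selected classes.
function filterByStatusClass(results, classes) {
  return results.filter((r) => classes.includes(statusClass(r.status)));
}

// Sample results (hypothetical data).
const results = [
  { name: "create owner", status: 201 },
  { name: "get missing visit", status: 404 },
  { name: "list products", status: 200 },
  { name: "upstream timeout", status: 504 },
];

const errors = filterByStatusClass(results, ["4xx", "5xx"]);
console.log(errors.map((r) => r.name)); // [ 'get missing visit', 'upstream timeout' ]
```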

+

+### 3. Filter by HTTP Method

+- Filter tests based on the HTTP request method:

+ - **GET**: Retrieve data from the server

+ - **POST**: Submit data to create new resources

+ - **PUT**: Replace or update existing resources

+ - **PATCH**: Partially update resources

+ - **DELETE**: Remove resources

+ - **OPTIONS**: Retrieve the communication options (such as allowed methods) for a resource
+ - **HEAD**: Retrieve a resource's response headers without the body

+- Helps organize tests by the type of operation being tested

+

+### 4. Filter by Endpoint

+- Filter tests based on the API endpoint or URL path

+- Search by:

+ - Full endpoint URL

+ - Partial path matching

+ - Endpoint patterns

+- Useful for testing specific API routes or services
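The three search modes can be modeled as string matching on the request URL. In the sketch below, a plain query matches as a substring (covering full URLs and partial paths), and a query containing `*` is treated as a path pattern where `*` matches exactly one path segment. Those wildcard semantics and the `matchesEndpoint` helper are assumptions, not Keploy's documented behavior.

```javascript
// Escape regex metacharacters so user input is matched literally.
function escapeRegex(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Return true if `url` matches `query`:
// - a query containing "*" is a path pattern; "*" matches one path segment
// - otherwise the query matches as a substring (full URL or partial path)
function matchesEndpoint(url, query) {
  const path = new URL(url).pathname;
  if (query.includes("*")) {
    const pattern = query.split("*").map(escapeRegex).join("[^/]+");
    return new RegExp(`^${pattern}$`).test(path);
  }
  return url.includes(query);
}

const url = "https://api.example.com/api/v1/users/42/orders";

console.log(matchesEndpoint(url, url)); // true (full URL)
console.log(matchesEndpoint(url, "/users/42")); // true (partial path)
console.log(matchesEndpoint(url, "/api/v1/users/*/orders")); // true (pattern)
console.log(matchesEndpoint(url, "/api/v2/*")); // false
```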

+

+## How to Apply Filters

+

+1. Open the filter panel
+2. Select your filter criteria
+3. Click **Apply** (depending on the view, filters may also be applied automatically)

+

+## Example Use Cases

+

+### Finding Failed Tests

+```

+Filter by Status Code: 4xx, 5xx

+```

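+This shows all tests whose responses returned client or server errors. Note that an error status does not always mean a failed test: a test that expects a `404` passes when it receives one.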

+

+## Benefits of Filtering

+

+- **Faster Navigation**: Quickly find specific tests without scrolling through long lists

+- **Better Organization**: Group and view related tests together

+- **Debugging Efficiency**: Isolate failing tests or problematic endpoints

+- **Test Analysis**: Understand patterns in your API behavior

+- **Maintenance**: Easier to update or remove tests for specific endpoints or methods

+

+By using these filtering options, you can efficiently manage and analyze your test suites.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-fixing-ai.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-fixing-ai.md

new file mode 100644

index 000000000..de618d8b7

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-fixing-ai.md

@@ -0,0 +1,132 @@

+---

+id: api-testing-fix-with-ai

+title: Fix with AI

+sidebar_label: Fix with AI

+description: Automatically normalize and repair failing test suites using AI

+tags:

+ - API testing

+ - AI automation

+ - test normalization

+ - test maintenance

+ - debugging

+keywords:

+ - AI test fixing

+ - normalize test suite

+ - failing tests

+ - automated test repair

+ - intelligent assertions

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+## Fix with AI

+

+**Fix with AI** helps you automatically repair and normalize failing test suites using intelligent analysis.

+

+Instead of manually editing requests, assertions, or schema mismatches, you can provide instructions to the AI, and it will adjust the suite accordingly.

+

+This significantly reduces test maintenance effort when APIs evolve.

+

+---

+

+## When to Use Fix with AI

+

+Use this feature when:

+

+- A test suite fails after backend changes

+- Response fields were renamed or restructured

+- Dynamic fields are causing frequent assertion failures

+- Schema mismatches occur

+- You want to normalize outdated validations

+- You want to clean up over-strict assertions

+

+---

+

+## How It Works

+

+1. Navigate to a **Failing Test Suite**

+2. Click **Fix with AI**

+3. Provide instructions describing what needs to be corrected

+

+Example instructions:

+- "Normalize dynamic fields like timestamps and request IDs"

+- "Update schema based on latest API response"

+- "Ignore volatile metadata fields"

+- "Fix assertion mismatches based on new response structure"

+- "Relax strict JSON equality checks"

+

+4. Submit your instructions

+5. AI analyzes the failure and updates the test suite accordingly

+

+---

+

+## What the AI Can Modify

+

+The AI can intelligently update:

+

+- JSON assertions

+- Schema validations

+- Header validations

+- Status code expectations

+- Dynamic field handling

+- Selected field configurations

+- Request payload mismatches

+

+The goal is for the suite to reflect the current API behavior while preserving the intended validation logic.

+

+---

+

+## Example Scenario

+

+### Problem

+Your API now returns:

+

+```json

+{

+ "id": 123,

+ "email": "user@example.com",

+ "createdAt": "2026-02-11T10:30:00Z"

+}

+```

+

+Previously, your test expected strict equality including `createdAt`.

+

+The test fails due to timestamp variance.

+

+### Instruction to AI

+"Normalize dynamic fields like `createdAt` and ignore timestamp differences."

+

+### Result

+AI updates the assertion to:

+

+- Use Schema validation instead of strict equality

+- Exclude or normalize the `createdAt` field

+- Keep critical business validations intact

+

+The test suite now passes without weakening important checks.
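The difference between the original and the normalized assertion can be sketched as two comparisons on the example response: strict deep equality, which fails on any `createdAt` change, and a comparison that first drops a list of volatile fields. The helpers and the `ignoreFields` list are hypothetical; the changes the AI actually makes depend on your suite and instructions.

```javascript
// Deep equality for plain JSON values (objects, arrays, primitives).
function deepEqual(a, b) {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) {
    return false;
  }
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every((key) => keysB.includes(key) && deepEqual(a[key], b[key]));
}

// Return a shallow copy without the given top-level fields.
function withoutFields(obj, fields) {
  const copy = { ...obj };
  for (const field of fields) delete copy[field];
  return copy;
}

const expected = { id: 123, email: "user@example.com", createdAt: "2026-02-10T08:00:00Z" };
const actual = { id: 123, email: "user@example.com", createdAt: "2026-02-11T10:30:00Z" };

// Strict comparison fails only because of the timestamp.
console.log(deepEqual(expected, actual)); // false

// Normalized comparison ignores createdAt but still checks id and email.
const ignoreFields = ["createdAt"];
console.log(deepEqual(withoutFields(expected, ignoreFields), withoutFields(actual, ignoreFields))); // true
```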

+

+---

+

+## Normalization Behavior

+

+When you ask the AI to "normalize" a suite, it may:

+

+- Replace strict JSON Equal with Schema validation

+- Convert full-body comparison into Selected Fields

+- Remove volatile fields from assertions

+- Adjust regex for dynamic headers

+- Update expected status codes if API behavior changed intentionally
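Adjusting regex for a dynamic header usually means asserting on the shape of its value rather than the exact recorded value. The sketch below assumes a hypothetical `x-request-id` header carrying a UUID and a headers object with lowercase names; both are illustrative, not a description of Keploy's header matching.

```javascript
// Value captured when the test was recorded; it changes on every call.
const recordedValue = "3f2b8c1e-9a4d-4e6f-8b7a-1c2d3e4f5a6b";

// Pattern match: accepts any UUID (versions 1-5), case-insensitive.
const uuidPattern = /^[0-9a-f]{8}-[0-9a-f]{4}-[1-5][0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}$/i;

// Assumes header names are stored in lowercase.
function checkHeader(headers, name, expected) {
  const value = headers[name.toLowerCase()];
  if (value === undefined) return false;
  return expected instanceof RegExp ? expected.test(value) : value === expected;
}

const replayHeaders = { "x-request-id": "a7e4d2c9-5b1f-4c3a-9e8d-7f6a5b4c3d2e" };

console.log(checkHeader(replayHeaders, "X-Request-Id", recordedValue)); // false
console.log(checkHeader(replayHeaders, "X-Request-Id", uuidPattern)); // true
```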

+

+Normalization focuses on making tests stable without reducing meaningful validation.

+

+---

+

+## Best Practices

+

+- **Be specific in your instructions**

+- **Clearly mention which fields should be ignored or updated**

+- **Review AI-generated changes before finalizing**

+- **Use normalization for dynamic fields, not business logic errors**

+- **Keep critical validations strict**

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-functions.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-functions.md

new file mode 100644

index 000000000..5c0d4066d

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-functions.md

@@ -0,0 +1,51 @@

+---

+id: api-testing-functions

+title: Functions & Schema Assertions

+sidebar_label: Functions & Assertions

+description: Enforce response integrity with schema validation and extend testing logic with Global Custom Functions.

+---

+

+## Functions & Assertions: Logic & Integrity

+

+Assertions define the **"Pass/Fail"** criteria for your tests. While standard checks confirm status codes, comprehensive auditing requires validating data integrity through **Schema** and **Logical** validations.

+

+- **Schema Assertions**: These verify that an API consistently returns the correct data types (e.g., string, integer), preventing breaking changes from reaching production.

+- **Custom Functions**: Use these for complex logic that simple matching cannot handle, such as verifying if a timestamp falls within a specific range.

+- **Global Functions**: Repeatable logic can be saved to the Global library for team-wide reuse.
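As an example of the timestamp check mentioned above, the sketch below passes when `createdAt` falls within the last five minutes. The `customFunction(request, response)` signature follows the editor template described on this page; the JSON-string `response.body`, the `createdAt` field, and the `{ passed, message }` return value are assumptions.

```javascript
// Return true if an ISO-8601 timestamp falls inside [startMs, endMs].
function isWithinRange(isoTimestamp, startMs, endMs) {
  const t = Date.parse(isoTimestamp);
  return !Number.isNaN(t) && t >= startMs && t <= endMs;
}

// Hypothetical custom function: pass if createdAt is within the last 5 minutes.
// Assumes response.body is a JSON string containing a createdAt field.
function customFunction(request, response) {
  const body = JSON.parse(response.body);
  const now = Date.now();
  const fiveMinutesMs = 5 * 60 * 1000;
  const passed = isWithinRange(body.createdAt, now - fiveMinutesMs, now);
  return {
    passed,
    message: passed
      ? "createdAt is recent"
      : `createdAt ${body.createdAt} is outside the last 5 minutes`,
  };
}

// Usage with a mock response.
const mockResponse = { body: JSON.stringify({ createdAt: new Date().toISOString() }) };
console.log(customFunction({}, mockResponse).passed); // true
```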

+

+

+

+## Hands-On: Schema Assertions

+

+### 1. Selecting Fields to Assert

+Choose specifically which parts of a JSON response Keploy should validate during a replay.

+


+**Example Context:** In this window, we are targeting a `GET` request on the `/visits/-1` endpoint that expects a `400` status code. Under **Response Fields**, specific keys like `className` and `exMessage` are selected. Toggling these ensures Keploy validates that these fields are returned as the correct data type (strings) during every replay.

+

+### 2. Multi-Type Assertions

+Stack different assertion types, such as verifying a status code while simultaneously checking if a specific field contains a certain value.

+


+**Example Context:** This setup enforces two rules simultaneously:

+1. **Status Code**: Validates that the server returns a `201` (Created).

+2. **JSON Contains**: Checks that the `city` field matches the expected value `"sometown"`.

+

+## Custom & Global Functions

+

+### 1. Writing Inline Logic

+Access the `request` and `response` objects directly to create complex rules within the assertion panel.

+


+**Example Context:** The editor shows a function signature: `function customFunction(request, response)`. This gives you direct access to the full objects, allowing you to write JavaScript logic to determine if the test should pass based on dynamic conditions.
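Because the function receives both objects, it can cross-check them, for instance confirming that the returned resource matches the ID in the request URL. This is a sketch under assumed object shapes (`request.url` as a full URL, `response.status`, and `response.body` as a JSON string) and an assumed `{ passed, message }` return value; the editor's template is the authority on the real contract.

```javascript
// Hypothetical inline custom function comparing request and response.
// Assumes request.url is a full URL and response.body is a JSON string.
function customFunction(request, response) {
  // Take the last path segment of the request URL, e.g. ".../owners/7" -> 7.
  const segments = new URL(request.url).pathname.split("/").filter(Boolean);
  const requestedId = Number(segments[segments.length - 1]);

  if (response.status !== 200) {
    return { passed: false, message: `Expected status 200, got ${response.status}` };
  }

  const body = JSON.parse(response.body);
  const passed = body.id === requestedId;
  return {
    passed,
    message: passed
      ? "ID matches request"
      : `Requested ID ${requestedId}, response returned ${body.id}`,
  };
}

// Usage with mock objects.
const result = customFunction(
  { url: "https://api.example.com/owners/7" },
  { status: 200, body: JSON.stringify({ id: 7, firstName: "George" }) }
);
console.log(result.passed); // true
```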

+

+### 2. Global Function Library

+Save repeatable validation rules to the Global Custom Functions library so they can be reused across your entire development team.

+


+

+**Example Context:** The library shows reusable logic stored globally. For example, the `customFunction` at the bottom demonstrates an `assert.greater(response.status, 399)` utility, creating a standardized rule to verify error-level status codes across multiple suites.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-generation-history.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-generation-history.md

new file mode 100644

index 000000000..1680855c2

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-generation-history.md

@@ -0,0 +1,172 @@

+---

+id: api-testing-generation-history

+title: Test Generation History

+description: Guide to viewing and managing test generation history with job tracking and status monitoring

+sidebar_label: Generation History

+tags:

+ - api-testing

+ - generation-history

+ - test-generation

+ - job-tracking

+ - test-management

+---

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+This guide explains how to use the generation history to track and manage your test generation jobs.

+

+## Generation History Features

+

+### Job Information Display

+

+For each generation job, you can view:

+

+1. **Job ID**: Unique identifier for each test generation run

+2. **Generation Statistics**:

+ - **Accepted**: Number of test suites that passed validation

+ - **Recovered**: Number of test suites that were recovered from errors

+ - **Rejected**: Number of test suites that failed validation

+ - **Buggy**: Number of test suites with identified issues

+3. **Input Details**: The inputs and configurations used for that particular generation

+4. **Timestamp**: When the generation job was executed

+5. **Status**: Overall status of the generation job (Completed, In Progress, Failed)

+

+### Viewing Generation Details

+

+To view details of a specific generation:

+

+1. **Navigate to Generation History**

+ - Go to the generation history section in Keploy

+ - View the list of all generation jobs

+

+2. **Review Job Statistics**

+ - See the breakdown of accepted, recovered, rejected, and buggy test suites

+

+3. **Check Input Parameters**

+ - View the inputs used for that generation

+ - Review configuration settings and parameters

+ - Understand what led to specific results

+

+## Working with Rejected Test Suites

+

+### Adding Rejected Tests to Current Suite

+

+If you find rejected test suites that you want to include:

+

+1. **Locate Rejected Tests**

+ - Browse through the generation history

+ - Identify jobs with rejected test suites

+

+2. **Click the Plus Icon**

+ - Click on the **+** (plus) icon next to any rejected test suite

+ - This action will add the rejected test to your current list of test suites

+

+## Understanding Test Statuses

+

+### Accepted Tests ✅

+- Tests that passed all validation checks

+- Successfully generated and ready to use

+- No issues detected in the test suite

+

+### Rejected Tests ❌

+- Tests that failed validation checks

+- May have incorrect assertions or invalid configurations

+- Require manual review and fixes

+- Can be added back to the test suite list for modification

+

+### Buggy Tests 🐛

+- Tests with identified bugs or issues

+- May have inconsistent behavior or errors

+- Need investigation and debugging

+- Review test logic and inputs

+

+## Example Generation History View

+

+```

+┌────────────────────────────────────────────────────────────────┐
+│ Generation History                                             │
+├────────────────────────────────────────────────────────────────┤
+│                                                                │
+│ Job ID: gen-2026-02-13-001                                     │
+│ Timestamp: 2026-02-13 10:30:45                                 │
+│ Status: Completed                                              │
+│                                                                │
+│ Statistics:                                                    │
+│   ✅ Accepted: 45                                              │
+│   ❌ Rejected: 8                                               │
+│   🐛 Buggy: 3                                                  │
+│                                                                │
+│ Inputs Used:                                                   │
+│   - Endpoints: /api/v1/users, /api/v1/products                 │
+│   - Methods: GET, POST, PUT                                    │
+│   - Recording Duration: 5 minutes                              │
+│   - Agent: Local Agent v2.1.0                                  │
+│                                                                │
+│ Rejected Tests: [+] Add to Suite                               │
+│                                                                │
+├────────────────────────────────────────────────────────────────┤
+│                                                                │
+│ Job ID: gen-2026-02-12-005                                     │
+│ Timestamp: 2026-02-12 16:22:10                                 │
+│ Status: Completed                                              │
+│                                                                │
+│ Statistics:                                                    │
+│   ✅ Accepted: 32                                              │
+│   ❌ Rejected: 15                                              │
+│   🐛 Buggy: 7                                                  │
+│                                                                │
+│ Inputs Used:                                                   │
+│   - Endpoints: /api/v2/orders                                  │
+│   - Methods: GET, DELETE                                       │
+│   - Recording Duration: 3 minutes                              │
+│   - Agent: Browser Extension                                   │
+│                                                                │
+│ Rejected Tests: [+] Add to Suite                               │
+│                                                                │
+└────────────────────────────────────────────────────────────────┘

+```

+

+## Analyzing Generation Trends

+

+### Success Rate Analysis

+- Track the percentage of accepted vs rejected tests over time

+- Identify patterns in test generation quality

+- Optimize inputs based on historical data
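A simple way to track acceptance over time is to compute, for each job, the share of generated suites that were accepted. The sketch below does this for the two jobs in the example generation history view, assuming the rate is accepted / (accepted + rejected + buggy), since that view does not list recovered suites.

```javascript
// Job statistics copied from the example generation history view.
const jobs = [
  { id: "gen-2026-02-12-005", accepted: 32, rejected: 15, buggy: 7 },
  { id: "gen-2026-02-13-001", accepted: 45, rejected: 8, buggy: 3 },
];

// Acceptance rate = accepted / (accepted + rejected + buggy), as a percentage.
function acceptanceRate({ accepted, rejected, buggy }) {
  const total = accepted + rejected + buggy;
  return total === 0 ? 0 : (accepted / total) * 100;
}

for (const job of jobs) {
  console.log(`${job.id}: ${acceptanceRate(job).toFixed(1)}% accepted`);
}
// gen-2026-02-12-005: 59.3% accepted
// gen-2026-02-13-001: 80.4% accepted
```

For these sample numbers, the acceptance rate rises from about 59% to about 80% between the two jobs.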

+

+### Input Optimization

+- Review which inputs led to higher acceptance rates

+- Compare different configurations and their outcomes

+- Refine your test generation strategy

+

+### Error Patterns

+- Identify common reasons for test rejection

+- Address recurring bugs or issues

+- Improve test generation quality

+

+## Best Practices

+

+1. **Regular Review**

+ - Check generation history regularly

+ - Monitor acceptance rates and trends

+ - Address rejected tests promptly

+

+2. **Learn from Rejected Tests**

+ - Analyze why tests were rejected

+ - Improve input parameters for future generations

+ - Document common issues and solutions

+

+3. **Recover and Reuse**

+ - Use the plus icon to recover rejected tests

+ - Fix and validate rejected test suites

+ - Build comprehensive test coverage

+

+4. **Track Performance**

+ - Monitor the number of buggy tests

+ - Identify problematic endpoints or methods

+ - Improve API stability based on insights

+

+5. **Maintain Clean History**

+ - Archive old generation jobs periodically

+ - Focus on recent and relevant generations

+ - Keep track of successful generation patterns

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-local-agent.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-local-agent.md

new file mode 100644

index 000000000..348196975

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-local-agent.md

@@ -0,0 +1,45 @@

+---

+id: api-testing-local-agent

+title: Using Keploy Local Agent

+description: Guide to recording API calls and generating test suites using the local agent

+sidebar_label: Local Agent

+tags:

+ - api-testing

+ - local-agent

+ - test-suite

+ - test-management

+---

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

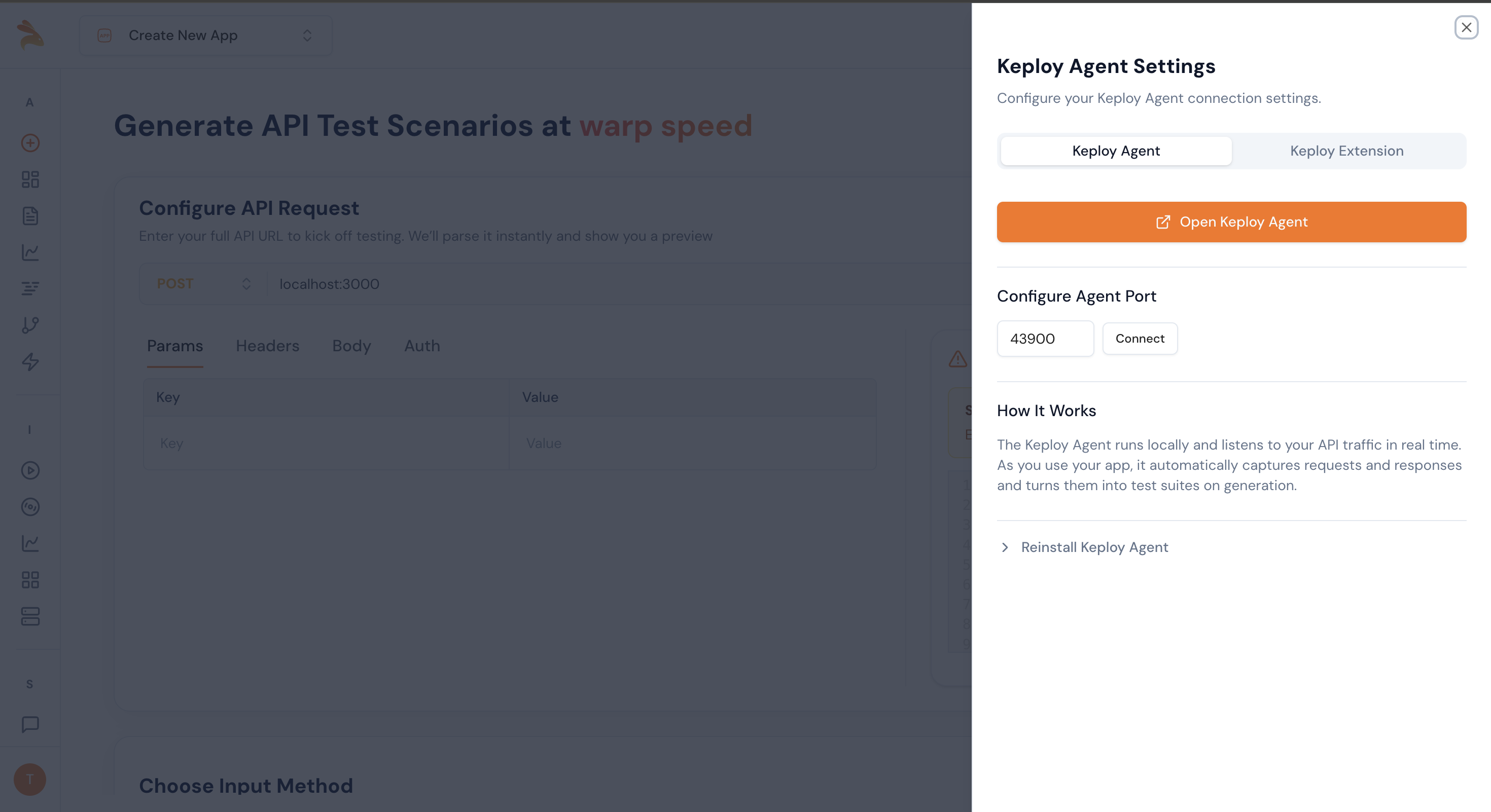

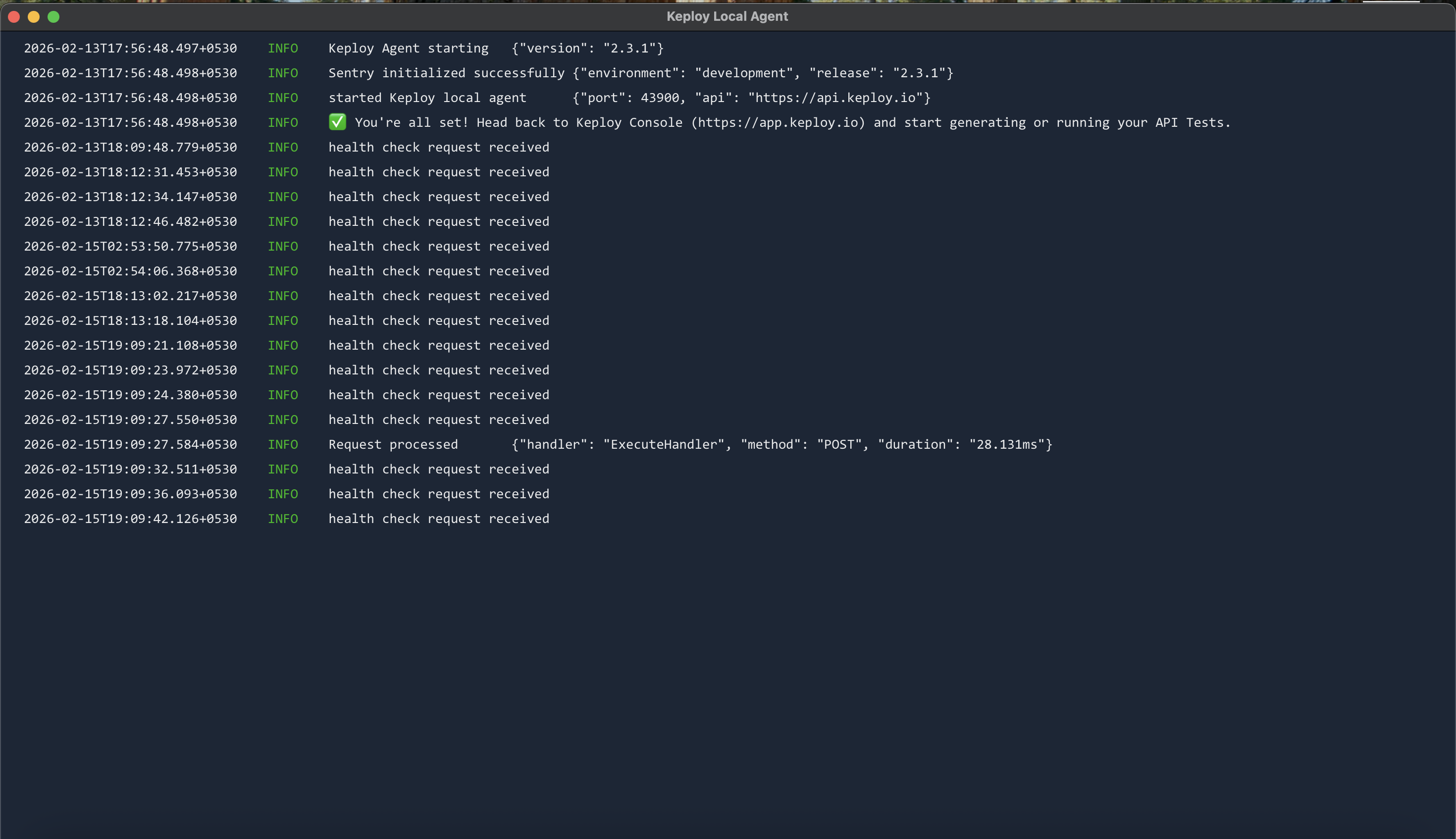

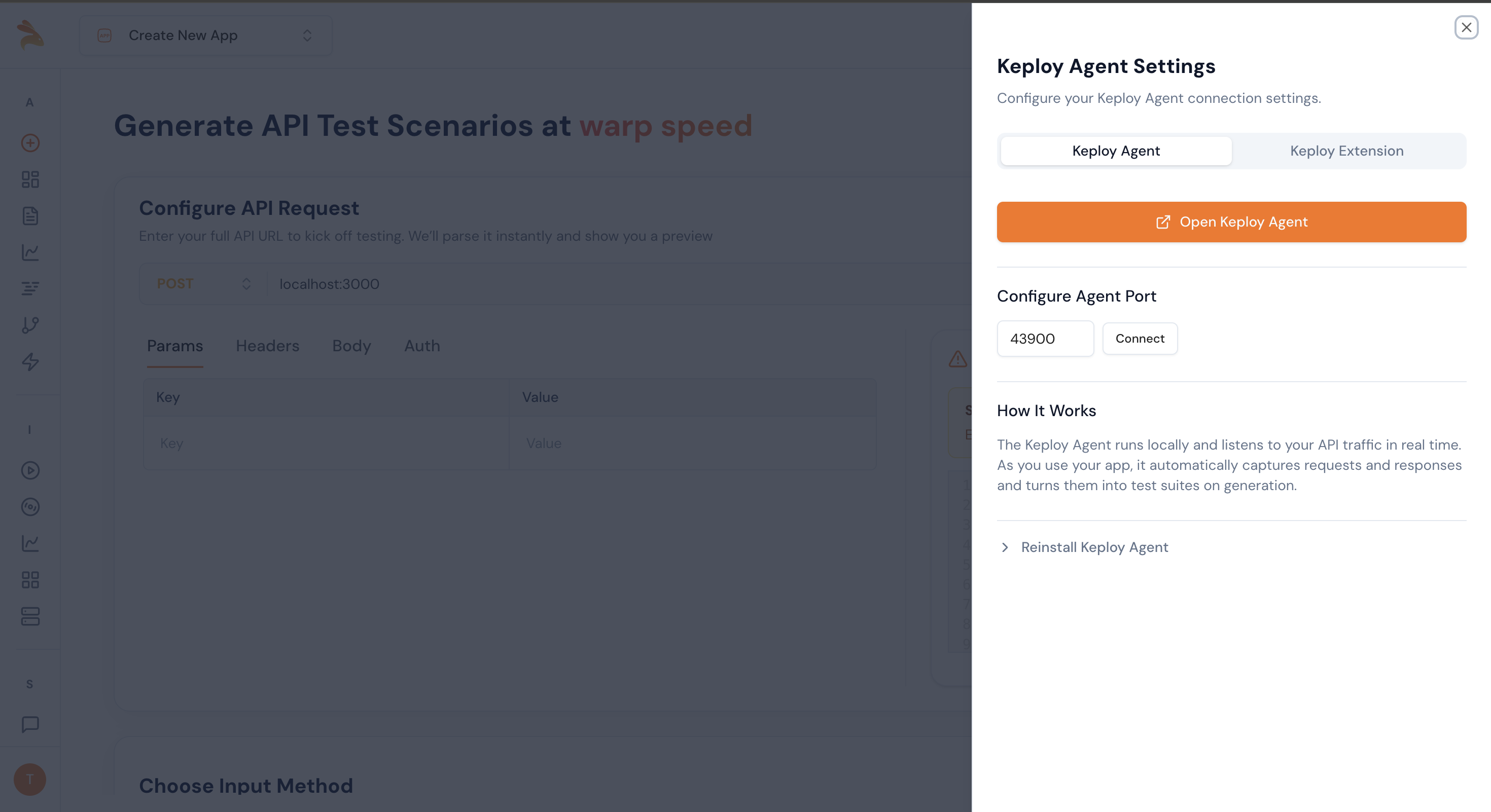

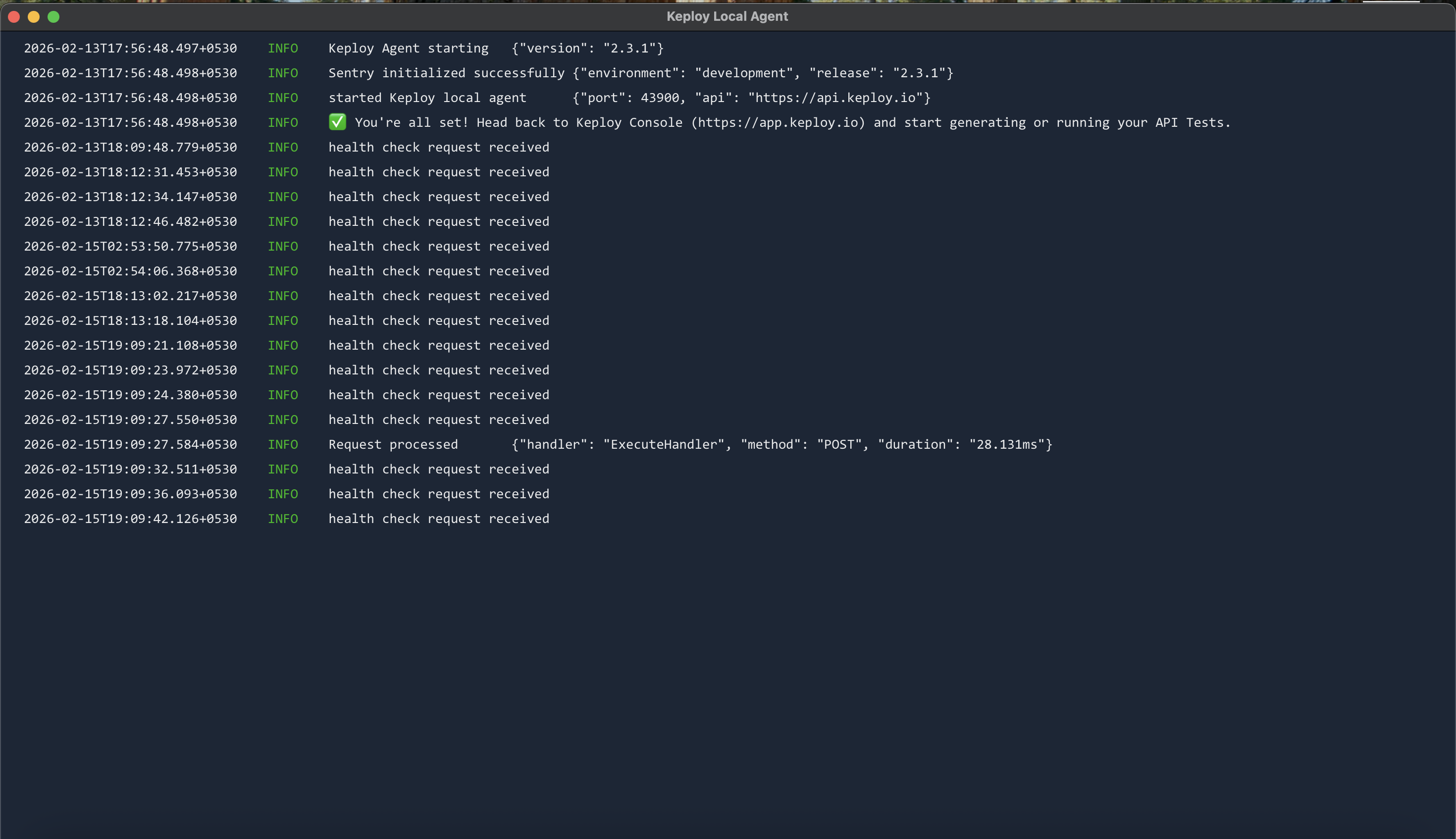

+This guide explains how to use the local agent to test private or local endpoints. Follow the steps below to set up and use the local agent effectively.

+

+## Steps to Use the Local Agent

+

+1. **Enter the Endpoint URL**

+ - Enter the endpoint URL you want to test. This can be a private URL, a `localhost` URL, or any other endpoint.

+

+2. **Download the Keploy Agent**

+ - Download the Keploy agent for your operating system:

+ - **Windows**

+ - **Mac**

+ - **Linux**

+

+

+3. **Start the Keploy Agent**

+ - Once the agent is downloaded, start it on your device.

+ - Open the agent interface to ensure it is running and ready to connect.

+

+

+

+4. **Record API Calls**

+ - The Keploy agent will automatically record the API calls you make.

+ - It will capture the responses and start generating test suites based on the recorded calls.

+

+5. **Troubleshooting Connection Issues**

+ - Ensure that the agent is running and the endpoint URL is correct.

+ - If the local agent fails to connect, use the Keploy Chrome extension instead: [Record API Tests via Chrome Extension](https://keploy.io/docs/running-keploy/api-testing-chrome-extension/)

+

+By following these steps, you can efficiently use the local agent to test your APIs and generate test suites automatically.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-mark-unbuggy.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-mark-unbuggy.md

new file mode 100644

index 000000000..b15975303

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-mark-unbuggy.md

@@ -0,0 +1,77 @@

+---

+id: api-testing-mark-unbuggy

+title: Mark Test Suite as Unbuggy

+description: Guide to marking test suites as unbuggy after fixing issues

+sidebar_label: Mark as Unbuggy

+tags:

+ - api-testing

+ - test-management

+ - suite-status

+ - debugging

+---

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+After resolving issues in a buggy test suite, you can mark it as unbuggy to indicate that the problems have been addressed and the suite is functioning correctly again.

+

+## When to Mark a Suite as Unbuggy

+

+Mark a test suite as unbuggy when:

+

+- All test failures have been resolved

+- API endpoints are working as expected

+- Schema validations are passing

+- Authentication issues have been fixed

+- Test assertions are now accurate

+- The underlying API issues have been corrected

+

+## How to Mark a Suite as Unbuggy

+

+### Step 1: Navigate to the Test Suite

+

+1. Navigate to the **Test Suites** section

+2. Click on the specific test suite you want to mark as unbuggy

+

+### Step 2: Access Suite Options

+

+Once you're on the test suite page:

+

+1. Look for the **three dots (⋮)** menu next to the test suite name

+2. Select **"Unmark Buggy"** from the dropdown options

+

+## What Happens When You Mark a Suite as Unbuggy

+

+### Immediate Changes

+

+- **Status Update**: The suite status changes from "Buggy" to "Active"

+- **Future Runs**: The suite will run normally in subsequent test executions

+- **Reporting**: The suite will be included in standard test reports

+- **Monitoring**: Keploy will continue monitoring the suite for new issues

+- **History**: The previous buggy status and resolution are logged in the suite history

+

+## Verification Examples
+
+### 1. API Endpoint Restored

+

+**Scenario**: A 404 error was resolved by fixing the API endpoint

+

+**Before marking unbuggy**:

+```bash

+# Verify the endpoint is working (-i prints the status line and response headers)
+curl -i -X POST https://api.example.com/owners \
+ -H "Content-Type: application/json" \
+ -d '{"name": "Test Owner"}'

+```

+

+### 2. Schema Issues Fixed

+

+**Scenario**: Response schema validation was fixed by updating the API

+

+**Verification steps**:

+1. Check that response format matches expectations

+2. Verify all required fields are present

+3. Confirm data types are correct
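Those checks can be partly automated with a small required-field and type check. The sketch below uses a hand-written field-to-type map for a hypothetical owner resource (matching the `curl` payload above); in practice a JSON Schema validator is likely the more robust choice.

```javascript
// Expected type for each required field (hypothetical owner resource).
const ownerSchema = { id: "number", name: "string", pets: "array" };

// Return a list of problems; an empty list means the body matches.
function checkSchema(body, schema) {
  const problems = [];
  for (const [field, expectedType] of Object.entries(schema)) {
    if (!(field in body)) {
      problems.push(`missing field "${field}"`);
      continue;
    }
    const value = body[field];
    // typeof reports arrays and null as "object", so distinguish them explicitly.
    const actualType = Array.isArray(value) ? "array" : value === null ? "null" : typeof value;
    if (actualType !== expectedType) {
      problems.push(`"${field}" should be ${expectedType}, got ${actualType}`);
    }
  }
  return problems;
}

console.log(checkSchema({ id: 1, name: "Test Owner", pets: [] }, ownerSchema)); // []
// Reports the wrong type for id and the missing pets field:
console.log(checkSchema({ id: "1", name: "Test Owner" }, ownerSchema));
```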

+

+Suites can also be marked as unbuggy automatically when automated fixes resolve the underlying issues.

+

+Remember: Marking a suite as unbuggy should only be done after thoroughly verifying that all issues have been resolved and the suite is functioning correctly.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-run-report.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-run-report.md

new file mode 100644

index 000000000..721074be6

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-run-report.md

@@ -0,0 +1,206 @@

+---

+id: api-testing-run-report

+title: Test Run Reports

+description: Guide to viewing and analyzing test run reports with detailed execution results and filtering

+sidebar_label: Test Run Reports

+tags:

+ - api-testing

+ - run-reports

+ - test-execution

+ - test-results

+ - test-management

+---

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+This guide explains how to use the run report page to track and analyze your test execution results.

+

+## Run Report List View

+

+### Report Summary Information

+

+For each test run, you can view:

+

+1. **Report ID**: Unique identifier for the test run

+2. **Ran on**: Timestamp when the test run was executed

+3. **Ran by**: User or system that initiated the test run

+4. **Total Suites**: Total number of test suites executed

+5. **Status Distribution**:

+ - **Pass**: Number of test suites that passed ✅

+ - **Fail**: Number of test suites that failed ❌

+ - **Buggy**: Number of test suites with bugs 🐛

+

+### Viewing Report List

+

+1. **Navigate to Run Reports**

+ - Go to the run reports section in Keploy

+ - View the list of all test execution runs

+

+2. **Review Report Summary**

+ - See the overall pass/fail/buggy distribution

+ - Identify problematic test runs at a glance

+ - Track test execution history

+

+## Detailed Report View

+

+### Accessing Detailed Results

+

+Click on any report from the list to view detailed execution results:

+

+1. **Click on Report ID**

+ - Select a report to view full details

+ - Access comprehensive test execution information

+

+2. **View Test Results**

+ - See detailed breakdown of all test suites

+ - Identify which tests passed, failed, or are buggy

+ - Review execution metrics and timings

+

+### Understanding Test Results

+

+#### Passed Tests ✅

+- Tests that successfully completed all assertions

+- All validations matched expected results

+- No errors or warnings during execution

+

+#### Failed Tests ❌

+- Tests that did not meet assertion criteria

+- **Failure Reasons Displayed**:

+ - Assertion mismatches

+ - Unexpected response values

+ - Status code mismatches

+ - Timeout errors

+- **Association Failures**:

+ - Failures from dependent services or associations

+ - External API failures affecting the test

+ - Database connection issues

+

+#### Buggy Tests

+- Tests with identified bugs or inconsistent behavior

+- **Buggy Reasons Displayed**:

+ - Shown on top of the particular test step

+ - Detailed error messages and stack traces

+ - Intermittent failures or race conditions

+ - Data inconsistencies

+

+## Filtering Test Results

+

+The run report page provides powerful filtering options to help you analyze specific test results:

+

+### Available Filters

+

+#### 1. Filter by Suite Status

+Filter tests based on their execution outcome:

+- **Passed**: Show only successful tests

+- **Failed**: Show only failed tests

+- **Buggy**: Show only buggy tests

+- **All**: View all test results

+

+#### 2. Filter by Status Code

+Filter by HTTP response status codes:

+- **2xx Success**: 200 OK, 201 Created, 204 No Content

+- **3xx Redirection**: 301, 302, 304

+- **4xx Client Errors**: 400, 401, 403, 404

+- **5xx Server Errors**: 500, 502, 503, 504

+- **Custom Code**: Filter by specific status codes

+

+#### 3. Filter by HTTP Method

+Filter tests by request method:

+- **GET**: Retrieve operations

+- **POST**: Create operations

+- **PUT**: Update operations

+- **PATCH**: Partial update operations

+- **DELETE**: Delete operations

+- **OPTIONS, HEAD**: Other HTTP methods

+

+#### 4. Filter by Endpoint

+Filter by API endpoint or URL path:

+- Full endpoint URL

+- Partial path matching

+- Wildcard patterns

+- Multiple endpoints selection

+

+### Applying Filters

+

+1. **Open Filter Panel**

+ - Click on the filter icon in the report view

+ - Select desired filter criteria

+ - You can also apply multiple filters simultaneously
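When several filters are active at once, a natural reading is that a step must satisfy every selected filter type, and any of the selected values within one type. That combination rule is an assumption, as are the field names and sample data in this sketch:

```javascript
// Sample test-step results from a run report (hypothetical data).
const steps = [
  { suite: "owners", status: "failed", method: "POST", code: 500, endpoint: "/owners" },
  { suite: "owners", status: "passed", method: "GET", code: 200, endpoint: "/owners/7" },
  { suite: "visits", status: "buggy", method: "GET", code: 400, endpoint: "/visits/-1" },
  { suite: "pets", status: "failed", method: "PUT", code: 404, endpoint: "/pets/99" },
];

// Each filter is optional; an empty list or empty string means "no restriction".
// Filter types are combined with AND; values within one type with OR.
function applyFilters(items, { statuses = [], methods = [], codes = [], endpoint = "" } = {}) {
  return items.filter(
    (item) =>
      (statuses.length === 0 || statuses.includes(item.status)) &&
      (methods.length === 0 || methods.includes(item.method)) &&
      (codes.length === 0 || codes.includes(item.code)) &&
      item.endpoint.includes(endpoint)
  );
}

// Failed POST or PUT steps, regardless of status code or endpoint.
const failedWrites = applyFilters(steps, { statuses: ["failed"], methods: ["POST", "PUT"] });
console.log(failedWrites.map((s) => s.endpoint)); // [ '/owners', '/pets/99' ]
```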

+

+

+## Detailed Test Step Information

+

+### Viewing Step-by-Step Results

+

+For each test case, you can see:

+

+1. **Test Steps Breakdown**

+ - Individual steps within each test

+ - Request and response details

+ - Execution time for each step

+

+2. **Buggy Reasons on Test Steps**

+ - Detailed error messages displayed on top of the affected step

+ - Root cause analysis

+ - Stack traces when available

+ - Suggested fixes or actions

+

+3. **Failure Reasons from Assertions**

+ - Expected vs actual values comparison

+ - Schema validation errors

+ - Assertion failure details

+

+4. **Association Failures**

+ - Failures from dependent services

+ - External API errors

+ - Database or integration issues

+ - Cascading failure analysis

+

+## Example Report View

+

+```

+┌────────────────────────────────────────────────────────────────┐
+│ Run Reports                                                    │
+├────────────────────────────────────────────────────────────────┤
+│                                                                │
+│ Report ID: run-2026-02-13-001                                  │
+│ Ran on: 2026-02-13 14:25:30                                    │
+│ Ran by: john.doe@example.com                                   │
+│ Total Suites: 150                                              │
+│                                                                │
+│ Distribution:                                                  │
+│   ✅ Pass: 125 (83%)                                           │
+│   ❌ Fail: 18 (12%)                                            │
+│   🐛 Buggy: 7 (5%)                                             │
+│                                                                │
+│ [Test Suite Details]                                           │
+│                                                                │
+├────────────────────────────────────────────────────────────────┤
+│                                                                │
+│ Report ID: run-2026-02-13-002                                  │
+│ Ran on: 2026-02-13 10:15:22                                    │
+│ Ran by: Automated CI/CD                                        │
+│ Total Suites: 200                                              │
+│                                                                │
+│ Distribution:                                                  │
+│   ✅ Pass: 180 (90%)                                           │
+│   ❌ Fail: 15 (7.5%)                                           │
+│   🐛 Buggy: 5 (2.5%)                                           │
+│                                                                │
+│ [Test Suite Details]                                           │
+│                                                                │
+└────────────────────────────────────────────────────────────────┘

+```
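Each Distribution figure in the example is that outcome's share of the suites in the run. Pass + Fail + Buggy equals Total Suites (125 + 18 + 7 = 150 and 180 + 15 + 5 = 200), and each percentage is count ÷ total × 100. The Python sketch below shows that arithmetic. The `distribution` helper is illustrative only and is not a Keploy function.

```python
def distribution(counts: dict) -> dict:
    """Return each category's share of the total, as a percentage."""
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in counts}
    return {name: 100 * n / total for name, n in counts.items()}


first = {"pass": 125, "fail": 18, "buggy": 7}    # Total Suites: 150
second = {"pass": 180, "fail": 15, "buggy": 5}   # Total Suites: 200

print(sum(first.values()), sum(second.values()))  # 150 200
print({k: round(v, 1) for k, v in distribution(first).items()})
# {'pass': 83.3, 'fail': 12.0, 'buggy': 4.7}
print(distribution(second))
# {'pass': 90.0, 'fail': 7.5, 'buggy': 2.5}
```

The first report rounds its shares to whole percentages (83.3% → 83%, 4.7% → 5%). The second report's shares are exact to one decimal place.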

+

+## Benefits of Run Reports

+

+- **Comprehensive Testing Visibility**: Complete view of test execution results

+- **Quick Issue Identification**: Easily spot failures and bugs

+- **Detailed Diagnostics**: Step-by-step failure analysis

+- **Association Tracking**: Monitor external dependencies

+- **Historical Tracking**: Maintain test execution history

+- **Team Collaboration**: Share results and insights with your team

+- **Data-Driven Decisions**: Use metrics to improve test quality

+

+By leveraging the run report features, you can maintain high-quality APIs, quickly identify and fix issues, and ensure comprehensive test coverage across your application.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-running-selective.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-running-selective.md

new file mode 100644

index 000000000..d1e8756a0

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-running-selective.md

@@ -0,0 +1,101 @@

+---

+id: api-testing-running-selective

+title: Running Selective Test Suites

+description: Guide to selecting and running specific test suites using checkboxes and bulk actions

+sidebar_label: Selective Test Execution

+tags:

+ - api-testing

+ - test-execution

+ - bulk-actions

+ - test-management

+---

+

+

+import ProductTier from '@site/src/components/ProductTier';

+

+

+

+Keploy allows you to select specific test suites from your test collection and perform bulk actions like running tests, deleting suites, or adding labels.

+

+## Overview

+

+The selective test suite feature provides:

+

+- **Checkbox Selection**: Choose individual test suites or select all

+- **Bulk Actions**: Perform actions on multiple suites simultaneously

+- **Filtered Execution**: Run only the tests you've selected

+

+## Selecting Test Suites

+

+### Individual Selection

+

+1. **Navigate to Test Suites**

+ - Go to your Test Suite dashboard

 - Each test suite has a checkbox at the top for multi-selection; tick the suites you want to include

+

+### Bulk Selection Options

+

+#### Select All Suites

+```

+☑️ Select All (at the top of the list)

+```

+- Checkbox at the top of the suite list

+- Selects all visible test suites on the current page

+- Useful for applying an action to every suite listed on the page

+

+#### Select by Filter

+1. Apply filters (status, tags, creation date, etc.)

+2. Use "Select All" to choose all filtered results

+3. Only suites matching your criteria will be selected
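The "filter, then Select All" flow can be sketched as a function that returns the IDs of the suites that pass every active filter. The `Suite` record, its fields, and `select_all_filtered` are hypothetical stand-ins for whatever the dashboard stores. The sketch assumes that a suite must match all of the filters that are set.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Set


@dataclass
class Suite:
    # Hypothetical suite record used only for this illustration.
    id: str
    status: str
    tags: Set[str] = field(default_factory=set)
    created: date = date(2026, 1, 1)


def select_all_filtered(
    suites: List[Suite],
    status: Optional[str] = None,
    tag: Optional[str] = None,
    created_after: Optional[date] = None,
) -> List[str]:
    """Return the IDs that "Select All" would tick once filters are applied."""
    selected = []
    for s in suites:
        if status is not None and s.status != status:
            continue
        if tag is not None and tag not in s.tags:
            continue
        if created_after is not None and s.created <= created_after:
            continue
        selected.append(s.id)
    return selected


suites = [
    Suite("checkout", "buggy", {"smoke"}, date(2026, 2, 10)),
    Suite("login", "pass", {"smoke"}, date(2026, 2, 1)),
    Suite("search", "buggy", {"regression"}, date(2026, 1, 15)),
]

print(select_all_filtered(suites, status="buggy"))
# ['checkout', 'search']
print(select_all_filtered(suites, tag="smoke", created_after=date(2026, 2, 5)))
# ['checkout']
```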

+

+## Available Actions

+

+Once you've selected test suites, several bulk actions become available:

+

+### 1. Run Selected Tests

+

+**Button**: **Run Selected**

+

+**What it does**:

+- Executes all test cases within the selected suites

+- Runs tests in sequential order

+- Provides consolidated results for all selected suites
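The behavior described above (sequential execution, one consolidated result) can be pictured with a short sketch. It does not call Keploy: `run_selected` and the `run_suite` callback are hypothetical, and each suite's outcome is simplified to a single status string.

```python
from collections import Counter
from typing import Callable, Dict, Iterable


def run_selected(suite_ids: Iterable[str], run_suite: Callable[[str], str]) -> Dict:
    """Run the selected suites one after another and consolidate the outcomes.

    `run_suite` is a hypothetical stand-in for whatever executes a suite;
    here it just returns an outcome string such as "pass" or "fail".
    """
    per_suite = {}
    for suite_id in suite_ids:  # sequential: each suite finishes before the next starts
        per_suite[suite_id] = run_suite(suite_id)
    return {"per_suite": per_suite, "totals": dict(Counter(per_suite.values()))}


# Fake outcomes so the example runs without a real backend.
fake_outcomes = {"login": "pass", "checkout": "fail", "search": "pass"}
report = run_selected(["login", "checkout", "search"], fake_outcomes.get)
print(report["totals"])  # {'pass': 2, 'fail': 1}
```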

+

+### 2. Delete Selected Suites

+

+**Button**: **Delete Selected**

+

+**What it does**:

+- Permanently removes selected test suites

+- Deletes all test cases within those suites

+

+**Safety Features**:

+- Confirmation dialog before deletion

+- Shows the list of suites to be deleted

+

+### 3. Add Labels

+

+**Button**: **Add Labels**

+

+**What it does**:

+- Adds labels/tags to selected test suites

+- Helps organize and categorize tests

+

+**Sample Label Types**:

+- **Priority**: `high`, `medium`, `low`

+- **Category**: `smoke`, `regression`, `integration`

+- **Custom**: Any custom label you define

+

+## Best Practices

+

+- Verify that all intended suites are selected before running a bulk action

+- Check that no critical tests are missing from the selection

+

+## Related Features

+

+- **[Test Suite Management](./api-testing-edit-suites.md)**: Edit and organize test suites

+- **[Buggy Test Suites](./api-testing-buggy-suites.md)**: Handle failing test suites

+- **[Test Reports](./api-testing-sharing-reports.md)**: View and share execution results

+- **[Custom Assertions](./api-testing-custom-assertions.md)**: Define custom validation rules

+

+The selective test execution feature gives you fine-grained control over your test suite management, enabling efficient testing workflows and better resource utilization.

\ No newline at end of file

diff --git a/versioned_docs/version-4.0.0/running-keploy/api-testing-schema-coverage.md b/versioned_docs/version-4.0.0/running-keploy/api-testing-schema-coverage.md

new file mode 100644

index 000000000..e60b7f2fc

--- /dev/null

+++ b/versioned_docs/version-4.0.0/running-keploy/api-testing-schema-coverage.md

@@ -0,0 +1,146 @@

+---

+id: api-testing-schema-coverage

+title: Schema Coverage and Generation

+description: Guide to viewing schema coverage and generating tests for missing coverage

+sidebar_label: Schema Coverage

+tags:

+ - api-testing