Connecting Functional API models together #4205

Comments

|

You should connect the output layer of the first network to the input layer of the second network. Something like: |
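A minimal sketch of this suggestion, using small hypothetical stand-ins for the two models. The names `generator`, `discriminator`, and `stacked`, plus all layer sizes and layer names, are assumptions for illustration and are not the code from this thread. The idea is that calling the second model on the first model's output tensor produces new symbolic outputs that do depend on `generator.input`, whereas `discriminator.output` only depends on `discriminator.input`.

```python
import keras
from keras import layers

# Hypothetical stand-in for the first model (the "generator").
g_in = keras.Input(shape=(8,), name="g_in")
g_out = layers.Dense(16, activation="tanh", name="g_out")(g_in)
generator = keras.Model(inputs=g_in, outputs=g_out, name="generator")

# Hypothetical stand-in for the second model, with two outputs.
d_in = keras.Input(shape=(16,), name="d_in")
d_hidden = layers.Dense(8, activation="relu", name="d_hidden")(d_in)
d_regression = layers.Dense(3, name="regression")(d_hidden)
d_real_or_fake = layers.Dense(1, activation="sigmoid", name="real_or_fake")(d_hidden)
discriminator = keras.Model(
    inputs=d_in, outputs=[d_regression, d_real_or_fake], name="discriminator"
)

# Call the second model on the first model's output tensor. The result is a
# list of two symbolic tensors that are connected to generator.input.
stacked_outputs = discriminator(generator.output)
stacked = keras.Model(inputs=generator.input, outputs=stacked_outputs, name="stacked")

stacked.summary()
```

In the summary, the whole second model shows up as a single layer named `discriminator`, because it is reused as one callable unit.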

|

@dieuwkehupkes but (there's always a BUT!) when I use Thanks again |

|

Not sure, what did you do exactly? Wasn't discriminator your second model? What did you call your stacked model? Maybe you put the wrong functions when you compiled it? |

|

@fchollet @dieuwkehupkes Generator => Input : I want one of the discriminator outputs to be trained with an MAE loss that regresses to an RGB image, and the other to be the normal discriminator output (0 or 1) that indicates whether the input is fake. The diagrams are shown in the previous comment. The usual way (without the regressor) is to use binary cross-entropy loss for the generator, for the discriminator, and for the model that consists of both. But right now I don't know how to choose the losses for the models: what kind of loss should I give the generator to account for both the discriminator's MAE and BCE losses? Here's what I did: This setting gives me 3 losses whenever I call |

|

The way you set up the loss (a different one for each of the two outputs) seems fine. Maybe the third 'loss' you see during training is actually a default metric? I have trouble reproducing the issue; could you maybe paste your code in a gist and send the link? |

|

Sure... Here's the gist. Thanks! |

|

I get an error when running your code because there is still a reference to a folder higher in your directory. When I call discriminator_on_generator.loss I just get two losses, as you specified. I then ran predict_on_batch for discriminator_on_generator with some random arrays as input and output, and then I finally understood what you mean. The three losses you get are: the overall loss of the network, the loss of the first output layer, and the loss of the second output layer. If you are ever in doubt about the numbers in some training output, you can put Does that solve your problem? |
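A small sketch of where the three numbers come from, assuming a hypothetical two-output model (all names, shapes, and the random data below are made up for illustration). With no `loss_weights` and no regularizers, Keras documents the overall loss as the weighted sum of the per-output losses, so here it should match MAE plus BCE recomputed by hand from the predictions.

```python
import numpy as np
import keras
from keras import layers

# Hypothetical model with one regression output and one real/fake output.
inp = keras.Input(shape=(8,), name="features")
hidden = layers.Dense(8, activation="relu")(inp)
regression = layers.Dense(3, name="regression")(hidden)
real_or_fake = layers.Dense(1, activation="sigmoid", name="real_or_fake")(hidden)
model = keras.Model(inputs=inp, outputs=[regression, real_or_fake])

# One loss per output, in the same order as the outputs.
model.compile(optimizer="adam", loss=["mae", "binary_crossentropy"])

# Random data, only to have something to evaluate on.
rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8)).astype("float32")
y_rgb = rng.normal(size=(16, 3)).astype("float32")
y_real = rng.integers(0, 2, size=(16, 1)).astype("float32")

# "loss" is the overall value; per-output entries may also be present,
# depending on the Keras version.
reported = model.evaluate(x, [y_rgb, y_real], verbose=0, return_dict=True)

# Recompute both losses by hand from the model's predictions.
pred_rgb, pred_real = model.predict(x, verbose=0)
mae = float(np.mean(np.abs(y_rgb - pred_rgb)))
p = np.clip(pred_real, 1e-7, 1 - 1e-7)
bce = float(np.mean(-(y_real * np.log(p) + (1 - y_real) * np.log(1 - p))))

print(reported)
print("mae + bce =", mae + bce)
```

If the overall value differs from the plain sum in a real setup, likely candidates are non-default `loss_weights`, regularization penalties added to the total, or progress-bar values that are running averages over batches; which of these applies to a given model is an assumption to check, not something this sketch establishes.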

|

Thanks... I learned so much! It finally started working, even though I think the architecture is useless. It seems that no matter what parameters I set, the discriminator does its own thing while the generator does the opposite! The discriminator reports 3 losses, but the overall loss isn't exactly the sum of the other 2, and with 2 losses I'm not sure how the update is propagated to the generator. From the outputs I checked, it seems that after a while they start overriding each other's changes and become destructive instead of useful. I should think up an architecture where the discriminator helps the regressor and vice versa. Nevertheless, thanks for the help and your time. You really helped me out! |

|

Glad to hear that, happy to help! If you have some more questions just lmk :) |

|

@dieuwkehupkes I'm having a similar issue, but I'm using a model as a few intermediate layers.

    def Generator():
        # INPUTS
        vq = keras.layers.Input(shape=(16,16,256), name="vq")
        r = keras.layers.Input(shape=(16,16,256), name="r")
        h0 = keras.layers.Input(shape=(16,16,256), name="h0")
        c0 = keras.layers.Input(shape=(16,16,256), name="c0")
        u0 = keras.layers.Input(shape=(64,64,256), name="u0")

        cell0 = GeneratorCell()(inputs=[vq, r, h0, c0, u0])

        something = keras.layers.Conv2D(
            filters = 3,
            kernel_size = (1,1),
            strides = (1,1)
        )(cell0[2])

        model = keras.Model(inputs=[vq, r, h0, c0, u0], outputs=something)
        return model

Keras tells me:

The strange thing is that I've tested the GeneratorCell model independently and it works, and the Generator model becomes differentiable if I remove the GeneratorCell. I assume I must be using the functional API wrong, but I'm not sure why this would not be differentiable? |

|

Hi @elihanover, It's been quite a while since I used Keras, so I'm not sure if I'll be able to help you. One question I have about your post is where this generator cell is coming from: did you define it yourself? Perhaps you are calling it with the wrong arguments? What do you mean when you say it works when you remove the generator cell? In that case, what would be the input to your convolutional layer?
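For reference, a minimal sketch of using a multi-input, multi-output functional model as an intermediate block. `make_cell` is a hypothetical stand-in and not the `GeneratorCell` from the comment above, whose definition is not shown in this thread; the shapes and layer names are also assumptions. It only illustrates the calling pattern: pass the list of input tensors to the sub-model, then index the list of outputs it returns.

```python
import keras
from keras import layers


def make_cell():
    """Hypothetical two-input, two-output sub-model (not the real GeneratorCell)."""
    a = keras.Input(shape=(16, 16, 4), name="a")
    b = keras.Input(shape=(16, 16, 4), name="b")
    merged = layers.Concatenate(name="merged")([a, b])
    h = layers.Conv2D(4, (3, 3), padding="same", name="h")(merged)
    c = layers.Conv2D(4, (3, 3), padding="same", name="c")(merged)
    return keras.Model(inputs=[a, b], outputs=[h, c], name="cell")


# Inputs of the outer model.
x = keras.Input(shape=(16, 16, 4), name="x")
y = keras.Input(shape=(16, 16, 4), name="y")

# Calling the sub-model on a list of tensors returns a list of output tensors.
cell_outputs = make_cell()([x, y])

# Feed one of the sub-model's outputs into a further layer.
rgb = layers.Conv2D(filters=3, kernel_size=(1, 1), strides=(1, 1), name="rgb")(
    cell_outputs[1]
)

outer = keras.Model(inputs=[x, y], outputs=rgb, name="outer")
outer.summary()
```

If a pattern like this works but the real cell does not, the difference is probably inside the cell itself, for example an operation that is not tracked as a Keras layer; that is a guess, since the error message is not shown here.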

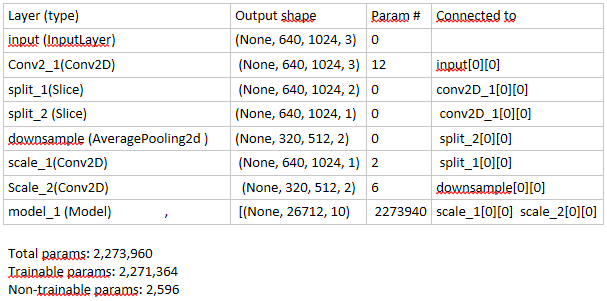

Hi @dieuwkehupkes, I know it's an old comment of yours, but it is still helpful. I'm using the same code as you've mentioned above to combine 2 separate Keras Models and it works correctly. EXCEPT for one small problem when I want to print out the summary of the newly combined model. When I print out the summary, I get the following output: The main info from above to look out for is the last layer called Do you or does anyone else know how one can achieve this in Keras? |

@fchollet

I'm trying to connect 2 functional API models together. Here's the summary of the 2 models:

The First "Input" Model (It works as a single model just fine):

The Second Model that is supposed to be connected to the first model:

I'm trying to connect them together like this:

    model = Model(input=generator.input, output=[discriminator.output[0], discriminator.output[1]])

But I get this error:

I tried to make a model out of them like this:

    Model(input=[generator.input, discriminator.input], output=[discriminator.output[0], discriminator.output[1]])

But this just resulted in the second model (and not the two of them together), or at least that is what I think after getting a summary of the model and plotting its structure.

Can we do this in Keras (connecting functional API models), or is there another way?

Thanks
