diff --git a/README.md b/README.md

index 6cf5c3f..c0cc2a7 100644

--- a/README.md

+++ b/README.md

@@ -1,9 +1,9 @@

-# pysheds [](https://travis-ci.org/mdbartos/pysheds) [](https://coveralls.io/github/mdbartos/pysheds?branch=master) [](https://www.python.org/downloads/release/python-370/)

+# pysheds [](https://travis-ci.org/mdbartos/pysheds) [](https://coveralls.io/github/mdbartos/pysheds?branch=master) [](https://www.python.org/downloads/)

🌎 Simple and fast watershed delineation in python.

## Documentation

-Read the docs [here](https://mdbartos.github.io/pysheds).

+Read the docs [here 📖](https://mdbartos.github.io/pysheds).

## Media

@@ -17,45 +17,136 @@ Read the docs [here](https://mdbartos.github.io/pysheds).

## Example usage

-See [examples/quickstart](https://github.com/mdbartos/pysheds/blob/master/examples/quickstart.ipynb) for more details.

+Example data used in this tutorial are linked below:

-Data available via the [USGS HydroSHEDS](https://hydrosheds.cr.usgs.gov/datadownload.php) project.

+ - Elevation: [elevation.tiff](https://pysheds.s3.us-east-2.amazonaws.com/data/elevation.tiff)

+ - Terrain: [impervious_area.zip](https://pysheds.s3.us-east-2.amazonaws.com/data/impervious_area.zip)

+ - Soil Polygons: [soils.zip](https://pysheds.s3.us-east-2.amazonaws.com/data/soils.zip)

+

+Additional DEM datasets are available via the [USGS HydroSHEDS](https://www.hydrosheds.org/) project.

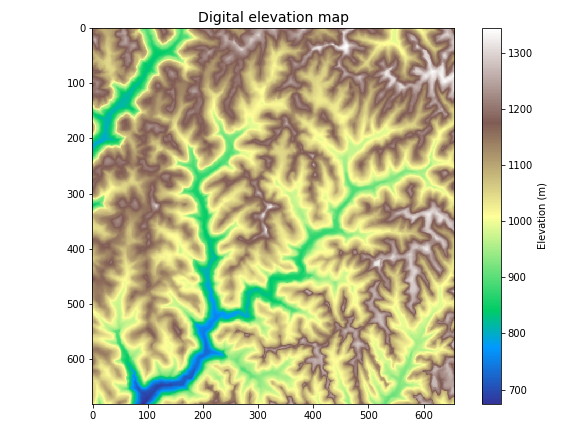

### Read DEM data

```python

-# Read elevation and flow direction rasters

+# Read elevation raster

# ----------------------------

from pysheds.grid import Grid

-grid = Grid.from_raster('n30w100_con', data_name='dem')

-grid.read_raster('n30w100_dir', data_name='dir')

-grid.view('dem')

+grid = Grid.from_raster('elevation.tiff')

+dem = grid.read_raster('elevation.tiff')

```

-

+

+Plotting code...

+

-### Elevation to flow direction

+```python

+import numpy as np

+import matplotlib.pyplot as plt

+from matplotlib import colors

+import seaborn as sns

+

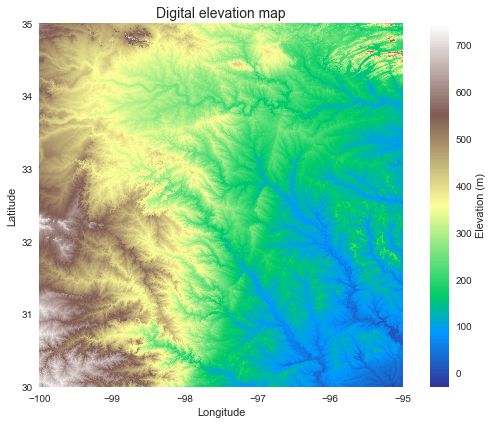

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.imshow(dem, extent=grid.extent, cmap='terrain', zorder=1)

+plt.colorbar(label='Elevation (m)')

+plt.grid(zorder=0)

+plt.title('Digital elevation map', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

+

+

+

+

+

+

+### Condition the elevation data

```python

-# Determine D8 flow directions from DEM

+# Condition DEM

# ----------------------

+# Fill pits in DEM

+pit_filled_dem = grid.fill_pits(dem)

+

# Fill depressions in DEM

-grid.fill_depressions('dem', out_name='flooded_dem')

+flooded_dem = grid.fill_depressions(pit_filled_dem)

# Resolve flats in DEM

-grid.resolve_flats('flooded_dem', out_name='inflated_dem')

-

+inflated_dem = grid.resolve_flats(flooded_dem)

+```

+

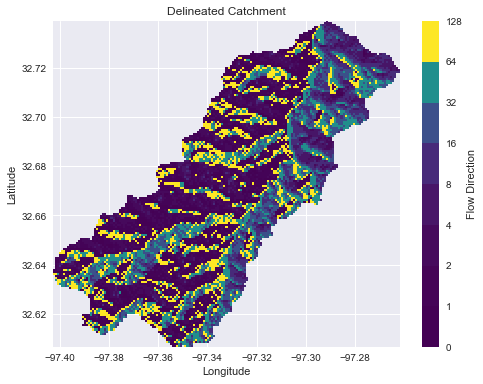

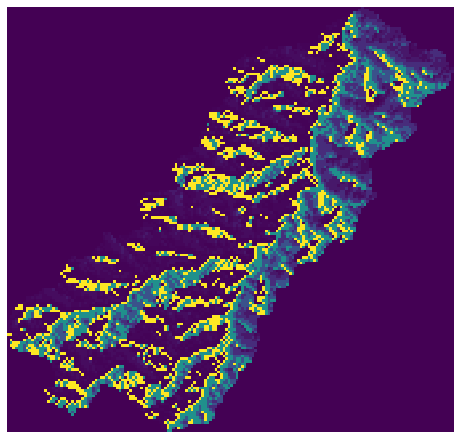

+### Elevation to flow direction

+

+```python

+# Determine D8 flow directions from DEM

+# ----------------------

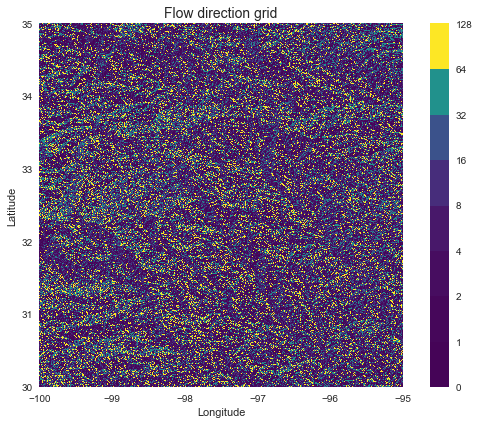

# Specify directional mapping

dirmap = (64, 128, 1, 2, 4, 8, 16, 32)

# Compute flow directions

# -------------------------------------

-grid.flowdir(data='inflated_dem', out_name='dir', dirmap=dirmap)

-grid.view('dir')

+fdir = grid.flowdir(inflated_dem, dirmap=dirmap)

+```

+

+

+Plotting code...

+

+

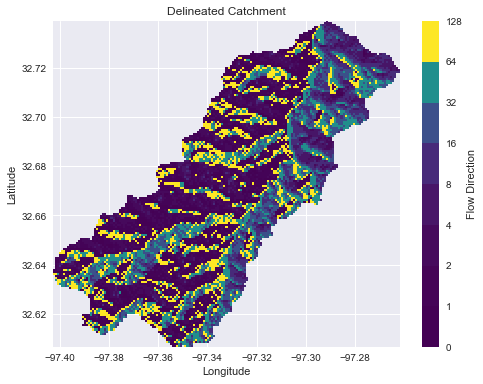

+```python

+fig = plt.figure(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.imshow(fdir, extent=grid.extent, cmap='viridis', zorder=2)

+boundaries = ([0] + sorted(list(dirmap)))

+plt.colorbar(boundaries= boundaries,

+ values=sorted(dirmap))

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Flow direction grid', size=14)

+plt.grid(zorder=-1)

+plt.tight_layout()

+```

+

+

+

+

+

+

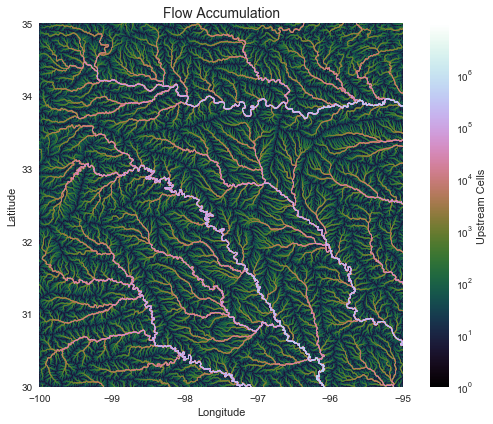

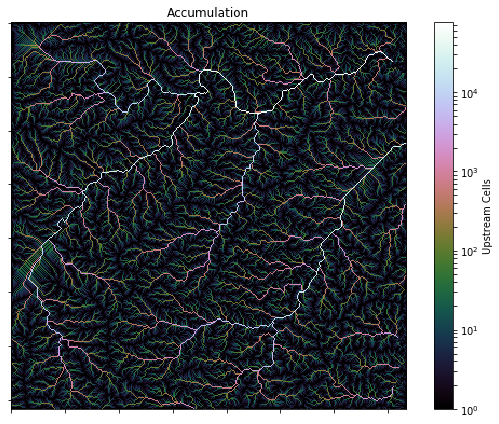

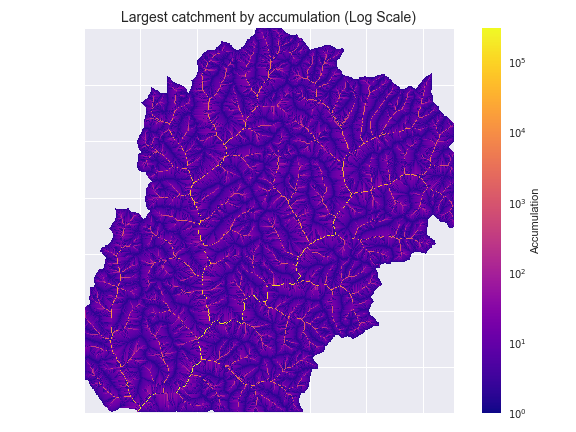

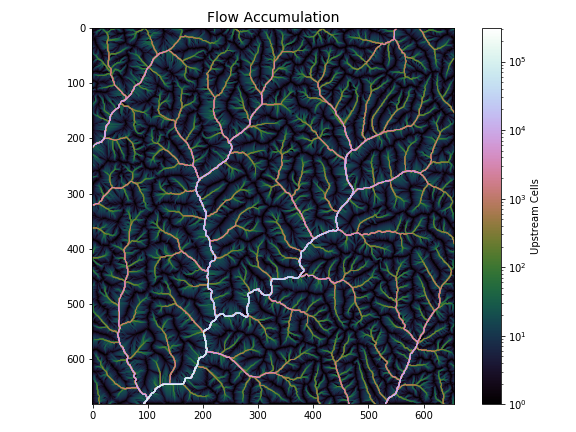

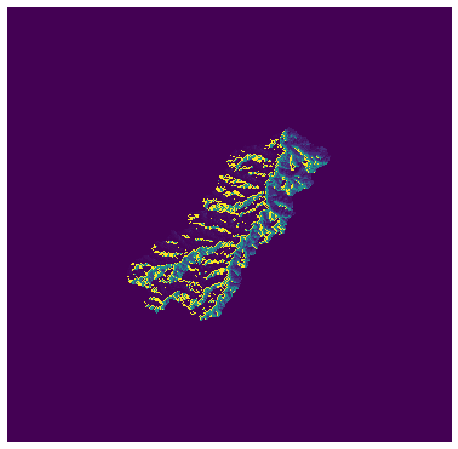

+### Compute accumulation from flow direction

+

+```python

+# Calculate flow accumulation

+# --------------------------

+acc = grid.accumulation(fdir, dirmap=dirmap)

```

-

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.grid('on', zorder=0)

+im = ax.imshow(acc, extent=grid.extent, zorder=2,

+ cmap='cubehelix',

+ norm=colors.LogNorm(1, acc.max()),

+ interpolation='bilinear')

+plt.colorbar(im, ax=ax, label='Upstream Cells')

+plt.title('Flow Accumulation', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

+

+

+

+

+

+

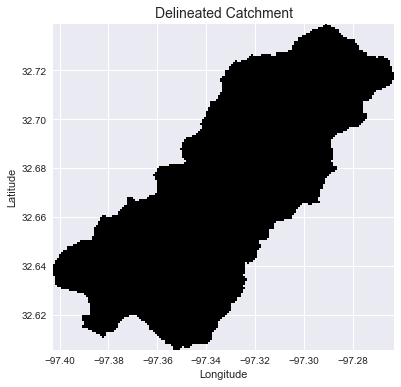

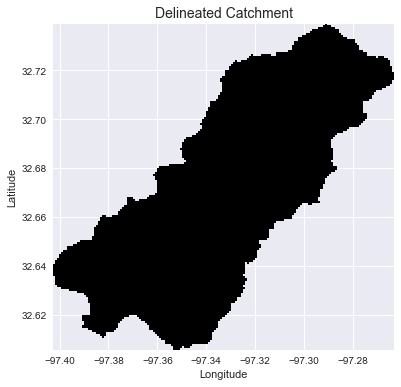

### Delineate catchment from flow direction

@@ -63,66 +154,139 @@ grid.view('dir')

# Delineate a catchment

# ---------------------

# Specify pour point

-x, y = -97.294167, 32.73750

+x, y = -97.294, 32.737

+

+# Snap pour point to high accumulation cell

+x_snap, y_snap = grid.snap_to_mask(acc > 1000, (x, y))

# Delineate the catchment

-grid.catchment(data='dir', x=x, y=y, dirmap=dirmap, out_name='catch',

- recursionlimit=15000, xytype='label')

+catch = grid.catchment(x=x_snap, y=y_snap, fdir=fdir, dirmap=dirmap,

+ xytype='coordinate')

# Crop and plot the catchment

# ---------------------------

# Clip the bounding box to the catchment

-grid.clip_to('catch')

-grid.view('catch')

+grid.clip_to(catch)

+clipped_catch = grid.view(catch)

```

-

+

+Plotting code...

+

-### Compute accumulation from flow direction

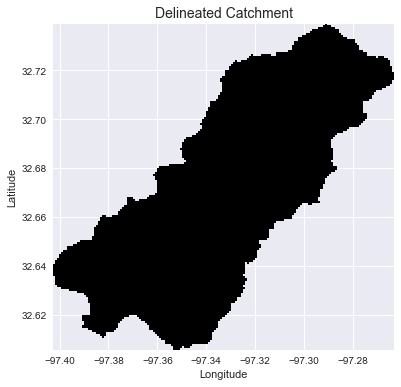

+```python

+# Plot the catchment

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.grid('on', zorder=0)

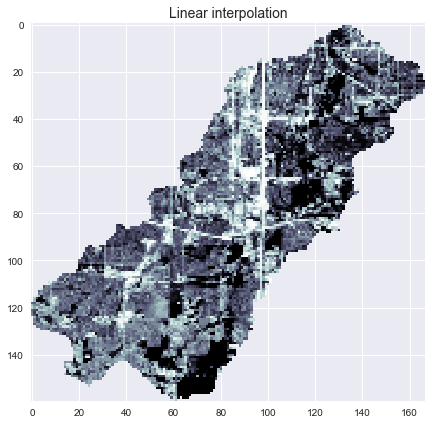

+im = ax.imshow(np.where(clipped_catch, clipped_catch, np.nan), extent=grid.extent,

+ zorder=1, cmap='Greys_r')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Delineated Catchment', size=14)

+```

+

+

+

+

+

+

+### Extract the river network

```python

-# Calculate flow accumulation

-# --------------------------

-grid.accumulation(data='catch', dirmap=dirmap, out_name='acc')

-grid.view('acc')

+# Extract river network

+# ---------------------

+branches = grid.extract_river_network(fdir, acc > 50, dirmap=dirmap)

```

-

+

+Plotting code...

+

+

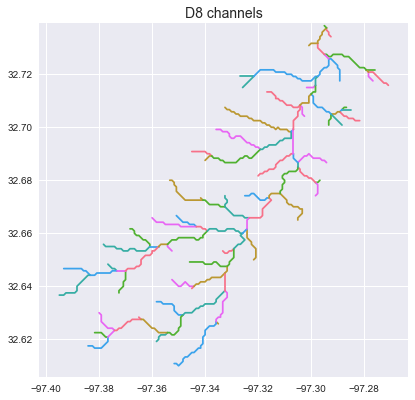

+```python

+sns.set_palette('husl')

+fig, ax = plt.subplots(figsize=(8.5,6.5))

+

+plt.xlim(grid.bbox[0], grid.bbox[2])

+plt.ylim(grid.bbox[1], grid.bbox[3])

+ax.set_aspect('equal')

+

+for branch in branches['features']:

+ line = np.asarray(branch['geometry']['coordinates'])

+ plt.plot(line[:, 0], line[:, 1])

+

+_ = plt.title('D8 channels', size=14)

+```

+

+

+

+

+

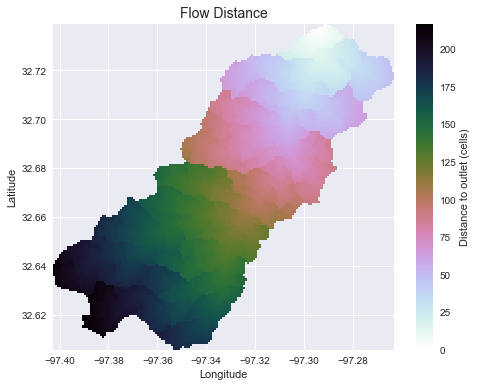

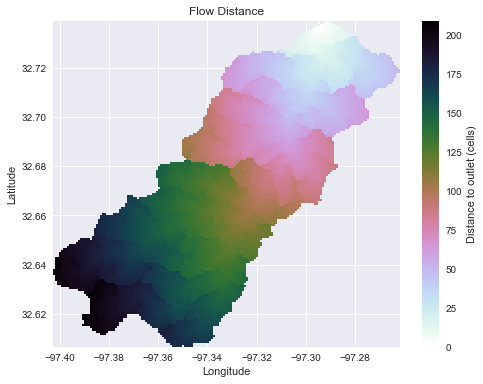

### Compute flow distance from flow direction

```python

# Calculate distance to outlet from each cell

# -------------------------------------------

-grid.flow_distance(data='catch', x=x, y=y, dirmap=dirmap,

- out_name='dist', xytype='label')

-grid.view('dist')

+dist = grid.distance_to_outlet(x=x_snap, y=y_snap, fdir=fdir, dirmap=dirmap,

+ xytype='coordinate')

```

-

-

-### Extract the river network

+

+Plotting code...

+

```python

-# Extract river network

-# ---------------------

-branches = grid.extract_river_network(fdir='catch', acc='acc',

- threshold=50, dirmap=dirmap)

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.grid('on', zorder=0)

+im = ax.imshow(dist, extent=grid.extent, zorder=2,

+ cmap='cubehelix_r')

+plt.colorbar(im, ax=ax, label='Distance to outlet (cells)')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Flow Distance', size=14)

```

-

+

+

+

+

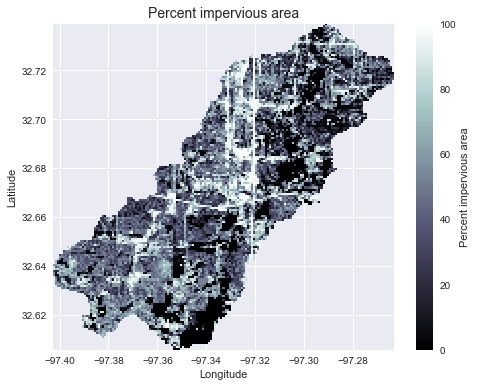

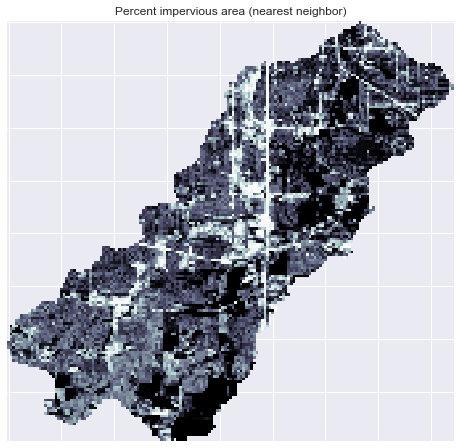

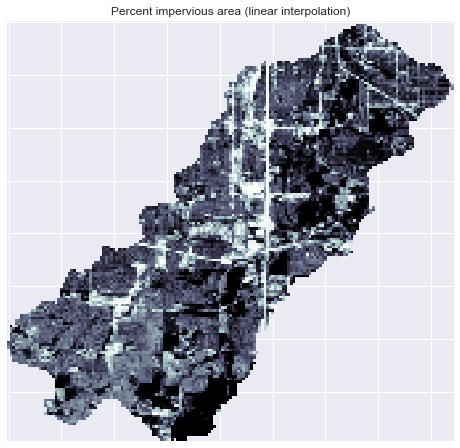

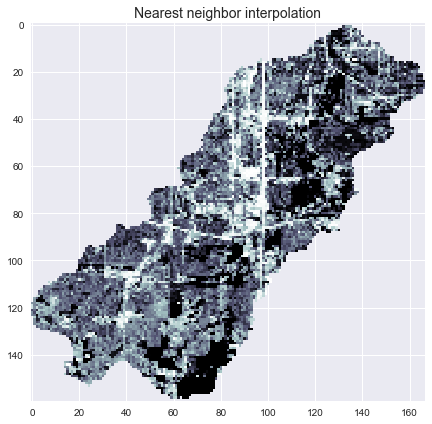

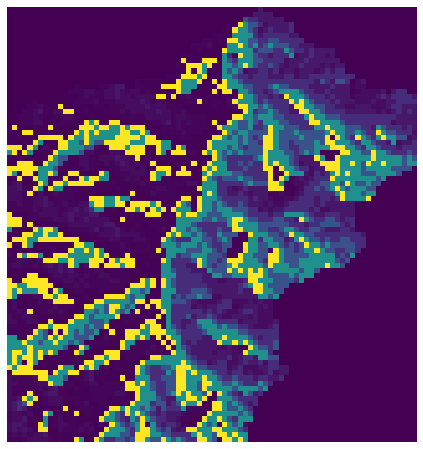

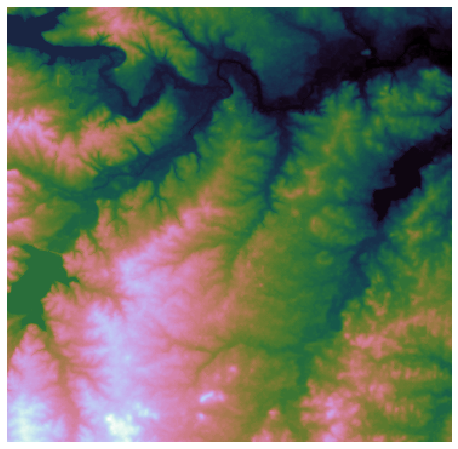

### Add land cover data

```python

# Combine with land cover data

# ---------------------

-grid.read_raster('nlcd_2011_impervious_2011_edition_2014_10_10.img',

- data_name='terrain', window=grid.bbox, window_crs=grid.crs)

-grid.view('terrain')

+terrain = grid.read_raster('impervious_area.tiff', window=grid.bbox,

+ window_crs=grid.crs, nodata=0)

+# Reproject data to grid's coordinate reference system

+projected_terrain = terrain.to_crs(grid.crs)

+# View data in catchment's spatial extent

+catchment_terrain = grid.view(projected_terrain, nodata=np.nan)

```

-

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.grid('on', zorder=0)

+im = ax.imshow(catchment_terrain, extent=grid.extent, zorder=2,

+ cmap='bone')

+plt.colorbar(im, ax=ax, label='Percent impervious area')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Percent impervious area', size=14)

+```

+

+

+

+

+

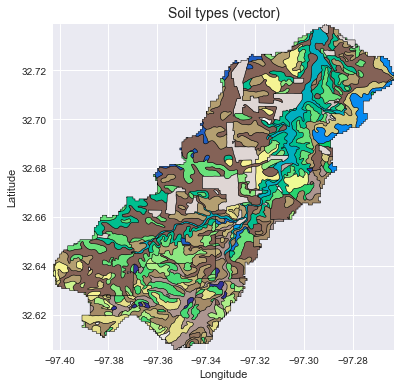

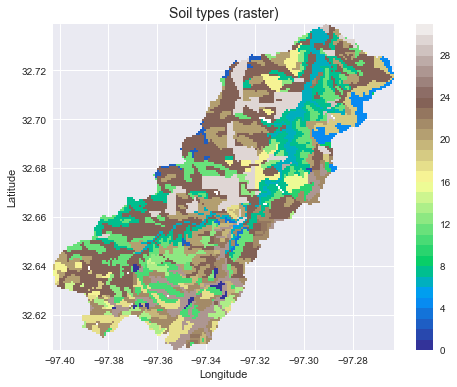

### Add vector data

@@ -130,82 +294,110 @@ grid.view('terrain')

# Convert catchment raster to vector and combine with soils shapefile

# ---------------------

# Read soils shapefile

+import pandas as pd

import geopandas as gpd

from shapely import geometry, ops

-soils = gpd.read_file('nrcs-soils-tarrant_439.shp')

+soils = gpd.read_file('soils.shp')

+soil_id = 'MUKEY'

# Convert catchment raster to vector geometry and find intersection

shapes = grid.polygonize()

catchment_polygon = ops.unary_union([geometry.shape(shape)

for shape, value in shapes])

soils = soils[soils.intersects(catchment_polygon)]

-catchment_soils = soils.intersection(catchment_polygon)

+catchment_soils = gpd.GeoDataFrame(soils[soil_id],

+ geometry=soils.intersection(catchment_polygon))

+# Convert soil types to simple integer values

+soil_types = np.unique(catchment_soils[soil_id])

+soil_types = pd.Series(np.arange(soil_types.size), index=soil_types)

+catchment_soils[soil_id] = catchment_soils[soil_id].map(soil_types)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8, 6))

+catchment_soils.plot(ax=ax, column=soil_id, categorical=True, cmap='terrain',

+ linewidth=0.5, edgecolor='k', alpha=1, aspect='equal')

+ax.set_xlim(grid.bbox[0], grid.bbox[2])

+ax.set_ylim(grid.bbox[1], grid.bbox[3])

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+ax.set_title('Soil types (vector)', size=14)

```

-

+

+

+

+

### Convert from vector to raster

```python

-# Convert soils polygons to raster

-# ---------------------

-soil_polygons = zip(catchment_soils.geometry.values,

- catchment_soils['soil_type'].values)

+soil_polygons = zip(catchment_soils.geometry.values, catchment_soils[soil_id].values)

soil_raster = grid.rasterize(soil_polygons, fill=np.nan)

```

-

-

-### Estimate inundation using the Rapid Flood Spilling Method

+

+Plotting code...

+

```python

-# Estimate inundation extent

-# ---------------------

-from pysheds.rfsm import RFSM

-grid = Grid.from_raster('roi.tif', data_name='dem')

-grid.clip_to('dem')

-dem = grid.view('dem')

-cell_area = np.abs(grid.affine.a * grid.affine.e)

-# Create RFSM instance

-rfsm = RFSM(dem)

-# Apply uniform rainfall to DEM

-input_vol = 0.1 * cell_area * np.ones(dem.shape)

-waterlevel = rfsm.compute_waterlevel(input_vol)

+fig, ax = plt.subplots(figsize=(8, 6))

+plt.imshow(soil_raster, cmap='terrain', extent=grid.extent, zorder=1)

+boundaries = np.unique(soil_raster[~np.isnan(soil_raster)]).astype(int)

+plt.colorbar(boundaries=boundaries,

+ values=boundaries)

+ax.set_xlim(grid.bbox[0], grid.bbox[2])

+ax.set_ylim(grid.bbox[1], grid.bbox[3])

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+ax.set_title('Soil types (raster)', size=14)

```

- +

+

+

+

+

## Features

- Hydrologic Functions:

- - `flowdir`: DEM to flow direction.

- - `catchment`: Delineate catchment from flow direction.

- - `accumulation`: Flow direction to flow accumulation.

- - `flow_distance`: Compute flow distance to outlet.

- - `extract_river_network`: Extract river network at a given accumulation threshold.

- - `cell_area`: Compute (projected) area of cells.

- - `cell_distances`: Compute (projected) channel length within cells.

- - `cell_dh`: Compute the elevation change between cells.

- - `cell_slopes`: Compute the slopes of cells.

- - `fill_pits`: Fill simple pits in a DEM (single cells lower than their surrounding neighbors).

- - `fill_depressions`: Fill depressions in a DEM (regions of cells lower than their surrounding neighbors).

- - `resolve_flats`: Resolve drainable flats in a DEM using the modified method of Garbrecht and Martz (1997).

- - `compute_hand` : Compute the height above nearest drainage (HAND) as described in Nobre et al. (2011).

-- Utilities:

- - `view`: Returns a view of a dataset at a given bounding box and resolution.

- - `clip_to`: Clip the current view to the extent of nonzero values in a given dataset.

- - `set_bbox`: Set the current view to a rectangular bounding box.

- - `snap_to_mask`: Snap a set of coordinates to the nearest masked cells (e.g. cells with high accumulation).

- - `resize`: Resize a dataset to a new resolution.

- - `rasterize`: Convert a vector dataset to a raster dataset.

- - `polygonize`: Convert a raster dataset to a vector dataset.

- - `detect_pits`: Return boolean array indicating locations of simple pits in a DEM.

- - `detect_flats`: Return boolean array indicating locations of flats in a DEM.

- - `detect_depressions`: Return boolean array indicating locations of depressions in a DEM.

- - `check_cycles`: Check for cycles in a flow direction grid.

- - `set_nodata`: Set nodata value for a dataset.

-- I/O:

+ - `flowdir` : Generate a flow direction grid from a given digital elevation dataset.

+ - `catchment` : Delineate the watershed for a given pour point (x, y).

+ - `accumulation` : Compute the number of cells upstream of each cell; if weights are

+ given, compute the sum of weighted cells upstream of each cell.

+ - `distance_to_outlet` : Compute the (weighted) distance from each cell to a given

+ pour point, moving downstream.

+ - `distance_to_ridge` : Compute the (weighted) distance from each cell to its originating

+ drainage divide, moving upstream.

+ - `compute_hand` : Compute the height above nearest drainage (HAND).

+ - `stream_order` : Compute the (strahler) stream order.

+ - `extract_river_network` : Extract river segments from a catchment and return a geojson

+ object.

+ - `cell_dh` : Compute the drop in elevation from each cell to its downstream neighbor.

+ - `cell_distances` : Compute the distance from each cell to its downstream neighbor.

+ - `cell_slopes` : Compute the slope between each cell and its downstream neighbor.

+ - `fill_pits` : Fill single-celled pits in a digital elevation dataset.

+ - `fill_depressions` : Fill multi-celled depressions in a digital elevation dataset.

+ - `resolve_flats` : Remove flats from a digital elevation dataset.

+ - `detect_pits` : Detect single-celled pits in a digital elevation dataset.

+ - `detect_depressions` : Detect multi-celled depressions in a digital elevation dataset.

+ - `detect_flats` : Detect flats in a digital elevation dataset.

+- Viewing Functions:

+ - `view` : Returns a "view" of a dataset defined by the grid's viewfinder.

+ - `clip_to` : Clip the viewfinder to the smallest area containing all non-

+ null gridcells for a provided dataset.

+ - `nearest_cell` : Returns the index (column, row) of the cell closest

+ to a given geographical coordinate (x, y).

+ - `snap_to_mask` : Snaps a set of points to the nearest nonzero cell in a boolean mask;

+ useful for finding pour points from an accumulation raster.

+- I/O Functions:

- `read_ascii`: Reads ascii gridded data.

- `read_raster`: Reads raster gridded data.

+ - `from_ascii` : Instantiates a grid from an ascii file.

+ - `from_raster` : Instantiates a grid from a raster file or Raster object.

- `to_ascii`: Write grids to delimited ascii files.

- `to_raster`: Write grids to raster files (e.g. geotiff).

@@ -255,10 +447,37 @@ $ pip install .

```

# Performance

-Performance benchmarks on a 2015 MacBook Pro:

-

-- Flow Direction to Flow Accumulation: 36 million grid cells in 15 seconds.

-- Flow Direction to Catchment: 9.8 million grid cells in 4.55 seconds.

+Performance benchmarks on a 2015 MacBook Pro (M: million, K: thousand):

+

+| Function | Routing | Number of cells | Run time |

+| ----------------------- | ------- | ------------------------ | -------- |

+| `flowdir` | D8 | 36M | 1.09 [s] |

+| `flowdir` | DINF | 36M | 6.64 [s] |

+| `accumulation` | D8 | 36M | 3.65 [s] |

+| `accumulation` | DINF | 36M | 16.2 [s] |

+| `catchment` | D8 | 9.76M | 3.43 [s] |

+| `catchment` | DINF | 9.76M | 5.41 [s] |

+| `distance_to_outlet` | D8 | 9.76M | 4.74 [s] |

+| `distance_to_outlet` | DINF | 9.76M | 1 [m] 13 [s] |

+| `distance_to_ridge` | D8 | 36M | 6.83 [s] |

+| `hand` | D8 | 36M total, 730K channel | 12.9 [s] |

+| `hand` | DINF | 36M total, 770K channel | 18.7 [s] |

+| `stream_order` | D8 | 36M total, 1M channel | 3.99 [s] |

+| `extract_river_network` | D8 | 36M total, 345K channel | 4.07 [s] |

+| `detect_pits` | N/A | 36M | 1.80 [s] |

+| `detect_flats` | N/A | 36M | 1.84 [s] |

+| `fill_pits` | N/A | 36M | 2.52 [s] |

+| `fill_depressions` | N/A | 36M | 27.1 [s] |

+| `resolve_flats` | N/A | 36M | 9.56 [s] |

+| `cell_dh` | D8 | 36M | 2.34 [s] |

+| `cell_dh` | DINF | 36M | 4.92 [s] |

+| `cell_distances` | D8 | 36M | 1.11 [s] |

+| `cell_distances` | DINF | 36M | 2.16 [s] |

+| `cell_slopes` | D8 | 36M | 4.01 [s] |

+| `cell_slopes` | DINF | 36M | 10.2 [s] |

+

+Speed tests were run on a conditioned DEM from the HYDROSHEDS DEM repository

+(linked above as `elevation.tiff`).

# Citing

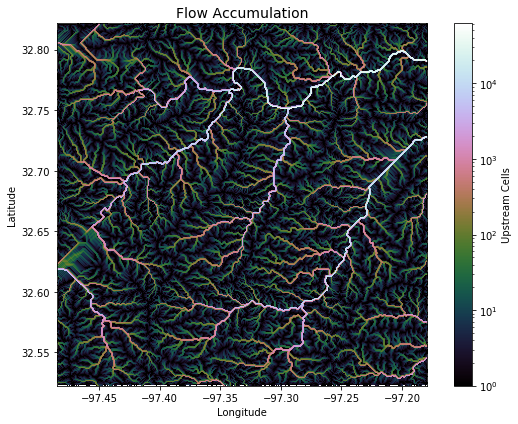

diff --git a/docs/accumulation.md b/docs/accumulation.md

index 1f1dc58..268877e 100644

--- a/docs/accumulation.md

+++ b/docs/accumulation.md

@@ -5,14 +5,15 @@

The `grid.accumulation` method operates on a flow direction grid. This flow direction grid can be computed from a DEM, as shown in [flow directions](https://mdbartos.github.io/pysheds/flow-directions.html).

```python

->>> from pysheds.grid import Grid

+from pysheds.grid import Grid

# Instantiate grid from raster

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

+grid = Grid.from_raster('./data/dem.tif')

+dem = grid.read_raster('./data/dem.tif')

# Resolve flats and compute flow directions

->>> grid.resolve_flats(data='dem', out_name='inflated_dem')

->>> grid.flowdir('inflated_dem', out_name='dir')

+inflated_dem = grid.resolve_flats(dem)

+fdir = grid.flowdir(inflated_dem)

```

## Computing accumulation

@@ -21,29 +22,34 @@ Accumulation is computed using the `grid.accumulation` method.

```python

# Compute accumulation

->>> grid.accumulation(data='dir', out_name='acc')

-

-# Plot accumulation

->>> acc = grid.view('acc')

->>> plt.imshow(acc)

+acc = grid.accumulation(fdir)

```

-

-

-## Computing weighted accumulation

-

-Weights can be used to adjust the relative contribution of each cell.

+

+Plotting code...

+

```python

-import pyproj

+import matplotlib.pyplot as plt

+import matplotlib.colors as colors

+%matplotlib inline

+

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.grid('on', zorder=0)

+im = ax.imshow(acc, extent=grid.extent, zorder=2,

+ cmap='cubehelix',

+ norm=colors.LogNorm(1, acc.max()),

+ interpolation='bilinear')

+plt.colorbar(im, ax=ax, label='Upstream Cells')

+plt.title('Flow Accumulation', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

-# Compute areas of each cell in new projection

-new_crs = pyproj.Proj('+init=epsg:3083')

-areas = grid.cell_area(as_crs=new_crs, inplace=False)

+

+

-# Weight each cell by its relative area

-weights = (areas / areas.max()).ravel()

-# Compute accumulation with new weights

-grid.accumulation(data='dir', weights=weights, out_name='acc')

-```

+

diff --git a/docs/catchment.md b/docs/catchment.md

index 4b5a27e..0390e3b 100644

--- a/docs/catchment.md

+++ b/docs/catchment.md

@@ -5,53 +5,139 @@

The `grid.catchment` method operates on a flow direction grid. This flow direction grid can be computed from a DEM, as shown in [flow directions](https://mdbartos.github.io/pysheds/flow-directions.html).

```python

->>> from pysheds.grid import Grid

+from pysheds.grid import Grid

# Instantiate grid from raster

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

+grid = Grid.from_raster('./data/dem.tif')

+dem = grid.read_raster('./data/dem.tif')

# Resolve flats and compute flow directions

->>> grid.resolve_flats(data='dem', out_name='inflated_dem')

->>> grid.flowdir('inflated_dem', out_name='dir')

+inflated_dem = grid.resolve_flats(dem)

+fdir = grid.flowdir(inflated_dem)

```

## Delineating the catchment

-To delineate a catchment, first specify a pour point (the outlet of the catchment). If the x and y components of the pour point are spatial coordinates in the grid's spatial reference system, specify `xytype='label'`.

+To delineate a catchment, first specify a pour point (the outlet of the catchment). If the x and y components of the pour point are spatial coordinates in the grid's spatial reference system, specify `xytype='coordinate'`.

```python

# Specify pour point

->>> x, y = -97.294167, 32.73750

+x, y = -97.294167, 32.73750

# Delineate the catchment

->>> grid.catchment(data='dir', x=x, y=y, out_name='catch',

- recursionlimit=15000, xytype='label')

+catch = grid.catchment(x=x, y=y, fdir=fdir, xytype='coordinate')

# Plot the result

->>> grid.clip_to('catch')

->>> plt.imshow(grid.view('catch'))

+grid.clip_to(catch)

+catch_view = grid.view(catch)

```

-

+

+Plotting code...

+

+

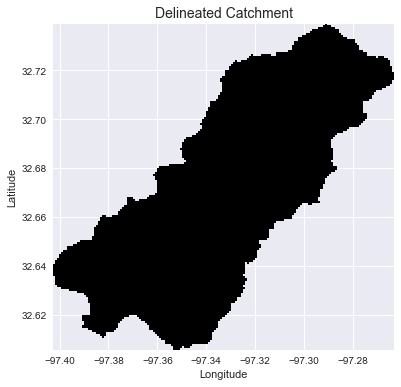

+```python

+# Plot the catchment

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.grid('on', zorder=0)

+im = ax.imshow(np.where(catch_view, catch_view, np.nan), extent=grid.extent,

+ zorder=1, cmap='Greys_r')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Delineated Catchment', size=14)

+```

+

+

+

+

+

+

If the x and y components of the pour point correspond to the row and column indices of the flow direction array, specify `xytype='index'`:

```python

# Reset the view

->>> grid.clip_to('dir')

+grid.viewfinder = fdir.viewfinder

# Find the row and column index corresponding to the pour point

->>> col, row = grid.nearest_cell(x, y)

->>> col, row

-(229, 101)

+col, row = grid.nearest_cell(x, y)

+

+# Delineate the catchment

+catch = grid.catchment(x=col, y=row, fdir=fdir, xytype='index')

+

+# Plot the result

+grid.clip_to(catch)

+catch_view = grid.view(catch)

+```

+

+

+Plotting code...

+

+

+```python

+# Plot the catchment

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.grid('on', zorder=0)

+im = ax.imshow(np.where(catch_view, catch_view, np.nan), extent=grid.extent,

+ zorder=1, cmap='Greys_r')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Delineated Catchment', size=14)

+```

+

+

+

+

+

+

+

+## Snapping pour point to high accumulation cells

+

+Sometimes the pour point isn't known exactly. In this case, it can be helpful to first compute the accumulation and then snap a trial pour point to the nearest high accumulation cell.

+

+```python

+# Reset view

+grid.viewfinder = fdir.viewfinder

+

+# Compute accumulation

+acc = grid.accumulation(fdir)

+

+# Snap pour point to high accumulation cell

+x_snap, y_snap = grid.snap_to_mask(acc > 1000, (x, y))

+

# Delineate the catchment

->>> grid.catchment(data=grid.dir, x=col, y=row, out_name='catch',

- recursionlimit=15000, xytype='index')

+catch = grid.catchment(x=x_snap, y=y_snap, fdir=fdir, xytype='coordinate')

# Plot the result

->>> grid.clip_to('catch')

->>> plt.imshow(grid.view('catch'))

+grid.clip_to(catch)

+catch_view = grid.view(catch)

+```

+

+

+Plotting code...

+

+

+```python

+# Plot the catchment

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.grid('on', zorder=0)

+im = ax.imshow(np.where(catch_view, catch_view, np.nan), extent=grid.extent,

+ zorder=1, cmap='Greys_r')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Delineated Catchment', size=14)

```

-

+

+

+

+

+

+

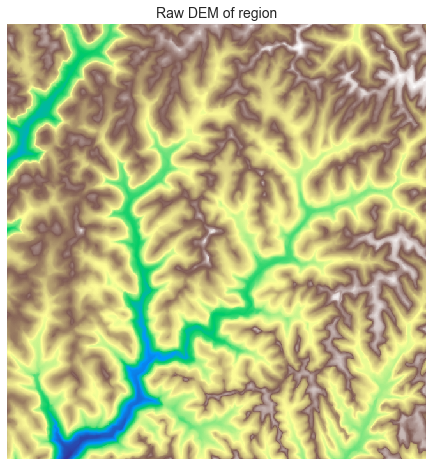

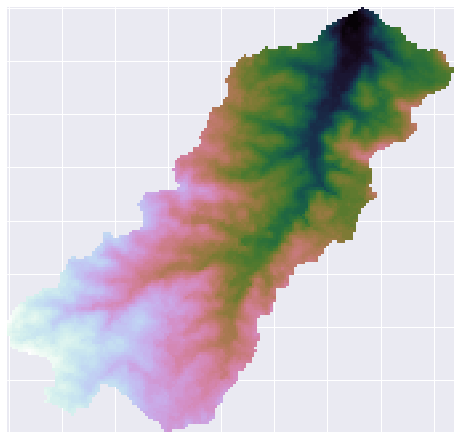

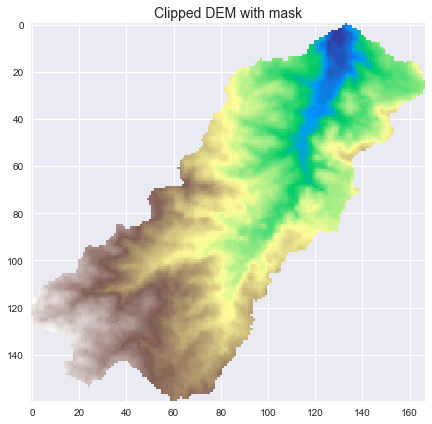

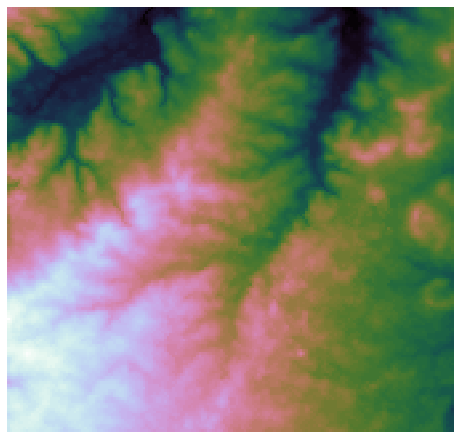

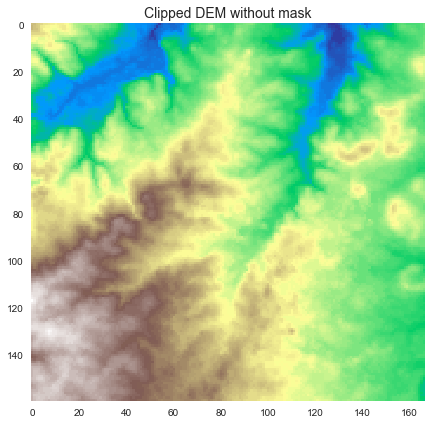

diff --git a/docs/dem-conditioning.md b/docs/dem-conditioning.md

index 38a4b52..00cf279 100644

--- a/docs/dem-conditioning.md

+++ b/docs/dem-conditioning.md

@@ -10,29 +10,102 @@ Raw DEMs often contain depressions that must be removed before further processin

```python

# Import modules

->>> from pysheds.grid import Grid

+import matplotlib.pyplot as plt

+import matplotlib.colors as colors

+from pysheds.grid import Grid

+

+%matplotlib inline

# Read raw DEM

->>> grid = Grid.from_raster('../data/roi_10m', data_name='dem')

+grid = Grid.from_raster('./data/roi_10m')

+dem = grid.read_raster('./data/roi_10m')

+```

+

+

+Plotting code...

+

+```python

# Plot the raw DEM

->>> plt.imshow(grid.view('dem'))

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.imshow(grid.view(dem), cmap='terrain', zorder=1)

+plt.colorbar(label='Elevation (m)')

+plt.title('Digital elevation map', size=14)

+plt.tight_layout()

+```

+

+

+

+

+

+

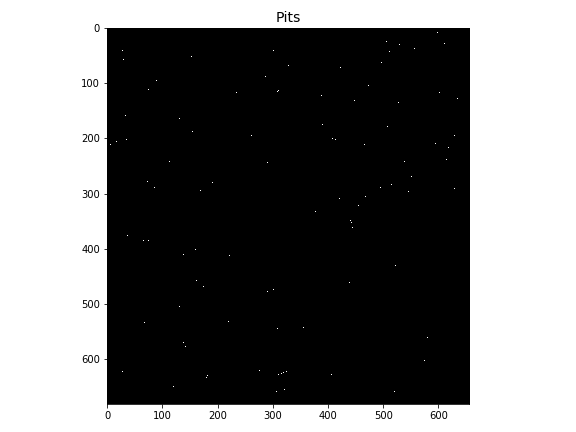

+### Detecting pits

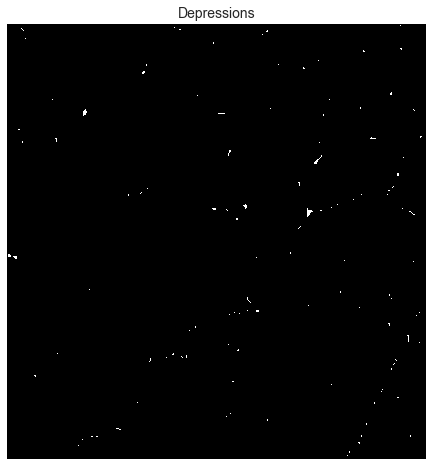

+Pits can be detected using the `grid.detect_depressions` method:

+

+```python

+# Detect pits

+pits = grid.detect_pits(dem)

```

-

+

+Plotting code...

+

+

+```python

+# Plot pits

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.imshow(pits, cmap='Greys_r', zorder=1)

+plt.title('Pits', size=14)

+plt.tight_layout()

+```

+

+

+

+

+

+

+### Filling pits

+

+Pits can be filled using the `grid.fill_depressions` method:

+

+```python

+# Fill pits

+pit_filled_dem = grid.fill_pits(dem)

+pits = grid.detect_pits(pit_filled_dem)

+assert not pits.any()

+```

### Detecting depressions

Depressions can be detected using the `grid.detect_depressions` method:

```python

# Detect depressions

-depressions = grid.detect_depressions('dem')

+depressions = grid.detect_depressions(pit_filled_dem)

+```

+

+

+Plotting code...

+

+```python

# Plot depressions

-plt.imshow(depressions)

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.imshow(depressions, cmap='Greys_r', zorder=1)

+plt.title('Depressions', size=14)

+plt.tight_layout()

```

-

+

+

+

+

+

### Filling depressions

@@ -40,17 +113,14 @@ Depressions can be filled using the `grid.fill_depressions` method:

```python

# Fill depressions

->>> grid.fill_depressions(data='dem', out_name='flooded_dem')

-

-# Test result

->>> depressions = grid.detect_depressions('dem')

->>> depressions.any()

-False

+flooded_dem = grid.fill_depressions(pit_filled_dem)

+depressions = grid.detect_depressions(flooded_dem)

+assert not depressions.any()

```

## Flats

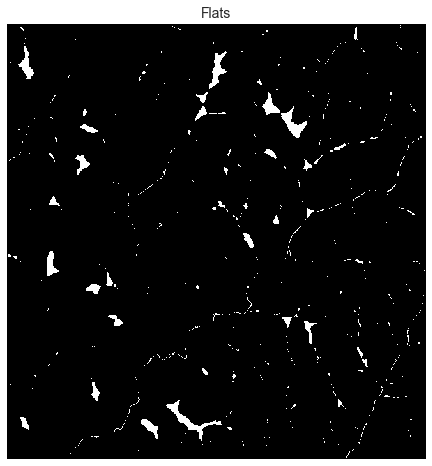

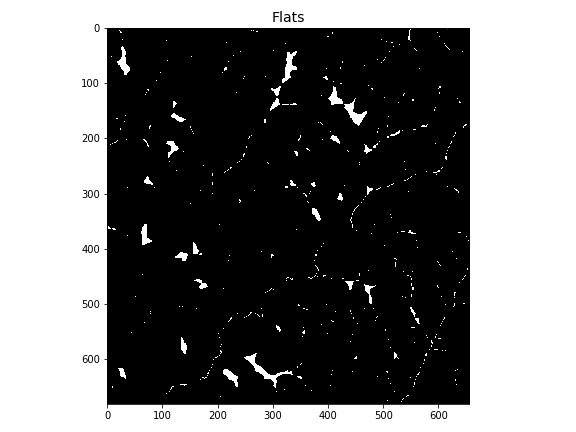

-Flats consist of cells at which every surrounding cell is at the same elevation or higher.

+Flats consist of cells at which every surrounding cell is at the same elevation or higher. Note that we have created flats by filling in our pits and depressions.

### Detecting flats

@@ -58,20 +128,37 @@ Flats can be detected using the `grid.detect_flats` method:

```python

# Detect flats

-flats = grid.detect_flats('flooded_dem')

+flats = grid.detect_flats(flooded_dem)

+```

+

+

+Plotting code...

+

+```python

# Plot flats

-plt.imshow(flats)

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+

+plt.imshow(flats, cmap='Greys_r', zorder=1)

+plt.title('Flats', size=14)

+plt.tight_layout()

```

-

+

+

+

+

+

### Resolving flats

Flats can be resolved using the `grid.resolve_flats` method:

```python

->>> grid.resolve_flats(data='flooded_dem', out_name='inflated_dem')

+inflated_dem = grid.resolve_flats(flooded_dem)

+flats = grid.detect_flats(inflated_dem)

+assert not flats.any()

```

### Finished product

@@ -80,13 +167,33 @@ After filling depressions and resolving flats, the flow direction can be determi

```python

# Compute flow direction based on corrected DEM

-grid.flowdir(data='inflated_dem', out_name='dir', dirmap=dirmap)

+fdir = grid.flowdir(inflated_dem)

# Compute flow accumulation based on computed flow direction

-grid.accumulation(data='dir', out_name='acc', dirmap=dirmap)

+acc = grid.accumulation(fdir)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+im = ax.imshow(acc, zorder=2,

+ cmap='cubehelix',

+ norm=colors.LogNorm(1, acc.max()),

+ interpolation='bilinear')

+plt.colorbar(im, ax=ax, label='Upstream Cells')

+plt.title('Flow Accumulation', size=14)

+plt.tight_layout()

```

-

+

+

+

+

+

## Burning DEMs

diff --git a/docs/extract-river-network.md b/docs/extract-river-network.md

index c292850..c81e7fb 100644

--- a/docs/extract-river-network.md

+++ b/docs/extract-river-network.md

@@ -5,29 +5,27 @@

The `grid.extract_river_network` method requires both a catchment grid and an accumulation grid. The catchment grid can be obtained from a flow direction grid, as shown in [catchments](https://mdbartos.github.io/pysheds/catchment.html). The accumulation grid can also be obtained from a flow direction grid, as shown in [accumulation](https://mdbartos.github.io/pysheds/accumulation.html).

```python

->>> import numpy as np

->>> from matplotlib import pyplot as plt

->>> from pysheds.grid import Grid

+from pysheds.grid import Grid

# Instantiate grid from raster

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

+grid = Grid.from_raster('./data/dem.tif')

+dem = grid.read_raster('./data/dem.tif')

# Resolve flats and compute flow directions

->>> grid.resolve_flats(data='dem', out_name='inflated_dem')

->>> grid.flowdir('inflated_dem', out_name='dir')

+inflated_dem = grid.resolve_flats(dem)

+fdir = grid.flowdir(inflated_dem)

# Specify outlet

->>> x, y = -97.294167, 32.73750

+x, y = -97.294167, 32.73750

# Delineate a catchment

->>> grid.catchment(data='dir', x=x, y=y, out_name='catch',

- recursionlimit=15000, xytype='label')

+catch = grid.catchment(x=x, y=y, fdir=fdir, xytype='coordinate')

# Clip the view to the catchment

->>> grid.clip_to('catch')

+grid.clip_to(catch)

# Compute accumulation

->>> grid.accumulation(data='catch', out_name='acc')

+acc = grid.accumulation(fdir, apply_output_mask=False)

```

## Extracting the river network

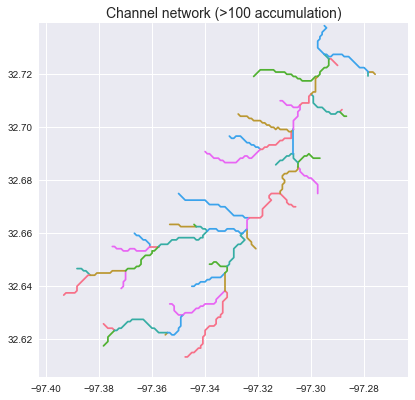

@@ -36,29 +34,112 @@ To extract the river network at a given accumulation threshold, we can call the

```python

# Extract river network

->>> branches = grid.extract_river_network('catch', 'acc')

+branches = grid.extract_river_network(fdir, acc > 100)

```

+

+Plotting code...

+

+

+```python

+import numpy as np

+from matplotlib import pyplot as plt

+import seaborn as sns

+

+sns.set_palette('husl')

+fig, ax = plt.subplots(figsize=(8.5,6.5))

+

+plt.xlim(grid.bbox[0], grid.bbox[2])

+plt.ylim(grid.bbox[1], grid.bbox[3])

+ax.set_aspect('equal')

+

+for branch in branches['features']:

+ line = np.asarray(branch['geometry']['coordinates'])

+ plt.plot(line[:, 0], line[:, 1])

+

+_ = plt.title('Channel network (>100 accumulation)', size=14)

+```

+

+

+

+

+

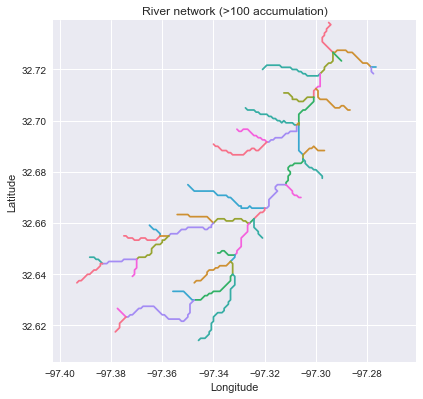

The `grid.extract_river_network` method returns a dictionary in the geojson format. The branches can be plotted by iterating through the features:

+

+

+

```python

-# Plot branches

->>> for branch in branches['features']:

->>> line = np.asarray(branch['geometry']['coordinates'])

->>> plt.plot(line[:, 0], line[:, 1])

+branches = grid.extract_river_network(fdir, acc > 100, apply_output_mask=False)

```

-

+

+Plotting code...

+

+

+```python

+sns.set_palette('husl')

+fig, ax = plt.subplots(figsize=(8.5,6.5))

+plt.xlim(grid.bbox[0], grid.bbox[2])

+plt.ylim(grid.bbox[1], grid.bbox[3])

+ax.set_aspect('equal')

+

+for branch in branches['features']:

+ line = np.asarray(branch['geometry']['coordinates'])

+ plt.plot(line[:, 0], line[:, 1])

+

+_ = plt.title('Channel network (no mask)', size=14)

+```

+

+

+

+

+

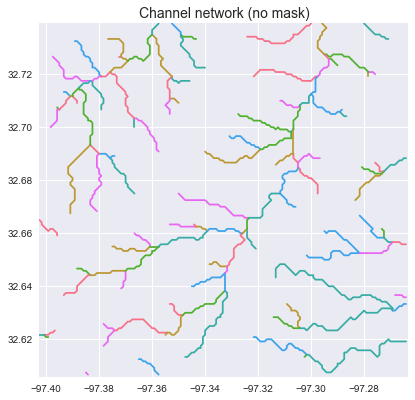

## Specifying the accumulation threshold

We can change the geometry of the returned river network by specifying different accumulation thresholds:

```python

->>> branches_50 = grid.extract_river_network('catch', 'acc', threshold=50)

->>> branches_2 = grid.extract_river_network('catch', 'acc', threshold=2)

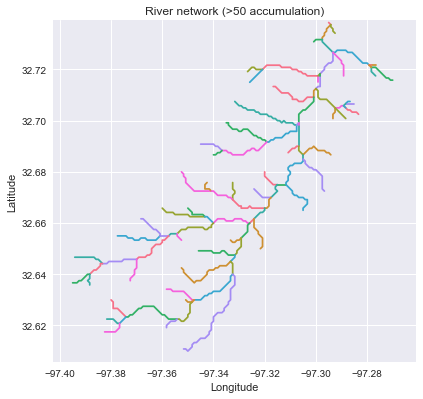

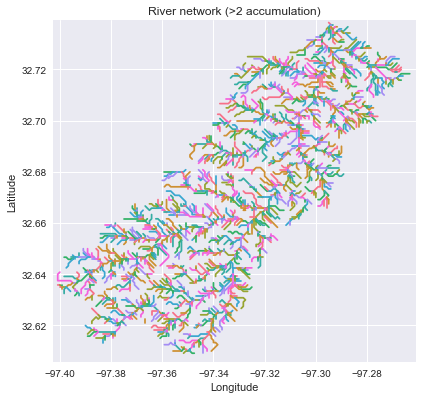

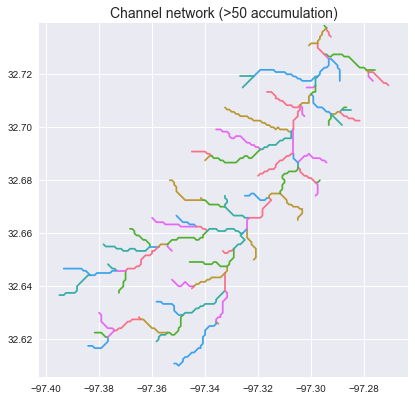

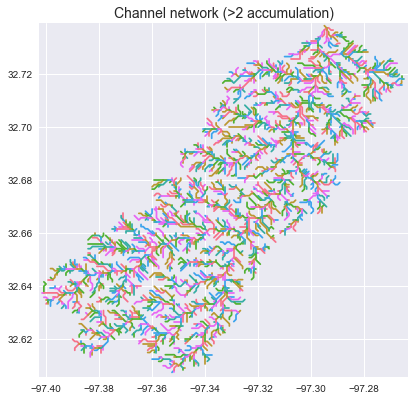

+branches_50 = grid.extract_river_network(fdir, acc > 50)

+branches_2 = grid.extract_river_network(fdir, acc > 2)

```

-

-

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8.5,6.5))

+

+plt.xlim(grid.bbox[0], grid.bbox[2])

+plt.ylim(grid.bbox[1], grid.bbox[3])

+ax.set_aspect('equal')

+

+for branch in branches_50['features']:

+ line = np.asarray(branch['geometry']['coordinates'])

+ plt.plot(line[:, 0], line[:, 1])

+

+_ = plt.title('Channel network (>50 accumulation)', size=14)

+

+sns.set_palette('husl')

+fig, ax = plt.subplots(figsize=(8.5,6.5))

+

+plt.xlim(grid.bbox[0], grid.bbox[2])

+plt.ylim(grid.bbox[1], grid.bbox[3])

+ax.set_aspect('equal')

+

+for branch in branches_2['features']:

+ line = np.asarray(branch['geometry']['coordinates'])

+ plt.plot(line[:, 0], line[:, 1])

+

+_ = plt.title('Channel network (>2 accumulation)', size=14)

+```

+

+

+

+

+

+

+

diff --git a/docs/file-io.md b/docs/file-io.md

index 6eabdc9..b77e54f 100644

--- a/docs/file-io.md

+++ b/docs/file-io.md

@@ -7,15 +7,14 @@

### Instantiating a grid from a raster

```python

->>> from pysheds.grid import Grid

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

+from pysheds.grid import Grid

+grid = Grid.from_raster('./data/dem.tif')

```

### Reading a raster file

```python

->>> grid = Grid()

->>> grid.read_raster('../data/dem.tif', data_name='dem')

+dem = grid.read_raster('./data/dem.tif')

```

## Reading from ASCII files

@@ -23,14 +22,13 @@

### Instantiating a grid from an ASCII grid

```python

->>> grid = Grid.from_ascii('../data/dir.asc', data_name='dir')

+grid = Grid.from_ascii('./data/dir.asc')

```

### Reading an ASCII grid

```python

->>> grid = Grid()

->>> grid.read_ascii('../data/dir.asc', data_name='dir')

+fdir = grid.read_ascii('./data/dir.asc', dtype=np.uint8)

```

## Windowed reading

@@ -39,38 +37,11 @@ If the raster file is very large, you can specify a window to read data from. Th

```python

# Instantiate a grid with data

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

+grid = Grid.from_raster('./data/dem.tif')

# Read windowed raster

->>> grid.read_raster('../data/nlcd_2011_impervious_2011_edition_2014_10_10.img',

- data_name='terrain', window=grid.bbox, window_crs=grid.crs)

-```

-

-## Adding in-memory datasets

-

-In-memory datasets from a python session can also be added.

-

-```python

-# Instantiate a grid with data

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

-

-# Add another copy of the DEM data as a Raster object

->>> grid.add_gridded_data(grid.dem, data_name='dem_copy')

-```

-

-Raw numpy arrays can also be added.

-

-```python

->>> import numpy as np

-

-# Generate random data

->>> data = np.random.randn(*grid.shape)

-

-# Add data to grid

->>> grid.add_gridded_data(data=data, data_name='random',

- affine=grid.affine,

- crs=grid.crs,

- nodata=0)

+terrain = grid.read_raster('./data/impervious_area.tiff',

+ window=grid.bbox, window_crs=grid.crs)

```

## Writing to raster files

@@ -78,72 +49,72 @@ Raw numpy arrays can also be added.

By default, the `grid.to_raster` method will write the grid's current view of the dataset.

```python

->>> grid = Grid.from_ascii('../data/dir.asc', data_name='dir')

->>> grid.to_raster('dir', 'test_dir.tif', blockxsize=16, blockysize=16)

+grid = Grid.from_ascii('./data/dir.asc')

+fdir = grid.read_ascii('./data/dir.asc', dtype=np.uint8)

+grid.to_raster(fdir, 'test_dir.tif', blockxsize=16, blockysize=16)

```

-If the full dataset is desired, set `view=False`:

+If the full dataset is desired, set the `target_view` to the dataset's `viewfinder`:

```python

->>> grid.to_raster('dir', 'test_dir.tif', view=False,

- blockxsize=16, blockysize=16)

+grid.to_raster(fdir, 'test_dir.tif', target_view=fdir.viewfinder,

+ blockxsize=16, blockysize=16)

```

-If you want the output file to be masked with the grid mask, set `apply_mask=True`:

+If you want the output file to be masked with the grid mask, set `apply_output_mask=True`:

```python

->>> grid.to_raster('dir', 'test_dir.tif',

- view=True, apply_mask=True,

- blockxsize=16, blockysize=16)

+grid.to_raster(fdir, 'test_dir.tif', apply_output_mask=True,

+ blockxsize=16, blockysize=16)

```

## Writing to ASCII files

```python

->>> grid.to_ascii('dir', 'test_dir.asc')

+grid.to_ascii(fdir, 'test_dir.asc')

```

## Writing to shapefiles

-For more detail, see the [jupyter notebook](https://github.com/mdbartos/pysheds/blob/master/recipes/write_shapefile.ipynb).

-

```python

->>> import fiona

+import fiona

->>> grid = Grid.from_ascii('../data/dir.asc', data_name='dir')

+grid = Grid.from_ascii('./data/dir.asc')

# Specify pour point

->>> x, y = -97.294167, 32.73750

+x, y = -97.294167, 32.73750

# Delineate the catchment

->>> grid.catchment(data='dir', x=x, y=y, out_name='catch',

- recursionlimit=15000, xytype='label',

- nodata_out=0)

+catch = grid.catchment(x=x, y=y, fdir=fdir,

+ xytype='coordinate')

# Clip to catchment

->>> grid.clip_to('catch')

+grid.clip_to(catch)

+

+# Create view

+catch_view = grid.view(catch, dtype=np.uint8)

# Create a vector representation of the catchment mask

->>> shapes = grid.polygonize()

+shapes = grid.polygonize(catch_view)

# Specify schema

->>> schema = {

+schema = {

'geometry': 'Polygon',

'properties': {'LABEL': 'float:16'}

- }

+}

# Write shapefile

->>> with fiona.open('catchment.shp', 'w',

- driver='ESRI Shapefile',

- crs=grid.crs.srs,

- schema=schema) as c:

- i = 0

- for shape, value in shapes:

- rec = {}

- rec['geometry'] = shape

- rec['properties'] = {'LABEL' : str(value)}

- rec['id'] = str(i)

- c.write(rec)

- i += 1

+with fiona.open('catchment.shp', 'w',

+ driver='ESRI Shapefile',

+ crs=grid.crs.srs,

+ schema=schema) as c:

+ i = 0

+ for shape, value in shapes:

+ rec = {}

+ rec['geometry'] = shape

+ rec['properties'] = {'LABEL' : str(value)}

+ rec['id'] = str(i)

+ c.write(rec)

+ i += 1

```

diff --git a/docs/flow-directions.md b/docs/flow-directions.md

index 3ec05d5..b6e42c0 100644

--- a/docs/flow-directions.md

+++ b/docs/flow-directions.md

@@ -12,16 +12,17 @@ Note that for most use cases, DEMs should be conditioned before computing flow d

```python

# Import modules

->>> from pysheds.grid import Grid

+from pysheds.grid import Grid

# Read raw DEM

->>> grid = Grid.from_raster('../data/roi_10m', data_name='dem')

+grid = Grid.from_raster('./data/roi_10m')

+dem = grid.read_raster('./data/roi_10m')

# Fill depressions

->>> grid.fill_depressions(data='dem', out_name='flooded_dem')

+flooded_dem = grid.fill_depressions(dem)

# Resolve flats

->>> grid.resolve_flats(data='flooded_dem', out_name='inflated_dem')

+inflated_dem = grid.resolve_flats(flooded_dem)

```

### Computing D8 flow directions

@@ -29,8 +30,17 @@ Note that for most use cases, DEMs should be conditioned before computing flow d

After filling depressions, the flow directions can be computed using the `grid.flowdir` method:

```python

->>> grid.flowdir(data='inflated_dem', out_name='dir')

->>> grid.dir

+fdir = grid.flowdir(inflated_dem)

+```

+

+

+Output...

+

+

+```python

+fdir

+```

+```

Raster([[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 2, 2, ..., 4, 1, 0],

[ 0, 1, 2, ..., 4, 2, 0],

@@ -40,6 +50,11 @@ Raster([[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0]])

```

+

+

+

+

+

### Directional mappings

Cardinal and intercardinal directions are represented by numeric values in the output grid. By default, the ESRI scheme is used:

@@ -56,9 +71,18 @@ Cardinal and intercardinal directions are represented by numeric values in the o

An alternative directional mapping can be specified using the `dirmap` keyword argument:

```python

->>> dirmap = (1, 2, 3, 4, 5, 6, 7, 8)

->>> grid.flowdir(data='inflated_dem', out_name='dir', dirmap=dirmap)

->>> grid.dir

+dirmap = (1, 2, 3, 4, 5, 6, 7, 8)

+fdir = grid.flowdir(inflated_dem, dirmap=dirmap)

+```

+

+

+Output...

+

+

+```python

+fdir

+```

+```

Raster([[0, 0, 0, ..., 0, 0, 0],

[0, 4, 4, ..., 5, 3, 0],

[0, 3, 4, ..., 5, 4, 0],

@@ -68,13 +92,8 @@ Raster([[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]])

```

-### Labeling pits and flats

-

-If pits or flats are present in the originating DEM, these cells can be labeled in the output array using the `pits` and `flats` keyword arguments:

-

-```python

->>> grid.flowdir(data='inflated_dem', out_name='dir', pits=0, flats=-1)

-```

+

+

## D-infinity flow directions

@@ -83,8 +102,17 @@ While the D8 routing scheme allows each cell to be routed to only one of its nea

D-infinity routing can be selected by using the keyword argument `routing='dinf'`.

```python

->>> grid.flowdir(data='inflated_dem', out_name='dir', routing='dinf')

->>> grid.dir

+fdir = grid.flowdir(inflated_dem, routing='dinf')

+```

+

+

+Output...

+

+

+```python

+fdir

+```

+```python

Raster([[ nan, nan, nan, ..., nan, nan, nan],

[ nan, 5.498, 5.3 , ..., 4.712, 0. , nan],

[ nan, 0. , 5.498, ..., 4.712, 5.176, nan],

@@ -94,15 +122,26 @@ Raster([[ nan, nan, nan, ..., nan, nan, nan],

[ nan, nan, nan, ..., nan, nan, nan]])

```

+

+

+

Note that each entry takes a value between 0 and 2π, with `np.nan` representing unknown flow directions.

Note that you must also specify `routing=dinf` when using `grid.catchment` or `grid.accumulation` with a D-infinity output grid.

## Effect of map projections on routing

-The choice of map projection affects the slopes between neighboring cells. The map projection can be specified using the `as_crs` keyword argument.

+The choice of map projection affects the slopes between neighboring cells.

```python

->>> new_crs = pyproj.Proj('+init=epsg:3083')

->>> grid.flowdir(data='inflated_dem', out_name='proj_dir', as_crs=new_crs)

+# Specify new map projection

+import pyproj

+new_crs = pyproj.Proj('epsg:3083')

+

+# Convert CRS of dataset and grid

+proj_dem = inflated_dem.to_crs(new_crs)

+grid.viewfinder = proj_dem.viewfinder

+

+# Compute flow directions on projected grid

+proj_fdir = grid.flowdir(proj_dem)

```

diff --git a/docs/flow-distance.md b/docs/flow-distance.md

index 07b4123..551f2d3 100644

--- a/docs/flow-distance.md

+++ b/docs/flow-distance.md

@@ -2,42 +2,62 @@

## Preliminaries

-The `grid.flow_distance` method operates on a flow direction grid. This flow direction grid can be computed from a DEM, as shown in [flow directions](https://mdbartos.github.io/pysheds/flow-directions.html).

+The `grid.distance_to_outlet` method operates on a flow direction grid. This flow direction grid can be computed from a DEM, as shown in [flow directions](https://mdbartos.github.io/pysheds/flow-directions.html).

```python

->>> import numpy as np

->>> from matplotlib import pyplot as plt

->>> from pysheds.grid import Grid

+import numpy as np

+from matplotlib import pyplot as plt

+import seaborn as sns

+from pysheds.grid import Grid

# Instantiate grid from raster

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

+grid = Grid.from_raster('./data/dem.tif')

+dem = grid.read_raster('./data/dem.tif')

# Resolve flats and compute flow directions

->>> grid.resolve_flats(data='dem', out_name='inflated_dem')

->>> grid.flowdir('inflated_dem', out_name='dir')

+inflated_dem = grid.resolve_flats(dem)

+fdir = grid.flowdir(inflated_dem)

```

## Computing flow distance

-Flow distance is computed using the `grid.flow_distance` method:

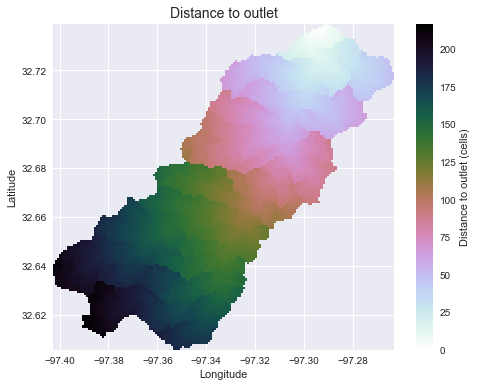

+Flow distance is computed using the `grid.distance_to_outlet` method:

```python

# Specify outlet

->>> x, y = -97.294167, 32.73750

+x, y = -97.294167, 32.73750

# Delineate a catchment

->>> grid.catchment(data='dir', x=x, y=y, out_name='catch',

- recursionlimit=15000, xytype='label')

+catch = grid.catchment(x=x, y=y, fdir=fdir, xytype='coordinate')

# Clip the view to the catchment

->>> grid.clip_to('catch')

+grid.clip_to(catch)

-# Compute flow distance

->>> grid.flow_distance(x, y, data='catch',

- out_name='dist', xytype='label')

+# Compute distance to outlet

+dist = grid.distance_to_outlet(x, y, fdir=fdir, xytype='coordinate')

```

-

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.grid('on', zorder=0)

+im = ax.imshow(dist, extent=grid.extent, zorder=2,

+ cmap='cubehelix_r')

+plt.colorbar(im, ax=ax, label='Distance to outlet (cells)')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Distance to outlet', size=14)

+```

+

+

+

+

+

+

Note that the `grid.flow_distance` method requires an outlet point, much like the `grid.catchment` method.

@@ -46,14 +66,28 @@ Note that the `grid.flow_distance` method requires an outlet point, much like th

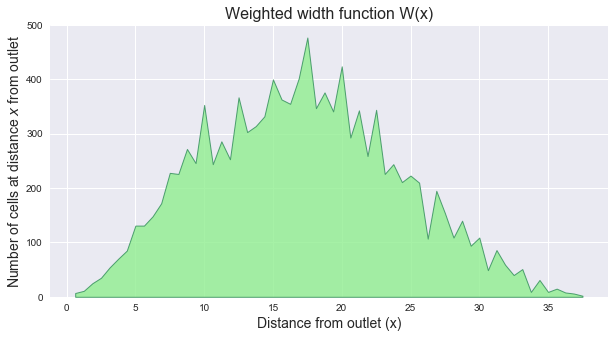

The width function of a catchment `W(x)` represents the number of cells located at a topological distance `x` from the outlet. One can compute the width function of the catchment by counting the number of cells at a distance `x` from the outlet for each distance `x`.

```python

-# Get flow distance array

->>> dists = grid.view('dist')

-

# Compute width function

->>> W = np.bincount(dists[dists != 0].astype(int))

+W = np.bincount(dist[np.isfinite(dist)].astype(int))

```

-

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(10, 5))

+plt.fill_between(np.arange(len(W)), W, 0, edgecolor='seagreen', linewidth=1, facecolor='lightgreen', alpha=0.8)

+plt.ylim(0, 100)

+plt.ylabel(r'Number of cells at distance $x$ from outlet', size=14)

+plt.xlabel(r'Distance from outlet (x)', size=14)

+plt.title('Width function W(x)', size=16)

+```

+

+

+

+

+

+

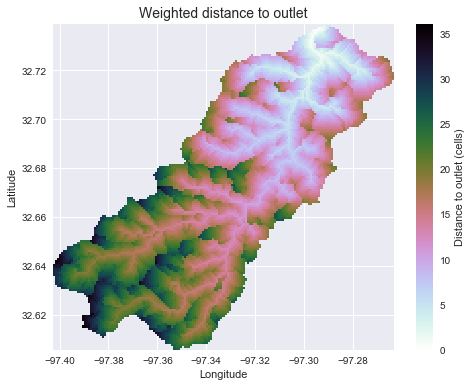

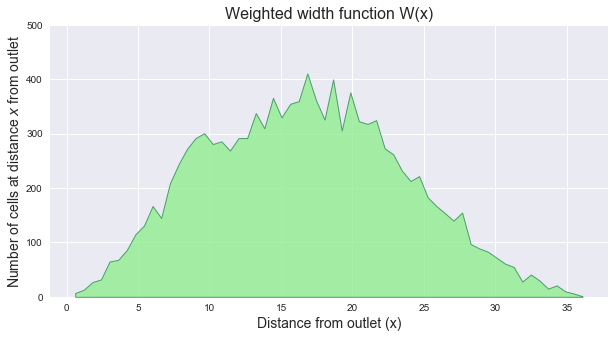

## Computing weighted flow distance

@@ -61,23 +95,42 @@ Weights can be used to adjust the distance metric between cells. This can be use

```python

# Clip the bounding box to the catchment

->>> grid.clip_to('catch', pad=(1,1,1,1))

+grid.clip_to(catch)

# Compute flow accumulation

->>> grid.accumulation(data='catch', out_name='acc')

->>> acc = grid.view('acc')

+acc = grid.accumulation(fdir)

# Assume that water in channelized cells (>= 100 accumulation) travels 10 times faster

# than hillslope cells (< 100 accumulation)

->>> weights = (np.where(acc, 0.1, 0)

- + np.where((0 < acc) & (acc <= 100), 1, 0)).ravel()

-

-# Compute weighted flow distance

->>> dists = grid.flow_distance(data='catch', x=x, y=y, weights=weights,

- xytype='label', inplace=False)

+weights = acc.copy()

+weights[acc >= 100] = 0.1

+weights[(0 < acc) & (acc < 100)] = 1.

+

+# Compute weighted distance to outlet

+dist = grid.distance_to_outlet(x=x, y=y, fdir=fdir, weights=weights, xytype='coordinate')

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.grid('on', zorder=0)

+im = ax.imshow(dist, extent=grid.extent, zorder=2,

+ cmap='cubehelix_r')

+plt.colorbar(im, ax=ax, label='Distance to outlet (cells)')

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.title('Weighted distance to outlet', size=14)

```

-

+

+

+

+

+

### Weighted width function

@@ -85,8 +138,25 @@ Note that because the distances are no longer integers, the weighted width funct

```python

# Compute weighted width function

-hist, bin_edges = np.histogram(dists[dists != 0].ravel(),

- range=(0,dists.max()+1e-5), bins=40)

+distances = dist[np.isfinite(dist)].ravel()

+hist, bin_edges = np.histogram(distances, range=(0,distances.max()+1e-5),

+ bins=60)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(10, 5))

+plt.fill_between(bin_edges[1:], hist, 0, edgecolor='seagreen', linewidth=1, facecolor='lightgreen', alpha=0.8)

+plt.ylim(0, 500)

+plt.ylabel(r'Number of cells at distance $x$ from outlet', size=14)

+plt.xlabel(r'Distance from outlet (x)', size=14)

+plt.title('Weighted width function W(x)', size=16)

```

-

+

+

+

+

diff --git a/docs/hand.md b/docs/hand.md

new file mode 100644

index 0000000..5b313da

--- /dev/null

+++ b/docs/hand.md

@@ -0,0 +1,172 @@

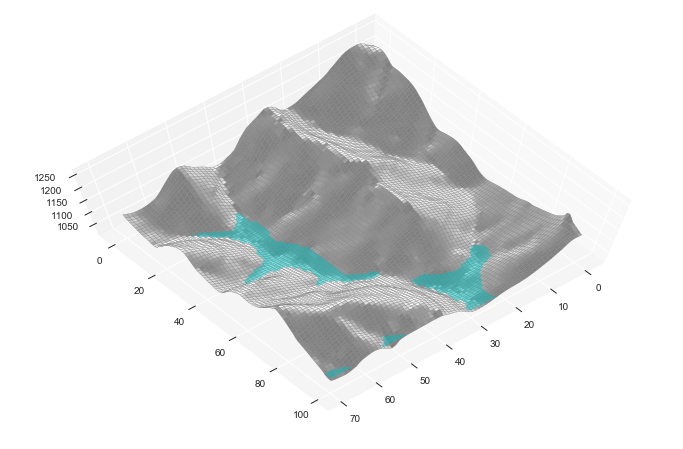

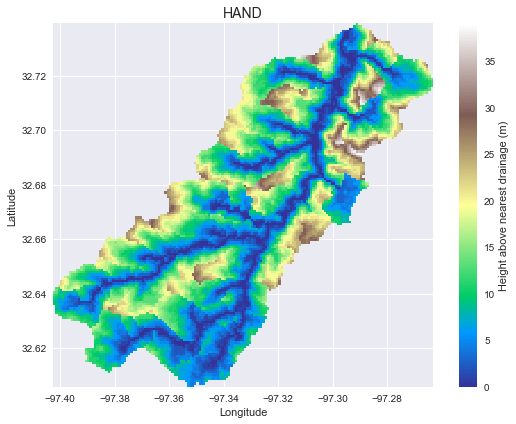

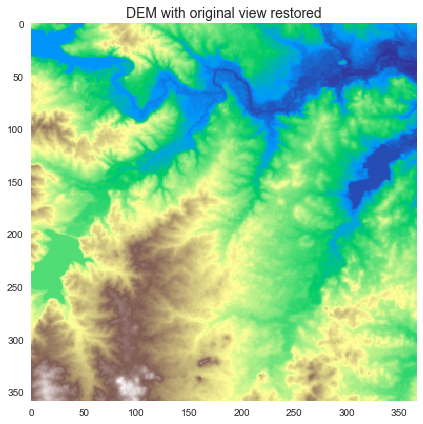

+# Inundation mapping with HAND

+

+The HAND function can be used to estimate inundation extent.

+

+## Computing the height above nearest drainage

+

+First, we begin by computing the flow directions and accumulation for a given DEM.

+

+```python

+import numpy as np

+from pysheds.grid import Grid

+

+# Instantiate grid from raster

+grid = Grid.from_raster('./data/dem.tif')

+dem = grid.read_raster('./data/dem.tif')

+

+# Resolve flats and compute flow directions

+inflated_dem = grid.resolve_flats(dem)

+fdir = grid.flowdir(inflated_dem)

+

+# Compute accumulation

+acc = grid.accumulation(fdir)

+```

+

+We can then compute the height above nearest drainage (HAND) by providing a DEM, a flow direction grid, and a channel mask. For this demonstration, we will take the channel mask to be all cells with accumulation greater than 200.

+

+```python

+# Compute height above nearest drainage

+hand = grid.compute_hand(fdir, dem, acc > 200)

+```

+

+Next, we will clip the HAND raster to a catchment to make it easier to work with.

+

+```python

+# Specify outlet

+x, y = -97.294167, 32.73750

+

+# Delineate a catchment

+catch = grid.catchment(x=x, y=y, fdir=fdir, xytype='coordinate')

+

+# Clip to the catchment

+grid.clip_to(catch)

+

+# Create a view of HAND in the catchment

+hand_view = grid.view(hand, nodata=np.nan)

+```

+

+

+Plotting code...

+

+

+```python

+from matplotlib import pyplot as plt

+import seaborn as sns

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.imshow(hand_view,

+ extent=grid.extent, cmap='terrain', zorder=1)

+plt.colorbar(label='Height above nearest drainage (m)')

+plt.grid(zorder=0)

+plt.title('HAND', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

+

+

+

+

+

+

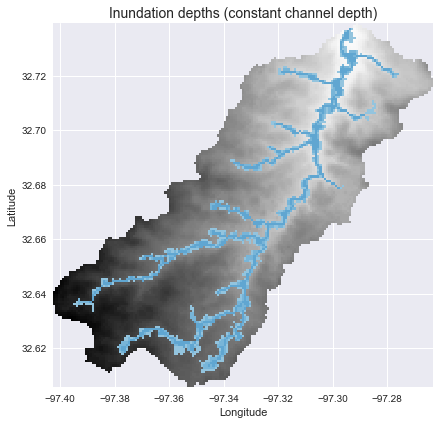

+## Estimating inundation extent (constant channel depth)

+

+We can estimate the inundation extent (assuming a constant channel depth) using a simple binary threshold:

+

+```python

+inundation_extent = np.where(hand_view < 3, 3 - hand_view, np.nan)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+dem_view = grid.view(dem, nodata=np.nan)

+plt.imshow(dem_view, extent=grid.extent, cmap='Greys', zorder=1)

+plt.imshow(inundation_extent, extent=grid.extent,

+ cmap='Blues', vmin=-5, vmax=10, zorder=2)

+plt.grid(zorder=0)

+plt.title('Inundation depths (constant channel depth)', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

+

+

+

+

+

+

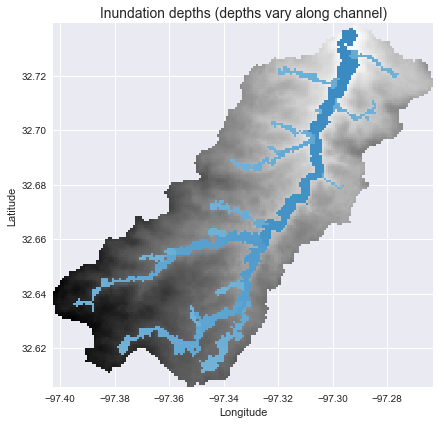

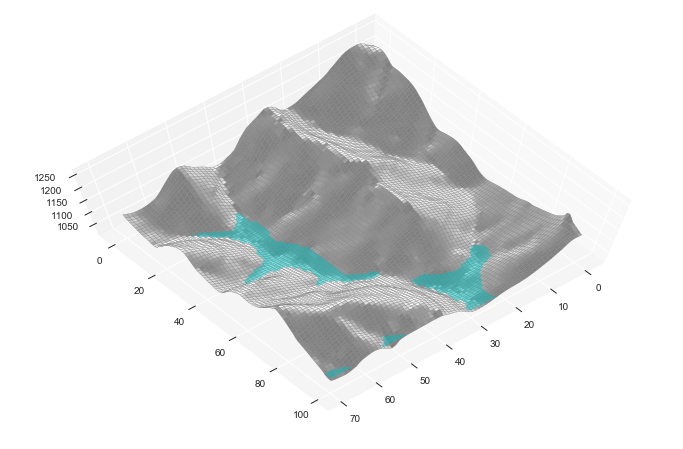

+## Estimating inundation extent (varying channel depth)

+

+We can also estimate the inundation extent given a continuously varying channel depth. First, for the purposes of demonstration, we can generate an estimate of the channel depths using a power law formulation:

+

+```python

+# Clip accumulation to current view

+acc_view = grid.view(acc, nodata=np.nan)

+

+# Create empirical channel depths based on power law

+channel_depths = np.where(acc_view > 200, 0.75 * acc_view**0.2, 0)

+```

+

+

+Plotting code...

+

+

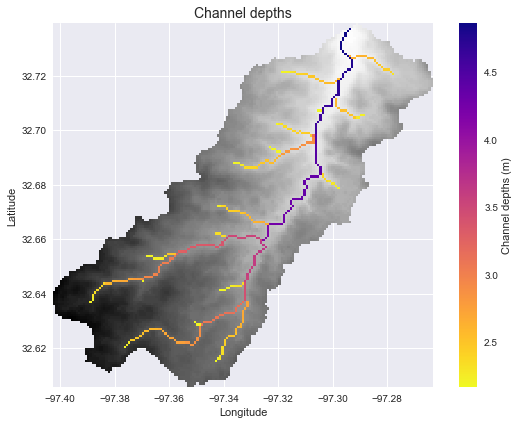

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+dem_view = grid.view(dem, nodata=np.nan)

+plt.imshow(dem_view, extent=grid.extent, cmap='Greys', zorder=1)

+plt.imshow(np.where(acc_view > 200, channel_depths, np.nan),

+ extent=grid.extent, cmap='plasma_r', zorder=2)

+plt.colorbar(label='Channel depths (m)')

+plt.grid(zorder=0)

+plt.title('Channel depths', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

+

+

+

+

+

+

+To find the corresponding depths in the non-channel cells, we can use the `return_index=True` argument in the `compute_hand` function to return the index of the channel cell that is topologically nearest to each cell in the DEM. We can then estimate the inundation depth at each cell:

+

+```python

+# Compute index of nearest channel cell for each cell

+hand_idx = grid.compute_hand(fdir, dem, acc > 200, return_index=True)

+hand_idx_view = grid.view(hand_idx, nodata=0)

+

+# Compute inundation depths

+inundation_depths = np.where(hand_idx_view, channel_depths.flat[hand_idx_view], np.nan)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+dem_view = grid.view(dem, nodata=np.nan)

+plt.imshow(dem_view, extent=grid.extent, cmap='Greys', zorder=1)

+plt.imshow(np.where(hand_view < inundation_depths, inundation_depths, np.nan), extent=grid.extent,

+ cmap='Blues', vmin=-5, vmax=10, zorder=2)

+plt.grid(zorder=0)

+plt.title('Inundation depths (depths vary along channel)', size=14)

+plt.xlabel('Longitude')

+plt.ylabel('Latitude')

+plt.tight_layout()

+```

+

+

+

+

+

+

diff --git a/docs/raster.md b/docs/raster.md

index 6624b8e..515ba13 100644

--- a/docs/raster.md

+++ b/docs/raster.md

@@ -5,14 +5,22 @@

When a dataset is read from a file, it will automatically be saved as a `Raster` object.

```python

->>> from pysheds.grid import Grid

+from pysheds.grid import Grid

->>> grid = Grid.from_raster('../data/dem.tif', data_name='dem')

->>> dem = grid.dem

+grid = Grid.from_raster('./data/dem.tif')

+dem = grid.read_raster('./data/dem.tif')

```

+Here, `grid` is the `Grid` instance, and `dem` is a `Raster` object. If we call the `Raster` object, we will see that it looks much like a numpy array.

+

```python

->>> dem

+dem

+```

+

+Output...

+

+

+```

Raster([[214, 212, 210, ..., 177, 177, 175],

[214, 210, 207, ..., 176, 176, 174],

[211, 209, 204, ..., 174, 174, 174],

@@ -22,22 +30,27 @@ Raster([[214, 212, 210, ..., 177, 177, 175],

[268, 267, 266, ..., 216, 217, 216]], dtype=int16)

```

+

+

+

## Calling methods on rasters

-Primary `Grid` methods (such as flow direction determination and catchment delineation) can be called directly on `Raster objects`:

+Hydrologic functions (such as flow direction determination and catchment delineation) accept and return `Raster objects`:

```python

->>> grid.resolve_flats(dem, out_name='inflated_dem')

+inflated_dem = grid.resolve_flats(dem)

+fdir = grid.flowdir(inflated_dem)

```

-Grid methods can also return `Raster` objects by specifying `inplace=False`:

-

```python

->>> fdir = grid.flowdir(grid.inflated_dem, inplace=False)

+fdir

```

-```python

->>> fdir

+

+Output...

+

+

+```

Raster([[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 2, 2, ..., 4, 1, 0],

[ 0, 1, 2, ..., 4, 2, 0],

@@ -47,58 +60,159 @@ Raster([[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0]])

```

+

+

+

+

+

## Raster attributes

-### Affine transform

+### Viewfinder

+

+The viewfinder attribute contains all the information needed to specify the Raster's spatial reference system. It can be accessed using the `viewfinder` attribute.

+

+```python

+dem.viewfinder

+```

+

+

+Output...

+

+

+```

+

+```

+

+

+

-An affine transform uniquely specifies the spatial location of each cell in a gridded dataset.

+

+The viewfinder contains five necessary elements that completely define the spatial reference system.

+

+ - `affine`: An affine transformation matrix.

+ - `shape`: The desired shape (rows, columns).

+ - `crs` : The coordinate reference system.

+ - `mask` : A boolean array indicating which cells are masked.

+ - `nodata` : A sentinel value indicating 'no data'.

+

+### Affine transformation matrix

+

+An affine transform uniquely specifies the spatial location of each cell in a gridded dataset. In a `Raster`, the affine transform is given by the `affine` attribute.

```python

->>> dem.affine

+dem.affine

+```

+

+Output...

+

+

+```

Affine(0.0008333333333333, 0.0, -100.0,

0.0, -0.0008333333333333, 34.9999999999998)

```

+

+

+

The elements of the affine transform `(a, b, c, d, e, f)` are:

-- **a**: cell width

-- **b**: row rotation (generally zero)

-- **c**: x-coordinate of upper-left corner of upper-leftmost cell

-- **d**: column rotation (generally zero)

-- **e**: cell height

-- **f**: y-coordinate of upper-left corner of upper-leftmost cell

+- **a**: Horizontal scaling (equal to cell width if no rotation)

+- **b**: Horizontal shear

+- **c**: Horizontal translation (x-coordinate of upper-left corner of upper-leftmost cell)

+- **d**: Vertical shear

+- **e**: Vertical scaling (equal to cell height if no rotation)

+- **f**: Vertical translation (y-coordinate of upper-left corner of upper-leftmost cell)

The affine transform uses the [affine](https://pypi.org/project/affine/) module.

-### Coordinate reference system

+### Shape

-The coordinate reference system (CRS) defines a map projection for the gridded dataset. For datasets read from a raster file, the CRS will be detected and populated automaticaally.

+The shape is equal to the shape of the underlying array (i.e. number of rows, number of columns).

```python

->>> dem.crs

-

+dem.shape

+```

+

+

+Output...

+

+

+```

+(359, 367)

```

-A human-readable representation of the CRS can also be obtained as follows:

+

+

+

+### Coordinate reference system

+

+The coordinate reference system (CRS) defines a map projection for the gridded

+dataset. The `crs` attribute is a `pyproj.Proj` object. For datasets read from a

+raster file, the CRS will be detected and populated automaticaally.

```python

->>> dem.crs.srs

-'+init=epsg:4326 '

+dem.crs

+```

+

+

+Output...

+

+

+```

+Proj('+proj=longlat +datum=WGS84 +no_defs', preserve_units=True)

```

+

+

+

This example dataset has a geographic projection (meaning that coordinates are defined in terms of latitudes and longitudes).

The coordinate reference system uses the [pyproj](https://pypi.org/project/pyproj/) module.

+### Mask

+

+The mask is a boolean array indicating which cells in the dataset should be masked in the output view.

+

+```python

+dem.mask

+```

+

+

+Output...

+

+

+```

+array([[ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True],

+ ...,

+ [ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True]])

+```

+

+

+

+

### "No data" value

The `nodata` attribute specifies the value that indicates missing or invalid data.

```python

->>> dem.nodata

+dem.nodata

+```

+

+

+Output...

+

+

+```

-32768

```

+

+

+

### Derived attributes

Other attributes are derived from these primary attributes:

@@ -106,21 +220,48 @@ Other attributes are derived from these primary attributes:

#### Bounding box

```python

->>> dem.bbox

+dem.bbox

+```

+

+

+Output...

+

+

+```

(-97.4849999999961, 32.52166666666537, -97.17833333332945, 32.82166666666536)

```

+

+

+

#### Extent

```python

->>> dem.extent

+dem.extent

+```

+

+

+Output...

+

+

+```

(-97.4849999999961, -97.17833333332945, 32.52166666666537, 32.82166666666536)

```

+

+

+

#### Coordinates

```python

->>> dem.coords

+dem.coords

+```

+

+

+Output...

+

+

+```

array([[ 32.82166667, -97.485 ],

[ 32.82166667, -97.48416667],

[ 32.82166667, -97.48333333],

@@ -130,11 +271,117 @@ array([[ 32.82166667, -97.485 ],

[ 32.52333333, -97.18 ]])

```

-### Numpy attributes

+

+

+

+## Instantiating Rasters

-A `Raster` object also inherits all attributes and methods from numpy ndarrays.

+Rasters can be instantiated directly using the `pysheds.Raster` class. Both an array-like object and a `ViewFinder` must be provided.

```python

->>> dem.shape

-(359, 367)

+from pysheds.view import Raster, ViewFinder

+

+array = np.random.randn(*grid.shape)

+raster = Raster(array, viewfinder=grid.viewfinder)

+```

+

+

+Output...

+

+

+```

+raster

+

+Raster([[-0.71876505, -0.35747123, -0.3296262 , ..., -0.07522118,

+ -0.86431367, -0.45065405],

+ [-1.12477409, 2.28759514, 0.5855458 , ..., -0.43795955,

+ 0.42813309, 0.03900371],

+ [-1.33345727, 1.03254272, 0.0904066 , ..., 0.06465593,

+ -1.09938815, 1.1821455 ],

+ ...,

+ [ 0.67330805, 0.37022934, 0.13783694, ..., -1.59943506,

+ 0.65154575, -0.58218991],

+ [ 0.67738517, 0.43696016, 1.09402764, ..., -1.63815592,

+ 1.67867785, 0.16609381],

+ [ 1.17302635, 0.31176851, 1.79257942, ..., -0.48385788,

+ 1.38478075, -0.76431488]])

+```

+

+

+

+

+We can also instantiate the raster using our own custom `ViewFinder`.

+

+```python

+raster = Raster(array, viewfinder=ViewFinder(shape=array.shape))

+```

+

+Note that the `affine` transformation defaults to the identity matrix, the `nodata` value defaults to zero, the `crs` defaults to geographic coordinates, and the `mask` defaults to a boolean array of ones. If a `shape` is not provided, the shape of the viewfinder defaults to `(1, 1)`. However, when instantiating a `Raster`, the shape of the viewfinder and the shape of the array-like object must be identical.

+

+```python

+raster.viewfinder

+```

+

+

+Output...

+

+

+```

+'affine' : Affine(1.0, 0.0, 0.0,

+ 0.0, 1.0, 0.0)

+'shape' : (359, 367)

+'nodata' : 0

+'crs' : Proj('+proj=longlat +datum=WGS84 +no_defs', preserve_units=True)

+'mask' : array([[ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True],

+ ...,

+ [ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True],

+ [ True, True, True, ..., True, True, True]])

+```

+

+

+

+

+

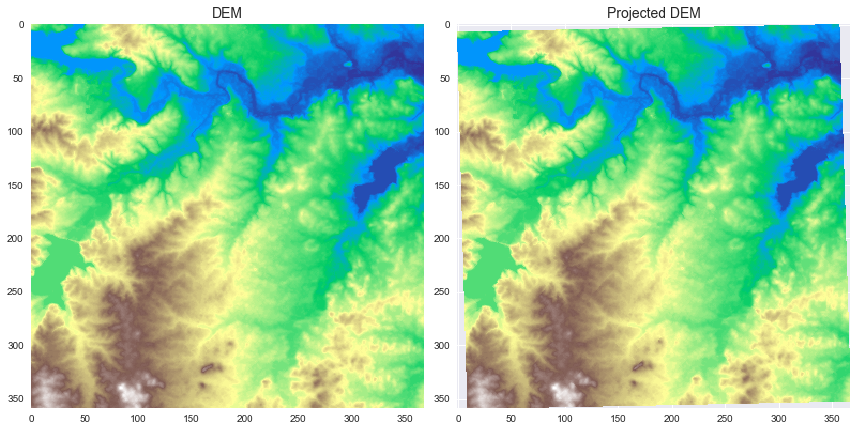

+## Converting the Raster coordinate reference system

+

+The Raster can be transformed to a new coordinate reference system using the `to_crs` method:

+

+```python

+import pyproj

+import numpy as np

+

+# Initialize new CRS

+new_crs = pyproj.Proj('epsg:3083')

+

+# Convert CRS of dataset and set nodata value for better plotting

+dem.nodata = np.nan

+proj_dem = dem.to_crs(new_crs)

+```

+

+

+Plotting code...

+

+

+```python

+import matplotlib.pyplot as plt

+import seaborn as sns

+

+fig, ax = plt.subplots(1, 2, figsize=(12,8))

+fig.patch.set_alpha(0)

+ax[0].imshow(dem, cmap='terrain', zorder=1)

+ax[1].imshow(proj_dem, cmap='terrain', zorder=1)

+ax[0].set_title('DEM', size=14)

+ax[1].set_title('Projected DEM', size=14)

+plt.tight_layout()

```

+

+

+

+

+Note that the projected Raster appears slightly rotated to the counterclockwise direction.

+

+

+

diff --git a/docs/views.md b/docs/views.md

index 6d62ad6..de82ce9 100644

--- a/docs/views.md

+++ b/docs/views.md

@@ -1,30 +1,78 @@

# Views

-The `grid.view` method returns a copy of a dataset cropped to the grid's current view. The grid's current view is defined by the following attributes:

+The `grid.view` method returns a copy of a dataset cropped to the grid's current view. The grid's current view is defined by its `viewfinder` attribute, which contains five properties that fully define the spatial reference system:

-- `affine`: An affine transform that defines the coordinates of the top-left cell, along with the cell resolution and rotation.

-- `crs`: The coordinate reference system of the grid.

-- `shape`: The shape of the grid (number of rows by number of columns)

-- `mask`: A boolean array that defines which cells will be masked in the output `Raster`.

+ - `affine`: An affine transformation matrix.

+ - `shape`: The desired shape (rows, columns).

+ - `crs` : The coordinate reference system.

+ - `mask` : A boolean array indicating which cells are masked.

+ - `nodata` : A sentinel value indicating 'no data'.

## Initializing the grid view

The grid's view will be populated automatically upon reading the first dataset.

```python

->>> grid = Grid.from_raster('../data/dem.tif',

- data_name='dem')

->>> grid.affine

+grid = Grid.from_raster('./data/dem.tif')

+```

+

+```python

+grid.affine

+```

+

+

+Output...

+

+

+```

Affine(0.0008333333333333, 0.0, -97.4849999999961,

0.0, -0.0008333333333333, 32.82166666666536)

-

->>> grid.crs

-

+```

+

+

+

+

+

+```python

+grid.crs

+```

+

+

+Output...

+

+

+```

+Proj('+proj=longlat +datum=WGS84 +no_defs', preserve_units=True)

+```

+

+

+

+

+

+```python

+grid.shape

+```

->>> grid.shape

+

+Output...

+

+

+```

(359, 367)

+```

+

+

+

+

+```python

+grid.mask

+```

+

+

+Output...

+

->>> grid.mask

+```

array([[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

@@ -34,40 +82,133 @@ array([[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]])

```

+

+

+

We can verify that the spatial reference system is the same as that of the originating dataset:

```python

->>> grid.affine == grid.dem.affine

+dem = grid.read_raster('./data/dem.tif')

+```

+

+```python

+grid.affine == dem.affine

+```

+

+

+Output...

+

+

+```

True

->>> grid.crs == grid.dem.crs

+```

+

+

+

+

+```python

+grid.crs == dem.crs

+```

+

+

+Output...

+

+

+```

True

->>> grid.shape == grid.dem.shape

+```

+

+

+

+

+```python

+grid.shape == dem.shape

+```

+

+

+Output...

+

+

+```

True

->>> (grid.mask == grid.dem.mask).all()

+```

+

+

+

+

+

+```python

+(grid.mask == dem.mask).all()

+```

+

+

+Output...

+

+

+```

True

```

+

+

+

+

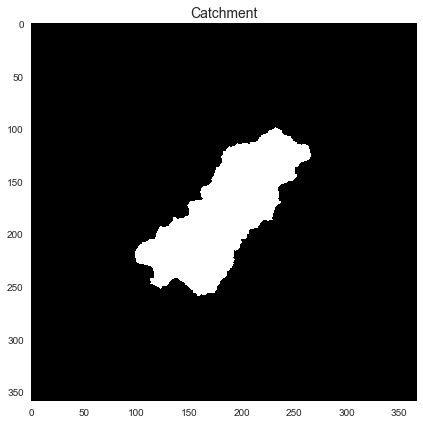

## Viewing datasets

First, let's delineate a watershed and use the `grid.view` method to get the results.

```python

# Resolve flats

->>> grid.resolve_flats(data='dem', out_name='inflated_dem')

+inflated_dem = grid.resolve_flats(dem)

+

+# Compute flow directions

+fdir = grid.flowdir(inflated_dem)

# Specify pour point

->>> x, y = -97.294167, 32.73750

+x, y = -97.294167, 32.73750

# Delineate the catchment

->>> grid.catchment(data='dir', x=x, y=y, out_name='catch',

- recursionlimit=15000, xytype='label')

+catch = grid.catchment(x=x, y=y, fdir=fdir, xytype='coordinate')

# Get the current view and plot

->>> catch = grid.view('catch')

->>> plt.imshow(catch)

+catch_view = grid.view(catch)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.imshow(catch_view, cmap='Greys_r', zorder=1)

+plt.title('Catchment', size=14)

+plt.tight_layout()

+```

+

+

+

+

+

+

+

+Note that in this case, the original raster and its view are the same:

+

+```python

+(catch == catch_view).all()

+```

+

+

+Output...

+

+

+```

+True

```

-

+

+

+

## Clipping the view to a dataset

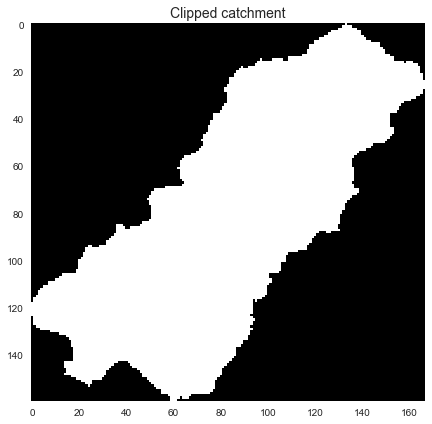

@@ -75,14 +216,54 @@ The `grid.clip_to` method clips the grid's current view to nonzero elements in a

```python

# Clip the grid's view to the catchment dataset

->>> grid.clip_to('catch')

+grid.clip_to(catch)

# Get the current view and plot

->>> catch = grid.view('catch')

->>> plt.imshow(catch)

+catch_view = grid.view(catch)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.imshow(catch_view, cmap='Greys_r', zorder=1)

+plt.title('Clipped catchment', size=14)

+plt.tight_layout()

+```

+

+

+

+

+

+

+

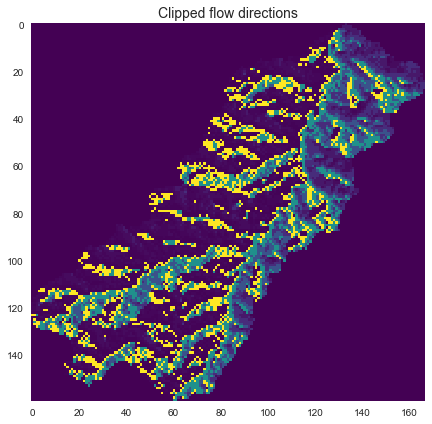

+We can also now use the `view` method to view other datasets within the current catchment boundaries:

+

+```python

+# Get the current view of flow directions

+fdir_view = grid.view(fdir)

+```

+

+

+Plotting code...

+

+

+```python

+fig, ax = plt.subplots(figsize=(8,6))

+fig.patch.set_alpha(0)

+plt.imshow(fdir_view, cmap='viridis', zorder=1)

+plt.title('Clipped flow directions', size=14)

+plt.tight_layout()

```

-

+

+

+