Since I wrote about the new ATProto-powered Tangled Git forge a few months ago, it's come along by leaps and bounds!

First, and most excitingly, they've added continuous integration via Spindles, which are built in a nice ATProto style:

> When you push code or open a pull request, the knot hosting your repository emits a pipeline event (sh.tangled.pipeline). Running as a dedicated service, spindle subscribes to these events via websocket connections to your knot.

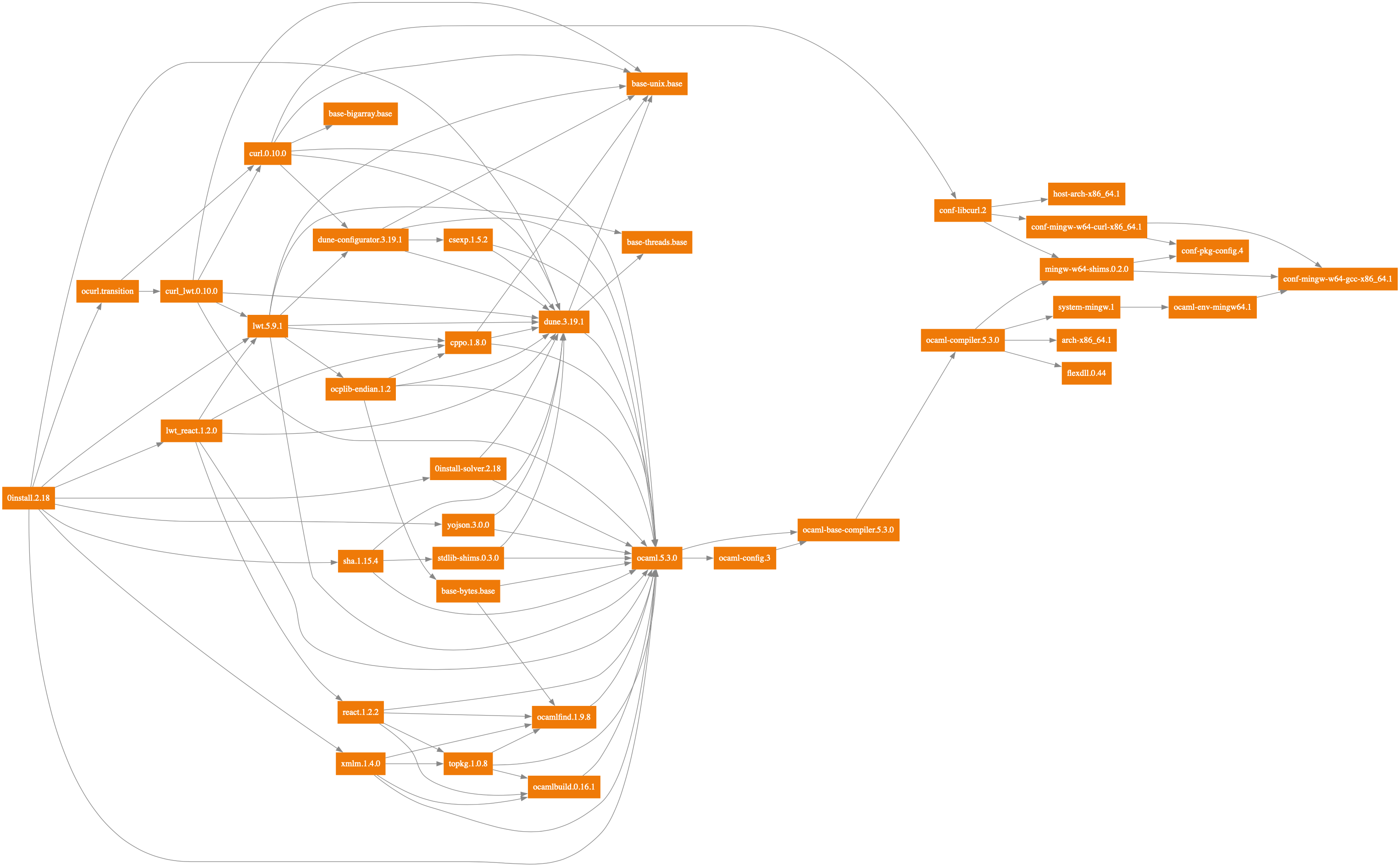

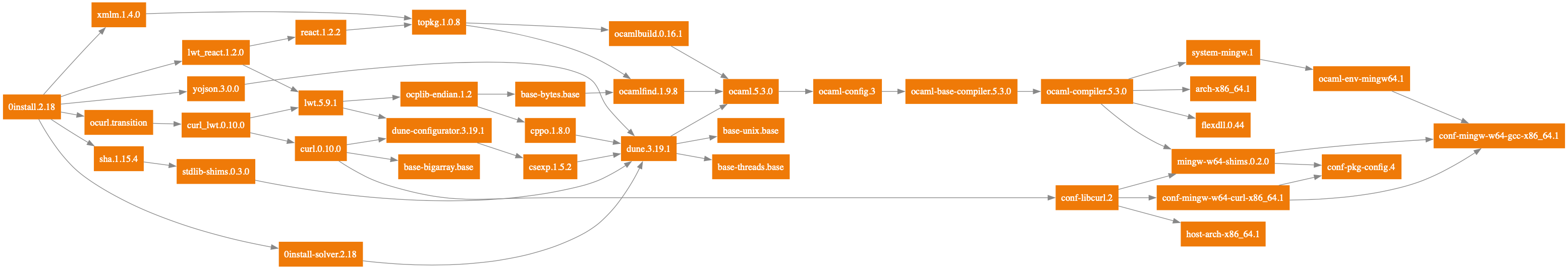

The pipelines are Nix-only right now, so I braved using it[1] for a new GPS Exchange Format library in OCaml that I wrote. The pipelines should look familiar, and the description format is very straightforward.

Secondly, the service has added support for JJ stacked pull requests, which are the closest I've seen to the Jane Street Iron diff workflow that I've been wanting to try in open source for ages. You can see the interdiff review process on a recent PR by Winter, who added support for engine-agnostic Spindle workflows, which should pave the way for a Docker or BuildKit engine alongside the existing Nixery-based one.

And thirdly, the general quality-of-life of the web frontend has improved dramatically, with a nice timeline, repo list, and profile pages. I'm running two knots right now (one on Recoil, and one in the Cambridge Computer Lab), and both have been very pain-free. I wrote one of the earliest Dockerfiles for it, but there's now a community-maintained Knot Docker setup which I've switched to. Doesn't take very long at all; give it a try!

Because I've been using Tangled so much, I added support for Tangled metadata to Dune to make OCaml package maintenance easier. This will appear in Dune 3.21 in a few months, but in the meantime enjoy the first Tangled.sh package on opam. It's a simple GPX library I used in my recent trip to Botswana. All you need in your dune-project will be:

(lang dune 3.21)
(name mlgpx)
(generate_opam_files true)
(source (tangled @anil.recoil.org/ocaml-gpx))

The only major thing I'm missing from Tangled is support for private repositories now, but I'm very content using it for public content today. Beware as usual that it's still in alpha, so don't trust super-ultra-mega-important stuff to it unless you've git mirrored elsewhere.

An OCaml MCP server

LLMs are proving themselves superbly capable of a variety of coding tasks, having been trained against the enormous amount of code, tutorials and manuals available online. However, with smaller languages like OCaml there simply isn't enough training material out there, particularly when it comes to new language features like effects or new packages that haven't had time to be widely used. With my colleagues Anil, Ryan and Sadiq we've been exploring ways to improve this situation. One way we can mitigate these challenges is to provide a Model Context Protocol (MCP) server that's capable of providing up-to-date info on the current state of the OCaml world.

The MCP specification was released by Anthropic at the end of last year. Since then it has become an astonishingly popular mechanism for extending the capabilities of LLMs, allowing them to become incredibly powerful agents capable of much more than simply chatting. There is now a huge variety of MCP servers, from one that provides professional cycling data to one that can do your email. The awesome mcp server list already lists hundreds, and these are just the awesome ones!

I've been working with Sadiq to make an MCP server for OCaml, with an initial focus on building it such that it can be hosted for everyone rather than something that is run locally. Our plan is to start with a service that can help with choosing OCaml libraries, by taking advantage of the work done by ocaml-docs-ci, which is the tool used to generate the documentation for all packages in opam-repository and is served by ocaml.org. As well as producing HTML docs, we can also extract a number of other formats from the pipeline, including a newly created markdown backend. Using this, we can get markdown-formatted documentation for every version of every package in the OCaml ecosystem.

Continue reading here

diff --git a/data/planet/robur.coop/a-web-ui-for-dnsvizor.md b/data/planet/robur.coop/a-web-ui-for-dnsvizor.md new file mode 100644 index 0000000000..33218ddb77 --- /dev/null +++ b/data/planet/robur.coop/a-web-ui-for-dnsvizor.md @@ -0,0 +1,15 @@ +--- +title: A Web UI for DNSvizor +description: +url: https://blog.robur.coop/articles/dnsvizor05.html +date: 2025-06-18T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + The NGI-funded DNSvizor provides core network services on your network; DNS resolution and DHCP. We added a web UI and metrics. + diff --git a/data/planet/robur.coop/authentication-and-extended-web-ui-for-dnsvizor.md b/data/planet/robur.coop/authentication-and-extended-web-ui-for-dnsvizor.md new file mode 100644 index 0000000000..4769b5bbf5 --- /dev/null +++ b/data/planet/robur.coop/authentication-and-extended-web-ui-for-dnsvizor.md @@ -0,0 +1,15 @@ +--- +title: Authentication and extended web UI for DNSvizor +description: +url: https://blog.robur.coop/articles/dnsvizor08.html +date: 2025-07-22T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + DNSvizor now has authentication mechanisms for users who wish to update their block lists or modify configuration data etc. 
+ diff --git a/data/planet/robur.coop/dnsvizor---run-your-own-dhcp-and-dns-mirageos-unikernel---gets-dnssec-support.md b/data/planet/robur.coop/dnsvizor---run-your-own-dhcp-and-dns-mirageos-unikernel---gets-dnssec-support.md new file mode 100644 index 0000000000..8e14158613 --- /dev/null +++ b/data/planet/robur.coop/dnsvizor---run-your-own-dhcp-and-dns-mirageos-unikernel---gets-dnssec-support.md @@ -0,0 +1,15 @@ +--- +title: DNSvizor - run your own DHCP and DNS MirageOS unikernel - gets DNSsec support +description: +url: https://blog.robur.coop/articles/dnsvizor03.html +date: 2025-05-24T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + The NGI-funded DNSvizor provides core network services on your network; DNS resolution and DHCP. + diff --git a/data/planet/robur.coop/dnsvizor-gets-blocklists.md b/data/planet/robur.coop/dnsvizor-gets-blocklists.md new file mode 100644 index 0000000000..fdbd7b3df7 --- /dev/null +++ b/data/planet/robur.coop/dnsvizor-gets-blocklists.md @@ -0,0 +1,13 @@ +--- +title: DNSvizor Gets Blocklists +description: +url: https://blog.robur.coop/articles/dnsvizor07.html +date: 2025-06-25T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + +DNSvizor is extended to support blocking (malicious) domains. 
diff --git a/data/planet/robur.coop/dnsvizor-manual.md b/data/planet/robur.coop/dnsvizor-manual.md new file mode 100644 index 0000000000..3d2188b2d4 --- /dev/null +++ b/data/planet/robur.coop/dnsvizor-manual.md @@ -0,0 +1,13 @@ +--- +title: DNSvizor manual +description: +url: https://blog.robur.coop/articles/dnsvizor10.html +date: 2025-07-30T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + +Documenting DNSvizor: setup, how to build, configuration, updates diff --git a/data/planet/robur.coop/dnsvizor-performance-engineering.md b/data/planet/robur.coop/dnsvizor-performance-engineering.md new file mode 100644 index 0000000000..562fc5acd7 --- /dev/null +++ b/data/planet/robur.coop/dnsvizor-performance-engineering.md @@ -0,0 +1,13 @@ +--- +title: DNSvizor performance engineering +description: +url: https://blog.robur.coop/articles/dnsvizor09.html +date: 2025-07-24T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + +Evaluating and engineering the performance of DNSvizor diff --git a/data/planet/robur.coop/dnsvizor-with-dns-over-tls-and-dns-over-https-support.md b/data/planet/robur.coop/dnsvizor-with-dns-over-tls-and-dns-over-https-support.md new file mode 100644 index 0000000000..433dfce11d --- /dev/null +++ b/data/planet/robur.coop/dnsvizor-with-dns-over-tls-and-dns-over-https-support.md @@ -0,0 +1,15 @@ +--- +title: DNSvizor with DNS over TLS and DNS over HTTPS support +description: +url: https://blog.robur.coop/articles/dnsvizor04.html +date: 2025-05-29T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + The NGI-funded DNSvizor provides core network services on your network; DNS resolution and DHCP. 
We added support for DNS over TLS and DNS over HTTPS + diff --git a/data/planet/robur.coop/privacy-for-dnsvizor.md b/data/planet/robur.coop/privacy-for-dnsvizor.md new file mode 100644 index 0000000000..70e3cebea1 --- /dev/null +++ b/data/planet/robur.coop/privacy-for-dnsvizor.md @@ -0,0 +1,15 @@ +--- +title: Privacy for DNSvizor +description: +url: https://blog.robur.coop/articles/dnsvizor06.html +date: 2025-07-03T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + The NGI-funded DNSvizor provides core network services on your network; DNS resolution and DHCP. We improved the privacy. + diff --git a/data/planet/robur.coop/streaming-binary-data-in-mollymawk.md b/data/planet/robur.coop/streaming-binary-data-in-mollymawk.md new file mode 100644 index 0000000000..6621f2a484 --- /dev/null +++ b/data/planet/robur.coop/streaming-binary-data-in-mollymawk.md @@ -0,0 +1,15 @@ +--- +title: Streaming Binary Data in Mollymawk +description: +url: https://blog.robur.coop/articles/streaming-binaries-mollymawk.html +date: 2025-08-18T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + Mollymawk receives a brand new update that let's it stream binary data avoiding memory issues. 
+ diff --git a/data/planet/robur.coop/streaming-console-output-in-mollymawk.md b/data/planet/robur.coop/streaming-console-output-in-mollymawk.md new file mode 100644 index 0000000000..dcb20e41fc --- /dev/null +++ b/data/planet/robur.coop/streaming-console-output-in-mollymawk.md @@ -0,0 +1,15 @@ +--- +title: Streaming console output in mollymawk +description: +url: https://blog.robur.coop/articles/mollymawk-console.html +date: 2025-05-21T00:00:00-00:00 +preview_image: +authors: +- Robur Cooperative +source: +ignore: +--- + + + HTTP server-sent events are very helpful for streaming live console data, we implemented that in mollymawk + diff --git a/data/planet/tarides/internship-report-refactoring-tools-coming-to-merlin.md b/data/planet/tarides/internship-report-refactoring-tools-coming-to-merlin.md new file mode 100644 index 0000000000..76f9e46dd6 --- /dev/null +++ b/data/planet/tarides/internship-report-refactoring-tools-coming-to-merlin.md @@ -0,0 +1,118 @@ +--- +title: 'Internship Report: Refactoring Tools Coming to Merlin' +description: "Tim\xE9o shares his project integrating new refactoring features into + Merlin, improving user experience with a new command." +url: https://tarides.com/blog/2025-08-20-internship-report-refactoring-tools-coming-to-merlin +date: 2025-08-20T00:00:00-00:00 +preview_image: https://tarides.com/blog/images/tools-merlin-1360w.webp +authors: +- Tarides +source: +ignore: +--- + +Refactoring features have contributed to the popularity of editors like IntelliJ, as well as certain programming languages whose editor support offers interactive mechanisms to manage code — Gleam being an excellent example. Even though OCaml has some features related to refactoring (such as renaming occurrences, substituting typed holes with expressions, and case analysis for pattern matching), the goal of my internship was to kickstart work on a robust set of features to enable the smooth integration of multiple complementary refactoring support commands.

As part of my Tarides internship (on the editor side), I specified several useful commands, inspired by competitors and materialised in the form of RFCs, subject to discussion. There were multiple candidates, but we found that expression extraction to toplevel was the most suitable for a first experiment. Since it touched on several parts of the protocol and required tools that could be reused for other features, it was important to design the system with extensibility and modularity in mind.

In this article, I will present the results of this experiment, including the new command and some interesting use cases.

Examples

Expression extraction to toplevel will select the most inclusive expression that fits in your selection and propose to extract it. In this case, extract means that the selected expression will be moved into its own freshly generated let binding at top level.

Extracting Constants

Here is a first example: let's try to extract a constant. Let's assume that the float 3.14159 is selected in the following code snippet:

let circle_area radius = 3.14159 *. (radius ** 2.)
                      (* ^^^^^^^ *)

The extract code action will then be proposed, and if you apply it, the code will look like this:

let const_name1 = 3.14159
let circle_area radius = const_name1 *. (radius ** 2.)

Here is an illustrated example (based on an experimental branch of ocaml-eglot):

We can see that the expression has been effectively extracted and replaced by a reference to the fresh let binding. We can also observe that in the absence of a specified name, the generated binding will be named with a generic name that is not taken in the destination scope. You also have the ability to supply the name you want for extraction.
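To make the naming rule concrete, here is a minimal sketch of one way to pick a generic name that is not taken in the destination scope. The function fresh_name, its prefix argument, and the list-based scope are assumptions made for illustration; this is not Merlin's actual implementation.

```ocaml
(* Hypothetical helper: return the first name of the form [prefix ^ n]
   (n = 1, 2, ...) that does not already appear in [taken]. *)
let fresh_name ~prefix taken =
  let rec go n =
    let candidate = prefix ^ string_of_int n in
    if List.mem candidate taken then go (n + 1) else candidate
  in
  go 1

(* Names already bound in the (hypothetical) destination scope. *)
let scope = [ "circle_area"; "const_name1" ]

let name = fresh_name ~prefix:"const_name" scope
```

With the scope above, const_name1 is already taken, so the helper moves on to the next candidate.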

For example, here is the same example where the user can enter a name:

But the refactoring capabilities go much further than constant extraction!

Extracting an Expression

In our previous example, we could speculate about the purity of the expression, since we were only extracting a literal value. However, OCaml is an impure language, so extracting an expression into a constant can lead to unintended behavior. For example, let's imagine the following snippet:

let () =
  let () =
    print_endline "Hello World!";
    print_endline "Done"
  in ()

In this example, extracting into a constant would cause problems! Indeed, we would be changing the semantics of our program by executing both print statements beforehand. Fortunately, the command analyses the expression as not being a constant and delays its execution using a thunk, a function of type unit -> ....
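The difference between the two strategies can be seen in a small self-contained sketch. It records side effects in a buffer instead of printing; the names log, extracted_const and extracted_thunk are made up for this illustration and are not what Merlin generates.

```ocaml
(* Records the order in which side effects actually happen. *)
let log = Buffer.create 16

(* Naive extraction into a constant: the side effect runs as soon as
   this toplevel binding is evaluated, before the original call site. *)
let extracted_const = Buffer.add_string log "const;"

(* Extraction behind a thunk: nothing runs until the thunk is applied. *)
let extracted_thunk () = Buffer.add_string log "thunk;"

let () =
  Buffer.add_string log "main;";
  extracted_const;      (* already evaluated: this is just the unit value *)
  extracted_thunk ()    (* the side effect happens here, as intended *)

let order = Buffer.contents log
```

The constant's effect is logged before "main;", while the thunk's effect stays at its original position in the program.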

As we can see, our goal was to maximise the production of valid code, as much as possible, by carefully analysing how to perform the extraction. This is all the more challenging in OCaml, which allows for arbitrary (and potentially infinite) nesting of expressions.

Extracting an Expression That Uses Variables

The final point we'll briefly cover is the most fun. Indeed, it's possible that the expression we want to extract depends on values defined in the current scope. For example:

let z = 45

let a_complicated_function x y =
  let a = 10 in
  let b = 11 in
  let c = 12 in
  a + b + c + (c * x * y) + z

In this example, the extraction of the expression a + b + c + (c * x * y) + z will be placed between z and a_complicated_function. As a result, z will still be accessible; however, x, y, a, b, and c will be free variables in the extracted expression. Therefore, we generate a function that takes these free variables as arguments:
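As a rough sketch, the result of such an extraction could look like the code below. The generated name fun_name1 and the order of the parameters are assumptions; the actual output of the command may differ.

```ocaml
let z = 45

(* Hypothetical extracted function: x, y, a, b and c were free in the
   selected expression, so they become parameters. z is defined above
   at toplevel and can be referenced directly. *)
let fun_name1 x y a b c = a + b + c + (c * x * y) + z

let a_complicated_function x y =
  let a = 10 in
  let b = 11 in
  let c = 12 in
  fun_name1 x y a b c
```

The function computes the same value as before the extraction, for example 150 for the arguments 2 and 3.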

Identifying free variables was one of the motivations for starting with this command. We are fairly certain that this is a function that we will need to reuse in many contexts! Note that the command behaves correctly in the presence of objects and modules.
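To illustrate what identifying free variables involves, here is a minimal sketch over a toy expression language. The expr type and the free_vars function are invented for this example; Merlin works on OCaml's real typed syntax tree, which is far richer.

```ocaml
module S = Set.Make (String)

(* A toy expression language, much simpler than real OCaml. *)
type expr =
  | Var of string
  | Int of int
  | Add of expr * expr
  | Let of string * expr * expr (* let x = e1 in e2 *)

(* A variable is free if it is used without being bound by an
   enclosing [Let] inside the expression itself. *)
let rec free_vars = function
  | Var x -> S.singleton x
  | Int _ -> S.empty
  | Add (a, b) -> S.union (free_vars a) (free_vars b)
  | Let (x, e1, e2) -> S.union (free_vars e1) (S.remove x (free_vars e2))

(* let a = 10 in a + (x + z) *)
let example = Let ("a", Int 10, Add (Var "a", Add (Var "x", Var "z")))

let fv = S.elements (free_vars example)
```

Here a is bound by the let, so only x and z are reported as free.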

A Real World Example

Let's try to extract something a little more complicated now. Let's assume we have the following code and we want to refactor it, for example, by extracting the markup type pretty print logic out of our show function.

type t = markup list
and markup = Text of string | Bold of string

let show doc =
  let buf = Buffer.create 101 in
  let bold_tag = "**" in
  List.iter
    (fun markup ->
      Buffer.add_string buf
      @@
      match markup with
      | Text txt -> txt
      | Bold txt -> bold_tag ^ txt ^ bold_tag)
    doc;
  Buffer.contents buf

After extraction, we can observe that the bound variables in the extracted region are now passed as arguments, and the extracted function is properly replaced by a call to the newly generated show_markup function:

let show_markup buf bold_tag =
  fun markup ->
    (Buffer.add_string buf)
      (match markup with
       | Text txt -> txt
       | Bold txt -> bold_tag ^ txt ^ bold_tag)

let show doc =
  let buf = Buffer.create 101 in
  let bold_tag = "**" in
  List.iter (show_markup buf bold_tag) doc;
  Buffer.contents buf

Here is an example of how it is used. Impressive, isn't it?
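One quick way to convince yourself that this refactoring preserves behaviour is to keep both versions side by side and compare their output. The sketch below does that; the name show_inline and the sample document are assumptions added for the comparison.

```ocaml
type t = markup list
and markup = Text of string | Bold of string

(* Version before extraction (renamed here so both can coexist). *)
let show_inline (doc : t) =
  let buf = Buffer.create 101 in
  let bold_tag = "**" in
  List.iter
    (fun markup ->
      Buffer.add_string buf
      @@
      match markup with
      | Text txt -> txt
      | Bold txt -> bold_tag ^ txt ^ bold_tag)
    doc;
  Buffer.contents buf

(* Version after extraction. *)
let show_markup buf bold_tag =
  fun markup ->
    (Buffer.add_string buf)
      (match markup with
       | Text txt -> txt
       | Bold txt -> bold_tag ^ txt ^ bold_tag)

let show (doc : t) =
  let buf = Buffer.create 101 in
  let bold_tag = "**" in
  List.iter (show_markup buf bold_tag) doc;
  Buffer.contents buf

(* Hypothetical sample document. *)
let doc = [ Text "Hello, "; Bold "world"; Text "!" ]
let same = show_inline doc = show doc
```

Both versions render the sample document to the same string.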

Editor Support

To understand how this new Merlin command can be properly used in your favourite editor, we have to take a closer look at the functioning of the Language Server Protocol. The LSP supports two mechanisms to extend the existing protocol with new features. First, there are code actions, which allow us to perform multiple LSP commands sequentially. This kind of request has the merit of working out of the box without requiring any plugin or specific command support on the editor side (which oils the wheels for maintenance). Secondly, there are custom requests, which are more powerful than code actions and enable custom interactivity. So, if you want to prompt the user, a custom request is the way to go. The price you have to pay for this power is to have client-side support implemented for each custom request in every editor plugin.

The current editor team approach is as follows: For each of Merlin's commands that don't map directly to a standard LSP request, we provide a code action associated with the Merlin command and potentially a dedicated custom request if the feature requires custom interactivity. Regarding the ‘extract’ feature, the associated code action does not allow us to choose the name of the generated let binding, but the custom request does.

What's Next?

I hope this new command helps you get even more productive in OCaml! Don't hesitate to experiment with it and report any bugs you encounter.

The development of Merlin's refactoring tools was part of a broader vision to improve OCaml editor support and perhaps claim an editor experience similar to JetBrains IDEs in the future!

The work done on the extract command gives us the opportunity to identify various problems pertaining to refactoring (substitution, code generation) and potentially to make the connection to refactoring commands that already exist in Merlin (like open refactoring and project-wide renaming). The next step is to add a small toolbox library in Merlin dedicated to refactoring in order to develop even more refactor actions. I hope this is just the first refactoring feature of a long series.

If you're curious and want to take a look at the feature, it's split into several PRs:

- ocaml/merlin#1948, which implements the extraction logic on the Merlin side and exposes it in the protocol,
- ocaml/ocaml-lsp#1545, which exposes the Custom Request enabling the use of the LSP-side functionality,
- ocaml/ocaml-lsp#1546, which exposes a Code Action that allows the functionality to be invoked without additional formalities on the editor side,
- tarides/ocaml-eglot#65, which implements extraction behaviour in OCaml-Eglot, invocable either from a type enclosing or directly as a classic Emacs command.

All of these PRs are currently under review, and should be merged soon!

A big thanks to Xavier, Ulysse, and all the people that helped me during this internship. It was pretty interesting!

+ From 810fbc3147bbbc4f53df4fd517a5d83132fc482d Mon Sep 17 00:00:00 2001 From: "github-actions[bot]" <41898282+github-actions[bot]@users.noreply.github.com> Date: Mon, 25 Aug 2025 08:17:10 +0200 Subject: [PATCH 07/15] [scrape.yml] New OCaml Planet blog posts and videos (#3271) Co-authored-by: cuihtlauac <1917592+cuihtlauac@users.noreply.github.com> --- ...gy-of-the-internet-ideas-at-aarhus-2025.md | 143 ++++++++++++++++++ 1 file changed, 143 insertions(+) create mode 100644 data/planet/anil/presenting-our-ecology-of-the-internet-ideas-at-aarhus-2025.md diff --git a/data/planet/anil/presenting-our-ecology-of-the-internet-ideas-at-aarhus-2025.md b/data/planet/anil/presenting-our-ecology-of-the-internet-ideas-at-aarhus-2025.md new file mode 100644 index 0000000000..b5b392fbbd --- /dev/null +++ b/data/planet/anil/presenting-our-ecology-of-the-internet-ideas-at-aarhus-2025.md @@ -0,0 +1,143 @@ +--- +title: Presenting our Ecology of the Internet ideas at Aarhus 2025 +description: +url: https://anil.recoil.org/notes/ecology-at-aarhus +date: 2025-08-22T00:00:00-00:00 +preview_image: +authors: +- Anil Madhavapeddy +source: +ignore: +--- + +That's a wrap for the next decade with Aarhus 2025, +where I presented our paper on "Steps towards an Ecology for the Internet". +I was a little unsure about how to approach the presentation, largely because +the ideas seem a little crazy if they'd been proposed even a year ago! Luckily +my co-authors strengthened my spine with encouragement and gin, +and the event was tremendous fun packed with useful insights.

Our key observation is that the Internet is dangerously ossifying into monocultures at multiple levels. Inspired by wild ecosystems, we're proposing mixing in more natural selection into edge deployments by using AI code models to mutate end-hosts and tailor them to their environment. Generative AI is notoriously unpredictable, which turns out to be a useful property if you actually want more local software diversity! For example, this lets us cook up "antibotty" networks that fight back against global viruses via locally adapted vigilantes (antibodies).

Beyond just making things more resilient, injecting more software diversity gives us the hooks to make computers in our environment do what we actually want them to. To quote Mark Weiser, "the world is not a desktop", and computers should be so ubiquitously blended into our day-to-day lives that they are invisible to the user. We're getting further away from that dream every day with the monotony of the FAANG software monocultures, and a wild west of botnets sweeping through billions of devices.

Since this conference only happens once a decade, I put myself in the right frame of mind by reading through my old ideas and seeing how they had aged. I was struck by how Databox (2015), Droplets (2011) and Digital Yurts (2009) all stood up surprisingly well[1] in 2025. This time around, we have a fresh edge with the rise of coding models and a relative glut of edge computation. The question is how to harness these new technologies for the health of the Internet and not yet more central lock-in.

Discussions with the audience

The talks were arranged in a panel with three other great speakers, who discussed crises through the lenses of urban gardening, computing supply chains and two-loops models of change. There were some thought-provoking questions from the audience!

Firstly, is framing these questions as a "crisis" just saturating us with a constant bombardment of problems we need to react to? Should we be building more (emotionally and systemically) sustainable platforms for engendering change? I certainly agreed with this, but I don't yet have a clear sense of what this means, beyond finding Our World In Data an inspiration for how fun data exploration can be.

A couple of things I need to follow up on reading:

- Computing as Ecocide was a paper at the LIMITS workshop, which I hadn't heard of before. This seems like a complement to PROPL coming up in October.
- Robin Sloan is an author who combines old and new tech in fun ways.
- Approaches to directly deal with "eco-anxiety" while teaching sustainability.

Inversely proportional voting

The stage discussion then veered into the role of lifecycles in these cultural systems. An audience member asked about the role of biological mutualism and cooperation in any future digital framework, and I pointed out some examples from our paper about how cooperative ensembles in nature can be very stable, but also do not have to last forever. When applying this "nihil aeternum est" principle to human systems, how sacrosanct are concepts like "democracy" as we move forward? If our views on these institutions remain unchanging, then they will also become brittle and collapse as the context in which they operate changes into a hot crowded world mired in polycrises.

This let me bring out an idea I've been ruminating on for a while. While the principles of equal suffrage are vital, one dimension where we could relax it is for intergenerational representation. One issue with taking political decisions for the long-term is that wealth pools with the old, who have far less to gain from long-term thinking than the young[2] voters. So why are the young so underrepresented? Imagine a completely made up voting system that looked like this:

> A voting system assigns each individual a number of votes inversely proportional to their age. An 18-year-old will have the maximum number of votes, and they gradually degrade until anyone aged 70 or higher gets just one vote. The number of votes decays rapidly with age, so that every year the new 18-year-old cohort will control (say) 40% of the total vote. This sort of system ensures that the older generations (where wealth pools) must educate the newly minted voters every year, or risk losing control of their agendas. -- Anil's entirely made up voting system

I've found some variations on the theme, like Demeny voting that gives parents a proxy vote for their children or even cases for disenfranchisement of the elderly. But neither of these quite captures what I have in mind, which is to build intergenerational education firmly into how our society operates. If 40% of the entire voting bloc appears newly every year, then education on civic matters needs to happen like clockwork and be incorporated into our curriculums.

Of course, there are huge barriers to trying out these experiments in civic society. But there are no such barriers to running these experiments in microcosms of common pool resources or even on digital systems. So after these fascinating conversations at the conference, I'm going to think about the Cambridge Green Blue and apply it there.

Self hosting is really far behind

I was also struck by how far behind self-hosting is, even among an audience that should be heavily in favour of it. I think my talk was one of only a few that mentioned BlueSky and the Fediverse, and alternative communication mechanisms. I also demoed Claude in a corner to show how it could help manage infrastructure that would ordinarily take a sysadmin, but could now be reasonably handled by a non-expert (with care!).

One of the attendees commented to me afterwards that they remembered the Databox talk from a decade ago, and wondered why it hadn't taken off. Maybe now is the time for Ryan Gibb's work on digital islands to hit the mainstream! They've certainly never been needed more than now; I am deeply glad to see my colleagues like Jon Sterling also working on solutions in this space.

Presenting in Slipshow

In the spirit of self-hosting, I also used the great new Slipshow tool (that uses js_of_ocaml) to write the presentation. Slipshow lets the presentation be written in Markdown, and I used Claude Code to handle all the styling for me. The whole presentation took about an hour to put together, and can be viewed standalone as a single web page as it inlines all the assets.

Using HTML/JS/CSS for talks is really convenient, so I'm sold on using Slipshow for my upcoming presentations this year! It's also excellent to be using js_of_ocaml. I think the only thing on my "wishlist" is to be able to run a headless browser and output PDF snapshots of each of the slips. I'm also not yet sure how my 100MB videos will encode, but I'll figure that out ahead of my next talk (in the Royal Society at the start of September to the Austrian government). The author of the software, Paul-Elliot, also kindly reached out to get feedback, and I was really pleased to see his work was supported by the NGI Commons Fund!

The city of Aarhus

Aarhus is also a spiritual twin city to Cambridge. It was a gorgeously sunny week, with bicycles available everywhere and a lovely Latin quarter to hang out in.

The venue itself at Aarhus University was really nice to explore and see what the students are up to. Lots of music and creative arts in the same area. When I was here last year to present at Matchpoints we were at the Modern Art museum, and the amount of new building work in the city was remarkable to see. There was also a lovely forest park I went for an early morning jog in to get some nature!

[1] Not coincidentally, most of these ideas were cooked up with Jon Crowcroft somewhere in the picture at a nearby pub.

[2] We used the ideas of discount factors to adjust for impermanence in our forest carbon work in Nature Climate Change a few years ago too.

- Why Lean 4 replaced OCaml as my Primary Language

- Tacit 1.0.0 - Combinators!

- Slipshow!

- Claudius is now available in opam! 🎉

- New release of UnionFind

- Bogue, the OCaml GUI

- Dune 3.20

- cudajit: Bindings to the ~cuda~ and ~nvrtc~ libraries

- zbg v0.3.0: New release 2 years later

- Announcing Raven: Scientific Computing for OCaml (Alpha Release)

- Outreachy Demo Day August 2025

- Other OCaml News

The FreeBSD workers have been getting [slower](https://github.com/ocurrent/opam-repo-ci/issues/449): jobs that should take a few minutes are now timing out after 60 minutes. My first instinct was that ZFS was acting strangely.

I checked the classic ZFS performance indicators:

- Pool health (zpool status): ONLINE, no errors
- ARC hit ratio (sysctl kstat.zfs.misc.arcstats.hits kstat.zfs.misc.arcstats.misses): 98.8% (excellent!)
- Fragmentation (zpool list): 53% (high but not catastrophic)
- I/O latency (zpool iostat -v 1 3 and iostat -x 1 3): 1ms read/write (actually pretty good)

But the sync command was taking 70-160ms when it should be under 10ms for an SSD. We don’t need sync as the disk has disposable CI artefacts, so why not try:

zfs set sync=disabled obuilder

The sync times improved to 40-50ms, but the CI jobs were still crawling.

I applied some ZFS tuning to try to improve things:

# Crank up those queue depths
sysctl vfs.zfs.vdev.async_read_max_active=32
sysctl vfs.zfs.vdev.async_write_max_active=32
sysctl vfs.zfs.vdev.sync_read_max_active=32
sysctl vfs.zfs.vdev.sync_write_max_active=32

# Speed up transaction groups
sysctl vfs.zfs.txg.timeout=1
sysctl vfs.zfs.dirty_data_max=8589934592

# Optimize for metadata
zfs set atime=off obuilder
zfs set primarycache=metadata obuilder
sysctl vfs.zfs.arc.meta_balance=1000

+However, these changes were making no measurable difference to the actual performance.

+ +For comparison, I ran one of the CI steps on an identical machine, which was running Ubuntu with BTRFS:-

+ +opam install astring.0.8.5 base-bigarray.base base-domains.base base-effects.base base-nnp.base base-threads.base base-unix.base base64.3.5.1 bechamel.0.5.0 camlp-streams.5.0.1 cmdliner.1.3.0 cppo.1.8.0 csexp.1.5.2 dune.3.20.0 either.1.0.0 fmt.0.11.0 gg.1.0.0 jsonm.1.0.2 logs.0.9.0 mdx.2.5.0 ocaml.5.3.0 ocaml-base-compiler.5.3.0 ocaml-compiler.5.3.0 ocaml-config.3 ocaml-options-vanilla.1 ocaml-version.4.0.1 ocamlbuild.0.16.1 ocamlfind.1.9.8 optint.0.3.0 ounit2.2.2.7 re.1.13.2 repr.0.7.0 result.1.5 seq.base stdlib-shims.0.3.0 topkg.1.1.0 uutf.1.0.4 vg.0.9.5

+This took < 3 minutes, but the worker logs showed the same step took 35 minutes. What could cause such a massive difference on identical hardware?

+ +On macOS, I’ve previously seen problems when the number of mounted filesystems got to around 1000: mount would take minutes to complete. I wondered, how many file systems are mounted?

# mount | grep obuilder | wc -l

+ 33787

+Now, that’s quite a few file systems. Historically, our FreeBSD workers had tiny SSDs, circa 128GB, but with the move to a new server with a 1.7TB SSD disk and using the same 25% prune threshold, the number of mounted file systems has become quite large.

+ +I gradually increased the prune threshold and waited for ocurrent/ocluster to prune jobs. With the threshold at 90% the number of file systems was down to ~5,000, and performance was restored.
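Since the slowdown tracked the number of mounted file systems, it may be worth watching that count. The sketch below is one way to do it, assuming the output of mount is piped in; the helper names and the 5000 limit are illustrative choices based on the figure above, not part of OBuilder or ocluster.

```shell
# Count the lines of `mount` output (read from stdin) that mention a pool.
count_mounts() {
    # grep -c exits non-zero when the count is 0, so mask that status.
    grep -c -- "$1" || true
}

# Report whether a mount count is within a chosen limit.
# The limit is a hypothetical value picked by the operator.
check_mount_count() {
    count=$1
    limit=$2
    if [ "$count" -gt "$limit" ]; then
        echo "WARN: $count mounted file systems (limit $limit)"
        return 1
    fi
    echo "OK: $count mounted file systems"
}

# Example on a worker:
#   check_mount_count "$(mount | count_mounts obuilder)" 5000
```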

+ +It’s not really a bug; it’s just an unexpected side effect of having a large number of mounted file systems. On macOS, the resolution was to unmount all the file systems at the end of each job, but that’s easy when the concurrency is limited to one and more tricky when the concurrency is 20 jobs.

diff --git a/data/planet/tunbury/animating-3d-models-in-ocaml-with-claude.md b/data/planet/tunbury/animating-3d-models-in-ocaml-with-claude.md
new file mode 100644
index 0000000000..cef4a767d6
--- /dev/null
+++ b/data/planet/tunbury/animating-3d-models-in-ocaml-with-claude.md
@@ -0,0 +1,88 @@
+---
+title: Animating 3D models in OCaml with Claude
+description: "In the week, Jon mentioned UTM, which uses Apple\u2019s Hypervisor virtualisation
+  framework to run ARM64 operating systems on Apple Silicon. It looked awesome, and
+  the speed of virtualised macOS was fantastic. It also offers x86_64 emulation; we
+  mused how well it would perform running Windows, but found it disappointing."
+url: https://www.tunbury.org/2025/06/07/claude-animates-in-ocaml/
+date: 2025-06-07T00:00:00-00:00
+preview_image: https://www.tunbury.org/images/human.png
+authors:
+- Mark Elvers
+source:
+ignore:
+---
+
+In the week, Jon mentioned UTM, which uses Apple’s Hypervisor virtualisation framework to run ARM64 operating systems on Apple Silicon. It looked awesome, and the speed of virtualised macOS was fantastic. It also offers x86_64 emulation; we mused how well it would perform running Windows, but found it disappointing.

+ +I was particularly interested in this because I am stuck in the past with macOS Monterey on my Intel Mac Pro ‘trashcan’, as I have a niche Windows application that I can’t live without. A few years ago, I got a prototype running, written in Swift. I never finished it as other events got in the way. The learning curve of SceneKit and Blender was intense. I still had the Collada files on my machine and today, of course, we have Claude.

+ +“How would I animate a Collada (.dae) file using OCaml?”. Claude acknowledged the complexity and proposed that lablgl, the OCaml bindings for OpenGL, would be a good starting point. Claude obliged and wrote the entire pipeline, giving me opam commands and Dune configuration files.

The code wouldn’t build, so I looked for the API for lablgl. The library seemed old, with no recent activity. I mentioned this to Claude; he was happy to suggest an alternative approach of tgls, thin OpenGL bindings, with tsdl, SDL2 bindings, or the higher-level API from raylib. The idea of a high-level API sounded better, so I asked Claude to rewrite it with raylib.

The code had some compilation issues. Claude had proposed Mesh.gen_cube, which didn’t exist. Claude consulted the API documentation and found gen_mesh_cube instead. This went through several iterations, with Model.load becoming load_model and Model.draw_ex becoming draw_model_ex, etc. Twenty-two versions later, the code nearly compiles. This block continued to fail with two issues. The first being Array.find doesn’t exist and the second being that the type inferred for a was wrong. There are two types and they both contain target: string;. I manually fixed this with (a:animation_channel) and used match Array.find_opt ... with instead of the try ... with.

(* Update animations *)

+let update_object_animations objects animations elapsed_time =

+ Array.map (fun obj ->

+ try

+ let anim = Array.find (fun a -> a.target = obj.name) animations in

+ (* Loop animation *)

+ let loop_time = mod_float elapsed_time anim.duration in

+ let new_transform = interpolate_animation anim loop_time in

+ { obj with current_transform = new_transform }

+ with

+ Not_found -> obj

+ ) objects

+There were still many unused variables, but the code could be built using dune build --release.

Unfortunately, it couldn’t load my Collada file as the load functions were just stubs! Claude duly obliged and wrote a simple XML parser using regular expressions through the Str library, but interestingly suggested that I include xmlm as a dependency. Adding the parser broke the code, and it no longer compiled. The issue was similar to above; the compiler had inferred a type that wasn’t what Claude expected. I fixed this as above. The code also had some issues with the ordering - functions were used before they were defined. Again, this was an easy fix.

The parser still didn’t work, so I suggested ditching the regular expression-based approach and using xmlm instead. This loaded the mesh; it looked bad, but I could see that it was my mesh. However, it still didn’t animate, and I took a wrong turn here. I told Claude that the Collada file contained both the mesh and the animation, but that’s not right. It has been a while since I created the Collada files, and I had forgotten that the animation and the mesh definitions were in different files.

I asked Claude to improve the parser so that it would expect the animation data to be in the same file as the mesh. This is within the specification for Collada, but this was not the structure of my file.

+ +Is there a better approach than dealing with the complexity of writing a Collada XML parser? What formats are supported by raylib?

In a new thread, I asked, “Using OCaml with Raylib, what format should I use for my 3D mode and animation data?”. Claude suggested GLTF 2.0. As my animation is in Blender, it can be exported in GLTF format. Let’s try it!

+ +Claude used the raylib library to read and display a GLTF file and run the animation. The code was much shorter, but … it didn’t compile. I wrote to Claude, “The API for Raylib appears to be different to the one you have used. For example, camera3d.create doesn’t take named parameters, camera3d.prespective should be cameraprojection.perspective etc.” We set to work, and a dozen versions later, we built it successfully.

It didn’t work, though; the console produced an error over and over:

+ +Joint attribute data format not supported, use vec4 u8

+This looked like a problem with the model. I wondered if my GLTF file was compatible with raylib. I asked Claude if he knew of any validation tools, and he suggested an online viewer. This loaded my file perfectly and animated it in the browser. Claude also gave me some simple code to validate, which only loaded the model.

let main () =

+ init_window 800 600 "Static Model Test";

+ let camera = Camera3D.create

+ (Vector3.create 25.0 25.0 25.0)

+ (Vector3.create 0.0 0.0 0.0)

+ (Vector3.create 0.0 1.0 0.0)

+ 45.0 CameraProjection.Perspective in

+

+ let model = load_model "assets/character.gltf" in

+

+ while not (window_should_close ()) do

+ begin_drawing ();

+ clear_background Color.darkgray;

+ begin_mode_3d camera;

+ draw_model model (Vector3.create 0.0 0.0 0.0) 1.0 Color.white;

+ draw_grid 10 1.0;

+ end_mode_3d ();

+ draw_text "Static Model Test" 10 10 20 Color.white;

+ end_drawing ()

+ done;

+

+ unload_model model;

+ close_window ()

+Even this didn’t work! As I said at the top, it’s been a few years since I looked at this, and I still had Blender installed on my machine: version 2.83.4. The current version is 4.4, so I decided to upgrade. The GLTF export in 4.4 didn’t work on my Mac and instead displayed a page of Python warnings about numpy. On the Blender Forum, this thread showed me how to fix it. Armed with a new GLTF file, the static test worked. Returning to the animation code showed that it worked with the updated file; however, there are some significant visual distortions. These aren’t present when viewed in Blender, which I think comes down to how the library interpolates between keyframes. I will look into this another day.

I enjoyed the collaborative approach. I’m annoyed with myself for not remembering the separate file with the animation data. However, I think the change of direction from Collada to GLTF was a good decision, and the speed at which Claude can explore ideas is very impressive.

diff --git a/data/planet/tunbury/base-images-for-oxcaml.md b/data/planet/tunbury/base-images-for-oxcaml.md
new file mode 100644
index 0000000000..65bd2e54ef
--- /dev/null
+++ b/data/planet/tunbury/base-images-for-oxcaml.md
@@ -0,0 +1,64 @@
+---
+title: Base images for OxCaml
+description: As @dra27 suggested, I first added support in ocurrent/ocaml-version.
+  I went with the name flambda2, which matched the name in the opam package.
+url: https://www.tunbury.org/2025/06/10/oxcaml-base-images/
+date: 2025-06-10T00:00:00-00:00
+preview_image: https://www.tunbury.org/images/ocaml-logo.png
+authors:
+- Mark Elvers
+source:
+ignore:
+---
+
+As @dra27 suggested, I first added support in ocurrent/ocaml-version. I went with the name flambda2, which matched the name in the opam package.

Wherever I found the type Flambda, I added Flambda2. I added a list of OxCaml versions in the style of the unreleased betas and a function is_oxcaml to test if the variant is of type Flambda2, closely following the is_multicore design! The final change was to additional_packages, which now concatenates ocaml-options-only- with flambda2; again, this mirrors a change that was needed for multicore.

It was a relatively minor change to the base-image-builder, adding Ocaml_version.Releases.oxcaml to the available switches on AMD64 and ARM64. Following the precedent set by maybe_add_beta and maybe_add_multicore, I added maybe_add_jst, which added the Jane Street opam repository for these builds.

The builds mostly failed because they depended on autoconf, which isn’t included by default on most distributions. Looking in the dockerfile, there is a function called ocaml_depexts, which includes zstd for OCaml > 5.1.0. I extended this function to include autoconf when building OxCaml.

The Arch Linux builds failed due to missing which, so I added this as I did for autoconf.

The following are working:

- Ubuntu 24.10, 24.04, 22.04
- OpenSUSE Tumbleweed
- Fedora 42, 41
- Debian Unstable, Testing, 12
- Arch

Failures

- Alpine 3.21
  - missing linux/auxvec.h header
- OpenSUSE 15.6
  - autoconf is too old in the distribution
- Debian 11
  - autoconf is too old in the distribution
- Oracle Linux 9, 8
  - autoconf is too old in the distribution

There is some discussion about whether building these with the base image builder is the best approach, so I won’t create PRs at this time. My branches are:

- https://github.com/mtelvers/ocaml-version.git
- https://github.com/mtelvers/ocaml-dockerfile.git#oxcaml
- https://github.com/mtelvers/docker-base-images#oxcaml

Equinix has stopped commercial sales of Metal and will sunset the service at the end of June 2026. Equinix has long been a supporter of OCaml and has provided free credits to use on their Metal platform. These credits are coming to an end at the end of this month, meaning that we need to move some of our services away from Equinix. We have two new four-node blade servers, which will become the new home for these services. The blades have dual 10C/20T processors with either 192GB or 256GB of RAM and a combination of SSD and spinning disk.

192GB, 20C/40T with 1.1TB SSD, 2 x 6T disks

- rosemary: FreeBSD CI Worker (releasing spring & summer)
- oregano: OpenBSD CI Worker (releasing bremusa)
- basil: docs-ci (new implementation, eventually replacing eumache)
- mint: spare

256GB, 20C/40T with 1.5TB SSD, 2 x 8T disks

- thyme: Equinix c2-2 (registry.ci.dev)
- chives: Equinix c2-4 (opam-repo-ci) + Equinix c2-3 (OCaml-ci) + Equinix c2-1 (preview.dune.dev)

256GB, 20C/40T with 1.1TB SSD, 2 x 6T disks

- dill: spare
- sage: spare

VMs currently running on hopi can be redeployed to chives, allowing hopi to be redeployed.

+ +Machines which can then be recycled are:

- sleepy (4C)
- grumpy (4C)
- doc (4C)
- spring (8T)
- tigger
- armyofdockerness

We have changed our mind about using dm-cache in the SSD/RAID1 configuration. The current thinking is that the mechanical drives would be better served as extra capacity for our distributed ZFS infrastructure, where we intend to have two copies of all data, and these disks represent ~100TB of storage.

As mentioned previously, we have a deadline of Wednesday, 30th April, to move the workloads from the Equinix machines or incur hosting fees.

+ +I also noted that the SSD capacity is 1.7TB in all cases. The new distribution is:

- rosemary: FreeBSD CI Worker (releasing spring & summer)
- oregano: OpenBSD CI Worker (releasing bremusa)
- basil: Equinix c2-2 (registry.ci.dev)
- mint: @mte24 workstation
- thyme: spare
- chives: Equinix c2-4 (opam-repo-ci) + Equinix c2-3 (OCaml-ci) + Equinix c2-1 (preview.dune.dev)
- dill: spare
- sage: docs-ci (new implementation, eventually replacing eumache)

Our PeerTube installation at watch.ocaml.org holds hundreds of videos we wouldn’t want to lose! It’s a VM hosted at Scaleway so the chances of a loss are pretty small, but having a second copy would give us extra reassurance. I’m going to use Borg Backup.

+ +Here’s the list of features (taken directly from their website):

- Space-efficient storage of backups.
- Secure, authenticated encryption.
- Compression: lz4, zstd, zlib, lzma or none.
- Mountable backups with FUSE.
- Easy installation on multiple platforms: Linux, macOS, BSD, …
- Free software (BSD license).
- Backed by a large and active open source community.

We have several OBuilder workers with one or more unused hard disks, which would make ideal backup targets.

+ +In this case, I will format and mount sdc as /home on one of the workers.

parted /dev/sdc mklabel gpt

+parted /dev/sdc mkpart primary ext4 0% 100%

+mkfs.ext4 /dev/sdc1

+Add this to /etc/fstab and run mount -a.

/dev/sdc1 /home ext4 defaults 0 2

+Create a user borg.

adduser --disabled-password --gecos '@borg' --home /home/borg borg

+On both machines, install the application borg.

apt install borgbackup

+On the machine we want to back up, generate an SSH key and copy the public key to the authorized_keys file for user borg on the target server. Ensure that the permissions and ownership of the .ssh directory and the authorized_keys file are correct.

ssh-keygen -t ed25519 -f ~/.ssh/borg_backup_key
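The permissions step is easy to get wrong, because sshd will typically ignore an authorized_keys file that other users can write to. A minimal sketch of installing the key on the target server follows; install_authorized_key is a hypothetical helper of my own, and the 700/600 modes are the conventional choice rather than anything specific to Borg.

```shell
# Append a public key to <home>/.ssh/authorized_keys with restrictive modes.
# install_authorized_key is an illustrative helper, not a standard command.
install_authorized_key() {
    home_dir=$1
    pubkey=$2
    mkdir -p "$home_dir/.ssh"
    chmod 700 "$home_dir/.ssh"
    printf '%s\n' "$pubkey" >> "$home_dir/.ssh/authorized_keys"
    chmod 600 "$home_dir/.ssh/authorized_keys"
}

# On the backup server, as root, something like:
#   install_authorized_key /home/borg "$(cat borg_backup_key.pub)"
#   chown -R borg:borg /home/borg/.ssh
```

The chown is left as a comment because it needs root and an existing borg user.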

+Add lines to the .ssh/config for ease of connection. We can now ssh backup-server without any prompts.

Host backup-server

+ HostName your.backup.server.com

+ User borg

+ IdentityFile ~/.ssh/borg_backup_key

+ ServerAliveInterval 60

+ ServerAliveCountMax 3

+Borg supports encrypting the backup at rest on the target machine. The data is publicly available in this case, so encryption seems unnecessary.

+ +On the machine to be backed up, run:

+ +borg init --encryption=none backup-server:repo

+We can now perform a backup or two and see how the deduplication works.

+ +# borg create backup-server:repo::test /var/lib/docker/volumes/postgres --compression lz4 --stats --progress

+------------------------------------------------------------------------------

+Repository: ssh://backup-server/./repo

+Archive name: test

+Archive fingerprint: 627242cb5b65efa23672db317b4cdc8617a78de4d8e195cdd1e1358ed02dd937

+Time (start): Sat, 2025-06-14 13:32:27

+Time (end): Sat, 2025-06-14 13:32:38

+Duration: 11.03 seconds

+Number of files: 3497

+Utilization of max. archive size: 0%

+------------------------------------------------------------------------------

+ Original size Compressed size Deduplicated size

+This archive: 334.14 MB 136.28 MB 132.79 MB

+All archives: 334.14 MB 136.28 MB 132.92 MB

+

+ Unique chunks Total chunks

+Chunk index: 942 1568

+------------------------------------------------------------------------------

+# borg create backup-server:repo::test2 /var/lib/docker/volumes/postgres --compression lz4 --stats --progress

+------------------------------------------------------------------------------

+Repository: ssh://backup-server/./repo

+Archive name: test2

+Archive fingerprint: 572bf2225b3ab19afd32d44f058a49dc2b02cb70c8833fa0b2a1fb5b95526bff

+Time (start): Sat, 2025-06-14 13:33:05

+Time (end): Sat, 2025-06-14 13:33:06

+Duration: 1.43 seconds

+Number of files: 3497

+Utilization of max. archive size: 0%

+------------------------------------------------------------------------------

+ Original size Compressed size Deduplicated size

+This archive: 334.14 MB 136.28 MB 9.58 MB

+All archives: 668.28 MB 272.55 MB 142.61 MB

+

+ Unique chunks Total chunks

+Chunk index: 971 3136

+------------------------------------------------------------------------------

+# borg list backup-server:repo

+test Sat, 2025-06-14 13:32:27 [627242cb5b65efa23672db317b4cdc8617a78de4d8e195cdd1e1358ed02dd937]

+test2 Sat, 2025-06-14 13:33:05 [572bf2225b3ab19afd32d44f058a49dc2b02cb70c8833fa0b2a1fb5b95526bff]
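To put the deduplication figures in perspective, the share of each archive that had to be stored as new data can be worked out from the "Original size" and "Deduplicated size" columns. This is a small arithmetic sketch; dedup_percent is a name I made up, and the inputs are copied from the output above.

```shell
# Percentage of an archive's original size that was stored as new data.
# dedup_percent is a hypothetical helper; sizes must share the same unit.
dedup_percent() {
    awk -v orig="$1" -v dedup="$2" 'BEGIN {
        if (orig <= 0) { print "0.0"; exit }
        printf "%.1f\n", 100 * dedup / orig
    }'
}

dedup_percent 334.14 132.79   # first archive: prints 39.7
dedup_percent 334.14 9.58     # second archive: prints 2.9
```

So the second run, a minute later, only added about 3% of the original size to the repository.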

+Let’s run this every day by placing a script borgbackup in /etc/cron.daily. The paths given are just examples…

#!/bin/bash

+

+# Make "borg ... | logger" report borg's exit status rather than logger's

+set -o pipefail

+

+# Configuration

+REPOSITORY="backup-server:repo"

+

+# What to backup

+BACKUP_PATHS="

+/home

+"

+

+# What to exclude

+EXCLUDE_ARGS="

+--exclude '*.tmp'

+--exclude '*.log'

+"

+

+# Logging function

+log() {

+ logger -t "borg-backup" "$1"

+ echo "$(date '+%Y-%m-%d %H:%M:%S') - $1"

+}

+

+log "========================================"

+log "Starting Borg backup"

+

+# Check if borg is installed

+if ! command -v borg &> /dev/null; then

+ log "ERROR: borg command not found"

+ exit 1

+fi

+

+# Test repository access

+if ! borg info "$REPOSITORY" &> /dev/null; then

+ log "ERROR: Cannot access repository $REPOSITORY"

+ log "Make sure repository exists and SSH key is set up"

+ exit 1

+fi

+

+# Create backup

+log "Creating backup archive..."

+if borg create \

+ "$REPOSITORY::backup-{now}" \

+ $BACKUP_PATHS \

+ $EXCLUDE_ARGS \

+ --compression lz4 \

+ --stats 2>&1 | logger -t "borg-backup"; then

+ log "Backup created successfully"

+else

+ log "ERROR: Backup creation failed"

+ exit 1

+fi

+

+# Prune old backups

+log "Pruning old backups..."

+if borg prune "$REPOSITORY" \

+ --keep-daily=7 \

+ --keep-weekly=4 \

+ --keep-monthly=6 \

+ --stats 2>&1 | logger -t "borg-backup"; then

+ log "Pruning completed successfully"

+else

+ log "WARNING: Pruning failed, but backup was successful"

+fi

+

+# Monthly repository check (on the 1st of each month)

+if [ "$(date +%d)" = "01" ]; then

+ log "Running monthly repository check..."

+ if borg check "$REPOSITORY" 2>&1 | logger -t "borg-backup"; then

+ log "Repository check passed"

+ else

+ log "WARNING: Repository check failed"

+ fi

+fi

+

+log "Backup completed successfully"

+log "========================================"
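One detail worth checking in a script of this shape is how the exclude patterns reach borg. When options are stored in a plain string and expanded unquoted, the single quotes around each pattern are passed through literally, so borg would see '*.tmp' including the quote characters. The sketch below shows the difference using a bash array; show_args is a throwaway helper I made up to print each argument in brackets.

```shell
#!/bin/bash
# Print every argument wrapped in brackets so word boundaries are visible.
# show_args is a hypothetical debugging helper.
show_args() {
    printf '[%s]' "$@"
    printf '\n'
}

# An array keeps each option and pattern as one clean argument.
EXCLUDE_ARGS=(--exclude '*.tmp' --exclude '*.log')
show_args "${EXCLUDE_ARGS[@]}"

# For comparison, a string with embedded quotes keeps the quote characters
# after word splitting:
#   BAD="--exclude '*.tmp'"
#   show_args $BAD
```

With the array form, the call in the script would become borg create ... "${EXCLUDE_ARGS[@]}", and the same approach could be applied to the list of backup paths.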

+Check the logs…

+ +journalctl -t borg-backup

+Over the weekend, I decided to extend my Box tool to incorporate file upload. There is a straightforward POST API for this with a curl one-liner given in the Box documentation. Easy.

The documentation for Cohttp-eio.Client only gives the function signature for post, but it looked pretty similar to get, which I had already been working with. The README for Cohttp gave me pause when I read this comment about multipart forms.

++ +Multipart form data is not supported out of the box but is provided by external libraries

+

Of the three options given, the second option looked abandoned, while the third said it didn’t support streaming, so I went with the first one, dinosaure/multipart_form.

+ +The landing page included an example encoder. A couple of external functions are mentioned, and I found example code for these in test/test.ml. This built, but didn’t work against Box. I ran nc -l 127.0.0.1 6789 and set that as the API endpoint for both the curl and my application. This showed I was missing the Content-Type header in the part boundary. It should be application/octet-stream.

There is a ~header parameter to part, and I hoped for a Header.add like the one in Cohttp, but sadly not. See the documentation. There is Header.content_type, but that returns the content type. How do you make it? Header.of_list requires a Field.field list.

In a bit of frustration, I decided to ask Claude. I’ve not tried it before, but I’ve seen some impressive demonstrations. My first lesson here was to be specific. Claude is not a mind reader. After a few questions, I got to this:

+ +Field.(make Content_type.name (Content_type.v `Application `Octet_stream));

+I can see why this was suggested as Content_disposition.v exists, but Content_type.v does not, nor does Field.make. Claude quickly obliged with a new version when I pointed this out but added the Content_type to the HTTP header rather than the boundary header. This went back and forth for a while, with Claude repeatedly suggesting functions which did not exist. I gave up.

On OCaml.org, the multipart-form documentation includes a Used by section that listed dream as the only (external) application which used the library. From the source, I could see Field.Field (field_name, Field.Content_type, v), which looked good.

There is a function Content_type.of_string. I used :MerlinLocate to find the source, which turned out to be an Angstrom parser which returns a Content_type.t. This led me to Content_type.make, and ultimately, I was able to write these two lines:

let v = Content_type.make `Application (`Iana_token "octet-stream") Content_type.Parameters.empty

+let p0 = part ~header:(Header.of_list [ Field (Field_name.content_type, Content_type, v) ]) ...

+As a relatively new adopter of OCaml as my language of choice, the most significant challenge I face is documentation, particularly when I find a library on opam which I want to use. I find this an interesting contrast to the others in the community, where it is often cited that tooling is the most significant barrier to adoption. In my opinion, the time taken to set up a build environment is dwarfed by the time spent in that environment iterating code.

+ +I would like to take this opportunity to thank all contributors to the opam repository for their time and effort in making packages available. This post mentions specific packages but only to illustrate my point.

diff --git a/data/planet/tunbury/box-diff-tool.md b/data/planet/tunbury/box-diff-tool.md
new file mode 100644
index 0000000000..3a6ef656c1
--- /dev/null
+++ b/data/planet/tunbury/box-diff-tool.md
@@ -0,0 +1,21 @@
+---
+title: Box Diff Tool
+description: Over the weekend, I extended mtelvers/ocaml-box-diff to include the ability
+  to upload files over 50MB. This is a more complex API which requires a call to https://upload.box.com/api/2.0/files/upload_sessions
+  by posting JSON containing the name of the file, the folder ID and the file size.
+  Box replies with various session endpoints which give the URIs to use to upload
+  the parts and to commit the file. Box also specifies the size of each part.
+url: https://www.tunbury.org/2025/04/12/box-diff/
+date: 2025-04-12T00:00:00-00:00
+preview_image: https://www.tunbury.org/images/box-logo.png
+authors:
+- Mark Elvers
+source:
+ignore:
+---
+
+Over the weekend, I extended mtelvers/ocaml-box-diff to include the ability to upload files over 50MB. This is a more complex API which requires a call to https://upload.box.com/api/2.0/files/upload_sessions by posting JSON containing the name of the file, the folder ID and the file size. Box replies with various session endpoints which give the URIs to use to upload the parts and to commit the file. Box also specifies the size of each part.

+ +Each part is uploaded with an HTTP PUT of the binary data, with header fields giving the byte range within the overall file along with the SHA for this chunk. Box replies with a part identifier. Once all the parts have been uploaded, an HTTP POST is required to the commit URI, passing a JSON array of all the parts as well as the overall SHA for the file.

+ +I was pleased to be able to reuse stream_of_file, which was written for the small file upload. Additionally, I was able to keep a running total SHA for the data uploaded so far using Sha1.update_string ctx chunk, meaning that I did not need to recompute the overall file SHA at the end.
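The byte range for each part follows directly from the file size and the part size that Box returns. As a rough sketch of that arithmetic, assuming the range is expressed in the standard HTTP Content-Range form, the loop below prints one range per part; part_ranges is a name I made up and is not taken from ocaml-box-diff.

```shell
# Print one "bytes first-last/total" range per part of an upload.
# part_ranges is a hypothetical helper; sizes are in bytes.
part_ranges() {
    total=$1
    part_size=$2
    # A non-positive part size would never advance the offset.
    [ "$part_size" -gt 0 ] || return 1
    offset=0
    while [ "$offset" -lt "$total" ]; do
        last=$((offset + part_size - 1))
        # The final part is usually shorter than the others.
        if [ "$last" -ge "$total" ]; then
            last=$((total - 1))
        fi
        echo "bytes ${offset}-${last}/${total}"
        offset=$((last + 1))
    done
}

# Example: a 10-byte file split into 4-byte parts
part_ranges 10 4
# bytes 0-3/10
# bytes 4-7/10
# bytes 8-9/10
```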

Everything was going fine until I ran out of disk space. My NVMe, C: drive, is only 256GB, but I have a large, 1.7TB SSD available as D:. How trivial, change a few paths and carry on, but it wasn’t that simple, or was it?

Distilling the problem down to the minimum and excluding all code written by me, the following command fails, but changing src=d:\cache\opam to src=c:\cache\opam works. It’s not the content, as it’s just an empty folder.

ctr run --rm --cni -user ContainerAdministrator -mount type=bind,src=d:\cache\opam,dst=c:\Users\ContainerAdministrator\AppData\Local\opam mcr.microsoft.com/windows/servercore:ltsc2022 my-container cmd /c "curl.exe -L -o c:\Windows\opam.exe https://github.com/ocaml/opam/releases/download/2.3.0/opam-2.3.0-x86_64-windows.exe && opam.exe init --debug-level=3 -y"

+The failure point is creating the lock file config.lock. Checking the code, the log entry is written before the lock is acquired. If c:\Users\ContainerAdministrator\AppData\Local\opam is not a bind mount, or the bind mount is on C:, then it works.

01:26.722 CLIENT updating repository state

+01:26.722 GSTATE LOAD-GLOBAL-STATE @ C:\Users\ContainerAdministrator\AppData\Local\opam

+01:26.723 SYSTEM LOCK C:\Users\ContainerAdministrator\AppData\Local\opam\lock (none => read)

+01:26.723 SYSTEM LOCK C:\Users\ContainerAdministrator\AppData\Local\opam\config.lock (none => write)

+Suffice it to say, I spent a long time trying to resolve this. I’ll mention a couple of interesting points that appeared along the way. Firstly, files created on D: effectively appear as hard links, and the Update Sequence Number, USN, is 0.

C:\> fsutil file layout d:\cache\opam\lock

+

+********* File 0x000400000001d251 *********

+File reference number : 0x000400000001d251

+File attributes : 0x00000020: Archive

+File entry flags : 0x00000000

+Link (ParentID: Name) : 0x000c00000000002d: HLINK Name : \cache\opam\lock

+...

+LastUsn : 0

+...

+The reason behind this is down to Windows defaults:

- Windows still likes to create the legacy 8.3 MS-DOS file names on the system volume, C:, which explains the difference between HLINK and NTFS+DOS. Running fsutil 8dot3name set d: 0 will enable the creation of the old-style file names.
- Drive C: has a USN journal created automatically, as it’s required for Windows to operate, but it isn’t created by default on other drives. Running fsutil usn createjournal d: m=32000000 a=8000000 will create the journal.

C:\> fsutil file layout c:\cache\opam\lock

+

+********* File 0x000300000002f382 *********

+File reference number : 0x000300000002f382

+File attributes : 0x00000020: Archive

+File entry flags : 0x00000000

+Link (ParentID: Name) : 0x000b0000000271d1: NTFS+DOS Name: \cache\opam\lock

+...

+LastUsn : 16,897,595,224

+...

+Sadly, neither of these insights makes any difference to my problem. I did notice that containerd 2.1.3 had been released, where I had been using 2.1.1. Upgrading didn’t fix the issue, but it did affect how the network namespaces were created. More later.

I decided to both ignore the problem and try it on another machine. After all, this problem was only a problem because my C: was too small. I created a QEMU VM with a 40GB C: and a 1TB D: and installed everything, and it worked fine with the bind mount on D: even without any of the above tuning and even with D: formatted using ReFS, rather than NTFS.

Trying on another physical machine with a single large spinning disk as C: also worked as anticipated.

In both of these new installations, I used containerd 2.1.3 and noticed that the behaviour I had come to rely upon seemed to have changed. If you recall, in this post, I found the network namespace GUID by running ctr run on a standard Windows container and then ctr container info in another window. This no longer worked reliably, as the namespace was removed when the container exited. Perhaps it always should have been?

I need to find out how to create these namespaces. PowerShell has a cmdlet Get-HnsNetwork, but none of the GUID values there match the currently running namespaces I observe from ctr container info. The source code of containerd is on GitHub.

When you pass --cni to the ctr command, it populates the network namespace from NewNetNS. Snippet from cmd/ctr/commands/run/run_windows.go:

if cliContext.Bool("cni") {

+ ns, err := netns.NewNetNS("")

+ if err != nil {

+ return nil, err

+ }

+ opts = append(opts, oci.WithWindowsNetworkNamespace(ns.GetPath()))

+ }

+NewNetNS is defined in pkg/netns/netns_windows.go

// NetNS holds network namespace for sandbox

+type NetNS struct {

+ path string

+}

+

+// NewNetNS creates a network namespace for the sandbox.

+func NewNetNS(baseDir string) (*NetNS, error) {

+ temp := hcn.HostComputeNamespace{}

+ hcnNamespace, err := temp.Create()

+ if err != nil {

+ return nil, err

+ }

+

+ return &NetNS{path: hcnNamespace.Id}, nil

+}

+Following the thread, and cutting out a few steps in the interest of brevity, we end up in vendor/github.com/Microsoft/hcsshim/hcn/zsyscall_windows.go which calls a Win32 API.

func _hcnCreateNamespace(id *_guid, settings *uint16, namespace *hcnNamespace, result **uint16) (hr error) {

+ hr = procHcnCreateNamespace.Find()

+ if hr != nil {

+ return

+ }

+ r0, _, _ := syscall.SyscallN(procHcnCreateNamespace.Addr(), uintptr(unsafe.Pointer(id)), uintptr(unsafe.Pointer(settings)), uintptr(unsafe.Pointer(namespace)), uintptr(unsafe.Pointer(result)))

+ if int32(r0) < 0 {

+ if r0&0x1fff0000 == 0x00070000 {

+ r0 &= 0xffff

+ }

+ hr = syscall.Errno(r0)

+ }

+ return

+}

+PowerShell provides Get-HnsNamespace to list available namespaces. These are the ~~droids~~ values I’ve been looking for to put in config.json! However, by default there are no cmdlets to create them. The installation PowerShell script for containerd pulls in hns.psm1, which has a lot of interesting cmdlets, such as New-HnsNetwork, but not a cmdlet to create a namespace. There is also hns.v2.psm1, which does have New-HnsNamespace.

PS C:\Users\Administrator> curl.exe -o hns.v2.psm1 -L https://raw.githubusercontent.com/microsoft/SDN/refs/heads/master/Kubernetes/windows/hns.v2.psm1
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 89329  100 89329    0     0   349k      0 --:--:-- --:--:-- --:--:--  353k

PS C:\Users\Administrator> Import-Module .\hns.v2.psm1
WARNING: The names of some imported commands from the module 'hns.v2' include unapproved verbs that might make them less discoverable. To find the commands with unapproved verbs, run the Import-Module command again with the Verbose parameter. For a list of approved verbs, type Get-Verb.

PS C:\Users\Administrator> New-HnsNamespace
HcnCreateNamespace -- HRESULT: 2151350299. Result: {"Success":false,"Error":"Invalid JSON document string. {{CreateWithCompartment,UnknownField}}","ErrorCode":2151350299}
At C:\Users\Administrator\hns.v2.psm1:2392 char:13
+             throw $errString
+             ~~~~~~~~~~~~~~~~
    + CategoryInfo          : OperationStopped: (HcnCreateNamesp...de":2151350299}:String) [], RuntimeException
    + FullyQualifiedErrorId : HcnCreateNamespace -- HRESULT: 2151350299. Result: {"Success":false,"Error":"Invalid JSON document string. {{CreateWithCompartment,UnknownField}}","ErrorCode":2151350299}
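The HRESULT in that error is printed in decimal, which hides its structure. As a minimal sketch, assuming the usual HRESULT layout and borrowing the 0x1fff facility mask from the Go wrapper above (hresult_decode is a hypothetical helper name, not part of any tool mentioned here), the value can be split into its fields like this:

```shell
#!/usr/bin/env bash
# Split a decimal HRESULT into severity, facility and code.
# hresult_decode is a hypothetical helper written for this illustration.
hresult_decode() {
    local hr=$(( $1 & 0xFFFFFFFF ))
    local severity=$(( (hr >> 31) & 0x1 ))     # 1 means failure
    local facility=$(( (hr >> 16) & 0x1FFF ))  # same mask width as the Go wrapper
    local code=$(( hr & 0xFFFF ))              # facility-specific error code
    printf 'hex=0x%08X severity=%d facility=0x%X code=0x%X\n' \
        "$hr" "$severity" "$facility" "$code"
}

hresult_decode 2151350299
```

For 2151350299 this prints hex=0x803B001B severity=1 facility=0x3B code=0x1B. The facility is not 7 (Win32), so the check in the Go wrapper would leave the value intact rather than reduce it to a plain Win32 error code.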

After a lot of frustration, I decided to have a go at calling the Win32 API from OCaml. This resulted in mtelvers/hcn-namespace, which allows me to create the namespaces by running hcn-namespace create. These namespaces appear in the output from Get-HnsNamespace and work correctly in config.json.

Run hcn-namespace.exe create, then set "networkNamespace": "<GUID>" in config.json to the GUID it prints, and start the container with ctr run --rm -cni --config config.json.

The log from an OBuilder job starts with the steps needed to reproduce the job locally. This boilerplate output assumes that all OBuilder jobs start from a Docker base image, but on some operating systems, such as FreeBSD and macOS, OBuilder uses ZFS base images. On OpenBSD and Windows, it uses QEMU images. The situation is further complicated when the issue only affects a specific architecture that may be unavailable to the user.

2025-05-08 13:29.37: New job: build bitwuzla-cxx.0.7.0, using opam 2.3
                              from https://github.com/ocaml/opam-repository.git#refs/pull/27768/head (55a47416d532dc829d9111297970934a21a1b1c4)
                              on macos-homebrew-ocaml-4.14/amd64

To reproduce locally:

cd $(mktemp -d)
git clone --recursive "https://github.com/ocaml/opam-repository.git" && cd "opam-repository" && git fetch origin "refs/pull/27768/head" && git reset --hard 55a47416
git fetch origin master
git merge --no-edit b8a7f49af3f606bf8a22869a1b52b250dd90092e
cat > ../Dockerfile <<'END-OF-DOCKERFILE'

FROM macos-homebrew-ocaml-4.14
USER 1000:1000
RUN ln -f ~/local/bin/opam-2.3 ~/local/bin/opam
RUN opam init --reinit -ni
RUN opam option solver=builtin-0install && opam config report
ENV OPAMDOWNLOADJOBS="1"
ENV OPAMERRLOGLEN="0"
ENV OPAMPRECISETRACKING="1"
ENV CI="true"
ENV OPAM_REPO_CI="true"
RUN rm -rf opam-repository/
COPY --chown=1000:1000 . opam-repository/
RUN opam repository set-url -k local --strict default opam-repository/
RUN opam update --depexts || true
RUN opam pin add -k version -yn bitwuzla-cxx.0.7.0 0.7.0
RUN opam reinstall bitwuzla-cxx.0.7.0; \
    res=$?; \
    test "$res" != 31 && exit "$res"; \
    export OPAMCLI=2.0; \
    build_dir=$(opam var prefix)/.opam-switch/build; \
    failed=$(ls "$build_dir"); \
    partial_fails=""; \
    for pkg in $failed; do \
      if opam show -f x-ci-accept-failures: "$pkg" | grep -qF "\"macos-homebrew\""; then \
        echo "A package failed and has been disabled for CI using the 'x-ci-accept-failures' field."; \
      fi; \
      test "$pkg" != 'bitwuzla-cxx.0.7.0' && partial_fails="$partial_fails $pkg"; \
    done; \
    test "${partial_fails}" != "" && echo "opam-repo-ci detected dependencies failing: ${partial_fails}"; \
    exit 1


END-OF-DOCKERFILE
docker build -f ../Dockerfile .

It is therefore difficult to diagnose issues on these operating systems and on esoteric architectures. Is the problem with the CI system or with the job itself?

My approach is to get myself into an interactive shell at the point in the build where the failure occurs. On Linux and FreeBSD, the worker's log is available in /var/log/syslog and /var/log/messages respectively. On macOS, this log is written to ocluster.log. macOS workers are single-threaded, so the worker must be paused before proceeding.

Each step in an OBuilder job consists of taking a snapshot of the previous layer, running a command in that layer, and keeping or discarding the layer depending on the command’s success or failure. On macOS, layers are ZFS snapshots mounted over the Homebrew directory and the CI users’ home directory. We can extract the appropriate command from the logs.

2025-05-08 14:31.17 application [INFO] Exec "zfs" "clone" "-o" "canmount=noauto" "--" "obuilder/result/a67e6d3b460fa52b5c57581e7c01fa74ddca0a0b5462fef34103a09e87f3feec@snap" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40"
2025-05-08 14:31.17 application [INFO] Exec "zfs" "mount" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40"
2025-05-08 14:31.17 application [INFO] Exec "zfs" "clone" "-o" "mountpoint=none" "--" "obuilder/result/a67e6d3b460fa52b5c57581e7c01fa74ddca0a0b5462fef34103a09e87f3feec/brew@snap" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40/brew"
2025-05-08 14:31.17 application [INFO] Exec "zfs" "clone" "-o" "mountpoint=none" "--" "obuilder/result/a67e6d3b460fa52b5c57581e7c01fa74ddca0a0b5462fef34103a09e87f3feec/home@snap" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40/home"
cannot open 'obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40@snap': dataset does not exist
2025-05-08 14:31.17 application [INFO] Exec "zfs" "clone" "--" "obuilder/cache/c-opam-archives@snap" "obuilder/cache-tmp/8608-c-opam-archives"
2025-05-08 14:31.17 application [INFO] Exec "zfs" "clone" "--" "obuilder/cache/c-homebrew@snap" "obuilder/cache-tmp/8609-c-homebrew"
2025-05-08 14:31.18 obuilder [INFO] result_tmp = /Volumes/obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40
2025-05-08 14:31.18 application [INFO] Exec "zfs" "set" "mountpoint=/Users/mac1000" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40/home"
2025-05-08 14:31.18 application [INFO] Exec "zfs" "set" "mountpoint=/usr/local" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40/brew"
2025-05-08 14:31.18 obuilder [INFO] src = /Volumes/obuilder/cache-tmp/8608-c-opam-archives, dst = /Users/mac1000/.opam/download-cache, type rw
2025-05-08 14:31.18 application [INFO] Exec "zfs" "set" "mountpoint=/Users/mac1000/.opam/download-cache" "obuilder/cache-tmp/8608-c-opam-archives"
Unmount successful for /Volumes/obuilder/cache-tmp/8608-c-opam-archives
2025-05-08 14:31.18 obuilder [INFO] src = /Volumes/obuilder/cache-tmp/8609-c-homebrew, dst = /Users/mac1000/Library/Caches/Homebrew, type rw
2025-05-08 14:31.18 application [INFO] Exec "zfs" "set" "mountpoint=/Users/mac1000/Library/Caches/Homebrew" "obuilder/cache-tmp/8609-c-homebrew"
Unmount successful for /Volumes/obuilder/cache-tmp/8609-c-homebrew
2025-05-08 14:31.19 application [INFO] Exec "sudo" "dscl" "." "list" "/Users"
2025-05-08 14:31.19 application [INFO] Exec "sudo" "-u" "mac1000" "-i" "getconf" "DARWIN_USER_TEMP_DIR"
2025-05-08 14:31.19 application [INFO] Fork exec "sudo" "su" "-l" "mac1000" "-c" "--" "source ~/.obuilder_profile.sh && env 'TMPDIR=/var/folders/s_/z7_t3bvn5txfn81hk9p3ntfw0000z8/T/' 'OPAM_REPO_CI=true' 'CI=true' 'OPAMPRECISETRACKING=1' 'OPAMERRLOGLEN=0' 'OPAMDOWNLOADJOBS=1' "$0" "$@"" "/usr/bin/env" "bash" "-c" "opam reinstall bitwuzla-cxx.0.7.0;
    res=$?;
    test "$res" != 31 && exit "$res";
    export OPAMCLI=2.0;
    build_dir=$(opam var prefix)/.opam-switch/build;
    failed=$(ls "$build_dir");
    partial_fails="";
    for pkg in $failed; do
      if opam show -f x-ci-accept-failures: "$pkg" | grep -qF "\"macos-homebrew\""; then
        echo "A package failed and has been disabled for CI using the 'x-ci-accept-failures' field.";
      fi;
      test "$pkg" != 'bitwuzla-cxx.0.7.0' && partial_fails="$partial_fails $pkg";
    done;
    test "${partial_fails}" != "" && echo "opam-repo-ci detected dependencies failing: ${partial_fails}";
    exit 1"
2025-05-08 14:31.28 worker [INFO] OBuilder partition: 27% free, 2081 items
2025-05-08 14:31.58 worker [INFO] OBuilder partition: 27% free, 2081 items
2025-05-08 14:32.28 worker [INFO] OBuilder partition: 27% free, 2081 items
2025-05-08 14:32.43 application [INFO] Exec "zfs" "inherit" "mountpoint" "obuilder/cache-tmp/8608-c-opam-archives"
Unmount successful for /Users/mac1000/.opam/download-cache
2025-05-08 14:32.44 application [INFO] Exec "zfs" "inherit" "mountpoint" "obuilder/cache-tmp/8609-c-homebrew"
Unmount successful for /Users/mac1000/Library/Caches/Homebrew
2025-05-08 14:32.45 application [INFO] Exec "zfs" "set" "mountpoint=none" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40/home"
Unmount successful for /Users/mac1000
2025-05-08 14:32.45 application [INFO] Exec "zfs" "set" "mountpoint=none" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40/brew"
Unmount successful for /usr/local
2025-05-08 14:32.46 application [INFO] Exec "zfs" "rename" "--" "obuilder/cache/c-homebrew" "obuilder/cache-tmp/8610-c-homebrew"
Unmount successful for /Volumes/obuilder/cache/c-homebrew
2025-05-08 14:32.46 application [INFO] Exec "zfs" "promote" "obuilder/cache-tmp/8609-c-homebrew"
2025-05-08 14:32.46 application [INFO] Exec "zfs" "destroy" "-f" "--" "obuilder/cache-tmp/8610-c-homebrew"
Unmount successful for /Volumes/obuilder/cache-tmp/8610-c-homebrew
2025-05-08 14:32.48 application [INFO] Exec "zfs" "rename" "--" "obuilder/cache-tmp/8609-c-homebrew@snap" "obuilder/cache-tmp/8609-c-homebrew@old-2152"
2025-05-08 14:32.48 application [INFO] Exec "zfs" "destroy" "-d" "--" "obuilder/cache-tmp/8609-c-homebrew@old-2152"
2025-05-08 14:32.48 application [INFO] Exec "zfs" "snapshot" "-r" "--" "obuilder/cache-tmp/8609-c-homebrew@snap"
2025-05-08 14:32.48 application [INFO] Exec "zfs" "rename" "--" "obuilder/cache-tmp/8609-c-homebrew" "obuilder/cache/c-homebrew"
Unmount successful for /Volumes/obuilder/cache-tmp/8609-c-homebrew
2025-05-08 14:32.49 application [INFO] Exec "zfs" "rename" "--" "obuilder/cache/c-opam-archives" "obuilder/cache-tmp/8611-c-opam-archives"
Unmount successful for /Volumes/obuilder/cache/c-opam-archives
2025-05-08 14:32.50 application [INFO] Exec "zfs" "promote" "obuilder/cache-tmp/8608-c-opam-archives"
2025-05-08 14:32.50 application [INFO] Exec "zfs" "destroy" "-f" "--" "obuilder/cache-tmp/8611-c-opam-archives"
Unmount successful for /Volumes/obuilder/cache-tmp/8611-c-opam-archives
2025-05-08 14:32.51 application [INFO] Exec "zfs" "rename" "--" "obuilder/cache-tmp/8608-c-opam-archives@snap" "obuilder/cache-tmp/8608-c-opam-archives@old-2152"
2025-05-08 14:32.51 application [INFO] Exec "zfs" "destroy" "-d" "--" "obuilder/cache-tmp/8608-c-opam-archives@old-2152"
2025-05-08 14:32.51 application [INFO] Exec "zfs" "snapshot" "-r" "--" "obuilder/cache-tmp/8608-c-opam-archives@snap"
2025-05-08 14:32.52 application [INFO] Exec "zfs" "rename" "--" "obuilder/cache-tmp/8608-c-opam-archives" "obuilder/cache/c-opam-archives"
Unmount successful for /Volumes/obuilder/cache-tmp/8608-c-opam-archives
2025-05-08 14:32.52 application [INFO] Exec "zfs" "destroy" "-r" "-f" "--" "obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40"
Unmount successful for /Volumes/obuilder/result/af09425cd7744c7b32ed000b11db90295142f3d3430fddb594932d5c02343b40
2025-05-08 14:32.58 worker [INFO] OBuilder partition: 27% free, 2081 items
2025-05-08 14:33.04 worker [INFO] Job failed: "/usr/bin/env" "bash" "-c" "opam reinstall bitwuzla-cxx.0.7.0;
    res=$?;
    test "$res" != 31 && exit "$res";
    export OPAMCLI=2.0;
    build_dir=$(opam var prefix)/.opam-switch/build;
    failed=$(ls "$build_dir");
    partial_fails="";
    for pkg in $failed; do
      if opam show -f x-ci-accept-failures: "$pkg" | grep -qF "\"macos-homebrew\""; then
        echo "A package failed and has been disabled for CI using the 'x-ci-accept-failures' field.";
      fi;
      test "$pkg" != 'bitwuzla-cxx.0.7.0' && partial_fails="$partial_fails $pkg";
    done;
    test "${partial_fails}" != "" && echo "opam-repo-ci detected dependencies failing: ${partial_fails}";
    exit 1" failed with exit status 1

Run each of the Exec commands at the command prompt, up to but not including the Fork exec. We do need to run the Fork exec command too, but we want an interactive shell, so let’s change the final part of the command to bash:

sudo su -l mac1000 -c -- "source ~/.obuilder_profile.sh && env 'TMPDIR=/var/folders/s_/z7_t3bvn5txfn81hk9p3ntfw0000z8/T/' 'OPAM_REPO_CI=true' 'CI=true' 'OPAMPRECISETRACKING=1' 'OPAMERRLOGLEN=0' 'OPAMDOWNLOADJOBS=1' bash"
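Retyping the Exec lines by hand is error-prone. As a rough sketch, assuming the log excerpt for the failed job has been saved to a file of its own (the helper name exec_commands and the file name job.log are hypothetical), awk can print every Exec command up to the first Fork exec:

```shell
#!/usr/bin/env bash
# Print the commands OBuilder ran before it started the build step.
# exec_commands is a hypothetical helper; its argument is a file holding
# only the log excerpt for the failed job.
exec_commands() {
    awk '
        / \[INFO\] Fork exec / { exit }   # stop at the build command itself
        / \[INFO\] Exec / {
            sub(/^.* \[INFO\] Exec /, "") # drop timestamp, source and level
            print
        }
    ' "$1"
}

# Usage (job.log is an assumed file name):
#   exec_commands job.log
```

Each output line is the logged command with its arguments still double-quoted, so it can be reviewed and then pasted at the prompt. The zfs arguments in this log contain nothing the shell would expand, but that is worth checking before running anything.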