.

-Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# Cite this Library

-See the License for the specific language governing permissions and limitations under the License.

+If you found this library useful for your research article, blog post, or product, we would be grateful if you would cite it using the following bibtex entry:

+```

+@software{pycteam2025concept,

+ author = {Barbiero, Pietro and De Felice, Giovanni and Espinosa Zarlenga, Mateo and Ciravegna, Gabriele and Dominici, Gabriele and De Santis, Francesco and Casanova, Arianna and Debot, David and Giannini, Francesco and Diligenti, Michelangelo and Marra, Giuseppe},

+ license = {Apache 2.0},

+ month = {3},

+ title = {{PyTorch Concepts}},

+ url = {https://github.com/pyc-team/pytorch_concepts},

+ year = {2025}

+}

+```

+Reference authors: [Pietro Barbiero](http://www.pietrobarbiero.eu/), [Giovanni De Felice](https://gdefe.github.io/), and [Mateo Espinosa Zarlenga](https://hairyballtheorem.com/).

-## Cite this library

+---

-If you found this library useful for your blog post, research article or product, we would be grateful if you would cite it like this:

+# Funding

-```

-Barbiero P., Ciravegna G., Debot D., Diligenti M.,

-Dominici G., Espinosa Zarlenga M., Giannini F., Marra G. (2024).

-Concept-based Interpretable Deep Learning in Python.

-https://pyc-team.github.io/pyc-book/intro.html

-```

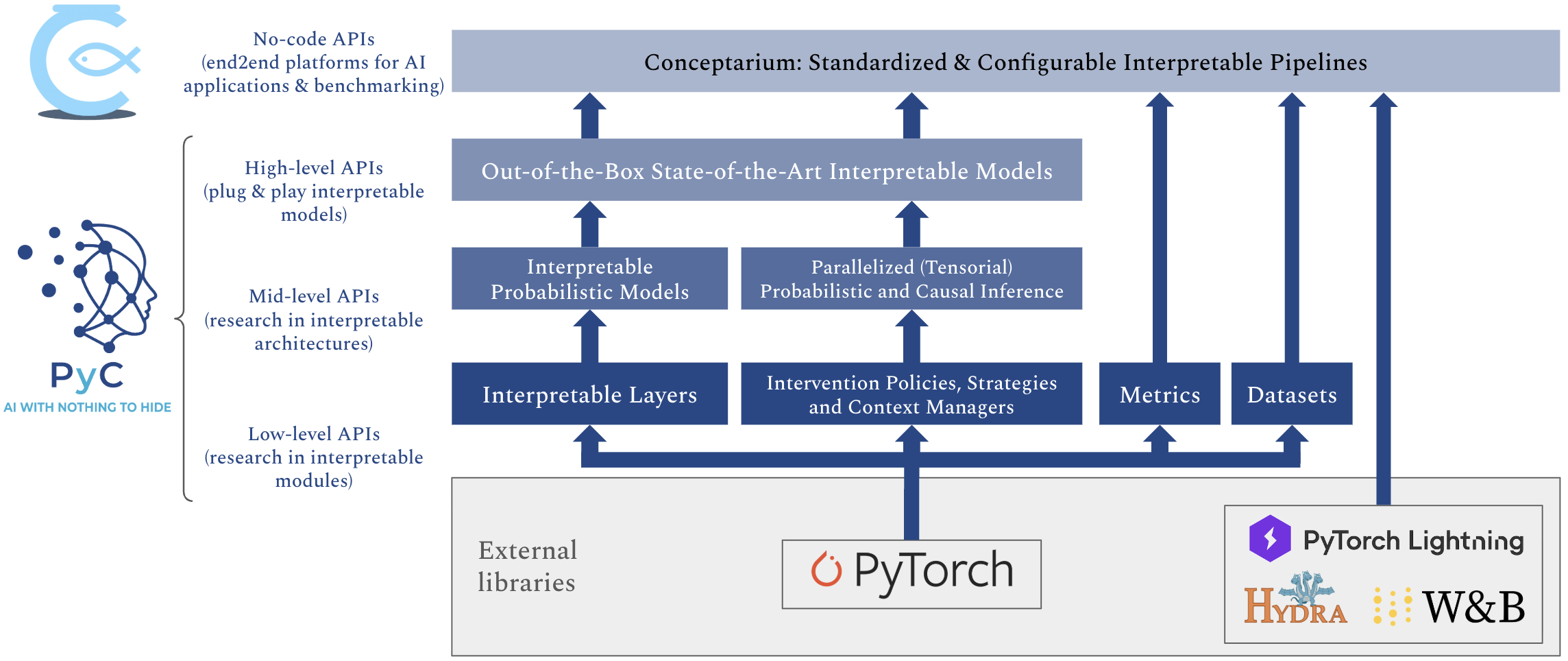

+This project is supported by the following organizations:

-Or use the following bibtex entry:

+

+

-```

-@book{pycteam2024concept,

- title = {Concept-based Interpretable Deep Learning in Python},

- author = {Pietro Barbiero, Gabriele Ciravegna, David Debot, Michelangelo Diligenti, Gabriele Dominici, Mateo Espinosa Zarlenga, Francesco Giannini, Giuseppe Marra},

- year = {2024},

- url = {https://pyc-team.github.io/pyc-book/intro.html}

-}

-```

diff --git a/SECURITY.md b/SECURITY.md

new file mode 100644

index 0000000..740f6bf

--- /dev/null

+++ b/SECURITY.md

@@ -0,0 +1,22 @@

+# PyC Security Policy

+

+The PyC Team takes security seriously and appreciates responsible reports from the community.

+

+## Reporting a Vulnerability

+If you believe you’ve found a security issue in PyC, please contact the PyC Team privately instead of opening a public issue.

+

+You can reach us at our email: **pyc.devteam@gmail.com**.

+

+Please include:

+- A short description of the issue

+- Steps to reproduce (if possible)

+- Any details that might help us understand the problem

+

+## Our Approach

+We will review security reports as time allows.

+Because PyC is maintained with limited time and resources, we **cannot make specific guarantees** about response times or patch timelines.

+

+That said, we will do our best to look into legitimate reports and address important issues when we can.

+

+## Thank You

+Thank you for helping keep PyC safe and reliable.

diff --git a/codecov.yml b/codecov.yml

new file mode 100644

index 0000000..c07acbd

--- /dev/null

+++ b/codecov.yml

@@ -0,0 +1,34 @@

+codecov:

+ require_ci_to_pass: yes

+

+coverage:

+ precision: 2

+ round: down

+ range: "70...100"

+

+ status:

+ project:

+ default:

+ target: auto

+ threshold: 1%

+ if_ci_failed: error

+

+ patch:

+ default:

+ target: 80%

+ threshold: 5%

+

+comment:

+ layout: "reach,diff,flags,files,footer"

+ behavior: default

+ require_changes: no

+

+ignore:

+ - "tests/"

+ - "examples/"

+ - "doc/"

+ - "conceptarium/"

+ - "setup.py"

+ - "**/__pycache__"

+ - "**/*.pyc"

+

diff --git a/conceptarium/README.md b/conceptarium/README.md

new file mode 100644

index 0000000..a3023a7

--- /dev/null

+++ b/conceptarium/README.md

@@ -0,0 +1,324 @@

+

+image/svg+xml

+

+

+

+ image/svg+xml

+

+

+

+

+

+

+ Hydra-Full-Color

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/doc/_static/img/logos/lightning.svg b/doc/_static/img/logos/lightning.svg

new file mode 100644

index 0000000..39531f9

--- /dev/null

+++ b/doc/_static/img/logos/lightning.svg

@@ -0,0 +1,9 @@

+

+

+

+

+

+

diff --git a/doc/_static/img/logos/numpy.svg b/doc/_static/img/logos/numpy.svg

new file mode 100644

index 0000000..cb1abac

--- /dev/null

+++ b/doc/_static/img/logos/numpy.svg

@@ -0,0 +1,7 @@

+

+

+

+

+

diff --git a/doc/_static/img/logos/pandas.svg b/doc/_static/img/logos/pandas.svg

new file mode 100644

index 0000000..1451f57

--- /dev/null

+++ b/doc/_static/img/logos/pandas.svg

@@ -0,0 +1,111 @@

+

+

+

+

+

+ image/svg+xml

+

+

+

+

+

+

+ Artboard 61

+

diff --git a/doc/_static/img/logos/pyc.svg b/doc/_static/img/logos/pyc.svg

new file mode 100644

index 0000000..73a5d7a

--- /dev/null

+++ b/doc/_static/img/logos/pyc.svg

@@ -0,0 +1 @@

+

+

+

+

+

+

+

+

+

+

diff --git a/doc/_static/img/logos/pytorch.svg b/doc/_static/img/logos/pytorch.svg

new file mode 100644

index 0000000..ef37c11

--- /dev/null

+++ b/doc/_static/img/logos/pytorch.svg

@@ -0,0 +1 @@

+

+

diff --git a/doc/_static/img/pyc_logo_transparent.png b/doc/_static/img/pyc_logo_transparent.png

new file mode 100644

index 0000000..07773eb

Binary files /dev/null and b/doc/_static/img/pyc_logo_transparent.png differ

diff --git a/doc/_static/img/pyc_logo_transparent_b.png b/doc/_static/img/pyc_logo_transparent_b.png

new file mode 100644

index 0000000..ed300c0

Binary files /dev/null and b/doc/_static/img/pyc_logo_transparent_b.png differ

diff --git a/doc/_static/img/pyc_logo_transparent_w.png b/doc/_static/img/pyc_logo_transparent_w.png

new file mode 100644

index 0000000..f2df22d

Binary files /dev/null and b/doc/_static/img/pyc_logo_transparent_w.png differ

diff --git a/doc/_static/img/pyc_software_stack.png b/doc/_static/img/pyc_software_stack.png

new file mode 100644

index 0000000..c8593eb

Binary files /dev/null and b/doc/_static/img/pyc_software_stack.png differ

diff --git a/doc/_static/js/theme-logo-switcher.js b/doc/_static/js/theme-logo-switcher.js

new file mode 100644

index 0000000..069b0a5

--- /dev/null

+++ b/doc/_static/js/theme-logo-switcher.js

@@ -0,0 +1,52 @@

+// Adaptive logo switcher for light/dark theme

+(function() {

+ 'use strict';

+

+ // Logo paths

+ const LIGHT_LOGO = '_static/img/pyc_logo_transparent.png';

+ const DARK_LOGO = '_static/img/pyc_logo_transparent_w.png';

+

+ function updateLogos() {

+ // Get current theme from data-theme attribute

+ const theme = document.documentElement.getAttribute('data-theme');

+ const isDark = theme === 'dark';

+

+ // Update sidebar logo

+ const sidebarLogo = document.querySelector('.sidebar-logo-img');

+ if (sidebarLogo) {

+ sidebarLogo.src = isDark ? DARK_LOGO : LIGHT_LOGO;

+ }

+

+ // Update any other logos with the adaptive class

+ const adaptiveLogos = document.querySelectorAll('.adaptive-logo');

+ adaptiveLogos.forEach(logo => {

+ logo.src = isDark ? DARK_LOGO : LIGHT_LOGO;

+ });

+ }

+

+ // Initial update

+ updateLogos();

+

+ // Watch for theme changes

+ const observer = new MutationObserver(function(mutations) {

+ mutations.forEach(function(mutation) {

+ if (mutation.type === 'attributes' && mutation.attributeName === 'data-theme') {

+ updateLogos();

+ }

+ });

+ });

+

+ // Start observing

+ observer.observe(document.documentElement, {

+ attributes: true,

+ attributeFilter: ['data-theme']

+ });

+

+ // Also listen for theme toggle button clicks (backup method)

+ document.addEventListener('click', function(e) {

+ if (e.target.closest('.theme-toggle')) {

+ setTimeout(updateLogos, 100);

+ }

+ });

+})();

+

diff --git a/doc/_templates/sidebar/brand.html b/doc/_templates/sidebar/brand.html

new file mode 100644

index 0000000..62199a1

--- /dev/null

+++ b/doc/_templates/sidebar/brand.html

@@ -0,0 +1,10 @@

+{% extends "!sidebar/brand.html" %}

+

+{% block brand_content %}

+

+{{ super() }}

+{% endblock %}

diff --git a/doc/conf.py b/doc/conf.py

index 6e2ce82..c61066c 100644

--- a/doc/conf.py

+++ b/doc/conf.py

@@ -1,8 +1,4 @@

-# Configuration file for the Sphinx documentation builder.

-#

-# This file only contains a selection of the most common options. For a full

-# list see the documentation:

-# https://www.sphinx-doc.org/en/master/usage/configuration.html

+# Configuration file for the Sphinx documentation adapted from TorchSpatiotemporal project (https://github.com/TorchSpatiotemporal/tsl/blob/main/docs/source/conf.py).

# -- Path setup --------------------------------------------------------------

@@ -14,19 +10,23 @@

# import sys

# sys.path.insert(0, os.path.abspath('.'))

import datetime

+import doctest

import os

import sys

+

+from docutils import nodes

+

sys.path.insert(0, os.path.abspath('../'))

-import torch_concepts

+import torch_concepts as pyc

# -- Project information -----------------------------------------------------

project = 'pytorch_concepts'

author = 'PyC Team'

-copyright = '{}, {}'.format(datetime.datetime.now().year, author)

+copyright = f'{datetime.datetime.now().year}, {author}'

-version = torch_concepts.__version__

-release = torch_concepts.__version__

+version = pyc.__version__

+release = pyc.__version__

# -- General configuration ---------------------------------------------------

@@ -36,42 +36,176 @@

# Add any Sphinx extension module names here, as strings. They can be

# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

# ones.

-extensions = ['sphinx.ext.autodoc', 'sphinx.ext.coverage', 'sphinx_rtd_theme']

+extensions = [

+ 'sphinx.ext.autodoc',

+ 'sphinx.ext.autosummary',

+ 'sphinx.ext.doctest',

+ 'sphinx.ext.intersphinx',

+ 'sphinx.ext.mathjax',

+ 'sphinx.ext.napoleon',

+ 'sphinx.ext.viewcode',

+ 'sphinx_design',

+ 'sphinxext.opengraph',

+ 'sphinx_copybutton',

+ 'myst_nb',

+ 'hoverxref.extension',

+]

+

+autosummary_generate = True

+autosummary_imported_members = True

+

+source_suffix = '.rst'

+master_doc = 'index'

-# Add any paths that contain templates here, relative to this directory.

templates_path = ['_templates']

-# List of patterns, relative to source directory, that match files and

-# directories to ignore when looking for source files.

-# This pattern also affects html_static_path and html_extra_path.

-exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

+doctest_default_flags = doctest.NORMALIZE_WHITESPACE

+autodoc_member_order = 'bysource'

+rst_context = {'pyc': pyc}

-# -- Options for HTML output -------------------------------------------------

+add_module_names = False

+# autodoc_inherit_docstrings = False

-# The theme to use for HTML and HTML Help pages. See the documentation for

-# a list of builtin themes.

+# exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

+

+napoleon_custom_sections = [("Shape", "params_style"),

+ ("Shapes", "params_style")]

+

+numfig = True # Enumerate figures and tables

+

+# Ensure proper navigation tree building

+html_show_sourcelink = True

+html_sidebars = {

+ "**": [

+ "sidebar/brand.html",

+ "sidebar/search.html",

+ "sidebar/scroll-start.html",

+ "sidebar/navigation.html",

+ "sidebar/ethical-ads.html",

+ "sidebar/scroll-end.html",

+ ]

+}

+

+# -- Options for intersphinx -------------------------------------------------

#

-# html_theme = 'alabaster'

-html_theme = "sphinx_rtd_theme"

-html_logo = './_static/img/pyc_logo.png'

+

+intersphinx_mapping = {

+ 'python': ('https://docs.python.org/3/', None),

+ 'numpy': ('https://numpy.org/doc/stable/', None),

+ 'pd': ('https://pandas.pydata.org/docs/', None),

+ 'PyTorch': ('https://pytorch.org/docs/stable/', None),

+ 'pytorch_lightning': ('https://lightning.ai/docs/pytorch/latest/', None),

+ 'PyG': ('https://pytorch-geometric.readthedocs.io/en/latest/', None)

+}

+

+# -- Theme options -----------------------------------------------------------

+#

+

+html_title = "Torch Concepts"

+html_theme = 'furo'

+language = "en"

+

+html_baseurl = ''

+html_static_path = ['_static']

+html_logo = '_static/img/logos/pyc.png'

+html_favicon = '_static/img/logos/pyc.svg'

+

+html_css_files = [

+ 'css/custom.css',

+]

+

+html_js_files = [

+ 'js/theme-logo-switcher.js',

+]

html_theme_options = {

- 'canonical_url': 'https://pytorch_concepts.readthedocs.io/en/latest/',

- 'logo_only': True,

- 'display_version': True,

- 'prev_next_buttons_location': 'bottom',

- 'style_external_links': False,

- # Toc options

- 'collapse_navigation': False,

- 'sticky_navigation': True,

- 'navigation_depth': 4,

- 'includehidden': True,

- 'titles_only': False,

+ "sidebar_hide_name": True,

+ "navigation_with_keys": True,

+ "collapse_navigation": False,

+ "top_of_page_button": "edit",

+ "light_css_variables": {

+ "color-brand-primary": "#20b0d6",

+ "color-brand-content": "#20b0d6",

+ },

+ "dark_css_variables": {

+ "color-brand-primary": "#20b0d6",

+ "color-brand-content": "#20b0d6",

+ "color-background-primary": "#020d1e",

+ },

+ "footer_icons": [

+ {

+ "name": "GitHub",

+ "url": "https://github.com/pyc-team/pytorch_concepts",

+ "html": """

+

+

+ """,

+ "class": "",

+ },

+ ],

}

+pygments_style = "tango"

+pygments_dark_style = "material"

+

+# -- Notebooks options -------------------------------------------------------

+#

+

+nb_execution_mode = 'off'

+myst_enable_extensions = ['dollarmath']

+myst_dmath_allow_space = True

+myst_dmath_double_inline = True

+nb_code_prompt_hide = 'Hide code cell outputs'

+

+# -- OpenGraph options -------------------------------------------------------

+#

+

+ogp_site_url = "https://github.com/pyc-team/pytorch_concepts"

+ogp_image = ogp_site_url + "_static/img/logos/pyc.png"

+

+# -- Hoverxref options -------------------------------------------------------

+#

+

+hoverxref_auto_ref = True

+hoverxref_roles = ['class', 'mod', 'doc', 'meth', 'func']

+hoverxref_mathjax = True

+hoverxref_intersphinx = ['PyG', 'numpy']

+

+# -- Setup options -----------------------------------------------------------

+#

+

-# Add any paths that contain custom static files (such as style sheets) here,

-# relative to this directory. They are copied after the builtin static files,

-# so a file named "default.css" will overwrite the builtin "default.css".

-html_static_path = ['_static']

\ No newline at end of file

+def logo_role(name, rawtext, text, *args, **kwargs):

+ if name == 'pyc':

+ url = f'{html_baseurl}/_static/img/logos/pyc.svg'

+ elif name == 'hydra':

+ url = f'{html_baseurl}/_static/img/logos/hydra-head.svg'

+ elif name in ['pyg', 'pytorch', 'lightning']:

+ url = f'{html_baseurl}/_static/img/logos/{name}.svg'

+ else:

+ raise RuntimeError

+ node = nodes.image(uri=url, alt=str(name).capitalize() + ' logo')

+ node['classes'] += ['inline-logo', name]

+ if text != 'null':

+ node['classes'].append('with-text')

+ span = nodes.inline(text=text)

+ return [node, span], []

+ return [node], []

+

+

+def setup(app):

+

+ def rst_jinja_render(app, docname, source):

+ src = source[0]

+ rendered = app.builder.templates.render_string(src, rst_context)

+ source[0] = rendered

+

+ app.connect("source-read", rst_jinja_render)

+

+ app.add_role('pyc', logo_role)

+ app.add_role('pyg', logo_role)

+ app.add_role('pytorch', logo_role)

+ app.add_role('hydra', logo_role)

+ app.add_role('lightning', logo_role)

diff --git a/doc/genindex.rst b/doc/genindex.rst

new file mode 100644

index 0000000..66a2352

--- /dev/null

+++ b/doc/genindex.rst

@@ -0,0 +1,2 @@

+Index

+=====

\ No newline at end of file

diff --git a/doc/guides/contributing.rst b/doc/guides/contributing.rst

new file mode 100644

index 0000000..9dd0c39

--- /dev/null

+++ b/doc/guides/contributing.rst

@@ -0,0 +1,104 @@

+Contributing Guide

+=================

+

+We welcome contributions to PyC! This guide will help you contribute effectively.

+

+Thank you for your interest in contributing! The PyC Team welcomes all contributions, whether small bug fixes or major features.

+

+Join Our Community

+------------------

+

+Have questions or want to discuss your ideas? Join our Slack community to connect with other contributors and maintainers!

+

+.. image:: https://img.shields.io/badge/Slack-Join%20Us-4A154B?style=for-the-badge&logo=slack

+ :target: https://join.slack.com/t/pyc-yu37757/shared_invite/zt-3jdcsex5t-LqkU6Plj5rxFemh5bRhe_Q

+ :alt: Slack

+

+How to Contribute

+-----------------

+

+1. **Fork the repository** - Create your own fork of the PyC repository on GitHub.

+2. **Use the** ``dev`` **branch** - Write and test your contributions locally on the ``dev`` branch.

+3. **Create a new branch** - Make a new branch for your specific contribution.

+4. **Make your changes** - Implement your changes with clear, descriptive commit messages.

+5. **Use Gitmoji** - Add emojis to your commit messages using `Gitmoji `_ using the appropriate issue template.

+

+When reporting issues, please include:

+

+- A clear description of the problem

+- Steps to reproduce the issue

+- Expected vs. actual behavior

+- Your environment (Python version, PyTorch version, OS, etc.)

+

+Code Style

+----------

+

+Please follow these guidelines when contributing code:

+

+- **PEP 8** - Follow `PEP 8 `_ for real-world use cases.

+

+Need Help?

+----------

+

+- **Issues**: `GitHub Issues `_

+- **Discussions**: `GitHub Discussions `_

+- **Contributing**: :doc:`Contributor Guide `

+

+

+.. toctree::

+ :maxdepth: 2

+ :hidden:

+

+ using_low_level

+ using_mid_level_proba

+ using_mid_level_causal

+ using_high_level

+ using_conceptarium

diff --git a/doc/guides/using_conceptarium.rst b/doc/guides/using_conceptarium.rst

new file mode 100644

index 0000000..98d3004

--- /dev/null

+++ b/doc/guides/using_conceptarium.rst

@@ -0,0 +1,982 @@

+.. |pyc_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/pyc.svg

+ :width: 20px

+ :align: middle

+

+.. |pytorch_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/pytorch.svg

+ :width: 20px

+ :align: middle

+

+.. |hydra_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/hydra-head.svg

+ :width: 20px

+ :align: middle

+

+.. |pl_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/lightning.svg

+ :width: 20px

+ :align: middle

+

+.. |wandb_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/wandb.svg

+ :width: 20px

+ :align: middle

+

+.. |conceptarium_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/conceptarium.svg

+ :width: 20px

+ :align: middle

+

+

+Conceptarium

+============

+

+|conceptarium_logo| **Conceptarium** is a no-code framework for running large-scale experiments on concept-based models.

+Built on top of |pyc_logo| PyC, |hydra_logo| Hydra, and |pl_logo| PyTorch Lightning, it enables configuration-driven experimentation

+without writing Python code.

+

+

+Design Principles

+-----------------

+

+Configuration-Driven Experimentation

+^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+Conceptarium uses YAML configuration files to define all experiment parameters. No Python coding required:

+

+- **Models**: Select and configure any |pyc_logo| PyC model (CBM, CEM, CGM, BlackBox)

+- **Datasets**: Use built-in datasets (CUB-200, CelebA) or add custom ones

+- **Training**: Configure optimizer, scheduler, and Lightning Trainer settings

+- **Tracking**: Automatic logging to |wandb_logo| W&B for visualization and comparison

+

+Large-Scale Sweeps

+^^^^^^^^^^^^^^^^^^

+

+Run multiple experiments with single commands using |hydra_logo| Hydra's multi-run capabilities:

+

+.. code-block:: bash

+

+ # Test 3 datasets × 2 models × 5 seeds = 30 experiments

+ python run_experiment.py dataset=celeba,cub,mnist model=cbm,cem seed=1,2,3,4,5

+

+Or by creating custom sweep configuration files:

+

+.. code-block:: yaml

+

+ # conceptarium/conf/my_sweep.yaml

+ defaults:

+ - _commons # Inherit standard encoder/optimizer settings

+ - _self_ # This file's parameters override

+

+ hydra:

+ job:

+ name: experiment_name

+ sweeper:

+ # standard grid search

+ params:

+ seed: 1

+ dataset: celeba, cub, mnist, ...

+ model: blackbox, cbm, cem, ...

+

+All runs are automatically organized, logged, and tracked.

+

+Hierarchical Composition

+^^^^^^^^^^^^^^^^^^^^^^^^

+

+Configurations inherit and override using ``defaults`` for maintainability:

+

+.. code-block:: yaml

+

+ # conceptarium/conf/my_sweep.yaml

+ defaults:

+ - _commons # Inherit standard encoder/optimizer settings

+ - _self_ # This file's parameters override

+

+ # Only specify what's different

+ model:

+ optim_kwargs:

+ lr: 0.05 # Override learning rate

+

+This keeps configurations concise and reduces duplication.

+

+

+Detailed Guides

+^^^^^^^^^^^^^^^

+

+.. dropdown:: Installation and Basic Usage

+ :icon: rocket

+

+ **Installation**

+

+ Clone the repository and set up the environment:

+

+ .. code-block:: bash

+

+ git clone https://github.com/pyc-team/pytorch_concepts.git

+ cd pytorch_concepts/conceptarium

+ conda env create -f environment.yml

+ conda activate conceptarium

+

+ **Basic Usage**

+

+ Run a single experiment with default configuration:

+

+ .. code-block:: bash

+

+ python run_experiment.py

+

+ Run a sweep over multiple configurations:

+

+ .. code-block:: bash

+

+ python run_experiment.py --config-name sweep

+

+ Override parameters from command line:

+

+ .. code-block:: bash

+

+ # Change dataset

+ python run_experiment.py dataset=cub

+

+ # Change model

+ python run_experiment.py model=cbm_joint

+

+ # Change multiple parameters

+ python run_experiment.py dataset=celeba model=cbm_joint trainer.max_epochs=100

+

+ # Run sweep over multiple values

+ python run_experiment.py dataset=celeba,cub model=cbm_joint,blackbox seed=1,2,3,4,5

+

+.. dropdown:: Understanding Configurations

+ :icon: file-code

+

+ **Configuration Structure**

+

+ All configurations are stored in ``conceptarium/conf/``:

+

+ .. code-block:: text

+

+ conf/

+ ├── _default.yaml # Base configuration

+ ├── sweep.yaml # Example sweep configuration

+ ├── dataset/ # Dataset configurations

+ │ ├── _commons.yaml # Shared dataset parameters

+ │ ├── celeba.yaml # CelebA dataset

+ │ ├── cub.yaml # CUB-200 dataset

+ │ └── ... # More datasets

+ ├── loss/ # Loss function configs

+ │ ├── standard.yaml # Type-aware losses

+ │ └── weighted.yaml # Weighted losses

+ ├── metrics/ # Metric configs

+ │ └── standard.yaml # Type-aware metrics

+ └── model/ # Model configurations

+ ├── _commons.yaml # Shared model parameters

+ ├── blackbox.yaml # Black-box baseline

+ ├── cbm.yaml # Alias for cbm_joint

+ └── cbm_joint.yaml # CBM (joint training)

+

+ **Configuration Hierarchy**

+

+ Configurations use |hydra_logo| Hydra's composition system with ``defaults`` to inherit and override:

+

+ .. code-block:: yaml

+

+ # conf/model/cbm_joint.yaml

+ defaults:

+ - _commons # Inherit common model parameters

+ - _self_ # Current file takes precedence

+

+ # Model-specific configuration

+ _target_: torch_concepts.nn.ConceptBottleneckModel_Joint

+ task_names: ${dataset.default_task_names}

+

+ inference:

+ _target_: torch_concepts.nn.DeterministicInference

+ _partial_: true

+

+ **Priority**: Parameters defined later override earlier ones. ``_self_`` controls where current file's parameters fit in the hierarchy.

+

+ **Base Configuration**

+

+ The ``_default.yaml`` file contains base settings for all experiments:

+

+ .. code-block:: yaml

+

+ defaults:

+ - dataset: cub

+ - model: cbm_joint

+ - _self_

+

+ seed: 42

+

+ trainer:

+ max_epochs: 500

+ patience: 30

+ monitor: "val_loss"

+ mode: "min"

+

+ wandb:

+ project: conceptarium

+ entity: your-team

+ log_model: false

+

+ **Key sections**:

+

+ - ``defaults``: Which dataset and model configurations to use

+ - ``seed``: Random seed for reproducibility

+ - ``trainer``: PyTorch Lightning Trainer settings

+ - ``wandb``: Weights & Biases logging configuration

+

+.. dropdown:: Working with Datasets

+ :icon: database

+

+ **Dataset Configuration Files**

+

+ Each dataset has a YAML file in ``conf/dataset/`` that specifies:

+

+ 1. The datamodule class (``_target_``)

+ 2. Dataset-specific parameters

+ 3. Backbone architecture (if needed)

+ 4. Preprocessing settings

+

+ **Example - CUB-200 Dataset**

+

+ .. code-block:: yaml

+

+ # conf/dataset/cub.yaml

+ defaults:

+ - _commons

+ - _self_

+

+ _target_: torch_concepts.data.datamodules.CUBDataModule

+

+ name: cub

+

+ # Backbone for feature extraction

+ backbone:

+ _target_: torchvision.models.resnet18

+ pretrained: true

+

+ precompute_embs: true # Precompute features to speed up training

+

+ # Task variables to predict

+ default_task_names: [bird_species]

+

+ # Concept descriptions (optional, for interpretability)

+ label_descriptions:

+ - has_wing_color::blue: Wing color is blue

+ - has_upperparts_color::blue: Upperparts color is blue

+ - has_breast_pattern::solid: Breast pattern is solid

+ - has_back_color::brown: Back color is brown

+

+ **Example - CelebA Dataset**

+

+ .. code-block:: yaml

+

+ # conf/dataset/celeba.yaml

+ defaults:

+ - _commons

+ - _self_

+

+ _target_: torch_concepts.data.datamodules.CelebADataModule

+

+ name: celeba

+

+ backbone:

+ _target_: torchvision.models.resnet18

+ pretrained: true

+

+ precompute_embs: true

+

+ # Predict attractiveness from facial attributes

+ default_task_names: [Attractive]

+

+ label_descriptions:

+ - Smiling: Person is smiling

+ - Male: Person is male

+ - Young: Person is young

+ - Eyeglasses: Person wears eyeglasses

+ - Attractive: Person is attractive

+

+ **Common Dataset Parameters**

+

+ Defined in ``conf/dataset/_commons.yaml``:

+

+ .. code-block:: yaml

+

+ batch_size: 256 # Training batch size

+ val_size: 0.15 # Validation split fraction

+ test_size: 0.15 # Test split fraction

+ num_workers: 4 # DataLoader workers

+ pin_memory: true # Pin memory for GPU

+

+ # Optional: Subsample concepts

+ concept_subset: null # null = use all concepts

+ # concept_subset: [concept1, concept2, concept3]

+

+ **Overriding Dataset Parameters**

+

+ From command line:

+

+ .. code-block:: bash

+

+ # Change batch size

+ python run_experiment.py dataset.batch_size=512

+

+ # Use only specific concepts

+ python run_experiment.py dataset.concept_subset=[has_wing_color::blue,has_back_color::brown]

+

+ # Change validation split

+ python run_experiment.py dataset.val_size=0.2

+

+ In a custom sweep file:

+

+ .. code-block:: yaml

+

+ # conf/my_sweep.yaml

+ defaults:

+ - _default

+ - _self_

+

+ dataset:

+ batch_size: 512

+ val_size: 0.2

+

+.. dropdown:: Working with Models

+ :icon: cpu

+

+ **Model Configuration Files**

+

+ Each model has a YAML file in ``conf/model/`` that specifies:

+

+ 1. The model class (``_target_``)

+ 2. Architecture parameters (from ``_commons.yaml``)

+ 3. Inference strategy

+ 4. Metric tracking options

+

+ **Example - Concept Bottleneck Model**

+

+ .. code-block:: yaml

+

+ # conf/model/cbm_joint.yaml

+ defaults:

+ - _commons

+ - _self_

+

+ _target_: torch_concepts.nn.ConceptBottleneckModel_Joint

+

+ # Task variables (from dataset)

+ task_names: ${dataset.default_task_names}

+

+ # Inference strategy

+ inference:

+ _target_: torch_concepts.nn.DeterministicInference

+ _partial_: true

+

+ # Metric tracking

+ summary_metrics: true # Aggregate metrics by concept type

+ perconcept_metrics: false # Per-concept individual metrics

+

+ **Example - Black-box Baseline**

+

+ .. code-block:: yaml

+

+ # conf/model/blackbox.yaml

+ defaults:

+ - _commons

+ - _self_

+

+ _target_: torch_concepts.nn.BlackBox

+

+ task_names: ${dataset.default_task_names}

+

+ # Black-box models don't use concepts

+ inference: null

+

+ summary_metrics: false

+ perconcept_metrics: false

+

+ **Common Model Parameters**

+

+ Defined in ``conf/model/_commons.yaml``:

+

+ .. code-block:: yaml

+

+ # Encoder architecture

+ encoder_kwargs:

+ hidden_size: 128 # Hidden layer dimension

+ n_layers: 2 # Number of hidden layers

+ activation: relu # Activation function

+ dropout: 0.1 # Dropout probability

+

+ # Concept distributions (how concepts are modeled)

+ variable_distributions:

+ binary: torch.distributions.Bernoulli

+ categorical: torch.distributions.Categorical

+

+ # Optimizer configuration

+ optim_class:

+ _target_: torch.optim.AdamW

+ _partial_: true

+

+ optim_kwargs:

+ lr: 0.00075 # Learning rate

+ weight_decay: 0.0 # L2 regularization

+

+ # Learning rate scheduler

+ scheduler_class:

+ _target_: torch.optim.lr_scheduler.ReduceLROnPlateau

+ _partial_: true

+

+ scheduler_kwargs:

+ mode: min

+ factor: 0.5

+ patience: 10

+ min_lr: 0.00001

+

+ **Loss Configuration**

+

+ Loss functions are type-aware, automatically selecting the appropriate loss based on concept types.

+ Loss configurations are in ``conf/loss/``:

+

+ **Standard losses** (``conf/loss/standard.yaml``):

+

+ .. code-block:: yaml

+

+ _target_: torch_concepts.nn.ConceptLoss

+ _partial_: true

+

+ fn_collection:

+ discrete:

+ binary:

+ path: torch.nn.BCEWithLogitsLoss

+ kwargs: {}

+ categorical:

+ path: torch.nn.CrossEntropyLoss

+ kwargs: {}

+ # continuous: # Not yet supported

+ # path: torch.nn.MSELoss

+ # kwargs: {}

+

+ **Weighted losses** (``conf/loss/weighted.yaml``):

+

+ .. code-block:: yaml

+

+ _target_: torch_concepts.nn.ConceptLoss

+ _partial_: true

+

+ fn_collection:

+ discrete:

+ binary:

+ path: torch.nn.BCEWithLogitsLoss

+ kwargs:

+ reduction: none # Required for weighting

+ categorical:

+ path: torch.nn.CrossEntropyLoss

+ kwargs:

+ reduction: none

+

+ concept_loss_weight: 1.0

+ task_loss_weight: 1.0

+

+ **Metrics Configuration**

+

+ Metrics are also type-aware and configured in ``conf/metrics/``:

+

+ .. code-block:: yaml

+

+ # conf/metrics/standard.yaml

+ discrete:

+ binary:

+ accuracy:

+ path: torchmetrics.classification.BinaryAccuracy

+ kwargs: {}

+ categorical:

+ accuracy:

+ path: torchmetrics.classification.MulticlassAccuracy

+ kwargs:

+ average: micro

+

+ continuous:

+ mae:

+ path: torchmetrics.regression.MeanAbsoluteError

+ kwargs: {}

+ mse:

+ path: torchmetrics.regression.MeanSquaredError

+ kwargs: {}

+

+ **Overriding Model Parameters**

+

+ From command line:

+

+ .. code-block:: bash

+

+ # Change learning rate

+ python run_experiment.py model.optim_kwargs.lr=0.001

+

+ # Enable per-concept metrics

+ python run_experiment.py model.perconcept_metrics=true

+

+ # Change encoder architecture

+ python run_experiment.py model.encoder_kwargs.hidden_size=256 \

+ model.encoder_kwargs.n_layers=3

+

+ # Use weighted loss

+ python run_experiment.py loss=weighted

+

+ In a custom sweep file:

+

+ .. code-block:: yaml

+

+ # conf/my_sweep.yaml

+ defaults:

+ - _default

+ - _self_

+

+ model:

+ encoder_kwargs:

+ hidden_size: 256

+ n_layers: 3

+ optim_kwargs:

+ lr: 0.001

+ perconcept_metrics: true

+

+.. dropdown:: Running Experiments

+ :icon: play

+

+ **Single Experiment**

+

+ Run with default configuration:

+

+ .. code-block:: bash

+

+ python run_experiment.py

+

+ Specify dataset and model:

+

+ .. code-block:: bash

+

+ python run_experiment.py dataset=celeba model=cbm_joint

+

+ With custom parameters:

+

+ .. code-block:: bash

+

+ python run_experiment.py \

+ dataset=cub \

+ model=cem \

+ model.optim_kwargs.lr=0.001 \

+ trainer.max_epochs=100 \

+ seed=42

+

+ **Multi-Run Sweeps**

+

+ Sweep over multiple values using comma-separated lists:

+

+ .. code-block:: bash

+

+ # Sweep over datasets

+ python run_experiment.py dataset=celeba,cub,mnist

+

+ # Sweep over models

+ python run_experiment.py model=cbm_joint,cem,cgm

+

+ # Sweep over hyperparameters

+ python run_experiment.py model.optim_kwargs.lr=0.0001,0.0005,0.001,0.005

+

+ # Sweep over seeds for robustness

+ python run_experiment.py seed=1,2,3,4,5

+

+ # Combined sweeps

+ python run_experiment.py \

+ dataset=celeba,cub \

+ model=cbm_joint,cem \

+ seed=1,2,3

+

+ This runs 2 × 2 × 3 = 12 experiments.

+

+ **Custom Sweep Configuration**

+

+ Create a sweep file (``conf/my_sweep.yaml``):

+

+ .. code-block:: yaml

+

+ defaults:

+ - _default

+ - _self_

+

+ hydra:

+ job:

+ name: my_sweep

+ sweeper:

+ params:

+ dataset: celeba,cub,mnist

+ model: cbm_joint,cem

+ seed: 1,2,3,4,5

+ model.optim_kwargs.lr: 0.0001,0.001

+

+ # Default overrides

+ trainer:

+ max_epochs: 500

+ patience: 50

+

+ model:

+ summary_metrics: true

+ perconcept_metrics: true

+

+ Run the sweep:

+

+ .. code-block:: bash

+

+ python run_experiment.py --config-name my_sweep

+

+ **Parallel Execution**

+

+ Use Hydra's joblib launcher for parallel execution:

+

+ .. code-block:: bash

+

+ python run_experiment.py \

+ --multirun \

+ hydra/launcher=joblib \

+ hydra.launcher.n_jobs=4 \

+ dataset=celeba,cub \

+ model=cbm_joint,cem

+

+ Or use SLURM for cluster execution:

+

+ .. code-block:: bash

+

+ python run_experiment.py \

+ --multirun \

+ hydra/launcher=submitit_slurm \

+ hydra.launcher.partition=gpu \

+ hydra.launcher.gpus_per_node=1 \

+ dataset=celeba,cub \

+ model=cbm_joint,cem

+

+.. dropdown:: Output Structure

+ :icon: file-directory

+

+ **Directory Organization**

+

+ Experiment outputs are organized by timestamp:

+

+ .. code-block:: text

+

+ outputs/

+ └── multirun/

+ └── 2025-11-27/

+ └── 14-30-15_my_experiment/

+ ├── 0/ # First run

+ │ ├── .hydra/ # Hydra configuration

+ │ │ ├── config.yaml # Full resolved config

+ │ │ ├── hydra.yaml # Hydra settings

+ │ │ └── overrides.yaml # CLI overrides

+ │ ├── checkpoints/ # Model checkpoints

+ │ │ ├── best.ckpt # Best model

+ │ │ └── last.ckpt # Last epoch

+ │ ├── logs/ # Training logs

+ │ │ └── version_0/

+ │ │ ├── events.out.tfevents # TensorBoard

+ │ │ └── hparams.yaml # Hyperparameters

+ │ └── run.log # Console output

+ ├── 1/ # Second run

+ ├── 2/ # Third run

+ └── multirun.yaml # Sweep configuration

+

+ **Accessing Results**

+

+ Each run directory contains:

+

+ - **Checkpoints**: ``checkpoints/best.ckpt`` - Best model based on validation metric

+ - **Logs**: ``logs/version_0/`` - TensorBoard logs

+ - **Configuration**: ``.hydra/config.yaml`` - Full configuration used for this run

+ - **Console output**: ``run.log`` - All printed output

+

+ Load a checkpoint:

+

+ .. code-block:: python

+

+ import torch

+ from torch_concepts.nn import ConceptBottleneckModel_Joint

+

+ checkpoint = torch.load('outputs/multirun/.../0/checkpoints/best.ckpt')

+ model = ConceptBottleneckModel_Joint.load_from_checkpoint(checkpoint)

+

+ **Weights & Biases Integration**

+

+ All experiments are automatically logged to W&B if configured:

+

+ .. code-block:: yaml

+

+ # In your config or _default.yaml

+ wandb:

+ project: my_project

+ entity: my_team

+ log_model: false # Set true to save models to W&B

+ mode: online # or 'offline' or 'disabled'

+

+ View results at https://wandb.ai/your-team/my_project

+

+.. dropdown:: Creating Custom Configurations

+ :icon: pencil

+

+ **Adding a New Model**

+

+ 1. **Implement the model** in |pyc_logo| PyC (see ``examples/contributing/model.md``)

+

+ 2. **Create configuration file** ``conf/model/my_model.yaml``:

+

+ .. code-block:: yaml

+

+ defaults:

+ - _commons

+ - loss: _default

+ - metrics: _default

+ - _self_

+

+ _target_: torch_concepts.nn.MyModel

+

+ task_names: ${dataset.default_task_names}

+

+ # Model-specific parameters

+ my_param: 42

+ another_param: hello

+

+ 3. **Run experiments**:

+

+ .. code-block:: bash

+

+ python run_experiment.py model=my_model dataset=cub

+

+ **Adding a New Dataset**

+

+ 1. **Implement the dataset and datamodule** (see ``examples/contributing/dataset.md``)

+

+ 2. **Create configuration file** ``conf/dataset/my_dataset.yaml``:

+

+ .. code-block:: yaml

+

+ defaults:

+ - _commons

+ - _self_

+

+ _target_: my_package.MyDataModule

+

+ name: my_dataset

+

+ # Backbone (if needed)

+ backbone:

+ _target_: torchvision.models.resnet18

+ pretrained: true

+

+ precompute_embs: false

+

+ # Default tasks

+ default_task_names: [my_task]

+

+ # Dataset-specific parameters

+ data_path: /path/to/data

+ preprocess: true

+

+ 3. **Run experiments**:

+

+ .. code-block:: bash

+

+ python run_experiment.py dataset=my_dataset model=cbm_joint

+

+ **Adding Custom Loss/Metrics**

+

+ Create ``conf/model/loss/my_loss.yaml``:

+

+ .. code-block:: yaml

+

+ _target_: torch_concepts.nn.WeightedConceptLoss

+ _partial_: true

+

+ fn_collection:

+ discrete:

+ binary:

+ path: my_package.MyBinaryLoss

+ kwargs:

+ alpha: 0.25

+ gamma: 2.0

+ categorical:

+ path: torch.nn.CrossEntropyLoss

+ kwargs:

+ label_smoothing: 0.1

+

+ concept_loss_weight: 0.5

+ task_loss_weight: 1.0

+

+ Use it:

+

+ .. code-block:: bash

+

+ python run_experiment.py model/loss=my_loss

+

+.. dropdown:: Advanced Usage

+ :icon: gear

+

+ **Conditional Configuration**

+

+ Use Hydra's variable interpolation:

+

+ .. code-block:: yaml

+

+ # Automatically adjust batch size based on dataset

+ dataset:

+ batch_size: ${select:${dataset.name},{celeba:512,cub:256,mnist:1024}}

+

+ # Scale learning rate with batch size

+ model:

+ optim_kwargs:

+ lr: ${multiply:0.001,${divide:${dataset.batch_size},256}}

+

+ **Configuration Validation**

+

+ Add validation to catch errors early:

+

+ .. code-block:: yaml

+

+ # conf/model/cbm_joint.yaml

+ defaults:

+ - _commons

+ - loss: _default

+ - metrics: _default

+ - _self_

+

+ _target_: torch_concepts.nn.ConceptBottleneckModel_Joint

+

+ # Require task names

+ task_names: ${dataset.default_task_names}

+ ??? # Error if not provided

+

+ **Experiment Grouping**

+

+ Organize related experiments:

+

+ .. code-block:: yaml

+

+ # conf/ablation_study.yaml

+ hydra:

+ job:

+ name: ablation_${model.encoder_kwargs.hidden_size}

+

+ defaults:

+ - _default

+ - _self_

+

+ model:

+ encoder_kwargs:

+ hidden_size: ??? # Must be provided

+

+ Run:

+

+ .. code-block:: bash

+

+ python run_experiment.py \

+ --config-name ablation_study \

+ model.encoder_kwargs.hidden_size=64,128,256,512

+

+.. dropdown:: Best Practices

+ :icon: checklist

+

+ 1. **Use Descriptive Names**

+

+ .. code-block:: yaml

+

+ hydra:

+ job:

+ name: ${model._target_}_${dataset.name}_seed${seed}

+

+ 2. **Keep Configs Small**

+

+ - Use ``defaults`` to inherit common parameters

+ - Only override what's different

+

+ 3. **Document Custom Parameters**

+

+ .. code-block:: yaml

+

+ my_parameter: 42 # Controls X behavior, higher = more Y

+

+ 4. **Version Control Configurations**

+

+ - Commit all YAML files to git

+ - Tag important configurations

+

+ 5. **Use Sweeps for Exploration**

+

+ - Start with broad sweeps

+ - Narrow down based on results

+

+ 6. **Monitor with W&B**

+

+ - Enable W&B logging for all experiments

+ - Use tags to organize runs

+

+ 7. **Save Important Checkpoints**

+

+ - Set ``trainer.save_top_k`` appropriately

+ - Copy important checkpoints out of temp directories

+

+.. dropdown:: Troubleshooting

+ :icon: tools

+

+ **Common Issues**

+

+ **Error: "Could not find dataset config"**

+

+ - Check that ``conf/dataset/your_dataset.yaml`` exists

+ - Verify the filename matches what you're passing to ``dataset=``

+

+ **Error: "Missing _target_ in config"**

+

+ - Ensure your config has ``_target_`` pointing to the class

+ - Check for typos in the class path

+

+ **Error: "Validation loss not improving"**

+

+ - Check learning rate: try ``model.optim_kwargs.lr=0.0001``

+ - Increase patience: ``trainer.patience=50``

+ - Check your loss configuration

+

+ **Experiments running slowly**

+

+ - Enable feature precomputation: ``dataset.precompute_embs=true``

+ - Increase batch size: ``dataset.batch_size=512``

+ - Use more workers: ``dataset.num_workers=8``

+

+ **Out of memory**

+

+ - Reduce batch size: ``dataset.batch_size=128``

+ - Reduce model size: ``model.encoder_kwargs.hidden_size=64``

+ - Enable gradient checkpointing (model-specific)

+

+ **Debugging**

+

+ Check resolved configuration:

+

+ .. code-block:: bash

+

+ python run_experiment.py --cfg job

+

+ Print config without running:

+

+ .. code-block:: bash

+

+ python run_experiment.py --cfg all

+

+ Validate configuration:

+

+ .. code-block:: bash

+

+ python run_experiment.py --resolve

+

+

+See Also

+--------

+

+- :doc:`using_high_level` - High-level API for programmatic usage

+- `Contributing Guide - Models `_ - Implementing custom models

+- `Contributing Guide - Datasets `_ - Implementing custom datasets

+- `Conceptarium README `_ - Additional documentation

+- `Hydra Documentation ``

+

+where:

+

+- ``LayerType``: describes the type of layer (e.g., Linear, HyperLinear, Selector, Transformer, etc...)

+- ``InputType`` and ``OutputType``: describe the type of data representations the layer takes as input and produces as output. |pyc_logo| PyC uses the following abbreviations:

+

+ - ``Z``: Input

+ - ``U``: Exogenous

+ - ``C``: Endogenous

+

+

+For instance, a layer named ``LinearZC`` is a linear layer that takes as input an

+``Input`` representation and produces an ``Endogenous`` representation. Since it does not take

+as input any endogenous variables, it is an encoder layer.

+

+.. code-block:: python

+

+ pyc.nn.LinearZC(in_features=10, out_features=3)

+

+As another example, a layer named ``HyperLinearCUC`` is a hyper-network layer that

+takes as input both ``Endogenous`` and ``Exogenous`` representations and produces an

+``Endogenous`` representation. Since it takes as input endogenous variables, it is a predictor layer.

+

+.. code-block:: python

+

+ pyc.nn.HyperLinearCUC(

+ in_features_endogenous=10,

+ in_features_exogenous=7,

+ embedding_size=24,

+ out_features=3

+ )

+

+As a final example, graph learners are a special layers that learn relationships between concepts.

+They do not follow the standard naming convention of encoders and predictors, but their purpose should be

+clear from their name.

+

+.. code-block:: python

+

+ wanda = pyc.nn.WANDAGraphLearner(

+ ['c1', 'c2', 'c3'],

+ ['task A', 'task B', 'task C']

+ )

+

+

+Detailed Guides

+------------------------------

+

+

+.. dropdown:: Concept Bottleneck Model

+ :icon: package

+

+ **Import Libraries**

+

+ To get started, import |pyc_logo| PyC and |pytorch_logo| PyTorch:

+

+ .. code-block:: python

+

+ import torch

+ import torch_concepts as pyc

+

+ **Create Sample Data**

+

+ Generate random inputs and targets for demonstration:

+

+ .. code-block:: python

+

+ batch_size = 32

+ input_dim = 64

+ n_concepts = 5

+ n_tasks = 3

+

+ # Random input

+ x = torch.randn(batch_size, input_dim)

+

+ # Random concept labels (binary)

+ concept_labels = torch.randint(0, 2, (batch_size, n_concepts)).float()

+

+ # Random task labels

+ task_labels = torch.randint(0, n_tasks, (batch_size,))

+

+ **Build a Concept Bottleneck Model**

+

+ Use a ModuleDict to combine encoder and predictor:

+

+ .. code-block:: python

+

+ # Create model using ModuleDict

+ model = torch.nn.ModuleDict({

+ 'encoder': pyc.nn.LinearZC(

+ in_features=input_dim,

+ out_features=n_concepts

+ ),

+ 'predictor': pyc.nn.LinearCC(

+ in_features_endogenous=n_concepts,

+ out_features=n_tasks

+ ),

+ })

+

+

+.. dropdown:: Inference and Training

+ :icon: rocket

+

+ **Inference**

+

+ Once a concept bottleneck model is built, we can perform inference by first obtaining

+ concept activations from the encoder, and then task predictions from the predictor:

+

+ .. code-block:: python

+

+ # Get concept endogenous from input

+ concept_endogenous = model['encoder'](input=x)

+

+ # Get task predictions from concept endogenous

+ task_endogenous = model['predictor'](endogenous=concept_endogenous)

+

+ print(f"Concept endogenous shape: {concept_endogenous.shape}") # [32, 5]

+ print(f"Task endogenous shape: {task_endogenous.shape}") # [32, 3]

+

+ **Compute Loss and Train**

+

+ Train with both concept and task supervision:

+

+ .. code-block:: python

+

+ import torch.nn.functional as F

+

+ # Compute losses

+ concept_loss = F.binary_cross_entropy(torch.sigmoid(concept_endogenous), concept_labels)

+ task_loss = F.cross_entropy(task_endogenous, task_labels)

+ total_loss = task_loss + 0.5 * concept_loss

+

+ # Backpropagation

+ total_loss.backward()

+

+ print(f"Concept loss: {concept_loss.item():.4f}")

+ print(f"Task loss: {task_loss.item():.4f}")

+

+

+.. dropdown:: Interventions

+ :icon: tools

+

+ Intervene using the ``intervention`` context manager which replaces the encoder layer temporarily.

+ The context manager takes two main arguments: **strategies** and **policies**.

+

+ - Intervention strategies define how the layer behaves during the intervention, e.g., setting concept endogenous to ground truth values.

+ - Intervention policies define the priority/order of concepts to intervene on.

+

+ .. code-block:: python

+

+ from torch_concepts.nn import GroundTruthIntervention, UniformPolicy

+ from torch_concepts.nn import intervention

+

+ ground_truth = 10 * torch.rand_like(concept_endogenous)

+ strategy = GroundTruthIntervention(model=model['encoder'], ground_truth=ground_truth)

+ policy = UniformPolicy(out_features=n_concepts)

+

+ # Apply intervention to encoder

+ with intervention(

+ policies=policy,

+ strategies=strategy,

+ target_concepts=[0, 2]

+ ) as new_encoder_layer:

+ intervened_concepts = new_encoder_layer(input=x)

+ intervened_tasks = model['predictor'](endogenous=intervened_concepts)

+

+ print(f"Original concept endogenous: {concept_endogenous[0]}")

+ print(f"Original task predictions: {task_endogenous[0]}")

+ print(f"Intervened concept endogenous: {intervened_concepts[0]}")

+ print(f"Intervened task predictions: {intervened_tasks[0]}")

+

+

+.. dropdown:: (Advanced) Graph Learning

+ :icon: workflow

+

+ Add a graph learner to discover concept relationships:

+

+ .. code-block:: python

+

+ # Define concept and task names

+ concept_names = ['round', 'smooth', 'bright', 'large', 'centered']

+

+ # Create WANDA graph learner

+ graph_learner = pyc.nn.WANDAGraphLearner(

+ row_labels=concept_names,

+ col_labels=concept_names

+ )

+

+ print(f"Learned graph shape: {graph_learner.weighted_adj}")

+

+

+ The ``graph_learner.weighted_adj`` tensor contains a learnable adjacency matrix representing relationships

+ between concepts.

+

+

+.. dropdown:: (Advanced) Verifiable Concept-Based Models

+ :icon: shield-check

+

+ To design more complex concept-based models, you can combine multiple interpretable layers.

+ For example, to build a verifiable concept-based model we can use an encoder to predict concept activations,

+ a selector to select relevant exogenous information, and a hyper-network predictor to make final predictions

+ based on both concept activations and exogenous information.

+

+ .. code-block:: python

+

+ from torch_concepts.nn import LinearZC, SelectorZU, HyperLinearCUC

+

+ memory_size = 7

+ exogenous_size = 16

+ embedding_size = 5

+

+ # Create model using ModuleDict

+ model = torch.nn.ModuleDict({

+ 'encoder': LinearZC(

+ in_features=input_dim,

+ out_features=n_concepts

+ ),

+ 'selector': SelectorZU(

+ in_features=input_dim,

+ memory_size=memory_size,

+ exogenous_size=exogenous_size,

+ out_features=n_tasks

+ ),

+ 'predictor': HyperLinearCUC(

+ in_features_endogenous=n_concepts,

+ in_features_exogenous=exogenous_size,

+ embedding_size=embedding_size,

+ )

+ })

+

+

+

+Next Steps

+----------

+

+- Explore the full :doc:`Low-Level API documentation `

+- Try the :doc:`Mid-Level API ` for probabilistic modeling

+- Try the :doc:`Mid-Level API ` for causal modeling

+- Check out :doc:`example notebooks `

diff --git a/doc/guides/using_mid_level_causal.rst b/doc/guides/using_mid_level_causal.rst

new file mode 100644

index 0000000..c06aeb0

--- /dev/null

+++ b/doc/guides/using_mid_level_causal.rst

@@ -0,0 +1,321 @@

+Structural Equation Models

+=====================================

+

+.. |pyc_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/pyc.svg

+ :width: 20px

+ :align: middle

+

+.. |pytorch_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/pytorch.svg

+ :width: 20px

+ :align: middle

+

+|pyc_logo| PyC can be used to build interpretable concept-based causal models and perform causal inference.

+

+.. warning::

+

+ This API is still under development and interfaces might change in future releases.

+

+

+Design principles

+-----------------

+

+Structural Equation Models

+^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+|pyc_logo| PyC can be used to design Structural Equation Models (SEMs), where:

+

+- ``ExogenousVariable`` and ``EndogenousVariable`` objects represent random variables in the SEM. Variables are defined by their name, parents, and distribution type. For example, in this guide we define variables as:

+

+ .. code-block:: python

+

+ exogenous_var = ExogenousVariable(

+ "exogenous",

+ parents=[],

+ distribution=RelaxedBernoulli

+ )

+ genotype_var = EndogenousVariable(

+ "genotype",

+ parents=["exogenous"],

+ distribution=RelaxedBernoulli

+ )

+

+- ``ParametricCPD`` objects represent the structural equations (causal mechanisms) between variables in the SEM and are parameterized by |pyc_logo| PyC or |pytorch_logo| PyTorch modules. For example:

+

+ .. code-block:: python

+

+ genotype_cpd = ParametricCPD(

+ "genotype",

+ parametrization=torch.nn.Sequential(

+ torch.nn.Linear(1, 1),

+ torch.nn.Sigmoid()

+ )

+ )

+

+- ``ProbabilisticModel`` objects collect all variables and CPDs to define the full SEM. For example:

+

+ .. code-block:: python

+

+ sem_model = ProbabilisticModel(

+ variables=[exogenous_var, genotype_var],

+ parametric_cpds=[exogenous_cpd, genotype_cpd]

+ )

+

+Interventions

+^^^^^^^^^^^^^

+

+Interventions allow us to estimate causal effects. For instance, do-interventions allow us to set specific variables

+to fixed values and observe the effect on downstream variables simulating a randomized controlled trial.

+

+To perform a do-intervention, use the ``DoIntervention`` strategy and the ``intervention`` context manager.

+For example, to set ``smoking`` to 0 (prevent smoking) and query the effect on downstream variables:

+

+.. code-block:: python

+

+ # Intervention: Force smoking to 0 (prevent smoking)

+ smoking_strategy_0 = DoIntervention(

+ model=sem_model.parametric_cpds,

+ constants=0.0

+ )

+

+ with intervention(

+ policies=UniformPolicy(out_features=1),

+ strategies=smoking_strategy_0,

+ target_concepts=["smoking"]

+ ):

+ intervened_results_0 = inference_engine.query(

+ query_concepts=["genotype", "smoking", "tar", "cancer"],

+ evidence=initial_input

+ )

+ # Results reflect the effect of setting smoking=0

+

+You can use these interventional results to estimate causal effects, such as the Average Causal Effect (ACE),

+as shown in later steps of this guide.

+

+

+Detailed Guides

+------------------------------

+

+

+.. dropdown:: Structural Equation Models

+ :icon: package

+

+ **Import Libraries**

+

+ Start by importing |pyc_logo| PyC and |pytorch_logo| PyTorch libraries:

+

+ .. code-block:: python

+

+ import torch

+ from torch.distributions import RelaxedBernoulli

+ import torch_concepts as pyc

+ from torch_concepts import EndogenousVariable, ExogenousVariable

+ from torch_concepts.nn import ParametricCPD, ProbabilisticModel

+ from torch_concepts.nn import AncestralSamplingInference

+ from torch_concepts.nn import CallableCC, UniformPolicy, DoIntervention, intervention

+ from torch_concepts.nn.functional import cace_score

+

+ **Create Sample Data**

+

+ .. code-block:: python

+

+ n_samples = 1000

+

+ # Create exogenous input (noise/unobserved confounders)

+ initial_input = {'exogenous': torch.randn((n_samples, 1))}

+

+ **Define Variables and Causal Structure**

+

+ In Structural Equation Models, we distinguish between exogenous (external) and endogenous (internal) variables.

+ Each variable is defined by its name, parents, and distribution type.

+ By specifying parents, we define the causal graph structure.

+

+ .. code-block:: python

+

+ # Define exogenous variable (external noise/confounders)

+ exogenous_var = ExogenousVariable(

+ "exogenous",

+ parents=[],

+ distribution=RelaxedBernoulli

+ )

+

+ # Define endogenous variables (causal chain)

+ genotype_var = EndogenousVariable(

+ "genotype",

+ parents=["exogenous"],

+ distribution=RelaxedBernoulli

+ )

+

+ smoking_var = EndogenousVariable(

+ "smoking",

+ parents=["genotype"],

+ distribution=RelaxedBernoulli

+ )

+

+ tar_var = EndogenousVariable(

+ "tar",

+ parents=["genotype", "smoking"],

+ distribution=RelaxedBernoulli

+ )

+

+ cancer_var = EndogenousVariable(

+ "cancer",

+ parents=["tar"],

+ distribution=RelaxedBernoulli

+ )

+

+ **Define ParametricCPDs**

+

+ ParametricCPDs define the structural equations (causal mechanisms) between variables.

+ We can use |pyc_logo| PyC or |pytorch_logo| PyTorch modules to parameterize these CPDs.

+ More specifically, |pyc_logo| PyC provides ``CallableCC`` to define structural equations using arbitrary callables.

+

+ .. code-block:: python

+

+ # CPD for exogenous variable (no parents)

+ exogenous_cpd = ParametricCPD(

+ "exogenous",

+ parametrization=torch.nn.Sigmoid()

+ )

+

+ # CPD for genotype (depends on exogenous noise)

+ genotype_cpd = ParametricCPD(

+ "genotype",

+ parametrization=torch.nn.Sequential(

+ torch.nn.Linear(1, 1),

+ torch.nn.Sigmoid()

+ )

+ )

+

+ # CPD for smoking (depends on genotype)

+ smoking_cpd = ParametricCPD(

+ ["smoking"],

+ parametrization=CallableCC(

+ lambda x: (x > 0.5).float(),

+ use_bias=False

+ )

+ )

+

+ # CPD for tar (depends on genotype and smoking)

+ tar_cpd = ParametricCPD(

+ "tar",

+ parametrization=CallableCC(

+ lambda x: torch.logical_or(x[:, 0] > 0.5, x[:, 1] > 0.5).float().unsqueeze(-1),

+ use_bias=False

+ )

+ )

+

+ # CPD for cancer (depends on tar)

+ cancer_cpd = ParametricCPD(

+ "cancer",

+ parametrization=CallableCC(

+ lambda x: x,

+ use_bias=False

+ )

+ )

+

+ **Build Structural Equation Model**

+

+ Combine all variables and CPDs into a probabilistic model:

+

+ .. code-block:: python

+

+ # Create the structural equation model

+ sem_model = ProbabilisticModel(

+ variables=[exogenous_var, genotype_var, smoking_var, tar_var, cancer_var],

+ parametric_cpds=[exogenous_cpd, genotype_cpd, smoking_cpd, tar_cpd, cancer_cpd]

+ )

+

+

+.. dropdown:: Observational Inference

+ :icon: telescope

+

+ Once the SEM is defined, we can perform observational inference to obtain predictions

+ for all endogenous variables given exogenous evidence:

+

+ .. code-block:: python

+

+ # Create inference engine

+ inference_engine = AncestralSamplingInference(

+ sem_model,

+ temperature=1.0,

+ log_probs=False

+ )

+

+ # Query all endogenous variables

+ query_concepts = ["genotype", "smoking", "tar", "cancer"]

+ results = inference_engine.query(query_concepts, evidence=initial_input)

+

+ print("Genotype Predictions (first 5 samples):")

+ print(results[:, 0][:5])

+ print("Smoking Predictions (first 5 samples):")

+ print(results[:, 1][:5])

+ print("Tar Predictions (first 5 samples):")

+ print(results[:, 2][:5])

+ print("Cancer Predictions (first 5 samples):")

+ print(results[:, 3][:5])

+

+

+.. dropdown:: Do-Interventions

+ :icon: tools

+

+ We can perform do-interventions to set specific variables to fixed values

+ and observe the effect on downstream variables, simulating a randomized controlled trial.

+ The intervention API is the same we use for probabilistic models and low-level APIs.

+

+ .. code-block:: python

+

+ # Intervention 1: Force smoking to 0 (prevent smoking)

+ smoking_strategy_0 = DoIntervention(

+ model=sem_model.parametric_cpds,

+ constants=0.0

+ )

+

+ with intervention(

+ policies=UniformPolicy(out_features=1),

+ strategies=smoking_strategy_0,

+ target_concepts=["smoking"]

+ ):

+ intervened_results_0 = inference_engine.query(

+ query_concepts=["genotype", "smoking", "tar", "cancer"],

+ evidence=initial_input

+ )

+ cancer_do_smoking_0 = intervened_results_0[:, 3]

+

+ # Intervention 2: Force smoking to 1 (promote smoking)

+ smoking_strategy_1 = DoIntervention(

+ model=sem_model.parametric_cpds,

+ constants=1.0

+ )

+

+ with intervention(

+ policies=UniformPolicy(out_features=1),

+ strategies=smoking_strategy_1,

+ target_concepts=["smoking"]

+ ):

+ intervened_results_1 = inference_engine.query(

+ query_concepts=["genotype", "smoking", "tar", "cancer"],

+ evidence=initial_input

+ )

+ cancer_do_smoking_1 = intervened_results_1[:, 3]

+

+

+.. dropdown:: Causal Effect Estimation

+ :icon: beaker

+

+ Calculate the Average Causal Effect (ACE) using the interventional distributions obtained from the do-interventions:

+

+ .. code-block:: python

+

+ # Compute ACE of smoking on cancer

+ ace_cancer_do_smoking = cace_score(cancer_do_smoking_0, cancer_do_smoking_1)

+ print(f"ACE of smoking on cancer: {ace_cancer_do_smoking:.3f}")

+

+ This represents the causal effect of smoking on cancer, accounting for the full causal structure.

+

+

+Next Steps

+----------

+

+- Explore the full :doc:`Mid-Level API documentation `

+- Compare with :doc:`Probabilistic Models ` for standard probabilistic inference

+- Try the :doc:`High-Level API ` for out-of-the-box models

diff --git a/doc/guides/using_mid_level_proba.rst b/doc/guides/using_mid_level_proba.rst

new file mode 100644

index 0000000..4896130

--- /dev/null

+++ b/doc/guides/using_mid_level_proba.rst

@@ -0,0 +1,278 @@

+Interpretable Probabilistic Models

+=====================================

+

+

+.. |pyc_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/pyc.svg

+ :width: 20px

+ :align: middle

+

+.. |pytorch_logo| image:: https://raw.githubusercontent.com/pyc-team/pytorch_concepts/refs/heads/master/doc/_static/img/logos/pytorch.svg

+ :width: 20px

+ :align: middle

+

+

+|pyc_logo| PyC can be used to build interpretable concept-based probabilisitc models.

+

+.. warning::

+

+ This API is still under development and interfaces might change in future releases.

+

+

+

+Design principles

+-----------------

+

+Probabilistic Models

+^^^^^^^^^^^^^^^^^^^^

+

+At this API level, models are represented as probabilistic models where:

+

+- ``Variable`` objects represent random variables in the probabilistic model. Variables are defined by their name, parents, and distribution type. For instance we can define a list of three concepts as:

+

+ .. code-block:: python

+

+ concepts = pyc.EndogenousVariable(

+ concepts=["c1", "c2", "c3"],

+ parents=[],

+ distribution=torch.distributions.RelaxedBernoulli

+ )

+

+- ``ParametricCPD`` objects represent conditional probability distributions (CPDs) between variables in the probabilistic model and are parameterized by |pyc_logo| PyC layers. For instance we can define a list of three parametric CPDs for the above concepts as:

+

+ .. code-block:: python

+

+ concept_cpd = pyc.nn.ParametricCPD(

+ concepts=["c1", "c2", "c3"],

+ parametrization=pyc.nn.LinearZC(in_features=10, out_features=3)

+ )

+

+- ``ProbabilisticModel`` objects are a collection of variables and CPDs. For instance we can define a model as:

+

+ .. code-block:: python

+

+ probabilistic_model = pyc.nn.ProbabilisticModel(

+ variables=concepts,

+ parametric_cpds=concept_cpd

+ )

+

+Inference

+^^^^^^^^^

+

+Inference is performed using efficient tensorial probabilistic inference algorithms. For instance, we can perform ancestral sampling as:

+

+.. code-block:: python

+

+ inference_engine = pyc.nn.AncestralSamplingInference(

+ probabilistic_model=probabilistic_model,

+ graph_learner=wanda,

+ temperature=1.

+ )

+ predictions = inference_engine.query(["c1"], evidence={'input': x})

+

+

+Detailed Guides

+------------------------------

+

+

+.. dropdown:: Interpretable Probabilistic Models

+ :icon: package

+

+ **Import Libraries**

+

+ Start by importing |pyc_logo| PyC and |pytorch_logo| PyTorch:

+

+ .. code-block:: python

+

+ import torch

+ import torch_concepts as pyc

+

+ **Create Sample Data**

+

+ .. code-block:: python

+

+ batch_size = 16

+ input_dim = 64

+

+ x = torch.randn(batch_size, input_dim)

+

+ **Define Variables and Graph Structure**

+

+ Variables represent random variables in the probabilistic model.

+ To define a variable, specify its name, parents, and distribution type.

+ By specifying parents, we define the graph structure of the model.

+

+ .. code-block:: python

+

+ # Define input variable

+ input_var = pyc.InputVariable(

+ concepts=["input"],

+ parents=[],

+ )

+

+ # Define concept variables

+ concepts = pyc.EndogenousVariable(

+ concepts=["round", "smooth", "bright"],

+ parents=["input"],

+ distribution=torch.distributions.RelaxedBernoulli

+ )

+

+ # Define task variables

+ tasks = pyc.EndogenousVariable(

+ concepts=["class_A", "class_B"],

+ parents=["round", "smooth", "bright"],

+ distribution=torch.distributions.RelaxedBernoulli

+ )

+

+ **Define ParametricCPDs**

+

+ ParametricCPDs are conditional probability distributions parameterized by |pyc_logo| PyC or |pytorch_logo| PyTorch layers.

+ Define a ParametricCPD for each variable based on its parents.

+

+ .. code-block:: python

+

+ # ParametricCPD for input (no parents)

+ input_factor = pyc.nn.ParametricCPD(

+ concepts=["input"],

+ parametrization=torch.nn.Identity()

+ )

+

+ # ParametricCPD for concepts (from input)

+ concept_cpd = pyc.nn.ParametricCPD(

+ concepts=["round", "smooth", "bright"],

+ parametrization=pyc.nn.LinearZC(

+ in_features=input_dim,

+ out_features=1

+ )

+ )

+

+ # ParametricCPD for tasks (from concepts)

+ task_cpd = pyc.nn.ParametricCPD(

+ concepts=["class_A", "class_B"],

+ parametrization=pyc.nn.LinearCC(

+ in_features_endogenous=3,

+ out_features=1

+ )

+ )

+

+ **Build Concept-based Probabilistic Model**

+

+ A concept-based probabilistic model is defined by collecting all variables and their corresponding ParametricCPDs.

+

+ .. code-block:: python

+

+ # Create the probabilistic model

+ prob_model = pyc.nn.ProbabilisticModel(

+ variables=[input_var, *concepts, *tasks],

+ parametric_cpds=[input_factor, *concept_cpd, *task_cpd]

+ )

+

+

+.. dropdown:: Probabilistic Inference

+ :icon: rocket

+

+ **Deterministic Inference**

+

+ We can perform deterministic inference by querying the model for concept and task predictions given input evidence:

+

+ .. code-block:: python

+

+ # Create inference engine

+ inference_engine = pyc.nn.DeterministicInference(

+ probabilistic_model=prob_model,

+ )

+

+ # Query concept predictions

+ concept_predictions = inference_engine.query(

+ query_concepts=["round", "smooth", "bright"],

+ evidence={'input': x}

+ )

+

+ # Query task predictions given concepts

+ task_predictions = inference_engine.query(

+ query_concepts=["class_A", "class_B"],

+ evidence={

+ 'input': x,

+ 'round': concept_predictions[:, 0],

+ 'smooth': concept_predictions[:, 1],

+ 'bright': concept_predictions[:, 2]

+ }

+ )

+

+ print(f"Concept predictions: {concept_predictions}")

+ print(f"Task predictions: {task_predictions}")

+

+

+ **Ancestral Sampling**

+

+ While deterministic inference is the standard approach in deep learning, |pyc_logo| PyC also supports probabilistic inference methods.

+ For instance, we can perform ancestral sampling to obtain predictions by sampling from each variable's distribution:

+

+ .. code-block:: python

+

+ # Create inference engine

+ inference_engine = pyc.nn.AncestralSamplingInference(

+ probabilistic_model=prob_model,

+ temperature=1.0

+ )

+

+ # Query concept predictions

+ concept_predictions = inference_engine.query(

+ query_concepts=["round", "smooth", "bright"],

+ evidence={'input': x}

+ )

+

+ # Query task predictions given concepts

+ task_predictions = inference_engine.query(

+ query_concepts=["class_A", "class_B"],

+ evidence={

+ 'input': x,

+ 'round': concept_predictions[:, 0],

+ 'smooth': concept_predictions[:, 1],

+ 'bright': concept_predictions[:, 2]

+ }

+ )

+

+ print(f"Concept predictions: {concept_predictions}")

+ print(f"Task predictions: {task_predictions}")

+

+

+.. dropdown:: Interventions

+ :icon: tools

+

+ We can perform interventions on specific concepts to observe their effects on other variables, similarly to how

+ interventions are performed using low-level APIs.

+

+ .. code-block:: python

+

+ from torch_concepts.nn import DoIntervention, UniformPolicy

+ from torch_concepts.nn import intervention

+

+ strategy = DoIntervention(model=prob_model.parametric_cpds, constants=100.0)

+ policy = UniformPolicy(out_features=prob_model.concept_to_variable["round"].size)

+

+ original_predictions = inference_engine.query(

+ query_concepts=["round", "smooth", "bright", "class_A", "class_B"],

+ evidence={'input': x}

+ )

+

+ # Apply intervention to encoder

+ with intervention(

+ policies=policy,

+ strategies=strategy,

+ target_concepts=["round", "smooth"]