diff --git a/examples/demo-apps/android/LlamaDemo/README.md b/examples/demo-apps/android/LlamaDemo/README.md

index 41b030cef06..0cb9b7f0ba9 100644

--- a/examples/demo-apps/android/LlamaDemo/README.md

+++ b/examples/demo-apps/android/LlamaDemo/README.md

@@ -46,7 +46,7 @@ Below are the UI features for the app.

Select the settings widget to get started with picking a model, its parameters and any prompts.

- +

+

@@ -55,7 +55,7 @@ Select the settings widget to get started with picking a model, its parameters a

Once you've selected the model, tokenizer, and model type you are ready to click on "Load Model" to have the app load the model and go back to the main Chat activity.

-  +

+

@@ -87,12 +87,12 @@ int loadResult = mModule.load();

### User Prompt

Once model is successfully loaded then enter any prompt and click the send (i.e. generate) button to send it to the model.

- +

+

You can provide it more follow-up questions as well.

- +

+

> [!TIP]

@@ -109,14 +109,14 @@ mModule.generate(prompt,sequence_length, MainActivity.this);

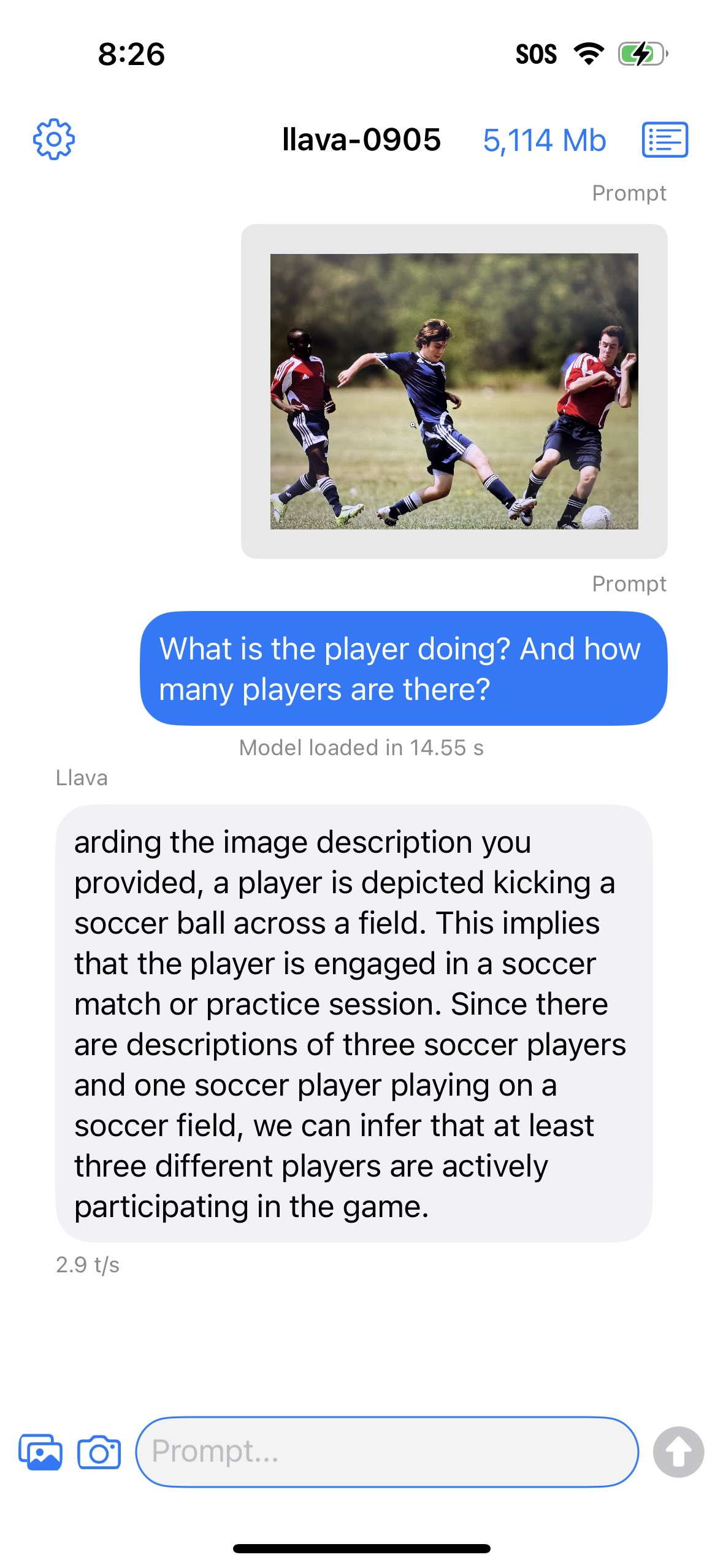

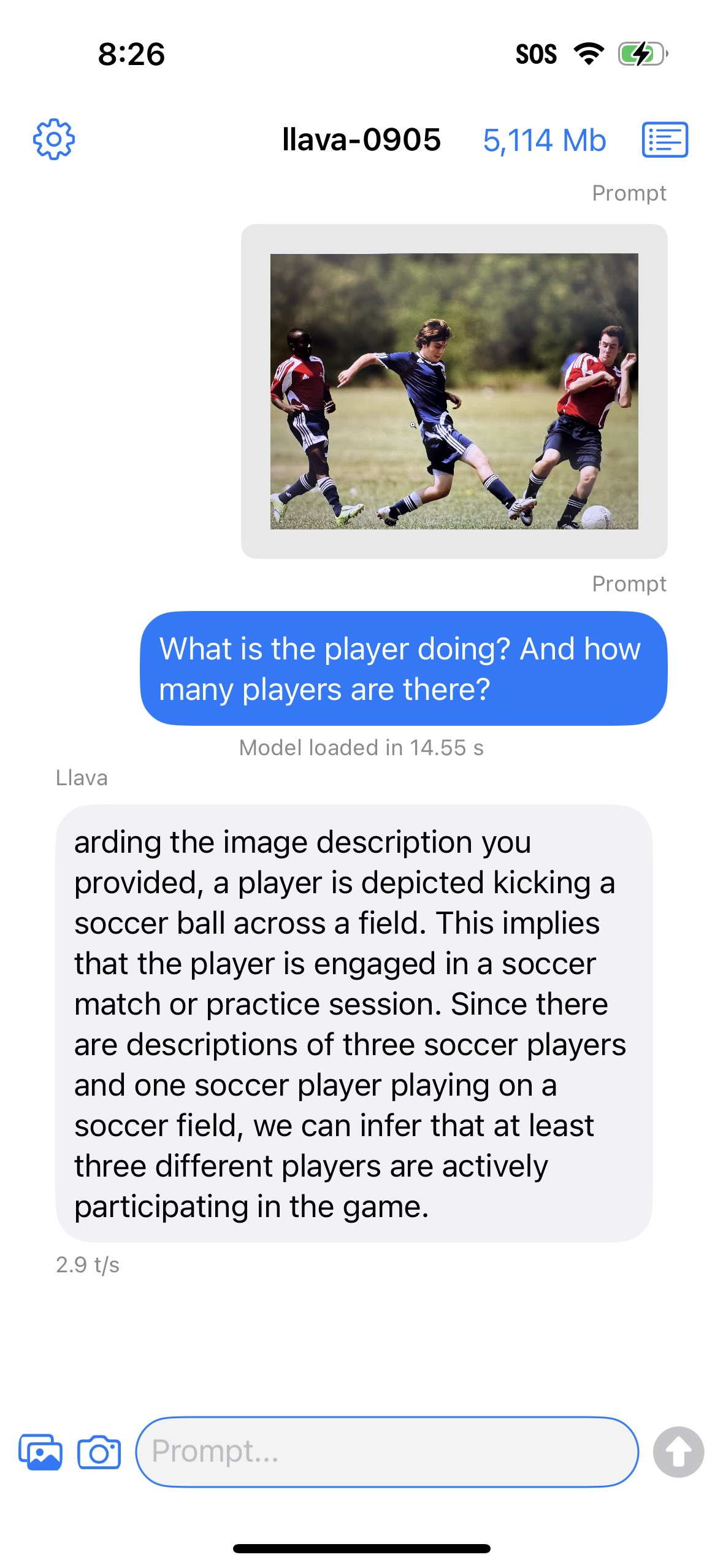

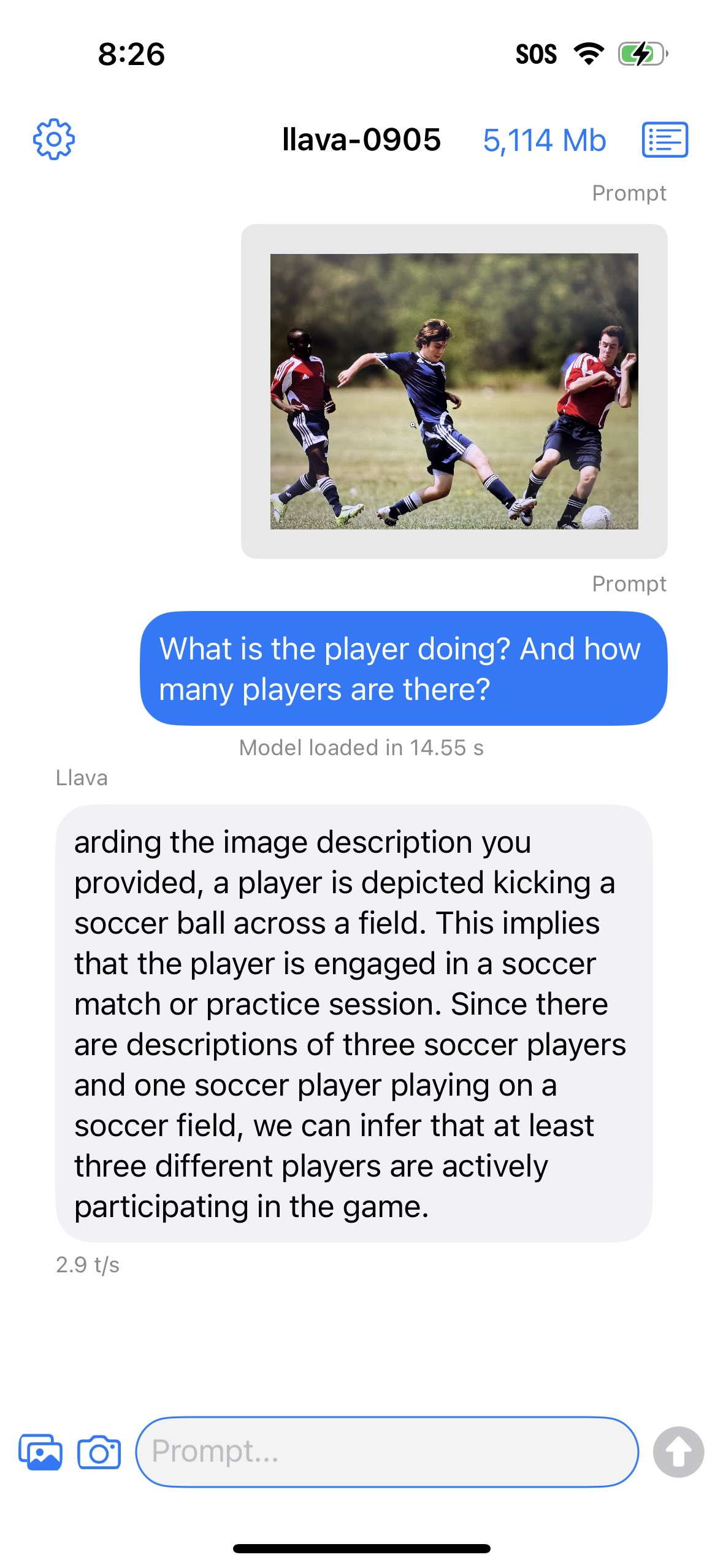

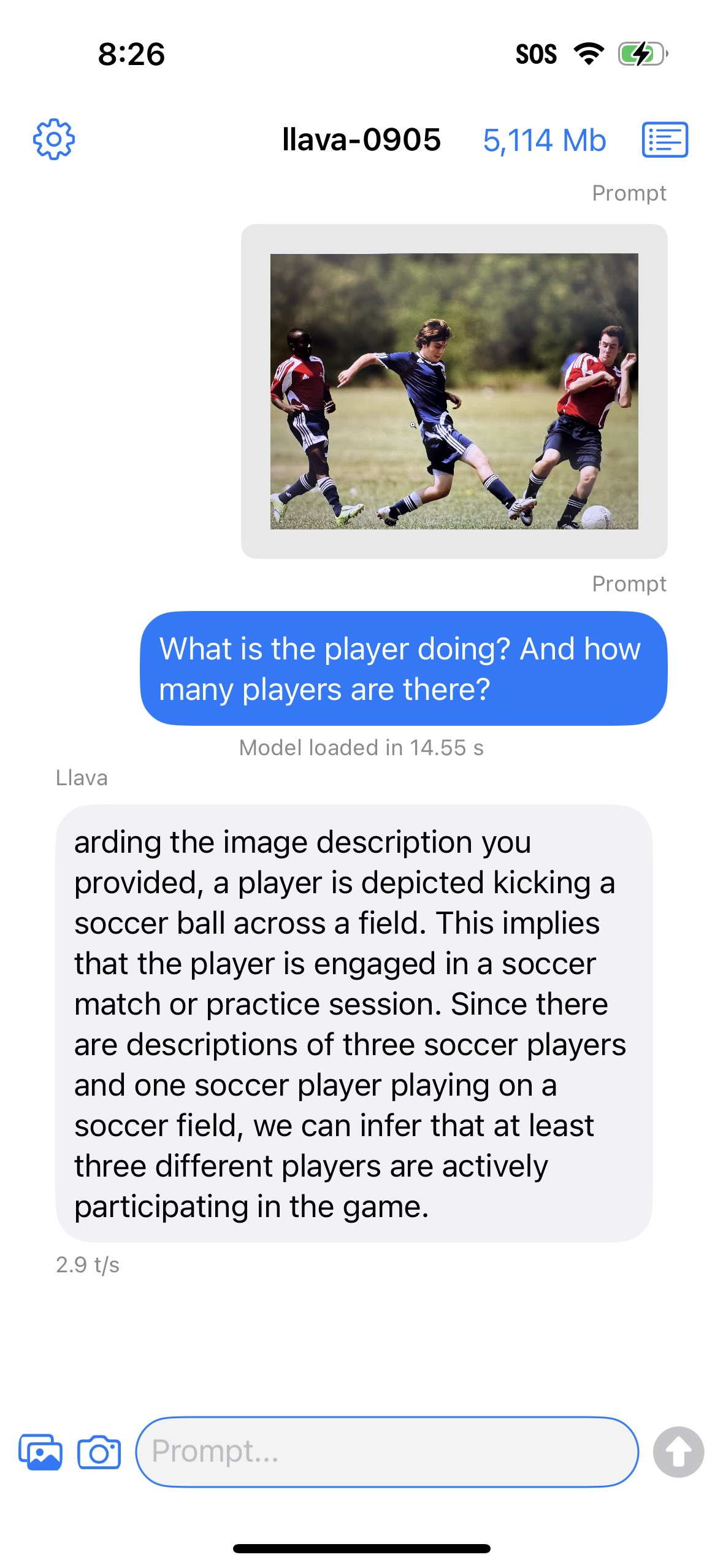

For LLaVA-1.5 implementation, select the exported LLaVA .pte and tokenizer file in the Settings menu and load the model. After this you can send an image from your gallery or take a live picture along with a text prompt to the model.

- +

+

### Output Generated

To show completion of the follow-up question, here is the complete detailed response from the model.

- +

+

> [!TIP]

diff --git a/examples/demo-apps/android/LlamaDemo/docs/delegates/mediatek_README.md b/examples/demo-apps/android/LlamaDemo/docs/delegates/mediatek_README.md

index 8010d79fc70..24be86f07a5 100644

--- a/examples/demo-apps/android/LlamaDemo/docs/delegates/mediatek_README.md

+++ b/examples/demo-apps/android/LlamaDemo/docs/delegates/mediatek_README.md

@@ -112,7 +112,7 @@ Before continuing forward, make sure to modify the tokenizer, token embedding, a

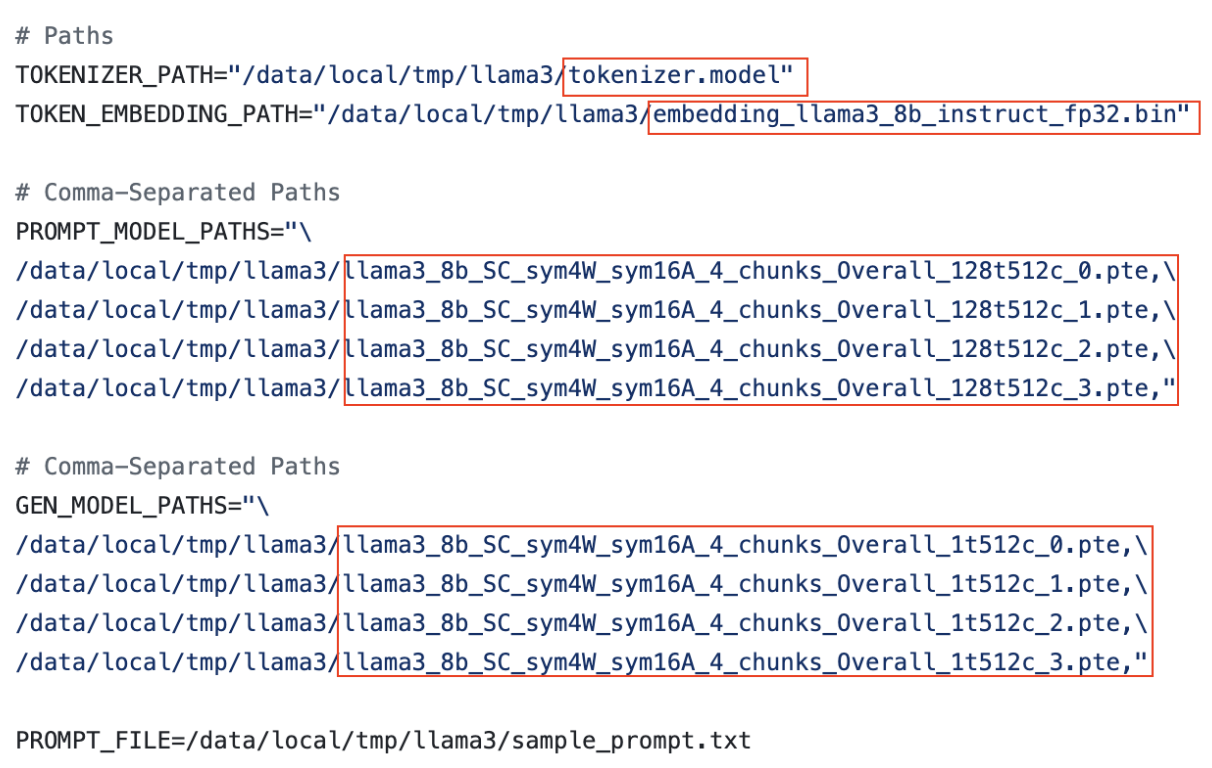

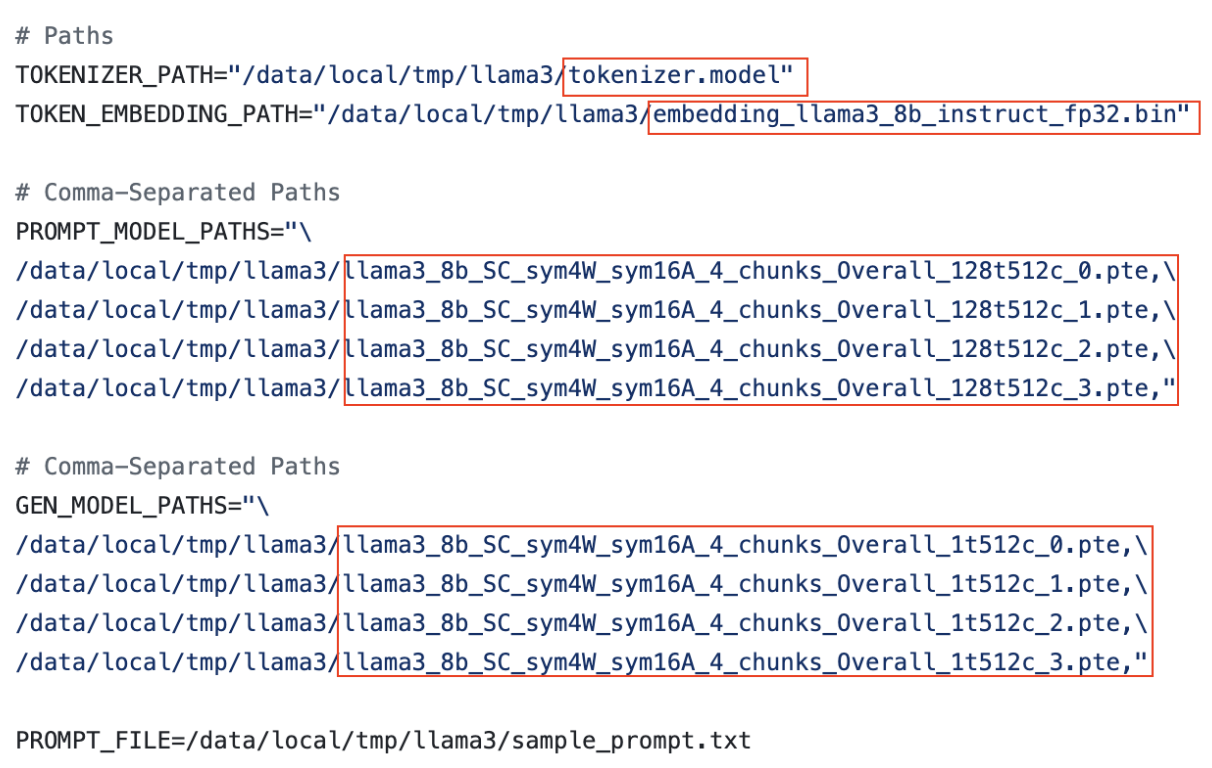

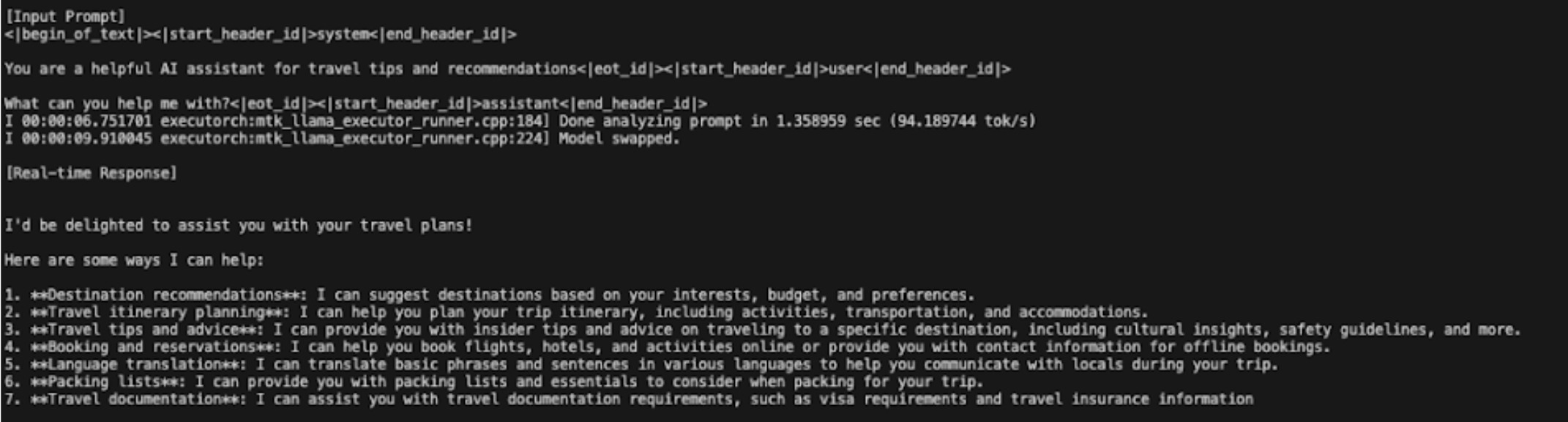

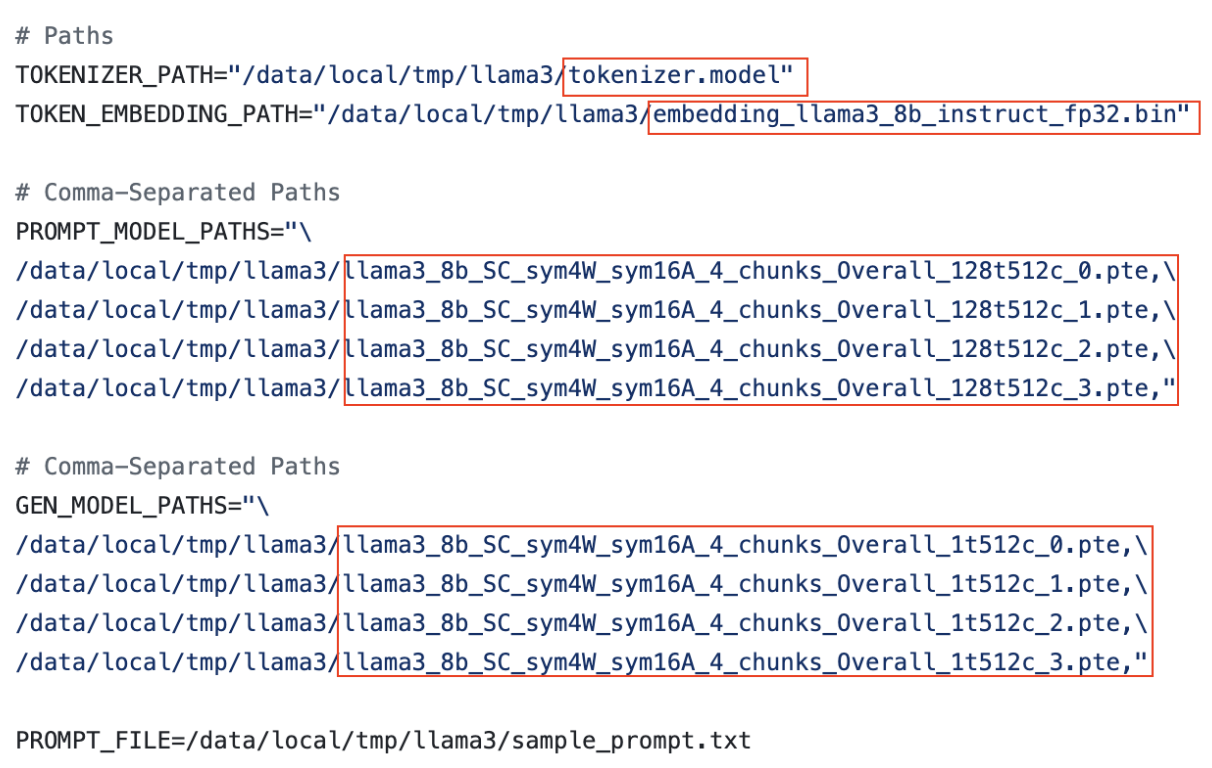

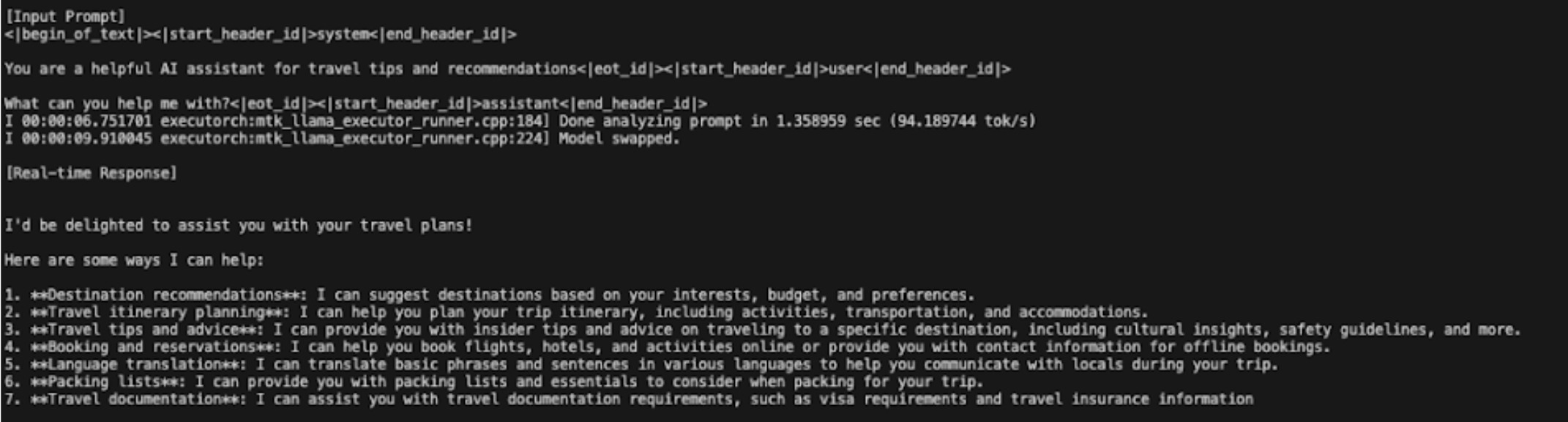

Prior to deploying the files on device, make sure to modify the tokenizer, token embedding, and model file names in examples/mediatek/executor_runner/run_llama3_sample.sh reflect what was generated during the Export Llama Model step.

- +

+

In addition, create a sample_prompt.txt file with a prompt. This will be deployed to the device in the next step.

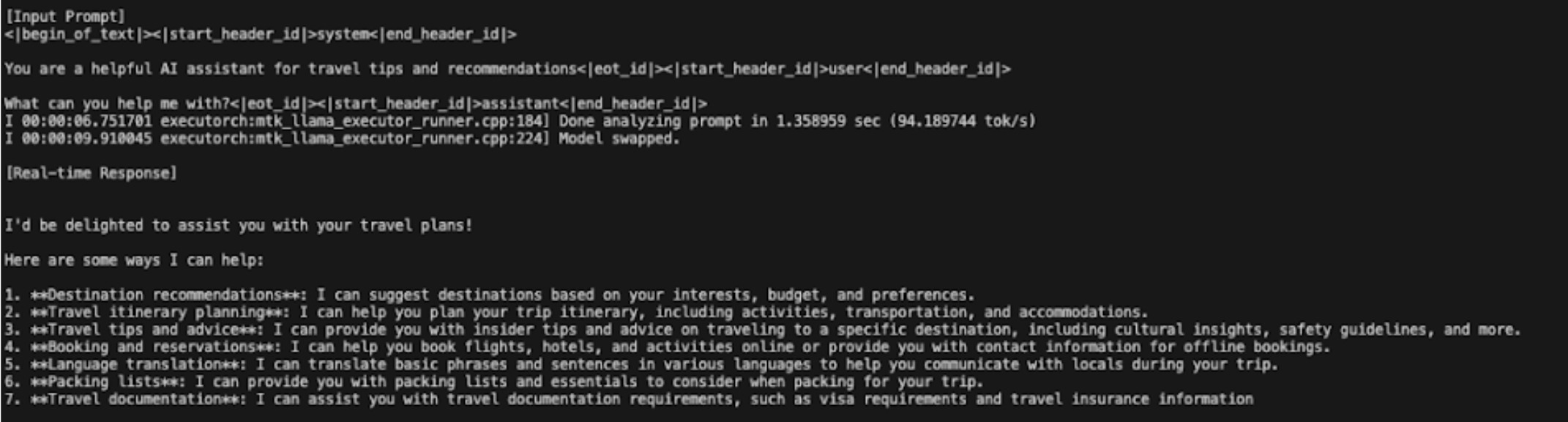

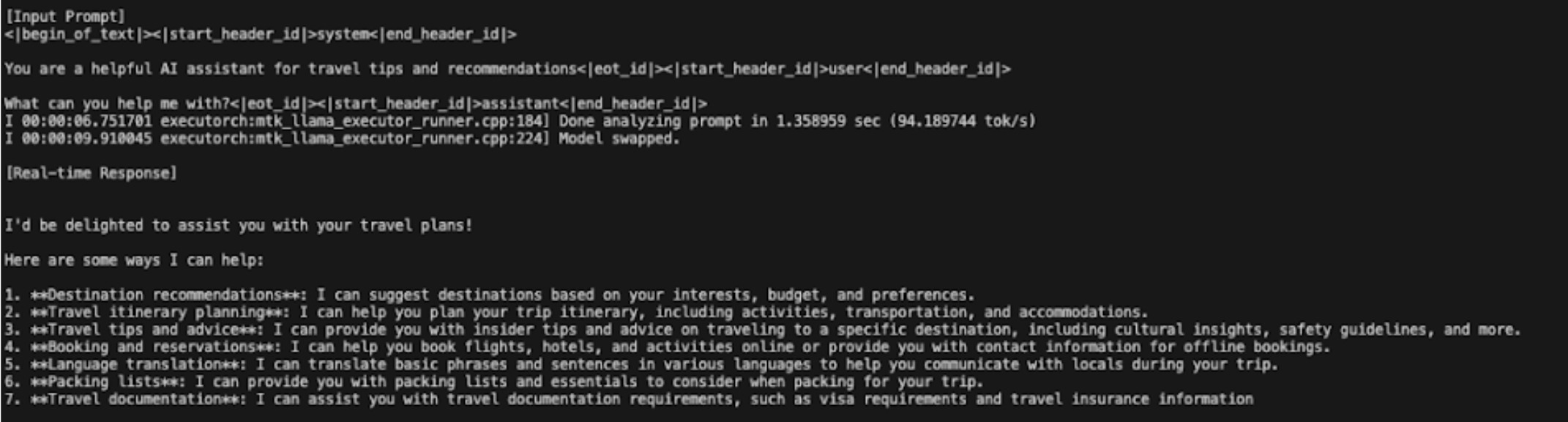

@@ -150,7 +150,7 @@ adb shell

```

- +

+

## Reporting Issues

diff --git a/examples/demo-apps/android/LlamaDemo/docs/delegates/qualcomm_README.md b/examples/demo-apps/android/LlamaDemo/docs/delegates/qualcomm_README.md

index ca628c1f8c7..304cfc5d39b 100644

--- a/examples/demo-apps/android/LlamaDemo/docs/delegates/qualcomm_README.md

+++ b/examples/demo-apps/android/LlamaDemo/docs/delegates/qualcomm_README.md

@@ -221,7 +221,7 @@ popd

If the app successfully run on your device, you should see something like below:

- +

+

## Reporting Issues

diff --git a/examples/demo-apps/android/LlamaDemo/docs/delegates/xnnpack_README.md b/examples/demo-apps/android/LlamaDemo/docs/delegates/xnnpack_README.md

index 017f0464108..ee6f89db5b9 100644

--- a/examples/demo-apps/android/LlamaDemo/docs/delegates/xnnpack_README.md

+++ b/examples/demo-apps/android/LlamaDemo/docs/delegates/xnnpack_README.md

@@ -149,7 +149,7 @@ popd

If the app successfully run on your device, you should see something like below:

- +

+

## Reporting Issues

diff --git a/examples/demo-apps/apple_ios/LLaMA/README.md b/examples/demo-apps/apple_ios/LLaMA/README.md

index bbf8572a38a..b6644f1dfb9 100644

--- a/examples/demo-apps/apple_ios/LLaMA/README.md

+++ b/examples/demo-apps/apple_ios/LLaMA/README.md

@@ -59,11 +59,11 @@ For more details integrating and Running ExecuTorch on Apple Platforms, checkout

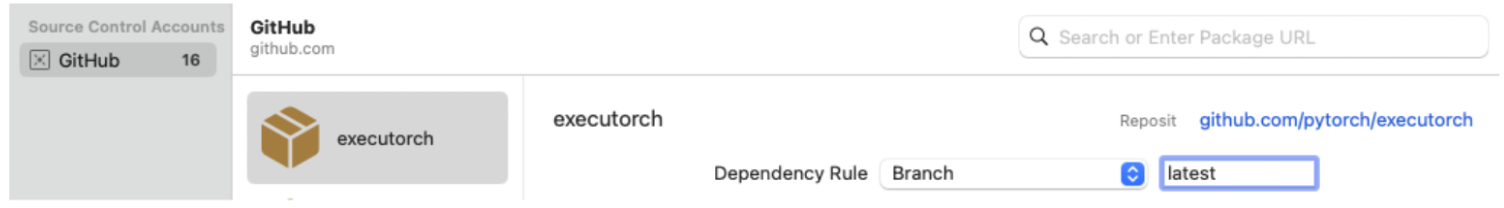

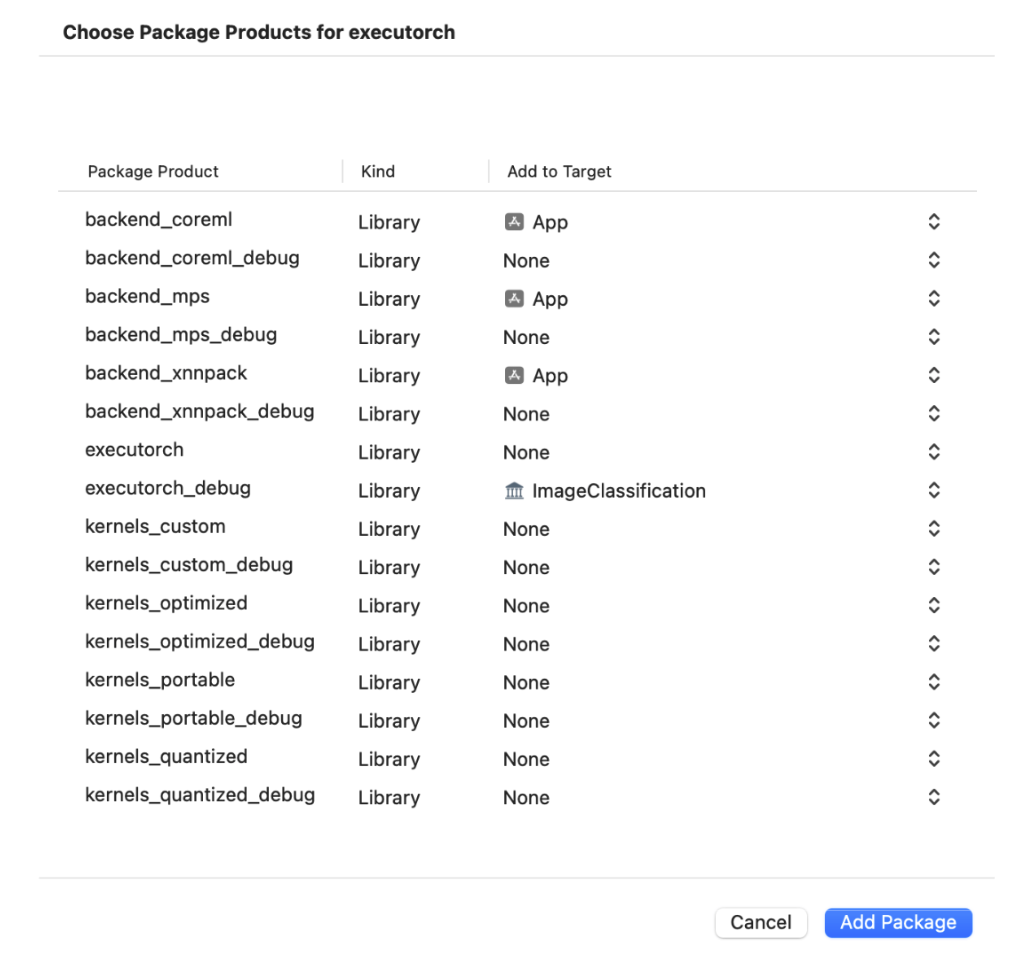

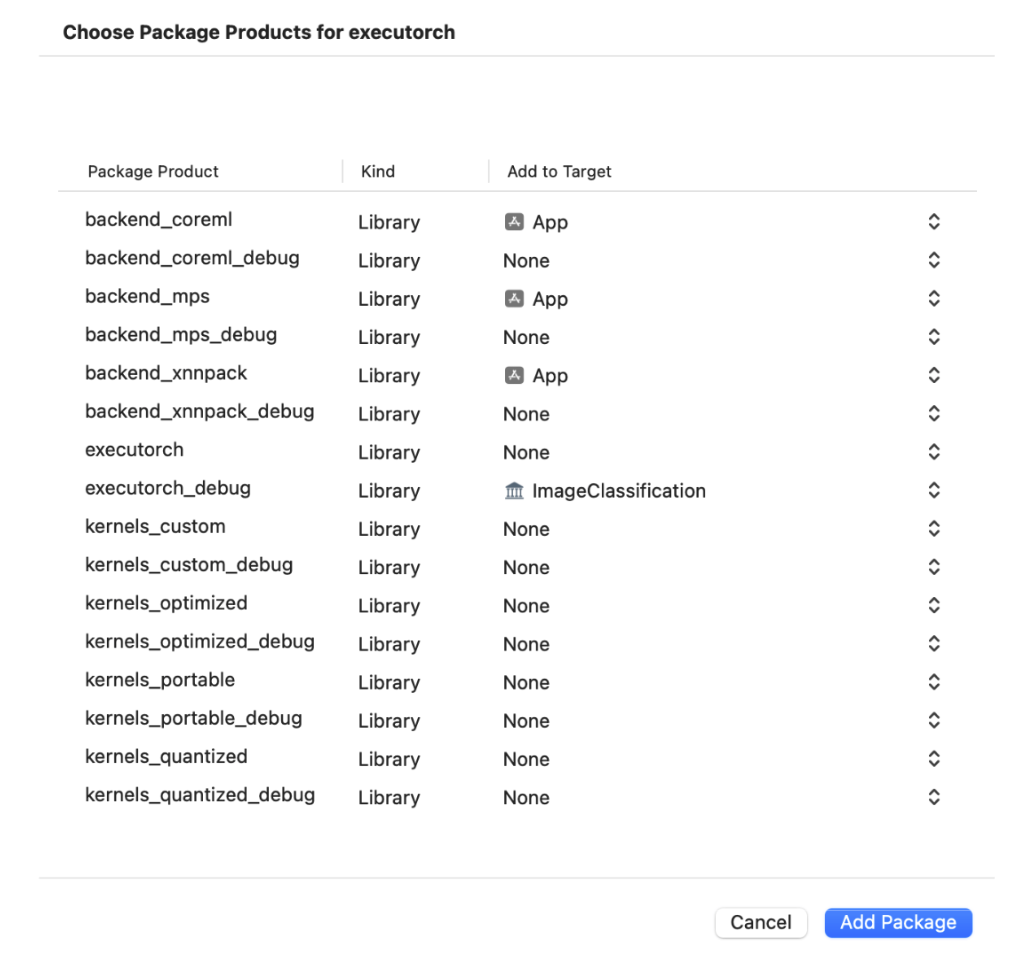

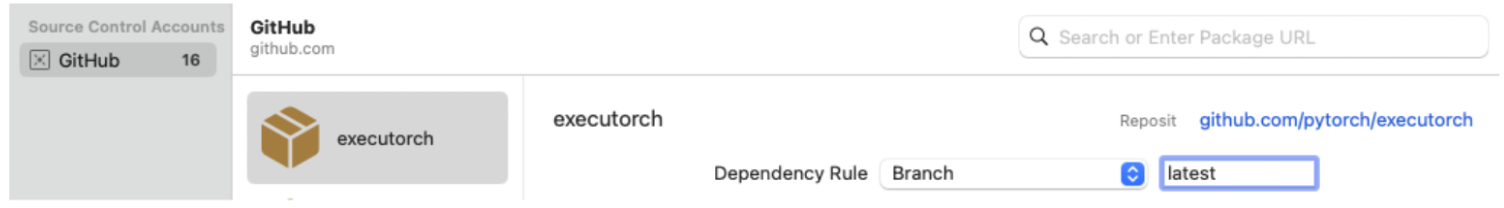

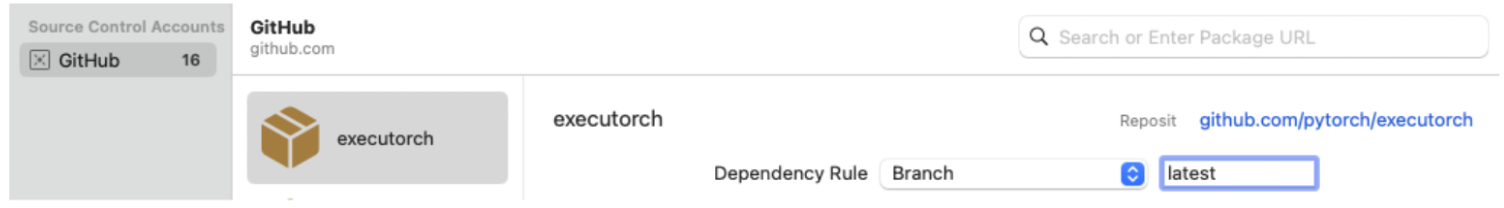

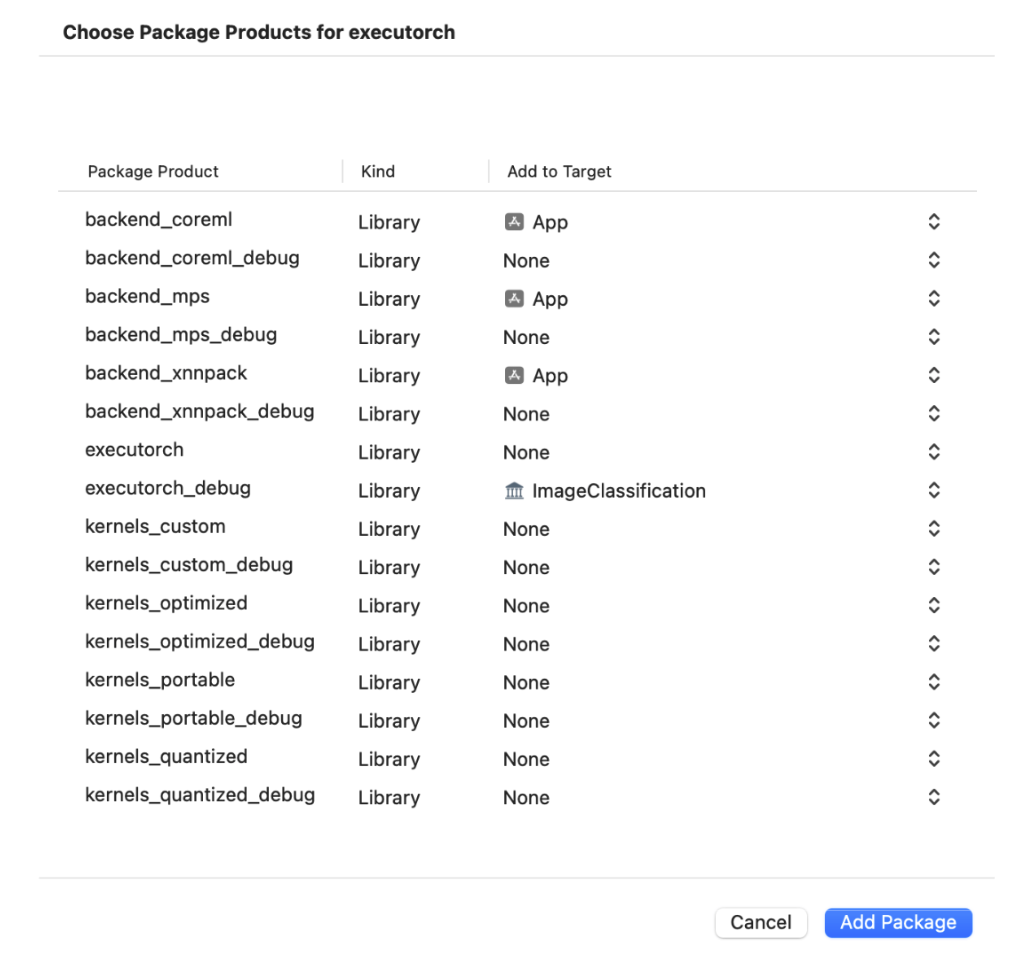

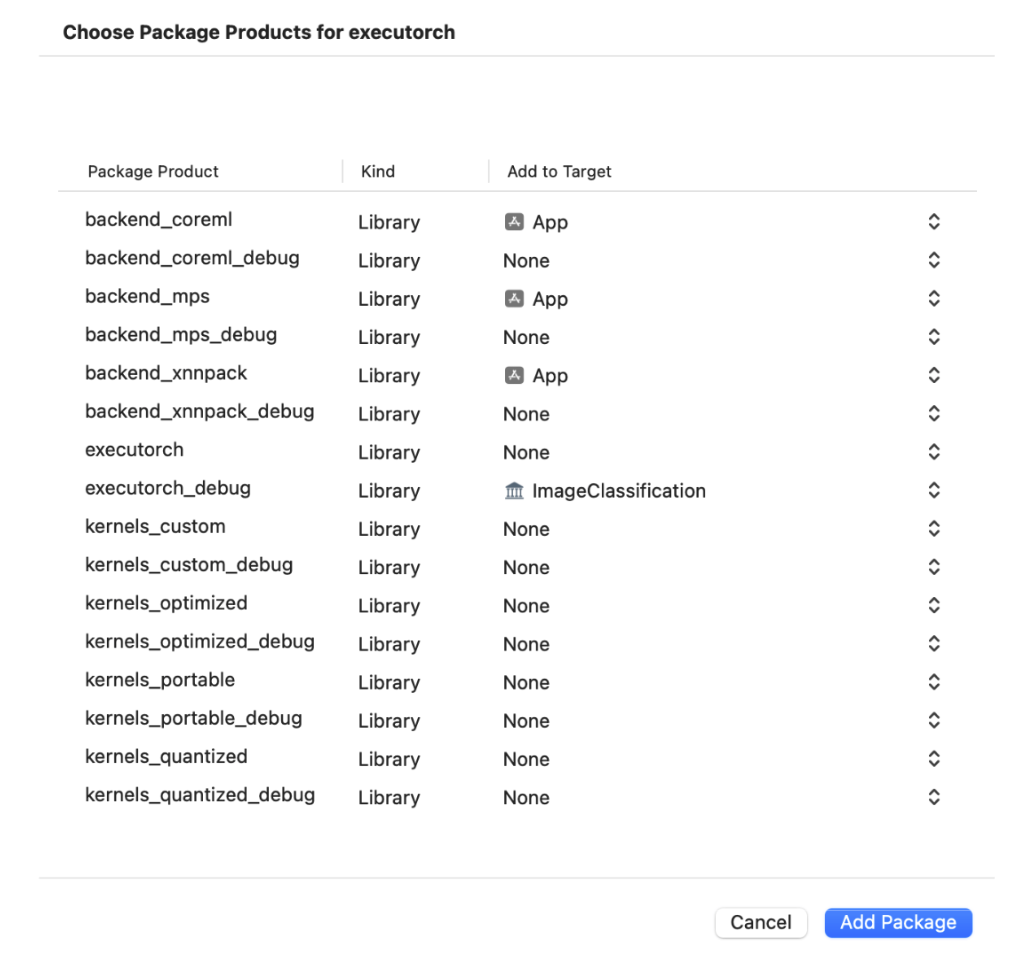

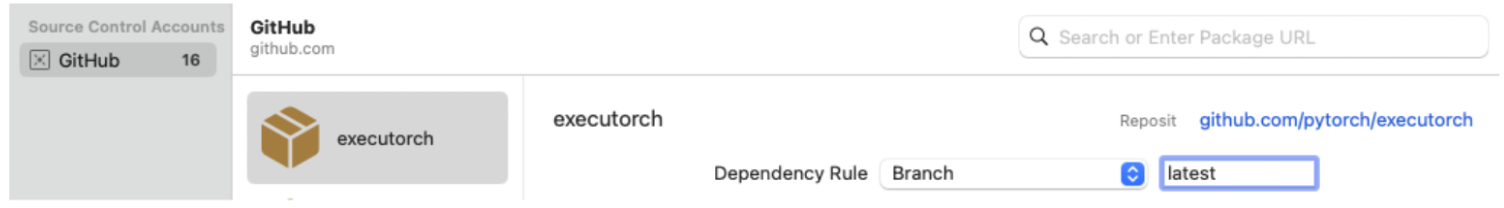

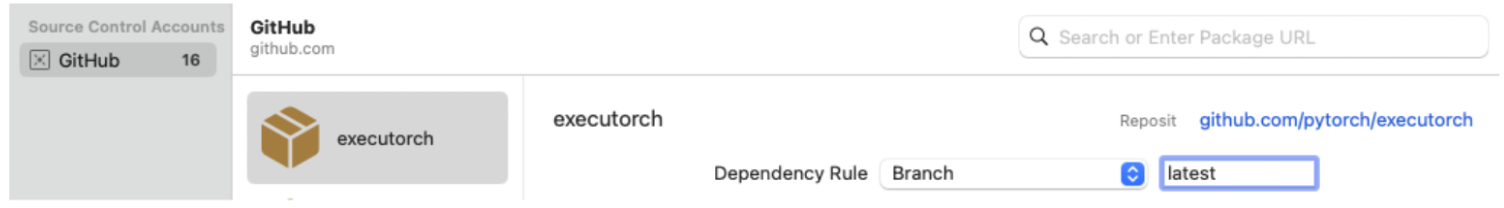

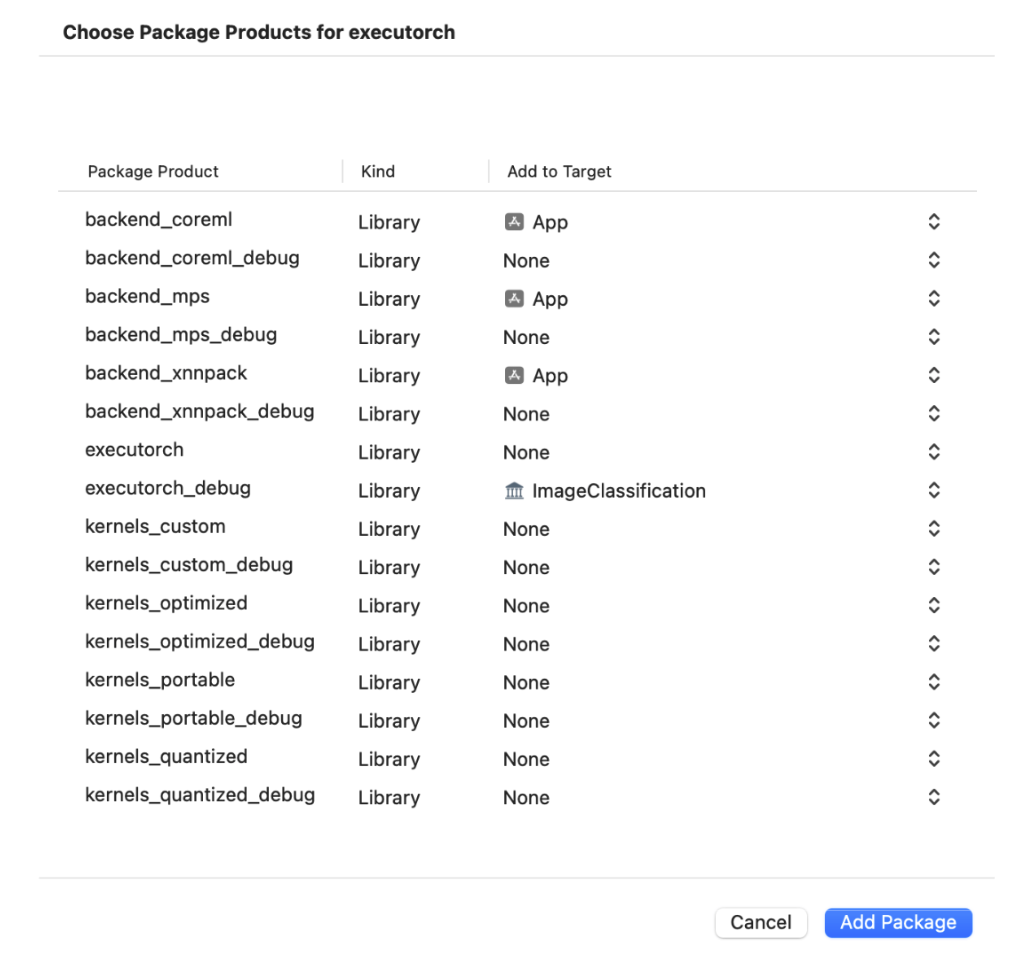

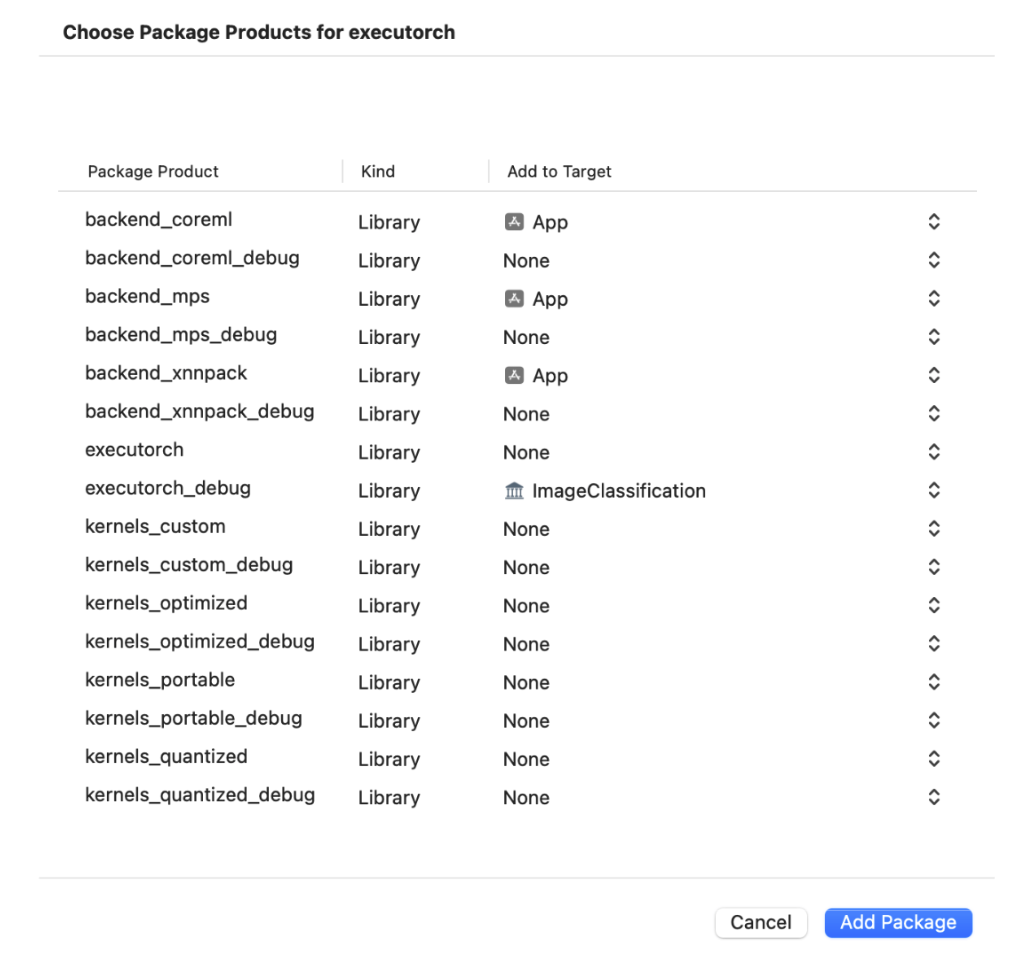

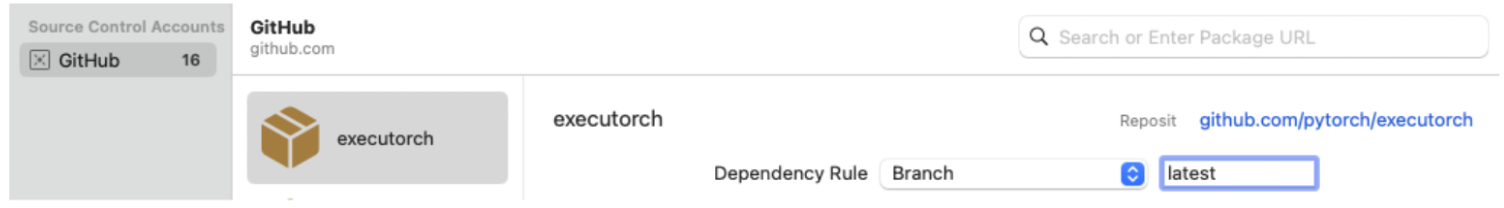

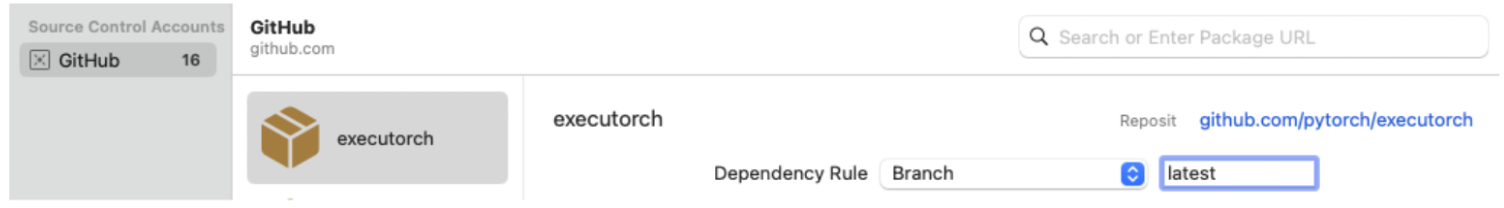

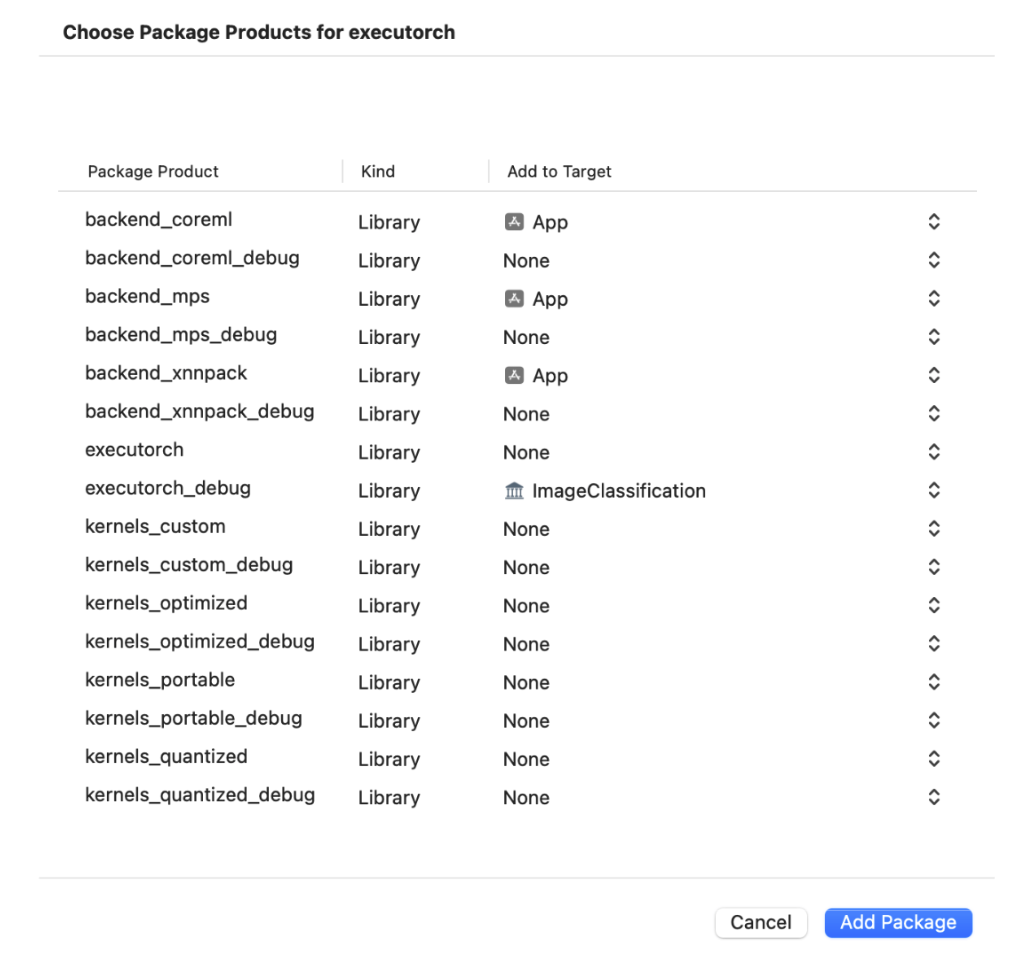

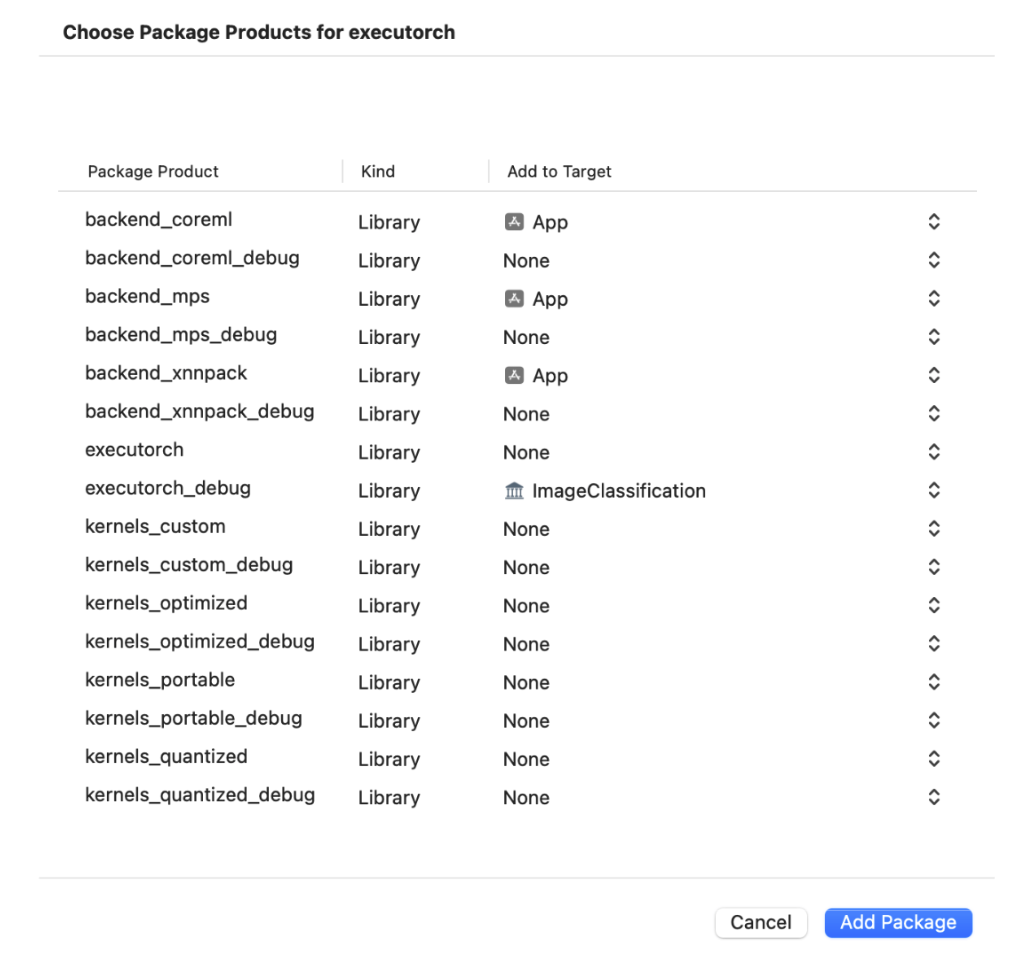

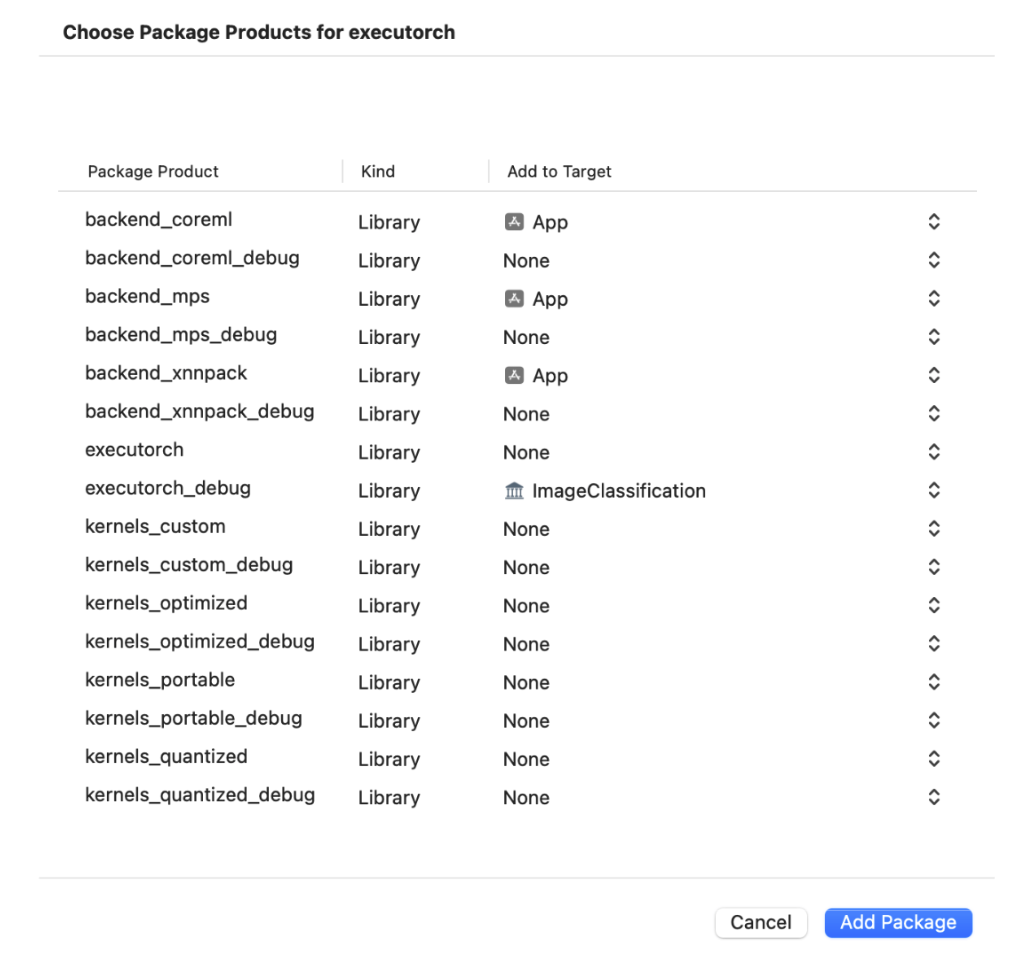

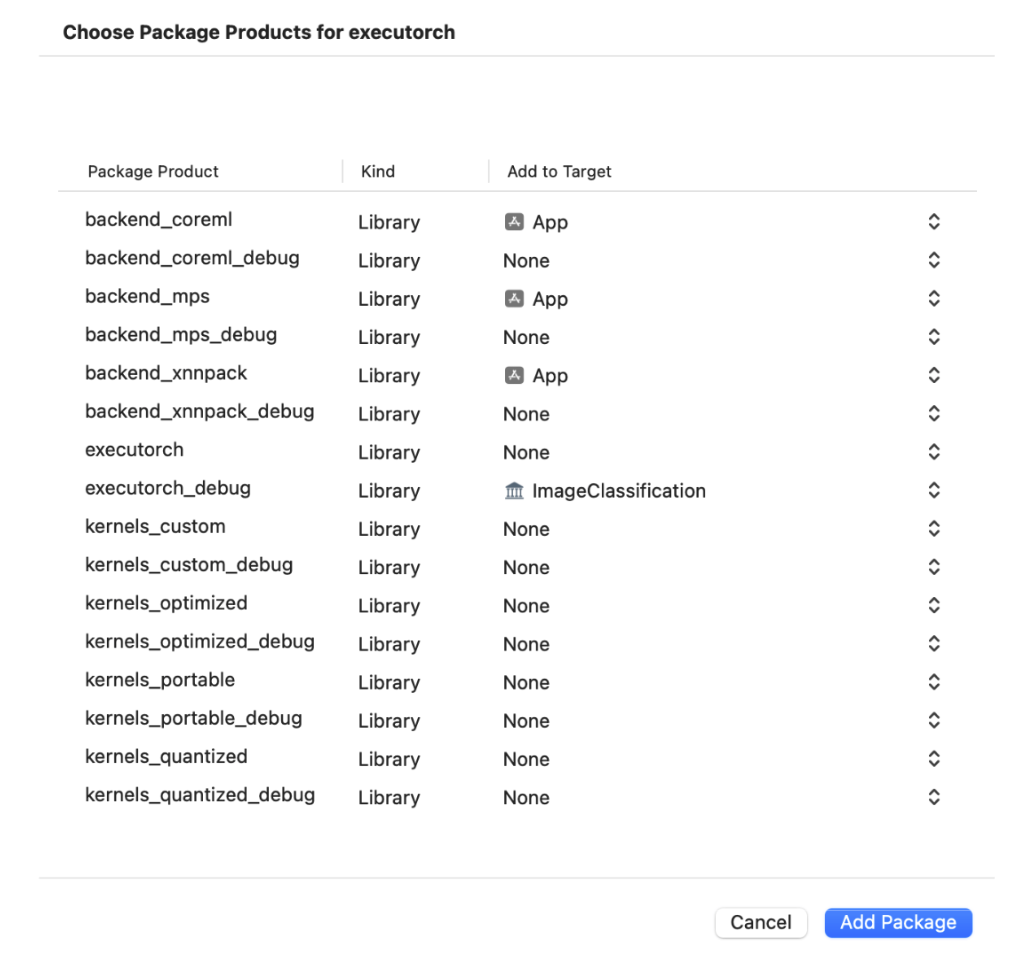

* Ensure that the ExecuTorch package dependencies are installed correctly, then select which ExecuTorch framework should link against which target.

- +

+

- +

+

* Run the app. This builds and launches the app on the phone.

@@ -83,13 +83,13 @@ For more details integrating and Running ExecuTorch on Apple Platforms, checkout

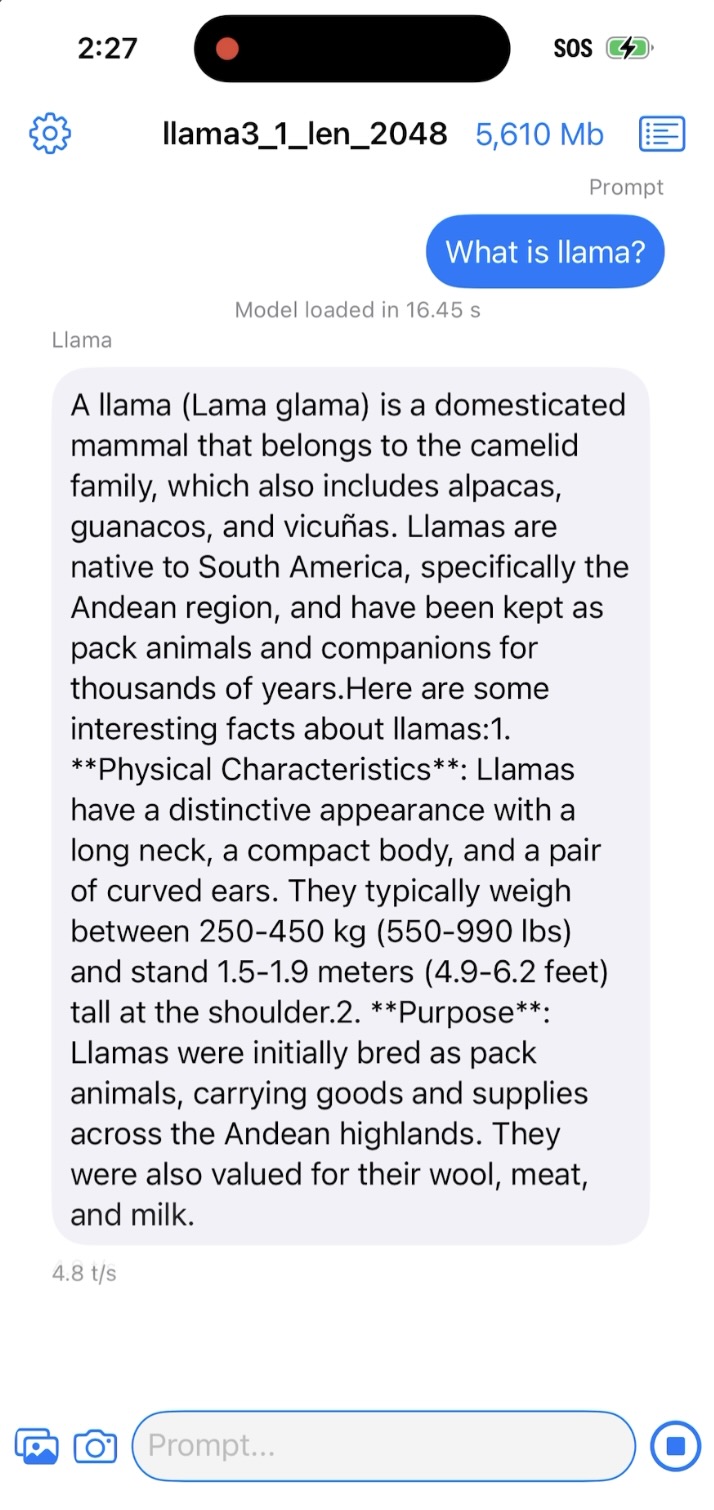

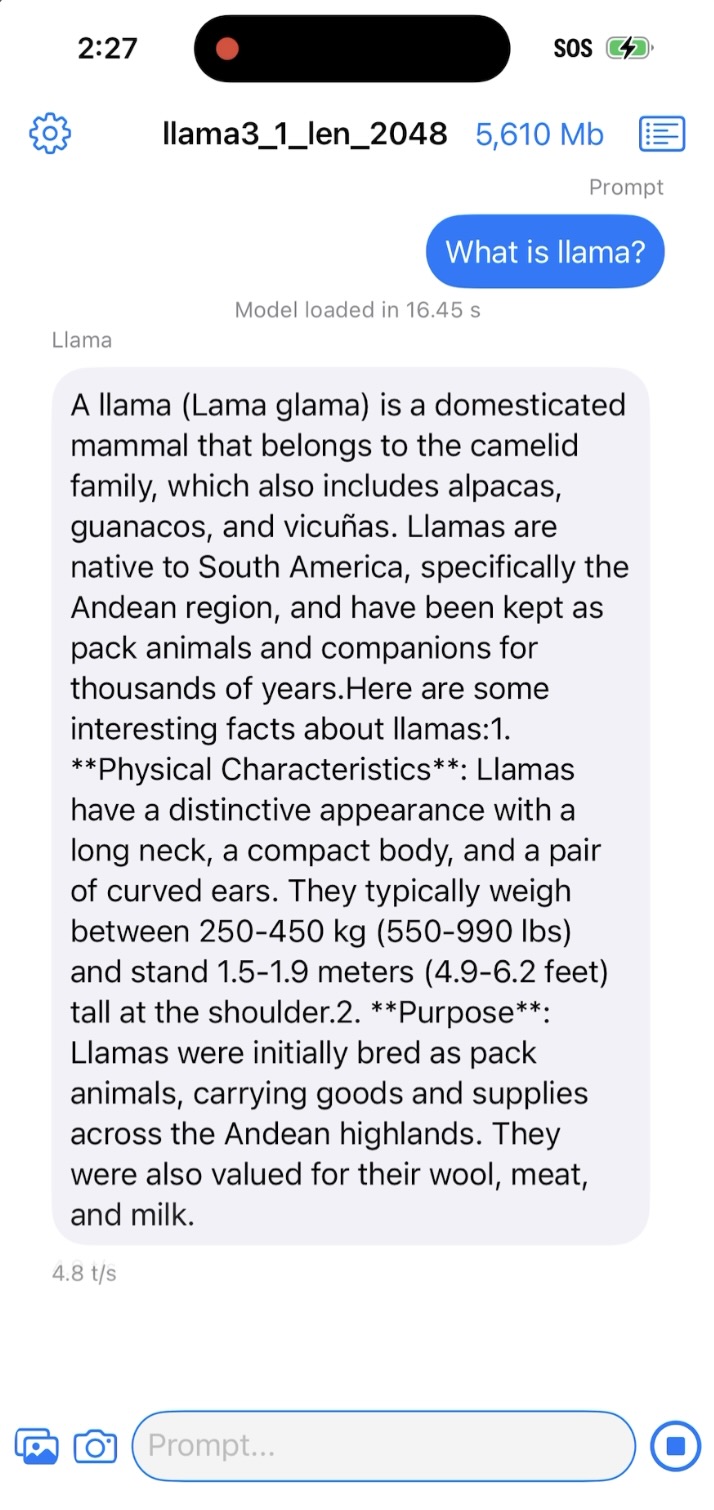

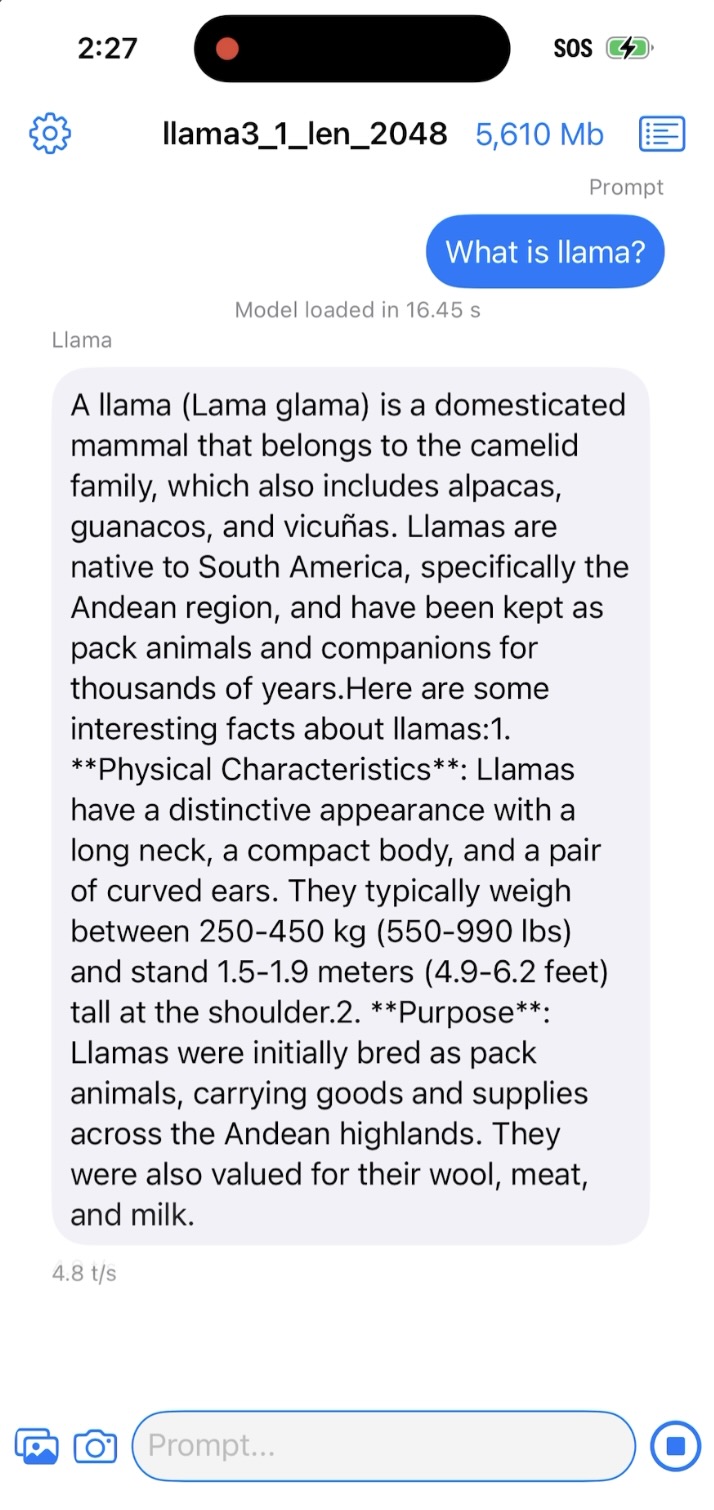

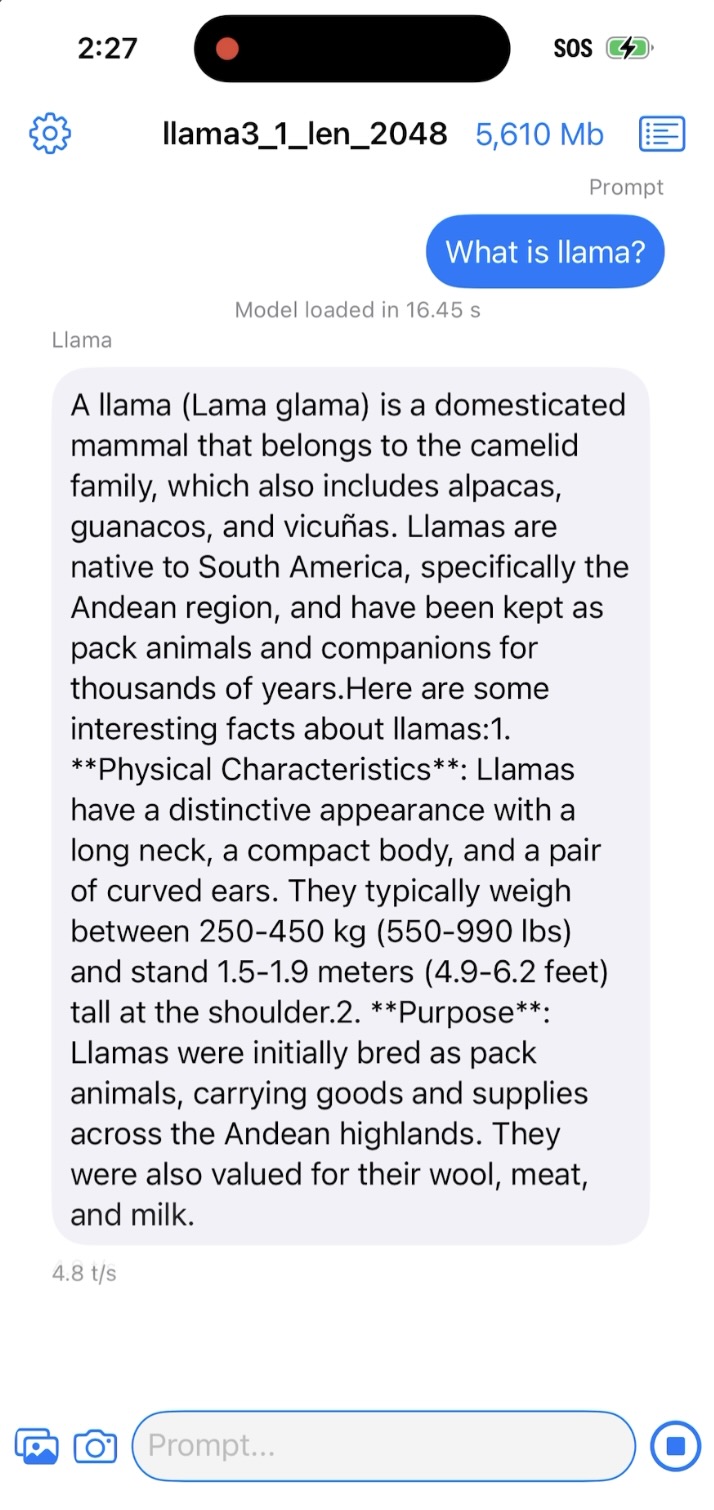

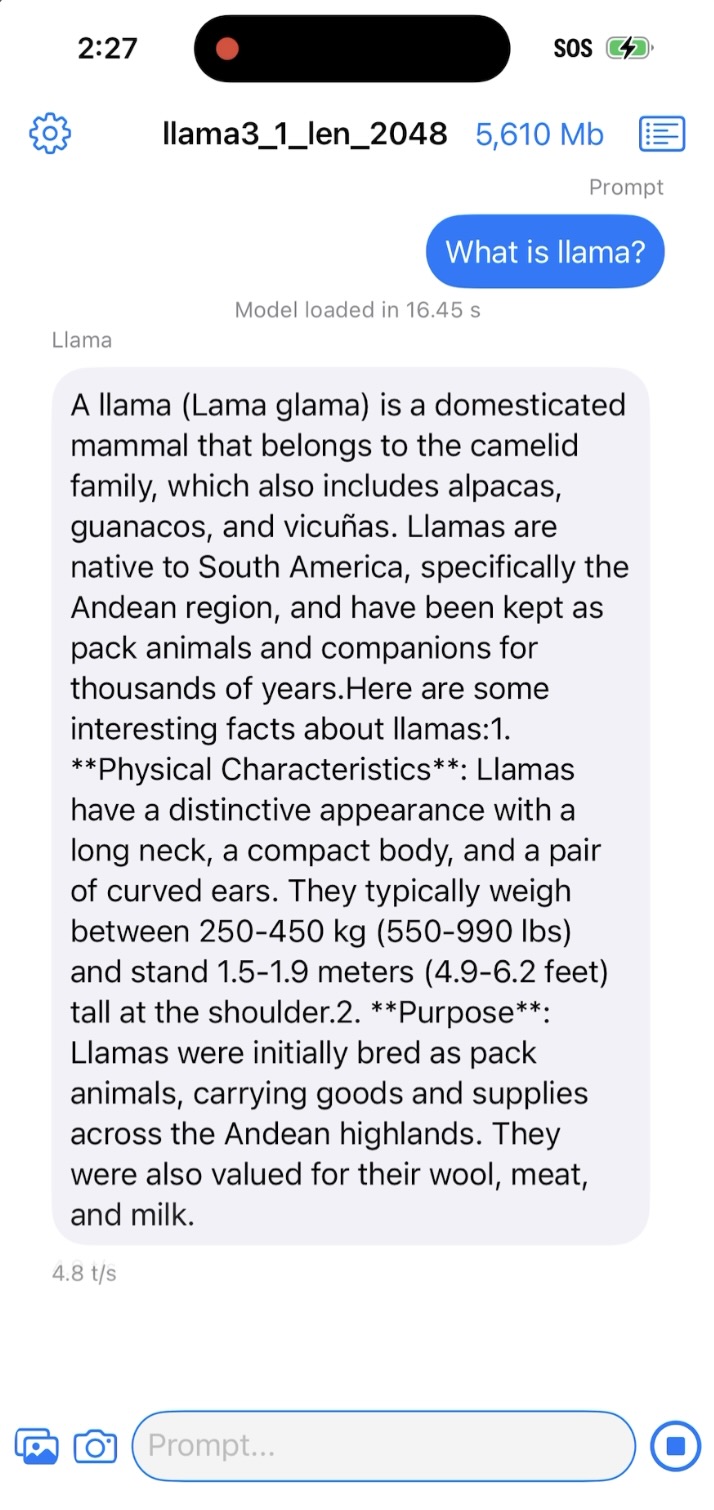

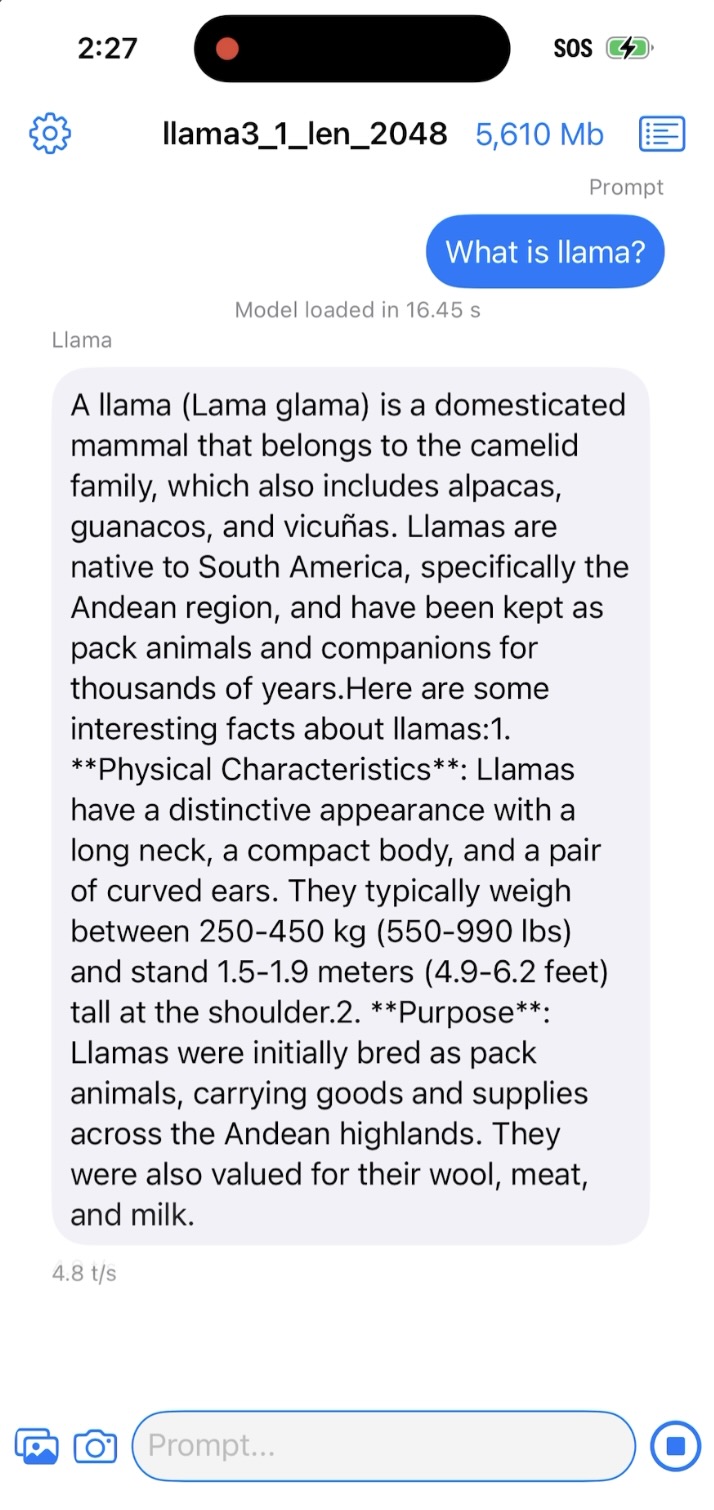

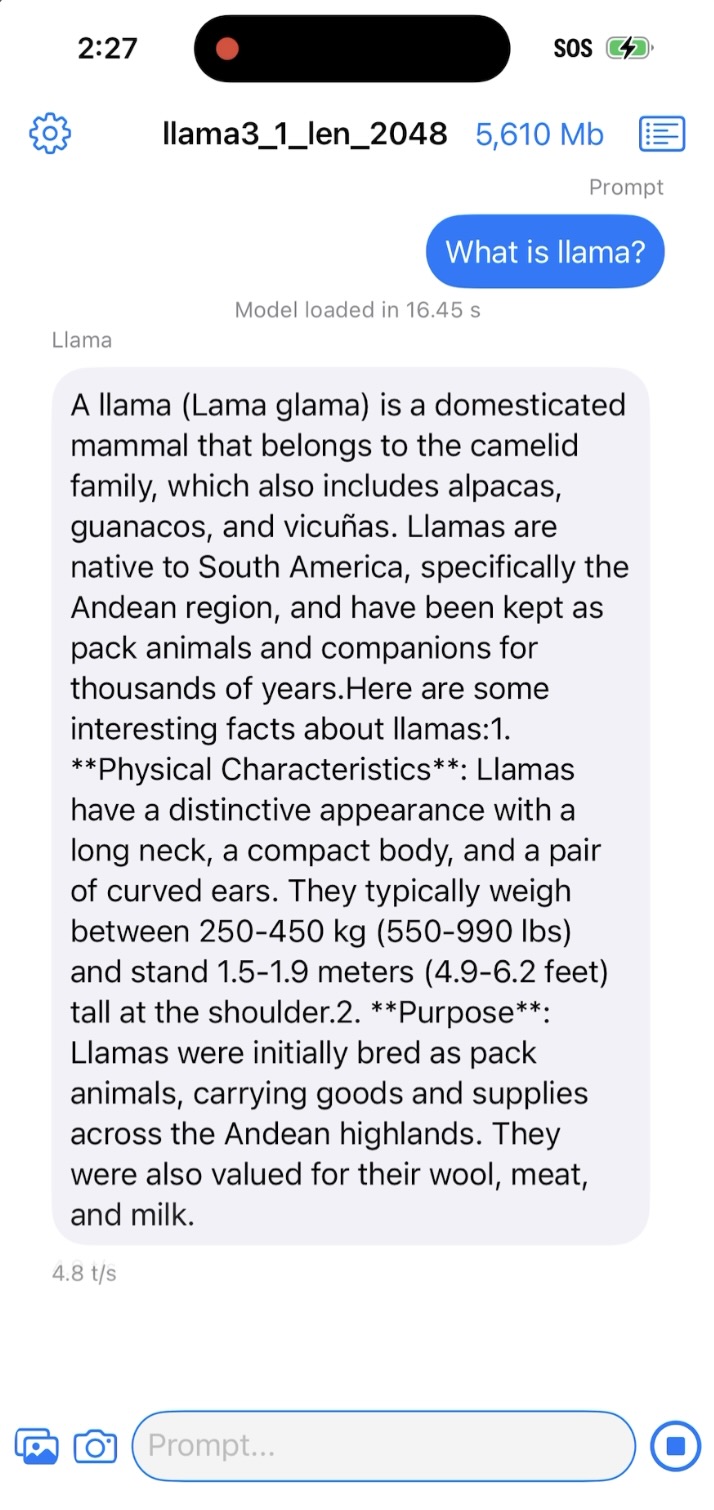

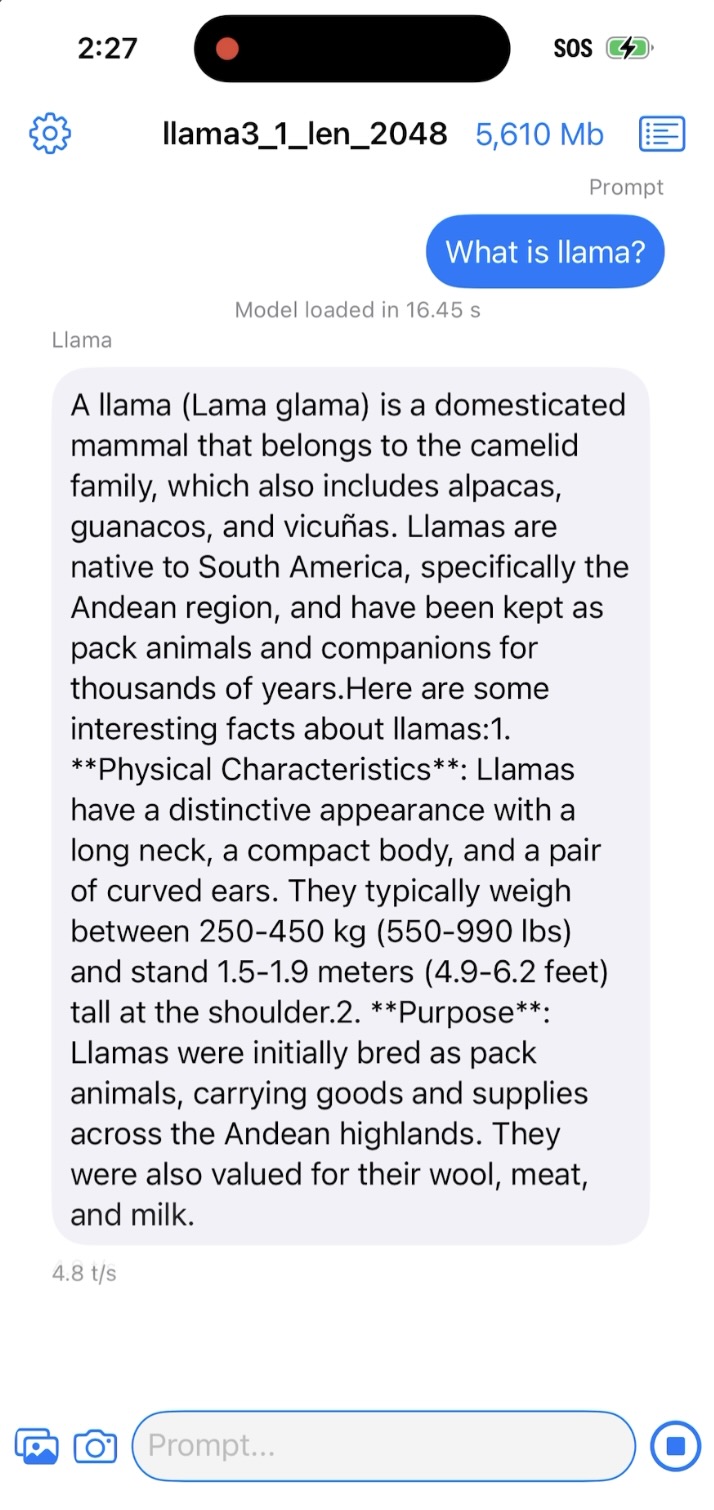

If the app successfully run on your device, you should see something like below:

- +

+

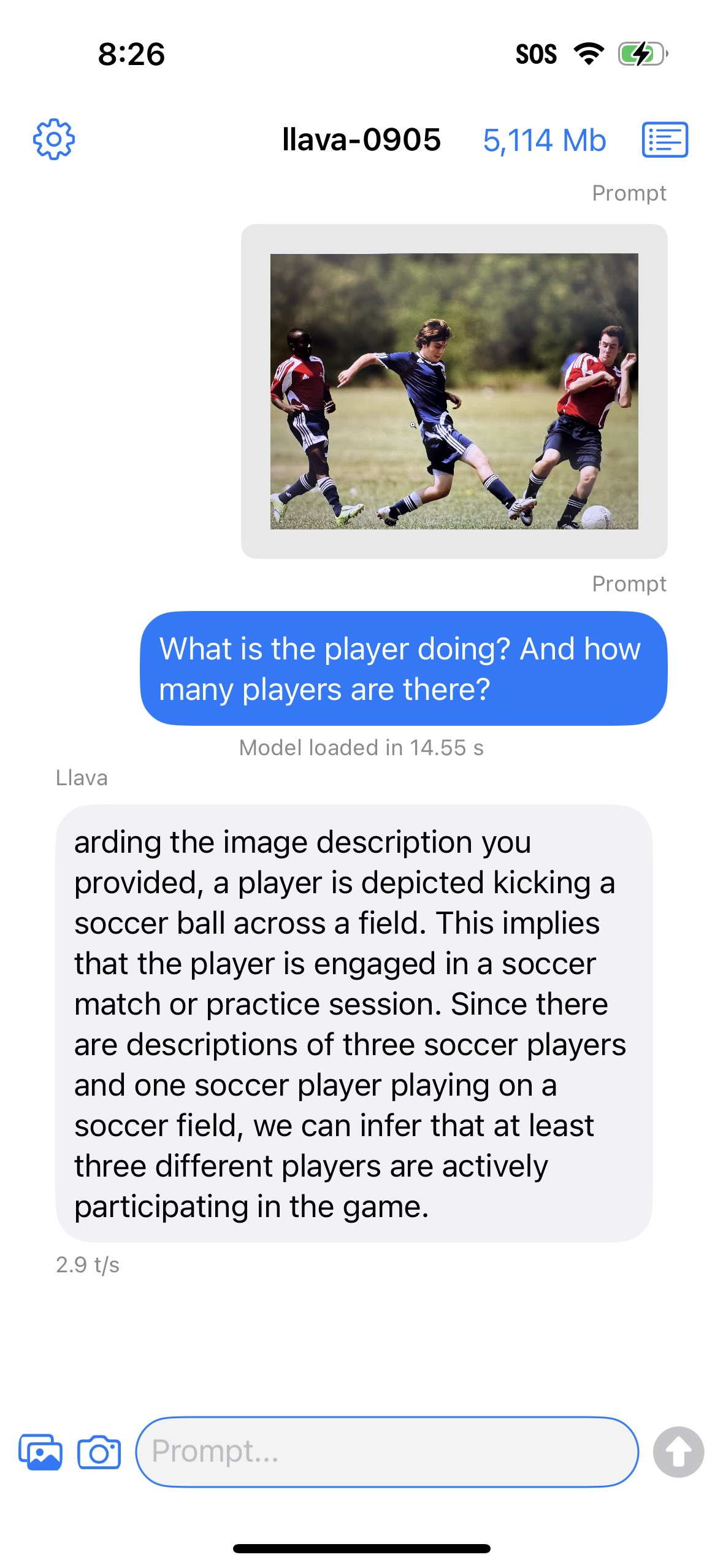

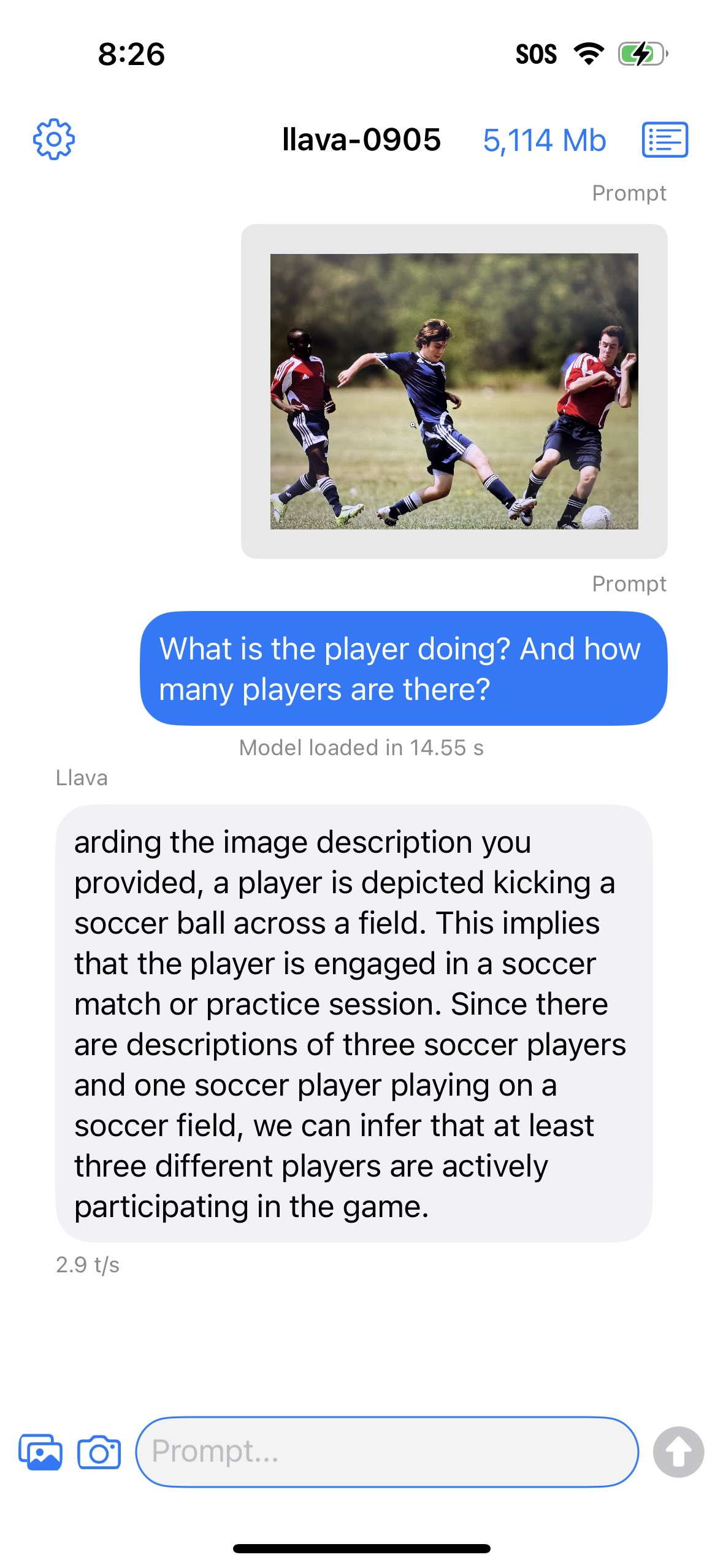

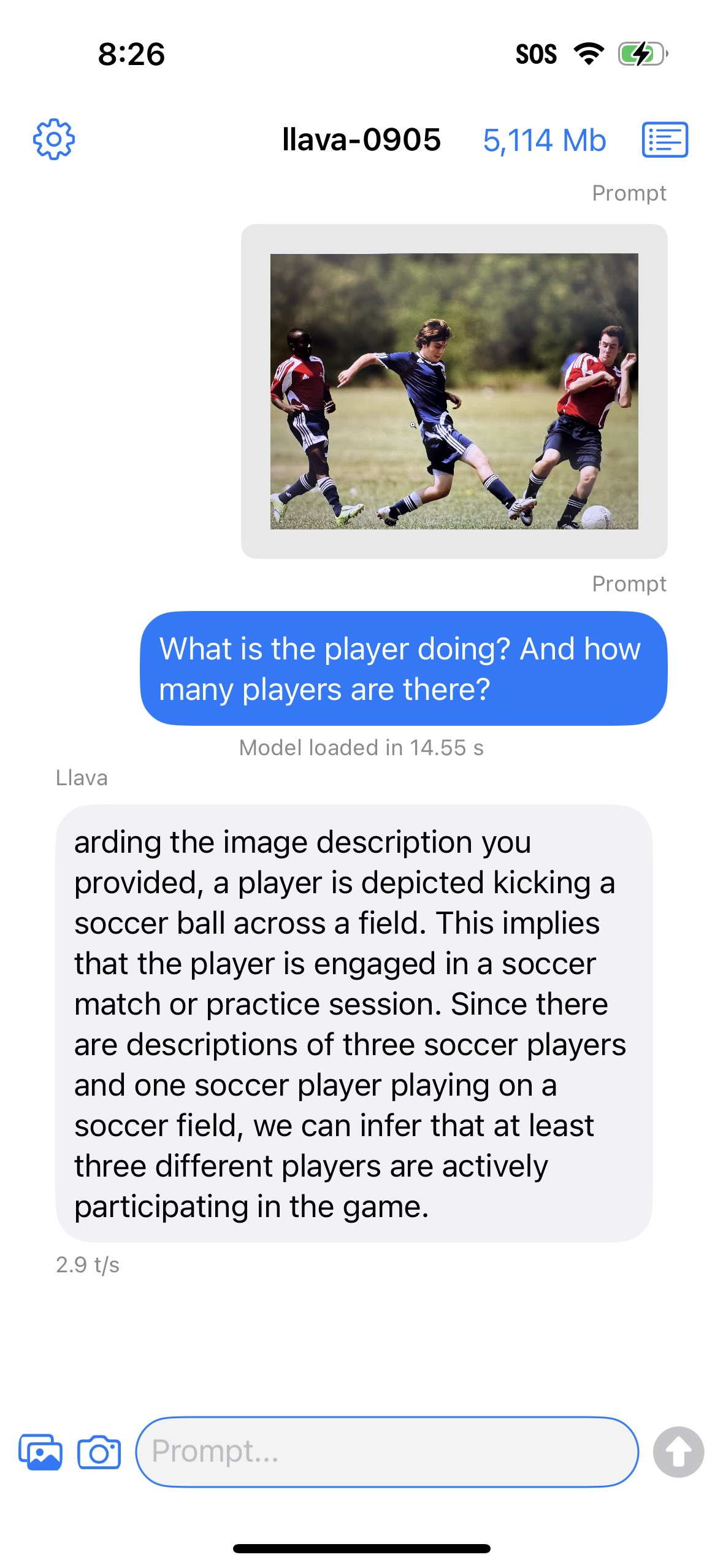

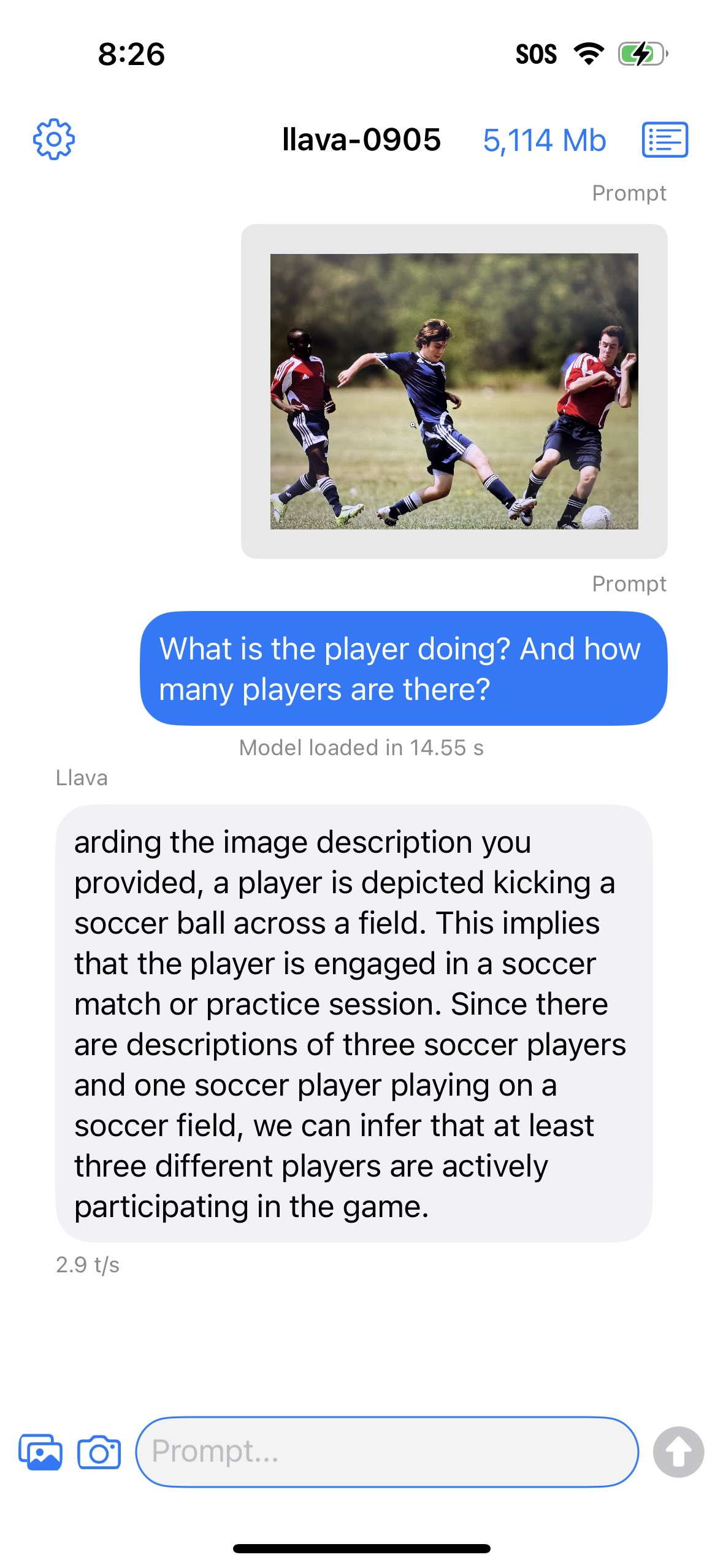

For Llava 1.5 models, you can select and image (via image/camera selector button) before typing prompt and send button.

- +

+

## Reporting Issues

diff --git a/examples/demo-apps/apple_ios/LLaMA/docs/delegates/mps_README.md b/examples/demo-apps/apple_ios/LLaMA/docs/delegates/mps_README.md

index 0c0fd9a5366..19b14ae4c30 100644

--- a/examples/demo-apps/apple_ios/LLaMA/docs/delegates/mps_README.md

+++ b/examples/demo-apps/apple_ios/LLaMA/docs/delegates/mps_README.md

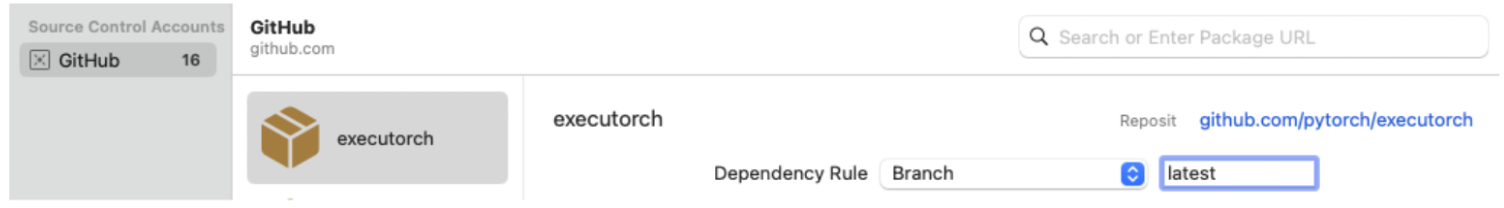

@@ -95,19 +95,19 @@ Note: To access logs, link against the Debug build of the ExecuTorch runtime, i.

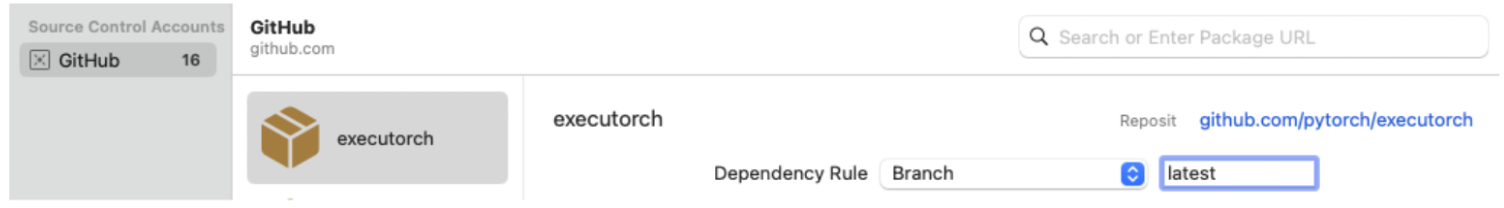

For more details integrating and Running ExecuTorch on Apple Platforms, checkout this [link](https://pytorch.org/executorch/main/apple-runtime.html).

- +

+

Then select which ExecuTorch framework should link against which target.

- +

+

Click “Run” to build the app and run in on your iPhone. If the app successfully run on your device, you should see something like below:

- +

+

## Reporting Issues

diff --git a/examples/demo-apps/apple_ios/LLaMA/docs/delegates/xnnpack_README.md b/examples/demo-apps/apple_ios/LLaMA/docs/delegates/xnnpack_README.md

index 76a5cf448a4..fbe709742dd 100644

--- a/examples/demo-apps/apple_ios/LLaMA/docs/delegates/xnnpack_README.md

+++ b/examples/demo-apps/apple_ios/LLaMA/docs/delegates/xnnpack_README.md

@@ -95,25 +95,25 @@ Note: To access logs, link against the Debug build of the ExecuTorch runtime, i.

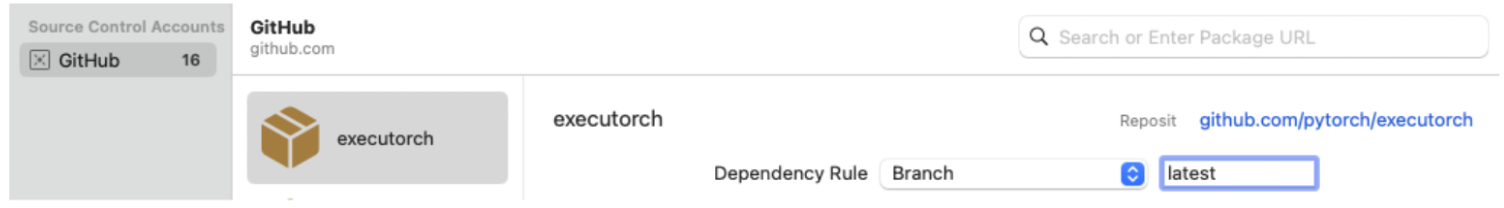

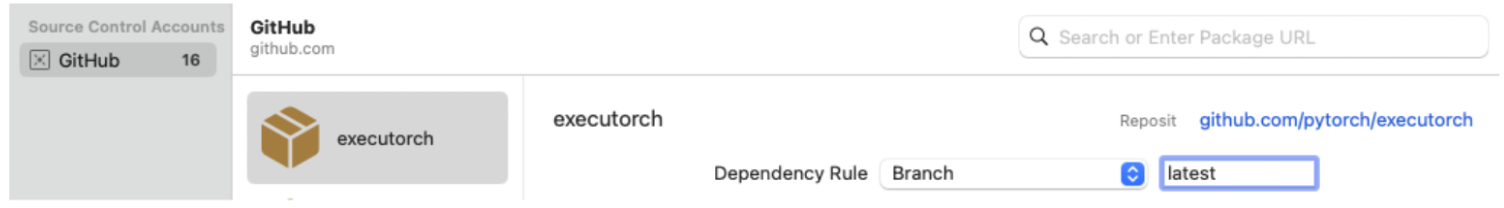

For more details integrating and Running ExecuTorch on Apple Platforms, checkout this [link](https://pytorch.org/executorch/main/apple-runtime.html).

- +

+

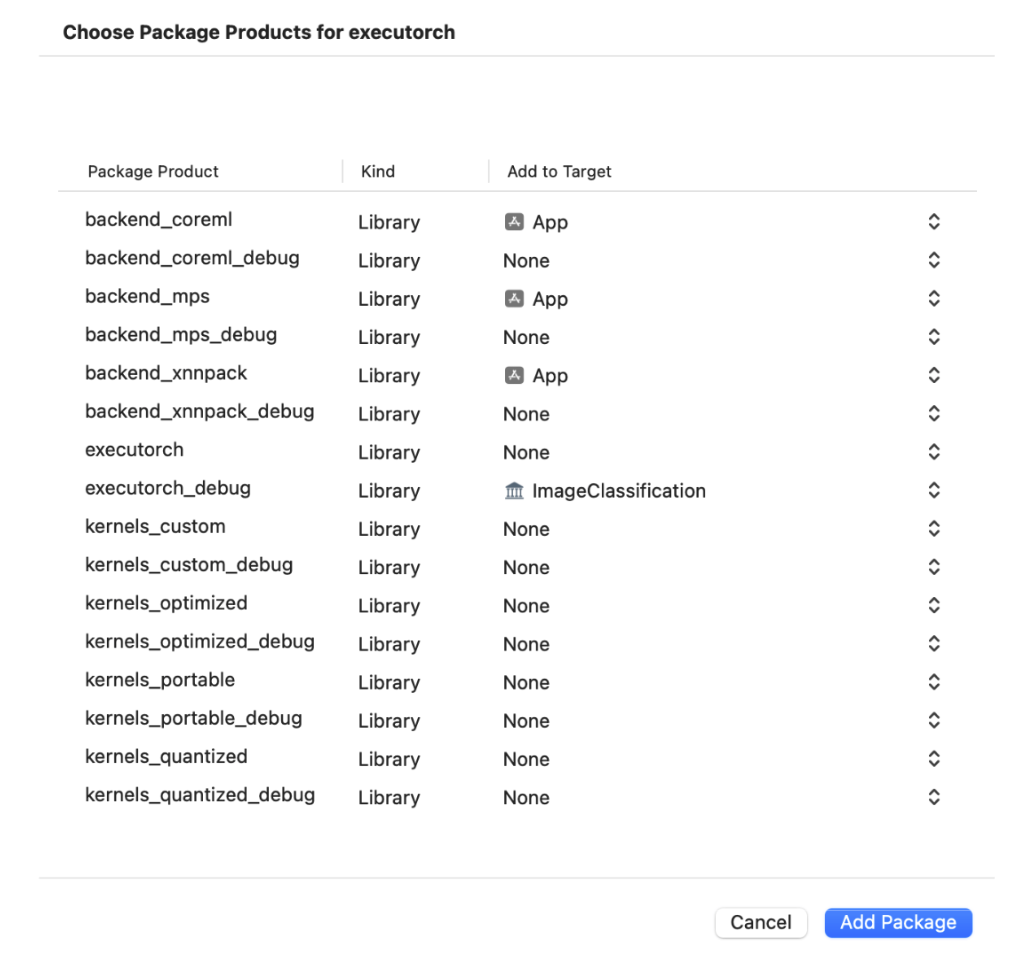

Then select which ExecuTorch framework should link against which target.

- +

+

Click “Run” to build the app and run in on your iPhone. If the app successfully run on your device, you should see something like below:

- +

+

For Llava 1.5 models, you can select and image (via image/camera selector button) before typing prompt and send button.

- +

+

## Reporting Issues
