diff --git a/README.md b/README.md

index ed649e8..f423abe 100644

--- a/README.md

+++ b/README.md

@@ -63,6 +63,7 @@ To get started with RAG, either from scratch or using a popular framework like L

| [/RAG/04_advanced_redisvl.ipynb](python-recipes/RAG/04_advanced_redisvl.ipynb) | Advanced RAG techniques |

| [/RAG/05_nvidia_ai_rag_redis.ipynb](python-recipes/RAG/05_nvidia_ai_rag_redis.ipynb) | RAG using Redis and Nvidia NIMs |

| [/RAG/06_ragas_evaluation.ipynb](python-recipes/RAG/06_ragas_evaluation.ipynb) | Utilize the RAGAS framework to evaluate RAG performance |

+| [/RAG/07_user_role_based_rag.ipynb](python-recipes/RAG/07_user_role_based_rag.ipynb) | Implement a simple RBAC policy with vector search using Redis |

### LLM Memory

LLMs are stateless. To maintain context within a conversation chat sessions must be stored and resent to the LLM. Redis manages the storage and retrieval of chat sessions to maintain context and conversational relevance.

diff --git a/assets/role-based-rag.png b/assets/role-based-rag.png

new file mode 100644

index 0000000..4c5d6a5

Binary files /dev/null and b/assets/role-based-rag.png differ

diff --git a/python-recipes/RAG/07_user_role_based_rag.ipynb b/python-recipes/RAG/07_user_role_based_rag.ipynb

new file mode 100644

index 0000000..fbf6139

--- /dev/null

+++ b/python-recipes/RAG/07_user_role_based_rag.ipynb

@@ -0,0 +1,1789 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "id": "XwR-PYCFu0Nd",

+ "metadata": {

+ "id": "XwR-PYCFu0Nd"

+ },

+ "source": [

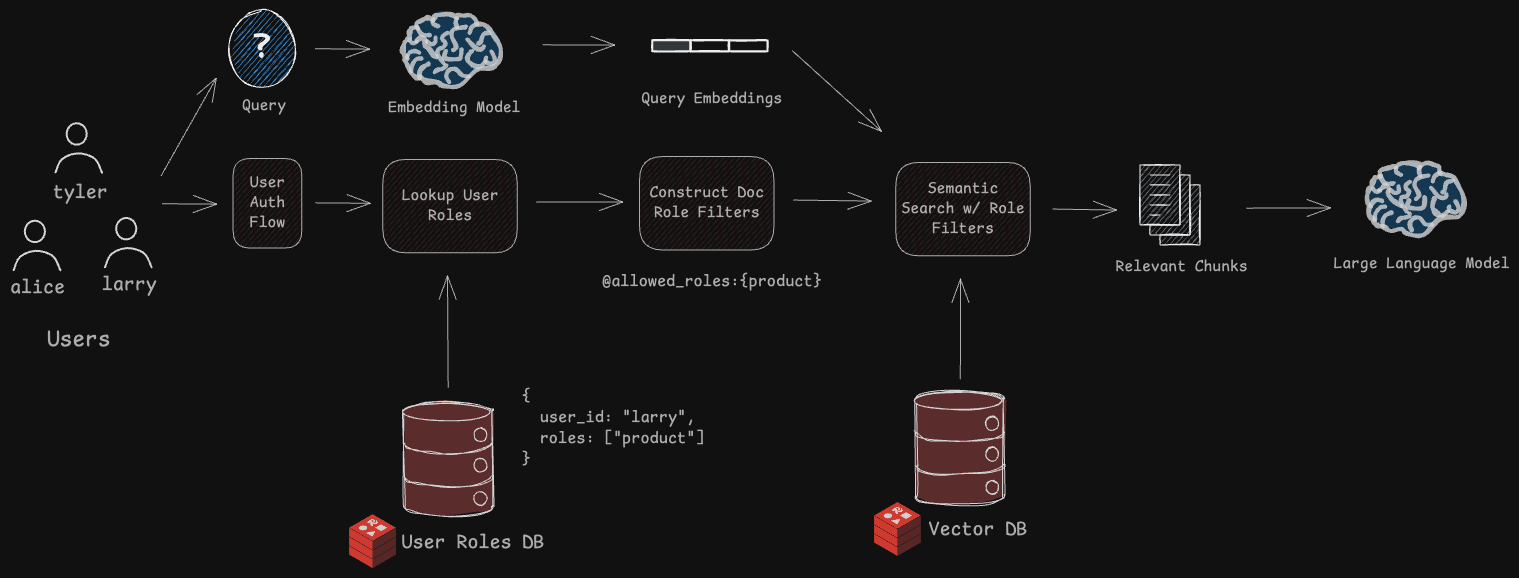

+ "# Building a Role-Based RAG Pipeline with Redis\n",

+ "\n",

+ "This notebook demonstrates a simplified setup for a **Role-Based Retrieval Augmented Generation (RAG)** pipeline, where:\n",

+ "\n",

+ "1. Each **User** has one or more **roles**.\n",

+ "2. Knowledge base **Documents** in Redis are tagged with the official roles that can access them (`allowed_roles`).\n",

+ "3. A unified **query flow** ensures a user only sees documents that match at least one of their roles.\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "58823e66",

+ "metadata": {

+ "id": "58823e66"

+ },

+ "source": [

+ "\n",

+ "## Let's Begin!\n",

+ " "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "id": "4e0aa177",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "4e0aa177",

+ "outputId": "0ba61596-b3e4-442f-cd9c-8b480f1c52d1"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "\u001b[?25l \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m0.0/99.3 kB\u001b[0m \u001b[31m?\u001b[0m eta \u001b[36m-:--:--\u001b[0m\r\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m99.3/99.3 kB\u001b[0m \u001b[31m7.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[?25h\u001b[?25l \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m0.0/2.5 MB\u001b[0m \u001b[31m?\u001b[0m eta \u001b[36m-:--:--\u001b[0m\r\u001b[2K \u001b[91m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[91m╸\u001b[0m \u001b[32m2.5/2.5 MB\u001b[0m \u001b[31m91.5 MB/s\u001b[0m eta \u001b[36m0:00:01\u001b[0m\r\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m2.5/2.5 MB\u001b[0m \u001b[31m55.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m298.0/298.0 kB\u001b[0m \u001b[31m25.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.0/1.0 MB\u001b[0m \u001b[31m60.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m412.2/412.2 kB\u001b[0m \u001b[31m34.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m261.5/261.5 kB\u001b[0m \u001b[31m19.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m46.0/46.0 kB\u001b[0m \u001b[31m4.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m86.8/86.8 kB\u001b[0m \u001b[31m8.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m50.8/50.8 kB\u001b[0m \u001b[31m4.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[?25h"

+ ]

+ }

+ ],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "%pip install -q 'redisvl>=0.3.8' openai langchain-community pypdf"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "fXsGCsLQu0Ne",

+ "metadata": {

+ "id": "fXsGCsLQu0Ne"

+ },

+ "source": [

+ "## 1. High-Level Data Flow & Setup\n",

+ "\n",

+ "1. **User Creation & Role Management**\n",

+ " - A user is stored at `user:{user_id}` in Redis with a JSON structure containing the user’s roles.\n",

+ " - We can create, update, or delete users as needed.\n",

+ " - **This serves as a simple lookup layer and should NOT replace your production-ready auth API flow.**\n",

+ "\n",

+ "2. **Document Storage**\n",

+ " - Document chunks are stored at `doc:{doc_id}:{chunk_id}` in Redis as JSON.\n",

+ " - Each document chunk includes fields such as `doc_id`, `chunk_id`, `content`, `allowed_roles`, and an `embedding` (for vector similarity).\n",

+ "\n",

+ "3. **Querying / Search**\n",

+ " - User roles are retrieved from Redis.\n",

+ " - We perform a vector similarity search (or any other type of retrieval) on the documents.\n",

+ " - We filter the results so that only documents whose `allowed_roles` intersect with the user’s roles are returned.\n",

+ "\n",

+ "4. **RAG Integration**\n",

+ " - The returned documents can be fed into a Large Language Model (LLM) to provide context and generate an answer.\n",

+ "\n",

+ "First, we’ll set up our Python environment and Redis connection.\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "73c33af6",

+ "metadata": {

+ "id": "73c33af6"

+ },

+ "source": [

+ "### Download Documents\n",

+ "Running remotely or in Colab? Run this cell to download the necessary datasets."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "48971c52",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "48971c52",

+ "outputId": "e17d146a-43be-41fb-b029-f330d79f1a65"

+ },

+ "outputs": [],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "!git clone https://github.com/redis-developer/redis-ai-resources.git temp_repo\n",

+ "!mkdir -p resources\n",

+ "!mv temp_repo/python-recipes/RAG/resources/aapl-10k-2023.pdf resources/\n",

+ "!mv temp_repo/python-recipes/RAG/resources/2022-chevrolet-colorado-ebrochure.pdf resources/\n",

+ "!rm -rf temp_repo"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "993371a2",

+ "metadata": {

+ "id": "993371a2"

+ },

+ "source": [

+ "### Run Redis Stack\n",

+ "\n",

+ "This tutorial requires a running instance of Redis. If you don't already have one, use one of the options below.\n",

+ "\n",

+ "#### For Colab\n",

+ "Use the shell script below to download, extract, and install [Redis Stack](https://redis.io/docs/getting-started/install-stack/) directly from the Redis package archive."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 4,

+ "id": "8edc5862",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "8edc5862",

+ "outputId": "df2643ed-2422-4ee5-bd42-bec17b405eec"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb jammy main\n",

+ "Starting redis-stack-server, database path /var/lib/redis-stack\n"

+ ]

+ }

+ ],

+ "source": [

+ "%%sh\n",

+ "# NBVAL_SKIP\n",

+ "curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg\n",

+ "echo \"deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main\" | sudo tee /etc/apt/sources.list.d/redis.list\n",

+ "sudo apt-get update > /dev/null 2>&1\n",

+ "sudo apt-get install redis-stack-server > /dev/null 2>&1\n",

+ "redis-stack-server --daemonize yes"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "bc571319",

+ "metadata": {

+ "id": "bc571319"

+ },

+ "source": [

+ "#### For Alternative Environments\n",

+ "There are many ways to get the necessary redis-stack instance running:\n",

+ "1. On cloud, deploy a [FREE instance of Redis in the cloud](https://redis.com/try-free/). Or, if you have your\n",

+ "own version of Redis Enterprise running, that works too!\n",

+ "2. Per OS, [see the docs](https://redis.io/docs/latest/operate/oss_and_stack/install/install-stack/)\n",

+ "3. With Docker: `docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest`"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 5,

+ "id": "qU49fNVnu0Nf",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "qU49fNVnu0Nf",

+ "outputId": "4d2f34c3-6179-4f1d-eff7-5e8e9d8fd58b"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Successfully connected to Redis\n"

+ ]

+ }

+ ],

+ "source": [

+ "import os\n",

+ "\n",

+ "from redis import Redis\n",

+ "\n",

+ "# Replace values below with your own if using a Redis Cloud instance\n",

+ "REDIS_HOST = os.getenv(\"REDIS_HOST\", \"localhost\") # ex: \"redis-18374.c253.us-central1-1.gce.cloud.redislabs.com\"\n",

+ "REDIS_PORT = os.getenv(\"REDIS_PORT\", \"6379\") # ex: 18374\n",

+ "REDIS_PASSWORD = os.getenv(\"REDIS_PASSWORD\", \"\") # ex: \"1TNxTEdYRDgIDKM2gDfasupCADXXXX\"\n",

+ "\n",

+ "# If SSL is enabled on the endpoint, use rediss:// as the URL prefix\n",

+ "REDIS_URL = f\"redis://:{REDIS_PASSWORD}@{REDIS_HOST}:{REDIS_PORT}\"\n",

+ "\n",

+ "# Connect to Redis (adjust host/port if needed)\n",

+ "redis_client = Redis.from_url(REDIS_URL)\n",

+ "redis_client.ping()\n",

+ "\n",

+ "print(\"Successfully connected to Redis\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "aqzMteQsu0Nf",

+ "metadata": {

+ "id": "aqzMteQsu0Nf"

+ },

+ "source": [

+ "## 2. User Management\n",

+ "\n",

+ "Below is a simple `User` class that stores a user in Redis as JSON. We:\n",

+ "\n",

+ "- Use a Redis key of the form `user:{user_id}`.\n",

+ "- Store fields like `user_id`, `roles`, etc.\n",

+ "- Provide CRUD methods (Create, Read, Update, Delete) for user objects.\n",

+ "\n",

+ "**Data Structure Example**\n",

+ "```json\n",

+ "{\n",

+ " \"user_id\": \"alice\",\n",

+ " \"roles\": [\"finance\", \"manager\"]\n",

+ "}\n",

+ "```\n",

+ "\n",

+ "We'll also include some basic checks to ensure we don't add duplicate roles, handle empty role lists, etc.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 6,

+ "id": "38pdjXJvu0Nf",

+ "metadata": {

+ "id": "38pdjXJvu0Nf"

+ },

+ "outputs": [],

+ "source": [

+ "from typing import List, Optional\n",

+ "from enum import Enum\n",

+ "\n",

+ "\n",

+ "class UserRoles(str, Enum):\n",

+ " FINANCE = \"finance\"\n",

+ " MANAGER = \"manager\"\n",

+ " EXECUTIVE = \"executive\"\n",

+ " HR = \"hr\"\n",

+ " SALES = \"sales\"\n",

+ " PRODUCT = \"product\"\n",

+ "\n",

+ "\n",

+ "class User:\n",

+ " \"\"\"\n",

+ " User class for storing user data in Redis.\n",

+ "\n",

+ " Each user has:\n",

+ " - user_id (string)\n",

+ " - roles (list of UserRoles)\n",

+ "\n",

+ " Key in Redis: user:{user_id}\n",

+ " \"\"\"\n",

+ " def __init__(\n",

+ " self,\n",

+ " redis_client: Redis,\n",

+ " user_id: str,\n",

+ " roles: Optional[List[UserRoles]] = None\n",

+ " ):\n",

+ " self.redis_client = redis_client\n",

+ " self.user_id = user_id\n",

+ " self.roles = roles or []\n",

+ "\n",

+ " @property\n",

+ " def key(self) -> str:\n",

+ " return f\"user:{self.user_id}\"\n",

+ "\n",

+ " def exists(self) -> bool:\n",

+ " \"\"\"Check if the user key exists in Redis.\"\"\"\n",

+ " return self.redis_client.exists(self.key) == 1\n",

+ "\n",

+ " def create(self):\n",

+ " \"\"\"\n",

+ " Create a new user in Redis. Fails if user already exists.\n",

+ " \"\"\"\n",

+ " if self.exists():\n",

+ " raise ValueError(f\"User {self.user_id} already exists.\")\n",

+ "\n",

+ " self.save()\n",

+ "\n",

+ " def save(self):\n",

+ " \"\"\"\n",

+ " Save (create or update) the user data in Redis.\n",

+ " If user does not exist, it will be created.\n",

+ " \"\"\"\n",

+ " data = {\n",

+ " \"user_id\": self.user_id,\n",

+ " \"roles\": [UserRoles(role).value for role in set(self.roles)] # ensure roles are unique and convert to strings\n",

+ " }\n",

+ " self.redis_client.json().set(self.key, \".\", data)\n",

+ "\n",

+ " @classmethod\n",

+ " def get(cls, redis_client: Redis, user_id):\n",

+ " \"\"\"\n",

+ " Retrieve a user from Redis.\n",

+ " \"\"\"\n",

+ " key = f\"user:{user_id}\"\n",

+ " data = redis_client.json().get(key)\n",

+ " if not data:\n",

+ " return None\n",

+ " # Convert string roles back to UserRoles enum\n",

+ " roles = [UserRoles(role) for role in data.get(\"roles\", [])]\n",

+ " return cls(redis_client, data[\"user_id\"], roles)\n",

+ "\n",

+ " def update_roles(self, roles: List[UserRoles]):\n",

+ " \"\"\"\n",

+ " Overwrite the user's roles in Redis.\n",

+ " \"\"\"\n",

+ " self.roles = roles\n",

+ " self.save()\n",

+ "\n",

+ " def add_role(self, role: UserRoles):\n",

+ " \"\"\"Add a single role to the user.\"\"\"\n",

+ " if role not in self.roles:\n",

+ " self.roles.append(role)\n",

+ " self.save()\n",

+ "\n",

+ " def remove_role(self, role: UserRoles):\n",

+ " \"\"\"Remove a single role from the user.\"\"\"\n",

+ " if role in self.roles:\n",

+ " self.roles.remove(role)\n",

+ " self.save()\n",

+ "\n",

+ " def delete(self):\n",

+ " \"\"\"Delete this user from Redis.\"\"\"\n",

+ " self.redis_client.delete(self.key)\n",

+ "\n",

+ " def __repr__(self):\n",

+ " return f\"\"\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "FNQxAaoCxPN7",

+ "metadata": {

+ "id": "FNQxAaoCxPN7"

+ },

+ "source": [

+ "### Example usage of User class"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 7,

+ "id": "_WcOlgVyu0Ng",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "_WcOlgVyu0Ng",

+ "outputId": "0776fa25-513b-445b-d46d-35d9333b3a75"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "User 'alice' created.\n",

+ "Retrieved: \n",

+ "After adding 'executive': \n",

+ "After removing 'manager': \n"

+ ]

+ }

+ ],

+ "source": [

+ "# Example usage of the User class\n",

+ "\n",

+ "# Let's create a new user\n",

+ "alice = User(redis_client, \"alice\", roles=[\"finance\", \"manager\"])\n",

+ "\n",

+ "# We'll save the user in Redis\n",

+ "try:\n",

+ " alice.create()\n",

+ " print(\"User 'alice' created.\")\n",

+ "except ValueError as e:\n",

+ " print(e)\n",

+ "\n",

+ "# Retrieve the user\n",

+ "alice_obj = User.get(redis_client, \"alice\")\n",

+ "print(\"Retrieved:\", alice_obj)\n",

+ "\n",

+ "# Add another role\n",

+ "alice_obj.add_role(\"executive\")\n",

+ "print(\"After adding 'executive':\", alice_obj)\n",

+ "\n",

+ "# Remove a role\n",

+ "alice_obj.remove_role(\"manager\")\n",

+ "print(\"After removing 'manager':\", alice_obj)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 8,

+ "id": "c911e892",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "c911e892",

+ "outputId": "df4666ff-97ce-4e75-d70c-75fe5d9e6703"

+ },

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ ""

+ ]

+ },

+ "execution_count": 8,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "# Take a peek at the user object itself\n",

+ "alice"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 9,

+ "id": "P3j6yu8l87j3",

+ "metadata": {

+ "id": "P3j6yu8l87j3"

+ },

+ "outputs": [],

+ "source": [

+ "# Create one more user\n",

+ "larry = User(redis_client, \"larry\", roles=[\"product\"])\n",

+ "larry.create()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "Y7B4l7XVx5md",

+ "metadata": {

+ "id": "Y7B4l7XVx5md"

+ },

+ "source": [

+ ">💡 Using a cloud DB? Take a peek at your instance using [RedisInsight](https://redis.io/insight) to see what user data is in place."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "aCXYFXu0u0Ng",

+ "metadata": {

+ "id": "aCXYFXu0u0Ng"

+ },

+ "source": [

+ "## 3. Document Management (Using LangChain)\n",

+ "\n",

+ "Here, we'll use **LangChain** for document loading and chunking, and RedisVL's `OpenAITextVectorizer` for embedding. Then, we’ll **store documents** in Redis as JSON. Each document chunk will look like:\n",

+ "\n",

+ "```json\n",

+ "{\n",

+ " \"doc_id\": \"123\",\n",

+ " \"chunk_id\": \"123\",\n",

+ " \"path\": \"resources/doc.pdf\",\n",

+ " \"title\": \"Quarterly Finance Report\",\n",

+ " \"content\": \"Some text...\",\n",

+ " \"allowed_roles\": [\"finance\", \"executive\"],\n",

+ " \"embedding\": [0.12, 0.98, ...] \n",

+ "}\n",

+ "```"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "d3cJ5DSP5vXt",

+ "metadata": {

+ "id": "d3cJ5DSP5vXt"

+ },

+ "source": [

+ "### Building a document knowledge base\n",

+ "We will create a `KnowledgeBase` class to encapsulate document processing logic and search. The class will handle:\n",

+ "1. Document ingest and chunking\n",

+ "2. Role tagging with a simple string-based rule on the filename (customize for your use case)\n",

+ "3. Retrieval over the entire document corpus adhering to provided user roles\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 23,

+ "id": "67d38524",

+ "metadata": {

+ "id": "67d38524"

+ },

+ "outputs": [],

+ "source": [

+ "from typing import List, Optional, Dict, Any, Set\n",

+ "from pathlib import Path\n",

+ "import uuid\n",

+ "\n",

+ "from langchain_community.document_loaders import PyPDFLoader\n",

+ "from langchain.text_splitter import RecursiveCharacterTextSplitter\n",

+ "from redisvl.index import SearchIndex\n",

+ "from redisvl.query import VectorQuery\n",

+ "from redisvl.query.filter import FilterExpression, Tag\n",

+ "from redisvl.utils.vectorize import OpenAITextVectorizer\n",

+ "\n",

+ "\n",

+ "class KnowledgeBase:\n",

+ " \"\"\"Manages document processing, embedding, and storage in Redis.\"\"\"\n",

+ "\n",

+ " def __init__(\n",

+ " self,\n",

+ " redis_client,\n",

+ " embeddings_model: str = \"text-embedding-3-small\",\n",

+ " chunk_size: int = 2500,\n",

+ " chunk_overlap: int = 100\n",

+ " ):\n",

+ " self.redis_client = redis_client\n",

+ " self.embeddings = OpenAITextVectorizer(model=embeddings_model)\n",

+ " self.text_splitter = RecursiveCharacterTextSplitter(\n",

+ " chunk_size=chunk_size,\n",

+ " chunk_overlap=chunk_overlap,\n",

+ " )\n",

+ "\n",

+ " # Initialize document search index\n",

+ " self.index = self._create_search_index()\n",

+ "\n",

+ " def _create_search_index(self) -> SearchIndex:\n",

+ " \"\"\"Create the Redis search index for documents.\"\"\"\n",

+ " schema = {\n",

+ " \"index\": {\n",

+ " \"name\": \"docs\",\n",

+ " \"prefix\": \"doc\",\n",

+ " \"storage_type\": \"json\"\n",

+ " },\n",

+ " \"fields\": [\n",

+ " {\n",

+ " \"name\": \"doc_id\",\n",

+ " \"type\": \"tag\",\n",

+ " },\n",

+ " {\n",

+ " \"name\": \"chunk_id\",\n",

+ " \"type\": \"tag\",\n",

+ " },\n",

+ " {\n",

+ " \"name\": \"allowed_roles\",\n",

+ " \"path\": \"$.allowed_roles[*]\",\n",

+ " \"type\": \"tag\",\n",

+ " },\n",

+ " {\n",

+ " \"name\": \"content\",\n",

+ " \"type\": \"text\",\n",

+ " },\n",

+ " {\n",

+ " \"name\": \"embedding\",\n",

+ " \"type\": \"vector\",\n",

+ " \"attrs\": {\n",

+ " \"dims\": self.embeddings.dims,\n",

+ " \"distance_metric\": \"cosine\",\n",

+ " \"algorithm\": \"flat\",\n",

+ " \"datatype\": \"float32\"\n",

+ " }\n",

+ " }\n",

+ " ]\n",

+ " }\n",

+ " index = SearchIndex.from_dict(schema, redis_client=self.redis_client)\n",

+ " index.create()\n",

+ " return index\n",

+ "\n",

+ " def ingest(self, doc_path: str, allowed_roles: Optional[List[str]] = None) -> str:\n",

+ " \"\"\"\n",

+ " Load a document, chunk it, create embeddings, and store in Redis.\n",

+ " Returns the document ID.\n",

+ " \"\"\"\n",

+ " # Generate document ID\n",

+ " doc_id = str(uuid.uuid4())\n",

+ " path = Path(doc_path)\n",

+ "\n",

+ " if not path.exists():\n",

+ " raise FileNotFoundError(f\"Document not found: {doc_path}\")\n",

+ "\n",

+ " # Load and chunk document\n",

+ " loader = PyPDFLoader(str(path))\n",

+ " pages = loader.load()\n",

+ " chunks = self.text_splitter.split_documents(pages)\n",

+ " print(f\"Extracted {len(chunks)} for doc {doc_id} from file {str(path)}\", flush=True)\n",

+ "\n",

+ " # If roles not provided, determine from filename\n",

+ " if allowed_roles is None:\n",

+ " allowed_roles = self._determine_roles(path)\n",

+ "\n",

+ " # Prepare chunks for Redis\n",

+ " data, keys = [], []\n",

+ " for i, chunk in enumerate(chunks):\n",

+ " # Create embedding with OpenAI\n",

+ " embedding = self.embeddings.embed(chunk.page_content)\n",

+ "\n",

+ " # Prepare chunk payload\n",

+ " chunk_id = f\"chunk_{i}\"\n",

+ " key = f\"doc:{doc_id}:{chunk_id}\"\n",

+ " data.append({\n",

+ " \"doc_id\": doc_id,\n",

+ " \"chunk_id\": chunk_id,\n",

+ " \"path\": str(path),\n",

+ " \"content\": chunk.page_content,\n",

+ " \"allowed_roles\": list(allowed_roles),\n",

+ " \"embedding\": embedding,\n",

+ " })\n",

+ " keys.append(key)\n",

+ "\n",

+ " # Store in Redis\n",

+ " _ = self.index.load(data=data, keys=keys)\n",

+ " print(f\"Loaded {len(chunks)} chunks for document {doc_id}\")\n",

+ " return doc_id\n",

+ "\n",

+ " def _determine_roles(self, file_path: Path) -> Set[str]:\n",

+ " \"\"\"Determine allowed roles based on file path and name patterns.\"\"\"\n",

+ " # Customize based on use case and business logic\n",

+ " ROLE_PATTERNS = {\n",

+ " ('10k', 'financial', 'earnings', 'revenue'):\n",

+ " {'finance', 'executive'},\n",

+ " ('brochure', 'spec', 'product', 'manual'):\n",

+ " {'product', 'sales'},\n",

+ " ('hr', 'handbook', 'policy', 'employee'):\n",

+ " {'hr', 'manager'},\n",

+ " ('sales', 'pricing', 'customer'):\n",

+ " {'sales', 'manager'}\n",

+ " }\n",

+ "\n",

+ " filename = file_path.name.lower()\n",

+ " roles = {\n",

+ " role for terms, pattern_roles in ROLE_PATTERNS.items()\n",

+ " if any(term in filename for term in terms)\n",

+ " for role in pattern_roles\n",

+ " }\n",

+ " return roles or {'executive'}\n",

+ "\n",

+ " @staticmethod\n",

+ " def role_filter(user_roles: List[str]) -> FilterExpression:\n",

+ " \"\"\"Generate a Redis filter based on provided user roles.\"\"\"\n",

+ " return Tag(\"allowed_roles\") == user_roles\n",

+ "\n",

+ " def search(self, query: str, user_roles: List[str], top_k: int = 5) -> List[Dict[str, Any]]:\n",

+ " \"\"\"\n",

+ " Search for documents matching the query and user roles.\n",

+ " Returns list of matching documents.\n",

+ " \"\"\"\n",

+ " # Create query vector\n",

+ " query_vector = self.embeddings.embed(query)\n",

+ "\n",

+ " # Build role filter\n",

+ " roles_filter = self.role_filter(user_roles)\n",

+ "\n",

+ " # Execute search\n",

+ " return self.index.query(\n",

+ " VectorQuery(\n",

+ " vector=query_vector,\n",

+ " vector_field_name=\"embedding\",\n",

+ " filter_expression=roles_filter,\n",

+ " return_fields=[\"doc_id\", \"chunk_id\", \"allowed_roles\", \"content\"],\n",

+ " num_results=top_k,\n",

+ " dialect=4\n",

+ " )\n",

+ " )\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "YsBuAa_q9QU_",

+ "metadata": {

+ "id": "YsBuAa_q9QU_"

+ },

+ "source": [

+ "Load a document into the knowledge base."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 12,

+ "id": "s1LDdWhKu0Nh",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "s1LDdWhKu0Nh",

+ "outputId": "66e1105e-78ba-425a-8156-c810c7c9054a"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "21:09:47 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "Extracted 34 for doc f2c7171a-16cc-4aad-a777-ed7202bd7212 from file resources/2022-chevy-colorado-ebrochure.pdf\n",

+ "21:09:49 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:49 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:50 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:50 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:51 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:51 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:52 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:52 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:54 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:54 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:55 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:55 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:55 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:56 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:56 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:56 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:57 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:57 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:57 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:58 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:01 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:02 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:02 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:05 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:05 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:05 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:06 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:06 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:06 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:07 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "Loaded 34 chunks for document f2c7171a-16cc-4aad-a777-ed7202bd7212\n",

+ "Loaded all chunks for f2c7171a-16cc-4aad-a777-ed7202bd7212\n"

+ ]

+ }

+ ],

+ "source": [

+ "kb = KnowledgeBase(redis_client)\n",

+ "\n",

+ "doc_id = kb.ingest(\"resources/2022-chevrolet-colorado-ebrochure.pdf\")\n",

+ "print(f\"Loaded all chunks for {doc_id}\", flush=True)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "-Ekqkf1fu0Nh",

+ "metadata": {

+ "id": "-Ekqkf1fu0Nh"

+ },

+ "source": [

+ "## 4. User Query Flow\n",

+ "\n",

+ "Now that our User DB and Vector DB are loaded in Redis, each query will perform:\n",

+ "\n",

+ "1. **Vector Similarity Search** on `embedding`.\n",

+ "2. A metadata **Filter** based on `allowed_roles`.\n",

+ "3. Return top-k matching document chunks.\n",

+ "\n",

+ "This is implemented below.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 13,

+ "id": "WpvrXmluu0Nh",

+ "metadata": {

+ "id": "WpvrXmluu0Nh"

+ },

+ "outputs": [],

+ "source": [

+ "def user_query(user_id: str, query: str):\n",

+ " \"\"\"\n",

+ " Role-aware search over the knowledge base.\n",

+ " 1. Load the user's roles.\n",

+ " 2. Perform a vector search for docs.\n",

+ " 3. Filter docs that match at least one of the user's roles.\n",

+ " 4. Return top-K results.\n",

+ " \"\"\"\n",

+ " # 1. Load & validate user roles\n",

+ " user_obj = User.get(redis_client, user_id)\n",

+ " if not user_obj:\n",

+ " raise ValueError(f\"User {user_id} not found.\")\n",

+ "\n",

+ " roles = {role.value for role in user_obj.roles}\n",

+ " if not roles:\n",

+ " raise ValueError(f\"User {user_id} does not have any roles.\")\n",

+ "\n",

+ " # 2. Retrieve document chunks\n",

+ " results = kb.search(query, roles)\n",

+ "\n",

+ " if not results:\n",

+ " raise ValueError(f\"No available documents found for {user_id}\")\n",

+ "\n",

+ " return results"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "qQS1BLwGBVDA",

+ "metadata": {

+ "id": "qQS1BLwGBVDA"

+ },

+ "source": [

+ "### Search examples\n",

+ "\n",

+ "Search with a non-existent user."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 14,

+ "id": "wYishsNy6lty",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 287

+ },

+ "id": "wYishsNy6lty",

+ "outputId": "dfa5a8b5-d926-4e94-e8a1-ecceb51ccff5"

+ },

+ "outputs": [

+ {

+ "ename": "ValueError",

+ "evalue": "User tyler not found.",

+ "output_type": "error",

+ "traceback": [

+ "\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

+ "\u001b[0;31mValueError\u001b[0m Traceback (most recent call last)",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36m"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "id": "4e0aa177",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "4e0aa177",

+ "outputId": "0ba61596-b3e4-442f-cd9c-8b480f1c52d1"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "\u001b[?25l \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m0.0/99.3 kB\u001b[0m \u001b[31m?\u001b[0m eta \u001b[36m-:--:--\u001b[0m\r\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m99.3/99.3 kB\u001b[0m \u001b[31m7.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[?25h\u001b[?25l \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m0.0/2.5 MB\u001b[0m \u001b[31m?\u001b[0m eta \u001b[36m-:--:--\u001b[0m\r\u001b[2K \u001b[91m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[91m╸\u001b[0m \u001b[32m2.5/2.5 MB\u001b[0m \u001b[31m91.5 MB/s\u001b[0m eta \u001b[36m0:00:01\u001b[0m\r\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m2.5/2.5 MB\u001b[0m \u001b[31m55.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m298.0/298.0 kB\u001b[0m \u001b[31m25.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.0/1.0 MB\u001b[0m \u001b[31m60.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m412.2/412.2 kB\u001b[0m \u001b[31m34.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m261.5/261.5 kB\u001b[0m \u001b[31m19.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m46.0/46.0 kB\u001b[0m \u001b[31m4.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m86.8/86.8 kB\u001b[0m \u001b[31m8.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m50.8/50.8 kB\u001b[0m \u001b[31m4.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

+ "\u001b[?25h"

+ ]

+ }

+ ],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "%pip install -q 'redisvl>=0.3.8' openai langchain-community pypdf"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "fXsGCsLQu0Ne",

+ "metadata": {

+ "id": "fXsGCsLQu0Ne"

+ },

+ "source": [

+ "## 1. High-Level Data Flow & Setup\n",

+ "\n",

+ "1. **User Creation & Role Management**\n",

+ " - A user is stored at `user:{user_id}` in Redis with a JSON structure containing the user’s roles.\n",

+ " - We can create, update, or delete users as needed.\n",

+ " - **This serves as a simple lookup layer and should NOT replace your production-ready auth API flow.**\n",

+ "\n",

+ "2. **Document Storage**\n",

+ " - Document chunks are stored at `doc:{doc_id}:{chunk_id}` in Redis as JSON.\n",

+ " - Each document chunk includes fields such as `doc_id`, `chunk_id`, `content`, `allowed_roles`, and an `embedding` (for vector similarity).\n",

+ "\n",

+ "3. **Querying / Search**\n",

+ " - User roles are retrieved from Redis.\n",

+ " - We perform a vector similarity search (or any other type of retrieval) on the documents.\n",

+ " - We filter the results so that only documents whose `allowed_roles` intersect with the user’s roles are returned.\n",

+ "\n",

+ "4. **RAG Integration**\n",

+ " - The returned documents can be fed into a Large Language Model (LLM) to provide context and generate an answer.\n",

+ "\n",

+ "First, we’ll set up our Python environment and Redis connection.\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "73c33af6",

+ "metadata": {

+ "id": "73c33af6"

+ },

+ "source": [

+ "### Download Documents\n",

+ "Running remotely or in Colab? Run this cell to download the necessary datasets."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "48971c52",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "48971c52",

+ "outputId": "e17d146a-43be-41fb-b029-f330d79f1a65"

+ },

+ "outputs": [],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "!git clone https://github.com/redis-developer/redis-ai-resources.git temp_repo\n",

+ "!mkdir -p resources\n",

+ "!mv temp_repo/python-recipes/RAG/resources/aapl-10k-2023.pdf resources/\n",

+ "!mv temp_repo/python-recipes/RAG/resources/2022-chevrolet-colorado-ebrochure.pdf resources/\n",

+ "!rm -rf temp_repo"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "993371a2",

+ "metadata": {

+ "id": "993371a2"

+ },

+ "source": [

+ "### Run Redis Stack\n",

+ "\n",

+ "For this tutorial you will need a running instance of Redis if you don't already have one.\n",

+ "\n",

+ "#### For Colab\n",

+ "Use the shell script below to download, extract, and install [Redis Stack](https://redis.io/docs/getting-started/install-stack/) directly from the Redis package archive."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 4,

+ "id": "8edc5862",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "8edc5862",

+ "outputId": "df2643ed-2422-4ee5-bd42-bec17b405eec"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb jammy main\n",

+ "Starting redis-stack-server, database path /var/lib/redis-stack\n"

+ ]

+ }

+ ],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "%%sh\n",

+ "curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg\n",

+ "echo \"deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main\" | sudo tee /etc/apt/sources.list.d/redis.list\n",

+ "sudo apt-get update > /dev/null 2>&1\n",

+ "sudo apt-get install redis-stack-server > /dev/null 2>&1\n",

+ "redis-stack-server --daemonize yes"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "bc571319",

+ "metadata": {

+ "id": "bc571319"

+ },

+ "source": [

+ "#### For Alternative Environments\n",

+ "There are many ways to get the necessary redis-stack instance running:\n",

+ "1. On cloud, deploy a [FREE instance of Redis in the cloud](https://redis.com/try-free/). Or, if you have your\n",

+ "own version of Redis Enterprise running, that works too!\n",

+ "2. Per OS, [see the docs](https://redis.io/docs/latest/operate/oss_and_stack/install/install-stack/)\n",

+ "3. With docker: `docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest`"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 5,

+ "id": "qU49fNVnu0Nf",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "qU49fNVnu0Nf",

+ "outputId": "4d2f34c3-6179-4f1d-eff7-5e8e9d8fd58b"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Successfully connected to Redis\n"

+ ]

+ }

+ ],

+ "source": [

+ "import os\n",

+ "\n",

+ "from redis import Redis\n",

+ "\n",

+ "# Replace values below with your own if using Redis Cloud instance\n",

+ "REDIS_HOST = os.getenv(\"REDIS_HOST\", \"localhost\") # ex: \"redis-18374.c253.us-central1-1.gce.cloud.redislabs.com\"\n",

+ "REDIS_PORT = os.getenv(\"REDIS_PORT\", \"6379\") # ex: 18374\n",

+ "REDIS_PASSWORD = os.getenv(\"REDIS_PASSWORD\", \"\") # ex: \"1TNxTEdYRDgIDKM2gDfasupCADXXXX\"\n",

+ "\n",

+ "# If SSL is enabled on the endpoint, use rediss:// as the URL prefix\n",

+ "REDIS_URL = f\"redis://:{REDIS_PASSWORD}@{REDIS_HOST}:{REDIS_PORT}\"\n",

+ "\n",

+ "# Connect to Redis (adjust host/port if needed)\n",

+ "redis_client = Redis.from_url(REDIS_URL)\n",

+ "redis_client.ping()\n",

+ "\n",

+ "print(\"Successfully connected to Redis\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "aqzMteQsu0Nf",

+ "metadata": {

+ "id": "aqzMteQsu0Nf"

+ },

+ "source": [

+ "## 2. User Management\n",

+ "\n",

+ "Below is a simple `User` class that stores a user in Redis as JSON. We:\n",

+ "\n",

+ "- Use a Redis key of the form `user:{user_id}`.\n",

+ "- Store fields like `user_id`, `roles`, etc.\n",

+ "- Provide CRUD methods (Create, Read, Update, Delete) for user objects.\n",

+ "\n",

+ "**Data Structure Example**\n",

+ "```json\n",

+ "{\n",

+ " \"user_id\": \"alice\",\n",

+ " \"roles\": [\"finance\", \"manager\"]\n",

+ "}\n",

+ "```\n",

+ "\n",

+ "We'll also include some basic checks to ensure we don't add duplicate roles, handle empty role lists, etc.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 6,

+ "id": "38pdjXJvu0Nf",

+ "metadata": {

+ "id": "38pdjXJvu0Nf"

+ },

+ "outputs": [],

+ "source": [

+ "from typing import List, Optional\n",

+ "from enum import Enum\n",

+ "\n",

+ "\n",

+ "class UserRoles(str, Enum):\n",

+ "    FINANCE = \"finance\"\n",

+ "    MANAGER = \"manager\"\n",

+ "    EXECUTIVE = \"executive\"\n",

+ "    HR = \"hr\"\n",

+ "    SALES = \"sales\"\n",

+ "    PRODUCT = \"product\"\n",

+ "\n",

+ "\n",

+ "class User:\n",

+ "    \"\"\"\n",

+ "    User class for storing user data in Redis.\n",

+ "\n",

+ "    Each user has:\n",

+ "    - user_id (string)\n",

+ "    - roles (list of UserRoles)\n",

+ "\n",

+ "    Key in Redis: user:{user_id}\n",

+ "    \"\"\"\n",

+ "    def __init__(\n",

+ "        self,\n",

+ "        redis_client: Redis,\n",

+ "        user_id: str,\n",

+ "        roles: Optional[List[UserRoles]] = None\n",

+ "    ):\n",

+ "        self.redis_client = redis_client\n",

+ "        self.user_id = user_id\n",

+ "        self.roles = roles or []\n",

+ "\n",

+ "    @property\n",

+ "    def key(self) -> str:\n",

+ "        return f\"user:{self.user_id}\"\n",

+ "\n",

+ "    def exists(self) -> bool:\n",

+ "        \"\"\"Check if the user key exists in Redis.\"\"\"\n",

+ "        return self.redis_client.exists(self.key) == 1\n",

+ "\n",

+ "    def create(self):\n",

+ "        \"\"\"\n",

+ "        Create a new user in Redis. Fails if user already exists.\n",

+ "        \"\"\"\n",

+ "        if self.exists():\n",

+ "            raise ValueError(f\"User {self.user_id} already exists.\")\n",

+ "\n",

+ "        self.save()\n",

+ "\n",

+ "    def save(self):\n",

+ "        \"\"\"\n",

+ "        Save (create or update) the user data in Redis.\n",

+ "        If user does not exist, it will be created.\n",

+ "        \"\"\"\n",

+ "        data = {\n",

+ "            \"user_id\": self.user_id,\n",

+ "            \"roles\": [UserRoles(role).value for role in set(self.roles)]  # ensure roles are unique and convert to strings\n",

+ "        }\n",

+ "        self.redis_client.json().set(self.key, \".\", data)\n",

+ "\n",

+ "    @classmethod\n",

+ "    def get(cls, redis_client: Redis, user_id):\n",

+ "        \"\"\"\n",

+ "        Retrieve a user from Redis.\n",

+ "        \"\"\"\n",

+ "        key = f\"user:{user_id}\"\n",

+ "        data = redis_client.json().get(key)\n",

+ "        if not data:\n",

+ "            return None\n",

+ "        # Convert string roles back to UserRoles enum\n",

+ "        roles = [UserRoles(role) for role in data.get(\"roles\", [])]\n",

+ "        return cls(redis_client, data[\"user_id\"], roles)\n",

+ "\n",

+ "    def update_roles(self, roles: List[UserRoles]):\n",

+ "        \"\"\"\n",

+ "        Overwrite the user's roles in Redis.\n",

+ "        \"\"\"\n",

+ "        self.roles = roles\n",

+ "        self.save()\n",

+ "\n",

+ "    def add_role(self, role: UserRoles):\n",

+ "        \"\"\"Add a single role to the user.\"\"\"\n",

+ "        if role not in self.roles:\n",

+ "            self.roles.append(role)\n",

+ "            self.save()\n",

+ "\n",

+ "    def remove_role(self, role: UserRoles):\n",

+ "        \"\"\"Remove a single role from the user.\"\"\"\n",

+ "        if role in self.roles:\n",

+ "            self.roles.remove(role)\n",

+ "            self.save()\n",

+ "\n",

+ "    def delete(self):\n",

+ "        \"\"\"Delete this user from Redis.\"\"\"\n",

+ "        self.redis_client.delete(self.key)\n",

+ "\n",

+ "    def __repr__(self):\n",

+ "        return f\"<User {self.user_id}, roles={self.roles}>\"\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "FNQxAaoCxPN7",

+ "metadata": {

+ "id": "FNQxAaoCxPN7"

+ },

+ "source": [

+ "### Example usage of User class"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 7,

+ "id": "_WcOlgVyu0Ng",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "_WcOlgVyu0Ng",

+ "outputId": "0776fa25-513b-445b-d46d-35d9333b3a75"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "User 'alice' created.\n",

+ "Retrieved: \n",

+ "After adding 'executive': \n",

+ "After removing 'manager': \n"

+ ]

+ }

+ ],

+ "source": [

+ "# Example usage of the User class\n",

+ "\n",

+ "# Let's create a new user\n",

+ "alice = User(redis_client, \"alice\", roles=[\"finance\", \"manager\"])\n",

+ "\n",

+ "# We'll save the user in Redis\n",

+ "try:\n",

+ " alice.create()\n",

+ " print(\"User 'alice' created.\")\n",

+ "except ValueError as e:\n",

+ " print(e)\n",

+ "\n",

+ "# Retrieve the user\n",

+ "alice_obj = User.get(redis_client, \"alice\")\n",

+ "print(\"Retrieved:\", alice_obj)\n",

+ "\n",

+ "# Add another role\n",

+ "alice_obj.add_role(\"executive\")\n",

+ "print(\"After adding 'executive':\", alice_obj)\n",

+ "\n",

+ "# Remove a role\n",

+ "alice_obj.remove_role(\"manager\")\n",

+ "print(\"After removing 'manager':\", alice_obj)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 8,

+ "id": "c911e892",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "c911e892",

+ "outputId": "df4666ff-97ce-4e75-d70c-75fe5d9e6703"

+ },

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ ""

+ ]

+ },

+ "execution_count": 8,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "# Take a peek at the user object itself\n",

+ "alice"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 9,

+ "id": "P3j6yu8l87j3",

+ "metadata": {

+ "id": "P3j6yu8l87j3"

+ },

+ "outputs": [],

+ "source": [

+ "# Create one more user\n",

+ "larry = User(redis_client, \"larry\", roles=[\"product\"])\n",

+ "larry.create()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "Y7B4l7XVx5md",

+ "metadata": {

+ "id": "Y7B4l7XVx5md"

+ },

+ "source": [

+ ">💡 Using a cloud DB? Take a peek at your instance using [RedisInsight](https://redis.io/insight) to see what user data is in place."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "aCXYFXu0u0Ng",

+ "metadata": {

+ "id": "aCXYFXu0u0Ng"

+ },

+ "source": [

+ "## 3. Document Management (Using LangChain)\n",

+ "\n",

+ "Here, we'll use **LangChain** for document loading and chunking, and an OpenAI embedding model for vectorizing. Then, we'll **store the document chunks** in Redis as JSON. Each stored chunk will look like:\n",

+ "\n",

+ "```json\n",

+ "{\n",

+ " \"doc_id\": \"123\",\n",

+ " \"chunk_id\": \"123\",\n",

+ " \"path\": \"resources/doc.pdf\",\n",

+ " \"title\": \"Quarterly Finance Report\",\n",

+ " \"content\": \"Some text...\",\n",

+ " \"allowed_roles\": [\"finance\", \"executive\"],\n",

+ " \"embedding\": [0.12, 0.98, ...] \n",

+ "}\n",

+ "```"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "d3cJ5DSP5vXt",

+ "metadata": {

+ "id": "d3cJ5DSP5vXt"

+ },

+ "source": [

+ "### Building a document knowledge base\n",

+ "We will create a `KnowledgeBase` class to encapsulate document processing logic and search. The class will handle:\n",

+ "1. Document ingest and chunking\n",

+ "2. Role tagging with a simple string-matching rule on the file name (customize this for your use case)\n",

+ "3. Retrieval over the entire document corpus adhering to provided user roles\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 23,

+ "id": "67d38524",

+ "metadata": {

+ "id": "67d38524"

+ },

+ "outputs": [],

+ "source": [

+ "from typing import List, Optional, Dict, Any, Set\n",

+ "from pathlib import Path\n",

+ "import uuid\n",

+ "\n",

+ "from langchain_community.document_loaders import PyPDFLoader\n",

+ "from langchain.text_splitter import RecursiveCharacterTextSplitter\n",

+ "from redisvl.index import SearchIndex\n",

+ "from redisvl.query import VectorQuery\n",

+ "from redisvl.query.filter import FilterExpression, Tag\n",

+ "from redisvl.utils.vectorize import OpenAITextVectorizer\n",

+ "\n",

+ "\n",

+ "class KnowledgeBase:\n",

+ "    \"\"\"Manages document processing, embedding, and storage in Redis.\"\"\"\n",

+ "\n",

+ "    def __init__(\n",

+ "        self,\n",

+ "        redis_client,\n",

+ "        embeddings_model: str = \"text-embedding-3-small\",\n",

+ "        chunk_size: int = 2500,\n",

+ "        chunk_overlap: int = 100\n",

+ "    ):\n",

+ "        self.redis_client = redis_client\n",

+ "        self.embeddings = OpenAITextVectorizer(model=embeddings_model)\n",

+ "        self.text_splitter = RecursiveCharacterTextSplitter(\n",

+ "            chunk_size=chunk_size,\n",

+ "            chunk_overlap=chunk_overlap,\n",

+ "        )\n",

+ "\n",

+ "        # Initialize document search index\n",

+ "        self.index = self._create_search_index()\n",

+ "\n",

+ "    def _create_search_index(self) -> SearchIndex:\n",

+ "        \"\"\"Create the Redis search index for documents.\"\"\"\n",

+ "        schema = {\n",

+ "            \"index\": {\n",

+ "                \"name\": \"docs\",\n",

+ "                \"prefix\": \"doc\",\n",

+ "                \"storage_type\": \"json\"\n",

+ "            },\n",

+ "            \"fields\": [\n",

+ "                {\n",

+ "                    \"name\": \"doc_id\",\n",

+ "                    \"type\": \"tag\",\n",

+ "                },\n",

+ "                {\n",

+ "                    \"name\": \"chunk_id\",\n",

+ "                    \"type\": \"tag\",\n",

+ "                },\n",

+ "                {\n",

+ "                    \"name\": \"allowed_roles\",\n",

+ "                    \"path\": \"$.allowed_roles[*]\",\n",

+ "                    \"type\": \"tag\",\n",

+ "                },\n",

+ "                {\n",

+ "                    \"name\": \"content\",\n",

+ "                    \"type\": \"text\",\n",

+ "                },\n",

+ "                {\n",

+ "                    \"name\": \"embedding\",\n",

+ "                    \"type\": \"vector\",\n",

+ "                    \"attrs\": {\n",

+ "                        \"dims\": self.embeddings.dims,\n",

+ "                        \"distance_metric\": \"cosine\",\n",

+ "                        \"algorithm\": \"flat\",\n",

+ "                        \"datatype\": \"float32\"\n",

+ "                    }\n",

+ "                }\n",

+ "            ]\n",

+ "        }\n",

+ "        index = SearchIndex.from_dict(schema, redis_client=self.redis_client)\n",

+ "        index.create()\n",

+ "        return index\n",

+ "\n",

+ "    def ingest(self, doc_path: str, allowed_roles: Optional[List[str]] = None) -> str:\n",

+ "        \"\"\"\n",

+ "        Load a document, chunk it, create embeddings, and store in Redis.\n",

+ "        Returns the document ID.\n",

+ "        \"\"\"\n",

+ "        # Generate document ID\n",

+ "        doc_id = str(uuid.uuid4())\n",

+ "        path = Path(doc_path)\n",

+ "\n",

+ "        if not path.exists():\n",

+ "            raise FileNotFoundError(f\"Document not found: {doc_path}\")\n",

+ "\n",

+ "        # Load and chunk document\n",

+ "        loader = PyPDFLoader(str(path))\n",

+ "        pages = loader.load()\n",

+ "        chunks = self.text_splitter.split_documents(pages)\n",

+ "        print(f\"Extracted {len(chunks)} for doc {doc_id} from file {str(path)}\", flush=True)\n",

+ "\n",

+ "        # If roles not provided, determine from filename\n",

+ "        if allowed_roles is None:\n",

+ "            allowed_roles = self._determine_roles(path)\n",

+ "\n",

+ "        # Prepare chunks for Redis\n",

+ "        data, keys = [], []\n",

+ "        for i, chunk in enumerate(chunks):\n",

+ "            # Create embedding w/ openai\n",

+ "            embedding = self.embeddings.embed(chunk.page_content)\n",

+ "\n",

+ "            # Prepare chunk payload\n",

+ "            chunk_id = f\"chunk_{i}\"\n",

+ "            key = f\"doc:{doc_id}:{chunk_id}\"\n",

+ "            data.append({\n",

+ "                \"doc_id\": doc_id,\n",

+ "                \"chunk_id\": chunk_id,\n",

+ "                \"path\": str(path),\n",

+ "                \"content\": chunk.page_content,\n",

+ "                \"allowed_roles\": list(allowed_roles),\n",

+ "                \"embedding\": embedding,\n",

+ "            })\n",

+ "            keys.append(key)\n",

+ "\n",

+ "        # Store in Redis\n",

+ "        _ = self.index.load(data=data, keys=keys)\n",

+ "        print(f\"Loaded {len(chunks)} chunks for document {doc_id}\")\n",

+ "        return doc_id\n",

+ "\n",

+ "    def _determine_roles(self, file_path: Path) -> Set[str]:\n",

+ "        \"\"\"Determine allowed roles based on file path and name patterns.\"\"\"\n",

+ "        # Customize based on use case and business logic\n",

+ "        ROLE_PATTERNS = {\n",

+ "            ('10k', 'financial', 'earnings', 'revenue'):\n",

+ "                {'finance', 'executive'},\n",

+ "            ('brochure', 'spec', 'product', 'manual'):\n",

+ "                {'product', 'sales'},\n",

+ "            ('hr', 'handbook', 'policy', 'employee'):\n",

+ "                {'hr', 'manager'},\n",

+ "            ('sales', 'pricing', 'customer'):\n",

+ "                {'sales', 'manager'}\n",

+ "        }\n",

+ "\n",

+ "        filename = file_path.name.lower()\n",

+ "        roles = {\n",

+ "            role for terms, roles in ROLE_PATTERNS.items()\n",

+ "            for role in roles\n",

+ "            if any(term in filename for term in terms)\n",

+ "        }\n",

+ "        return roles or {'executive'}\n",

+ "\n",

+ "    @staticmethod\n",

+ "    def role_filter(user_roles: List[str]) -> FilterExpression:\n",

+ "        \"\"\"Generate a Redis filter based on provided user roles.\"\"\"\n",

+ "        return Tag(\"allowed_roles\") == user_roles\n",

+ "\n",

+ "    def search(self, query: str, user_roles: List[str], top_k: int = 5) -> List[Dict[str, Any]]:\n",

+ "        \"\"\"\n",

+ "        Search for documents matching the query and user roles.\n",

+ "        Returns list of matching documents.\n",

+ "        \"\"\"\n",

+ "        # Create query vector\n",

+ "        query_vector = self.embeddings.embed(query)\n",

+ "\n",

+ "        # Build role filter\n",

+ "        roles_filter = self.role_filter(user_roles)\n",

+ "\n",

+ "        # Execute search\n",

+ "        return self.index.query(\n",

+ "            VectorQuery(\n",

+ "                vector=query_vector,\n",

+ "                vector_field_name=\"embedding\",\n",

+ "                filter_expression=roles_filter,\n",

+ "                return_fields=[\"doc_id\", \"chunk_id\", \"allowed_roles\", \"content\"],\n",

+ "                num_results=top_k,\n",

+ "                dialect=4\n",

+ "            )\n",

+ "        )\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "YsBuAa_q9QU_",

+ "metadata": {

+ "id": "YsBuAa_q9QU_"

+ },

+ "source": [

+ "Load a document into the knowledge base."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 12,

+ "id": "s1LDdWhKu0Nh",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "s1LDdWhKu0Nh",

+ "outputId": "66e1105e-78ba-425a-8156-c810c7c9054a"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "21:09:47 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "Extracted 34 for doc f2c7171a-16cc-4aad-a777-ed7202bd7212 from file resources/2022-chevy-colorado-ebrochure.pdf\n",

+ "21:09:49 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:49 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:50 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:50 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:51 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:51 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:52 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:52 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:53 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:54 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:54 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:55 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:55 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:55 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:56 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:56 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:56 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:57 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:57 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:57 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:09:58 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:01 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:02 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:02 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:05 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:05 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:05 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:06 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:06 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:06 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "21:10:07 httpx INFO HTTP Request: POST https://api.openai.com/v1/embeddings \"HTTP/1.1 200 OK\"\n",

+ "Loaded 34 chunks for document f2c7171a-16cc-4aad-a777-ed7202bd7212\n",

+ "Loaded all chunks for f2c7171a-16cc-4aad-a777-ed7202bd7212\n"

+ ]

+ }

+ ],

+ "source": [

+ "kb = KnowledgeBase(redis_client)\n",

+ "\n",

+ "doc_id = kb.ingest(\"resources/2022-chevrolet-colorado-ebrochure.pdf\")\n",

+ "print(f\"Loaded all chunks for {doc_id}\", flush=True)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "-Ekqkf1fu0Nh",

+ "metadata": {

+ "id": "-Ekqkf1fu0Nh"

+ },

+ "source": [

+ "## 4. User Query Flow\n",

+ "\n",

+ "Now that our user DB and vector DB are loaded in Redis, we will perform:\n",

+ "\n",

+ "1. **Vector Similarity Search** on `embedding`.\n",

+ "2. A metadata **Filter** based on `allowed_roles`.\n",

+ "3. Return top-k matching document chunks.\n",

+ "\n",

+ "This is implemented below.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 13,

+ "id": "WpvrXmluu0Nh",

+ "metadata": {

+ "id": "WpvrXmluu0Nh"

+ },

+ "outputs": [],

+ "source": [

+ "def user_query(user_id: str, query: str):\n",

+ "    \"\"\"\n",

+ "    Placeholder for a search function.\n",

+ "    1. Load the user's roles.\n",

+ "    2. Perform a vector search for docs.\n",

+ "    3. Filter docs that match at least one of the user's roles.\n",

+ "    4. Return top-K results.\n",

+ "    \"\"\"\n",

+ "    # 1. Load & validate user roles\n",

+ "    user_obj = User.get(redis_client, user_id)\n",

+ "    if not user_obj:\n",

+ "        raise ValueError(f\"User {user_id} not found.\")\n",

+ "\n",

+ "    roles = set([role.value for role in user_obj.roles])\n",

+ "    if not roles:\n",

+ "        raise ValueError(f\"User {user_id} does not have any roles.\")\n",

+ "\n",

+ "    # 2. Retrieve document chunks\n",

+ "    results = kb.search(query, roles)\n",

+ "\n",

+ "    if not results:\n",

+ "        raise ValueError(f\"No available documents found for {user_id}\")\n",

+ "\n",

+ "    return results"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "qQS1BLwGBVDA",

+ "metadata": {

+ "id": "qQS1BLwGBVDA"

+ },

+ "source": [

+ "### Search examples\n",

+ "\n",

+ "Search with a non-existent user."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 14,

+ "id": "wYishsNy6lty",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 287

+ },

+ "id": "wYishsNy6lty",

+ "outputId": "dfa5a8b5-d926-4e94-e8a1-ecceb51ccff5"

+ },

+ "outputs": [

+ {

+ "ename": "ValueError",

+ "evalue": "User tyler not found.",

+ "output_type": "error",

+ "traceback": [

+ "\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

+ "\u001b[0;31mValueError\u001b[0m Traceback (most recent call last)",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36m\u001b[0;34m()\u001b[0m\n\u001b[1;32m 1\u001b[0m \u001b[0;31m# Search with a non-existent user\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m----> 2\u001b[0;31m \u001b[0mresults\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0muser_query\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m\"tyler\"\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mquery\u001b[0m\u001b[0;34m=\u001b[0m\u001b[0;34m\"What is the make and model of the vehicle here?\"\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36muser_query\u001b[0;34m(user_id, query)\u001b[0m\n\u001b[1;32m 10\u001b[0m \u001b[0muser_obj\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0mUser\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mget\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mredis_client\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0muser_id\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 11\u001b[0m \u001b[0;32mif\u001b[0m \u001b[0;32mnot\u001b[0m \u001b[0muser_obj\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m---> 12\u001b[0;31m \u001b[0;32mraise\u001b[0m \u001b[0mValueError\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34mf\"User {user_id} not found.\"\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 13\u001b[0m \u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 14\u001b[0m \u001b[0mroles\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0mset\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m[\u001b[0m\u001b[0mrole\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mvalue\u001b[0m \u001b[0;32mfor\u001b[0m \u001b[0mrole\u001b[0m \u001b[0;32min\u001b[0m \u001b[0muser_obj\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mroles\u001b[0m\u001b[0;34m]\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

+ "\u001b[0;31mValueError\u001b[0m: User tyler not found."

+ ]

+ }

+ ],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "results = user_query(\"tyler\", query=\"What is the make and model of the vehicle here?\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "0af59693",

+ "metadata": {},

+ "source": [

+ "Create user for Tyler."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 15,

+ "id": "ZNgxlQSvChx7",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 329

+ },

+ "id": "ZNgxlQSvChx7",

+ "outputId": "d59aad34-2d24-4c87-dd42-b9a44ccaf26b"

+ },

+ "outputs": [

+ {

+ "ename": "ValueError",

+ "evalue": "'engineering' is not a valid UserRoles",

+ "output_type": "error",

+ "traceback": [

+ "\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

+ "\u001b[0;31mValueError\u001b[0m Traceback (most recent call last)",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36m\u001b[0;34m()\u001b[0m\n\u001b[1;32m 1\u001b[0m \u001b[0;31m# Create user for Tyler\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 2\u001b[0m \u001b[0mtyler\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0mUser\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mredis_client\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0;34m\"tyler\"\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mroles\u001b[0m\u001b[0;34m=\u001b[0m\u001b[0;34m[\u001b[0m\u001b[0;34m\"sales\"\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0;34m\"engineering\"\u001b[0m\u001b[0;34m]\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m----> 3\u001b[0;31m \u001b[0mtyler\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mcreate\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36mcreate\u001b[0;34m(self)\u001b[0m\n\u001b[1;32m 47\u001b[0m \u001b[0;32mraise\u001b[0m \u001b[0mValueError\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34mf\"User {self.user_id} already exists.\"\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 48\u001b[0m \u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m---> 49\u001b[0;31m \u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0msave\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 50\u001b[0m \u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 51\u001b[0m \u001b[0;32mdef\u001b[0m \u001b[0msave\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mself\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36msave\u001b[0;34m(self)\u001b[0m\n\u001b[1;32m 56\u001b[0m data = {\n\u001b[1;32m 57\u001b[0m \u001b[0;34m\"user_id\"\u001b[0m\u001b[0;34m:\u001b[0m \u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0muser_id\u001b[0m\u001b[0;34m,\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m---> 58\u001b[0;31m \u001b[0;34m\"roles\"\u001b[0m\u001b[0;34m:\u001b[0m \u001b[0;34m[\u001b[0m\u001b[0mUserRoles\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mrole\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mvalue\u001b[0m \u001b[0;32mfor\u001b[0m \u001b[0mrole\u001b[0m \u001b[0;32min\u001b[0m \u001b[0mset\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mroles\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m]\u001b[0m \u001b[0;31m# ensure roles are unique and convert to strings\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 59\u001b[0m }\n\u001b[1;32m 60\u001b[0m \u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mredis_client\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mjson\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mset\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mkey\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0;34m\".\"\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mdata\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

+ "\u001b[0;32m\u001b[0m in \u001b[0;36m\u001b[0;34m(.0)\u001b[0m\n\u001b[1;32m 56\u001b[0m data = {\n\u001b[1;32m 57\u001b[0m \u001b[0;34m\"user_id\"\u001b[0m\u001b[0;34m:\u001b[0m \u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0muser_id\u001b[0m\u001b[0;34m,\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m---> 58\u001b[0;31m \u001b[0;34m\"roles\"\u001b[0m\u001b[0;34m:\u001b[0m \u001b[0;34m[\u001b[0m\u001b[0mUserRoles\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mrole\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mvalue\u001b[0m \u001b[0;32mfor\u001b[0m \u001b[0mrole\u001b[0m \u001b[0;32min\u001b[0m \u001b[0mset\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mroles\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m]\u001b[0m \u001b[0;31m# ensure roles are unique and convert to strings\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 59\u001b[0m }\n\u001b[1;32m 60\u001b[0m \u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mredis_client\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mjson\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mset\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mself\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mkey\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0;34m\".\"\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mdata\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

+ "\u001b[0;32m/usr/lib/python3.11/enum.py\u001b[0m in \u001b[0;36m__call__\u001b[0;34m(cls, value, names, module, qualname, type, start, boundary)\u001b[0m\n\u001b[1;32m 712\u001b[0m \"\"\"\n\u001b[1;32m 713\u001b[0m \u001b[0;32mif\u001b[0m \u001b[0mnames\u001b[0m \u001b[0;32mis\u001b[0m \u001b[0;32mNone\u001b[0m\u001b[0;34m:\u001b[0m \u001b[0;31m# simple value lookup\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m--> 714\u001b[0;31m \u001b[0;32mreturn\u001b[0m \u001b[0mcls\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0m__new__\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mcls\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mvalue\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 715\u001b[0m \u001b[0;31m# otherwise, functional API: we're creating a new Enum type\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 716\u001b[0m return cls._create_(\n",

+ "\u001b[0;32m/usr/lib/python3.11/enum.py\u001b[0m in \u001b[0;36m__new__\u001b[0;34m(cls, value)\u001b[0m\n\u001b[1;32m 1135\u001b[0m \u001b[0mve_exc\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0mValueError\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0;34m\"%r is not a valid %s\"\u001b[0m \u001b[0;34m%\u001b[0m \u001b[0;34m(\u001b[0m\u001b[0mvalue\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mcls\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0m__qualname__\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 1136\u001b[0m \u001b[0;32mif\u001b[0m \u001b[0mresult\u001b[0m \u001b[0;32mis\u001b[0m \u001b[0;32mNone\u001b[0m \u001b[0;32mand\u001b[0m \u001b[0mexc\u001b[0m \u001b[0;32mis\u001b[0m \u001b[0;32mNone\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m-> 1137\u001b[0;31m \u001b[0;32mraise\u001b[0m \u001b[0mve_exc\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 1138\u001b[0m \u001b[0;32melif\u001b[0m \u001b[0mexc\u001b[0m \u001b[0;32mis\u001b[0m \u001b[0;32mNone\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 1139\u001b[0m exc = TypeError(\n",

+ "\u001b[0;31mValueError\u001b[0m: 'engineering' is not a valid UserRoles"

+ ]

+ }

+ ],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "tyler = User(redis_client, \"tyler\", roles=[\"sales\", \"engineering\"])  # \"engineering\" is not a valid UserRoles value, so create() raises ValueError\n",

+ "tyler.create()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 16,

+ "id": "WWVJF0UVCt4d",

+ "metadata": {

+ "collapsed": true,

+ "id": "WWVJF0UVCt4d"

+ },

+ "outputs": [],

+ "source": [

+ "# Try again, this time with only valid roles\n",

+ "tyler = User(redis_client, \"tyler\", roles=[\"sales\"])\n",

+ "tyler.create()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 17,

+ "id": "DXEyktWLC1cC",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "DXEyktWLC1cC",

+ "outputId": "dbb6e93f-3b81-4c14-f329-daf97a613c89"

+ },

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ ""

+ ]

+ },

+ "execution_count": 17,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "tyler"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 18,

+ "id": "O0K_rdC7C6OH",

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "O0K_rdC7C6OH",

+ "outputId": "f823f253-cf42-4975-f711-6391b36f83bd"

+ },

+ "outputs": [

+ {