
org.redisson.client.RedisTimeoutException: Unable to acquire connection! RedissonPromise #4299

Comments

The solution given at that time was never a final answer, and it did not explain what caused the problem above.

Could you try version 3.17.1?

Do you mean the problem above exists in 3.16.1?

I'm unable to reproduce it with the latest version.

There is indeed a change in 3.17.1.


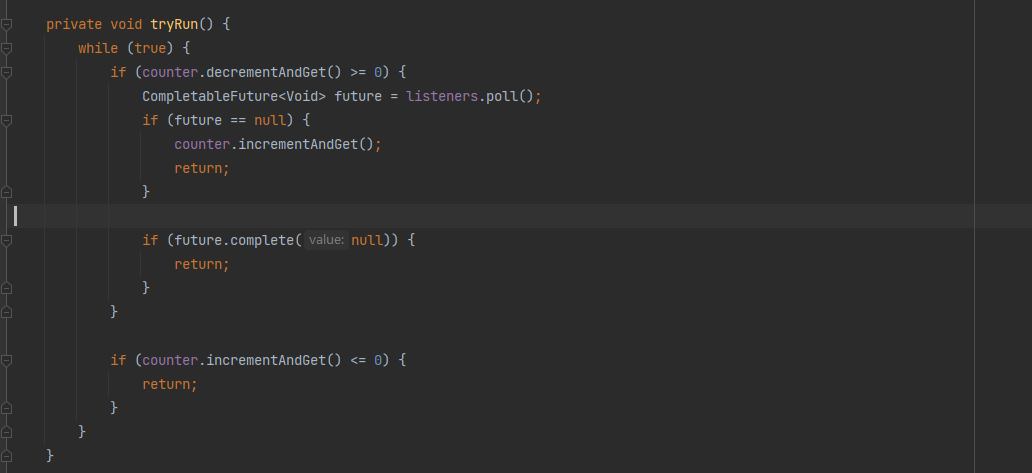

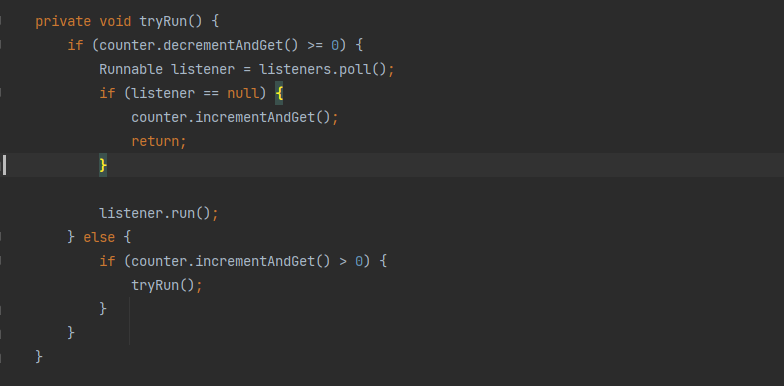

Yes, I improved AsyncSemaphore a bit.

I really don't want to hijack this issue, but since upgrading to 3.17.0/3.17.3 we also see a lot of these errors in our logs. Other things also start to throw exceptions sporadically, like this one for example: or other ClassCastExceptions like this (both are RLock related). Interestingly, it only happens on one node, so it does not seem to be a Redis cluster issue (we use ElastiCache). Sometimes it works again after a couple of minutes; sometimes it takes 30 minutes for this single node to become operational again (almost all calls through Redisson fail during this time). I think we will try downgrading to 3.16.7 and see how things evolve. We use Java 11 and Redisson 3.17.0/3.17.3 (the same version on all nodes at any given time).

Could you set

@ycy1991 Can you share your Redisson config?


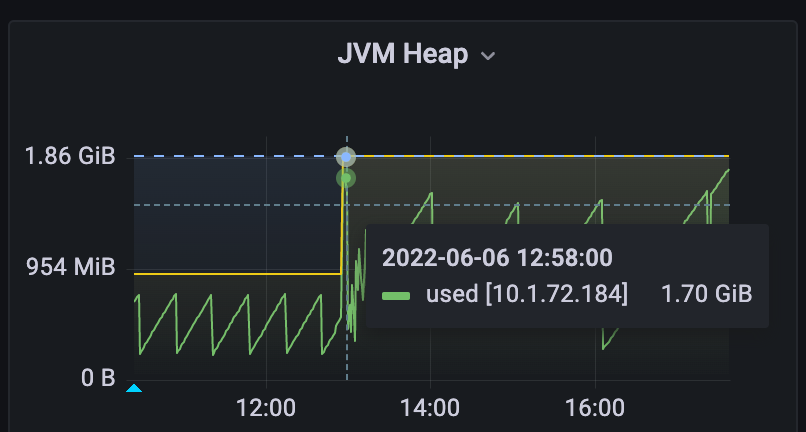

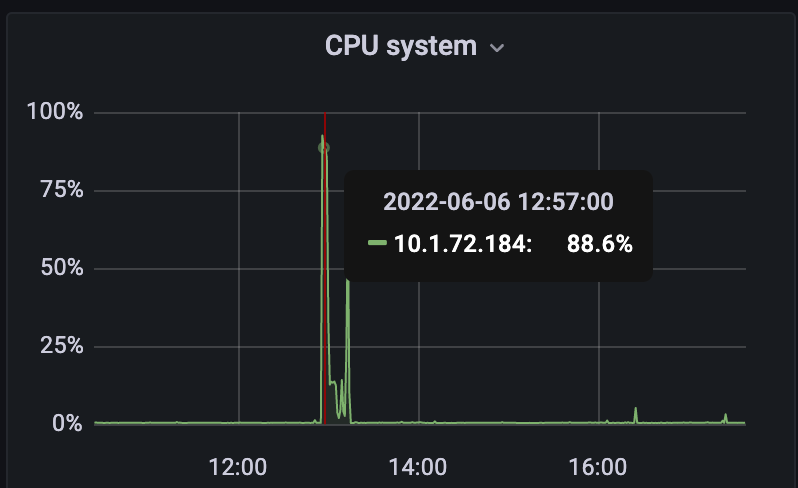

@mrniko Edit: The only other thing I noticed is that whenever this happens, we also see a spike in JVM heap usage, sometimes with 100% more heap allocated during these incidents. Not all connections seem to be affected; some calls go through without any problem. Maybe a dead/stale Netty connection?

Which Redisson objects do you use?

@mrniko

Which value is about 2000?

Thank you for the details. Did you get a heap dump at the moment of the OOM?


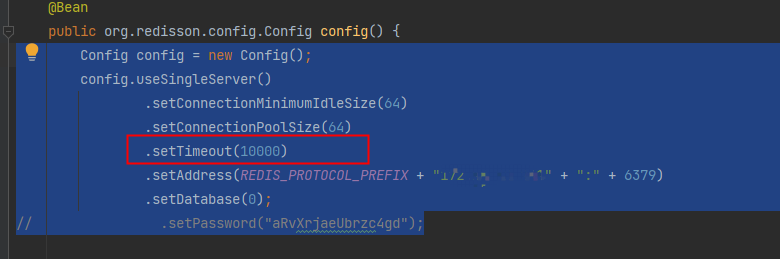

Can you also share the Redisson settings in effect at that moment?

Unfortunately not. Something on my long list of things to do :D

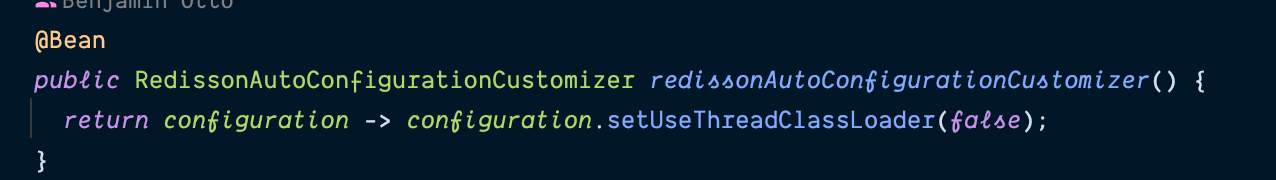

Default Spring Boot starter config, except for (without this we had issues with thread locals, e.g. from Spring Data...)

Can you clarify here? |

Sorry, I mixed things up here a little bit. At some point we introduced I think this is an issue with the underlying Tomcat in Spring Boot if you use the standard pool for

Can you try version 3.16.8 as well?

@mrniko

I think I have fixed the issue. Please try the attached version.

@mrniko Could you publish this snapshot to a Maven repository? It's quite hard to get it into our applications otherwise.


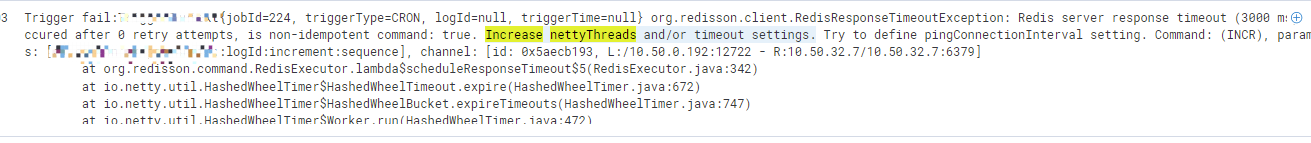

Just FYI, the exceptions this time:

I'll apply the changes and make a release.

It seems to be a connection issue.

Version 3.17.4 has been released. Please try it.

We tested 3.17.4 for several days and it seems fine for us.

Great, thanks for the feedback. I'm closing it.

Is your feature request related to a problem? Please describe.

Version 3.16.1. See the exceptions:

org.redisson.client.RedisTimeoutException: Unable to acquire connection! RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@4bc618ba(failure: java.util.concurrent.CancellationException)]Increase connection pool size. Node source: NodeSource [slot=null, addr=null, redisClient=null, redirect=null, entry=MasterSlaveEntry [masterEntry=[freeSubscribeConnectionsAmount=0, freeSubscribeConnectionsCounter=value:49:queue:0, freeConnectionsAmount=22, freeConnectionsCounter=value:-2144903244:queue:26923, freezeReason=null, client=[addr=redis://], nodeType=MASTER, firstFail=0]]], command: null, params: null after 0 retryattempts
	at org.redisson.command.RedisExecutor$2.run(RedisExecutor.java:187)
	at io.netty.util.HashedWheelTimer$HashedWheelTimeout.expire(HashedWheelTimer.java:672)
	at io.netty.util.HashedWheelTimer$HashedWheelBucket.expireTimeouts(HashedWheelTimer.java:747)
	at io.netty.util.HashedWheelTimer$Worker.run(HashedWheelTimer.java:472)
	at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
	at java.lang.Thread.run(Thread.java:748)

Why does freeConnectionsCounter reach a value at the very edge of the int range?

freeConnectionsAmount=22, freeConnectionsCounter=value:-2144903244:queue:26923
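For reference, -2144903244 is still a legal int: it sits exactly 2,580,404 above `Integer.MIN_VALUE`. A value that close to the bottom of the range is consistent with a counter that was pushed past `Integer.MAX_VALUE` and silently wrapped around (Java `int` addition does not check for overflow), although an enormous number of unbalanced decrements would also produce it. The sketch below only demonstrates the arithmetic; it says nothing about what Redisson actually did, and `CounterWrapDemo` and its helpers are hypothetical names.

```java
public class CounterWrapDemo {

    /** Distance of an int value above Integer.MIN_VALUE, computed in long so it cannot overflow. */
    static long distanceFromMin(int value) {
        return (long) value - Integer.MIN_VALUE;
    }

    /** Applies `steps` plain int increments starting from `start`; wraps silently on overflow. */
    static int incrementWrapping(int start, int steps) {
        int counter = start;
        for (int i = 0; i < steps; i++) {
            counter++; // no overflow check: MAX_VALUE + 1 becomes MIN_VALUE
        }
        return counter;
    }

    public static void main(String[] args) {
        int observed = -2144903244; // freeConnectionsCounter value from the log above

        System.out.println(distanceFromMin(observed));                               // 2580404
        System.out.println(incrementWrapping(Integer.MAX_VALUE, 1));                 // -2147483648
        System.out.println(incrementWrapping(Integer.MAX_VALUE, 2580405) == observed); // true

        // Math.addExact reports the overflow instead of wrapping.
        try {
            Math.addExact(Integer.MAX_VALUE, 1);
        } catch (ArithmeticException e) {
            System.out.println("overflow detected: " + e.getMessage());
        }
    }
}
```

Using `Math.addExact`/`Math.subtractExact` in a counter turns a silent wrap into an immediate exception, which makes this class of bug much easier to spot.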

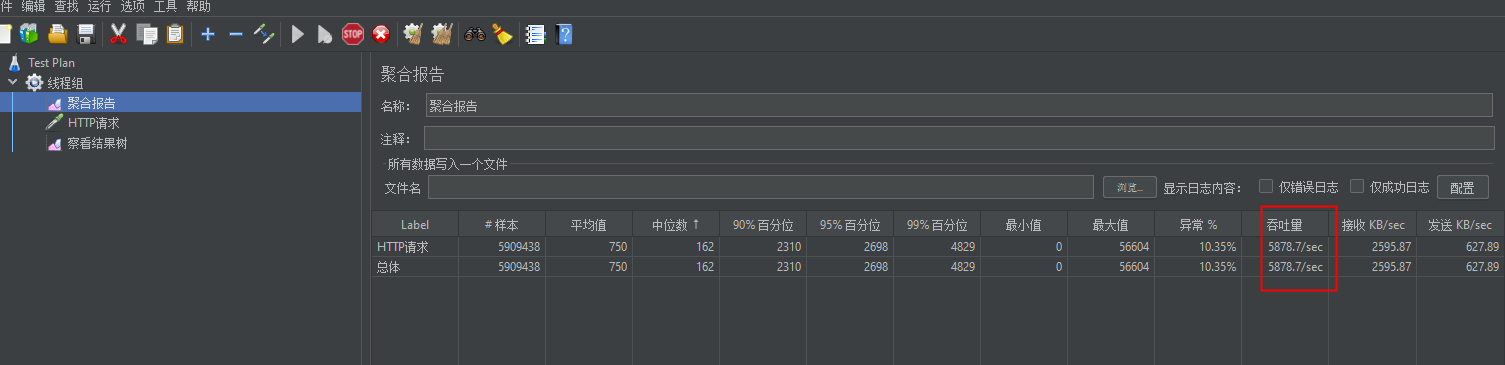

The errors above can be reproduced locally by simulating a disconnection. In that case, freeConnectionsCounter always stays at 0.
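The thread does not say what was changed in Redisson's AsyncSemaphore, so the following is only a generic illustration of one way a semaphore can get stuck at 0 after disconnections. Note that the promise in the exception above failed with a `CancellationException`. If an acquire that timed out is cancelled but left in the wait queue, a naive `release()` may hand its permit to that dead future and lose it. `SketchAsyncSemaphore` and `skipCancelledWaiters` are hypothetical names, not Redisson code, and the methods are `synchronized` to keep the sketch obviously correct rather than fast.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.CompletableFuture;

/** Hypothetical, simplified async semaphore for illustration only (not Redisson's AsyncSemaphore). */
public class SketchAsyncSemaphore {
    private int permits;
    private final Deque<CompletableFuture<Void>> waiters = new ArrayDeque<>();
    // false = naive release() that loses the permit when the next waiter was already cancelled
    private final boolean skipCancelledWaiters;

    public SketchAsyncSemaphore(int permits, boolean skipCancelledWaiters) {
        this.permits = permits;
        this.skipCancelledWaiters = skipCancelledWaiters;
    }

    public synchronized int availablePermits() {
        return permits;
    }

    /** Completes immediately if a permit is free, otherwise queues the caller. */
    public synchronized CompletableFuture<Void> acquire() {
        CompletableFuture<Void> future = new CompletableFuture<>();
        if (permits > 0) {
            permits--;
            future.complete(null);
        } else {
            waiters.add(future);
        }
        return future;
    }

    /** Hands the permit to the next live waiter, or returns it to the pool. */
    public synchronized void release() {
        CompletableFuture<Void> next;
        while ((next = waiters.poll()) != null) {
            if (next.complete(null)) {
                return; // a live waiter now owns the permit
            }
            // complete() returned false: the waiter was already cancelled (e.g. it timed out)
            if (!skipCancelledWaiters) {
                return; // naive version: the permit went to a dead future and is lost
            }
            // fixed version: skip the dead waiter and try the next one
        }
        permits++; // no live waiter left: put the permit back
    }

    /** One holder, one queued waiter that gets cancelled, then the holder releases. */
    static int permitsAfterCancelledWaiter(boolean skipCancelledWaiters) {
        SketchAsyncSemaphore sem = new SketchAsyncSemaphore(1, skipCancelledWaiters);
        sem.acquire();                                  // takes the only permit
        CompletableFuture<Void> waiter = sem.acquire(); // pool empty: queued
        waiter.cancel(false);                           // simulates an acquire timeout
        sem.release();                                  // holder returns its permit
        return sem.availablePermits();
    }

    public static void main(String[] args) {
        System.out.println("naive: " + permitsAfterCancelledWaiter(false)); // naive: 0
        System.out.println("fixed: " + permitsAfterCancelledWaiter(true));  // fixed: 1
    }
}
```

In the naive variant every cancelled waiter swallows one permit, so after enough timeouts the pool drains to 0 and never recovers, even though no connection is actually in use.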

In the production environment, I don't know why freeConnectionsCounter ends up at the edge of the int range every time the problem occurs. The semaphore's range is supposed to be bounded by the maximum number of connections, so why can freeConnectionsCounter keep decreasing without limit? What drives freeConnectionsCounter to the edge of the int range?
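The question above assumes an invariant: the free-connection counter should always stay within [0, pool size]. As a hedged illustration only (`BoundedPermitCounter` is a hypothetical class, not Redisson's implementation), a counter can enforce that invariant with a compare-and-set loop, so that an unmatched release fails loudly instead of letting the value drift.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical permit counter that refuses to leave the range [0, max]. */
public class BoundedPermitCounter {
    private final int max;
    private final AtomicInteger free;

    public BoundedPermitCounter(int max) {
        if (max < 0) {
            throw new IllegalArgumentException("max must be >= 0");
        }
        this.max = max;
        this.free = new AtomicInteger(max);
    }

    /** Takes one permit if available; never lets the count go below 0. */
    public boolean tryAcquire() {
        while (true) {
            int current = free.get();
            if (current == 0) {
                return false; // pool exhausted
            }
            if (free.compareAndSet(current, current - 1)) {
                return true;
            }
            // another thread changed the value concurrently: retry
        }
    }

    /** Returns one permit; a release that would push the count above max is treated as a bug. */
    public void release() {
        while (true) {
            int current = free.get();
            if (current == max) {
                throw new IllegalStateException(
                        "release() without matching acquire: free=" + current + ", max=" + max);
            }
            if (free.compareAndSet(current, current + 1)) {
                return;
            }
        }
    }

    public int available() {
        return free.get();
    }

    public static void main(String[] args) {
        BoundedPermitCounter counter = new BoundedPermitCounter(2);
        System.out.println(counter.tryAcquire()); // true
        System.out.println(counter.tryAcquire()); // true
        System.out.println(counter.tryAcquire()); // false: count stays at 0
        counter.release();
        counter.release();
        try {
            counter.release(); // unmatched release
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        System.out.println(counter.available()); // 2
    }
}
```

Failing fast on an unmatched release trades some availability for diagnosability: the bug surfaces at the offending call site rather than as a counter that has silently drifted far outside its expected range.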
