diff --git a/docs/cspell.json b/docs/cspell.json

index 3d8494ea388e..9bc9e15152ce 100644

--- a/docs/cspell.json

+++ b/docs/cspell.json

@@ -210,6 +210,7 @@

"Nikhila",

"nohash",

"noqa",

+ "nuScenes",

"numpy",

"nyud",

"obbs",

diff --git a/examples/manifest.yml b/examples/manifest.yml

index fd5d7c5b371e..87f1fe7d4086 100644

--- a/examples/manifest.yml

+++ b/examples/manifest.yml

@@ -72,12 +72,18 @@ root:

- name: human-pose-tracking

python: python/human_pose_tracking

+ - name: lidar

+ python: python/lidar

+

- name: live-camera-edge-detection

python: python/live_camera_edge_detection

- name: live-depth-sensor

python: python/live_depth_sensor

+ - name: nuscenes

+ python: python/nuscenes

+

- name: objectron

python: python/objectron

rust: rust/objectron

diff --git a/examples/python/lidar/.gitignore b/examples/python/lidar/.gitignore

new file mode 100644

index 000000000000..d1ac3a94954e

--- /dev/null

+++ b/examples/python/lidar/.gitignore

@@ -0,0 +1 @@

+dataset/**

diff --git a/examples/python/lidar/README.md b/examples/python/lidar/README.md

new file mode 100644

index 000000000000..35c363c7988e

--- /dev/null

+++ b/examples/python/lidar/README.md

@@ -0,0 +1,23 @@

+---

+title: Lidar

+python: https://github.com/rerun-io/rerun/blob/latest/examples/python/lidar/main.py?speculative-link

+tags: [lidar, 3D]

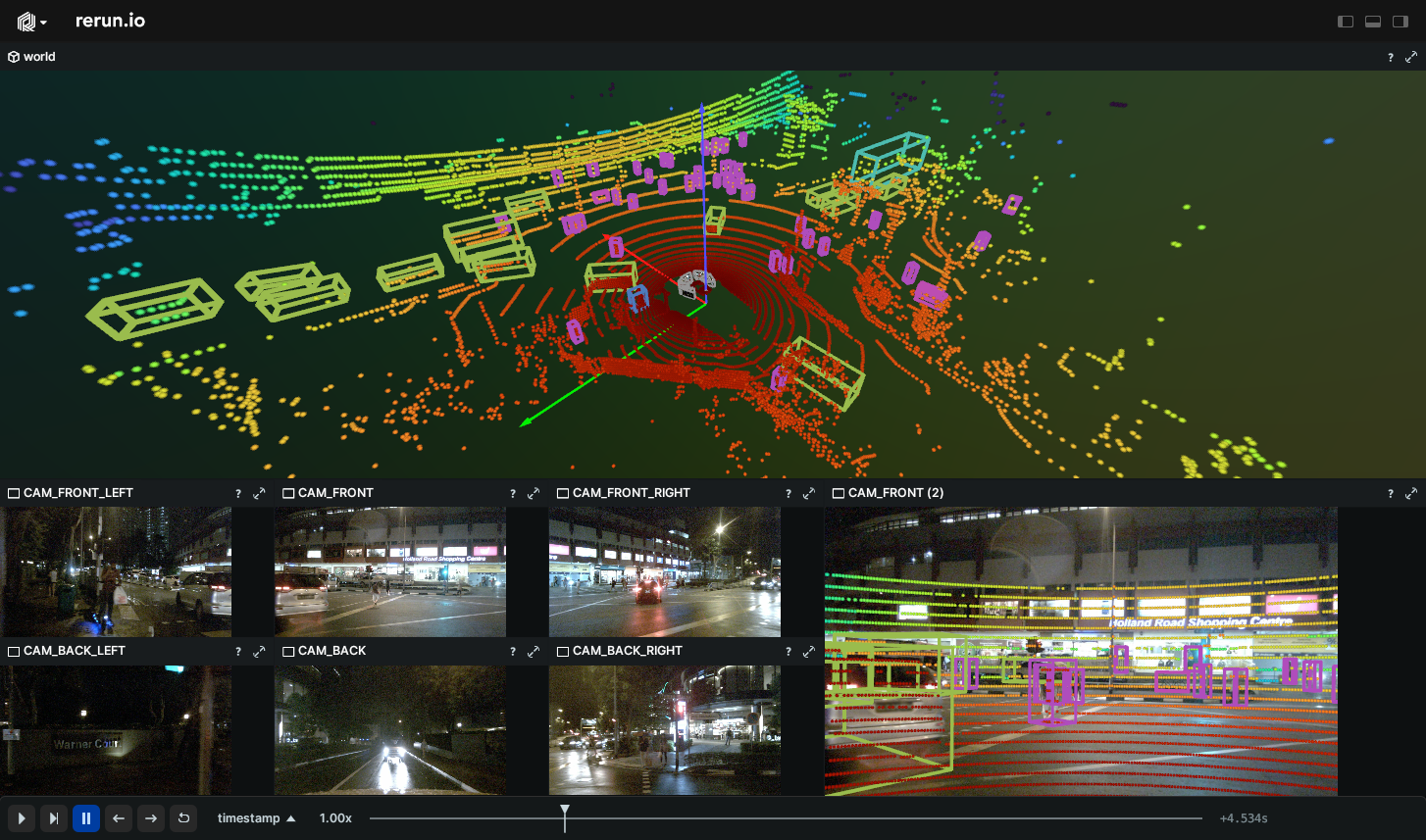

+description: "Visualize the lidar data from the nuScenes dataset."

+thumbnail: https://static.rerun.io/lidar/bcea9337044919c1524429bd26bc51a3c4db8ccb/480w.png

+thumbnail_dimensions: [480, 286]

+---

+

+

+  +

+

+

+

+

+

+This example visualizes only the lidar data from the [nuScenes dataset](https://www.nuscenes.org/) using Rerun. For a moe extensive example including other sensors and annotations check out the [nuScenes example](https://www.rerun.io/examples/real-data/nuscenes?speculative-link).

+

+```bash

+pip install -r examples/python/lidar/requirements.txt

+python examples/python/lidar/main.py

+```

diff --git a/examples/python/lidar/dataset b/examples/python/lidar/dataset

new file mode 120000

index 000000000000..407167dd09c5

--- /dev/null

+++ b/examples/python/lidar/dataset

@@ -0,0 +1 @@

+../nuscenes/dataset

\ No newline at end of file

diff --git a/examples/python/lidar/download_dataset.py b/examples/python/lidar/download_dataset.py

new file mode 100644

index 000000000000..fbb384643ffe

--- /dev/null

+++ b/examples/python/lidar/download_dataset.py

@@ -0,0 +1,65 @@

+"""Module to download nuScenes minisplit."""

+from __future__ import annotations

+

+import os

+import pathlib

+import tarfile

+

+import requests

+import tqdm

+

+MINISPLIT_SCENES = [

+ "scene-0061",

+ "scene-0103",

+ "scene-0553",

+ "scene-0655",

+ "scene-0757",

+ "scene-0796",

+ "scene-0916",

+ "scene-1077",

+ "scene-1094",

+ "scene-1100",

+]

+MINISPLIT_URL = "https://www.nuscenes.org/data/v1.0-mini.tgz"

+

+

+def download_file(url: str, dst_file_path: pathlib.Path) -> None:

+ """Download file from url to dst_fpath."""

+ dst_file_path.parent.mkdir(parents=True, exist_ok=True)

+ print(f"Downloading {url} to {dst_file_path}")

+ response = requests.get(url, stream=True)

+ with tqdm.tqdm.wrapattr(

+ open(dst_file_path, "wb"),

+ "write",

+ miniters=1,

+ total=int(response.headers.get("content-length", 0)),

+ desc=f"Downloading {dst_file_path.name}",

+ ) as f:

+ for chunk in response.iter_content(chunk_size=4096):

+ f.write(chunk)

+

+

+def untar_file(tar_file_path: pathlib.Path, dst_path: pathlib.Path, keep_tar: bool = True) -> bool:

+ """Untar tar file at tar_file_path to dst."""

+ print(f"Untar file {tar_file_path}")

+ try:

+ with tarfile.open(tar_file_path, "r") as tf:

+ tf.extractall(dst_path)

+ except Exception as error:

+ print(f"Error unzipping {tar_file_path}, error: {error}")

+ return False

+ if not keep_tar:

+ os.remove(tar_file_path)

+ return True

+

+

+def download_minisplit(root_dir: pathlib.Path) -> None:

+ """

+ Download nuScenes minisplit.

+

+ Adopted from https://colab.research.google.com/github/nutonomy/nuscenes-devkit/blob/master/python-sdk/tutorials/nuscenes_tutorial.ipynb

+ """

+ zip_file_path = pathlib.Path("./v1.0-mini.tgz")

+ if not zip_file_path.is_file():

+ download_file(MINISPLIT_URL, zip_file_path)

+ untar_file(zip_file_path, root_dir, keep_tar=True)

diff --git a/examples/python/lidar/main.py b/examples/python/lidar/main.py

new file mode 100755

index 000000000000..17751652f700

--- /dev/null

+++ b/examples/python/lidar/main.py

@@ -0,0 +1,102 @@

+#!/usr/bin/env python3

+from __future__ import annotations

+

+import argparse

+import os

+import pathlib

+from typing import Final

+

+import matplotlib

+import numpy as np

+import rerun as rr

+from download_dataset import MINISPLIT_SCENES, download_minisplit

+from nuscenes import nuscenes

+

+EXAMPLE_DIR: Final = pathlib.Path(os.path.dirname(__file__))

+DATASET_DIR: Final = EXAMPLE_DIR / "dataset"

+

+# currently need to calculate the color manually

+# see https://github.com/rerun-io/rerun/issues/4409

+cmap = matplotlib.colormaps["turbo_r"]

+norm = matplotlib.colors.Normalize(

+ vmin=3.0,

+ vmax=75.0,

+)

+

+

+def ensure_scene_available(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ """

+ Ensure that the specified scene is available.

+

+ Downloads minisplit into root_dir if scene_name is part of it and root_dir is empty.

+

+ Raises ValueError if scene is not available and cannot be downloaded.

+ """

+ try:

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ except AssertionError: # dataset initialization failed

+ if dataset_version == "v1.0-mini" and scene_name in MINISPLIT_SCENES:

+ download_minisplit(root_dir)

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ else:

+ print(f"Could not find dataset at {root_dir} and could not automatically download specified scene.")

+ exit()

+

+ scene_names = [s["name"] for s in nusc.scene]

+ if scene_name not in scene_names:

+ raise ValueError(f"{scene_name=} not found in dataset")

+

+

+def log_nuscenes_lidar(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+

+ scene = next(s for s in nusc.scene if s["name"] == scene_name)

+

+ rr.log("world", rr.ViewCoordinates.RIGHT_HAND_Z_UP, timeless=True)

+

+ first_sample = nusc.get("sample", scene["first_sample_token"])

+ current_lidar_token = first_sample["data"]["LIDAR_TOP"]

+ while current_lidar_token != "":

+ sample_data = nusc.get("sample_data", current_lidar_token)

+

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ pointcloud = nuscenes.LidarPointCloud.from_file(str(data_file_path))

+ points = pointcloud.points[:3].T # shape after transposing: (num_points, 3)

+ point_distances = np.linalg.norm(points, axis=1)

+ point_colors = cmap(norm(point_distances))

+

+ # timestamps are in microseconds

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+ rr.log("world/lidar", rr.Points3D(points, colors=point_colors))

+

+ current_lidar_token = sample_data["next"]

+

+

+def main() -> None:

+ parser = argparse.ArgumentParser(description="Visualizes lidar scans using the Rerun SDK.")

+ parser.add_argument(

+ "--root_dir",

+ type=pathlib.Path,

+ default=DATASET_DIR,

+ help="Root directory of nuScenes dataset",

+ )

+ parser.add_argument(

+ "--scene_name",

+ type=str,

+ default="scene-0061",

+ help="Scene name to visualize (typically of form 'scene-xxxx')",

+ )

+ parser.add_argument("--dataset_version", type=str, default="v1.0-mini", help="Scene id to visualize")

+ rr.script_add_args(parser)

+ args = parser.parse_args()

+

+ ensure_scene_available(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_setup(args, "rerun_example_lidar")

+ log_nuscenes_lidar(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_teardown(args)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/python/lidar/requirements.txt b/examples/python/lidar/requirements.txt

new file mode 100644

index 000000000000..9ddcccf2ce52

--- /dev/null

+++ b/examples/python/lidar/requirements.txt

@@ -0,0 +1,4 @@

+matplotlib

+numpy

+nuscenes-devkit

+rerun-sdk

diff --git a/examples/python/nuscenes/.gitignore b/examples/python/nuscenes/.gitignore

new file mode 100644

index 000000000000..d1ac3a94954e

--- /dev/null

+++ b/examples/python/nuscenes/.gitignore

@@ -0,0 +1 @@

+dataset/**

diff --git a/examples/python/nuscenes/README.md b/examples/python/nuscenes/README.md

new file mode 100644

index 000000000000..ad1a7b6d4e5c

--- /dev/null

+++ b/examples/python/nuscenes/README.md

@@ -0,0 +1,24 @@

+---

+title: nuScenes

+python: https://github.com/rerun-io/rerun/blob/latest/examples/python/nuscenes/main.py?speculative-link

+tags: [lidar, 3D, 2D, object-detection, pinhole-camera]

+description: "Visualize the nuScenes dataset including lidar, radar, images, and bounding boxes."

+thumbnail: https://static.rerun.io/nuscenes/64a50a9d67cbb69ae872551989ee807b195f6b5d/480w.png

+thumbnail_dimensions: [480, 282]

+---

+

+

+

+

+

+

+

+

+

+This example visualizes only the lidar data from the [nuScenes dataset](https://www.nuscenes.org/) using Rerun. For a moe extensive example including other sensors and annotations check out the [nuScenes example](https://www.rerun.io/examples/real-data/nuscenes?speculative-link).

+

+```bash

+pip install -r examples/python/lidar/requirements.txt

+python examples/python/lidar/main.py

+```

diff --git a/examples/python/lidar/dataset b/examples/python/lidar/dataset

new file mode 120000

index 000000000000..407167dd09c5

--- /dev/null

+++ b/examples/python/lidar/dataset

@@ -0,0 +1 @@

+../nuscenes/dataset

\ No newline at end of file

diff --git a/examples/python/lidar/download_dataset.py b/examples/python/lidar/download_dataset.py

new file mode 100644

index 000000000000..fbb384643ffe

--- /dev/null

+++ b/examples/python/lidar/download_dataset.py

@@ -0,0 +1,65 @@

+"""Module to download nuScenes minisplit."""

+from __future__ import annotations

+

+import os

+import pathlib

+import tarfile

+

+import requests

+import tqdm

+

+MINISPLIT_SCENES = [

+ "scene-0061",

+ "scene-0103",

+ "scene-0553",

+ "scene-0655",

+ "scene-0757",

+ "scene-0796",

+ "scene-0916",

+ "scene-1077",

+ "scene-1094",

+ "scene-1100",

+]

+MINISPLIT_URL = "https://www.nuscenes.org/data/v1.0-mini.tgz"

+

+

+def download_file(url: str, dst_file_path: pathlib.Path) -> None:

+ """Download file from url to dst_fpath."""

+ dst_file_path.parent.mkdir(parents=True, exist_ok=True)

+ print(f"Downloading {url} to {dst_file_path}")

+ response = requests.get(url, stream=True)

+ with tqdm.tqdm.wrapattr(

+ open(dst_file_path, "wb"),

+ "write",

+ miniters=1,

+ total=int(response.headers.get("content-length", 0)),

+ desc=f"Downloading {dst_file_path.name}",

+ ) as f:

+ for chunk in response.iter_content(chunk_size=4096):

+ f.write(chunk)

+

+

+def untar_file(tar_file_path: pathlib.Path, dst_path: pathlib.Path, keep_tar: bool = True) -> bool:

+ """Untar tar file at tar_file_path to dst."""

+ print(f"Untar file {tar_file_path}")

+ try:

+ with tarfile.open(tar_file_path, "r") as tf:

+ tf.extractall(dst_path)

+ except Exception as error:

+ print(f"Error unzipping {tar_file_path}, error: {error}")

+ return False

+ if not keep_tar:

+ os.remove(tar_file_path)

+ return True

+

+

+def download_minisplit(root_dir: pathlib.Path) -> None:

+ """

+ Download nuScenes minisplit.

+

+ Adopted from https://colab.research.google.com/github/nutonomy/nuscenes-devkit/blob/master/python-sdk/tutorials/nuscenes_tutorial.ipynb

+ """

+ zip_file_path = pathlib.Path("./v1.0-mini.tgz")

+ if not zip_file_path.is_file():

+ download_file(MINISPLIT_URL, zip_file_path)

+ untar_file(zip_file_path, root_dir, keep_tar=True)

diff --git a/examples/python/lidar/main.py b/examples/python/lidar/main.py

new file mode 100755

index 000000000000..17751652f700

--- /dev/null

+++ b/examples/python/lidar/main.py

@@ -0,0 +1,102 @@

+#!/usr/bin/env python3

+from __future__ import annotations

+

+import argparse

+import os

+import pathlib

+from typing import Final

+

+import matplotlib

+import numpy as np

+import rerun as rr

+from download_dataset import MINISPLIT_SCENES, download_minisplit

+from nuscenes import nuscenes

+

+EXAMPLE_DIR: Final = pathlib.Path(os.path.dirname(__file__))

+DATASET_DIR: Final = EXAMPLE_DIR / "dataset"

+

+# currently need to calculate the color manually

+# see https://github.com/rerun-io/rerun/issues/4409

+cmap = matplotlib.colormaps["turbo_r"]

+norm = matplotlib.colors.Normalize(

+ vmin=3.0,

+ vmax=75.0,

+)

+

+

+def ensure_scene_available(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ """

+ Ensure that the specified scene is available.

+

+ Downloads minisplit into root_dir if scene_name is part of it and root_dir is empty.

+

+ Raises ValueError if scene is not available and cannot be downloaded.

+ """

+ try:

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ except AssertionError: # dataset initialization failed

+ if dataset_version == "v1.0-mini" and scene_name in MINISPLIT_SCENES:

+ download_minisplit(root_dir)

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ else:

+ print(f"Could not find dataset at {root_dir} and could not automatically download specified scene.")

+ exit()

+

+ scene_names = [s["name"] for s in nusc.scene]

+ if scene_name not in scene_names:

+ raise ValueError(f"{scene_name=} not found in dataset")

+

+

+def log_nuscenes_lidar(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+

+ scene = next(s for s in nusc.scene if s["name"] == scene_name)

+

+ rr.log("world", rr.ViewCoordinates.RIGHT_HAND_Z_UP, timeless=True)

+

+ first_sample = nusc.get("sample", scene["first_sample_token"])

+ current_lidar_token = first_sample["data"]["LIDAR_TOP"]

+ while current_lidar_token != "":

+ sample_data = nusc.get("sample_data", current_lidar_token)

+

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ pointcloud = nuscenes.LidarPointCloud.from_file(str(data_file_path))

+ points = pointcloud.points[:3].T # shape after transposing: (num_points, 3)

+ point_distances = np.linalg.norm(points, axis=1)

+ point_colors = cmap(norm(point_distances))

+

+ # timestamps are in microseconds

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+ rr.log("world/lidar", rr.Points3D(points, colors=point_colors))

+

+ current_lidar_token = sample_data["next"]

+

+

+def main() -> None:

+ parser = argparse.ArgumentParser(description="Visualizes lidar scans using the Rerun SDK.")

+ parser.add_argument(

+ "--root_dir",

+ type=pathlib.Path,

+ default=DATASET_DIR,

+ help="Root directory of nuScenes dataset",

+ )

+ parser.add_argument(

+ "--scene_name",

+ type=str,

+ default="scene-0061",

+ help="Scene name to visualize (typically of form 'scene-xxxx')",

+ )

+ parser.add_argument("--dataset_version", type=str, default="v1.0-mini", help="Scene id to visualize")

+ rr.script_add_args(parser)

+ args = parser.parse_args()

+

+ ensure_scene_available(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_setup(args, "rerun_example_lidar")

+ log_nuscenes_lidar(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_teardown(args)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/python/lidar/requirements.txt b/examples/python/lidar/requirements.txt

new file mode 100644

index 000000000000..9ddcccf2ce52

--- /dev/null

+++ b/examples/python/lidar/requirements.txt

@@ -0,0 +1,4 @@

+matplotlib

+numpy

+nuscenes-devkit

+rerun-sdk

diff --git a/examples/python/nuscenes/.gitignore b/examples/python/nuscenes/.gitignore

new file mode 100644

index 000000000000..d1ac3a94954e

--- /dev/null

+++ b/examples/python/nuscenes/.gitignore

@@ -0,0 +1 @@

+dataset/**

diff --git a/examples/python/nuscenes/README.md b/examples/python/nuscenes/README.md

new file mode 100644

index 000000000000..ad1a7b6d4e5c

--- /dev/null

+++ b/examples/python/nuscenes/README.md

@@ -0,0 +1,24 @@

+---

+title: nuScenes

+python: https://github.com/rerun-io/rerun/blob/latest/examples/python/nuscenes/main.py?speculative-link

+tags: [lidar, 3D, 2D, object-detection, pinhole-camera]

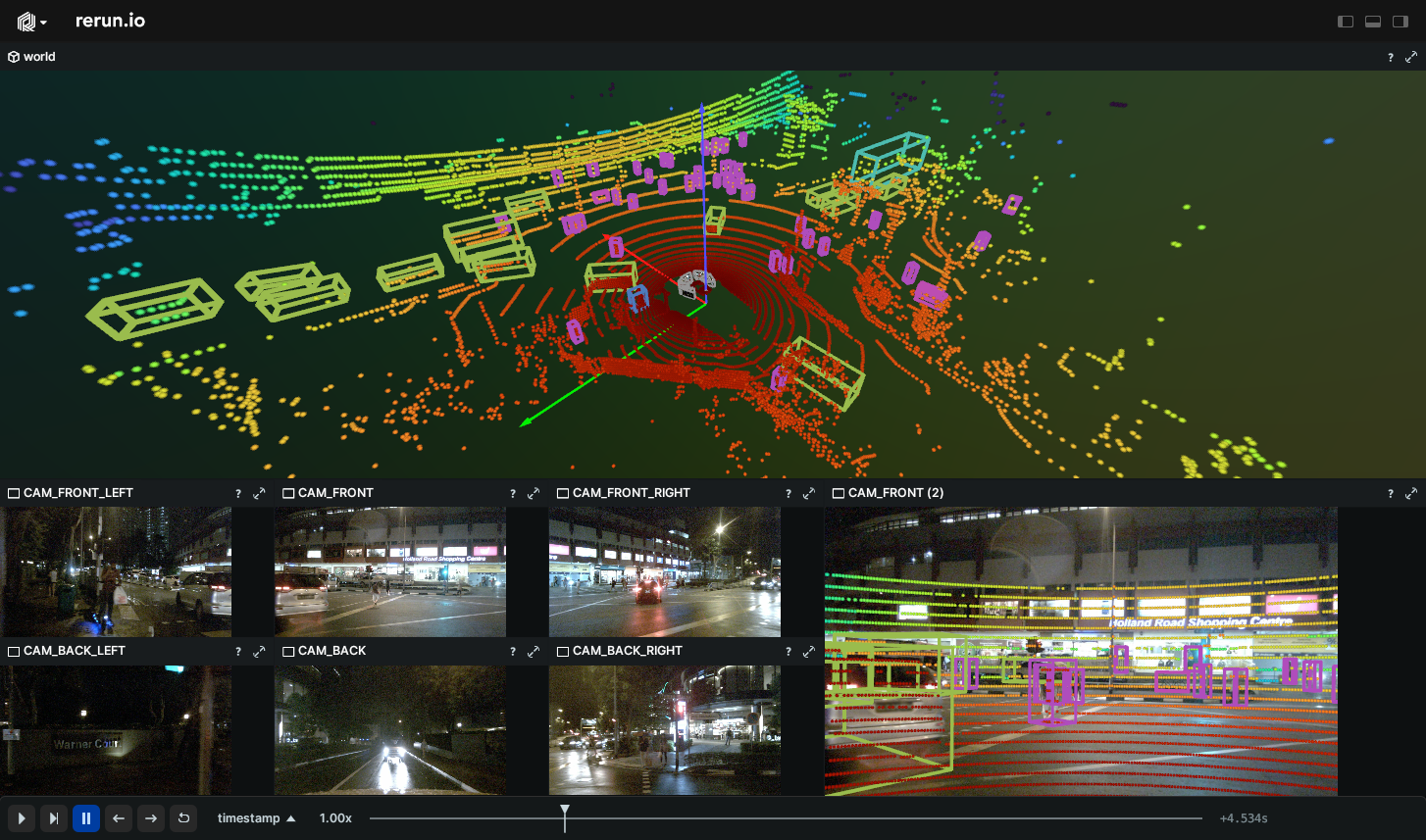

+description: "Visualize the nuScenes dataset including lidar, radar, images, and bounding boxes."

+thumbnail: https://static.rerun.io/nuscenes/64a50a9d67cbb69ae872551989ee807b195f6b5d/480w.png

+thumbnail_dimensions: [480, 282]

+---

+

+

+  +

+

+

+

+

+

+This example visualizes the [nuScenes dataset](https://www.nuscenes.org/) using Rerun. The dataset

+contains lidar data, radar data, color images, and labeled bounding boxes.

+

+```bash

+pip install -r examples/python/nuscenes/requirements.txt

+python examples/python/nuscenes/main.py

+```

diff --git a/examples/python/nuscenes/download_dataset.py b/examples/python/nuscenes/download_dataset.py

new file mode 100644

index 000000000000..fbb384643ffe

--- /dev/null

+++ b/examples/python/nuscenes/download_dataset.py

@@ -0,0 +1,65 @@

+"""Module to download nuScenes minisplit."""

+from __future__ import annotations

+

+import os

+import pathlib

+import tarfile

+

+import requests

+import tqdm

+

+MINISPLIT_SCENES = [

+ "scene-0061",

+ "scene-0103",

+ "scene-0553",

+ "scene-0655",

+ "scene-0757",

+ "scene-0796",

+ "scene-0916",

+ "scene-1077",

+ "scene-1094",

+ "scene-1100",

+]

+MINISPLIT_URL = "https://www.nuscenes.org/data/v1.0-mini.tgz"

+

+

+def download_file(url: str, dst_file_path: pathlib.Path) -> None:

+ """Download file from url to dst_fpath."""

+ dst_file_path.parent.mkdir(parents=True, exist_ok=True)

+ print(f"Downloading {url} to {dst_file_path}")

+ response = requests.get(url, stream=True)

+ with tqdm.tqdm.wrapattr(

+ open(dst_file_path, "wb"),

+ "write",

+ miniters=1,

+ total=int(response.headers.get("content-length", 0)),

+ desc=f"Downloading {dst_file_path.name}",

+ ) as f:

+ for chunk in response.iter_content(chunk_size=4096):

+ f.write(chunk)

+

+

+def untar_file(tar_file_path: pathlib.Path, dst_path: pathlib.Path, keep_tar: bool = True) -> bool:

+ """Untar tar file at tar_file_path to dst."""

+ print(f"Untar file {tar_file_path}")

+ try:

+ with tarfile.open(tar_file_path, "r") as tf:

+ tf.extractall(dst_path)

+ except Exception as error:

+ print(f"Error unzipping {tar_file_path}, error: {error}")

+ return False

+ if not keep_tar:

+ os.remove(tar_file_path)

+ return True

+

+

+def download_minisplit(root_dir: pathlib.Path) -> None:

+ """

+ Download nuScenes minisplit.

+

+ Adopted from https://colab.research.google.com/github/nutonomy/nuscenes-devkit/blob/master/python-sdk/tutorials/nuscenes_tutorial.ipynb

+ """

+ zip_file_path = pathlib.Path("./v1.0-mini.tgz")

+ if not zip_file_path.is_file():

+ download_file(MINISPLIT_URL, zip_file_path)

+ untar_file(zip_file_path, root_dir, keep_tar=True)

diff --git a/examples/python/nuscenes/main.py b/examples/python/nuscenes/main.py

new file mode 100755

index 000000000000..d8e489358979

--- /dev/null

+++ b/examples/python/nuscenes/main.py

@@ -0,0 +1,228 @@

+#!/usr/bin/env python3

+from __future__ import annotations

+

+import argparse

+import os

+import pathlib

+from typing import Any, Final

+

+import matplotlib

+import numpy as np

+import rerun as rr

+from download_dataset import MINISPLIT_SCENES, download_minisplit

+from nuscenes import nuscenes

+

+EXAMPLE_DIR: Final = pathlib.Path(os.path.dirname(__file__))

+DATASET_DIR: Final = EXAMPLE_DIR / "dataset"

+

+# currently need to calculate the color manually

+# see https://github.com/rerun-io/rerun/issues/4409

+cmap = matplotlib.colormaps["turbo_r"]

+norm = matplotlib.colors.Normalize(

+ vmin=3.0,

+ vmax=75.0,

+)

+

+

+def ensure_scene_available(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ """

+ Ensure that the specified scene is available.

+

+ Downloads minisplit into root_dir if scene_name is part of it and root_dir is empty.

+

+ Raises ValueError if scene is not available and cannot be downloaded.

+ """

+ try:

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ except AssertionError: # dataset initialization failed

+ if dataset_version == "v1.0-mini" and scene_name in MINISPLIT_SCENES:

+ download_minisplit(root_dir)

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ else:

+ print(f"Could not find dataset at {root_dir} and could not automatically download specified scene.")

+ exit()

+

+ scene_names = [s["name"] for s in nusc.scene]

+ if scene_name not in scene_names:

+ raise ValueError(f"{scene_name=} not found in dataset")

+

+

+def log_nuscenes(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ """Log nuScenes scene."""

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+

+ scene = next(s for s in nusc.scene if s["name"] == scene_name)

+

+ rr.log("world", rr.ViewCoordinates.RIGHT_HAND_Z_UP, timeless=True)

+

+ first_sample_token = scene["first_sample_token"]

+ first_sample = nusc.get("sample", scene["first_sample_token"])

+

+ first_lidar_token = ""

+ first_radar_tokens = []

+ first_camera_tokens = []

+ for sample_data_token in first_sample["data"].values():

+ sample_data = nusc.get("sample_data", sample_data_token)

+ log_sensor_calibration(sample_data, nusc)

+

+ if sample_data["sensor_modality"] == "lidar":

+ first_lidar_token = sample_data_token

+ elif sample_data["sensor_modality"] == "radar":

+ first_radar_tokens.append(sample_data_token)

+ elif sample_data["sensor_modality"] == "camera":

+ first_camera_tokens.append(sample_data_token)

+

+ log_lidar_and_ego_pose(first_lidar_token, nusc)

+ log_cameras(first_camera_tokens, nusc)

+ log_radars(first_radar_tokens, nusc)

+ log_annotations(first_sample_token, nusc)

+

+

+def log_lidar_and_ego_pose(first_lidar_token: str, nusc: nuscenes.NuScenes) -> None:

+ """Log lidar data and vehicle pose."""

+ current_lidar_token = first_lidar_token

+

+ while current_lidar_token != "":

+ sample_data = nusc.get("sample_data", current_lidar_token)

+ sensor_name = sample_data["channel"]

+

+ # timestamps are in microseconds

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+

+ ego_pose = nusc.get("ego_pose", sample_data["ego_pose_token"])

+ rotation_xyzw = np.roll(ego_pose["rotation"], shift=-1) # go from wxyz to xyzw

+ rr.log(

+ "world/ego_vehicle",

+ rr.Transform3D(

+ translation=ego_pose["translation"],

+ rotation=rr.Quaternion(xyzw=rotation_xyzw),

+ from_parent=False,

+ ),

+ )

+ current_lidar_token = sample_data["next"]

+

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ pointcloud = nuscenes.LidarPointCloud.from_file(str(data_file_path))

+ points = pointcloud.points[:3].T # shape after transposing: (num_points, 3)

+ point_distances = np.linalg.norm(points, axis=1)

+ point_colors = cmap(norm(point_distances))

+ rr.log(f"world/ego_vehicle/{sensor_name}", rr.Points3D(points, colors=point_colors))

+

+

+def log_cameras(first_camera_tokens: list[str], nusc: nuscenes.NuScenes) -> None:

+ """Log camera data."""

+ for first_camera_token in first_camera_tokens:

+ current_camera_token = first_camera_token

+ while current_camera_token != "":

+ sample_data = nusc.get("sample_data", current_camera_token)

+ sensor_name = sample_data["channel"]

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ rr.log(f"world/ego_vehicle/{sensor_name}", rr.ImageEncoded(path=data_file_path))

+ current_camera_token = sample_data["next"]

+

+

+def log_radars(first_radar_tokens: list[str], nusc: nuscenes.NuScenes) -> None:

+ """Log radar data."""

+ for first_radar_token in first_radar_tokens:

+ current_camera_token = first_radar_token

+ while current_camera_token != "":

+ sample_data = nusc.get("sample_data", current_camera_token)

+ sensor_name = sample_data["channel"]

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ pointcloud = nuscenes.RadarPointCloud.from_file(str(data_file_path))

+ points = pointcloud.points[:3].T # shape after transposing: (num_points, 3)

+ point_distances = np.linalg.norm(points, axis=1)

+ point_colors = cmap(norm(point_distances))

+ rr.log(f"world/ego_vehicle/{sensor_name}", rr.Points3D(points, colors=point_colors))

+ current_camera_token = sample_data["next"]

+

+

+def log_annotations(first_sample_token: str, nusc: nuscenes.NuScenes) -> None:

+ """Log 3D bounding boxes."""

+ label2id: dict[str, int] = {}

+ current_sample_token = first_sample_token

+ while current_sample_token != "":

+ sample = nusc.get("sample", current_sample_token)

+ rr.set_time_seconds("timestamp", sample["timestamp"] * 1e-6)

+ ann_tokens = sample["anns"]

+ sizes = []

+ centers = []

+ rotations = []

+ class_ids = []

+ for ann_token in ann_tokens:

+ ann = nusc.get("sample_annotation", ann_token)

+

+ rotation_xyzw = np.roll(ann["rotation"], shift=-1) # go from wxyz to xyzw

+ width, length, height = ann["size"]

+ sizes.append((length, width, height)) # x, y, z sizes

+ centers.append(ann["translation"])

+ rotations.append(rr.Quaternion(xyzw=rotation_xyzw))

+ if ann["category_name"] not in label2id:

+ label2id[ann["category_name"]] = len(label2id)

+ class_ids.append(label2id[ann["category_name"]])

+

+ rr.log("world/anns", rr.Boxes3D(sizes=sizes, centers=centers, rotations=rotations, class_ids=class_ids))

+ current_sample_token = sample["next"]

+

+ # skipping for now since labels take too much space in 3D view (see https://github.com/rerun-io/rerun/issues/4451)

+ # annotation_context = [(i, label) for label, i in label2id.items()]

+ # rr.log("world/anns", rr.AnnotationContext(annotation_context), timeless=True)

+

+

+def log_sensor_calibration(sample_data: dict[str, Any], nusc: nuscenes.NuScenes) -> None:

+ """Log sensor calibration (pinhole camera, sensor poses, etc.)."""

+ sensor_name = sample_data["channel"]

+ calibrated_sensor_token = sample_data["calibrated_sensor_token"]

+ calibrated_sensor = nusc.get("calibrated_sensor", calibrated_sensor_token)

+ rotation_xyzw = np.roll(calibrated_sensor["rotation"], shift=-1) # go from wxyz to xyzw

+ rr.log(

+ f"world/ego_vehicle/{sensor_name}",

+ rr.Transform3D(

+ translation=calibrated_sensor["translation"],

+ rotation=rr.Quaternion(xyzw=rotation_xyzw),

+ from_parent=False,

+ ),

+ timeless=True,

+ )

+ if len(calibrated_sensor["camera_intrinsic"]) != 0:

+ rr.log(

+ f"world/ego_vehicle/{sensor_name}",

+ rr.Pinhole(

+ image_from_camera=calibrated_sensor["camera_intrinsic"],

+ width=sample_data["width"],

+ height=sample_data["height"],

+ ),

+ timeless=True,

+ )

+

+

+def main() -> None:

+ parser = argparse.ArgumentParser(description="Visualizes the nuScenes dataset using the Rerun SDK.")

+ parser.add_argument(

+ "--root_dir",

+ type=pathlib.Path,

+ default=DATASET_DIR,

+ help="Root directory of nuScenes dataset",

+ )

+ parser.add_argument(

+ "--scene_name",

+ type=str,

+ default="scene-0061",

+ help="Scene name to visualize (typically of form 'scene-xxxx')",

+ )

+ parser.add_argument("--dataset_version", type=str, default="v1.0-mini", help="Scene id to visualize")

+ rr.script_add_args(parser)

+ args = parser.parse_args()

+

+ ensure_scene_available(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_setup(args, "rerun_example_nuscenes")

+ log_nuscenes(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_teardown(args)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/python/nuscenes/requirements.txt b/examples/python/nuscenes/requirements.txt

new file mode 100644

index 000000000000..9ddcccf2ce52

--- /dev/null

+++ b/examples/python/nuscenes/requirements.txt

@@ -0,0 +1,4 @@

+matplotlib

+numpy

+nuscenes-devkit

+rerun-sdk

diff --git a/examples/python/requirements.txt b/examples/python/requirements.txt

index 278767cbce1d..5be4a3853cc8 100644

--- a/examples/python/requirements.txt

+++ b/examples/python/requirements.txt

@@ -9,6 +9,7 @@

-r dna/requirements.txt

-r face_tracking/requirements.txt

-r human_pose_tracking/requirements.txt

+-r lidar/requirements.txt

-r live_camera_edge_detection/requirements.txt

-r live_depth_sensor/requirements.txt

-r minimal/requirements.txt

@@ -16,6 +17,7 @@

-r multiprocessing/requirements.txt

-r multithreading/requirements.txt

-r notebook/requirements.txt

+-r nuscenes/requirements.txt

-r nv12/requirements.txt

-r objectron/requirements.txt

-r open_photogrammetry_format/requirements.txt

+

+

+

+

+

+

+This example visualizes the [nuScenes dataset](https://www.nuscenes.org/) using Rerun. The dataset

+contains lidar data, radar data, color images, and labeled bounding boxes.

+

+```bash

+pip install -r examples/python/nuscenes/requirements.txt

+python examples/python/nuscenes/main.py

+```

diff --git a/examples/python/nuscenes/download_dataset.py b/examples/python/nuscenes/download_dataset.py

new file mode 100644

index 000000000000..fbb384643ffe

--- /dev/null

+++ b/examples/python/nuscenes/download_dataset.py

@@ -0,0 +1,65 @@

+"""Module to download nuScenes minisplit."""

+from __future__ import annotations

+

+import os

+import pathlib

+import tarfile

+

+import requests

+import tqdm

+

+MINISPLIT_SCENES = [

+ "scene-0061",

+ "scene-0103",

+ "scene-0553",

+ "scene-0655",

+ "scene-0757",

+ "scene-0796",

+ "scene-0916",

+ "scene-1077",

+ "scene-1094",

+ "scene-1100",

+]

+MINISPLIT_URL = "https://www.nuscenes.org/data/v1.0-mini.tgz"

+

+

+def download_file(url: str, dst_file_path: pathlib.Path) -> None:

+ """Download file from url to dst_fpath."""

+ dst_file_path.parent.mkdir(parents=True, exist_ok=True)

+ print(f"Downloading {url} to {dst_file_path}")

+ response = requests.get(url, stream=True)

+ with tqdm.tqdm.wrapattr(

+ open(dst_file_path, "wb"),

+ "write",

+ miniters=1,

+ total=int(response.headers.get("content-length", 0)),

+ desc=f"Downloading {dst_file_path.name}",

+ ) as f:

+ for chunk in response.iter_content(chunk_size=4096):

+ f.write(chunk)

+

+

+def untar_file(tar_file_path: pathlib.Path, dst_path: pathlib.Path, keep_tar: bool = True) -> bool:

+ """Untar tar file at tar_file_path to dst."""

+ print(f"Untar file {tar_file_path}")

+ try:

+ with tarfile.open(tar_file_path, "r") as tf:

+ tf.extractall(dst_path)

+ except Exception as error:

+ print(f"Error unzipping {tar_file_path}, error: {error}")

+ return False

+ if not keep_tar:

+ os.remove(tar_file_path)

+ return True

+

+

+def download_minisplit(root_dir: pathlib.Path) -> None:

+ """

+ Download nuScenes minisplit.

+

+ Adopted from https://colab.research.google.com/github/nutonomy/nuscenes-devkit/blob/master/python-sdk/tutorials/nuscenes_tutorial.ipynb

+ """

+ zip_file_path = pathlib.Path("./v1.0-mini.tgz")

+ if not zip_file_path.is_file():

+ download_file(MINISPLIT_URL, zip_file_path)

+ untar_file(zip_file_path, root_dir, keep_tar=True)

diff --git a/examples/python/nuscenes/main.py b/examples/python/nuscenes/main.py

new file mode 100755

index 000000000000..d8e489358979

--- /dev/null

+++ b/examples/python/nuscenes/main.py

@@ -0,0 +1,228 @@

+#!/usr/bin/env python3

+from __future__ import annotations

+

+import argparse

+import os

+import pathlib

+from typing import Any, Final

+

+import matplotlib

+import numpy as np

+import rerun as rr

+from download_dataset import MINISPLIT_SCENES, download_minisplit

+from nuscenes import nuscenes

+

+EXAMPLE_DIR: Final = pathlib.Path(os.path.dirname(__file__))

+DATASET_DIR: Final = EXAMPLE_DIR / "dataset"

+

+# currently need to calculate the color manually

+# see https://github.com/rerun-io/rerun/issues/4409

+cmap = matplotlib.colormaps["turbo_r"]

+norm = matplotlib.colors.Normalize(

+ vmin=3.0,

+ vmax=75.0,

+)

+

+

+def ensure_scene_available(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ """

+ Ensure that the specified scene is available.

+

+ Downloads minisplit into root_dir if scene_name is part of it and root_dir is empty.

+

+ Raises ValueError if scene is not available and cannot be downloaded.

+ """

+ try:

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ except AssertionError: # dataset initialization failed

+ if dataset_version == "v1.0-mini" and scene_name in MINISPLIT_SCENES:

+ download_minisplit(root_dir)

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+ else:

+ print(f"Could not find dataset at {root_dir} and could not automatically download specified scene.")

+ exit()

+

+ scene_names = [s["name"] for s in nusc.scene]

+ if scene_name not in scene_names:

+ raise ValueError(f"{scene_name=} not found in dataset")

+

+

+def log_nuscenes(root_dir: pathlib.Path, dataset_version: str, scene_name: str) -> None:

+ """Log nuScenes scene."""

+ nusc = nuscenes.NuScenes(version=dataset_version, dataroot=root_dir, verbose=True)

+

+ scene = next(s for s in nusc.scene if s["name"] == scene_name)

+

+ rr.log("world", rr.ViewCoordinates.RIGHT_HAND_Z_UP, timeless=True)

+

+ first_sample_token = scene["first_sample_token"]

+ first_sample = nusc.get("sample", scene["first_sample_token"])

+

+ first_lidar_token = ""

+ first_radar_tokens = []

+ first_camera_tokens = []

+ for sample_data_token in first_sample["data"].values():

+ sample_data = nusc.get("sample_data", sample_data_token)

+ log_sensor_calibration(sample_data, nusc)

+

+ if sample_data["sensor_modality"] == "lidar":

+ first_lidar_token = sample_data_token

+ elif sample_data["sensor_modality"] == "radar":

+ first_radar_tokens.append(sample_data_token)

+ elif sample_data["sensor_modality"] == "camera":

+ first_camera_tokens.append(sample_data_token)

+

+ log_lidar_and_ego_pose(first_lidar_token, nusc)

+ log_cameras(first_camera_tokens, nusc)

+ log_radars(first_radar_tokens, nusc)

+ log_annotations(first_sample_token, nusc)

+

+

+def log_lidar_and_ego_pose(first_lidar_token: str, nusc: nuscenes.NuScenes) -> None:

+ """Log lidar data and vehicle pose."""

+ current_lidar_token = first_lidar_token

+

+ while current_lidar_token != "":

+ sample_data = nusc.get("sample_data", current_lidar_token)

+ sensor_name = sample_data["channel"]

+

+ # timestamps are in microseconds

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+

+ ego_pose = nusc.get("ego_pose", sample_data["ego_pose_token"])

+ rotation_xyzw = np.roll(ego_pose["rotation"], shift=-1) # go from wxyz to xyzw

+ rr.log(

+ "world/ego_vehicle",

+ rr.Transform3D(

+ translation=ego_pose["translation"],

+ rotation=rr.Quaternion(xyzw=rotation_xyzw),

+ from_parent=False,

+ ),

+ )

+ current_lidar_token = sample_data["next"]

+

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ pointcloud = nuscenes.LidarPointCloud.from_file(str(data_file_path))

+ points = pointcloud.points[:3].T # shape after transposing: (num_points, 3)

+ point_distances = np.linalg.norm(points, axis=1)

+ point_colors = cmap(norm(point_distances))

+ rr.log(f"world/ego_vehicle/{sensor_name}", rr.Points3D(points, colors=point_colors))

+

+

+def log_cameras(first_camera_tokens: list[str], nusc: nuscenes.NuScenes) -> None:

+ """Log camera data."""

+ for first_camera_token in first_camera_tokens:

+ current_camera_token = first_camera_token

+ while current_camera_token != "":

+ sample_data = nusc.get("sample_data", current_camera_token)

+ sensor_name = sample_data["channel"]

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ rr.log(f"world/ego_vehicle/{sensor_name}", rr.ImageEncoded(path=data_file_path))

+ current_camera_token = sample_data["next"]

+

+

+def log_radars(first_radar_tokens: list[str], nusc: nuscenes.NuScenes) -> None:

+ """Log radar data."""

+ for first_radar_token in first_radar_tokens:

+ current_camera_token = first_radar_token

+ while current_camera_token != "":

+ sample_data = nusc.get("sample_data", current_camera_token)

+ sensor_name = sample_data["channel"]

+ rr.set_time_seconds("timestamp", sample_data["timestamp"] * 1e-6)

+ data_file_path = nusc.dataroot / sample_data["filename"]

+ pointcloud = nuscenes.RadarPointCloud.from_file(str(data_file_path))

+ points = pointcloud.points[:3].T # shape after transposing: (num_points, 3)

+ point_distances = np.linalg.norm(points, axis=1)

+ point_colors = cmap(norm(point_distances))

+ rr.log(f"world/ego_vehicle/{sensor_name}", rr.Points3D(points, colors=point_colors))

+ current_camera_token = sample_data["next"]

+

+

+def log_annotations(first_sample_token: str, nusc: nuscenes.NuScenes) -> None:

+ """Log 3D bounding boxes."""

+ label2id: dict[str, int] = {}

+ current_sample_token = first_sample_token

+ while current_sample_token != "":

+ sample = nusc.get("sample", current_sample_token)

+ rr.set_time_seconds("timestamp", sample["timestamp"] * 1e-6)

+ ann_tokens = sample["anns"]

+ sizes = []

+ centers = []

+ rotations = []

+ class_ids = []

+ for ann_token in ann_tokens:

+ ann = nusc.get("sample_annotation", ann_token)

+

+ rotation_xyzw = np.roll(ann["rotation"], shift=-1) # go from wxyz to xyzw

+ width, length, height = ann["size"]

+ sizes.append((length, width, height)) # x, y, z sizes

+ centers.append(ann["translation"])

+ rotations.append(rr.Quaternion(xyzw=rotation_xyzw))

+ if ann["category_name"] not in label2id:

+ label2id[ann["category_name"]] = len(label2id)

+ class_ids.append(label2id[ann["category_name"]])

+

+ rr.log("world/anns", rr.Boxes3D(sizes=sizes, centers=centers, rotations=rotations, class_ids=class_ids))

+ current_sample_token = sample["next"]

+

+ # skipping for now since labels take too much space in 3D view (see https://github.com/rerun-io/rerun/issues/4451)

+ # annotation_context = [(i, label) for label, i in label2id.items()]

+ # rr.log("world/anns", rr.AnnotationContext(annotation_context), timeless=True)

+

+

+def log_sensor_calibration(sample_data: dict[str, Any], nusc: nuscenes.NuScenes) -> None:

+ """Log sensor calibration (pinhole camera, sensor poses, etc.)."""

+ sensor_name = sample_data["channel"]

+ calibrated_sensor_token = sample_data["calibrated_sensor_token"]

+ calibrated_sensor = nusc.get("calibrated_sensor", calibrated_sensor_token)

+ rotation_xyzw = np.roll(calibrated_sensor["rotation"], shift=-1) # go from wxyz to xyzw

+ rr.log(

+ f"world/ego_vehicle/{sensor_name}",

+ rr.Transform3D(

+ translation=calibrated_sensor["translation"],

+ rotation=rr.Quaternion(xyzw=rotation_xyzw),

+ from_parent=False,

+ ),

+ timeless=True,

+ )

+ if len(calibrated_sensor["camera_intrinsic"]) != 0:

+ rr.log(

+ f"world/ego_vehicle/{sensor_name}",

+ rr.Pinhole(

+ image_from_camera=calibrated_sensor["camera_intrinsic"],

+ width=sample_data["width"],

+ height=sample_data["height"],

+ ),

+ timeless=True,

+ )

+

+

+def main() -> None:

+ parser = argparse.ArgumentParser(description="Visualizes the nuScenes dataset using the Rerun SDK.")

+ parser.add_argument(

+ "--root_dir",

+ type=pathlib.Path,

+ default=DATASET_DIR,

+ help="Root directory of nuScenes dataset",

+ )

+ parser.add_argument(

+ "--scene_name",

+ type=str,

+ default="scene-0061",

+ help="Scene name to visualize (typically of form 'scene-xxxx')",

+ )

+ parser.add_argument("--dataset_version", type=str, default="v1.0-mini", help="Scene id to visualize")

+ rr.script_add_args(parser)

+ args = parser.parse_args()

+

+ ensure_scene_available(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_setup(args, "rerun_example_nuscenes")

+ log_nuscenes(args.root_dir, args.dataset_version, args.scene_name)

+

+ rr.script_teardown(args)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/python/nuscenes/requirements.txt b/examples/python/nuscenes/requirements.txt

new file mode 100644

index 000000000000..9ddcccf2ce52

--- /dev/null

+++ b/examples/python/nuscenes/requirements.txt

@@ -0,0 +1,4 @@

+matplotlib

+numpy

+nuscenes-devkit

+rerun-sdk

diff --git a/examples/python/requirements.txt b/examples/python/requirements.txt

index 278767cbce1d..5be4a3853cc8 100644

--- a/examples/python/requirements.txt

+++ b/examples/python/requirements.txt

@@ -9,6 +9,7 @@

-r dna/requirements.txt

-r face_tracking/requirements.txt

-r human_pose_tracking/requirements.txt

+-r lidar/requirements.txt

-r live_camera_edge_detection/requirements.txt

-r live_depth_sensor/requirements.txt

-r minimal/requirements.txt

@@ -16,6 +17,7 @@

-r multiprocessing/requirements.txt

-r multithreading/requirements.txt

-r notebook/requirements.txt

+-r nuscenes/requirements.txt

-r nv12/requirements.txt

-r objectron/requirements.txt

-r open_photogrammetry_format/requirements.txt

+

+  +

+  +

+