+

```json

"modelConfiguration": {

@@ -14963,7 +15563,7 @@ In the configuration above,

"modelOverrides": [

{

"modelRef": "google::unknown::claude-3-5-sonnet",

- "displayName": "Claude 3.5 Sonnet (via Google/Vertex)",

+ "displayName": "Claude 3.5 Sonnet (via Google Vertex)",

"modelName": "claude-3-5-sonnet@20240620",

"contextWindow": {

"maxInputTokens": 45000,

@@ -15013,7 +15613,7 @@ In the configuration above,

"displayName": "Anthropic models through AWS Bedrock",

"serverSideConfig": {

"type": "awsBedrock",

- "accessToken": ":",

"endpoint": "",

"region": "us-west-2"

}

@@ -15021,16 +15621,16 @@ In the configuration above,

],

"modelOverrides": [

{

- "modelRef": "aws-bedrock::2024-02-29::claude-3-sonnet",

- "displayName": "Claude 3 Sonnet",

- "modelName": "claude-3-sonnet",

+ "modelRef": "aws-bedrock::2025-02-19::claude-3-7-sonnet",

+ "displayName": "Claude 3.7 Sonnet",

+ "modelName": "anthropic.claude-3-7-sonnet-20250219-v1:0",

"serverSideConfig": {

"type": "awsBedrockProvisionedThroughput",

"arn": "" // e.g., arn:aws:bedrock:us-west-2:537452198621:provisioned-model/57z3lgkt1cx2

},

"contextWindow": {

- "maxInputTokens": 16000,

- "maxOutputTokens": 4000

+ "maxInputTokens": 132000,

+ "maxOutputTokens": 8192

},

"capabilities": ["chat", "autocomplete"],

"category": "balanced",

@@ -15038,9 +15638,9 @@ In the configuration above,

},

],

"defaultModels": {

- "chat": "aws-bedrock::2024-02-29::claude-3-sonnet",

- "codeCompletion": "aws-bedrock::2024-02-29::claude-3-sonnet",

- "fastChat": "aws-bedrock::2024-02-29::claude-3-sonnet"

+ "chat": "aws-bedrock::2025-02-19::claude-3-7-sonnet",

+ "codeCompletion": "aws-bedrock::2025-02-19::claude-3-7-sonnet",

+ "fastChat": "aws-bedrock::2025-02-19::claude-3-7-sonnet"

},

}

```

@@ -15049,7 +15649,7 @@ In the configuration described above,

- Set up a provider override for Amazon Bedrock, routing requests for this provider directly to the specified endpoint, bypassing Cody Gateway

- Add the `"aws-bedrock::2025-02-19::claude-3-7-sonnet"` model, which is used for all Cody features. For simplicity, we do not add other models, as adding multiple models is already covered in the examples above

-- Note: Since the model in the example uses provisioned throughput, specify the ARN in the `serverSideConfig.arn` field of the model override.

+- Since the model in the example uses [Amazon Bedrock provisioned throughput](https://docs.aws.amazon.com/bedrock/latest/userguide/prov-throughput.html), specify the ARN in the `serverSideConfig.arn` field of the model override.
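
The link between `modelOverrides` and `defaultModels` in the configuration above can be checked mechanically. The following Python sketch is illustrative only and not part of Sourcegraph: `split_model_ref` and `undefined_defaults` are hypothetical helpers, and reading the three `::`-separated parts of a `modelRef` as provider, API version, and model ID is an assumption based on the examples in this section.

```python
import json


def split_model_ref(model_ref: str) -> tuple[str, str, str]:
    """Split a modelRef such as 'aws-bedrock::2025-02-19::claude-3-7-sonnet'."""
    parts = model_ref.split("::")
    if len(parts) != 3:
        raise ValueError(f"expected three '::'-separated parts, got {model_ref!r}")
    return parts[0], parts[1], parts[2]


def undefined_defaults(model_configuration: dict) -> dict[str, str]:
    """Return the defaultModels entries whose modelRef has no matching override."""
    defined = {m["modelRef"] for m in model_configuration.get("modelOverrides", [])}
    defaults = model_configuration.get("defaultModels", {})
    return {feature: ref for feature, ref in defaults.items() if ref not in defined}


# Trimmed-down copy of the configuration above (comments removed so it parses as JSON).
config = json.loads("""
{
  "modelOverrides": [
    {"modelRef": "aws-bedrock::2025-02-19::claude-3-7-sonnet"}
  ],
  "defaultModels": {
    "chat": "aws-bedrock::2025-02-19::claude-3-7-sonnet",
    "codeCompletion": "aws-bedrock::2025-02-19::claude-3-7-sonnet",
    "fastChat": "aws-bedrock::2025-02-19::claude-3-7-sonnet"
  }
}
""")

print(split_model_ref(config["defaultModels"]["chat"]))
print(undefined_defaults(config))  # an empty dict means every default is defined
```

A check like this is mainly useful after renaming a model, when a stale `modelRef` left in `defaultModels` would otherwise go unnoticed.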

Provider override `serverSideConfig` fields:

@@ -15058,18 +15658,24 @@ Provider override `serverSideConfig` fields:

| `type` | Must be `"awsBedrock"`. |

| `accessToken` | Leave empty to rely on instance role bindings or other AWS configurations in the frontend service. Use `<ACCESS_KEY_ID>:<SECRET_ACCESS_KEY>` for direct credential configuration, or `<ACCESS_KEY_ID>:<SECRET_ACCESS_KEY>:<SESSION_TOKEN>` if a session token is also required. |

| `endpoint` | For pay-as-you-go, set it to an AWS region code (e.g., `us-west-2`) when using a public Amazon Bedrock endpoint. For provisioned throughput, set it to the provisioned VPC endpoint for the bedrock-runtime API (e.g., `https://vpce-0a10b2345cd67e89f-abc0defg.bedrock-runtime.us-west-2.vpce.amazonaws.com`). |

-| `region` | The region to use when configuring API clients. This is necessary because the 'frontend' binary container cannot access environment variables from the host OS. |

+| `region` | The region to use when configuring API clients. The `AWS_REGION` environment variable must also be set in the `sourcegraph-frontend` container to the same value. |

Provisioned throughput for Amazon Bedrock models can be configured using the `"awsBedrockProvisionedThroughput"` server-side configuration type. Refer to the [Model Overrides](/cody/enterprise/model-configuration#model-overrides) section for more details.

+

+ If using [IAM roles for EC2 / instance role binding](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html), you may need to increase the [HttpPutResponseHopLimit](https://docs.aws.amazon.com/AWSEC2/latest/APIReference/API_InstanceMetadataOptionsRequest.html#:~:text=HttpPutResponseHopLimit) instance metadata option to a higher value (e.g., 2) so that the metadata service can be reached from the frontend container running on the EC2 instance. See the [AWS guide to configuring instance metadata options on existing instances](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/configuring-IMDS-existing-instances.html) for instructions.

+

+

We only recommend configuring AWS Bedrock to use an accessToken for

authentication. Specifying no accessToken (e.g. to use [IAM roles for EC2 /

instance role

binding](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html))

- is not currently recommended (there is a known performance bug with this

- method which will prevent autocomplete from working correctly. (internal

- issue: PRIME-662)

+ is not currently recommended. There is a known performance bug with this

+ method which will prevent autocomplete from working correctly (internal

+ issue: CORE-819)

@@ -17180,12 +17786,19 @@ Cody supports a variety of cutting-edge large language models for use in chat an

| OpenAI | [o3-mini-medium](https://openai.com/index/openai-o3-mini/) (experimental) | ✅ | ✅ | ✅ | | | | |

| OpenAI | [o3-mini-high](https://openai.com/index/openai-o3-mini/) (experimental) | - | - | ✅ | | | | |

| OpenAI | [o1](https://platform.openai.com/docs/models#o1) | - | ✅ | ✅ | | | | |

+| OpenAI | [o3](https://platform.openai.com/docs/models#o3) | - | ✅ | ✅ | | | | |

+| OpenAI | [o4-mini](https://platform.openai.com/docs/models/o4-mini) | ✅ | ✅ | ✅ | | | | |

+| OpenAI | [GPT-4.1](https://platform.openai.com/docs/models/gpt-4.1) | - | ✅ | ✅ | | | | |

+| OpenAI | [GPT-4.1-mini](https://platform.openai.com/docs/models/gpt-4.1-mini) | ✅ | ✅ | ✅ | | | | |

+| OpenAI | [GPT-4.1-nano](https://platform.openai.com/docs/models/gpt-4.1-nano) | ✅ | ✅ | ✅ | | | | |

| Anthropic | [Claude 3.5 Haiku](https://docs.anthropic.com/claude/docs/models-overview#model-comparison) | ✅ | ✅ | ✅ | | | | |

| Anthropic | [Claude 3.5 Sonnet](https://docs.anthropic.com/claude/docs/models-overview#model-comparison) | ✅ | ✅ | ✅ | | | | |

| Anthropic | [Claude 3.7 Sonnet](https://docs.anthropic.com/claude/docs/models-overview#model-comparison) | - | ✅ | ✅ | | | | |

| Google | [Gemini 1.5 Pro](https://deepmind.google/technologies/gemini/pro/) | ✅ | ✅ | ✅ (beta) | | | | |

| Google | [Gemini 2.0 Flash](https://deepmind.google/technologies/gemini/flash/) | ✅ | ✅ | ✅ | | | | |

-| Google | [Gemini 2.0 Flash-Lite Preview](https://deepmind.google/technologies/gemini/flash/) (experimental) | ✅ | ✅ | ✅ | | | | |

+| Google | [Gemini 2.0 Flash](https://deepmind.google/technologies/gemini/flash/) | ✅ | ✅ | ✅ | | | | |

+| Google | [Gemini 2.5 Pro Preview](https://cloud.google.com/vertex-ai/generative-ai/docs/models/gemini/2-5-pro) | - | ✅ | ✅ | | | | |

+| Google | [Gemini 2.5 Flash Preview](https://cloud.google.com/vertex-ai/generative-ai/docs/models/gemini/2-5-flash) (experimental) | ✅ | ✅ | ✅ | | | | |

To use Claude 3 Sonnet models with Cody Enterprise, make sure you've upgraded your Sourcegraph instance to the latest version.

@@ -17205,17 +17818,24 @@ In addition, Sourcegraph Enterprise customers using GCP Vertex (Google Cloud Pla

Cody uses a set of models for autocomplete which are suited for the low latency use case.

-| **Provider** | **Model** | **Free** | **Pro** | **Enterprise** | | | | |

-| :-------------------- | :---------------------------------------------------------------------------------------- | :------- | :------ | :------------- | --- | --- | --- | --- |

-| Fireworks.ai | [DeepSeek-Coder-V2](https://huggingface.co/deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct) | ✅ | ✅ | ✅ | | | | |

-| Fireworks.ai | [StarCoder](https://arxiv.org/abs/2305.06161) | - | - | ✅ | | | | |

-| Anthropic | [claude Instant](https://docs.anthropic.com/claude/docs/models-overview#model-comparison) | - | - | ✅ | | | | |

-| | | | | | | | | |

+| **Provider** | **Model** | **Free** | **Pro** | **Enterprise** | | | | |

+| :----------- | :---------------------------------------------------------------------------------------- | :------- | :------ | :------------- | --- | --- | --- | --- |

+| Fireworks.ai | [DeepSeek-Coder-V2](https://huggingface.co/deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct) | ✅ | ✅ | ✅ | | | | |

+| Anthropic | [claude Instant](https://docs.anthropic.com/claude/docs/models-overview#model-comparison) | - | - | ✅ | | | | |

+| | | | | | | | | |

The default autocomplete model for Cody Free, Pro and Enterprise users is DeepSeek-Coder-V2.

The DeepSeek model used by Sourcegraph is hosted by Fireworks.ai, and is hosted as a single-tenant service in a US-based data center. For more information see our [Cody FAQ](https://sourcegraph.com/docs/cody/faq#is-any-of-my-data-sent-to-deepseek).

+## Smart Apply

+

+| **Provider** | **Model** | **Free** | **Pro** | **Enterprise** | | | | | | |

+| :----------- | :------------- | :------- | :------ | :------------- | --- | --- | --- | --- | --- | --- |

+| Fireworks.ai | Qwen 2.5 Coder | ✅ | ✅ | ✅ | | | | | | |

+

+Enterprise users not using Cody Gateway get a Claude Sonnet-based model for Smart Apply.

+

@@ -17983,6 +18603,12 @@ Smart Apply also supports the executing of commands in the terminal. When you as

+### Model used for Smart Apply

+

+To ensure low latency, Cody uses a more targeted Qwen 2.5 Coder model for Smart Apply. This model improves the responsiveness of the Smart Apply feature in both VS Code and JetBrains while preserving edit quality. Users on Cody Free, Pro, Enterprise Starter, and Enterprise plans get this default Qwen 2.5 Coder model for Smart Apply suggestions.

+

+Enterprise users not using Cody Gateway get a Claude Sonnet-based model for Smart Apply.

+

## Chat history

Cody keeps a history of your chat sessions. You can view it by clicking the **History** button in the chat panel. You can **Export** it to a JSON file for later use or click the **Delete all** button to clear the chat history.

@@ -20757,6 +21383,15 @@ Here is an example of search results with personalized search ranking enabled:

As you can see, the results are now ranked based on their relevance to the query, and the results from repositories you've recently contributed to are boosted.

+## Compare changes across revisions

+

+When you run a search, you can compare the results from two different revisions of the codebase. From your search query results page, click the three-dot **...** icon next to the **Contributors** tab. Then select the **Compare** option.

+

+From here, you can filter the comparison by file and directory, which makes large diffs easier to navigate and manage.

+

+This file picker is useful when comparing branches with thousands of changed files and allows you to select specific files or directories to focus on. You can [filter files directly](/code-search/compare-file-filtering) by constructing a URL with multiple file paths or use a compressed file list for even larger selections.

+

+

## Other search tips

@@ -20815,6 +21450,128 @@ The [symbol search](/code-search/types/symbol) performance section describes que

+

+# File Filtering in the Repository Comparison Page

+

+The repository comparison page provides powerful file filtering capabilities that allow you to focus on specific files in a comparison. The system supports multiple ways to specify which files you want to view when comparing branches, tags, or commits.

+

+## Query parameter-based file filtering

+

+The comparison page supports three different query parameters to specify which files to include in the comparison:

+

+### 1. Individual file paths

+

+You can specify individual files using either of these parameters:

+

+- `filePath=path/to/file.js` - Primary parameter for specifying files

+- `f=path/to/file.js` - Shorthand alternative

+

+Multiple files can be included by repeating the parameter:

+

+```shell

+?filePath=src/index.ts&filePath=src/components/Button.tsx

+```
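
A query string with repeated `filePath` parameters can also be generated rather than typed by hand. The following Python sketch uses only the standard library; `file_filter_query` is a hypothetical helper name, not part of Sourcegraph.

```python
from urllib.parse import urlencode


def file_filter_query(paths: list[str]) -> str:
    """Build a query string that repeats `filePath` once per file."""
    # safe="/" keeps path separators readable instead of encoding them as %2F.
    return "?" + urlencode([("filePath", p) for p in paths], safe="/")


print(file_filter_query(["src/index.ts", "src/components/Button.tsx"]))
# prints: ?filePath=src/index.ts&filePath=src/components/Button.tsx
```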

+

+### 2. Compressed file lists

+

+For comparisons with a large number of files, the system supports compressed file lists (newline-separated):

+

+- `compressedFileList=base64EncodedCompressedData` - Efficiently packs many file paths

+

+This parameter efficiently transmits large lists of file paths as base64-encoded, gzip-compressed data. The compression allows hundreds or thousands of files to be included in a URL without exceeding length limits, which vary depending on the browser, HTTP server, and other services involved, such as Cloudflare.

+

+```typescript

+// Behind the scenes, the code decompresses file lists using:

+const decodedData = atoburl(compressedFileList)

+const compressedData = Uint8Array.from([...decodedData].map(char => char.charCodeAt(0)))

+const decompressedData = pako.inflate(compressedData, { to: 'string' })

+```

+

+One way to create a value for the `compressedFileList` parameter is to use Python's built-in libraries to compress a newline-separated list of files and encode it with URL-safe base64 (the result is smaller than standard base64 that is then URL-encoded).

+

+```shell

+python3 -c "import sys, zlib, base64; sys.stdout.write(base64.urlsafe_b64encode(zlib.compress(sys.stdin.buffer.read())).decode().rstrip('='))" < list.of.files > list.of.files.deflated.b64url

+```
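
The same steps as the one-liner above can be written as a small script, which also makes it easy to check a value before sharing a URL. This is a sketch under stated assumptions: `encode_file_list` and `decode_file_list` are hypothetical helper names, and the decoder only mirrors the encoder here; it is not the code Sourcegraph runs.

```python
import base64
import zlib


def encode_file_list(paths: list[str]) -> str:
    """Newline-join, zlib-compress, and URL-safe base64-encode paths (padding stripped)."""
    raw = "\n".join(paths).encode("utf-8")
    return base64.urlsafe_b64encode(zlib.compress(raw)).decode("ascii").rstrip("=")


def decode_file_list(value: str) -> list[str]:
    """Inverse of encode_file_list: restore padding, decode, decompress, split."""
    padded = value + "=" * (-len(value) % 4)  # put back the stripped '=' padding
    raw = zlib.decompress(base64.urlsafe_b64decode(padded))
    return raw.decode("utf-8").split("\n")


paths = ["src/index.ts", "src/components/Button.tsx"]
encoded = encode_file_list(paths)
assert decode_file_list(encoded) == paths  # round trip succeeds
print(f"?compressedFileList={encoded}")
```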

+

+### 3. Special focus mode

+

+You can focus on a single file using:

+

+- `focus=true&filePath=path/to/specific/file.js` - Show only this file in detail view

+

+## File filtering UI components

+

+The comparison view provides several UI components to help you filter and navigate files:

+

+### FileDiffPicker

+

+The FileDiffPicker component allows you to:

+

+- Search for files by name or path

+- Filter files by type/extension

+- Toggle between showing all files or only modified files

+- Sort files by different criteria (path, size of change, etc.)

+

+This component uses a dedicated file metadata query optimized for quick filtering. Results are displayed as you type. Through client-side filtering, the component can efficiently handle repositories with thousands of files.

+

+### File navigation

+

+When viewing diffs, you can:

+

+- Click on any file in the sidebar to switch to that file

+- Use keyboard shortcuts to navigate between files

+- Toggle between expanded and collapsed views of files

+- Show or hide specific changes (additions, deletions, etc.)

+

+### URL-based filtering

+

+Any filters you apply through the UI will update the URL with the appropriate query parameters. This means you can:

+

+1. Share specific filtered views with others

+2. Bookmark comparisons with specific file filters

+3. Navigate back and forth between different filter states using browser history
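
Because the filter state lives in the URL, a shared link can be inspected with ordinary URL parsing. The following Python sketch is illustrative: `parse_file_filters` is a hypothetical helper, the host and path in the example URL are placeholders, and merging `filePath` with its shorthand `f` into one list is an assumption about how the two parameters combine.

```python
from urllib.parse import parse_qs, urlsplit


def parse_file_filters(url: str) -> dict:
    """Extract the file-filter query parameters described above from a comparison URL."""
    params = parse_qs(urlsplit(url).query)
    return {
        # Assumption: `filePath` and its shorthand `f` are merged into one list.
        "file_paths": params.get("filePath", []) + params.get("f", []),
        "focus": params.get("focus", ["false"])[0] == "true",
        "compressed_file_list": params.get("compressedFileList", [None])[0],
    }


# Placeholder host and path; only the query string matters for this sketch.
state = parse_file_filters(
    "https://sourcegraph.example.com/compare?focus=true&filePath=src/components/Button.tsx"
)
print(state)
```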

+

+## Implementation details

+

+The system makes strategic performance trade-offs to provide a smooth user experience:

+

+```typescript

+/*

+ * For customers with extremely large PRs with thousands of files,

+ * we fetch all file paths in a single API call to enable client-side filtering.

+ *

+ * This eliminates numerous smaller API calls for server-side filtering

+ * when users search in the FileDiffPicker. While requiring one large

+ * initial API call, it significantly improves subsequent search performance.

+ */

+```

+

+The file filtering system uses a specialized file metadata query that is faster and lighter than the comprehensive file diffs query used to display actual code changes.
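
The trade-off described above, one large request followed by in-memory filtering, can be sketched in a few lines of Python. This is not Sourcegraph's implementation: `filter_paths` is a hypothetical function, and the hard-coded list stands in for the result of the single metadata query.

```python
def filter_paths(all_paths: list[str], query: str = "", extension: str = "") -> list[str]:
    """Filter an already-fetched list of paths in memory, with no further API calls."""
    needle = query.lower()
    return [p for p in all_paths if needle in p.lower() and p.endswith(extension)]


# Stand-in for the single large metadata request; fetched once, then reused.
all_paths = ["src/index.ts", "src/components/Button.tsx", "docs/README.md"]

print(filter_paths(all_paths, query="button"))   # case-insensitive substring match
print(filter_paths(all_paths, extension=".ts"))  # exact suffix match
```

Every keystroke in a search box can call a function like this against the cached list, which is why the initial request cost is paid only once.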

+

+## Usage examples

+

+1. View only specific JavaScript files:

+

+```bash

+ ?filePath=src/utils.js&filePath=src/components/App.js

+```

+

+2. Focus on a single file:

+

+```bash

+ ?focus=true&filePath=src/components/Button.tsx

+ ```

+

+3. Use a compressed file list for many files:

+

+```bash

+ ?compressedFileList=H4sIAAAAAAAAA2NgYGBg...

+```

+

+This flexible filtering system allows you to create customized views of repository comparisons, making reviewing changes in large projects easier.

+

+

+

# Search Snippets

@@ -21093,7 +21850,9 @@ You can also search across multiple contexts at once using the `OR` [boolean ope

To organize your search contexts better, you can use a specific context as your default and star any number of contexts. This affects what context is selected when loading Sourcegraph and how the list of contexts is sorted.

-### Default context

+### Default search context

+

+#### For users

Any authenticated user can use a search context as their default. To set a default, go to the search context management page, open the "..." menu for a context, and click on "Use as default". If the user doesn't have a default, `global` will be used.

@@ -21101,6 +21860,25 @@ If a user ever loses access to their default search context (eg. the search cont

The default search context is always selected when loading the Sourcegraph webapp. The one exception is when opening a link to a search query that does not contain a `context:` filter, in which case the `global` context will be used.

+#### For site admins

+

+Site admins can set a default search context for all users on the Sourcegraph instance. This helps teams improve onboarding and search quality by focusing searches on the most relevant parts of the codebase rather than the entire indexed set of repositories.

+

+To set a default search context for all users, a site admin can:

+

+- Click the **More** button in the top menu of the Sourcegraph web app

+- Go to **Search Contexts**

+- In the list of existing contexts, open the **...** menu for a context and select **[Site admin only] Set as default for all users**

+- Alternatively, create a new context and then set it as the default for all users via the same option

+

+

+

+Here are a few considerations:

+

+- If a user already has a personal default search context set, it will not be overridden

+- The admin-set default will apply automatically if a user only uses the global context

+- Individual users can see the instance-wide default and override it with their own default if they choose
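
The precedence implied by these considerations can be summarized in a short Python sketch. It is a reading of the rules above, not Sourcegraph's code: `resolve_default_context` is a hypothetical function and the context names in the example are made up.

```python
from typing import Optional


def resolve_default_context(user_default: Optional[str], instance_default: Optional[str]) -> str:
    """Pick the default search context: personal default, then admin default, then `global`."""
    if user_default:
        return user_default      # a personal default is never overridden
    if instance_default:
        return instance_default  # admin-set default for users without their own
    return "global"              # fallback when neither is set


# Hypothetical context names, for illustration only.
print(resolve_default_context("@alice/backend", "platform"))  # personal default wins
print(resolve_default_context(None, "platform"))              # admin default applies
print(resolve_default_context(None, None))                    # falls back to global
```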

+

### Starred contexts

Any authenticated user can star a search context. To star a context, click on the star icon in the search context management page. This will cause the context to appear near the top of their search contexts list. The `global` context cannot be starred.

@@ -26173,6 +26951,51 @@ Other tips:

+

+# src search-jobs

+

+`src search-jobs` is a tool that manages search jobs in a Sourcegraph instance.

+

+## Usage

+

+```bash

+'src search-jobs' is a tool that manages search jobs on a Sourcegraph instance.

+

+ Usage:

+

+ src search-jobs command [command options]

+

+ The commands are:

+

+ cancel cancels a search job by ID

+ create creates a search job

+ delete deletes a search job by ID

+ get gets a search job by ID

+ list lists search jobs

+ logs fetches logs for a search job by ID

+ restart restarts a search job by ID

+ results fetches results for a search job by ID

+

+ Common options for all commands:

+ -c Select columns to display (e.g., -c id,query,state,username)

+ -json Output results in JSON format

+

+ Use "src search-jobs [command] -h" for more information about a command.

+```

+

+## Sub-commands

+

+* [cancel](search-jobs/cancel)

+* [create](search-jobs/create)

+* [delete](search-jobs/delete)

+* [get](search-jobs/get)

+* [list](search-jobs/list)

+* [logs](search-jobs/logs)

+* [restart](search-jobs/restart)

+* [results](search-jobs/results)

+

+

+

# `src scout`

@@ -26290,6 +27113,7 @@ Most commands require that the user first [authenticate](quickstart#connect-to-s

* [`repos`](references/repos)

* [`scout`](references/scout)

* [`search`](references/search)

+* [`search-jobs`](references/search-jobs)

* [`serve-git`](references/serve-git)

* [`snapshot`](references/snapshot)

* [`teams`](references/teams)

@@ -26803,6 +27627,359 @@ Examples:

+

+# src search-jobs results

+

+`src search-jobs results` is a tool that gets the results of a search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs results':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -out string

+ File path to save the results (optional)

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ Get the results of a search job:

+ $ src search-jobs results U2VhcmNoSm9iOjY5

+

+ Save search results to a file:

+ $ src search-jobs results U2VhcmNoSm9iOjY5 -out results.jsonl

+

+ The results command retrieves the raw search results in JSON Lines format.

+ Each line contains a single JSON object representing a search result. The data

+ will be displayed on stdout or written to the file specified with -out.

+```
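
Since the results are written as JSON Lines, a file saved with `-out` can be read one object at a time. The Python sketch below is a minimal example; `iter_results` is a hypothetical helper, and the `example` key in the sample data is a placeholder because the fields of a real result object are not described here.

```python
import json
from typing import Iterable, Iterator


def iter_results(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one parsed JSON object per non-empty line of JSON Lines input."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


# Placeholder data; real result objects will have different keys.
sample = ['{"example": 1}\n', "\n", '{"example": 2}\n']
print(sum(1 for _ in iter_results(sample)))  # number of results in the sample

# With a real file saved via `-out results.jsonl`:
# with open("results.jsonl", encoding="utf-8") as fh:
#     for result in iter_results(fh):
#         ...
```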

+

+

+

+

+# src search-jobs restart

+

+`src search-jobs restart` is a tool that restarts a search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs restart':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ Restart a search job by ID:

+

+ $ src search-jobs restart U2VhcmNoSm9iOjY5

+

+ Restart a search job and display specific columns:

+

+ $ src search-jobs restart U2VhcmNoSm9iOjY5 -c id,state,query

+

+ Restart a search job and output in JSON format:

+

+ $ src search-jobs restart U2VhcmNoSm9iOjY5 -json

+

+ Available columns are: id, query, state, username, createdat, startedat, finishedat,

+ url, logurl, total, completed, failed, inprogress

+```

+

+

+

+

+# src search-jobs logs

+

+`src search-jobs logs` is a tool that gets the logs of a search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs logs':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -out string

+ File path to save the logs (optional)

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ View the logs of a search job:

+ $ src search-jobs logs U2VhcmNoSm9iOjY5

+

+ Save the logs to a file:

+ $ src search-jobs logs U2VhcmNoSm9iOjY5 -out logs.csv

+

+ The logs command retrieves the raw log data in CSV format. The data will be

+ displayed on stdout or written to the file specified with -out.

+```

+

+

+

+

+# src search-jobs list

+

+`src search-jobs list` is a tool that lists search jobs on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs list':

+ -asc

+ Sort search jobs in ascending order

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -limit int

+ Limit the number of search jobs returned (default 10)

+ -order-by string

+ Sort search jobs by a sortable field (QUERY, CREATED_AT, STATE) (default "CREATED_AT")

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ List all search jobs:

+

+ $ src search-jobs list

+

+ List all search jobs in ascending order:

+

+ $ src search-jobs list --asc

+

+ Limit the number of search jobs returned:

+

+ $ src search-jobs list --limit 5

+

+ Order search jobs by a field (must be one of: QUERY, CREATED_AT, STATE):

+

+ $ src search-jobs list --order-by QUERY

+

+ Select specific columns to display:

+

+ $ src search-jobs list -c id,state,username,createdat

+

+ Output results as JSON:

+

+ $ src search-jobs list -json

+

+ Combine options:

+

+ $ src search-jobs list --limit 10 --order-by STATE --asc -c id,query,state

+

+ Available columns are: id, query, state, username, createdat, startedat, finishedat,

+ url, logurl, total, completed, failed, inprogress

+```
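
When `src search-jobs list` is called from a script, the documented flags can be assembled and validated before the command runs. The Python sketch below only builds the argument list and does not execute `src`; `list_command` is a hypothetical helper, and the allowed sort fields and columns are copied from the usage text above.

```python
import shlex

SORT_FIELDS = {"QUERY", "CREATED_AT", "STATE"}
COLUMNS = {
    "id", "query", "state", "username", "createdat", "startedat", "finishedat",
    "url", "logurl", "total", "completed", "failed", "inprogress",
}


def list_command(limit=10, order_by="CREATED_AT", ascending=False, columns=(), as_json=False):
    """Build the argv for `src search-jobs list`, rejecting undocumented values."""
    if order_by not in SORT_FIELDS:
        raise ValueError(f"unsupported sort field: {order_by}")
    unknown = [c for c in columns if c not in COLUMNS]
    if unknown:
        raise ValueError(f"unknown columns: {unknown}")
    argv = ["src", "search-jobs", "list", "-limit", str(limit), "-order-by", order_by]
    if ascending:
        argv.append("-asc")
    if columns:
        argv += ["-c", ",".join(columns)]
    if as_json:
        argv.append("-json")
    return argv


print(shlex.join(list_command(limit=5, order_by="STATE", ascending=True, columns=["id", "query", "state"])))
# prints: src search-jobs list -limit 5 -order-by STATE -asc -c id,query,state
```

The returned list can be passed to `subprocess.run` unchanged, which avoids shell quoting issues.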

+

+

+

+

+# src search-jobs get

+

+`src search-jobs get` is a tool that gets details of a single search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs get':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ Get a search job by ID:

+

+ $ src search-jobs get U2VhcmNoSm9iOjY5

+

+ Get a search job with specific columns:

+

+ $ src search-jobs get U2VhcmNoSm9iOjY5 -c id,state,username

+

+ Get a search job in JSON format:

+

+ $ src search-jobs get U2VhcmNoSm9iOjY5 -json

+

+ Available columns are: id, query, state, username, createdat, startedat, finishedat,

+ url, logurl, total, completed, failed, inprogress

+```

+

+

+

+

+# src search-jobs delete

+

+`src search-jobs delete` is a tool that deletes a search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs delete':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ Delete a search job by ID:

+

+ $ src search-jobs delete U2VhcmNoSm9iOjY5

+

+ Arguments:

+ The ID of the search job to delete.

+

+ The delete command permanently removes a search job and outputs a confirmation message.

+```

+

+

+

+

+# src search-jobs create

+

+`src search-jobs create` is a tool that creates a search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs create':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ Create a search job:

+

+ $ src search-jobs create "repo:^github\.com/sourcegraph/sourcegraph$ sort:indexed-desc"

+

+ Create a search job and display specific columns:

+

+ $ src search-jobs create "repo:sourcegraph" -c id,state,username

+

+ Create a search job and output in JSON format:

+

+ $ src search-jobs create "repo:sourcegraph" -json

+

+ Available columns are: id, query, state, username, createdat, startedat, finishedat,

+ url, logurl, total, completed, failed, inprogress

+```

+

+

+

+

+# src search-jobs cancel

+

+`src search-jobs cancel` is a tool that cancels a search job on a Sourcegraph instance.

+

+## Usage

+

+```bash

+Usage of 'src search-jobs cancel':

+ -c string

+ Comma-separated list of columns to display. Available: id,query,state,username,createdat,startedat,finishedat,url,logurl,total,completed,failed,inprogress (default "id,username,state,query")

+ -dump-requests

+ Log GraphQL requests and responses to stdout

+ -get-curl

+ Print the curl command for executing this query and exit (WARNING: includes printing your access token!)

+ -insecure-skip-verify

+ Skip validation of TLS certificates against trusted chains

+ -json

+ Output results as JSON for programmatic access

+ -trace

+ Log the trace ID for requests. See https://docs.sourcegraph.com/admin/observability/tracing

+ -user-agent-telemetry

+ Include the operating system and architecture in the User-Agent sent with requests to Sourcegraph (default true)

+

+ Examples:

+

+ Cancel a search job by ID:

+

+ $ src search-jobs cancel U2VhcmNoSm9iOjY5

+

+ Arguments:

+ The ID of the search job to cancel.

+

+ The cancel command stops a running search job and outputs a confirmation message.

+```

+

+

+

# `src repos update-metadata`

@@ -29762,7 +30939,11 @@ Start by going to the workspace that failed. Then, you get an overview of all th

Learn how to track your existing changesets.

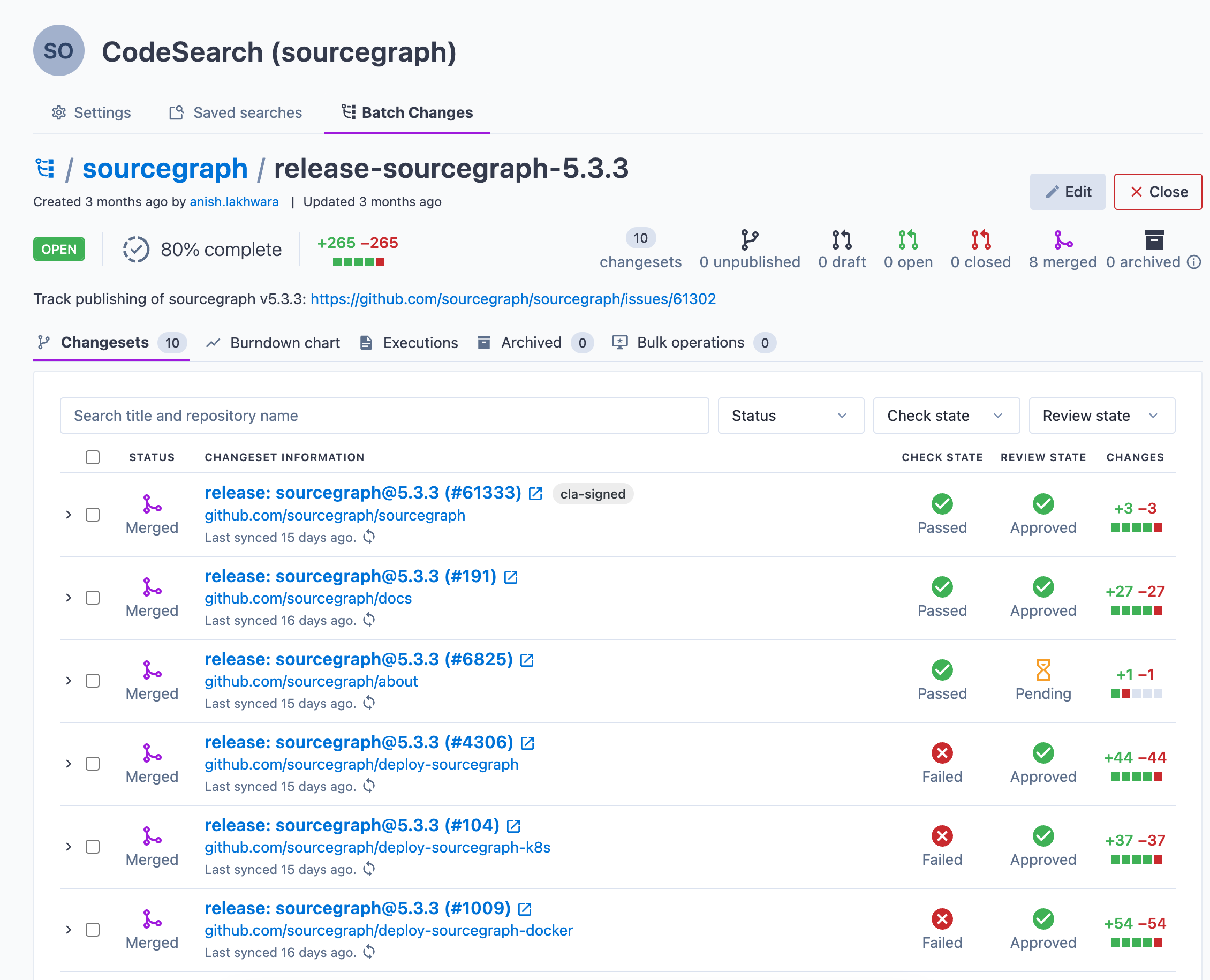

-Batch Changes allow you not only to [publish changesets](/batch-changes/publishing-changesets) but also to **import and track changesets** that already exist on different code hosts. That allows you to get an overview of the status of multiple changesets, with the ability to filter and drill down into the details of a specific changeset.

+Batch Changes allow you not only to [publish changesets](/batch-changes/publishing-changesets) but also to **import and track changesets** that already exist on different code hosts. This gives you an overview of the status of multiple changesets and lets you filter and drill down into the details of a specific changeset. After you have successfully imported changesets, you can perform the following bulk actions:

+

+- Write comments on each of the imported changesets

+- Merge each of the imported changesets to main

+- Close each of the imported changesets

@@ -38612,14 +39793,18 @@ For upgrade procedures or general info about sourcegraph versioning see the link

- [General Upgrade Info](/admin/updates)

- [Technical changelog](/technical-changelog)

-> ***Attention:** These notes may contain relevant information about the infrastructure update such as resource requirement changes or versions of depencies (Docker, kubernetes, externalized databases).*

+> ***Attention:** These notes may contain relevant information about the infrastructure update, such as resource requirement changes or versions of dependencies (Docker, Kubernetes, externalized databases).*

>

> ***If the notes indicate a patch release exists, target the highest one.***

+## v6.4.0

+

+- The repo-updater service is no longer needed and will be removed from deployment methods going forward.

+

## v6.0.0

- Sourcegraph 6.0.0 no longer supports PostgreSQL 12; admins must upgrade to PostgreSQL 16. See our [postgres 12 end of life](/admin/postgres12_end_of_life_notice) notice, as well as the [supporting documentation](/admin/postgres) and guidance on how to upgrade.

-- The Kuberentes Helm deployment type does not support MVU from Sourcegraph `v5.9.45` versions and earlier to Sourcegraph `v6.0.0`. Admins seeking to upgrade to Sourcegraph `v6.0.0` should upgrade to `v5.11.6271` then use the standard upgrade procedure to get to `v6.0.0`. This is because migrator v6.0.0 will no longer connect to Postgres 12 databases. For more info see our [PostgreSQL upgrade docs](/admin/postgres#requirements).

+- The Kubernetes Helm deployment type does not support multi-version upgrades (MVU) from Sourcegraph `v5.9.45` and earlier to Sourcegraph `v6.0.0`. Admins seeking to upgrade to Sourcegraph `v6.0.0` should first upgrade to `v5.11.6271`, then use the standard upgrade procedure to get to `v6.0.0`. This is because migrator `v6.0.0` will no longer connect to Postgres 12 databases. For more info see our [PostgreSQL upgrade docs](/admin/postgres#requirements).

## v5.9.0 ➔ v5.10.1164

@@ -43052,7 +44237,8 @@ Learn more about how to apply these environment variables in [docker-compose](/a

## Log format

-A Sourcegraph service's log output format is configured via the environment variable `SRC_LOG_FORMAT`. The valid values are:

+A Sourcegraph service's log output format is configured via the environment variable `SRC_LOG_FORMAT`. This design facilitates integration with external log aggregation systems and SIEM tools for centralized analysis, monitoring, and alerting.

+The valid values are:

* `condensed`: Optimized for human readability.

* `json`: Machine-readable JSON format.

@@ -43105,7 +44291,7 @@ Note that this will also affect child scopes. So in the example you will also re

## Log sampling

-Sourcegraph services that have migrated to the [new internal logging standard](/dev/how-to/add_logging) have log sampling enabled by default.

+Sourcegraph services have log sampling enabled by default.

The first 100 identical log entries per second will always be output, but thereafter only every 100th identical message will be output.
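The rule above can be sketched in a few lines. This is an illustration of the described behaviour only, not Sourcegraph's implementation; the `LogSampler` class, its parameter names, and the use of the message text as the identity key are all assumptions made for the example.

```python
from collections import defaultdict


class LogSampler:
    """Illustrative sampler: emit the first `initial` identical messages in each
    one-second window, then only every `thereafter`-th identical message."""

    def __init__(self, initial: int = 100, thereafter: int = 100):
        self.initial = initial
        self.thereafter = thereafter
        self.window = None  # current one-second bucket
        self.counts = defaultdict(int)  # occurrences per message in this bucket

    def should_log(self, message: str, now: float) -> bool:
        window = int(now)
        if window != self.window:
            # A new second has started, so reset all counters.
            self.window = window
            self.counts.clear()
        self.counts[message] += 1
        n = self.counts[message]
        if n <= self.initial:
            return True
        return (n - self.initial) % self.thereafter == 0


sampler = LogSampler()
# 1000 identical messages within the same second.
emitted = sum(sampler.should_log("connection refused", now=0.5) for _ in range(1000))
print(emitted)  # 109: the first 100, plus messages 200, 300, ..., 1000
```

In this sketch a burst of 1000 identical messages within one second produces 109 log lines, while distinct messages are counted separately and are not affected by each other.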

This behaviour can be configured for each service using the following environment variables:

@@ -43155,7 +44341,7 @@ Sourcegraph is designed, and ships with, a number of observability tools and cap

## Support

-For help configuring observability on your Sourcegraph instance, use our [public issue tracker](https://github.com/sourcegraph/issues/issues).

+For help configuring observability on your Sourcegraph instance, contact the support team at support@sourcegraph.com.

@@ -90131,23 +91317,6 @@ Open the repository's `Settings` page on Sourcegraph and from the `Mirroring` ta

-## Manually purge deleted repository data from disk

-

-After a repository is deleted from Sourcegraph in the database, its data still remains on disk on gitserver so that in the event the repository is added again it doesn't need to be recloned. These repos are automatically removed when disk space is low on gitserver. However, it is possible to manually trigger removal of deleted repos in the following way:

-

-**NOTE:** This is not available on Docker Compose deployments.

-

-1. Browse to Site Admin -> Instrumentation -> Repo Updater -> Manual Repo Purge

-2. You'll be at a url similar to `https://sourcegraph-instance/-/debug/proxies/repo-updater/manual-purge`

-3. You need to specify a limit parameter which specifies the upper limit to the number of repos that will be removed, for example: `https://sourcegraph-instance/-/debug/proxies/repo-updater/manual-purge?limit=1000`

-4. This will trigger a background process to delete up to `limit` repos, rate limited at 1 per second.

-

-It's possible to see the number of repos that can be cleaned up on disk in Grafana using this query:

-

-```

-max(src_repoupdater_purgeable_repos)

-```

-