Learn how Cody makes use to Keyword Search to gather context.

+Learn how Cody's local indexing engine works to provide fast keyword search in your workspace.

-Keyword search is the traditional approach to text search. It splits content into terms and builds a mapping from terms to documents. At query time, it extracts terms from the query and uses the mapping to retrieve your documents. +Cody uses **symf** (symbol finder), a local keyword search engine, to create and maintain code indexes for your workspace folders. This enables fast context retrieval directly from your local codebase. -Both Cody chat and completions use Keyword Search. It comes out of the box without any additional setup. Cody with Keyword Search searches your local VS Code workspace and is a cost-effective and time-saving solution. +## How Local Indexing Works -For an enterprise admin who has set up Cody with a Code Search instance, developers on their local machines can seamlessly access it. +Symf automatically creates and maintains indexes of your code: -Learn about all the core concepts and fundamentals that helps Cody provide codebase-aware answers.

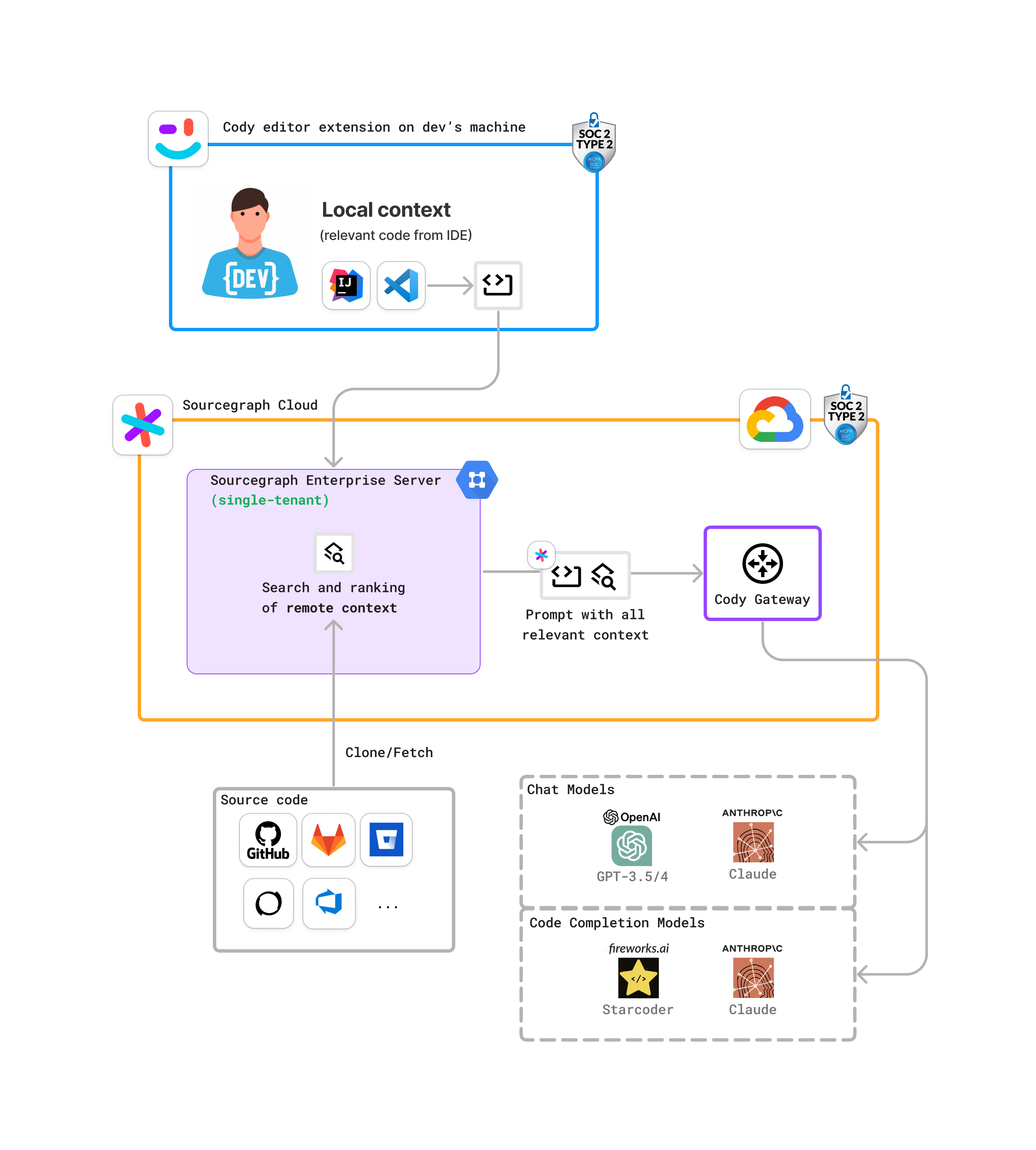

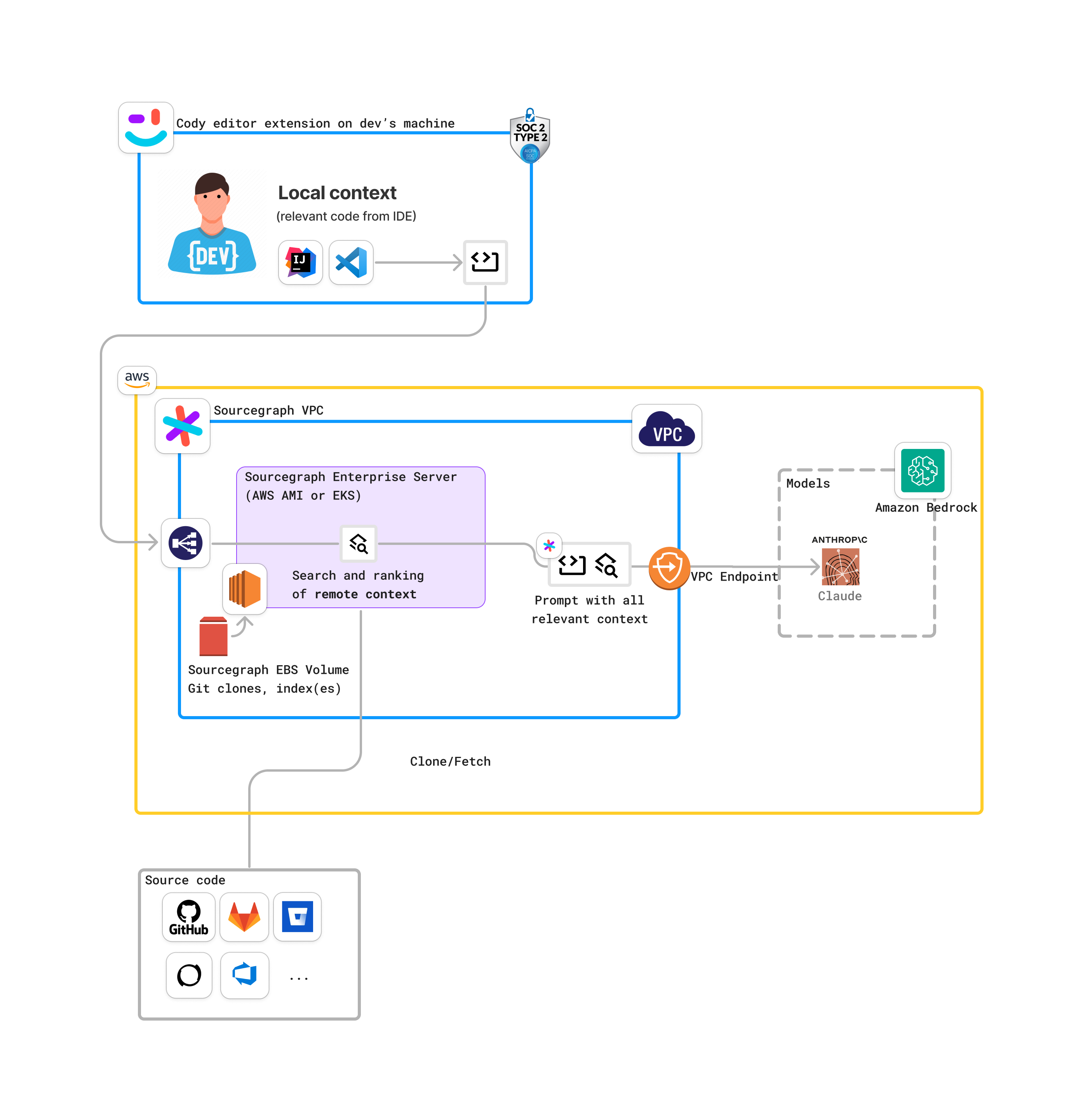

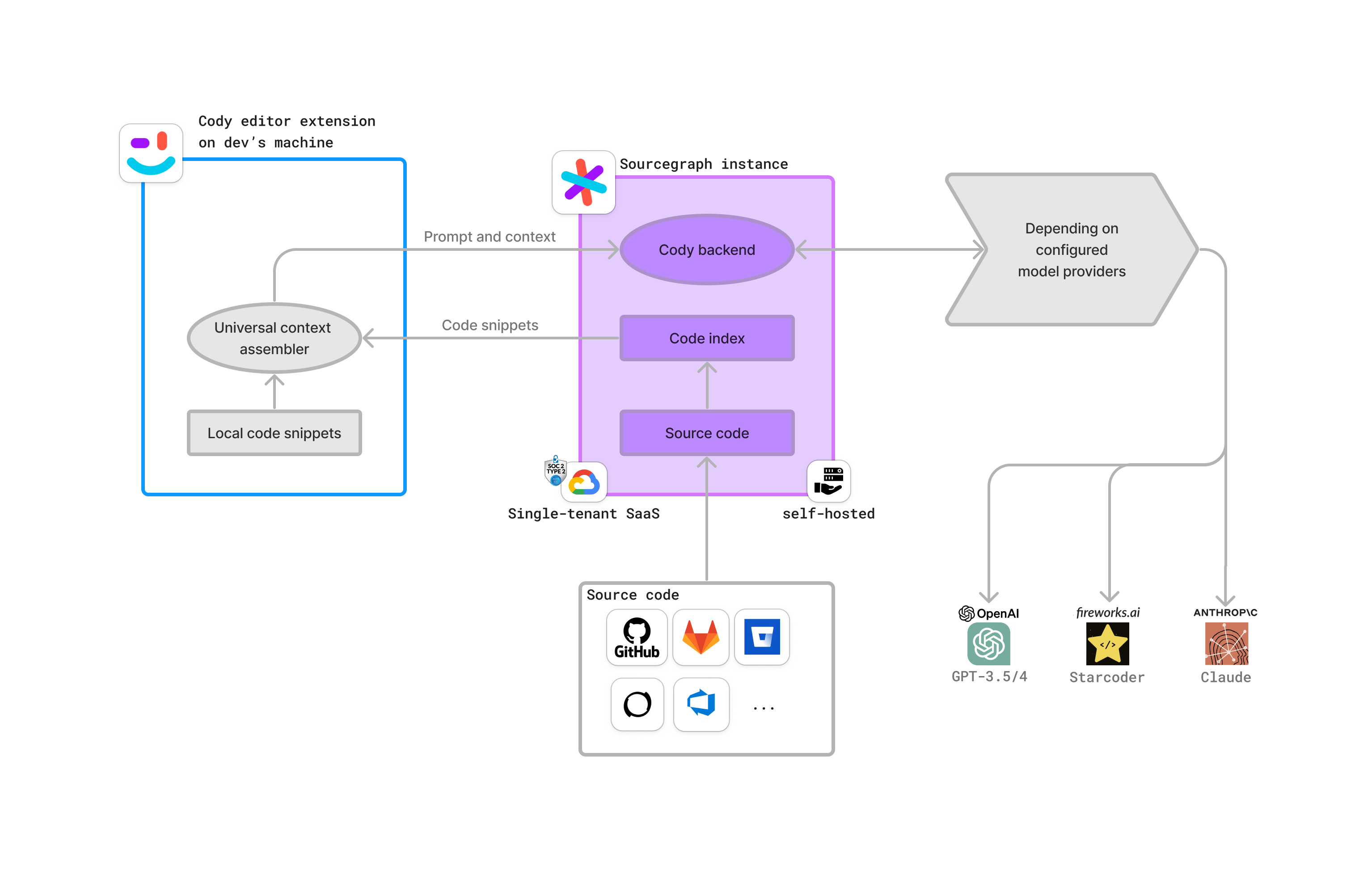

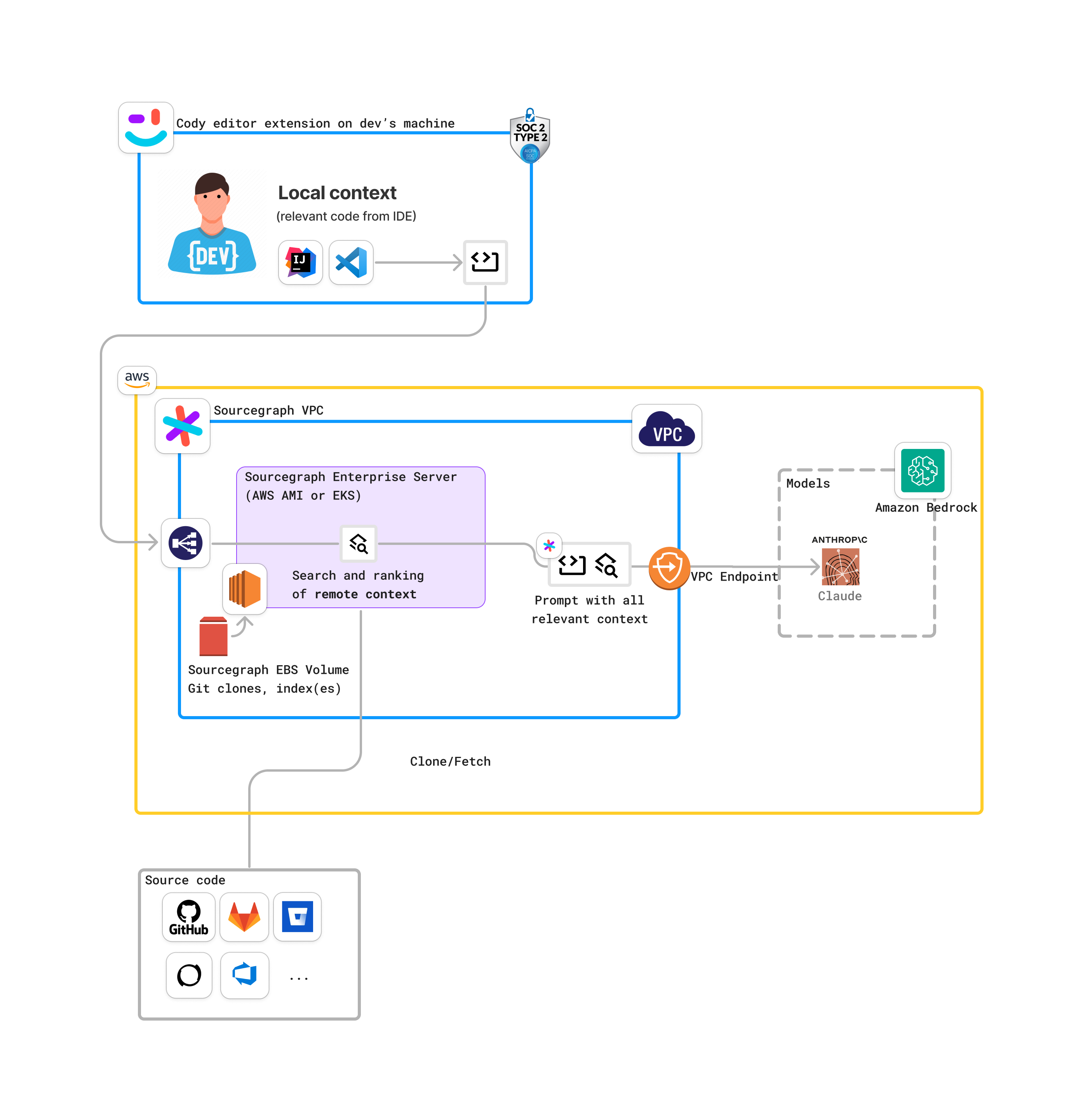

+### VS Code Settings -[Cody Enterprise](/cody/clients/enable-cody-enterprise) can be deployed via the Sourcegraph Cloud or on your self-hosted infrastructure. This page describes the architecture diagrams for Cody deployed in different Sourcegraph environments.

+Learn how Cody makes use to Keyword Search to gather context.

-{/* Figma source: https://www.figma.com/file/lAPHpdhtEmOJ22IQXVZ0vs/Cody-architecture-diagrams-SQS-draft-2024-04?type=whiteboard&node-id=0-1&t=blg78H2YXXbdGSPc-0 */} +Keyword search is the traditional approach to text search. It splits content into terms and builds a mapping from terms to documents. At query time, it extracts terms from the query and uses the mapping to retrieve your documents. -## Sourcegraph Cloud deployment +Both Cody chat and completions use Keyword Search. It comes out of the box without any additional setup. Cody with Keyword Search searches your [local VS Code workspace](/cody/core-concepts/local-indexing) and is a cost-effective and time-saving solution. -This is a recommended deployment for Cody Enterprise. It uses the Sourcegraph Cloud infrastructure and Cody gateway. +For an enterprise admin who has set up Cody with a Code Search instance, developers on their local machines can seamlessly access it. - +

+Learn about all the core concepts and fundamentals that helps Cody provide codebase-aware answers.

- +

+ +

+Learn how Cody Gateway powers the default Sourcegraph provider for completions, enabling Cody features for Sourcegraph Enterprise customers.

- -- [`gohugoio/hugo`](https://sourcegraph.com/github.com/gohugoio/hugo@master/-/blob/common/hugo/hugo.go?L63:15&popover=pinned)

- [`gin-gonic/gin`](https://sourcegraph.com/github.com/gin-gonic/gin@master/-/blob/routergroup.go?L33:6&popover=pinned) | -| Java | - [`sourcegraph/jetbrains`](https://sourcegraph.com/github.com/sourcegraph/jetbrains/-/blob/src/main/java/com/sourcegraph/cody/CodyActionGroup.java?L13) | -| Scala | - [`neandertech/langoustine`](https://sourcegraph.com/github.com/neandertech/langoustine/-/blob/modules/lsp/src/main/scala/Communicate.scala?L28) | -| Kotlin | - [`sourcegraph/jetbrains`](https://sourcegraph.com/github.com/sourcegraph/jetbrains/-/blob/src/main/kotlin/com/sourcegraph/cody/agent/CodyAgent.kt?L42) | -| Python | - [`pipecat-ai/pipecat-flows`](https://sourcegraph.com/github.com/pipecat-ai/pipecat-flows/-/blob/src/pipecat_flows/actions.py?L38) | -| TypeScript | - [`vuejs/vue`](https://sourcegraph.com/github.com/vuejs/vue@main/-/blob/src/core/observer/index.ts?L68:3&popover=pinned) | -| Ruby | - [`Homebrew/brew`](https://sourcegraph.com/github.com/Homebrew/brew@master/-/blob/Library/Homebrew/utils/bottles.rb?L18:18&popover=pinned) | -| Rust | - [`rust-lang/cargo`](https://sourcegraph.com/github.com/rust-lang/cargo/-/blob/src/cargo/core/compiler/compilation.rs?L15:12&popover=pinned#tab=references)

- [`rust-lang/rustlings`](https://sourcegraph.com/github.com/rust-lang/rustlings@main/-/blob/src/dev.rs?L10) | -| C, C++ | - [`sourcegraph/cxx-precise-examples`](https://sourcegraph.com/github.com/sourcegraph/cxx-precise-examples/-/blob/piecewise_monorepo/arithmetic/src/multiplication.cpp?L3) | +| **Programming Language** | **Repo** | +|--------------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------| +| Go | [`kubernetes/kubernetes`](https://sourcegraph.com/github.com/kubernetes/kubernetes/-/blob/cmd/cloud-controller-manager/main.go) | +| Java | [`google/guava`](https://sourcegraph.com/github.com/google/guava/-/blob/guava/src/com/google/common/io/ByteSink.java) | +| Scala | [`apache/pekko`](https://sourcegraph.com/github.com/apache/pekko/-/blob/persistence/src/main/scala/org/apache/pekko/persistence/JournalProtocol.scala) | +| Python | [`Textualize/rich`](https://sourcegraph.com/github.com/Textualize/rich/-/blob/rich/style.py) | +| TypeScript | [`vuejs/core`](https://sourcegraph.com/github.com/vuejs/core/-/blob/packages/vue/src/index.ts) | +| Rust | [`serde-rs/serde`](https://sourcegraph.com/github.com/serde-rs/serde/-/blob/serde_derive/src/bound.rs) | +| Ruby | [`Homebrew/brew`](https://sourcegraph.com/github.com/Homebrew/brew/-/blob/Library/Homebrew/commands.rb) | +| C++ | [`fmtlib/fmt`](https://sourcegraph.com/github.com/fmtlib/fmt/-/blob/include/fmt/ostream.h) | +| C# | [`serilog/serilog`](https://sourcegraph.com/github.com/serilog/serilog/-/blob/src/Serilog/Events/EventProperty.cs) | ## More resources @@ -15371,9 +15916,9 @@ jobs: - run: src code-intel upload -github-token=<

Connect AI agents and applications to your Sourcegraph instance's code search and analysis capabilities.

+ + @@ -30519,7 +31454,7 @@ This is an example of a more complex deployment that uses Sourcegraph Enterprise

@@ -30519,7 +31454,7 @@ This is an example of a more complex deployment that uses Sourcegraph Enterprise

-### Data flow

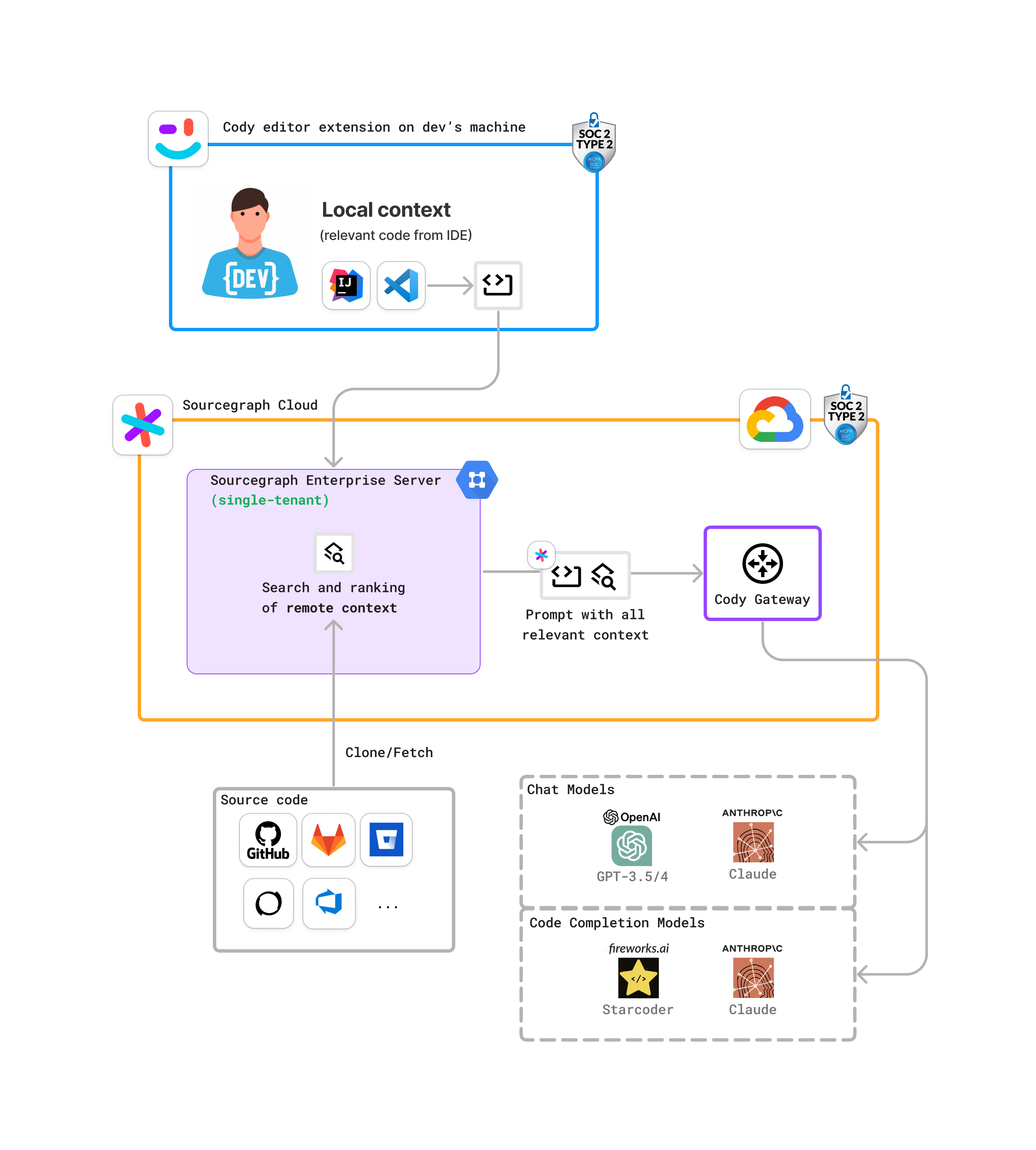

+### Cody Data flow

The following diagram describes the data flow between the different components of Cody Enterprise.

@@ -31415,6 +32350,10 @@ For upgrade procedures or general info about sourcegraph versioning see the link

>

> ***If the notes indicate a patch release exists, target the highest one.***

+## v6.10.0

+

+- Port names have been updated on services and pods, to unblock Kubernetes service meshes from auto-configuring themselves for the traffic type, since most inter-pod communication has been switched from http to gRPC [[PR 756](https://github.com/sourcegraph/deploy-sourcegraph-helm/pull/756)]. If you are using Istio (or similar service meshes), and [our Envoy filter example](https://github.com/sourcegraph/deploy-sourcegraph-helm/tree/main/charts/sourcegraph/examples/envoy), and your pods fail to communicate after upgrading to >= v6.10, please remove the Envoy filter and try again so your service mesh can auto-configure itself based on the new port names.

+

## v6.4.0

- The repo-updater service is no longer needed and will be removed from deployment methods going forward.

@@ -32414,7 +33353,7 @@ upgrade \

[--dry-run=false] \

[--disable-animation=false] \

[--skip-version-check=false] [--skip-drift-check=false] \

- [--unprivileged-only=false] [--noop-privileged=false] [--privileged-hash=

-### Data flow

+### Cody Data flow

The following diagram describes the data flow between the different components of Cody Enterprise.

@@ -31415,6 +32350,10 @@ For upgrade procedures or general info about sourcegraph versioning see the link

>

> ***If the notes indicate a patch release exists, target the highest one.***

+## v6.10.0

+

+- Port names have been updated on services and pods, to unblock Kubernetes service meshes from auto-configuring themselves for the traffic type, since most inter-pod communication has been switched from http to gRPC [[PR 756](https://github.com/sourcegraph/deploy-sourcegraph-helm/pull/756)]. If you are using Istio (or similar service meshes), and [our Envoy filter example](https://github.com/sourcegraph/deploy-sourcegraph-helm/tree/main/charts/sourcegraph/examples/envoy), and your pods fail to communicate after upgrading to >= v6.10, please remove the Envoy filter and try again so your service mesh can auto-configure itself based on the new port names.

+

## v6.4.0

- The repo-updater service is no longer needed and will be removed from deployment methods going forward.

@@ -32414,7 +33353,7 @@ upgrade \

[--dry-run=false] \

[--disable-animation=false] \

[--skip-version-check=false] [--skip-drift-check=false] \

- [--unprivileged-only=false] [--noop-privileged=false] [--privileged-hash=-#### frontend: hard_timeout_search_responses +#### frontend: timeout_search_responses -

Hard timeout search responses every 5m

+Timeout search responses every 5m

-Refer to the [alerts reference](alerts#frontend-hard-timeout-search-responses) for 1 alert related to this panel. +Refer to the [alerts reference](alerts#frontend-timeout-search-responses) for 1 alert related to this panel. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100010` on your Sourcegraph instance. @@ -35787,7 +36726,7 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100010` Query: ``` -(sum(increase(src_graphql_search_response{status="timeout",source="browser",request_name!="CodeIntelSearch"}[5m])) + sum(increase(src_graphql_search_response{status="alert",alert_type="timed_out",source="browser",request_name!="CodeIntelSearch"}[5m]))) / sum(increase(src_graphql_search_response{source="browser",request_name!="CodeIntelSearch"}[5m])) * 100 +sum(increase(src_search_streaming_response{status=~"timeout|partial_timeout",source="browser"}[5m])) / sum(increase(src_search_streaming_response{source="browser"}[5m])) * 100 ``` @@ -35809,17 +36748,17 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100011` Query: ``` -sum by (status)(increase(src_graphql_search_response{status=~"error",source="browser",request_name!="CodeIntelSearch"}[5m])) / ignoring(status) group_left sum(increase(src_graphql_search_response{source="browser",request_name!="CodeIntelSearch"}[5m])) * 100 +sum(increase(src_search_streaming_response{status="error",source="browser"}[5m])) / sum(increase(src_search_streaming_response{source="browser"}[5m])) * 100 ```-#### frontend: partial_timeout_search_responses +#### frontend: search_no_results -

Partial timeout search responses every 5m

+Searches with no results every 5m

-Refer to the [alerts reference](alerts#frontend-partial-timeout-search-responses) for 1 alert related to this panel. +Refer to the [alerts reference](alerts#frontend-search-no-results) for 1 alert related to this panel. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100012` on your Sourcegraph instance. @@ -35831,7 +36770,7 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100012` Query: ``` -sum by (status)(increase(src_graphql_search_response{status="partial_timeout",source="browser",request_name!="CodeIntelSearch"}[5m])) / ignoring(status) group_left sum(increase(src_graphql_search_response{source="browser",request_name!="CodeIntelSearch"}[5m])) * 100 +sum(increase(src_search_streaming_response{status="no_results",source="browser"}[5m])) / sum(increase(src_search_streaming_response{source="browser"}[5m])) * 100 ``` @@ -35853,7 +36792,7 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100013` Query: ``` -sum by (alert_type)(increase(src_graphql_search_response{status="alert",alert_type!~"timed_out|no_results__suggest_quotes",source="browser",request_name!="CodeIntelSearch"}[5m])) / ignoring(alert_type) group_left sum(increase(src_graphql_search_response{source="browser",request_name!="CodeIntelSearch"}[5m])) * 100 +sum by (alert_type)(increase(src_search_streaming_response{status="alert",alert_type!~"timed_out",source="browser"}[5m])) / ignoring(alert_type) group_left sum(increase(src_search_streaming_response{source="browser"}[5m])) * 100 ``` @@ -36121,7 +37060,7 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100212` Query: ``` -sum by (alert_type)(increase(src_graphql_search_response{status="alert",alert_type!~"timed_out|no_results__suggest_quotes",source="other"}[5m])) / ignoring(alert_type) group_left sum(increase(src_graphql_search_response{status="alert",source="other"}[5m])) +sum by (alert_type)(increase(src_graphql_search_response{status="alert",alert_type!~"timed_out",source="other"}[5m])) / ignoring(alert_type) group_left sum(increase(src_graphql_search_response{status="alert",source="other"}[5m])) ``` @@ -36711,7 +37650,7 @@ sum by (op)(increase(src_codeintel_uploads_store_errors_total{job=~"^(frontend|s ### Frontend: Workerutil: lsif_indexes dbworker/store stats -#### frontend: workerutil_dbworker_store_codeintel_index_total +#### frontend: workerutil_dbworker_store_totalStore operations every 5m

@@ -36727,13 +37666,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100700` Query: ``` -sum(increase(src_workerutil_dbworker_store_codeintel_index_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_workerutil_dbworker_store_total{domain='codeintel_index_jobs',job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: workerutil_dbworker_store_codeintel_index_99th_percentile_duration +#### frontend: workerutil_dbworker_store_99th_percentile_duration

Aggregate successful store operation duration distribution over 5m

@@ -36749,13 +37688,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100701` Query: ``` -sum by (le)(rate(src_workerutil_dbworker_store_codeintel_index_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_workerutil_dbworker_store_duration_seconds_bucket{domain='codeintel_index_jobs',job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: workerutil_dbworker_store_codeintel_index_errors_total +#### frontend: workerutil_dbworker_store_errors_total

Store operation errors every 5m

@@ -36771,13 +37710,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100702` Query: ``` -sum(increase(src_workerutil_dbworker_store_codeintel_index_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_workerutil_dbworker_store_errors_total{domain='codeintel_index_jobs',job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: workerutil_dbworker_store_codeintel_index_error_rate +#### frontend: workerutil_dbworker_store_error_rate

Store operation error rate over 5m

@@ -36793,7 +37732,7 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100703` Query: ``` -sum(increase(src_workerutil_dbworker_store_codeintel_index_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_workerutil_dbworker_store_codeintel_index_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_workerutil_dbworker_store_codeintel_index_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_workerutil_dbworker_store_errors_total{domain='codeintel_index_jobs',job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_workerutil_dbworker_store_total{domain='codeintel_index_jobs',job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_workerutil_dbworker_store_errors_total{domain='codeintel_index_jobs',job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ``` @@ -36979,7 +37918,7 @@ sum by (op)(increase(src_codeintel_uploads_lsifstore_errors_total{job=~"^(fronte ### Frontend: Codeintel: gitserver client -#### frontend: codeintel_gitserver_total +#### frontend: gitserver_client_totalAggregate client operations every 5m

@@ -36995,13 +37934,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100900` Query: ``` -sum(increase(src_codeintel_gitserver_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_gitserver_99th_percentile_duration +#### frontend: gitserver_client_99th_percentile_duration

Aggregate successful client operation duration distribution over 5m

@@ -37017,13 +37956,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100901` Query: ``` -sum by (le)(rate(src_codeintel_gitserver_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_gitserver_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_gitserver_errors_total +#### frontend: gitserver_client_errors_total

Aggregate client operation errors every 5m

@@ -37039,13 +37978,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100902` Query: ``` -sum(increase(src_codeintel_gitserver_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_gitserver_error_rate +#### frontend: gitserver_client_error_rate

Aggregate client operation error rate over 5m

@@ -37061,13 +38000,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100903` Query: ``` -sum(increase(src_codeintel_gitserver_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_codeintel_gitserver_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_codeintel_gitserver_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: codeintel_gitserver_total +#### frontend: gitserver_client_total

Client operations every 5m

@@ -37083,13 +38022,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100910` Query: ``` -sum by (op)(increase(src_codeintel_gitserver_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_gitserver_99th_percentile_duration +#### frontend: gitserver_client_99th_percentile_duration

99th percentile successful client operation duration over 5m

@@ -37105,13 +38044,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100911` Query: ``` -histogram_quantile(0.99, sum by (le,op)(rate(src_codeintel_gitserver_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +histogram_quantile(0.99, sum by (le,op)(rate(src_gitserver_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) ```-#### frontend: codeintel_gitserver_errors_total +#### frontend: gitserver_client_errors_total

Client operation errors every 5m

@@ -37127,13 +38066,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100912` Query: ``` -sum by (op)(increase(src_codeintel_gitserver_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_gitserver_error_rate +#### frontend: gitserver_client_error_rate

Client operation error rate over 5m

@@ -37149,7 +38088,7 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=100913` Query: ``` -sum by (op)(increase(src_codeintel_gitserver_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_codeintel_gitserver_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_codeintel_gitserver_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum by (op)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ``` @@ -37333,17 +38272,17 @@ sum by (op)(increase(src_codeintel_uploadstore_errors_total{job=~"^(frontend|sou-### Frontend: Codeintel: dependencies service stats +### Frontend: Gitserver: Gitserver Client -#### frontend: codeintel_dependencies_total +#### frontend: gitserver_client_total -

Aggregate service operations every 5m

+Aggregate client operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101100` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37351,21 +38290,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101100` Query: ``` -sum(increase(src_codeintel_dependencies_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_99th_percentile_duration +#### frontend: gitserver_client_99th_percentile_duration -

Aggregate successful service operation duration distribution over 5m

+Aggregate successful client operation duration distribution over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101101` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37373,21 +38312,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101101` Query: ``` -sum by (le)(rate(src_codeintel_dependencies_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_gitserver_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_errors_total +#### frontend: gitserver_client_errors_total -

Aggregate service operation errors every 5m

+Aggregate client operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101102` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37395,21 +38334,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101102` Query: ``` -sum(increase(src_codeintel_dependencies_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_error_rate +#### frontend: gitserver_client_error_rate -

Aggregate service operation error rate over 5m

+Aggregate client operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101103` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37417,21 +38356,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101103` Query: ``` -sum(increase(src_codeintel_dependencies_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_codeintel_dependencies_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_codeintel_dependencies_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: codeintel_dependencies_total +#### frontend: gitserver_client_total -

Service operations every 5m

+Client operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101110` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37439,21 +38378,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101110` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op,scope)(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_99th_percentile_duration +#### frontend: gitserver_client_99th_percentile_duration -

99th percentile successful service operation duration over 5m

+99th percentile successful client operation duration over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101111` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37461,21 +38400,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101111` Query: ``` -histogram_quantile(0.99, sum by (le,op)(rate(src_codeintel_dependencies_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +histogram_quantile(0.99, sum by (le,op,scope)(rate(src_gitserver_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) ```-#### frontend: codeintel_dependencies_errors_total +#### frontend: gitserver_client_errors_total -

Service operation errors every 5m

+Client operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101112` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37483,21 +38422,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101112` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op,scope)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_error_rate +#### frontend: gitserver_client_error_rate -

Service operation error rate over 5m

+Client operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101113` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37505,23 +38444,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101113` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_codeintel_dependencies_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_codeintel_dependencies_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum by (op,scope)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op,scope)(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op,scope)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-### Frontend: Codeintel: dependencies service store stats +### Frontend: Gitserver: Gitserver Repository Service Client -#### frontend: codeintel_dependencies_background_total +#### frontend: gitserver_repositoryservice_client_total -

Aggregate service operations every 5m

+Aggregate client operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101200` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37529,21 +38468,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101200` Query: ``` -sum(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_gitserver_repositoryservice_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_99th_percentile_duration +#### frontend: gitserver_repositoryservice_client_99th_percentile_duration -

Aggregate successful service operation duration distribution over 5m

+Aggregate successful client operation duration distribution over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101201` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37551,21 +38490,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101201` Query: ``` -sum by (le)(rate(src_codeintel_dependencies_background_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_gitserver_repositoryservice_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_errors_total +#### frontend: gitserver_repositoryservice_client_errors_total -

Aggregate service operation errors every 5m

+Aggregate client operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101202` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37573,21 +38512,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101202` Query: ``` -sum(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_gitserver_repositoryservice_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_error_rate +#### frontend: gitserver_repositoryservice_client_error_rate -

Aggregate service operation error rate over 5m

+Aggregate client operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101203` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37595,21 +38534,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101203` Query: ``` -sum(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_gitserver_repositoryservice_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_gitserver_repositoryservice_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_gitserver_repositoryservice_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: codeintel_dependencies_background_total +#### frontend: gitserver_repositoryservice_client_total -

Service operations every 5m

+Client operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101210` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37617,21 +38556,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101210` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op,scope)(increase(src_gitserver_repositoryservice_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_99th_percentile_duration +#### frontend: gitserver_repositoryservice_client_99th_percentile_duration -

99th percentile successful service operation duration over 5m

+99th percentile successful client operation duration over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101211` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37639,21 +38578,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101211` Query: ``` -histogram_quantile(0.99, sum by (le,op)(rate(src_codeintel_dependencies_background_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +histogram_quantile(0.99, sum by (le,op,scope)(rate(src_gitserver_repositoryservice_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) ```-#### frontend: codeintel_dependencies_background_errors_total +#### frontend: gitserver_repositoryservice_client_errors_total -

Service operation errors every 5m

+Client operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101212` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37661,21 +38600,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101212` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op,scope)(increase(src_gitserver_repositoryservice_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_error_rate +#### frontend: gitserver_repositoryservice_client_error_rate -

Service operation error rate over 5m

+Client operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101213` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).*Technical details

@@ -37683,23 +38622,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101213` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum by (op,scope)(increase(src_gitserver_repositoryservice_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op,scope)(increase(src_gitserver_repositoryservice_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op,scope)(increase(src_gitserver_repositoryservice_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-### Frontend: Codeintel: dependencies service background stats +### Frontend: Batches: dbstore stats -#### frontend: codeintel_dependencies_background_total +#### frontend: batches_dbstore_total -

Aggregate service operations every 5m

+Aggregate store operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101300` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37707,21 +38646,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101300` Query: ``` -sum(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_99th_percentile_duration +#### frontend: batches_dbstore_99th_percentile_duration -

Aggregate successful service operation duration distribution over 5m

+Aggregate successful store operation duration distribution over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101301` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37729,21 +38668,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101301` Query: ``` -sum by (le)(rate(src_codeintel_dependencies_background_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_batches_dbstore_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_errors_total +#### frontend: batches_dbstore_errors_total -

Aggregate service operation errors every 5m

+Aggregate store operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101302` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37751,21 +38690,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101302` Query: ``` -sum(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_error_rate +#### frontend: batches_dbstore_error_rate -

Aggregate service operation error rate over 5m

+Aggregate store operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101303` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37773,21 +38712,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101303` Query: ``` -sum(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: codeintel_dependencies_background_total +#### frontend: batches_dbstore_total -

Service operations every 5m

+Store operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101310` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37795,21 +38734,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101310` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_99th_percentile_duration +#### frontend: batches_dbstore_99th_percentile_duration -

99th percentile successful service operation duration over 5m

+99th percentile successful store operation duration over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101311` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37817,21 +38756,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101311` Query: ``` -histogram_quantile(0.99, sum by (le,op)(rate(src_codeintel_dependencies_background_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +histogram_quantile(0.99, sum by (le,op)(rate(src_batches_dbstore_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) ```-#### frontend: codeintel_dependencies_background_errors_total +#### frontend: batches_dbstore_errors_total -

Service operation errors every 5m

+Store operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101312` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37839,21 +38778,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101312` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_dependencies_background_error_rate +#### frontend: batches_dbstore_error_rate -

Service operation error rate over 5m

+Store operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101313` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37861,15 +38800,15 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101313` Query: ``` -sum by (op)(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_codeintel_dependencies_background_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_codeintel_dependencies_background_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum by (op)(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-### Frontend: Codeintel: lockfiles service stats +### Frontend: Batches: service stats -#### frontend: codeintel_lockfiles_total +#### frontend: batches_service_total

Aggregate service operations every 5m

@@ -37877,7 +38816,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101400` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37885,13 +38824,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101400` Query: ``` -sum(increase(src_codeintel_lockfiles_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_batches_service_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_lockfiles_99th_percentile_duration +#### frontend: batches_service_99th_percentile_duration

Aggregate successful service operation duration distribution over 5m

@@ -37899,7 +38838,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101401` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37907,13 +38846,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101401` Query: ``` -sum by (le)(rate(src_codeintel_lockfiles_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_batches_service_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_lockfiles_errors_total +#### frontend: batches_service_errors_total

Aggregate service operation errors every 5m

@@ -37921,7 +38860,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101402` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37929,13 +38868,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101402` Query: ``` -sum(increase(src_codeintel_lockfiles_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_lockfiles_error_rate +#### frontend: batches_service_error_rate

Aggregate service operation error rate over 5m

@@ -37943,7 +38882,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101403` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37951,13 +38890,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101403` Query: ``` -sum(increase(src_codeintel_lockfiles_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_codeintel_lockfiles_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_codeintel_lockfiles_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_batches_service_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: codeintel_lockfiles_total +#### frontend: batches_service_total

Service operations every 5m

@@ -37965,7 +38904,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101410` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37973,13 +38912,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101410` Query: ``` -sum by (op)(increase(src_codeintel_lockfiles_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_batches_service_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_lockfiles_99th_percentile_duration +#### frontend: batches_service_99th_percentile_duration

99th percentile successful service operation duration over 5m

@@ -37987,7 +38926,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101411` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -37995,13 +38934,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101411` Query: ``` -histogram_quantile(0.99, sum by (le,op)(rate(src_codeintel_lockfiles_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +histogram_quantile(0.99, sum by (le,op)(rate(src_batches_service_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) ```-#### frontend: codeintel_lockfiles_errors_total +#### frontend: batches_service_errors_total

Service operation errors every 5m

@@ -38009,7 +38948,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101412` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38017,13 +38956,13 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101412` Query: ``` -sum by (op)(increase(src_codeintel_lockfiles_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: codeintel_lockfiles_error_rate +#### frontend: batches_service_error_rate

Service operation error rate over 5m

@@ -38031,7 +38970,7 @@ This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101413` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38039,23 +38978,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101413` Query: ``` -sum by (op)(increase(src_codeintel_lockfiles_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_codeintel_lockfiles_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_codeintel_lockfiles_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum by (op)(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_batches_service_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-### Frontend: Gitserver: Gitserver Client +### Frontend: Batches: HTTP API File Handler -#### frontend: gitserver_client_total +#### frontend: batches_httpapi_total -

Aggregate graphql operations every 5m

+Aggregate http handler operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101500` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38063,21 +39002,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101500` Query: ``` -sum(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_batches_httpapi_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: gitserver_client_99th_percentile_duration +#### frontend: batches_httpapi_99th_percentile_duration -

Aggregate successful graphql operation duration distribution over 5m

+Aggregate successful http handler operation duration distribution over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101501` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38085,21 +39024,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101501` Query: ``` -sum by (le)(rate(src_gitserver_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_batches_httpapi_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: gitserver_client_errors_total +#### frontend: batches_httpapi_errors_total -

Aggregate graphql operation errors every 5m

+Aggregate http handler operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101502` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38107,21 +39046,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101502` Query: ``` -sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_batches_httpapi_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: gitserver_client_error_rate +#### frontend: batches_httpapi_error_rate -

Aggregate graphql operation error rate over 5m

+Aggregate http handler operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101503` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38129,21 +39068,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101503` Query: ``` -sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_batches_httpapi_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_batches_httpapi_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_batches_httpapi_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: gitserver_client_total +#### frontend: batches_httpapi_total -

Graphql operations every 5m

+Http handler operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101510` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38151,21 +39090,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101510` Query: ``` -sum by (op,scope)(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_batches_httpapi_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: gitserver_client_99th_percentile_duration +#### frontend: batches_httpapi_99th_percentile_duration -

99th percentile successful graphql operation duration over 5m

+99th percentile successful http handler operation duration over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101511` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38173,21 +39112,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101511` Query: ``` -histogram_quantile(0.99, sum by (le,op,scope)(rate(src_gitserver_client_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +histogram_quantile(0.99, sum by (le,op)(rate(src_batches_httpapi_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) ```-#### frontend: gitserver_client_errors_total +#### frontend: batches_httpapi_errors_total -

Graphql operation errors every 5m

+Http handler operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101512` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38195,21 +39134,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101512` Query: ``` -sum by (op,scope)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (op)(increase(src_batches_httpapi_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: gitserver_client_error_rate +#### frontend: batches_httpapi_error_rate -

Graphql operation error rate over 5m

+Http handler operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101513` on your Sourcegraph instance. -*Managed by the [Sourcegraph Source team](https://handbook.sourcegraph.com/departments/engineering/teams/source).* +*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).*Technical details

@@ -38217,23 +39156,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101513` Query: ``` -sum by (op,scope)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op,scope)(increase(src_gitserver_client_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op,scope)(increase(src_gitserver_client_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum by (op)(increase(src_batches_httpapi_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_batches_httpapi_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_batches_httpapi_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-### Frontend: Batches: dbstore stats +### Frontend: Out-of-band migrations: up migration invocation (one batch processed) -#### frontend: batches_dbstore_total +#### frontend: oobmigration_total -

Aggregate store operations every 5m

+Migration handler operations every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101600` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38241,21 +39180,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101600` Query: ``` -sum(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_oobmigration_total{op="up",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: batches_dbstore_99th_percentile_duration +#### frontend: oobmigration_99th_percentile_duration -

Aggregate successful store operation duration distribution over 5m

+Aggregate successful migration handler operation duration distribution over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101601` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38263,21 +39202,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101601` Query: ``` -sum by (le)(rate(src_batches_dbstore_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum by (le)(rate(src_oobmigration_duration_seconds_bucket{op="up",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: batches_dbstore_errors_total +#### frontend: oobmigration_errors_total -

Aggregate store operation errors every 5m

+Migration handler operation errors every 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101602` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38285,21 +39224,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101602` Query: ``` -sum(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_oobmigration_errors_total{op="up",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: batches_dbstore_error_rate +#### frontend: oobmigration_error_rate -

Aggregate store operation error rate over 5m

+Migration handler operation error rate over 5m

This panel has no related alerts. To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101603` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38307,21 +39246,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101603` Query: ``` -sum(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_oobmigration_errors_total{op="up",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_oobmigration_total{op="up",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_oobmigration_errors_total{op="up",job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-#### frontend: batches_dbstore_total +### Frontend: Out-of-band migrations: down migration invocation (one batch processed) -

Store operations every 5m

+#### frontend: oobmigration_total + +Migration handler operations every 5m

This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101610` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101700` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38329,21 +39270,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101610` Query: ``` -sum by (op)(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_oobmigration_total{op="down",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: batches_dbstore_99th_percentile_duration +#### frontend: oobmigration_99th_percentile_duration -

99th percentile successful store operation duration over 5m

+Aggregate successful migration handler operation duration distribution over 5m

This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101611` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101701` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38351,21 +39292,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101611` Query: ``` -histogram_quantile(0.99, sum by (le,op)(rate(src_batches_dbstore_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) +sum by (le)(rate(src_oobmigration_duration_seconds_bucket{op="down",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: batches_dbstore_errors_total +#### frontend: oobmigration_errors_total -

Store operation errors every 5m

+Migration handler operation errors every 5m

This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101612` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101702` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38373,21 +39314,21 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101612` Query: ``` -sum by (op)(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(increase(src_oobmigration_errors_total{op="down",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) ```-#### frontend: batches_dbstore_error_rate +#### frontend: oobmigration_error_rate -

Store operation error rate over 5m

+Migration handler operation error rate over 5m

This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101613` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101703` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Code intelligence team](https://handbook.sourcegraph.com/departments/engineering/teams/code-intelligence).*Technical details

@@ -38395,23 +39336,25 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101613` Query: ``` -sum by (op)(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum by (op)(increase(src_batches_dbstore_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum by (op)(increase(src_batches_dbstore_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 +sum(increase(src_oobmigration_errors_total{op="down",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) / (sum(increase(src_oobmigration_total{op="down",job=~"^(frontend|sourcegraph-frontend).*"}[5m])) + sum(increase(src_oobmigration_errors_total{op="down",job=~"^(frontend|sourcegraph-frontend).*"}[5m]))) * 100 ```-### Frontend: Batches: service stats +### Frontend: Zoekt Configuration GRPC server metrics -#### frontend: batches_service_total +#### frontend: zoekt_configuration_grpc_request_rate_all_methods -

Aggregate service operations every 5m

+Request rate across all methods over 2m

+ +The number of gRPC requests received per second across all methods, aggregated across all instances. This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101700` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101800` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Search Platform team](https://handbook.sourcegraph.com/departments/engineering/teams/search/core).*Technical details

@@ -38419,21 +39362,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101700` Query: ``` -sum(increase(src_batches_service_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(rate(grpc_server_started_total{instance=~`${internalInstance:regex}`,grpc_service=~"sourcegraph.zoekt.configuration.v1.ZoektConfigurationService"}[2m])) ```-#### frontend: batches_service_99th_percentile_duration +#### frontend: zoekt_configuration_grpc_request_rate_per_method -

Aggregate successful service operation duration distribution over 5m

+Request rate per-method over 2m

+ +The number of gRPC requests received per second broken out per method, aggregated across all instances. This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101701` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101801` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Search Platform team](https://handbook.sourcegraph.com/departments/engineering/teams/search/core).*Technical details

@@ -38441,21 +39386,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101701` Query: ``` -sum by (le)(rate(src_batches_service_duration_seconds_bucket{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +sum(rate(grpc_server_started_total{grpc_method=~`${zoekt_configuration_method:regex}`,instance=~`${internalInstance:regex}`,grpc_service=~"sourcegraph.zoekt.configuration.v1.ZoektConfigurationService"}[2m])) by (grpc_method) ```-#### frontend: batches_service_errors_total +#### frontend: zoekt_configuration_error_percentage_all_methods -

Aggregate service operation errors every 5m

+Error percentage across all methods over 2m

+ +The percentage of gRPC requests that fail across all methods, aggregated across all instances. This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101702` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101810` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Search Platform team](https://handbook.sourcegraph.com/departments/engineering/teams/search/core).*Technical details

@@ -38463,21 +39410,23 @@ To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101702` Query: ``` -sum(increase(src_batches_service_errors_total{job=~"^(frontend|sourcegraph-frontend).*"}[5m])) +(100.0 * ( (sum(rate(grpc_server_handled_total{grpc_code!="OK",instance=~`${internalInstance:regex}`,grpc_service=~"sourcegraph.zoekt.configuration.v1.ZoektConfigurationService"}[2m]))) / (sum(rate(grpc_server_handled_total{instance=~`${internalInstance:regex}`,grpc_service=~"sourcegraph.zoekt.configuration.v1.ZoektConfigurationService"}[2m]))) )) ```-#### frontend: batches_service_error_rate +#### frontend: zoekt_configuration_grpc_error_percentage_per_method -

Aggregate service operation error rate over 5m

+Error percentage per-method over 2m

+ +The percentage of gRPC requests that fail per method, aggregated across all instances. This panel has no related alerts. -To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101703` on your Sourcegraph instance. +To see this panel, visit `/-/debug/grafana/d/frontend/frontend?viewPanel=101811` on your Sourcegraph instance. -*Managed by the [Sourcegraph Code Search team](https://handbook.sourcegraph.com/departments/engineering/teams/code-search).* +*Managed by the [Sourcegraph Search Platform team](https://handbook.sourcegraph.com/departments/engineering/teams/search/core).*Technical details