Ever since he played some of his first video games on the Windows 95 tower his father brought home from work, Paul Chiou has found a bit of freedom in computers. When he was a child, console systems like PlayStation and Nintendo 64 were off limits in his house, but the computer was different. It could be used for homework … and perhaps a bit of surreptitious gaming on the side.

Nowadays, Chiou is working on his Ph.D. in computer science at the USC Viterbi School of Engineering, where his research focuses on automatically finding and fixing keyboard accessibility issues in both web and Android apps using dynamic program analysis—an effort that he says is among the first of its kind. He’s been working on this problem with his research partner, Ali Alotaibi, and Professor William G.J. Halfond since his first semester in the Ph.D. program. Chiou said he chose this topic because he could relate.

“I have a personal connection to inaccessibility. I only have access to the mouse, so I know how it feels to be excluded from access to input devices,” says Chiou. “Keyboard accessibility immediately stood out to me because I realized there are people out there who are the complete opposite of me—who only can use a keyboard and not a mouse. I completely understand the hardship and inconvenience that they face every day.”

Chiou has been paralyzed from the neck down since an accident when he was 15 years old, and he now uses a computer with a combination of ready-made and custom assistive technologies to do everything from writing and coding to playing video games. Dr. Halfond said that working with Chiou has made him realize how he’d taken user interfaces for granted.

“Seeing Paul struggle with the problems he's trying to fix has made me aware of how inaccessible so much software is,” says Halfond. “Seeing the functionality that’s unavailable to people using websites with a keyboard has been eye-opening.”

Check out the video below—the latest in GitHub’s Coding Accessibility video series—and keep reading to learn how Chiou develops custom computer interfaces to help him research, code, collaborate, and compete online.

A copy of this video with audio descriptions of visual content is also available on YouTube.

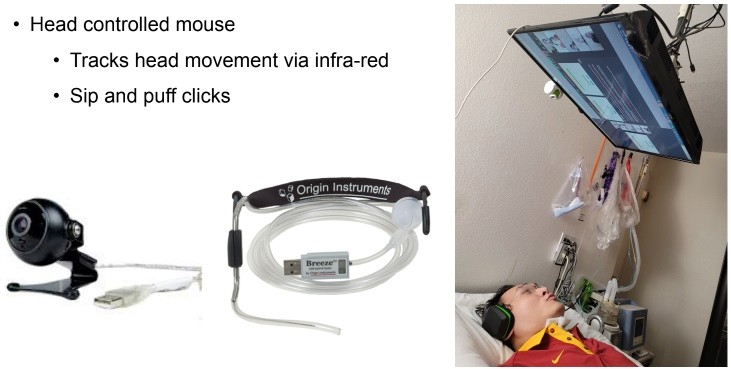

The hardware Chiou uses to interact with his computer varies depending on the situation. For example, when he’s in bed, he uses two devices that, when combined, behave “just like a mouse.” First, there’s a gadget called TrackerPro that uses a camera to track his head and control the mouse pointer. Then there’s Breeze, a sensor that detects pressure changes in a tube: he sips from the tube to left-click and puffs into it to right-click.

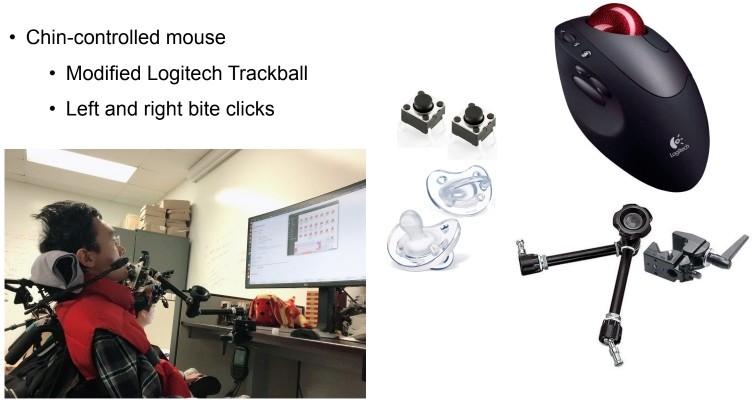

When he’s in his wheelchair, he uses a more portable, custom setup. He controls the mouse pointer with a device his father fashioned for him: a disassembled Logitech trackball, mounted on a Manfrotto arm, that he rolls with his chin. To right- or left-click, he bites one of two pacifiers containing mini switches. Whatever the hardware, Chiou then uses an on-screen keyboard (OSK) to type.

Although he might describe his solution as equivalent to the mouse anyone might use, he also explains that the equivalence is situational.

“As someone who doesn't have access to the keyboard, I perceive that ‘access’ isn't a binary term and can be defined in varying amounts,” says Chiou. For example, some applications might only allow keyboard access when in full-screen mode, but not show the OSK. When that happens, the application suddenly becomes inaccessible to him. “I’d be completely stuck, unable to return to the accessible environment without someone's help.”

Just as he draws on his own experience to tackle keyboard accessibility in his research, he brings the same creativity to his daily life. One example is his effort to compete more effectively in the multiplayer online battle arena game Dota 2.

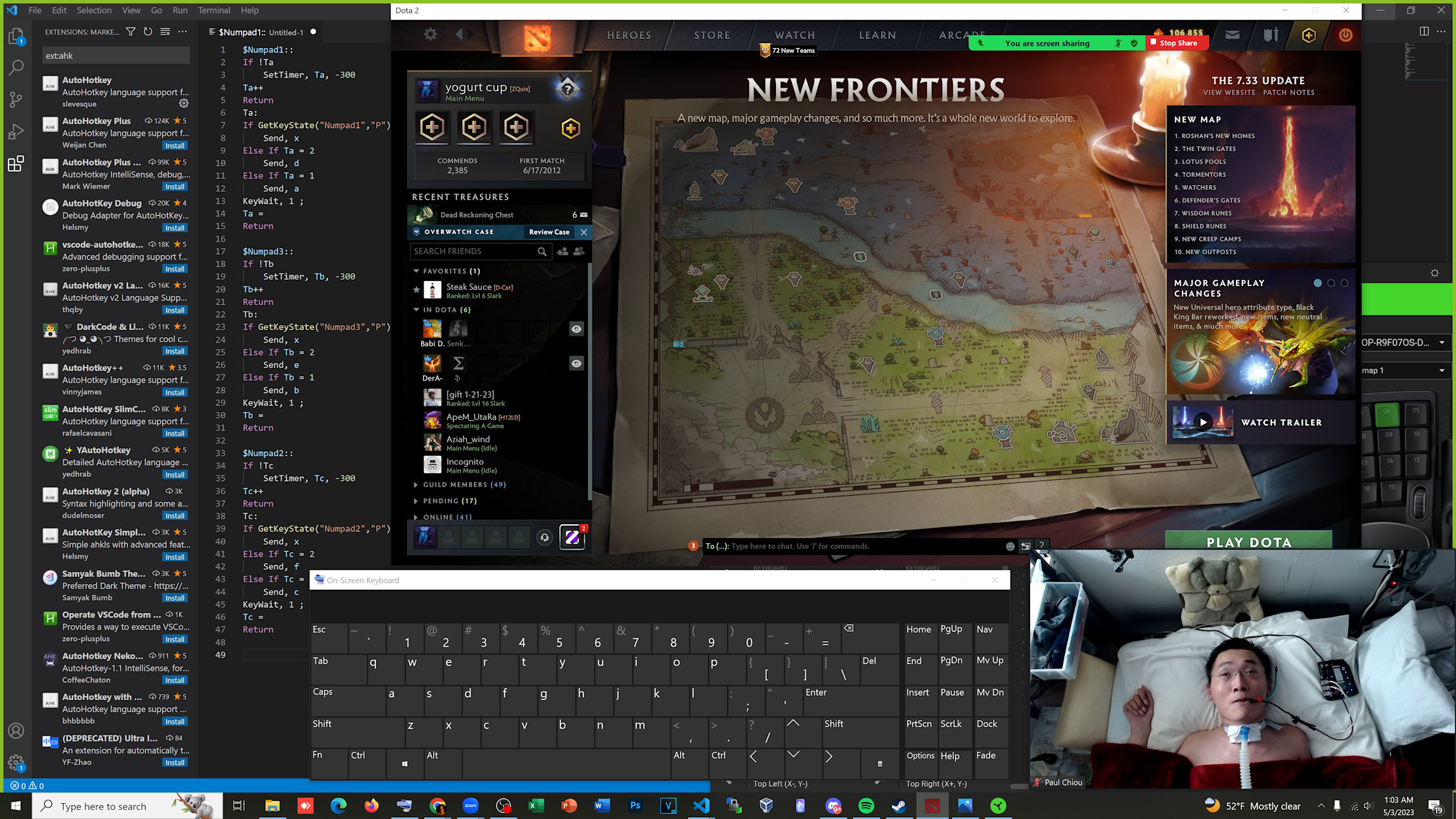

Able-bodied players, he explains, use a mouse with one hand to move around and a keyboard with the other to instantly cast spells using hotkeys. Chiou, by contrast, moves around the map by moving the mouse pointer and sipping and puffing, but to cast a spell, he has to move the cursor to the spell buttons at the bottom of the screen, click on them, and move the cursor back to the map to either place the spell or continue moving. Given the real-time nature of the game, this was an obvious barrier, so he began developing a solution.

At first, he worked with his father to create two bite switches to act as hotkeys to cast spells, but it was too much to sip and puff and bite, all at the same time. They moved on to mounting switches for him to press using his cheeks, but again ran into difficulties when he’d hit the switches accidentally while moving the mouse with his head. The third prototype, however, landed on a better solution: a switch he can push with his tongue that was originally designed for skydivers to take pictures when they can’t use their hands. They wired up two switches for two hotkeys, and his gameplay significantly improved.

“Then I got greedy and thought, ‘Can I do better?’” says Chiou. And the answer was yes, he could. He wrote a small program using the AutoKey project to effectively turn one button into two, and suddenly he had four hotkeys.
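How Chiou's program splits one button into two isn't described here. One common approach, shown purely as an assumption, is to tell short presses from long presses so that each physical switch yields two hotkeys. The sketch below is plain Python, not AutoKey's scripting API, and the switch names, key bindings, and 0.35-second threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold: a press held at least this long counts as "long".
LONG_PRESS_SECONDS = 0.35


@dataclass(frozen=True)
class Press:
    """One press of a physical switch, with timestamps in seconds."""
    switch: str          # hypothetical switch name: "left" or "right"
    pressed_at: float
    released_at: float


# Hypothetical bindings: two switches x two press kinds = four hotkeys.
HOTKEYS = {
    ("left", "short"): "q",
    ("left", "long"): "w",
    ("right", "short"): "e",
    ("right", "long"): "r",
}


def classify(press, threshold=LONG_PRESS_SECONDS):
    """Label a press as "short" or "long" based on how long it was held."""
    held = press.released_at - press.pressed_at
    return "long" if held >= threshold else "short"


def hotkey_for(press):
    """Return the hotkey bound to this switch and press kind."""
    return HOTKEYS[(press.switch, classify(press))]
```

With these assumed bindings, a quick tap on the left switch maps to one key and a longer hold on the same switch maps to another, which is how two switches could cover four hotkeys.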

But still, he wanted more and realized he could do more with his tongue than just push. The most recent iteration evolved to include three four-way directional micro joysticks placed side-by-side in his mouth to give him access to 12 hotkeys.
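The arithmetic is simple: three joysticks with four directions each give 3 × 4 = 12 distinct inputs. A minimal sketch of such a binding table follows; the joystick labels and the keys assigned to them are hypothetical and are not Chiou's actual configuration.

```python
from itertools import product

# Hypothetical labels for the three joysticks and their four directions.
JOYSTICKS = ("left", "middle", "right")
DIRECTIONS = ("up", "down", "left", "right")

# Hypothetical keys, one per (joystick, direction) pair.
KEYS = ["q", "w", "e", "r", "d", "f", "z", "x", "c", "v", "b", "n"]

# Pair every joystick/direction combination with one key, in order.
BINDINGS = dict(zip(product(JOYSTICKS, DIRECTIONS), KEYS))


def joystick_hotkey(joystick, direction):
    """Look up the hotkey for a push of one joystick in one direction."""
    return BINDINGS[(joystick, direction)]
```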

“If I want something bad enough, creativity automatically kicks in to make things happen,” says Chiou. “This gaming project is another example of the stuff I create to accommodate my physical shortcomings. Over the years, my dad and I have made different projects, from helping me use the computer directly from my wheelchair (with a permanent setup without having to set up my laptop) to allowing me to do photography and videography on my own.”

While his computer input interface might be custom, Chiou says that his developer stack is what you would expect: Eclipse for Java, PyCharm for Python, and Visual Studio Code for other tasks, as well as the recent addition of ChatGPT and GitHub Copilot. He credits these last two to Alotaibi, noting that they use them to automate tasks, such as quickly writing a script that randomly distributes their user study surveys to participants, and to save time and effort on boilerplate code for common functions.
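The kind of survey-distribution script mentioned above might look something like the following. This is only a sketch under stated assumptions, not the team's actual script: it assumes each participant receives exactly one survey and that surveys should be spread as evenly as possible. The function name, participant IDs, and survey names are hypothetical.

```python
import random


def assign_surveys(participants, surveys, seed=None):
    """Randomly assign one survey to each participant, as evenly as possible.

    Participants are shuffled, then surveys are dealt out in rotation, so
    the counts per survey differ by at most one. A seed makes the result
    reproducible.
    """
    if not surveys:
        raise ValueError("at least one survey is required")
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return {
        person: surveys[index % len(surveys)]
        for index, person in enumerate(shuffled)
    }


# Hypothetical usage: six participants, three survey variants.
if __name__ == "__main__":
    people = ["P1", "P2", "P3", "P4", "P5", "P6"]
    for person, survey in sorted(assign_surveys(people, ["A", "B", "C"]).items()):
        print(person, survey)
```

Dealing surveys out in rotation after a shuffle keeps the groups balanced, which a purely independent random choice per participant would not guarantee.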

“It’s helpful to quickly get things started syntactically,” says Chiou. “These new AIs definitely make things easier on simple tasks.”

Similarly, his collaboration with his partners employs an expected set of tools, such as Asana, Slack, Zoom, Overleaf, Google Docs, and GitHub. And when Chiou needs to quickly write something on the whiteboard as they’re discussing ideas, he’ll open up an MS Paint window.

As a developer himself, he argues that paying attention to accessibility is a matter of ensuring quality software, and not something extra.

“Much attention has been focused on topics like security but little on accessibility. I believe every software developer has the obligation to make the software they create accessible,” says Chiou. “Just like software testing is important, and no developer would deploy their products without testing for bugs, I believe the same should be applied to accessibility bugs.”

Chiou says he feels good about being a software developer for several reasons.

“It’s something that I can still do, thanks to technology, despite my physical limitations,” says Chiou. “As an accessibility-conscious developer, I’m promoting accessibility so it can be understood by others and, hopefully, no longer has to be considered an optional feature by other developers. And as a developer who creates accessible software, I know I’m enabling others like myself and giving them an equal opportunity to enjoy a livelihood, just as able-bodied users do.”