|

27 | 27 | "\n", |

28 | 28 | "The OpenAI Assistants API lets developers build AI assistants that can use multiple tools and data sources in parallel, such as the code interpreter, file search, and custom tools defined through function calling. These assistants can access OpenAI's language models like GPT-4 with specific prompts, maintain persistent conversation histories, and process file formats such as text, images, and spreadsheets. Developers can fine-tune the language models on their own data and control aspects like output randomness. The API provides a framework for building AI applications that combine language understanding with external tools and data.\n", |

29 | 29 | "\n", |

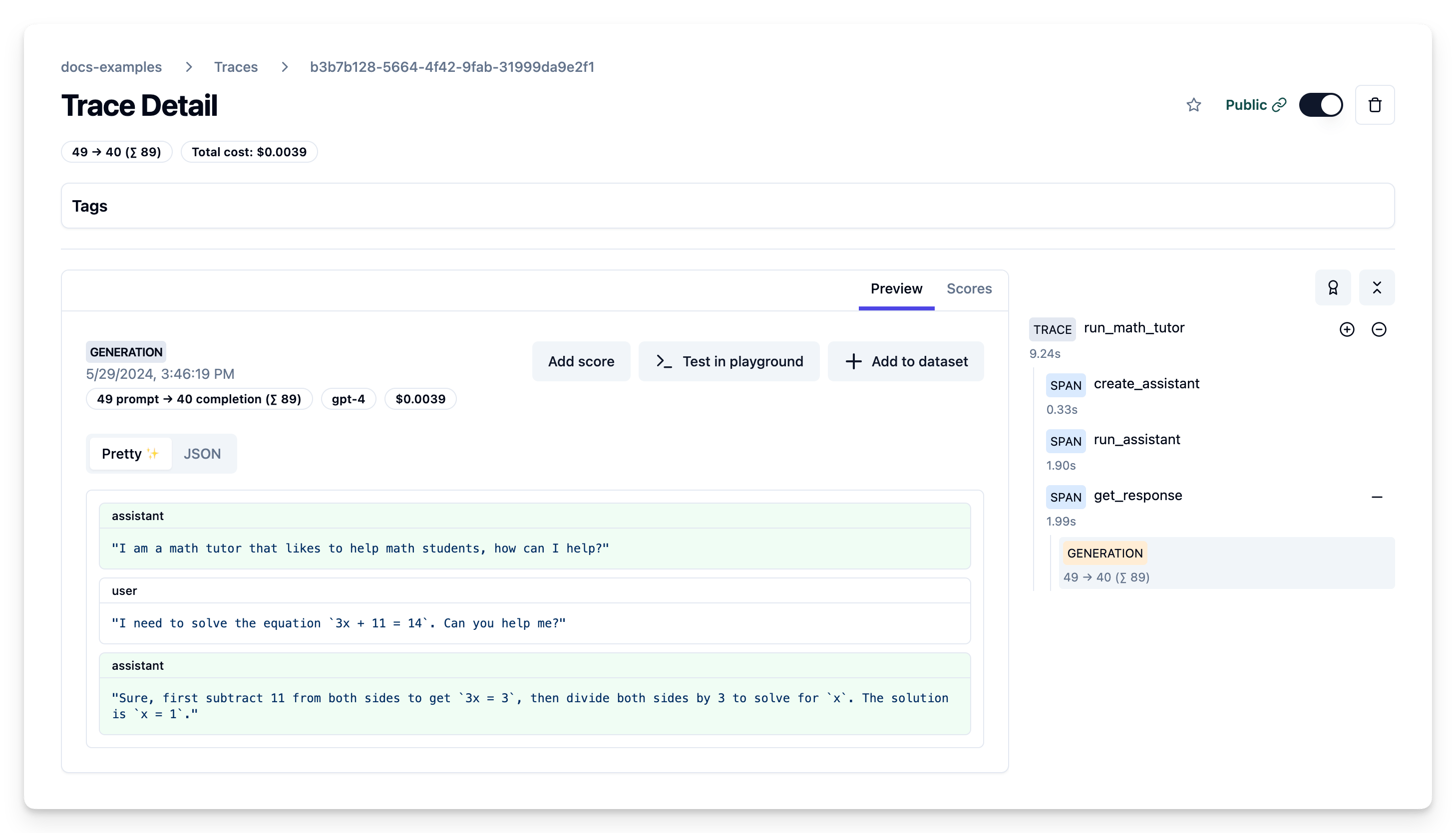

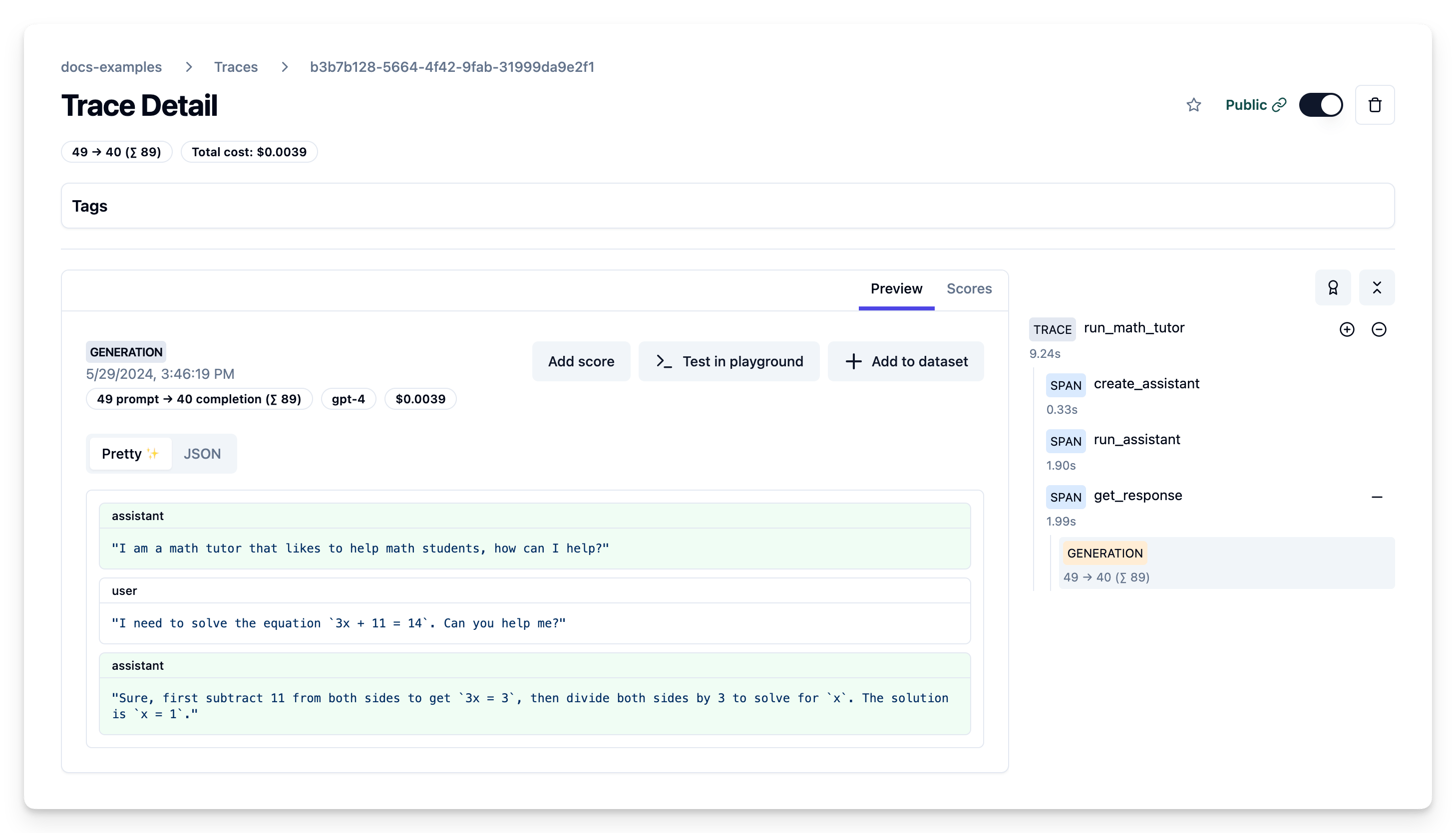

| 30 | + "## Example Trace Output\n", |

| 31 | + "\n", |

| 32 | + "\n", |

| 33 | + "\n", |

30 | 34 | "## Setup\n", |

31 | 35 | "\n", |

32 | 36 | "Install the required packages:" |

|

189 | 193 | "import json\n", |

190 | 194 | "from langfuse.decorators import langfuse_context\n", |

191 | 195 | "\n", |

192 | | - "@observe(as_type=\"generation\")\n", |

| 196 | + "@observe()\n", |

193 | 197 | "def get_response(thread_id, run_id):\n", |

194 | 198 | " client = OpenAI()\n", |

195 | 199 | " \n", |

|

206 | 210 | " thread_id=thread_id,\n", |

207 | 211 | " )\n", |

208 | 212 | " input_messages = [{\"role\": message.role, \"content\": message.content[0].text.value} for message in message_log.data[::-1][:-1]]\n", |

209 | | - " \n", |

210 | | - " langfuse_context.update_current_observation(\n", |

| 213 | + "\n", |

| 214 | + " # log internal generation within the openai assistant as a separate child generation to langfuse\n", |

| 215 | + " # get langfuse client used by the decorator, uses the low-level Python SDK\n", |

| 216 | + " langfuse_client = langfuse_context._get_langfuse()\n", |

| 217 | + " # pass trace_id and current observation ids to the newly created child generation\n", |

| 218 | + " langfuse_client.generation(\n", |

| 219 | + " trace_id=langfuse_context.get_current_trace_id(),\n", |

| 220 | + " parent_observation_id=langfuse_context.get_current_observation_id(),\n", |

211 | 221 | " model=run.model,\n", |

212 | 222 | " usage=run.usage,\n", |

213 | 223 | " input=input_messages,\n", |

|

216 | 226 | " \n", |

217 | 227 | " return assistant_response, run\n", |

218 | 228 | "\n", |

219 | | - "# wrapper function as we want get_response to be a generation to track tokens\n", |

220 | | - "# -> generations need to have a parent trace\n", |

221 | | - "@observe()\n", |

222 | | - "def get_response_trace(thread_id, run_id):\n", |

223 | | - " return get_response(thread_id, run_id)\n", |

224 | | - "\n", |

225 | | - "response = get_response_trace(thread.id, run.id)\n", |

| 229 | + "response = get_response(thread.id, run.id)\n", |

226 | 230 | "print(f\"Assistant response: {response[0]}\")" |

227 | 231 | ] |

228 | 232 | }, |

229 | 233 | { |

230 | 234 | "cell_type": "markdown", |

231 | 235 | "metadata": {}, |

232 | 236 | "source": [ |

233 | | - "**[Public link of example trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/3020450b-e9b7-4c12-b4fe-7288b6324118?observation=a083878e-73dd-4c47-867e-db4e23050fac) of fetching the response**" |

| 237 | + "**[Public link of example trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/e0933ea5-6806-4eb7-aed8-a42d23c57096?observation=401fb816-22e5-45ac-a4c9-e437b120f2e7) of fetching the response**" |

234 | 238 | ] |

235 | 239 | }, |

236 | 240 | { |

|

246 | 250 | "metadata": {}, |

247 | 251 | "outputs": [], |

248 | 252 | "source": [ |

| 253 | + "import time\n", |

| 254 | + "\n", |

249 | 255 | "@observe()\n", |

250 | 256 | "def run_math_tutor(user_input):\n", |

251 | 257 | " assistant = create_assistant()\n", |

252 | 258 | " run, thread = run_assistant(assistant.id, user_input)\n", |

| 259 | + "\n", |

| 260 | + " time.sleep(5) # notebook only, wait for the assistant to finish\n", |

| 261 | + "\n", |

253 | 262 | " response = get_response(thread.id, run.id)\n", |

254 | 263 | " \n", |

255 | 264 | " return response[0]\n", |

|
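Review note: the fixed `time.sleep(5)` added above is marked as notebook-only. Outside a notebook, one common alternative is to poll the run status until it stops changing. The following is a minimal sketch of that idea, not part of this change. The helper name `wait_for_run`, its parameters, and the set of terminal statuses are assumptions made for illustration, and the API call is replaced by a stand-in so the snippet runs offline.

```python
import time
from types import SimpleNamespace

# Statuses after which a run is assumed not to change any more (illustrative list).
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete"}


def wait_for_run(retrieve_run, poll_interval=1.0, timeout=60.0):
    """Call retrieve_run() until the run reaches a terminal status or the timeout elapses.

    retrieve_run is any zero-argument callable returning an object with a .status attribute.
    """
    deadline = time.monotonic() + timeout
    while True:
        run = retrieve_run()
        if run.status in TERMINAL_STATUSES:
            return run
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still '{run.status}' after {timeout} seconds")
        time.sleep(poll_interval)


# Stand-in for the real API call: reports queued, then in_progress, then completed.
_fake_statuses = iter(["queued", "in_progress", "completed"])


def fake_retrieve():
    return SimpleNamespace(status=next(_fake_statuses))


finished = wait_for_run(fake_retrieve, poll_interval=0.01)
print(finished.status)
```

With the real client, `retrieve_run` could plausibly be something like `lambda: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)`; treat that as an assumption to check against the installed OpenAI SDK version. A run that needs tool outputs (`requires_action`) is not handled here and would end in the timeout.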

265 | 274 | "source": [ |

266 | 275 | "The Langfuse trace shows the flow of creating the assistant, running it on a thread with user input, and retrieving the response, along with the captured input/output data.\n", |

267 | 276 | "\n", |

268 | | - "**[Public link of example trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/1b2d53ad-f5d2-4f1e-9121-628b5ca1b5b2)**\n", |

269 | | - "\n" |

| 277 | + "**[Public link of example trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/b3b7b128-5664-4f42-9fab-31999da9e2f1)**\n", |

| 278 | + "\n", |

| 279 | + "" |

| 280 | + ] |

| 281 | + }, |

| 282 | + { |

| 283 | + "cell_type": "markdown", |

| 284 | + "metadata": {}, |

| 285 | + "source": [ |

| 286 | + "## Learn more\n", |

| 287 | + "\n", |

| 288 | + "If you use non-Assistants API endpoints, you can use the OpenAI SDK wrapper for tracing. Check out the [Langfuse documentation](https://langfuse.com/docs/integrations/openai/python/get-started) for more details." |

270 | 289 | ] |

271 | 290 | } |

272 | 291 | ], |

|
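Review note: the `input_messages` comprehension in `get_response` slices `message_log.data[::-1][:-1]`, which is easy to misread. The sketch below illustrates what that slice does, assuming the message list is returned newest first. The `make_message` helper and the sample messages are hypothetical stand-ins that mimic only the attributes the notebook reads (`role` and `content[0].text.value`).

```python
from types import SimpleNamespace


def make_message(role, text):
    # Hypothetical stand-in for an API message object.
    return SimpleNamespace(
        role=role,
        content=[SimpleNamespace(text=SimpleNamespace(value=text))],
    )


# Assumed newest-first order: the assistant's answer comes before the user's question.
data = [
    make_message("assistant", "x = 1"),
    make_message("user", "Solve 3x + 11 = 14"),
]

chronological = data[::-1]      # oldest message first
latest = chronological[-1]      # the most recent message, excluded from the input
input_messages = [
    {"role": m.role, "content": m.content[0].text.value}
    for m in chronological[:-1]
]

print(input_messages)
print(latest.role)
```

Under that ordering assumption, the reversal puts the conversation in chronological order and the trailing `[:-1]` drops the newest message, so the logged input contains only what preceded the latest reply.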
