
# Langfuse Datasets Cookbook

In this cookbook, we'll iterate on system prompts with the goal of getting the model to return only the capital of a given country. We use Langfuse datasets to store a list of example inputs and expected outputs.

This is a very simple example; you can run experiments on any LLM application that you trace either with the [Langfuse SDKs](https://langfuse.com/docs/sdk) (Python, JS/TS) or via one of our [integrations](https://langfuse.com/docs/integrations) (e.g. LangChain).

_Simple example application_

- **Model**: gpt-3.5-turbo
- **Input**: country name
- **Output**: capital
- **Evaluation**: exact match of completion and ground truth
- **Experiment on**: system prompt

## Setup

```python
%pip install langfuse openai langchain --upgrade
```

```python
import os

# get the keys for your project from https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = ""
os.environ["LANGFUSE_SECRET_KEY"] = ""

# your OpenAI key
os.environ["OPENAI_API_KEY"] = ""

# Your host; defaults to https://cloud.langfuse.com
# For the US data region, set it to "https://us.cloud.langfuse.com"
# For a local self-hosted instance, e.g.:
# os.environ["LANGFUSE_HOST"] = "http://localhost:3000"
```
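
`Langfuse()` and the OpenAI client read these variables when they are created or first used. An empty string may therefore only show up later as an authentication error. Below is a minimal pre-flight check. The helper name `missing_env_vars` and the list of required variables are our own assumptions, not part of either SDK.

```python
import os

# Assumed set of variables this cookbook relies on; adjust for your setup.
REQUIRED_ENV_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY", "OPENAI_API_KEY")


def missing_env_vars(names=REQUIRED_ENV_VARS):
    """Return the names of variables that are unset or set to an empty string."""
    return [name for name in names if not os.environ.get(name)]


missing = missing_env_vars()
if missing:
    print("Set these environment variables before continuing:", ", ".join(missing))
```

`LANGFUSE_HOST` is left out on purpose because it has a default.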

```python
# import
from langfuse import Langfuse
import openai

# init: the client reads the LANGFUSE_* environment variables set above
langfuse = Langfuse()
```

## Create a dataset

```python
langfuse.create_dataset(name="capital_cities");
```

### Items

Load the local items into the Langfuse dataset. Alternatively, you can add items from production via the Langfuse UI.

```python
# example items; inputs and expected outputs can be any JSON-serializable value, not only strings
local_items = [
    {"input": {"country": "Italy"}, "expected_output": "Rome"},
    {"input": {"country": "Spain"}, "expected_output": "Madrid"},
    {"input": {"country": "Brazil"}, "expected_output": "Brasília"},
    {"input": {"country": "Japan"}, "expected_output": "Tokyo"},
    {"input": {"country": "India"}, "expected_output": "New Delhi"},
    {"input": {"country": "Canada"}, "expected_output": "Ottawa"},
    {"input": {"country": "South Korea"}, "expected_output": "Seoul"},
    {"input": {"country": "Argentina"}, "expected_output": "Buenos Aires"},
    {"input": {"country": "South Africa"}, "expected_output": "Pretoria"},
    {"input": {"country": "Egypt"}, "expected_output": "Cairo"},
]
```
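
The items above are hard-coded. If you keep them in a file instead, here is a minimal sketch for loading them. It assumes a JSON file containing a list of objects with `input` and (optionally) `expected_output` keys. The file layout and the helper names `parse_local_items` and `load_local_items` are hypothetical.

```python
import json
from pathlib import Path


def parse_local_items(text):
    """Parse a JSON array of {"input": ..., "expected_output": ...} objects."""
    items = json.loads(text)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array of items")
    for i, item in enumerate(items):
        # "input" is required; "expected_output" is optional, as in the upload loop
        if not isinstance(item, dict) or "input" not in item:
            raise ValueError(f"item {i} has no 'input' field")
    return items


def load_local_items(path):
    """Read and parse a JSON file of dataset items."""
    return parse_local_items(Path(path).read_text(encoding="utf-8"))
```

With such a file in place, `local_items = load_local_items("capital_cities.json")` would replace the literal list. The file name is only an example.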

```python
# Upload to Langfuse
for item in local_items:
    langfuse.create_dataset_item(
        dataset_name="capital_cities",
        # any Python object or value
        input=item["input"],
        # any Python object or value, optional
        expected_output=item["expected_output"],
    )
```
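
This sketch assumes that each `create_dataset_item` call without an id creates a new item. Under that assumption, running the upload cell twice would duplicate the dataset. If the SDK version you use accepts an `id` argument on `create_dataset_item` (verify this against the Langfuse SDK reference), a deterministic id derived from the dataset name and the input is one way to make re-runs idempotent. `stable_item_id` is a hypothetical helper.

```python
import hashlib
import json


def stable_item_id(dataset_name, item_input):
    """Derive a deterministic id from the dataset name and the item's input (hypothetical helper)."""
    # sort_keys makes the hash independent of dict key order
    payload = json.dumps({"dataset": dataset_name, "input": item_input}, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:32]


# Possible usage, assuming `id` is supported by create_dataset_item:
# for item in local_items:
#     langfuse.create_dataset_item(
#         dataset_name="capital_cities",
#         id=stable_item_id("capital_cities", item["input"]),
#         input=item["input"],
#         expected_output=item["expected_output"],
#     )
```

The dataset name is part of the hash, so the same input stored in two datasets gets two different ids.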

## Define application and run experiments

We implement the application in two ways to demonstrate how it's done:

1. Custom LLM app using, e.g., the OpenAI SDK, traced with the Langfuse Python SDK
2. LangChain application, traced via the native Langfuse integration

```python
# We use a very simple evaluation here: exact string match (1.0 = match, 0.0 = no match).
# You can use any eval library instead;
# see https://langfuse.com/docs/scores/model-based-evals for details.
def simple_evaluation(output, expected_output):
    return float(output == expected_output)
```
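
Exact match is strict: a completion such as `Rome.` or ` rome` scores 0 even though it names the right city. If you want to separate formatting from correctness, a more lenient variant could normalize both strings first. Keep in mind that this changes what the experiment measures, since the prompts are partly about getting a bare city name. Below is a minimal sketch. `normalized_match` and its rules (trim whitespace, drop trailing periods, ignore case) are our own choice, not part of Langfuse.

```python
def normalize_answer(text):
    """Trim surrounding whitespace, drop trailing periods, and case-fold."""
    return text.strip().rstrip(".").strip().casefold()


def normalized_match(output, expected_output):
    """Return 1.0 if both strings are equal after normalization, else 0.0."""
    return float(normalize_answer(output) == normalize_answer(expected_output))
```

Accents are not folded, so `Brasilia` still does not match `Brasília`.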

### Custom app

```python
from datetime import datetime

def run_my_custom_llm_app(input, system_prompt):
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": input["country"]},
    ]

    generation_start_time = datetime.now()

    openai_completion = openai.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=messages,
    ).choices[0].message.content

    # log the call as a Langfuse generation so it can be linked to a dataset run
    langfuse_generation = langfuse.generation(
        name="guess-countries",
        input=messages,
        output=openai_completion,
        model="gpt-3.5-turbo",
        start_time=generation_start_time,
        end_time=datetime.now(),
    )

    return openai_completion, langfuse_generation
```

```python
def run_experiment(experiment_name, system_prompt):
    dataset = langfuse.get_dataset("capital_cities")

    for item in dataset.items:
        completion, langfuse_generation = run_my_custom_llm_app(item.input, system_prompt)

        # link the generation to this dataset item under the run name
        # (pass the observation/generation object or its id)
        item.link(langfuse_generation, experiment_name)

        langfuse_generation.score(
            name="exact_match",
            value=simple_evaluation(completion, item.expected_output),
        )

    # send all queued events to Langfuse
    langfuse.flush()
```
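
Each experiment follows the same pattern:

1. Fetch the dataset items.
2. Run the application on each input.
3. Link the result to a named run.
4. Attach a score.

To check the run-and-score part of that loop without calling OpenAI or Langfuse, you can dry-run it against a stubbed application. Below is a minimal sketch. `dry_run_experiment`, the `SimpleNamespace` stand-ins for dataset items, and the stub app are all hypothetical.

```python
from types import SimpleNamespace


def dry_run_experiment(items, app, evaluate):
    """Run `app` on every item and score it with `evaluate`; returns (input, output, score) tuples."""
    results = []
    for item in items:
        output = app(item.input)
        results.append((item.input, output, evaluate(output, item.expected_output)))
    return results


# Stand-ins for Langfuse dataset items: only `.input` and `.expected_output` are used.
fake_items = [
    SimpleNamespace(input={"country": "Italy"}, expected_output="Rome"),
    SimpleNamespace(input={"country": "Canada"}, expected_output="Ottawa"),
]

# A stub "model" that answers with a famous city rather than the capital.
famous_city = {"Italy": "Rome", "Canada": "Toronto"}


def stub_app(item_input):
    return famous_city[item_input["country"]]


def exact_match(output, expected_output):
    return float(output == expected_output)


results = dry_run_experiment(fake_items, stub_app, exact_match)
print(results)
```

The Langfuse version above adds the two pieces this sketch omits: linking each result to the run name and recording the score on the generation.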

```python
run_experiment(
    "famous_city",
    "The user will input countries, respond with the most famous city in this country"
)
run_experiment(
    "directly_ask",
    "What is the capital of the following country?"
)
run_experiment(
    "asking_specifically",
    "The user will input countries, respond with only the name of the capital"
)
run_experiment(
    "asking_specifically_2nd_try",
    "The user will input countries, respond with only the name of the capital. State only the name of the city."
)
```

### LangChain application

```python
from langchain.chat_models import ChatOpenAI
from langchain.schema import HumanMessage, SystemMessage

def run_my_langchain_llm_app(input, system_message, callback_handler):
    # the system message carries the instruction; the user message is the country name
    messages = [
        SystemMessage(content=system_message),
        HumanMessage(content=input),
    ]
    # the Langfuse callback handler traces this call
    chat = ChatOpenAI(model="gpt-3.5-turbo", callbacks=[callback_handler])
    completion = chat.invoke(messages)

    return completion.content
```

```python
def run_langchain_experiment(experiment_name, system_message):
    dataset = langfuse.get_dataset("capital_cities")

    for item in dataset.items:
        # callback handler whose trace is linked to this dataset item under the run name
        handler = item.get_langchain_handler(run_name=experiment_name)

        completion = run_my_langchain_llm_app(item.input["country"], system_message, handler)

        handler.root_span.score(
            name="exact_match",
            value=simple_evaluation(completion, item.expected_output),
        )

    # send all queued events to Langfuse
    langfuse.flush()
```

```python
run_langchain_experiment(
    "langchain_famous_city",
    "The user will input countries, respond with the most famous city in this country"
)
run_langchain_experiment(
    "langchain_directly_ask",
    "What is the capital of the following country?"
)
run_langchain_experiment(
    "langchain_asking_specifically",
    "The user will input countries, respond with only the name of the capital"
)
run_langchain_experiment(
    "langchain_asking_specifically_2nd_try",
    "The user will input countries, respond with only the name of the capital. State only the name of the city."
)
```

| 232 | + |

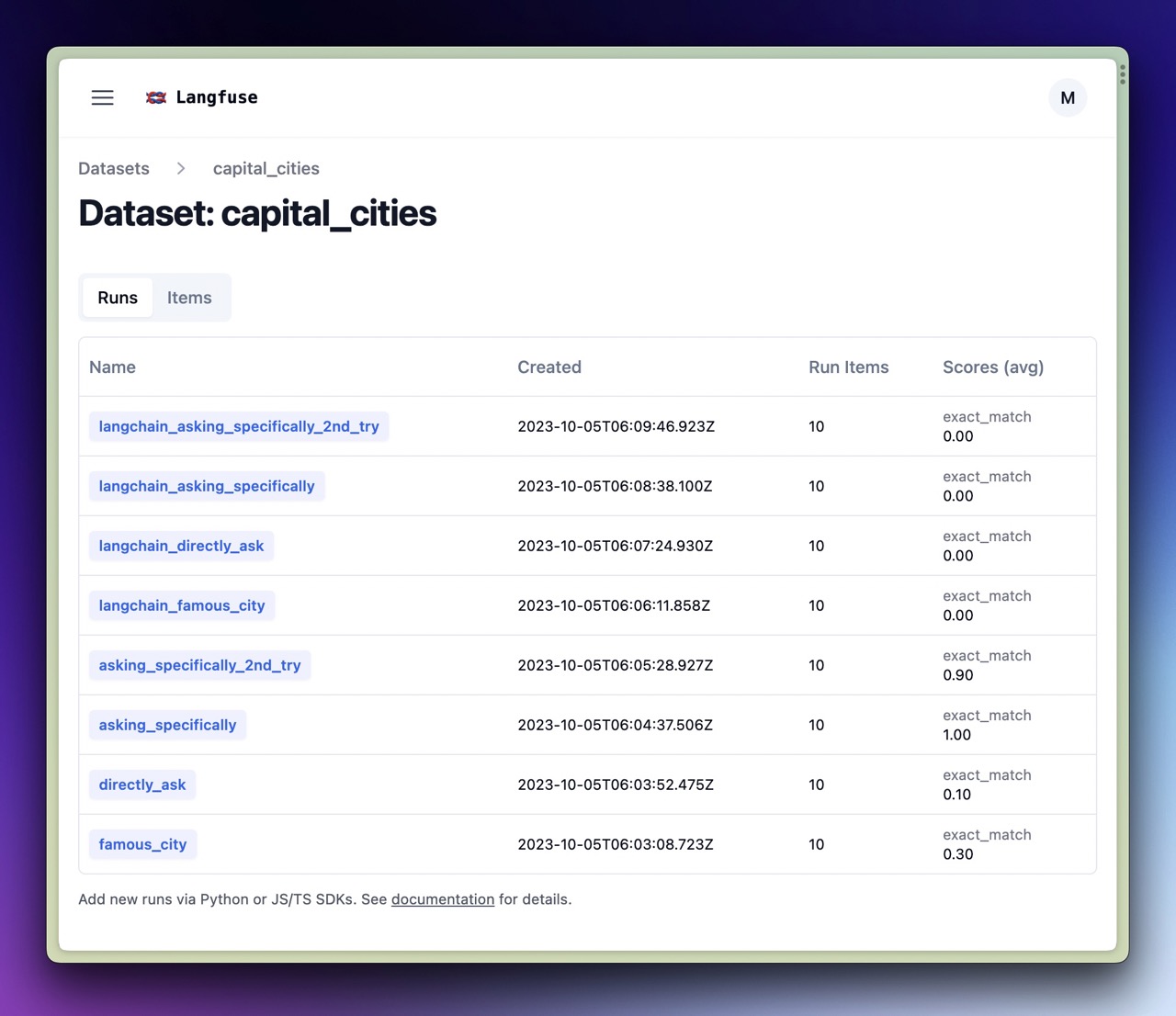

| 233 | +## Evaluate experiments in Langfuse UI |

| 234 | + |

| 235 | +- Average scores per experiment run |

| 236 | +- Browse each run for an individual item |

| 237 | +- Look at traces to debug issues |
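
The UI aggregates the `exact_match` scores per run. You can also collect the scores locally, for example by appending each `simple_evaluation` result to a list inside the experiment loop. The same per-run averages and the best-performing run can then be computed in a few lines. Below is a minimal sketch. The `scores_by_run` structure is an assumption, and the example values are placeholders, not real experiment results.

```python
from statistics import mean


def average_scores(scores_by_run):
    """Map each run name to the mean of its scores (runs without scores are skipped)."""
    return {run: mean(scores) for run, scores in scores_by_run.items() if scores}


def best_run(scores_by_run):
    """Return the run name with the highest average score."""
    averages = average_scores(scores_by_run)
    return max(averages, key=averages.get)


# Placeholder scores for illustration only, not actual experiment results.
example = {
    "run_a": [1.0, 0.0, 1.0, 1.0],
    "run_b": [1.0, 1.0, 1.0, 1.0],
}
print(average_scores(example))
print(best_run(example))
```

`best_run` raises `ValueError` if no run has any scores, which is usually preferable to silently picking an arbitrary run.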
