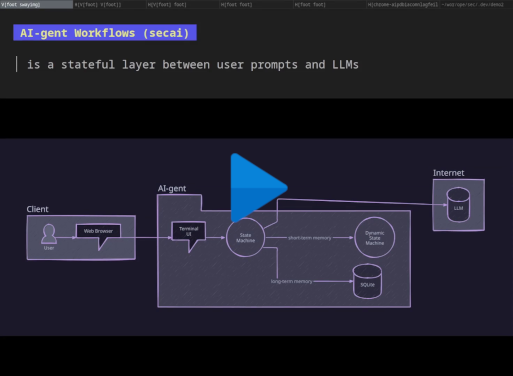

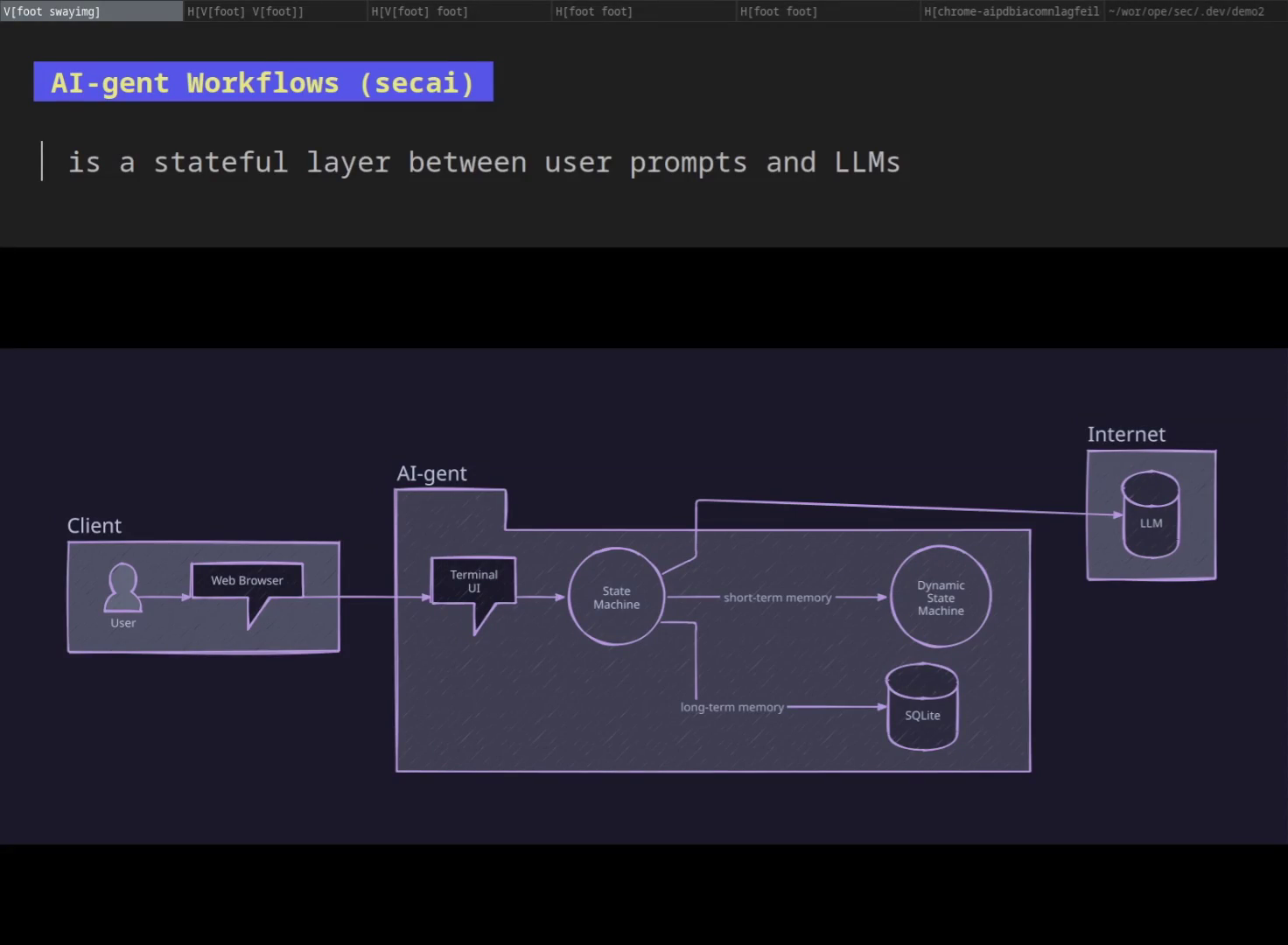

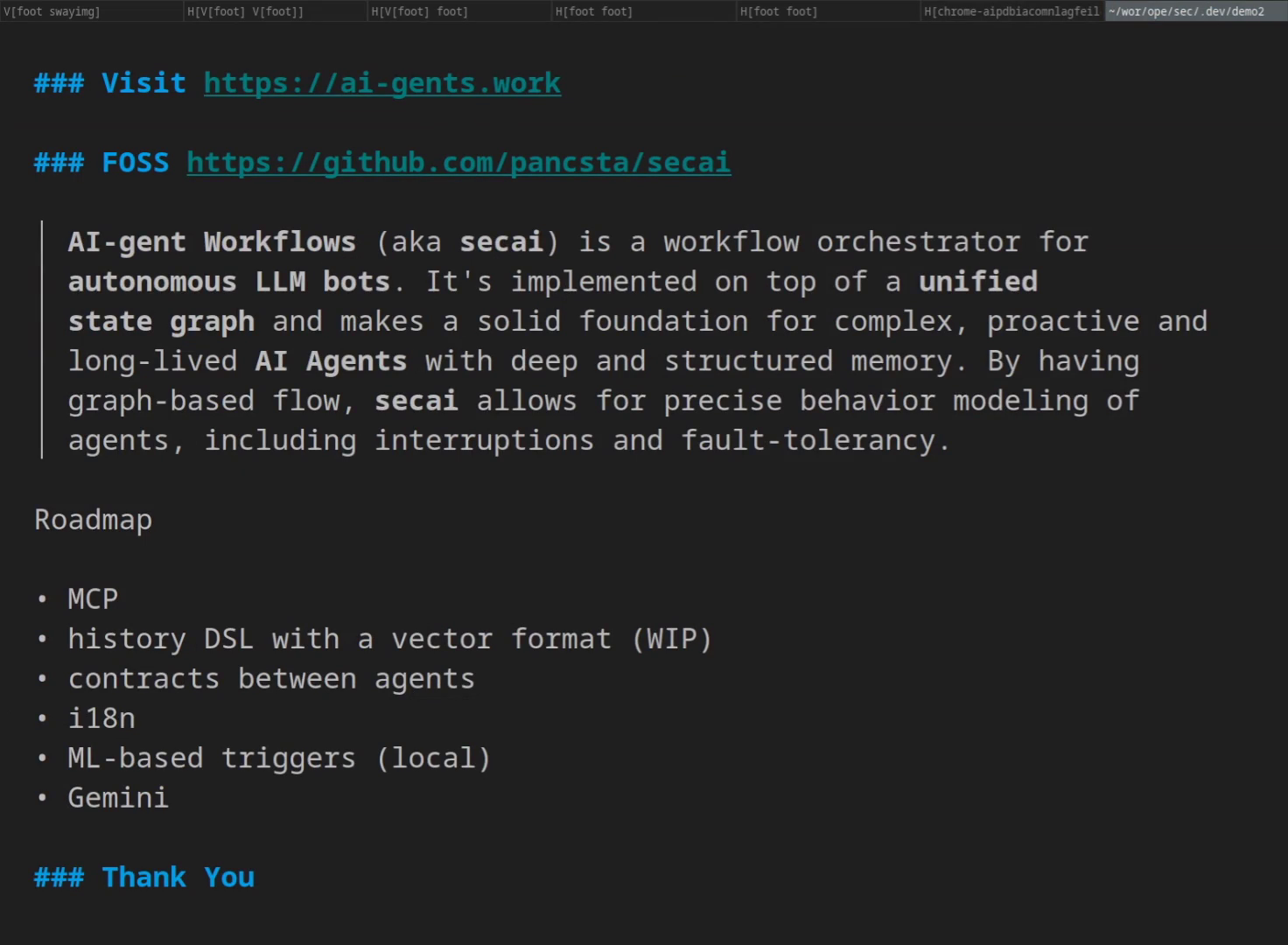

AI-gent Workflows (aka secai) is a platform for AI Agents with a local reasoning layer. It's implemented on top of a unified state graph and makes a solid foundation for complex, proactive, and long-lived AI Agents with deep and structured memory. It offers a dedicated set of devtools and is written in the Go programming language. Its graph-based flow allows for precise modeling of agent behavior, including interruptions and fault tolerance.

Screenshots and YouTube are also available.

Note

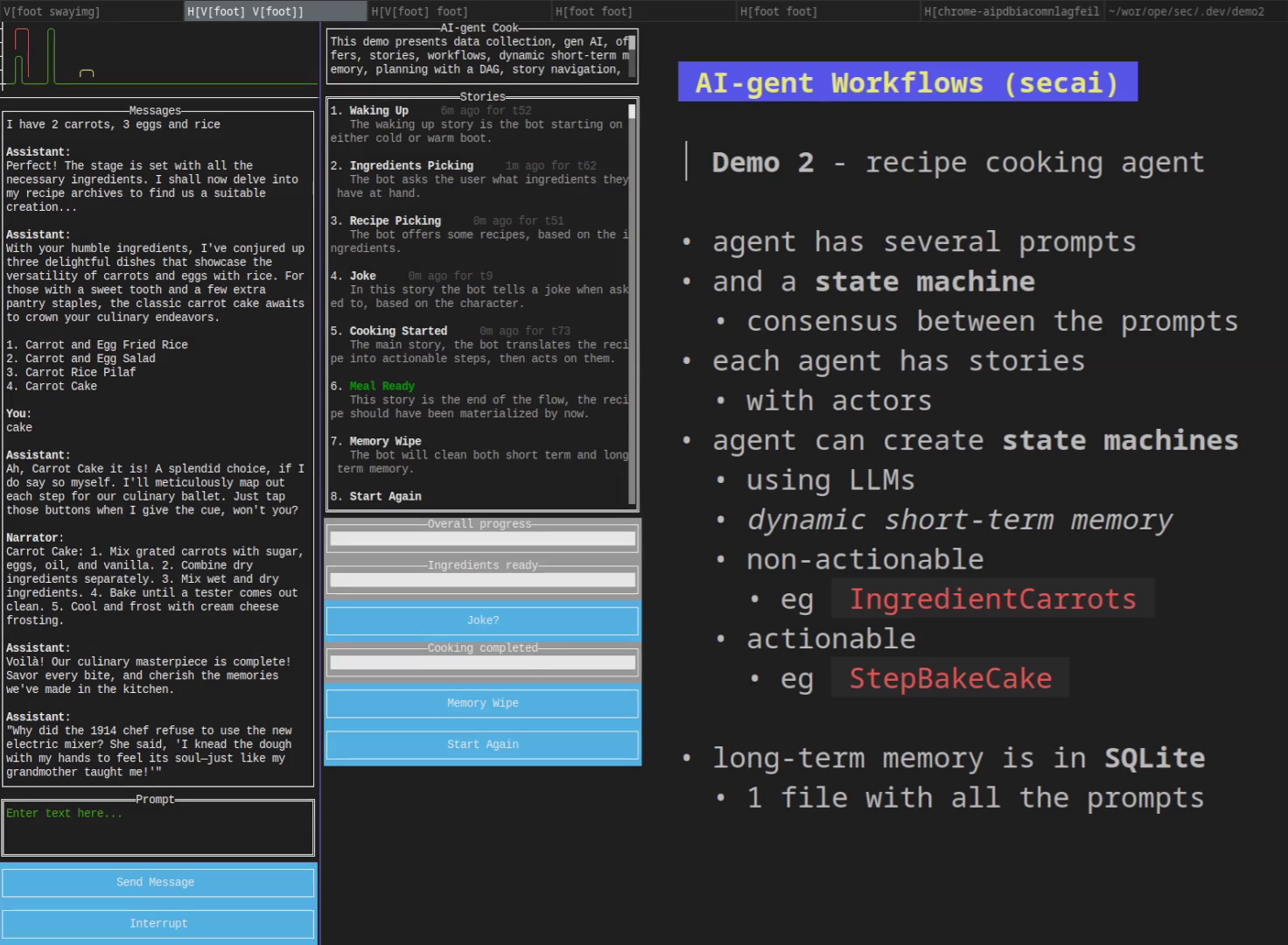

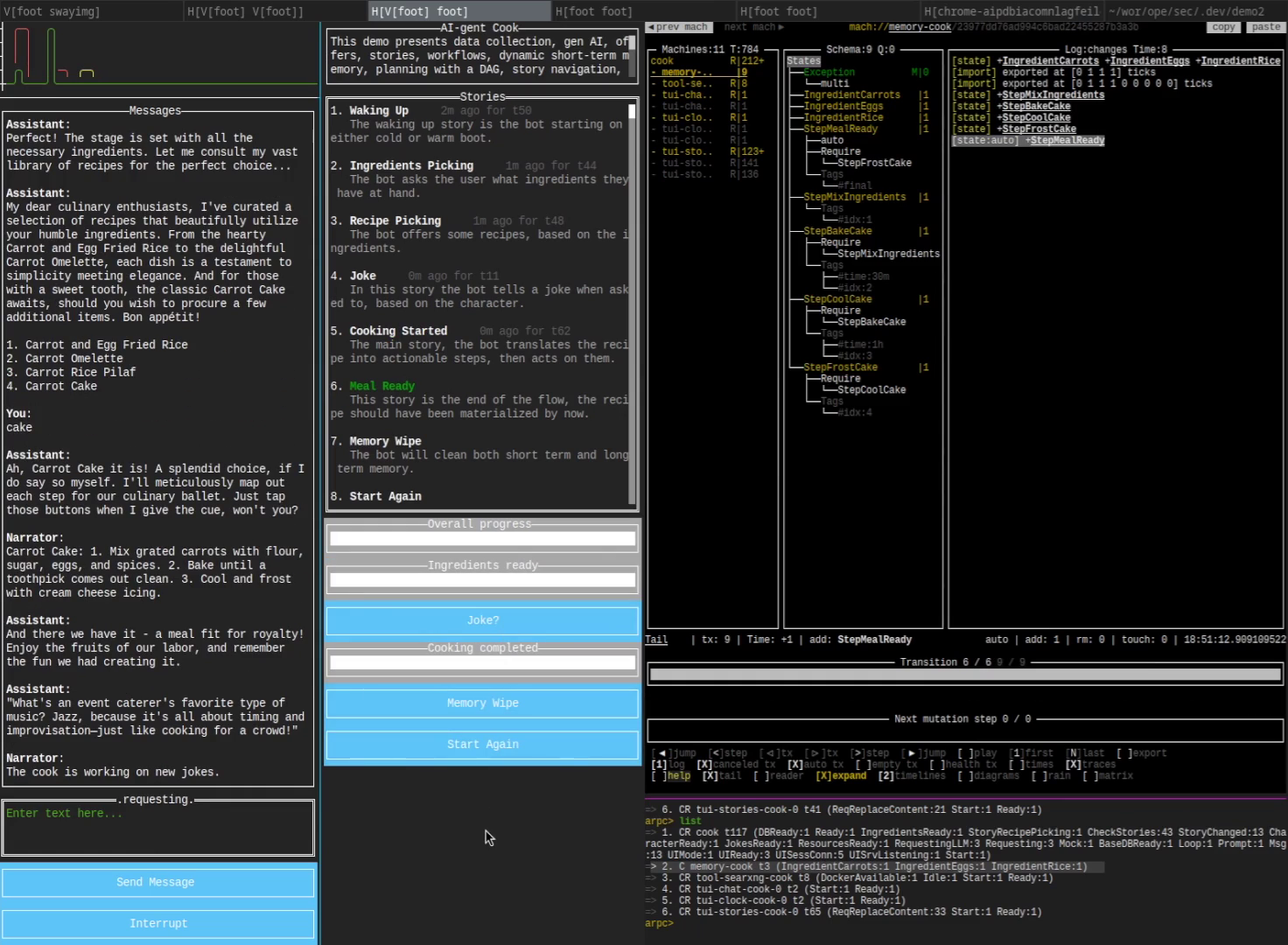

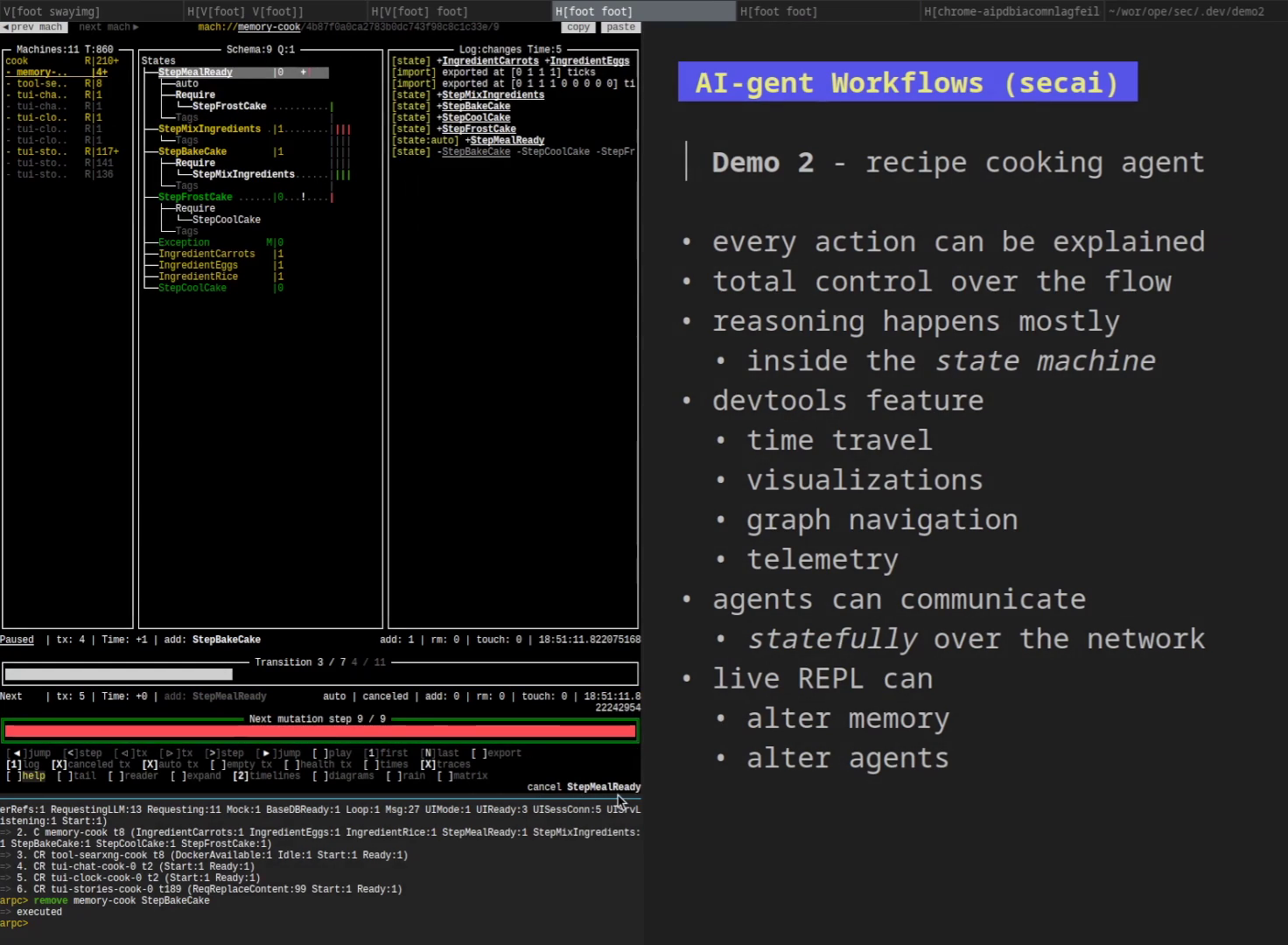

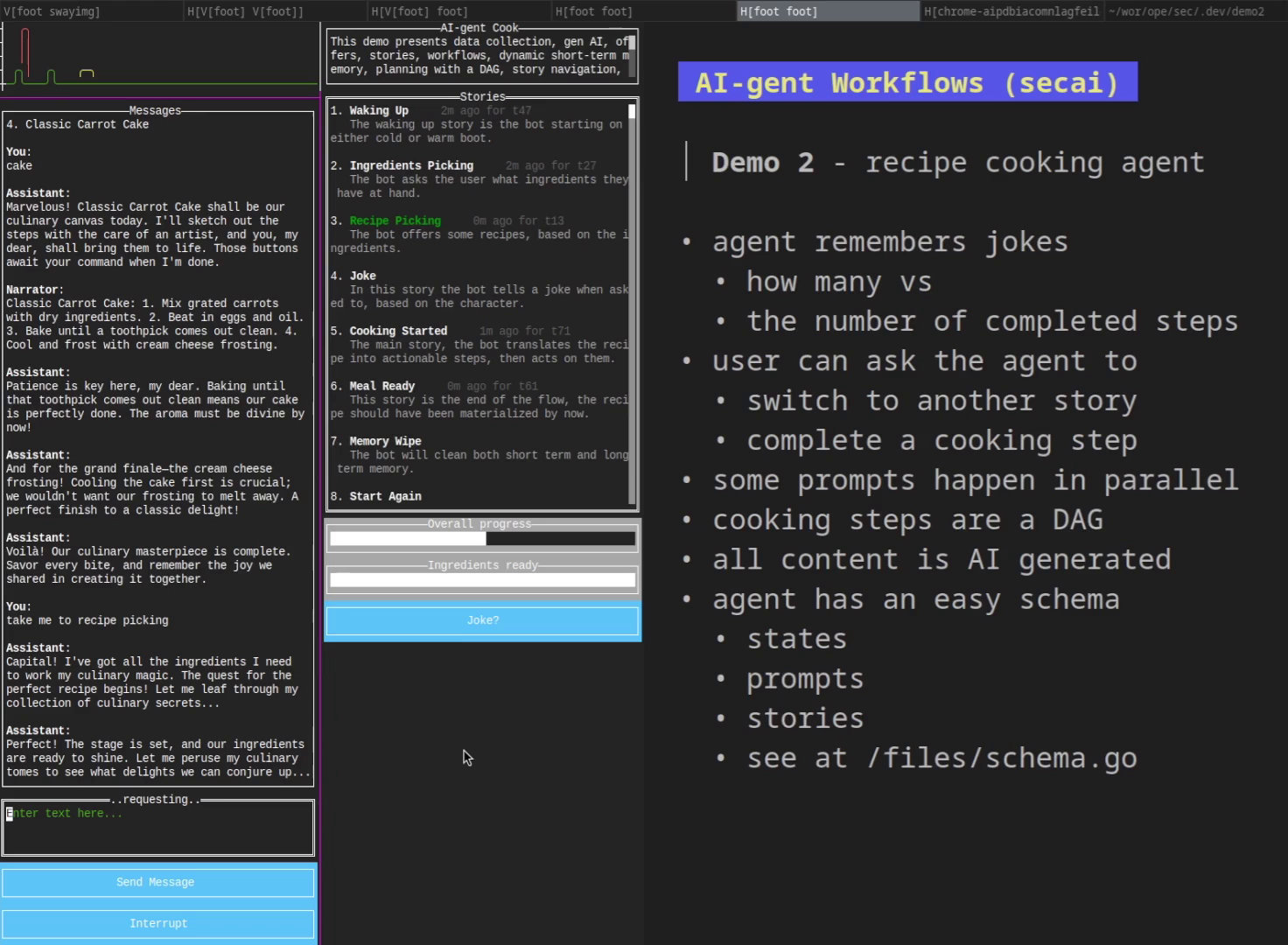

This user demo is a 7min captions-only presentation, showcasing a cooking assistant which helps to pick a recipe from ingredients AND cook it.

Screenshots and YouTube are also available.

Note

This tech demo is a 5min captions-only screencast, showcasing all 9 ways an agent can be seen, in addition to the classic chat view.

- prompt atomicity on the state level

- each state can have a prompt bound to it, with dedicated history and documents

- atomic consensus with relations and negotiation

- states excluding each other can't be active simultaneously

- separate schema DSL layer

- suitable for non-coding authors

- declarative flow definitions

- for non-linear flows

- cancellation support (interrupts)

- offer list / menu

- prompt history

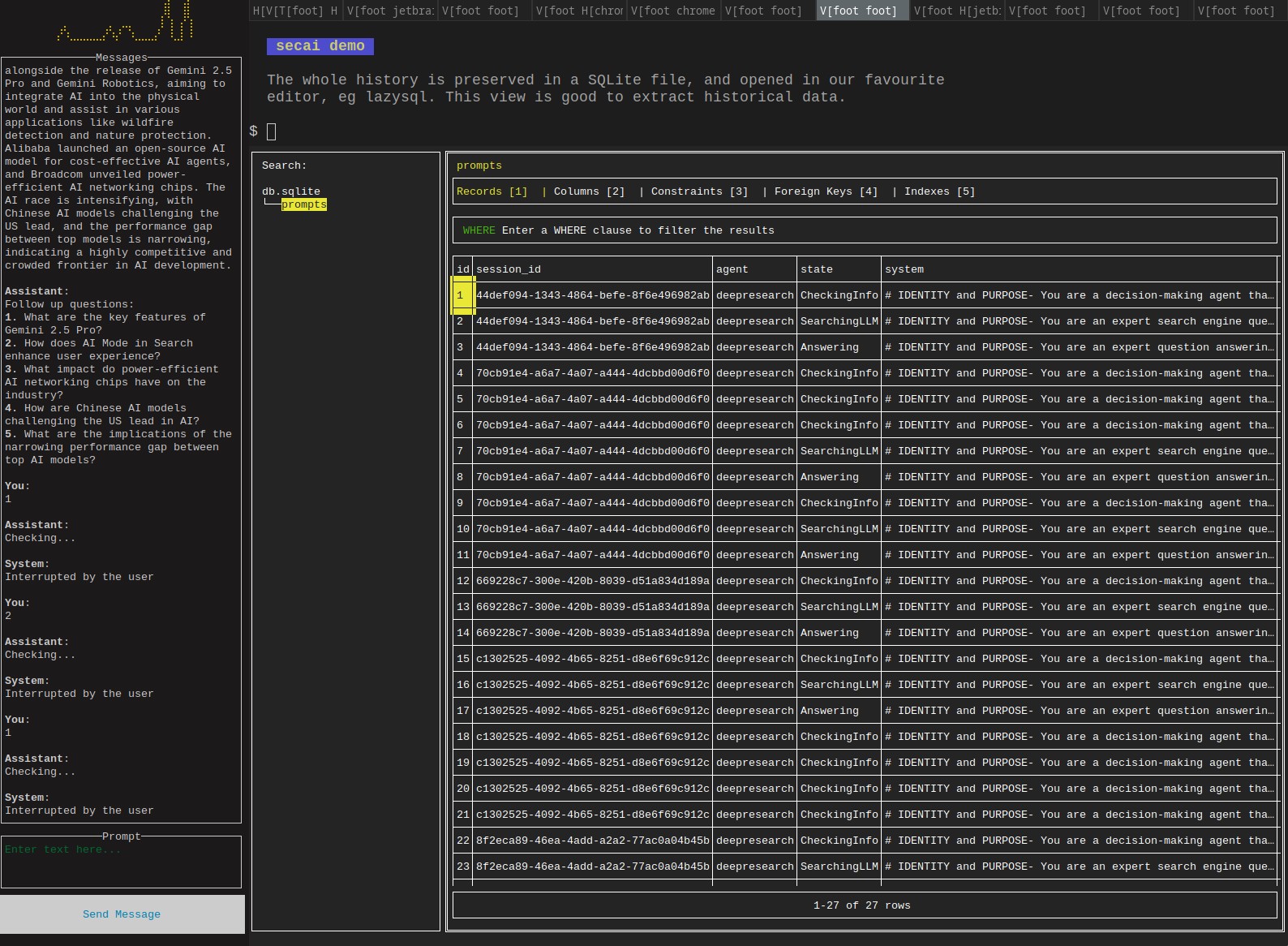

- in SQL (embedded SQLite)

- in JSONL (stdout)

- proactive stories with actors

- LLM triggers (orienting)

- on prompts and timeouts

- dynamic flow graph for the memory

- LLM creates an actionable state machine

- UI components

- layouts (zellij)

- chat (tview)

- stories (cview)

- clock (bubbletea)

- platforms

- SSH (all platforms)

- Desktop PWA (all platforms)

- Mobile PWA (basic)
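The "atomic consensus" item above, where states excluding each other can't be active simultaneously, can be illustrated with a minimal sketch. This is not the asyncmachine-go implementation: `Relation`, `Machine`, `Add`, and `Is` are hypothetical names, the state names are borrowed from the research example, and real negotiation is richer than this single check.

```go
package main

import "fmt"

// Relation is a simplified, hypothetical stand-in for one state's schema entry.
type Relation struct {
	Require []string // states that must already be active
	Remove  []string // states deactivated when this one activates
}

// Machine tracks active states and applies relations on activation.
type Machine struct {
	schema map[string]Relation
	active map[string]bool
}

// NewMachine creates a machine with no active states.
func NewMachine(schema map[string]Relation) *Machine {
	return &Machine{schema: schema, active: map[string]bool{}}
}

// Add activates a state if all of its requirements are active.
// It returns false, and changes nothing, when the request is rejected.
func (m *Machine) Add(state string) bool {
	rel, ok := m.schema[state]
	if !ok {
		return false
	}
	for _, req := range rel.Require {
		if !m.active[req] {
			return false
		}
	}
	// Deactivate excluded states first, so a state listed in its own
	// Remove group still ends up active.
	for _, rm := range rel.Remove {
		delete(m.active, rm)
	}
	m.active[state] = true
	return true
}

// Is reports whether a state is currently active.
func (m *Machine) Is(state string) bool { return m.active[state] }

func main() {
	info := []string{"CheckingInfo", "NeedMoreInfo"} // mutually exclusive group
	m := NewMachine(map[string]Relation{
		"Start":        {},
		"CheckingInfo": {Require: []string{"Start"}, Remove: info},
		"NeedMoreInfo": {Require: []string{"Start"}, Remove: info},
	})

	fmt.Println(m.Add("CheckingInfo")) // false: Start is not active yet
	m.Add("Start")
	m.Add("CheckingInfo")
	m.Add("NeedMoreInfo") // pushes CheckingInfo out
	fmt.Println(m.Is("CheckingInfo"), m.Is("NeedMoreInfo")) // false true
}
```

Because exclusion is declared once in the schema, no handler code has to remember to switch the competing state off.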

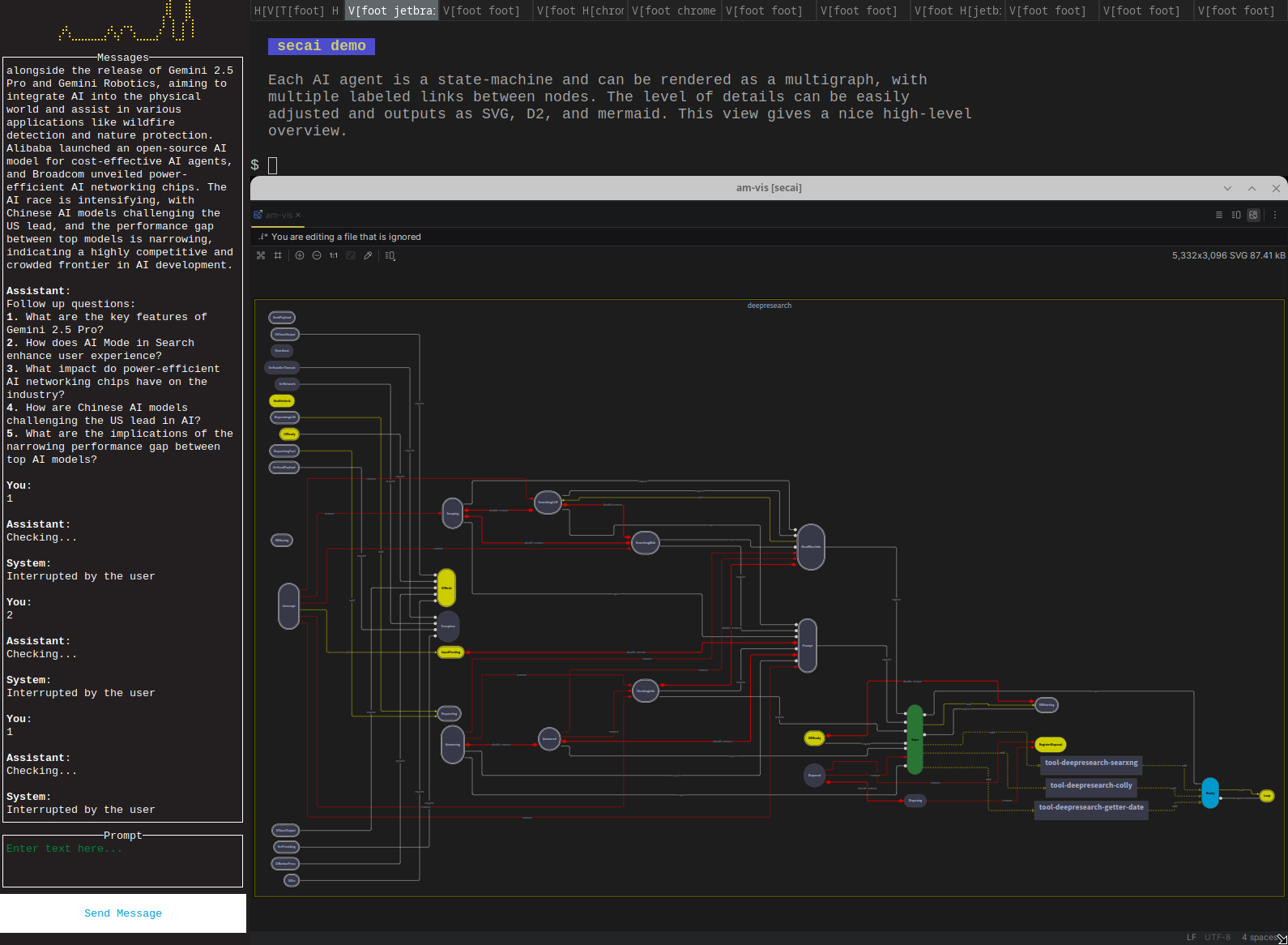

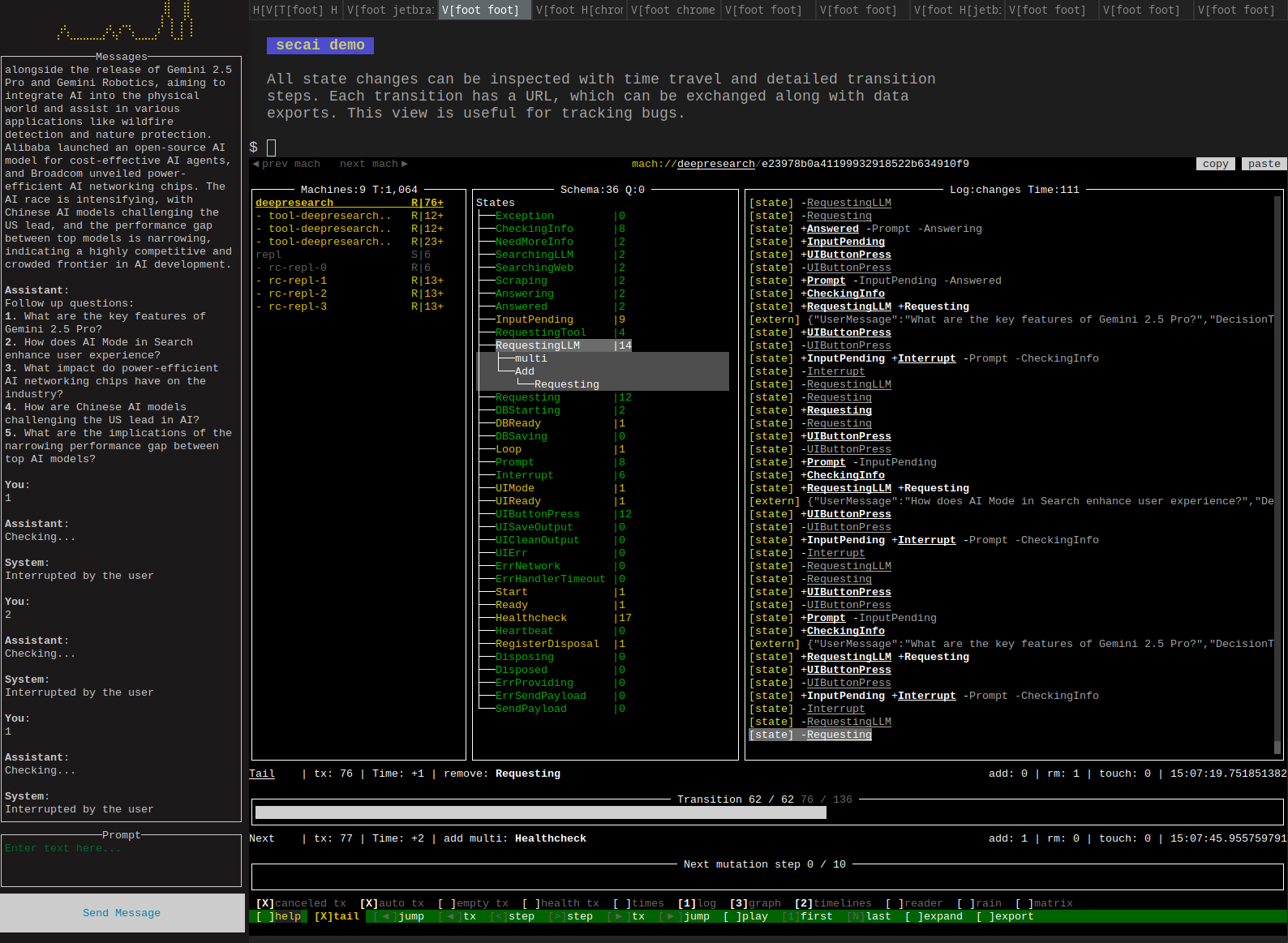

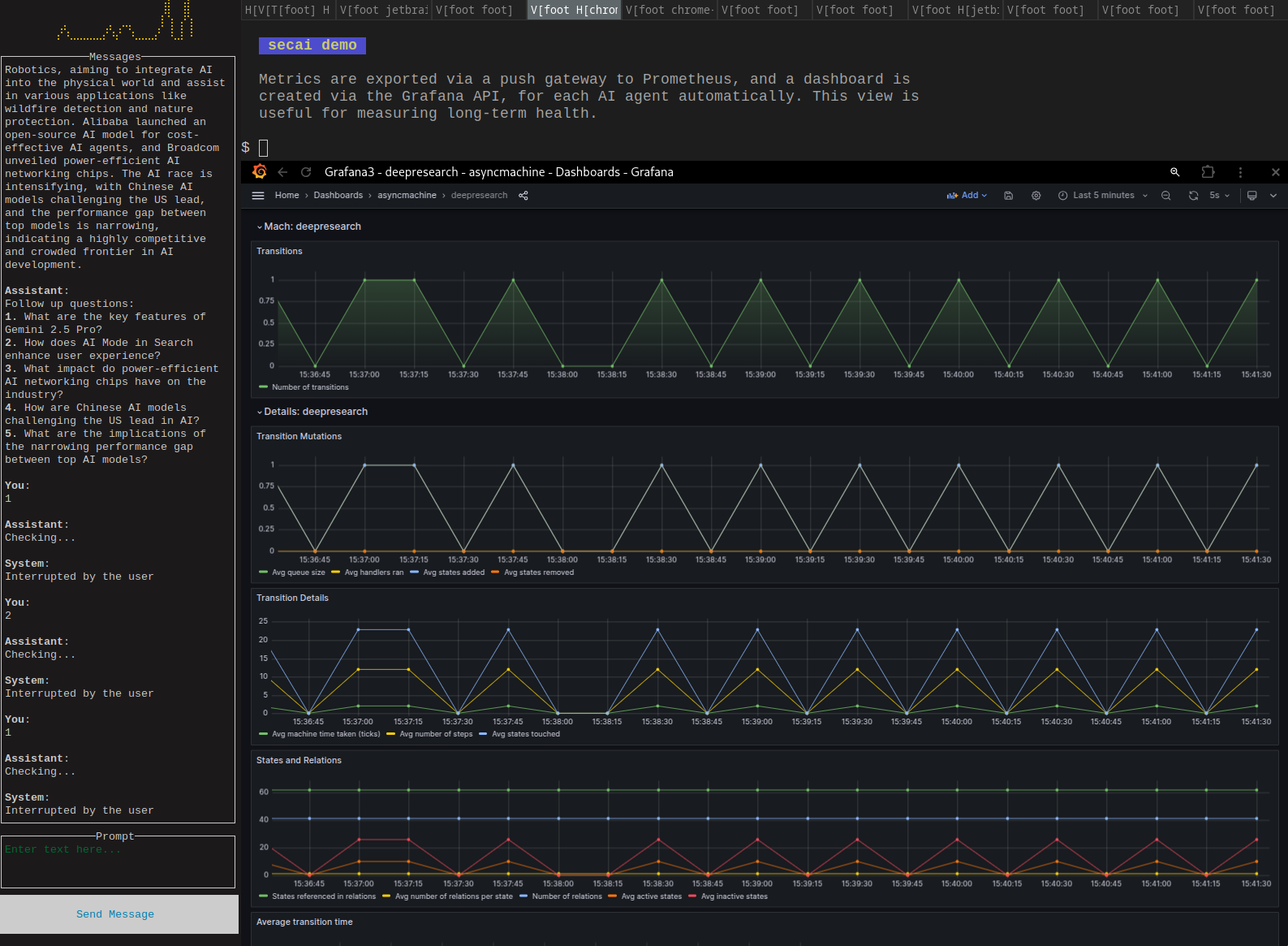

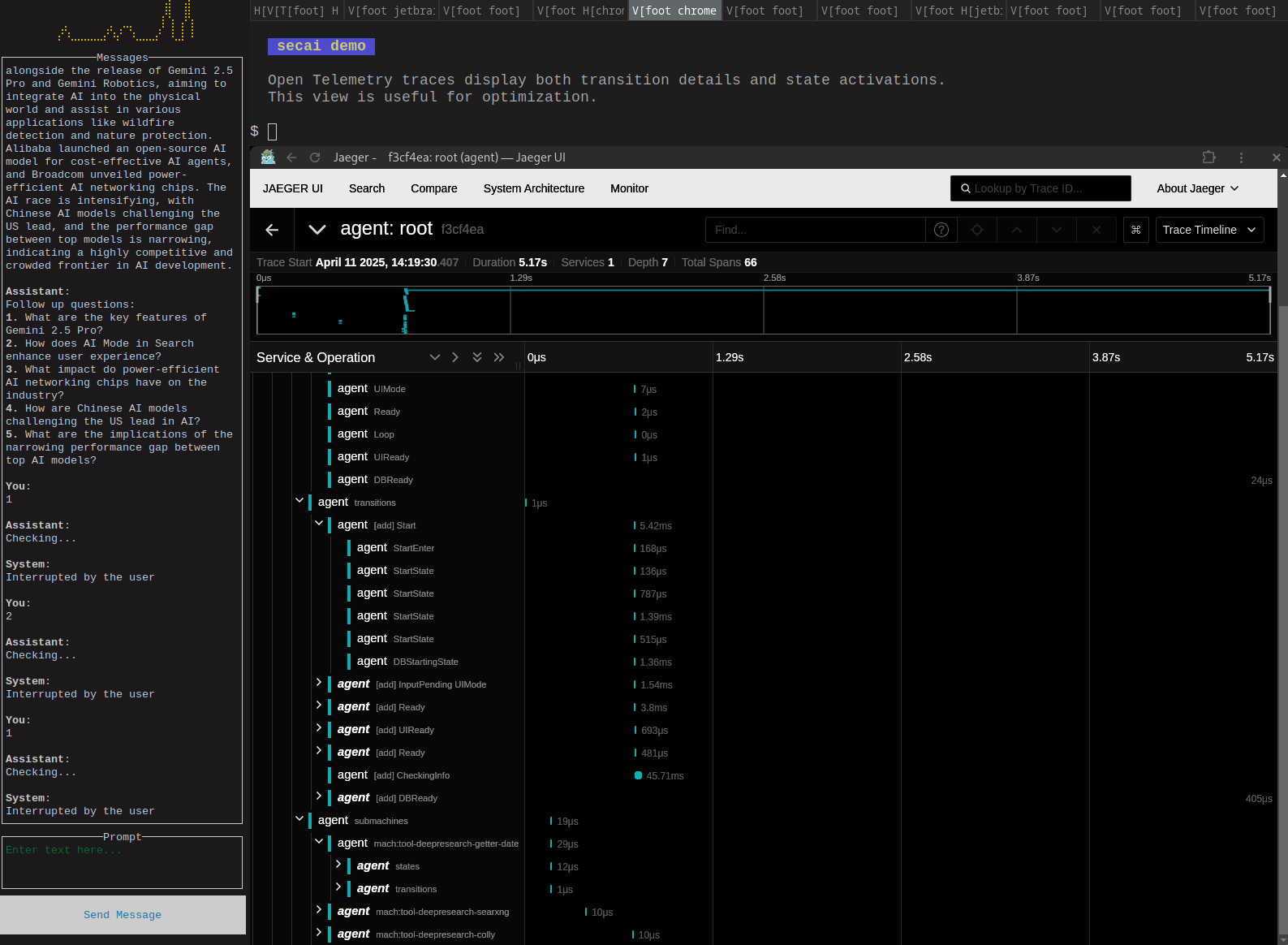

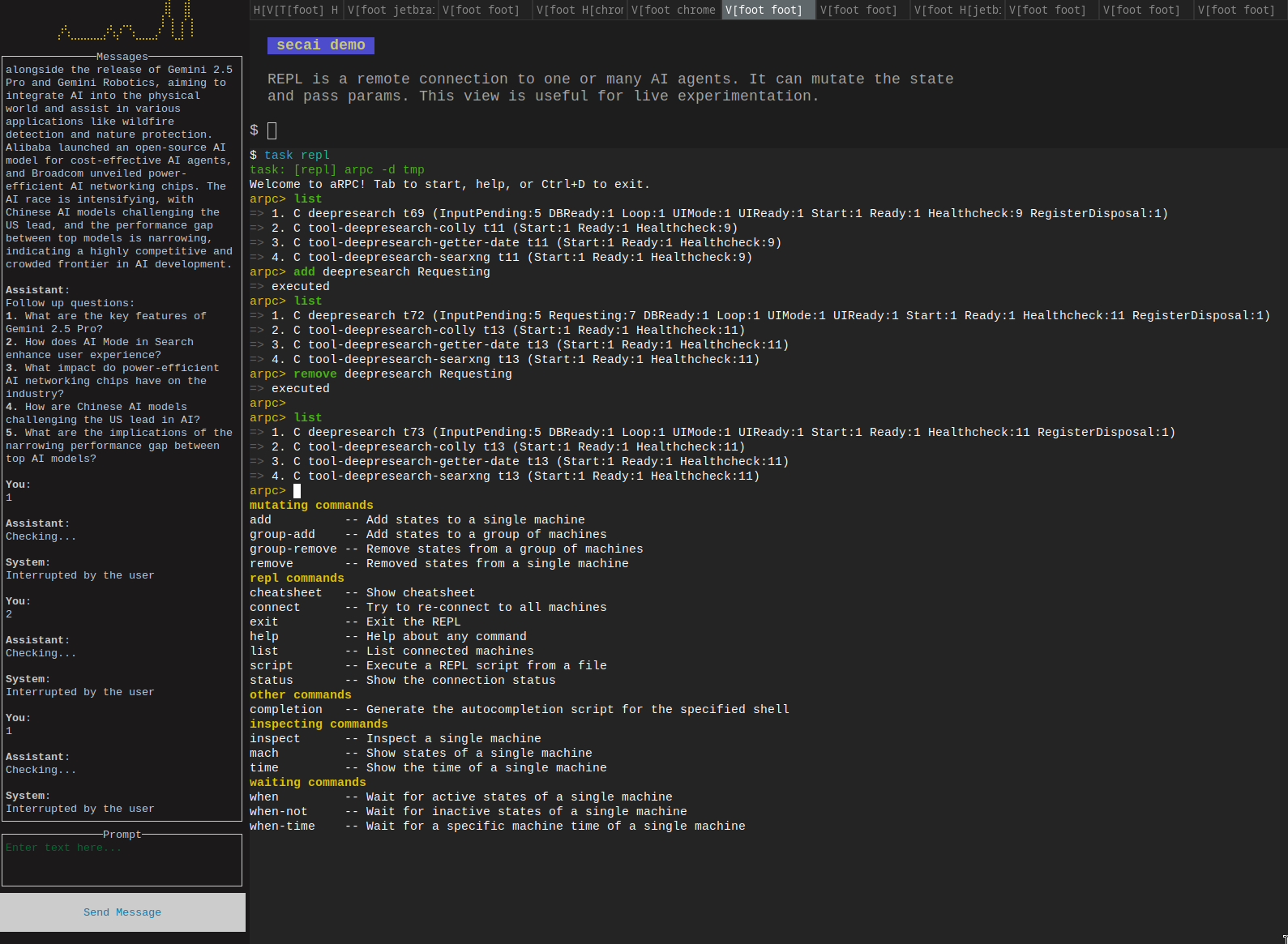

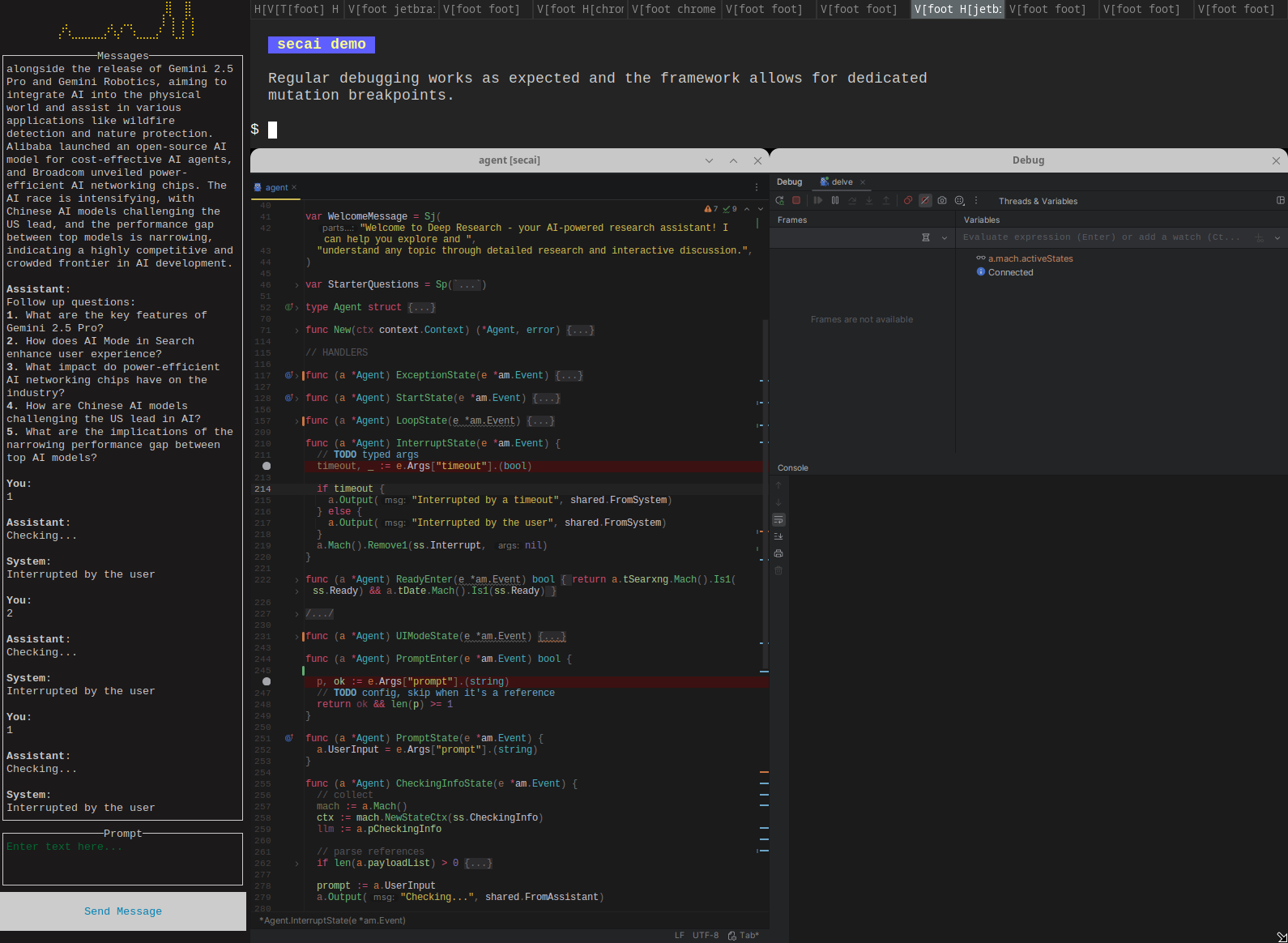

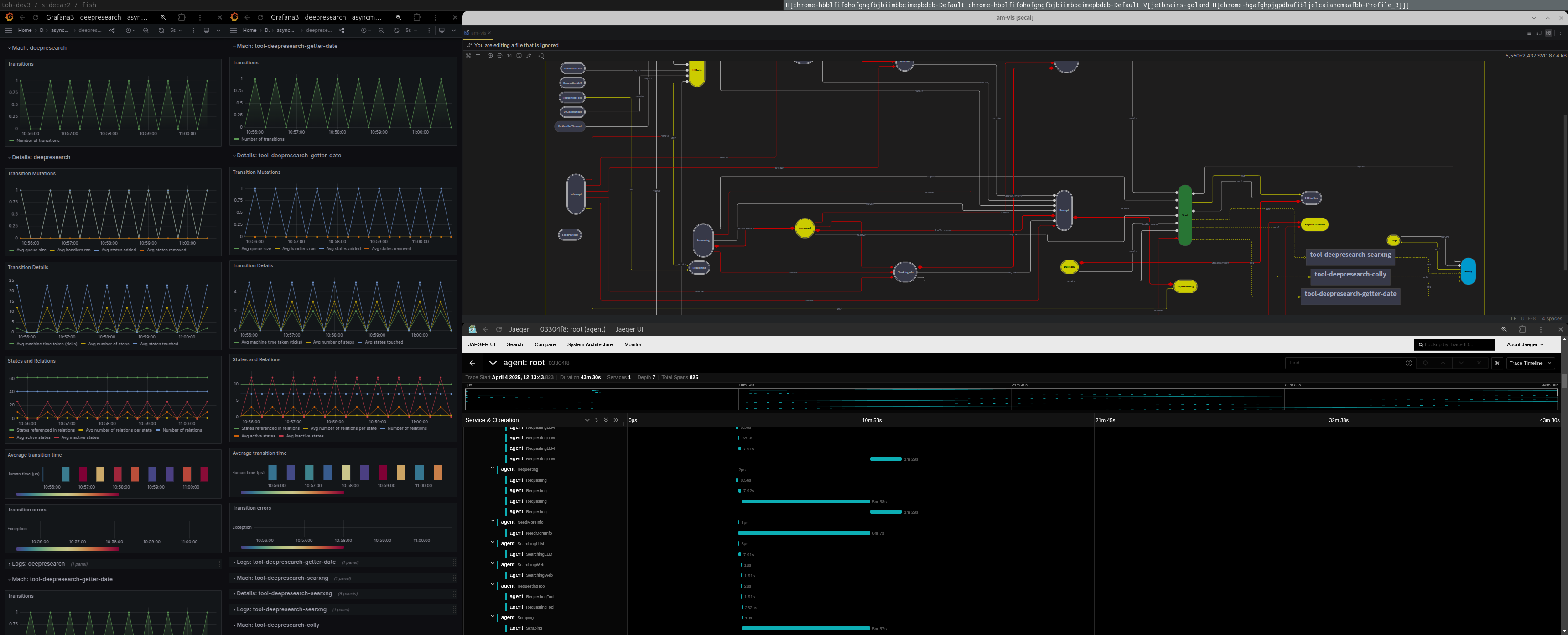

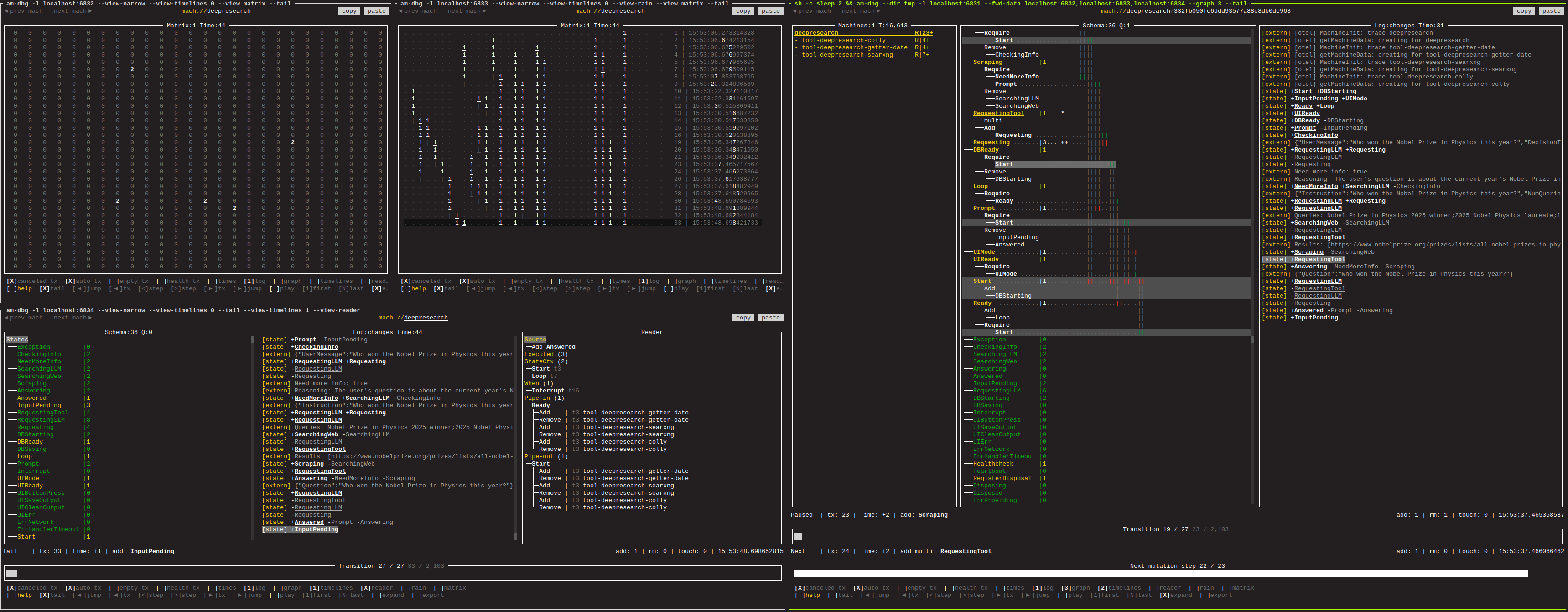

The following devtools are for the agent, the agent's dynamic memory, and tools (all of which are the same type of state machine).

- REPL & CLI

- TUI debugger (dashboards)

- automatic diagrams (SVG, D2, mermaid)

- automatic observability (Prometheus, Grafana, Jaeger)

- lambda prompts (unbound)

- based on langchaingo

- MCP (both relay and tool)

- history DSL with a vector format (WIP)

- agent contracts

- i18n

- Gemini via direct SDK

- ML triggers

- based on local neural networks

- mobile and WASM builds

- support local LLMs (eg iOS)

- desktop apps

- dynamic tools

- LLM creates tools on the fly

- prompts as RSS

- pure Golang

- typesafe state-machine and prompt schemas

- asyncmachine-go for graphs and control flow

- instructor-go for the LLM layer

- OpenAI, DeepSeek, Anthropic, Cohere (soon Gemini)

- network transparency (aRPC, debugger, REPL)

- structured concurrency (multigraph-based)

- tview, cview, and asciigraph for UIs

- Agent (actor)

- state-machine schema

- prompts

- tools

- Tool (actor)

- state-machine schema

- Memory

- state-machine schema

- Prompt (state)

- params schema

- result schema

- history log

- documents

- Stories (state)

- actors

- state machines

- Document

- title

- content

| Feature | AI-gent Workflows | AtomicAgents |
|---|---|---|
| Model | unified state graph | BaseAgent class |
| Debugger | multi-client with time travel | X |
| Diagrams | customizable level of details | X |
| Observability | logging & Grafana & Otel | X |
| REPL & CLI | network-based | X |
| History | state-based and prompt-based | prompt-based |
| Pkg manager | Golang | in-house |
| Control Flow | declarative & fault tolerant | imperative |
| CLI | bubbletea, lipgloss | rich |
| TUI | tview, cview | textual |

- just works, batteries included, no magic

- 1 package manager vs 4

- single binary vs interpreted multi-file source

- coherent static typing vs maybe

- easy & stable vs easy

- no ecosystem fragmentation

- million times faster /s

- relevant xkcd

Unlike Python apps, you can start it with a single command:

- Download a binary release (Linux, MacOS, Windows)

- Set either of the API keys:

`export OPENAI_API_KEY=myapikey`

`export DEEPSEEK_API_KEY=myapikey`

- Run `./aigent-cook` or `./aigent-research` to start the server

- then copy-paste-run the TUI Desktop line in another terminal

- you'll see files being created in `./tmp`

aigent-cook v0.2

TUI Chat:

$ ssh chat@localhost -p 7854 -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no

TUI Stories:

$ ssh stories@localhost -p 7854 -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no

TUI Clock:

$ ssh clock@localhost -p 7854 -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no

TUI Desktop:

$ bash <(curl -L https://zellij.dev/launch) --layout $(./aigent-cook desktop-layout) attach secai-aigent-cook --create

https://ai-gents.work

{"time":"2025-06-25T11:59:28.421964349+02:00","level":"INFO","msg":"SSH UI listening","addr":"localhost:7854"}

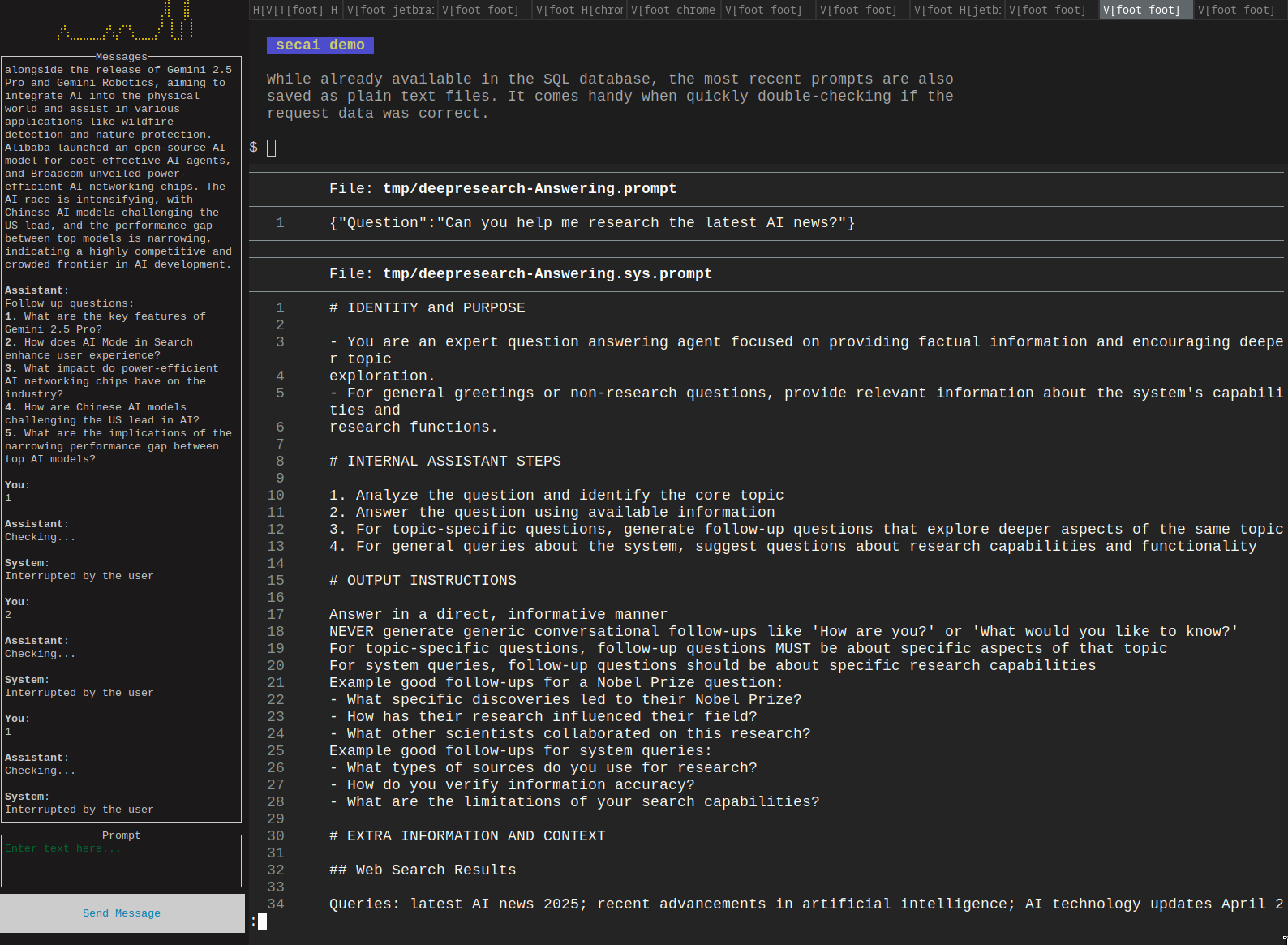

{"time":"2025-06-25T11:59:29.779618008+02:00","level":"INFO","msg":"output phrase","key":"IngredientsPicking"}

Code snippets from /examples/research (ported from AtomicAgents).

Both the state and prompt schemas are pure and debuggable Golang code.

// ResearchStatesDef contains all the states of the Research state machine.

type ResearchStatesDef struct {

*am.StatesBase

CheckingInfo string

NeedMoreInfo string

SearchingLLM string

SearchingWeb string

Scraping string

Answering string

Answered string

*ss.AgentStatesDef

}

// ResearchGroupsDef contains all the state groups of the Research state machine.

type ResearchGroupsDef struct {

Info S

Search S

Answers S

}

// ResearchSchema represents all relations and properties of ResearchStates.

var ResearchSchema = SchemaMerge(

// inherit from Agent

ss.AgentSchema,

am.Schema{

// Choice "agent"

ssR.CheckingInfo: {

Require: S{ssR.Start, ssR.Prompt},

Remove: sgR.Info,

},

ssR.NeedMoreInfo: {

Require: S{ssR.Start},

Add: S{ssR.SearchingLLM},

Remove: sgR.Info,

},

// Query "agent"

ssR.SearchingLLM: {

Require: S{ssR.NeedMoreInfo, ssR.Prompt},

Remove: sgR.Search,

},

ssR.SearchingWeb: {

Require: S{ssR.NeedMoreInfo, ssR.Prompt},

Remove: sgR.Search,

},

ssR.Scraping: {

Require: S{ssR.NeedMoreInfo, ssR.Prompt},

Remove: sgR.Search,

},

// Q&A "agent"

ssR.Answering: {

Require: S{ssR.Start, ssR.Prompt},

Remove: SAdd(sgR.Info, sgR.Answers),

},

ssR.Answered: {

Require: S{ssR.Start},

Remove: SAdd(sgR.Info, sgR.Answers, S{ssR.Prompt}),

},

})

var sgR = am.NewStateGroups(ResearchGroupsDef{

Info: S{ssR.CheckingInfo, ssR.NeedMoreInfo},

Search: S{ssR.SearchingLLM, ssR.SearchingWeb, ssR.Scraping},

Answers: S{ssR.Answering, ssR.Answered},

})

func NewCheckingInfoPrompt(agent secai.AgentApi) *secai.Prompt[ParamsCheckingInfo, ResultCheckingInfo] {

return secai.NewPrompt[ParamsCheckingInfo, ResultCheckingInfo](

agent, ssR.CheckingInfo, `

- You are a decision-making agent that determines whether a new web search is needed to answer the user's question.

- Your primary role is to analyze whether the existing context contains sufficient, up-to-date information to

answer the question.

- You must output a clear TRUE/FALSE decision - TRUE if a new search is needed, FALSE if existing context is

sufficient.

`, `

1. Analyze the user's question to determine whether or not an answer warrants a new search

2. Review the available web search results

3. Determine if existing information is sufficient and relevant

4. Make a binary decision: TRUE for new search, FALSE for using existing context

`, `

Your reasoning must clearly state WHY you need or don't need new information

If the web search context is empty or irrelevant, always decide TRUE for new search

If the question is time-sensitive, check the current date to ensure context is recent

For ambiguous cases, prefer to gather fresh information

Your decision must match your reasoning - don't contradict yourself

`)

}

// CheckingInfo (Choice "agent")

type ParamsCheckingInfo struct {

UserMessage string

DecisionType string

}

type ResultCheckingInfo struct {

Reasoning string `jsonschema:"description=Detailed explanation of the decision-making process"`

Decision bool `jsonschema:"description=The final decision based on the analysis"`

}

Read the schema file in full.
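To make the role of the result schema more concrete, here is a minimal sketch of turning a raw JSON reply into `ResultCheckingInfo`. In secai the LLM layer (instructor-go) takes care of structured output; this example swaps in the standard library's `encoding/json` purely for illustration, and `parseCheckingInfo` plus the sample reply are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ResultCheckingInfo mirrors the result schema shown above.
type ResultCheckingInfo struct {
	Reasoning string `jsonschema:"description=Detailed explanation of the decision-making process"`
	Decision  bool   `jsonschema:"description=The final decision based on the analysis"`
}

// parseCheckingInfo is a hypothetical helper that decodes a raw JSON reply.
// A reply whose field types don't match the schema is rejected with an error.
func parseCheckingInfo(raw string) (ResultCheckingInfo, error) {
	var res ResultCheckingInfo
	if err := json.Unmarshal([]byte(raw), &res); err != nil {
		return res, fmt.Errorf("decoding CheckingInfo result: %w", err)
	}
	return res, nil
}

func main() {
	// Made-up reply, shaped like the schema.
	reply := `{"Reasoning": "The search context is empty.", "Decision": true}`

	res, err := parseCheckingInfo(reply)
	if err != nil {
		fmt.Println("invalid reply:", err)
		return
	}
	if res.Decision {
		fmt.Println("new search needed:", res.Reasoning)
	} else {
		fmt.Println("existing context is sufficient:", res.Reasoning)
	}
}
```

A typed result like this lets the calling state branch on `Decision` directly instead of parsing free-form text.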

- Screenshots: Intro, AI-gent Cook, Debugger 1, Debugger 2, Memory & Stories, User Interfaces, Outro, State Schema

- Screenshots: SVG graph, am-dbg, Grafana, Jaeger, REPL, SQL, IDE, Bash, Prompts, State Schema

- Screenshots: Dashboard 1, Dashboard 2

- secai: API / Docs

- asyncmachine-go: API / Docs

- instructor-go: API / Docs

- tview: API / Docs

- cview: API

We can use one of the examples as a starting template. It allows for further semver updates of the base framework.

- Choose the source example: `export SECAI_EXAMPLE=cook` or `export SECAI_EXAMPLE=research`

- `git clone https://github.com/pancsta/secai.git`

- install task: `./secai/scripts/deps.sh`

- copy the agent: `cp -R secai/examples/$SECAI_EXAMPLE MYAGENT`

- `cd MYAGENT && go mod init github.com/USER/MYAGENT`

- get fresh configs: `task sync-taskfile`, then `task sync-configs`

- start it: `task start`

- look around: `task --list-all`

- configure: `cp template.env .env`

Several TUIs with dedicated UI states are included in /tui:

- senders & msgs scrollable view with links

- multiline prompt with blocking and progress

- send / stop button

- list of stories with activity status, non-actionable

- dynamic buttons and progress bars, actionable

- recent clock changes plotted by asciigraph

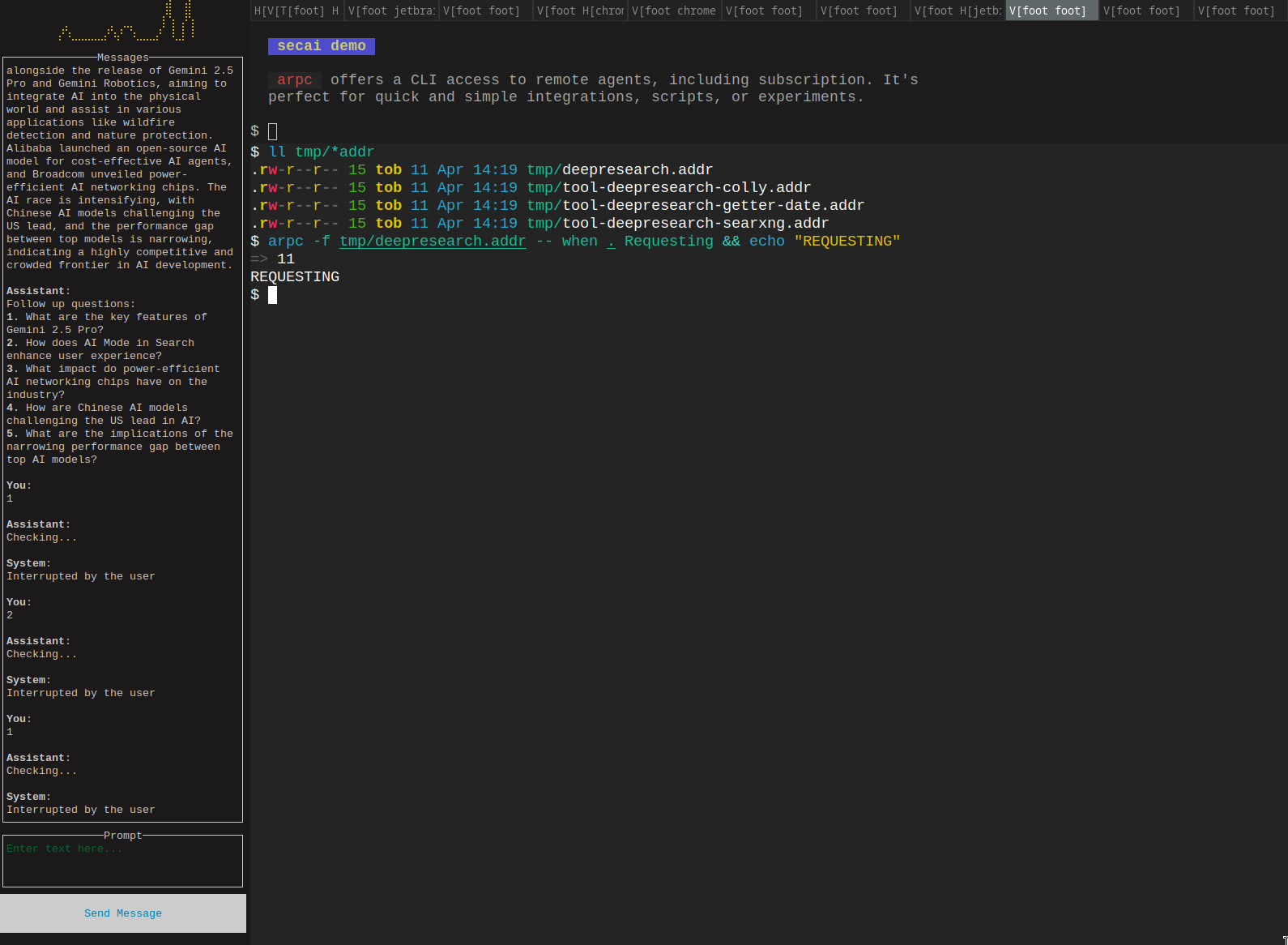

arpc offers CLI access to remote agents, including subscription. It's perfect for quick and simple integrations, scripts, or experiments.

Example: `arpc -f tmp/research.addr -- when . Requesting && echo "REQUESTING"`

- Connect to the address from `tmp/research.addr`

- When the last connected agent (`.`) goes into state `Requesting`

- Print "REQUESTING" and exit