| 🚀Project Page | 📖Paper | 🤗Data | 🤗Model | 🤗Demo |

Scholar Copilot is an intelligent academic writing assistant that enhances the research writing process through AI-powered text completion and citation suggestions. Built by TIGER-Lab, it aims to streamline academic writing while maintaining high scholarly standards.

- Next-3-Sentence Suggestions: Get contextually relevant suggestions for your next three sentences

- Full Section Auto-Completion: Generate complete sections with appropriate academic structure and flow

- Context-Aware Writing: All generations consider your existing text to maintain coherence

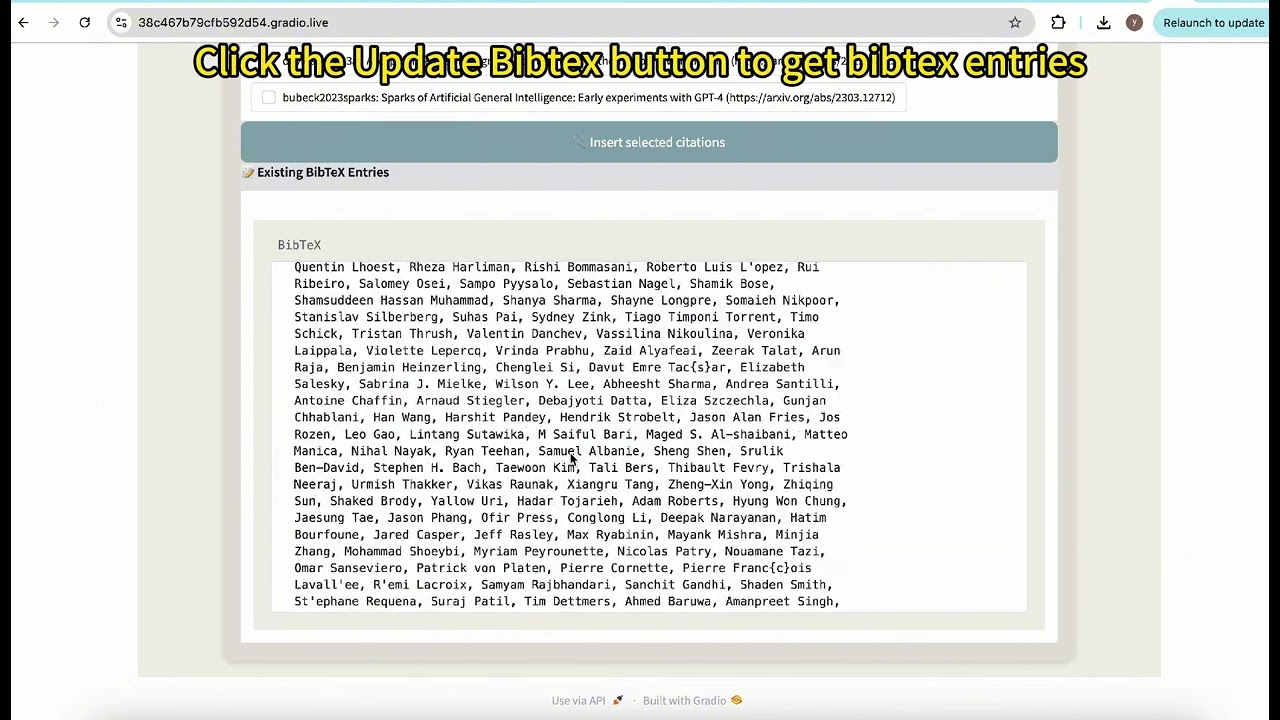

- Real-time Citation Suggestions: Receive relevant paper citations based on your writing context

- One-Click Citation Insertion: Easily select and insert citations in proper academic format

- Citation BibTeX Generation: Automatically generate and export BibTeX entries for your citations
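As a rough illustration of BibTeX export, the sketch below formats paper metadata as an `@article` entry in the same "arXiv preprint" style as the citation for this project. The function name `make_bibtex`, its parameters, and the example metadata are hypothetical, not Scholar Copilot's exporter. It also does not escape special characters or protect capitalization with extra braces, which a production exporter would need to handle.

```python
from typing import List


def make_bibtex(key: str, title: str, authors: List[str], arxiv_id: str, year: int) -> str:
    """Format metadata as an arXiv-preprint style @article entry (hypothetical helper)."""
    fields = [
        ("title", title),
        # BibTeX separates multiple authors with the keyword "and".
        ("author", " and ".join(authors)),
        ("journal", f"arXiv preprint arXiv:{arxiv_id}"),
        ("year", str(year)),
    ]
    # Doubled braces in the f-string emit literal { and } around each value.
    body = ",\n".join(f"  {name}={{{value}}}" for name, value in fields)
    return f"@article{{{key},\n{body}\n}}"


# Hypothetical example metadata, for illustration only.
print(make_bibtex("doe2025example", "An Example Paper", ["Doe, Jane", "Roe, Richard"], "0000.00000", 2025))
```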

Scholar Copilot employs a unified model architecture that seamlessly integrates retrieval and generation through a dynamic switching mechanism. During the generation process, the model autonomously determines appropriate citation points using learned citation patterns. When a citation is deemed necessary, the model temporarily halts generation, utilizes the hidden states of the citation token to retrieve relevant papers from the corpus, inserts the selected references, and then resumes coherent text generation.
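The dynamic switching described above can be illustrated with a toy sketch. It is not Scholar Copilot's implementation. It assumes a hypothetical `step` function that stands in for the language model and returns the next token plus its hidden state, a hypothetical citation token `<|cite|>`, and a tiny in-memory corpus with hand-written embeddings. Retrieval here is exact inner-product search, and the inserted reference is only a `\cite{...}` key rather than the paper content the real system conditions on.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical special token; the real model's citation token may differ.
CITE_TOKEN = "<|cite|>"


@dataclass
class Paper:
    key: str
    embedding: List[float]


# A model "step" returns (next_token, hidden_state); (None, None) ends generation.
Step = Callable[[List[str]], Tuple[Optional[str], Optional[List[float]]]]


def score(query: List[float], doc: List[float]) -> float:
    # Inner-product similarity between a query and a document embedding.
    return sum(q * d for q, d in zip(query, doc))


def retrieve(query: List[float], corpus: List[Paper], k: int = 1) -> List[Paper]:
    # Exact top-k search; a real deployment would query an ANN index instead.
    return sorted(corpus, key=lambda p: score(query, p.embedding), reverse=True)[:k]


def generate_with_citations(step: Step, corpus: List[Paper], max_steps: int = 100) -> List[str]:
    tokens: List[str] = []
    for _ in range(max_steps):
        token, hidden = step(tokens)
        if token is None:
            break
        tokens.append(token)
        if token == CITE_TOKEN and hidden is not None:
            # Pause generation: use the citation token's hidden state as the
            # retrieval query, insert the selected reference, then resume.
            refs = retrieve(hidden, corpus, k=1)
            tokens.append("\\cite{" + ",".join(p.key for p in refs) + "}")
    return tokens


def scripted_step(script: List[Tuple[str, List[float]]]) -> Step:
    # Replays a fixed token sequence and ignores the context (demonstration only).
    it = iter(script)

    def step(_tokens: List[str]):
        return next(it, (None, None))

    return step


corpus = [Paper("paper_a", [1.0, 0.0]), Paper("paper_b", [0.0, 1.0])]
script = [
    ("Transformers", [0.0, 0.0]),
    ("dominate", [0.0, 0.0]),
    ("NLP", [0.0, 0.0]),
    (CITE_TOKEN, [0.2, 0.9]),
    (".", [0.0, 0.0]),
]
print(" ".join(generate_with_citations(scripted_step(script), corpus)))
```

Running it prints `Transformers dominate NLP <|cite|> \cite{paper_b} .`, because the citation token's hidden state `[0.2, 0.9]` has a larger inner product with `paper_b` (0.9) than with `paper_a` (0.2).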

To set up the ScholarCopilot demo on your own server, follow these simple steps:

- Clone the repository:

```bash
git clone git@github.com:TIGER-AI-Lab/ScholarCopilot.git
cd ScholarCopilot/run_demo
```

- Set up the environment:

```bash
pip install -r requirements.txt
```

- Download the required model and data:

```bash
bash download.sh
```

- Launch the demo:

```bash
bash run_demo.sh
```

To update your corpus with the latest papers, follow these steps:

- Download the most recent arXiv metadata from Kaggle and save it to your chosen `ARXIV_META_DATA_PATH`.

- Run the data processing script:

```bash
cd utils/
python process_arxiv_meta_data.py ARXIV_META_DATA_PATH ../data/corpus_data_arxiv_1215.jsonl
```

- Generate embeddings for the corpus:

```bash
bash encode_corpus.sh
```

- Convert the embeddings into an HNSW index for efficient search:

```bash
python build_hnsw_index.py --input_dir <embedding dir> --output_dir <hnsw index dir>
```
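For orientation, the sketch below shows the kind of transformation a metadata-processing step performs. It reads the Kaggle snapshot line by line (each line is one JSON object with fields such as `id`, `title`, `abstract`, and `update_date`), normalizes whitespace, and keeps papers updated on or after a cutoff date. The output field names, the date filter, and the toy records are assumptions for illustration. They are not the actual schema of `corpus_data_arxiv_1215.jsonl` or the logic of `process_arxiv_meta_data.py`.

```python
import json
from typing import Dict, Iterable, Iterator


def iter_arxiv_records(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Parse Kaggle arXiv snapshot lines (one JSON object per line)."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        meta = json.loads(line)
        # Output keys are hypothetical; the repo's corpus schema may differ.
        yield {
            "paper_id": meta["id"],
            # Collapse hard line breaks and repeated spaces into single spaces.
            "title": " ".join(meta["title"].split()),
            "abstract": " ".join(meta["abstract"].split()),
            "update_date": meta.get("update_date", ""),
        }


def updated_since(records: Iterable[Dict[str, str]], cutoff: str) -> Iterator[Dict[str, str]]:
    """Keep records whose YYYY-MM-DD update_date is on or after cutoff (hypothetical filter)."""
    # ISO dates sort lexicographically, so plain string comparison is enough.
    return (r for r in records if r["update_date"] >= cutoff)


# Toy input standing in for lines of the downloaded snapshot file.
sample_lines = [
    json.dumps({"id": "toy-1", "title": "An  older\n paper", "abstract": "Old.", "update_date": "2019-05-01"}),
    json.dumps({"id": "toy-2", "title": "A newer paper", "abstract": "New.\n Results.", "update_date": "2025-01-02"}),
]

for record in updated_since(iter_arxiv_records(sample_lines), "2024-12-15"):
    print(json.dumps(record))  # one JSON object per output line (JSONL)
```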

To train the model, follow these steps:

- Download the training data:

```bash
cd train/
bash download.sh
```

- Configure and run the training script. To reproduce our results, use the hyperparameters in the script with 4 machines of 8 GPUs each (32 GPUs in total):

```bash
cd src/
bash start_train.sh
```

To cite Scholar Copilot:

```bibtex
@article{wang2024scholarcopilot,
  title={ScholarCopilot: Training Large Language Models for Academic Writing with Accurate Citations},
  author={Wang, Yubo and Ma, Xueguang and Nie, Ping and Zeng, Huaye and Lyu, Zhiheng and Zhang, Yuxuan and Schneider, Benjamin and Lu, Yi and Yue, Xiang and Chen, Wenhu},
  journal={arXiv preprint arXiv:2504.00824},
  year={2025}
}
```