
Extremely slow cache on self-hosted from time to time #720


Seeing the same behaviour on self-hosted (GCP,

Experiencing the same issue on GCP. I had to put a timeout on the whole job because the cache action routinely takes 10+ minutes for 30 MB.
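A job-wide timeout like the one described above can be set with the documented `jobs.<job_id>.timeout-minutes` key. This is a minimal sketch: the 30-minute limit, the `self-hosted` label, and the Maven cache path are illustrative assumptions, not values taken from the comment.

```yaml
jobs:
  build:
    runs-on: self-hosted
    # Cancel the whole job if it runs longer than 30 minutes (illustrative value)
    timeout-minutes: 30
    steps:
      - uses: actions/checkout@v3

      - name: Cache mvn repository
        uses: actions/cache@v3
        with:
          path: ~/.m2/repository
          key: cache-m2-${{ hashFiles('pom.xml') }}
```

Note that this bounds the cost of a stalled restore but still fails the run; it does not turn a stall into a cache miss.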

Our workaround:

```yaml
- name: Cache mvn repository
  uses: actions/cache@v2
  timeout-minutes: 5
  continue-on-error: true
  with:
    path: ~/.m2/repository
    key: cache-m2-${{ hashFiles('pom.xml') }}
    restore-keys: |
      cache-m2-
```

@levonet @akushneruk @SophisticaSean @es Are you still seeing the same behaviour on self-hosted runners? If so, can one of you please enable debugging and share the log file here? Thank you.

Hey, most of the reports in this thread are from people self-hosting, but this issue doesn't seem to be unique to self-hosting; the hosted runners have the same issue. I don't know whether you have more insight into workflow runs that use native runners, but here is one example from Google Chrome Lighthouse where the

As we have a lot of traffic running on our infrastructure, we really notice when bugs happen in commonly used actions, and we'd be more than happy to provide you with insights into how users are failing. You can reach

@adamshiervani The workflow you pointed to ran 18 days ago. Can you share anything that happened in the last week? A single slow run might be an outlier, in which case an upgrade or downtime might have happened. If this happens every few days, I'd like to know more about the frequency.

We've been experiencing this intermittently on builds (not self-hosted). When it happens, the cache takes 12+ hours to retrieve. It would be nice if it were possible to set a timeout to deal with this.
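For reference, the actions/cache README describes a segment download timeout (introduced in v3.0.8, as I understand the docs) that aborts a stuck segment download and lets the job continue with a cache miss; the timeout, in minutes, is read from the `SEGMENT_DOWNLOAD_TIMEOUT_MINS` environment variable. A minimal sketch, assuming actions/cache v3.0.8 or later; the 5-minute value and the Maven path are illustrative.

```yaml
- name: Cache mvn repository
  uses: actions/cache@v3
  env:
    # Abort a stuck segment download after 5 minutes (illustrative value)
    SEGMENT_DOWNLOAD_TIMEOUT_MINS: 5
  with:
    path: ~/.m2/repository
    key: cache-m2-${{ hashFiles('pom.xml') }}
```

Unlike a step-level `timeout-minutes`, this is meant to degrade a stalled restore into a cache miss rather than a failed or cancelled step.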

@vsvipul We are heavy users of BuildJet and of this action, and this is probably the most common issue we face. Here are some runs for you: We get this issue every week, as we push a lot of builds out, and there is no rhyme or reason to when it occurs. A lot of runs are missing because I ended up deleting the workflow logs; there were just too many.

We're also users of BuildJet, bless their offerings, and had to disable caching in some of our jobs because of this.

If the step is not limited by a timeout, this incurs additional runner costs. A newer issue, #810, has been opened, and an environment variable

This just happened on a GitHub-hosted runner, which failed to download 3 GB within 1 hour (I was not aware of the issue, so I didn't have any remediation settings in place); the download slowed down more and more: https://github.com/marcindulak/jhalfs-ci/actions/runs/3210575787/jobs/5249037125. "Re-run failed", executed several minutes after the original failure, proceeded without the slowdown and finished the cache download in 2 minutes.

I've also had this happen sporadically on GitHub-hosted runners and fairly consistently on BuildJet runners.

We now use

Never mind. The issue appeared today, with cache@v3. Luckily, our macOS build timed out at this step after 10 minutes rather than the full 60 minutes we allow for the entire workflow.

This has become a significant issue for us. Currently around 50% of our runs time out at ~98%, which is particularly painful because our download is relatively large (850 MB), so we have to wait a while before we know whether a build has timed out. We will have to migrate to a different solution if we don't find a resolution, as we cannot rely on manually monitoring CI runs.

In the cases where this occurred for us, the logs show the cache restore going from 0% to, say, 60% very quickly and then stalling or trickling the rest of the way (basically, once it stops making progress, it never completes).

This workaround does not work for us; the timeout doesn't seem to be a supported argument (perhaps because it is set on a step that uses an action rather than at the workflow level):

Expanding the example:

```yaml
name: Caching Primes

on: push

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v3

      - name: Cache Primes
        id: cache-primes
        uses: actions/cache@v3
        timeout-minutes: 10 # <---------
        with:
          path: prime-numbers
          key: ${{ runner.os }}-primes

      - name: Generate Prime Numbers
        if: steps.cache-primes.outputs.cache-hit != 'true'
        run: ./generate-primes.sh -d prime-numbers

      - name: Use Prime Numbers
        run: ./primes.sh -d prime-numbers
```

We have added an environment variable

@vsvipul This happens to us pretty often, at least a few times a week. We also use BuildJet. One case where we recently ran into this was the

👋🏽 @rattrayalex, are these private repos? Unfortunately, we can't see the logs. Can you please elaborate on the issue you are facing?

Ah, OK. Basically, the network hangs for many minutes (often long enough to hit our 20-minute timeout), the same as in the other screenshots posted here. Yesterday this was happening on almost every PR for us. I'd recommend reaching out to @adamshiervani for more details, as they've done some investigation of this at BuildJet.

Prevent stalling builds due to a bug[^0] in Github actions. [^0]: actions/cache#720 Signed-off-by: xla <self@xla.is>

Also noticing this behaviour while using BuildJet: the rust-cache action sporadically takes several times longer than a clean build. On average, this makes self-hosted runners perform worse overall than basic GitHub runners, even though the actual execution stage is much faster.

Same here: https://github.com/mlflow/mlflow/actions/runs/4179427251/jobs/7239362333

I'm now seeing this on GitHub's larger hosted runners as well. We've had this happen a handful of times recently; one affected job was https://github.com/stainless-api/stainless/actions/runs/4232471756/jobs/7352268632, if that's useful to the GHA team.

We're seeing it on normal GitHub-hosted runners now.

@sammcj, we are aware of this problem on the GitHub-hosted runners and are investigating it thoroughly.

We currently use BuildJet and see this fairly regularly.

We can't see this run, @michael-projectx. You should paste the terminal output.

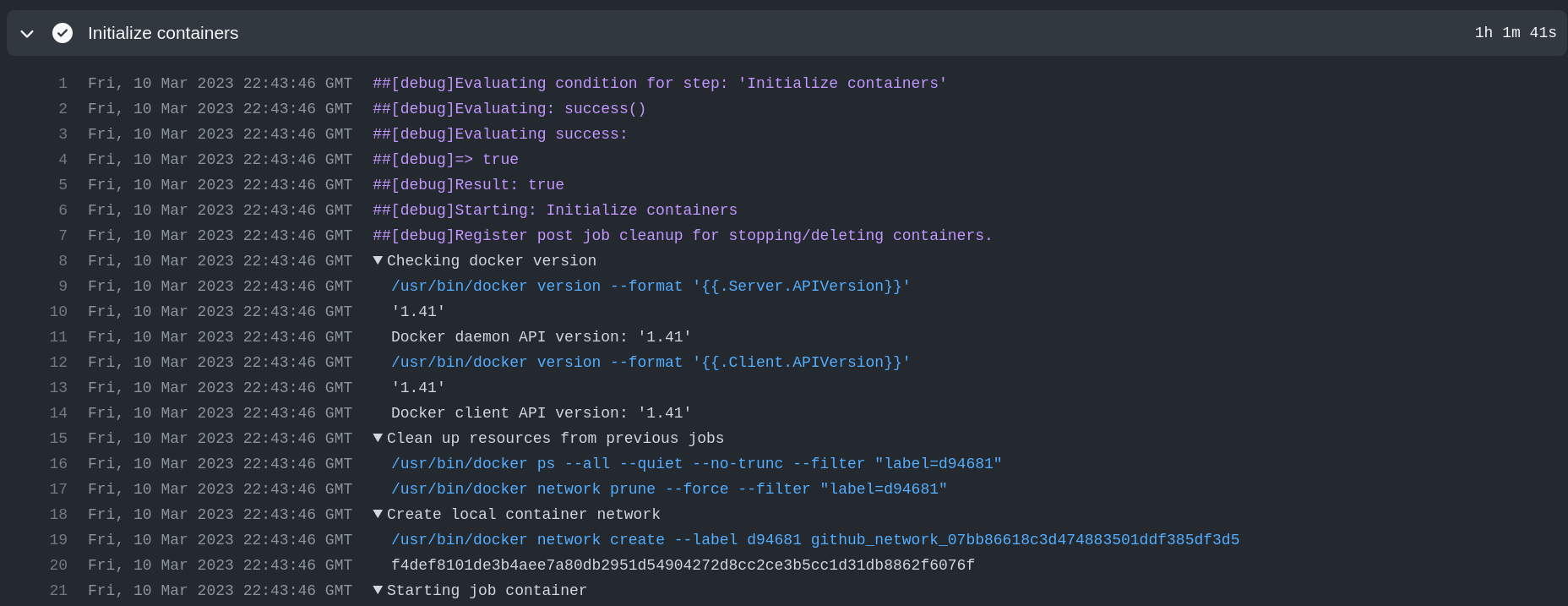

@Sytten For context, spinning up the container usually takes a short time, but in this case it took over an hour. Hopefully this is helpful:

Hey everyone, I'm Adam, co-founder of BuildJet. Our customers often complain about reliability and speed issues like the one reported here. Today, we launched BuildJet Cache, a GitHub Cache alternative that's reliable, fast and free. It's fully compatible with GitHub's Cache; all you need to do is replace

Head over to our launch post for more details: https://buildjet.com/for-github-actions/blog/launch-buildjet-cache

@thinkafterbefore Since you hijacked the post for promotion, let me push back a little. Your cache fork is really not well done: it's just a build on top of the existing cache action with a random closed-source package, https://www.npmjs.com/package/github-actions.cache-buildjet. Fix your shit, please; we have enough supply chain attacks without legit stuff looking sus like that. Ideally do proper tagging too. I would be much more supportive of an action that actually allowed people to not be dependent on any one provider, similar to

@Sytten, I hear you, and I apologize if my prior message was too promotional; my intention was simply to help people solve a long-standing issue with the

Regarding the

Lastly, as we wrote in the launch post, if people are interested in using their own object storage, whywaita/actions-cache-s3 is perfect for that. We simply wanted to take that burden off users. Thanks for pushing back and calling out where we need to improve.

I can only say: I'm also a happy BuildJet customer and a long-time sufferer of the cache issue, and I'm glad this was posted here. Probably because I'm affected, I did not find it inappropriate; on the contrary, I'm glad I learned about this and can't wait to wake up tomorrow and try it out 👌🏼

@thinkafterbefore Can you publish it on https://github.com/marketplace?type=&verification=&query=buildjet/cache please? :)

I also started my own solution, forked the

This issue is stale because it has been open for 200 days with no activity. Leave a comment to avoid closing this issue in 5 days.

Still an issue.

Can we have an environment variable that disables saving and restoring the cache (maybe two separate variables), so that we can turn caching off when other actions try to use this action?

Yes

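Until something like that exists, steps you control can be skipped with a step-level `if:` on a configuration variable, using the separate `actions/cache/restore` and `actions/cache/save` actions to get independent switches for restoring and saving. A minimal sketch under stated assumptions: `DISABLE_CACHE_RESTORE` and `DISABLE_CACHE_SAVE` are hypothetical repository variables you would define yourself, and the build step is a placeholder. This does not affect third-party actions that call actions/cache internally, which is what the request above is really about.

```yaml
- name: Restore dependency cache
  # Skipped when the hypothetical variable DISABLE_CACHE_RESTORE is 'true'
  if: ${{ vars.DISABLE_CACHE_RESTORE != 'true' }}
  uses: actions/cache/restore@v3
  with:
    path: ~/.m2/repository
    key: cache-m2-${{ hashFiles('pom.xml') }}

- name: Build
  # Placeholder build step
  run: mvn -B package

- name: Save dependency cache
  # Skipped when the hypothetical variable DISABLE_CACHE_SAVE is 'true'
  if: ${{ vars.DISABLE_CACHE_SAVE != 'true' }}
  uses: actions/cache/save@v3
  with:
    path: ~/.m2/repository
    key: cache-m2-${{ hashFiles('pom.xml') }}
```

An unset variable evaluates to an empty string, so both steps run by default.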

Will we?

It usually takes a few seconds to load the cache, but from time to time it takes a very long time. I suspect this has something to do with slow responses from the GitHub API, but I can't prove it yet.

I would like to have some transparency about what happens when the action returns a response like

`Received 0 of XXX (0.0%), 0.0 MBs/sec`

This would allow us to localize and solve the problem completely, or find a workaround.