- Feb. 19, 2025: The Inst-It Bench evaluation toolkit is released; you can evaluate your model now!

- Dec. 11, 2024: The Inst-It Dataset is available here. Welcome to use our dataset!

- Dec. 5, 2024: Our checkpoints are available at Hugging Face.

Inst-It Bench is a fine-grained multimodal benchmark for evaluating large multimodal models (LMMs) at the instance level.

- Size: ~1,000 image QAs and ~1,000 video QAs

- Splits: Image split and Video split

- Evaluation Formats: Open-Ended and Multiple-Choice

See Evaluate.md to learn how to run the evaluation on Inst-It Bench.

The Inst-It Dataset can be downloaded here. To our knowledge, this is the first dataset that provides fine-grained annotations centered on specific instances. In total, the Inst-It Dataset includes:

- 21k videos

- 51k images

- 21k video-level descriptions

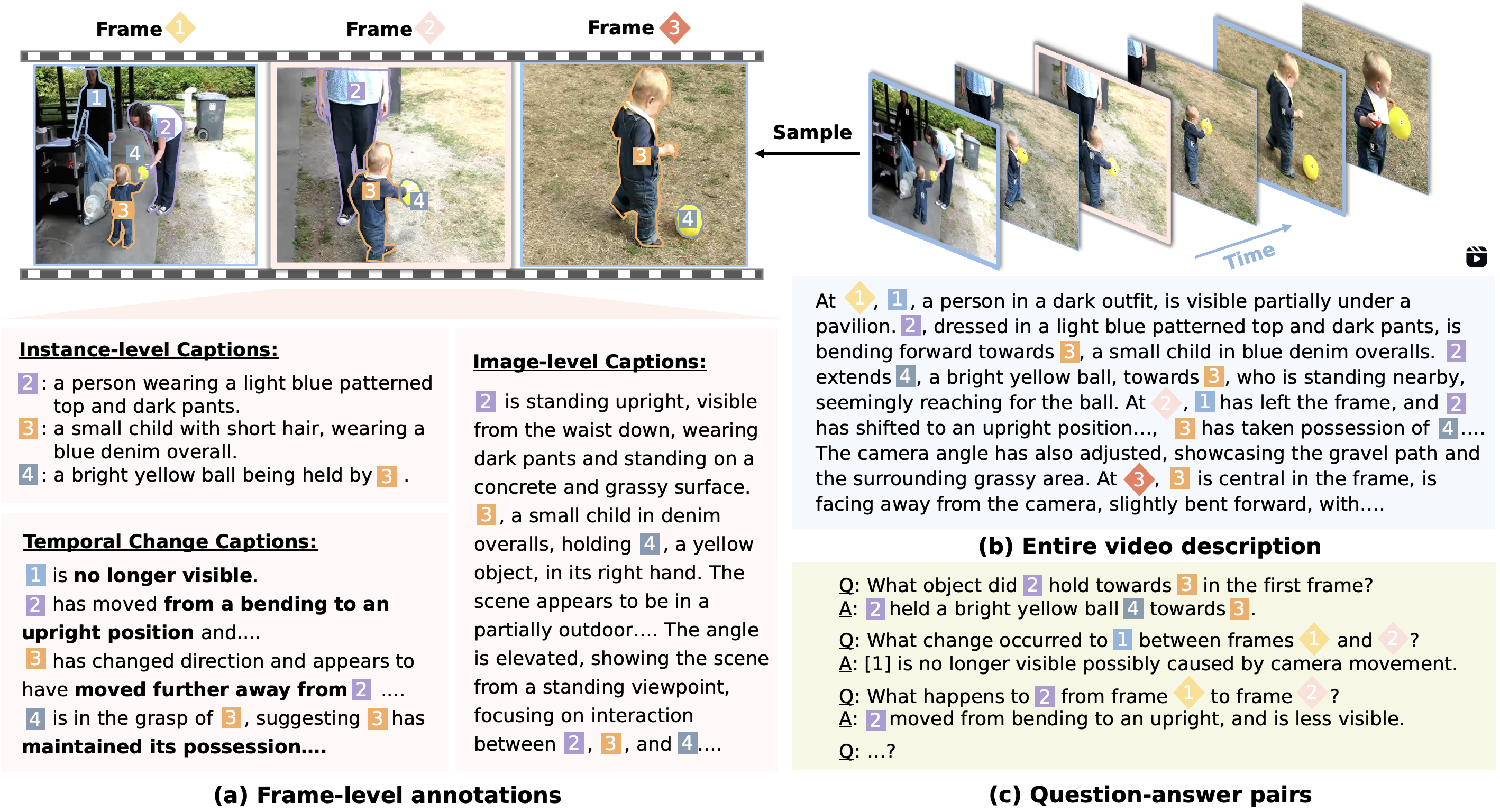

- 207k frame-level descriptions (51k images, 156k video frames) (each frame-level description includes captions of 1)individual instances, 2)the entire image, and 3)the temporal changes.)

- 335k open-ended QA pairs

We visualize the data structure in the figure below, and you can view a more detailed data sample here.

The annotation format of the Inst-It Dataset is as follows:

- video annotations in file

inst_it_dataset_video_21k.json

    [
        {
            "video_id": int,
            "frame_level_caption": (annotation for each frame within this video)
            [
                {
                    "timestamp": int, (indicate the timestamp of this frame in the video, e.g. <1>)
                    "frame_name": string, (the image filename of this frame)
                    "instance_level": (caption for each instance within this frame)
                    {
                        "1": "caption for instance 1",
                        (more instance level captions ...)
                    },
                    "image_level": string, (caption for the entire frame)
                    "temporal_change": string (caption for the temporal changes relative to the previous frame)
                },
                (more frame level captions ...)
            ],
            "question_answer_pairs": (open ended question answer pairs)
            [
                {
                    "question": "the question",
                    "answer": "the corresponding answer"
                },
                (more question answer pairs ...)
            ],
            "video_level_caption": string, (a dense caption for the entire video, encompassing all frames)
            "video_path": string (the path to where this video is stored)
        },
        (more annotations for other videos ...)
    ]
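
As a minimal sketch of reading this format, the snippet below walks one video record and collects its per-frame instance captions. It assumes the annotation file parses as standard JSON with the field names listed above; the helper name `iter_instance_captions` and the sample record are hypothetical and serve only as an illustration.

```python
import json
from typing import Iterator, Tuple


def iter_instance_captions(video: dict) -> Iterator[Tuple[int, str, str]]:
    """Yield (timestamp, instance_id, caption) for every instance in every frame."""
    for frame in video.get("frame_level_caption", []):
        # "instance_level" maps instance ids (stored as strings) to captions.
        for instance_id, caption in frame.get("instance_level", {}).items():
            yield frame["timestamp"], instance_id, caption


# Hypothetical record that follows the schema above (not real dataset content).
sample_video = {
    "video_id": 0,
    "frame_level_caption": [
        {
            "timestamp": 1,
            "frame_name": "frame_1.jpg",
            "instance_level": {"1": "caption for instance 1"},
            "image_level": "caption for the entire frame",
            "temporal_change": "caption for the temporal changes",
        }
    ],
    "question_answer_pairs": [
        {"question": "the question", "answer": "the corresponding answer"}
    ],
    "video_level_caption": "a dense caption for the entire video",
    "video_path": "path/to/video",
}

# Round-trip through JSON text to mimic what json.load would return for the file.
videos = json.loads(json.dumps([sample_video]))
rows = [row for video in videos for row in iter_instance_captions(video)]
print(rows)
```

To use it on the real file, replace the round-trip line with `json.load` on an open handle to `inst_it_dataset_video_21k.json`.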

- image annotations in file

inst_it_dataset_image_51k.json

    [
        {
            "image_id": int,
            "instance_level_caption": (caption for each instance within this image)
            {
                "1": "caption for instance 1",
                (more instance level captions ...)
            },
            "image_level_caption": string, (caption for the entire image)
            "image_path": string (the path to where this image is stored)
        },
        (more annotations for other images ...)
    ]
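
The image annotations can be handled in a similar way. The sketch below loads a file in this format, indexes the records by `image_id`, and prints the image-level caption followed by the instance captions. It assumes that instance ids are numeric strings such as "1"; the function names and the sample data are hypothetical, and a temporary file stands in for the real annotation file.

```python
import json
import os
import tempfile


def load_image_annotations(path: str) -> dict:
    """Read the annotation list and index it by image_id."""
    with open(path, "r", encoding="utf-8") as f:
        records = json.load(f)
    return {record["image_id"]: record for record in records}


def describe(record: dict) -> str:
    """Return the image-level caption followed by instance captions in numeric id order."""
    instances = record["instance_level_caption"]
    lines = [record["image_level_caption"]]
    # Assumption: instance ids are numeric strings, so sort them as integers.
    for instance_id in sorted(instances, key=int):
        lines.append(f"[{instance_id}] {instances[instance_id]}")
    return "\n".join(lines)


# Hypothetical sample in the format above (not real dataset content).
sample = [
    {
        "image_id": 7,
        "instance_level_caption": {"10": "third", "2": "second", "1": "first"},
        "image_level_caption": "caption for the entire image",
        "image_path": "path/to/image",
    }
]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample_image_annotations.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(sample, f)
    index = load_image_annotations(path)

summary = describe(index[7])
print(summary)
```

Sorting with `key=int` keeps instance "10" after instance "2", which a plain string sort would not.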

We trained two models based on LLaVA-Next using the Inst-It Dataset. They not only achieve outstanding performance on Inst-It Bench but also show significant improvements on other generic image and video understanding benchmarks. We provide the checkpoints here:

| Model | Checkpoints |
|---|---|
| LLaVA-Next-Inst-It-Vicuna-7B | weights and docs |
| LLaVA-Next-Inst-It-Qwen2-7B | weights and docs |

- Release the Inst-It Bench data and evaluation code.

- Release the Inst-It Dataset.

- Release the checkpoint of our fine-tuned models.

- Release the meta-annotation of Inst-It Dataset, such as instance segmentation masks, bounding boxes, and more ...

- Release the annotation file of Inst-It Dataset, which follows the format in the LLaVA codebase.

- Release the training code.

Feel free to contact us if you have any questions or suggestions:

- Email (Wujian Peng): wjpeng24@m.fudan.edu.cn

- Email (Lingchen Meng): lcmeng20@fudan.edu.cn

If you find our work helpful, please consider citing our paper 📎 and starring our repo 🌟 :

    @article{peng2024inst,
      title={Inst-IT: Boosting Multimodal Instance Understanding via Explicit Visual Prompt Instruction Tuning},
      author={Peng, Wujian and Meng, Lingchen and Chen, Yitong and Xie, Yiweng and Liu, Yang and Gui, Tao and Xu, Hang and Qiu, Xipeng and Wu, Zuxuan and Jiang, Yu-Gang},
      journal={arXiv preprint arXiv:2412.03565},
      year={2024}
    }