A curated list for Efficient Large Language Models

- Network Pruning / Sparsity

- Knowledge Distillation

- Quantization

- Inference Acceleration

- Efficient MOE

- Efficient Architecture of LLM

- KV Cache Compression

- Text Compression

- Low-Rank Decomposition

- Hardware / System / Serving

- Efficient Fine-tuning

- Efficient Training

- Survey or Benchmark

- Reasoning Model

Please check out all the papers by selecting the sub-area you're interested in. On this main page, only papers released in the past 90 days are shown.

- April 15, 2025: We have a new curated list for efficient reasoning models!

- May 29, 2024: We've had this awesome list for a year now 🥰!

- Sep 6, 2023: Added a new subdirectory `project/` to organize efficient LLM projects.

- July 11, 2023: A new subdirectory efficient_plm/ is created to house papers that are applicable to PLMs.

If you'd like to include your paper, or need to update any details such as conference information or code URLs, please feel free to submit a pull request. You can generate the required markdown format for each paper by filling in the information in `generate_item.py` and running `python generate_item.py`. We warmly appreciate your contributions to this list. Alternatively, you can email me with the links to your paper and code, and I will add your paper to the list at my earliest convenience.
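As a rough illustration of the kind of row such a script might emit, here is a minimal sketch. It is not the repository's `generate_item.py`: the function name `format_paper_row`, its parameters, the `<br>` separators, and the use of ⭐ as a "recommended" marker are all assumptions made for this example, based only on the `| Title & Authors | Introduction | Links |` column layout used in this list.

```python
def format_paper_row(title, authors, paper_url, github_url=None, recommended=False):
    """Return one markdown row for a '| Title & Authors | Introduction | Links |' table.

    Hypothetical helper for illustration only; the real generate_item.py may differ.
    """
    # Assumption: recommended papers are prefixed with a star in the title cell.
    star = "⭐ " if recommended else ""
    title_cell = f"{star}[{title}]({paper_url}) <br> {', '.join(authors)}"

    # Collect the links that are available; the GitHub link is optional.
    links = []
    if github_url:
        links.append(f"[Github]({github_url})")
    links.append(f"[Paper]({paper_url})")

    # The Introduction column is left empty in this sketch.
    return f"| {title_cell} | | {' <br> '.join(links)} |"


if __name__ == "__main__":
    print(format_paper_row(
        title="An Example Paper Title",
        authors=["First Author", "Second Author"],
        paper_url="https://example.com/paper",
        github_url="https://example.com/code",
    ))
```

The printed row can be pasted under the matching topic table in a pull request. Titles containing a literal `|` would need escaping, which this sketch does not handle.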

For each topic, we have curated a list of recommended papers that have garnered a lot of GitHub stars or citations.

Papers from Sep 30, 2024 to now (see the full list from May 22, 2023 here)

- Network Pruning / Sparsity

- Knowledge Distillation

- Quantization

- Inference Acceleration

- Efficient MOE

- Efficient Architecture of LLM

- KV Cache Compression

- Text Compression

- Low-Rank Decomposition

- Hardware / System / Serving

- Efficient Fine-tuning

- Efficient Training

- Survey

| Title & Authors | Introduction | Links |

|---|---|---|

⭐ Deja Vu: Contextual Sparsity for Efficient LLMs at Inference Time Zichang Liu, Jue WANG, Tri Dao, Tianyi Zhou, Binhang Yuan, Zhao Song, Anshumali Shrivastava, Ce Zhang, Yuandong Tian, Christopher Re, Beidi Chen |

|

Github Paper |

⭐ SpecInfer: Accelerating Generative LLM Serving with Speculative Inference and Token Tree Verification Xupeng Miao, Gabriele Oliaro, Zhihao Zhang, Xinhao Cheng, Zeyu Wang, Rae Ying Yee Wong, Zhuoming Chen, Daiyaan Arfeen, Reyna Abhyankar, Zhihao Jia |

|

Github paper |

⭐ Efficient Streaming Language Models with Attention Sinks Guangxuan Xiao, Yuandong Tian, Beidi Chen, Song Han, Mike Lewis |

|

Github Paper |

⭐ EAGLE: Lossless Acceleration of LLM Decoding by Feature Extrapolation Yuhui Li, Chao Zhang, and Hongyang Zhang |

|

Github Blog |

⭐ Medusa: Simple LLM Inference Acceleration Framework with Multiple Decoding Heads Tianle Cai, Yuhong Li, Zhengyang Geng, Hongwu Peng, Jason D. Lee, Deming Chen, Tri Dao |

|

Github Paper |

| Speculative Decoding with CTC-based Draft Model for LLM Inference Acceleration Zhuofan Wen, Shangtong Gui, Yang Feng |

|

Paper |

| PLD+: Accelerating LLM inference by leveraging Language Model Artifacts Shwetha Somasundaram, Anirudh Phukan, Apoorv Saxena |

|

Paper |

FastDraft: How to Train Your Draft Ofir Zafrir, Igor Margulis, Dorin Shteyman, Guy Boudoukh |

Paper | |

SMoA: Improving Multi-agent Large Language Models with Sparse Mixture-of-Agents Dawei Li, Zhen Tan, Peijia Qian, Yifan Li, Kumar Satvik Chaudhary, Lijie Hu, Jiayi Shen |

|

Github Paper |

| The N-Grammys: Accelerating Autoregressive Inference with Learning-Free Batched Speculation Lawrence Stewart, Matthew Trager, Sujan Kumar Gonugondla, Stefano Soatto |

Paper | |

| Accelerated AI Inference via Dynamic Execution Methods Haim Barad, Jascha Achterberg, Tien Pei Chou, Jean Yu |

Paper | |

| SuffixDecoding: A Model-Free Approach to Speeding Up Large Language Model Inference Gabriele Oliaro, Zhihao Jia, Daniel Campos, Aurick Qiao |

|

Paper |

| Dynamic Strategy Planning for Efficient Question Answering with Large Language Models Tanmay Parekh, Pradyot Prakash, Alexander Radovic, Akshay Shekher, Denis Savenkov |

|

Paper |

MagicPIG: LSH Sampling for Efficient LLM Generation Zhuoming Chen, Ranajoy Sadhukhan, Zihao Ye, Yang Zhou, Jianyu Zhang, Niklas Nolte, Yuandong Tian, Matthijs Douze, Leon Bottou, Zhihao Jia, Beidi Chen |

|

Github Paper |

| Faster Language Models with Better Multi-Token Prediction Using Tensor Decomposition Artem Basharin, Andrei Chertkov, Ivan Oseledets |

|

Paper |

| Efficient Inference for Augmented Large Language Models Rana Shahout, Cong Liang, Shiji Xin, Qianru Lao, Yong Cui, Minlan Yu, Michael Mitzenmacher |

|

Paper |

Dynamic Vocabulary Pruning in Early-Exit LLMs Jort Vincenti, Karim Abdel Sadek, Joan Velja, Matteo Nulli, Metod Jazbec |

|

Github Paper |

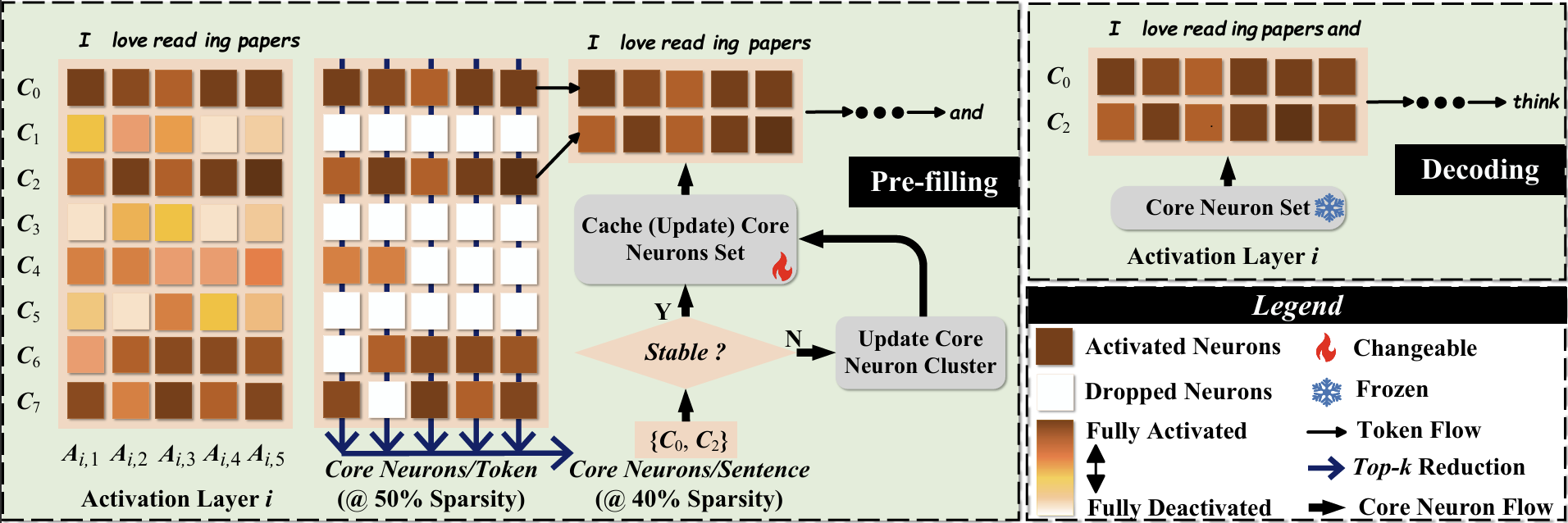

CoreInfer: Accelerating Large Language Model Inference with Semantics-Inspired Adaptive Sparse Activation Qinsi Wang, Saeed Vahidian, Hancheng Ye, Jianyang Gu, Jianyi Zhang, Yiran Chen |

|

Github Paper |

DuoAttention: Efficient Long-Context LLM Inference with Retrieval and Streaming Heads Guangxuan Xiao, Jiaming Tang, Jingwei Zuo, Junxian Guo, Shang Yang, Haotian Tang, Yao Fu, Song Han |

|

Github Paper |

| DySpec: Faster Speculative Decoding with Dynamic Token Tree Structure Yunfan Xiong, Ruoyu Zhang, Yanzeng Li, Tianhao Wu, Lei Zou |

|

Paper |

| QSpec: Speculative Decoding with Complementary Quantization Schemes Juntao Zhao, Wenhao Lu, Sheng Wang, Lingpeng Kong, Chuan Wu |

|

Paper |

| TidalDecode: Fast and Accurate LLM Decoding with Position Persistent Sparse Attention Lijie Yang, Zhihao Zhang, Zhuofu Chen, Zikun Li, Zhihao Jia |

|

Paper |

| ParallelSpec: Parallel Drafter for Efficient Speculative Decoding Zilin Xiao, Hongming Zhang, Tao Ge, Siru Ouyang, Vicente Ordonez, Dong Yu |

|

Paper |

SWIFT: On-the-Fly Self-Speculative Decoding for LLM Inference Acceleration Heming Xia, Yongqi Li, Jun Zhang, Cunxiao Du, Wenjie Li |

|

Github Paper |

TurboRAG: Accelerating Retrieval-Augmented Generation with Precomputed KV Caches for Chunked Text Songshuo Lu, Hua Wang, Yutian Rong, Zhi Chen, Yaohua Tang |

|

Github Paper |

| A Little Goes a Long Way: Efficient Long Context Training and Inference with Partial Contexts Suyu Ge, Xihui Lin, Yunan Zhang, Jiawei Han, Hao Peng |

|

Paper |

Cache-Craft: Managing Chunk-Caches for Efficient Retrieval-Augmented Generation Shubham Agarwal, Sai Sundaresan, Subrata Mitra, Debabrata Mahapatra, Archit Gupta, Rounak Sharma, Nirmal Joshua Kapu, Tong Yu, Shiv Saini |

|

Paper |

| Mamba Drafters for Speculative Decoding Daewon Choi, Seunghyuk Oh, Saket Dingliwal, Jihoon Tack, Kyuyoung Kim, Woomin Song, Seojin Kim, Insu Han, Jinwoo Shin, Aram Galstyan, Shubham Katiyar, Sravan Babu Bodapati |

|

Paper |

| Accelerated Test-Time Scaling with Model-Free Speculative Sampling Woomin Song, Saket Dingliwal, Sai Muralidhar Jayanthi, Bhavana Ganesh, Jinwoo Shin, Aram Galstyan, Sravan Babu Bodapati |

|

Paper |

| Title & Authors | Introduction | Links |
|---|---|---|
| ⭐ Fast Inference of Mixture-of-Experts Language Models with Offloading <br> Artyom Eliseev, Denis Mazur | | Github Paper |
| Condense, Don't Just Prune: Enhancing Efficiency and Performance in MoE Layer Pruning <br> Mingyu Cao, Gen Li, Jie Ji, Jiaqi Zhang, Xiaolong Ma, Shiwei Liu, Lu Yin | | Github Paper |
| Mixture of Cache-Conditional Experts for Efficient Mobile Device Inference <br> Andrii Skliar, Ties van Rozendaal, Romain Lepert, Todor Boinovski, Mart van Baalen, Markus Nagel, Paul Whatmough, Babak Ehteshami Bejnordi | | Paper |
| MoNTA: Accelerating Mixture-of-Experts Training with Network-Traffic-Aware Parallel Optimization <br> Jingming Guo, Yan Liu, Yu Meng, Zhiwei Tao, Banglan Liu, Gang Chen, Xiang Li | | Github Paper |
| MoE-I2: Compressing Mixture of Experts Models through Inter-Expert Pruning and Intra-Expert Low-Rank Decomposition <br> Cheng Yang, Yang Sui, Jinqi Xiao, Lingyi Huang, Yu Gong, Yuanlin Duan, Wenqi Jia, Miao Yin, Yu Cheng, Bo Yuan | | Github Paper |
| HOBBIT: A Mixed Precision Expert Offloading System for Fast MoE Inference <br> Peng Tang, Jiacheng Liu, Xiaofeng Hou, Yifei Pu, Jing Wang, Pheng-Ann Heng, Chao Li, Minyi Guo | | Paper |
| ProMoE: Fast MoE-based LLM Serving using Proactive Caching <br> Xiaoniu Song, Zihang Zhong, Rong Chen | | Paper |
| ExpertFlow: Optimized Expert Activation and Token Allocation for Efficient Mixture-of-Experts Inference <br> Xin He, Shunkang Zhang, Yuxin Wang, Haiyan Yin, Zihao Zeng, Shaohuai Shi, Zhenheng Tang, Xiaowen Chu, Ivor Tsang, Ong Yew Soon | | Paper |
| EPS-MoE: Expert Pipeline Scheduler for Cost-Efficient MoE Inference <br> Yulei Qian, Fengcun Li, Xiangyang Ji, Xiaoyu Zhao, Jianchao Tan, Kefeng Zhang, Xunliang Cai | | Paper |
| MC-MoE: Mixture Compressor for Mixture-of-Experts LLMs Gains More <br> Wei Huang, Yue Liao, Jianhui Liu, Ruifei He, Haoru Tan, Shiming Zhang, Hongsheng Li, Si Liu, Xiaojuan Qi | | Github Paper |

| Title & Authors | Introduction | Links |

|---|---|---|

Hymba: A Hybrid-head Architecture for Small Language Models Xin Dong, Yonggan Fu, Shizhe Diao, Wonmin Byeon, Zijia Chen, Ameya Sunil Mahabaleshwarkar, Shih-Yang Liu, Matthijs Van Keirsbilck, Min-Hung Chen, Yoshi Suhara, Yingyan Lin, Jan Kautz, Pavlo Molchanov |

|

Paper |

⭐ MobiLlama: Towards Accurate and Lightweight Fully Transparent GPT Omkar Thawakar, Ashmal Vayani, Salman Khan, Hisham Cholakal, Rao M. Anwer, Michael Felsberg, Tim Baldwin, Eric P. Xing, Fahad Shahbaz Khan |

|

Github Paper Model |

⭐ Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length Xuezhe Ma, Xiaomeng Yang, Wenhan Xiong, Beidi Chen, Lili Yu, Hao Zhang, Jonathan May, Luke Zettlemoyer, Omer Levy, Chunting Zhou |

|

Github Paper |

| Taipan: Efficient and Expressive State Space Language Models with Selective Attention Chien Van Nguyen, Huy Huu Nguyen, Thang M. Pham, Ruiyi Zhang, Hanieh Deilamsalehy, Puneet Mathur, Ryan A. Rossi, Trung Bui, Viet Dac Lai, Franck Dernoncourt, Thien Huu Nguyen |

|

Paper |

SeerAttention: Learning Intrinsic Sparse Attention in Your LLMs Yizhao Gao, Zhichen Zeng, Dayou Du, Shijie Cao, Hayden Kwok-Hay So, Ting Cao, Fan Yang, Mao Yang |

|

Github Paper |

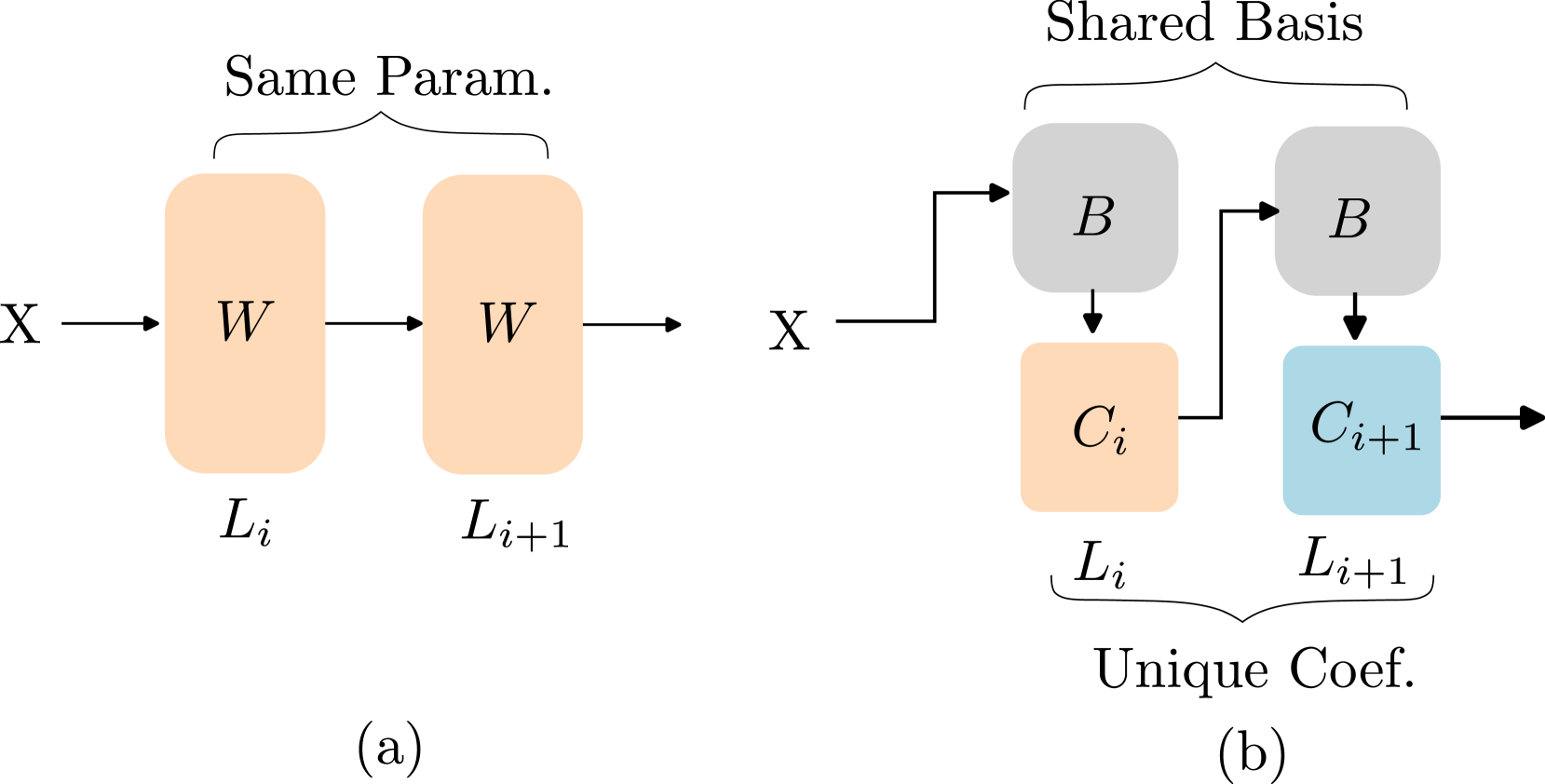

Basis Sharing: Cross-Layer Parameter Sharing for Large Language Model Compression Jingcun Wang, Yu-Guang Chen, Ing-Chao Lin, Bing Li, Grace Li Zhang |

|

Github Paper |

| Rodimus*: Breaking the Accuracy-Efficiency Trade-Off with Efficient Attentions Zhihao He, Hang Yu, Zi Gong, Shizhan Liu, Jianguo Li, Weiyao Lin |

|

Paper |

| Compress, Gather, and Recompute: REFORMing Long-Context Processing in Transformers Woomin Song, Sai Muralidhar Jayanthi, Srikanth Ronanki, Kanthashree Mysore Sathyendra, Jinwoo Shin, Aram Galstyan, Shubham Katiyar, Sravan Babu Bodapati |

|

Paper |

| Title & Authors | Introduction | Links |
|---|---|---|
| ESPACE: Dimensionality Reduction of Activations for Model Compression <br> Charbel Sakr, Brucek Khailany | | Paper |
| Natural GaLore: Accelerating GaLore for memory-efficient LLM Training and Fine-tuning <br> Arijit Das | | Github Paper |
| CompAct: Compressed Activations for Memory-Efficient LLM Training <br> Yara Shamshoum, Nitzan Hodos, Yuval Sieradzki, Assaf Schuster | | Paper |

| Title & Authors | Introduction | Links |
|---|---|---|
| Closer Look at Efficient Inference Methods: A Survey of Speculative Decoding <br> Hyun Ryu, Eric Kim | | Paper |
| LLM-Inference-Bench: Inference Benchmarking of Large Language Models on AI Accelerators <br> Krishna Teja Chitty-Venkata, Siddhisanket Raskar, Bharat Kale, Farah Ferdaus et al | | Github Paper |
| Prompt Compression for Large Language Models: A Survey <br> Zongqian Li, Yinhong Liu, Yixuan Su, Nigel Collier | | Github Paper |
| Large Language Model Inference Acceleration: A Comprehensive Hardware Perspective <br> Jinhao Li, Jiaming Xu, Shan Huang, Yonghua Chen, Wen Li, Jun Liu, Yaoxiu Lian, Jiayi Pan, Li Ding, Hao Zhou, Guohao Dai | | Paper |