| title | description |

|---|---|

Terraform Remote State Datasource Demo |

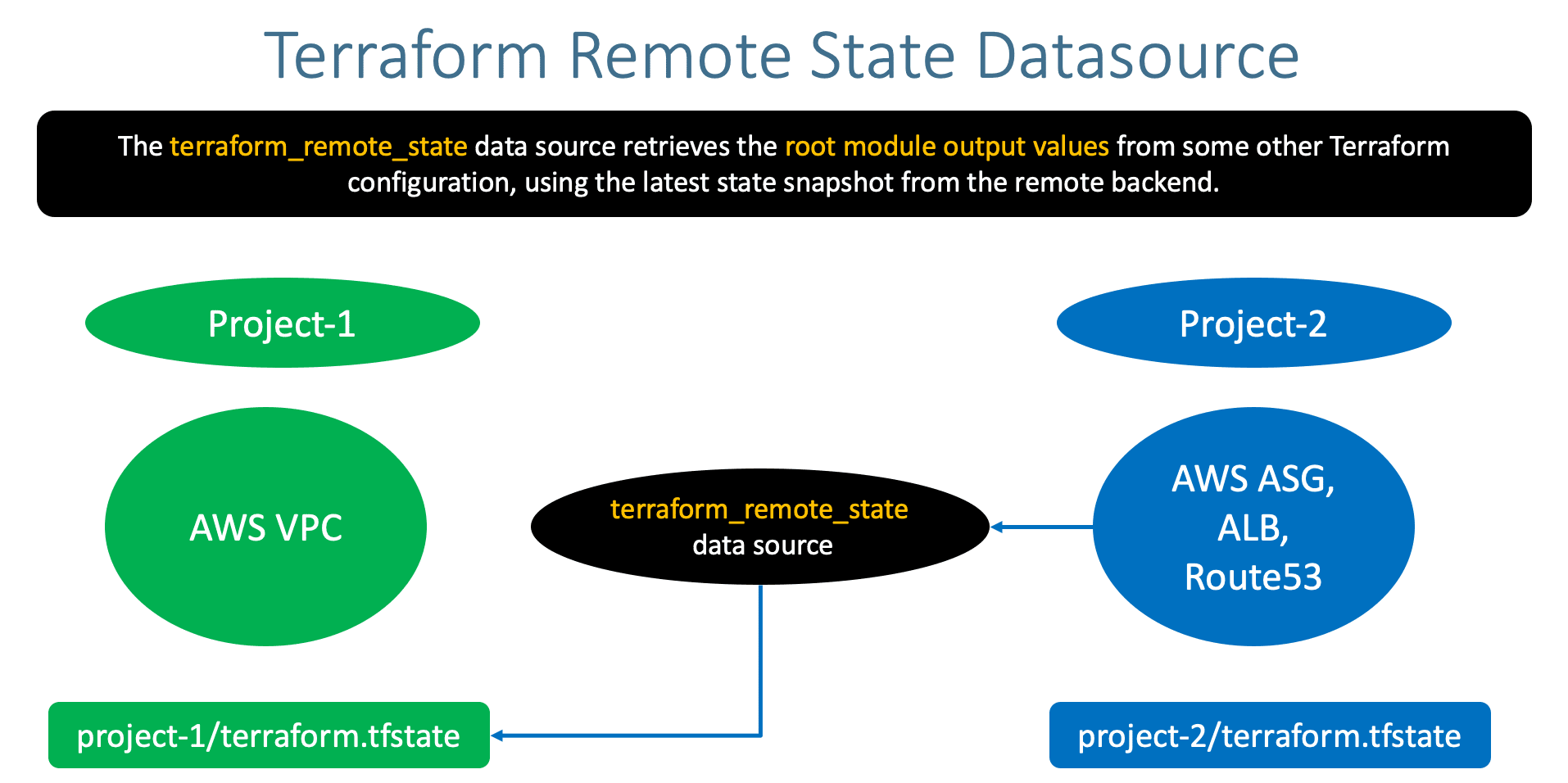

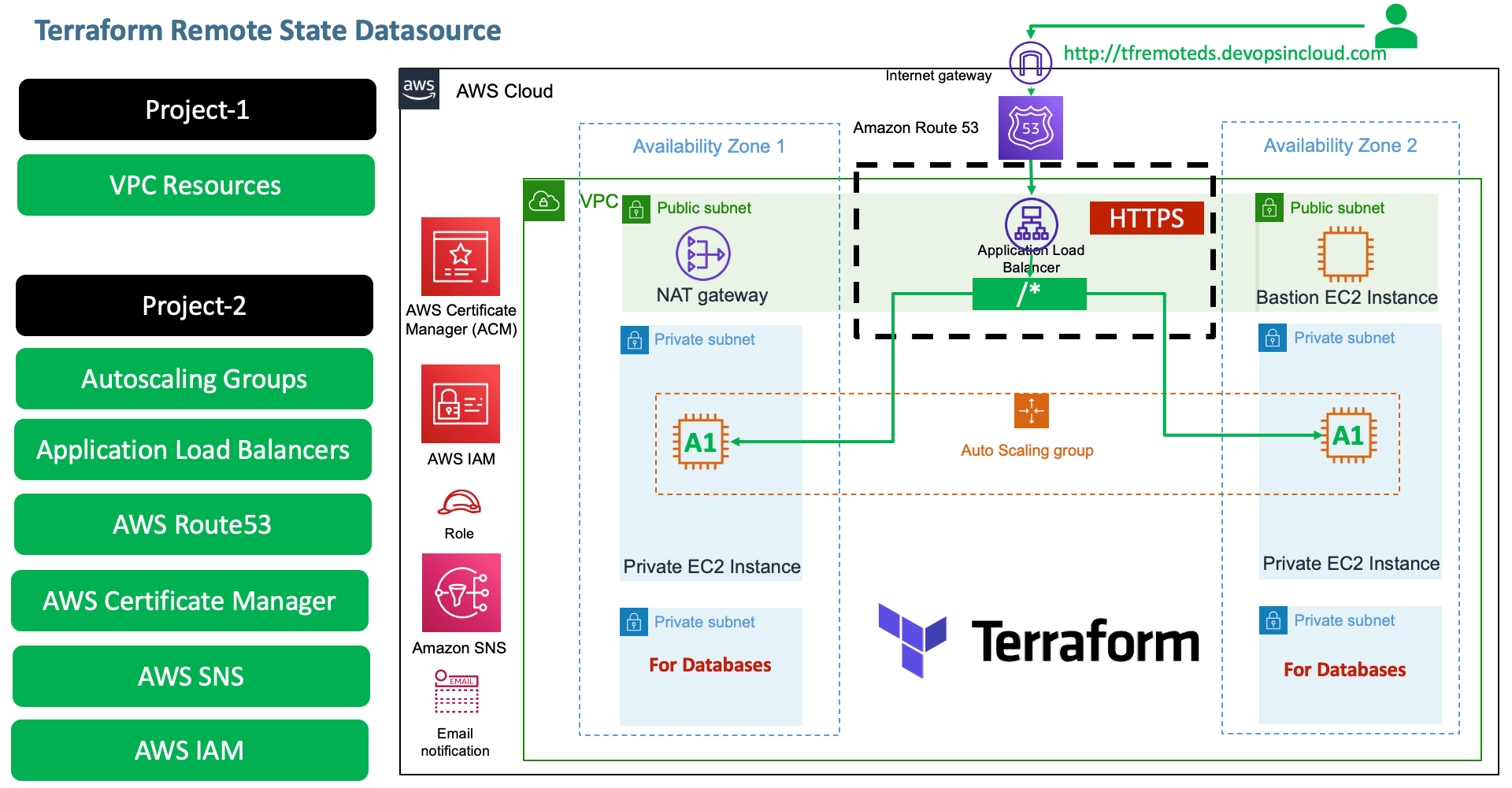

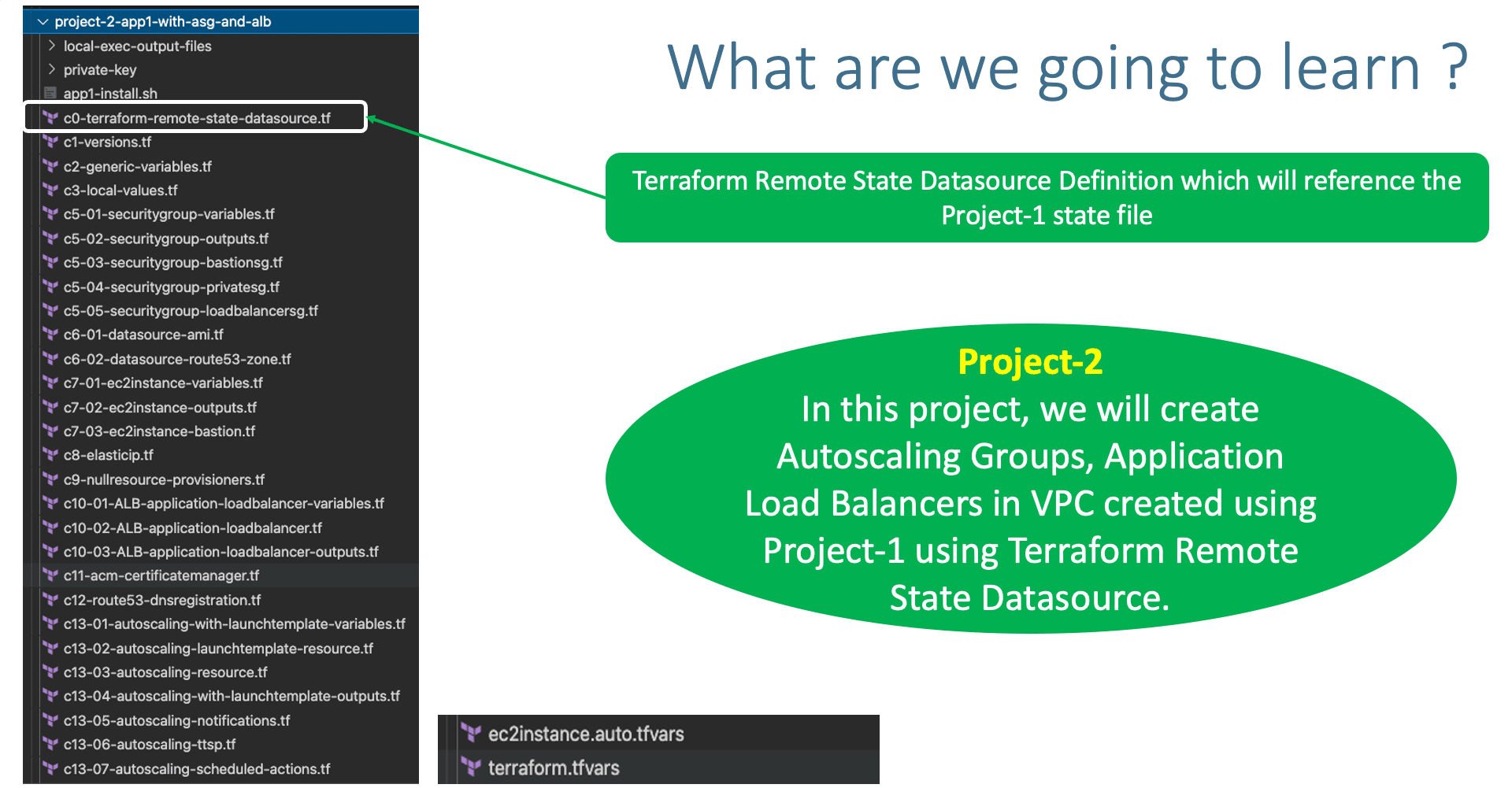

Terraform Remote State Datasource Demo with two projects |

- Understand Terraform Remote State Storage

- Terraform Remote State Storage Demo with two projects

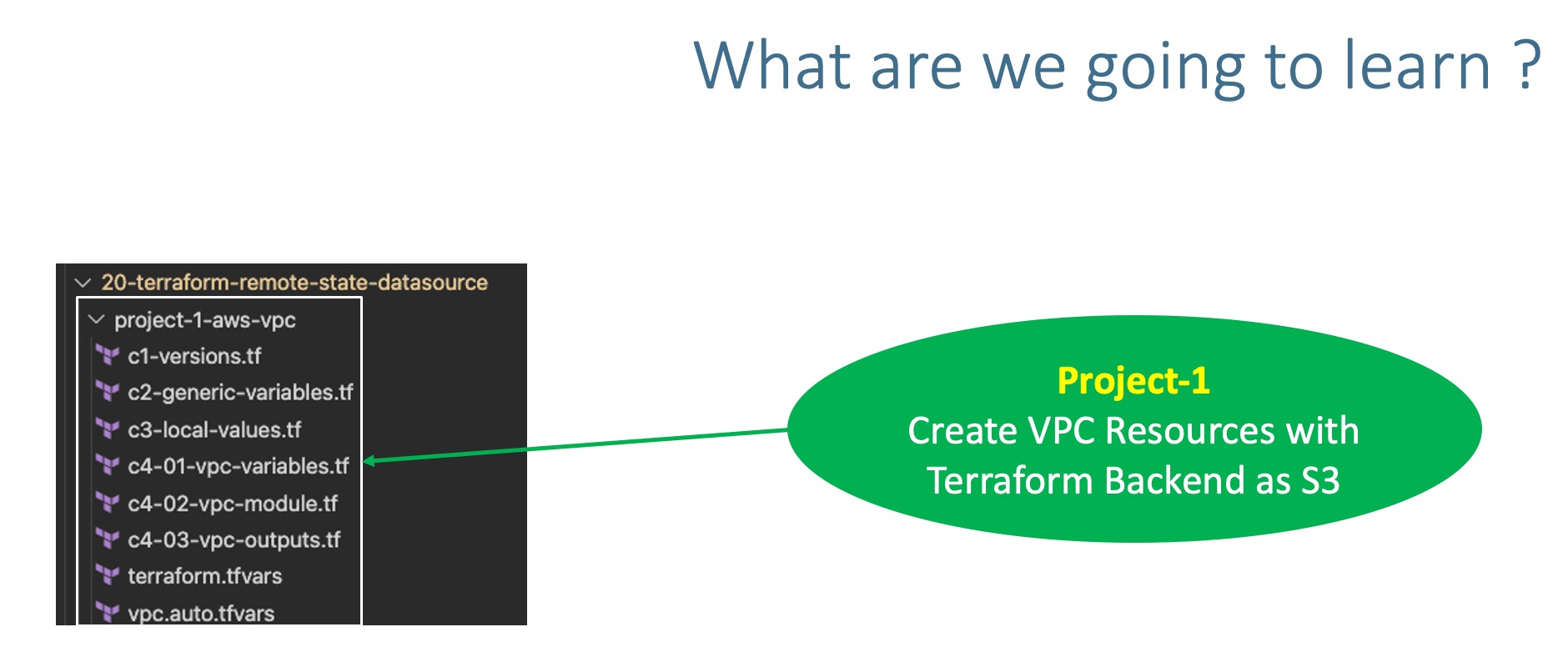

- Copy `project-1-aws-vpc` from `19-Remote-State-Storage-with-AWS-S3-and-DynamoDB`

- Copy all files in `terraform-manifests\*` from section `15-Autoscaling-with-Launch-Templates` to `project-2-app1-with-asg-and-alb`

- Remove the following 4 VPC-related files from Project-2 (`project-2-app1-with-asg-and-alb`)

  - c4-01-vpc-variables.tf
  - c4-02-vpc-module.tf
  - c4-03-vpc-outputs.tf
  - vpc.auto.tfvars
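Once these files are gone, Project-2 has no `module.vpc` of its own, so every VPC value it needs must be exported by Project-1 as a root-level output (`terraform_remote_state` exposes only root module outputs). A minimal sketch of the outputs Project-1 would need, assuming its VPC module block is named `vpc`, as the `module.vpc` references in this demo suggest, and that the module exposes `vpc_id`, `public_subnets`, and `private_subnets`; the descriptions are illustrative:

```hcl
# project-1-aws-vpc: root-level outputs that Project-2 reads through remote state.
# Assumes the VPC module block is named "vpc".
output "vpc_id" {
  description = "ID of the VPC"
  value       = module.vpc.vpc_id
}

output "public_subnets" {
  description = "List of public subnet IDs"
  value       = module.vpc.public_subnets
}

output "private_subnets" {
  description = "List of private subnet IDs"
  value       = module.vpc.private_subnets
}
```

If an output is missing from Project-1, any Project-2 expression that references it through `data.terraform_remote_state.vpc.outputs` fails at plan time, so it is worth checking `terraform output` in Project-1 first.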

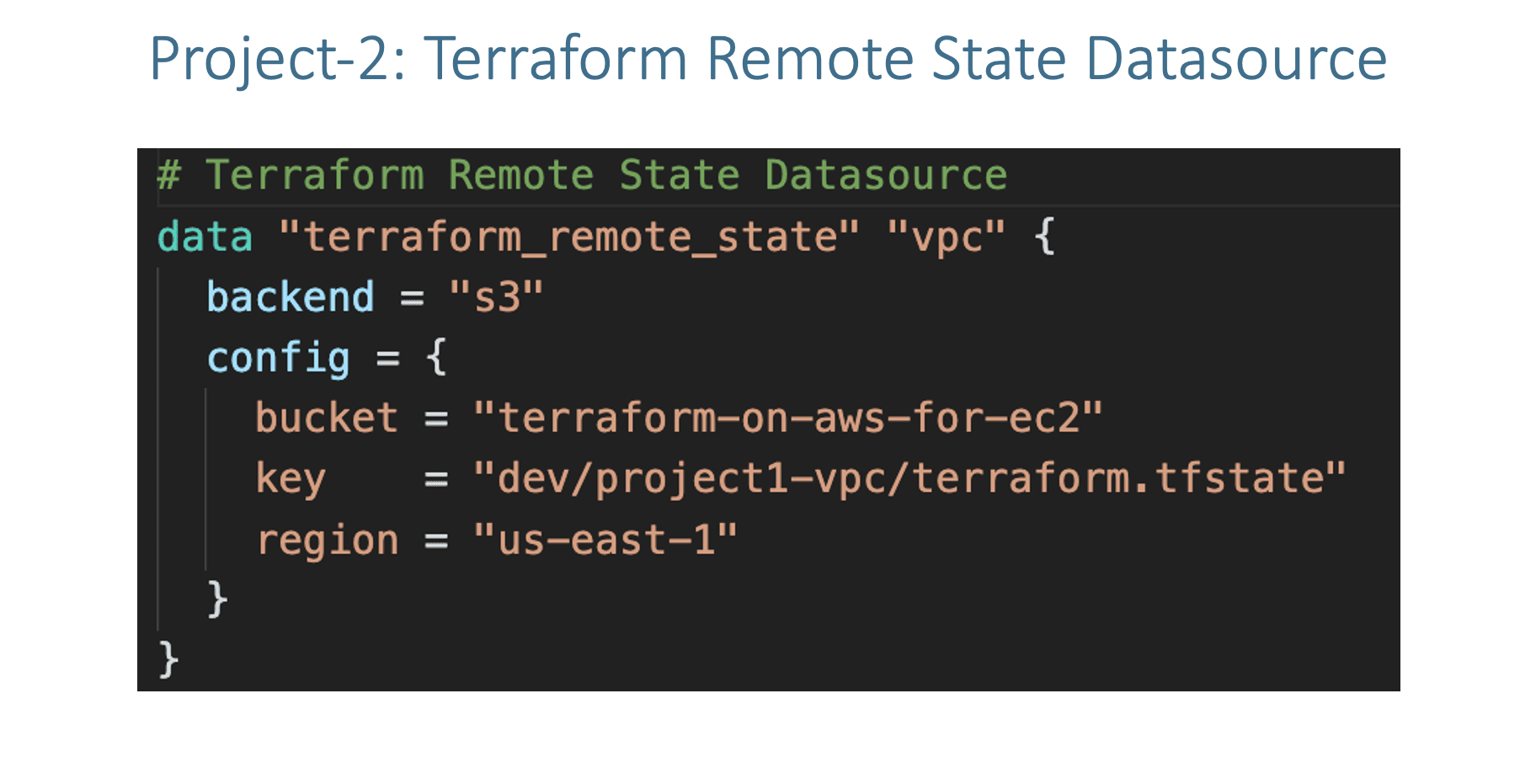

- Create terraform_remote_state Datasource

- In this datasource, we will provide the Terraform State file information of our Project-1-AWS-VPC

```hcl
# Terraform Remote State Datasource
data "terraform_remote_state" "vpc" {
  backend = "s3"
  config = {
    bucket = "terraform-on-aws-for-ec2"
    key    = "dev/project1-vpc/terraform.tfstate"
    region = "us-east-1"
  }
}
```

- c5-03-securitygroup-bastionsg.tf

- c5-04-securitygroup-privatesg.tf

- c5-05-securitygroup-loadbalancersg.tf

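- In each of these security group files, replace the `module.vpc` reference with the remote state output

```hcl
# Before
vpc_id = module.vpc.vpc_id

# After
vpc_id = data.terraform_remote_state.vpc.outputs.vpc_id
```

- c7-03-ec2instance-bastion.tf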

# Before

subnet_id = module.vpc.public_subnets[0]

# After

subnet_id = data.terraform_remote_state.vpc.outputs.public_subnets[0]# Before

depends_on = [ module.ec2_public, module.vpc ]

# After

depends_on = [ module.ec2_public, /*module.vpc*/ ]# Before

vpc_id = module.vpc.vpc_id

subnets = module.vpc.public_subnets

# After

vpc_id = data.terraform_remote_state.vpc.outputs.vpc_id

subnets = data.terraform_remote_state.vpc.outputs.public_subnets# Add DNS name relevant to demo

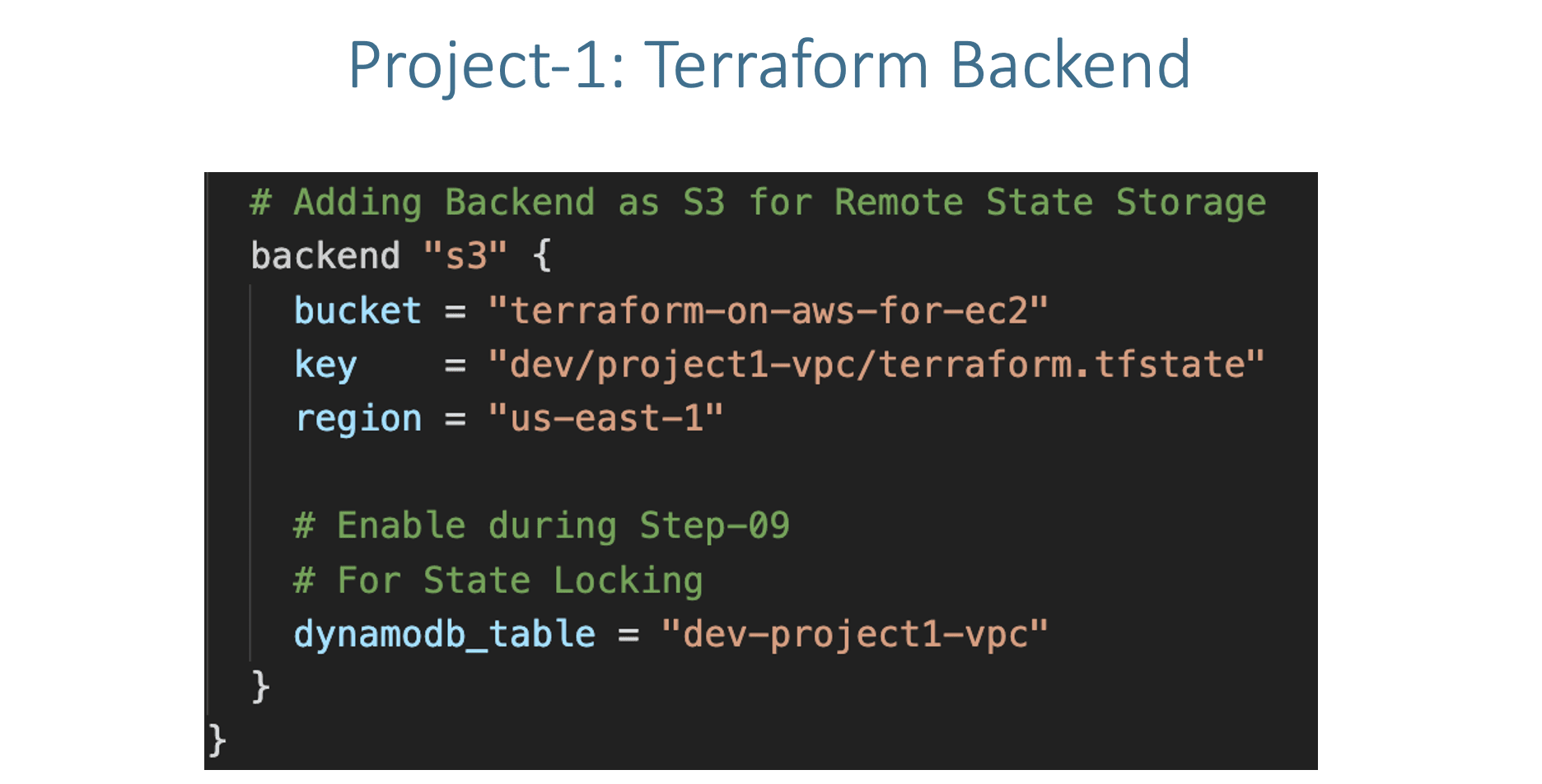

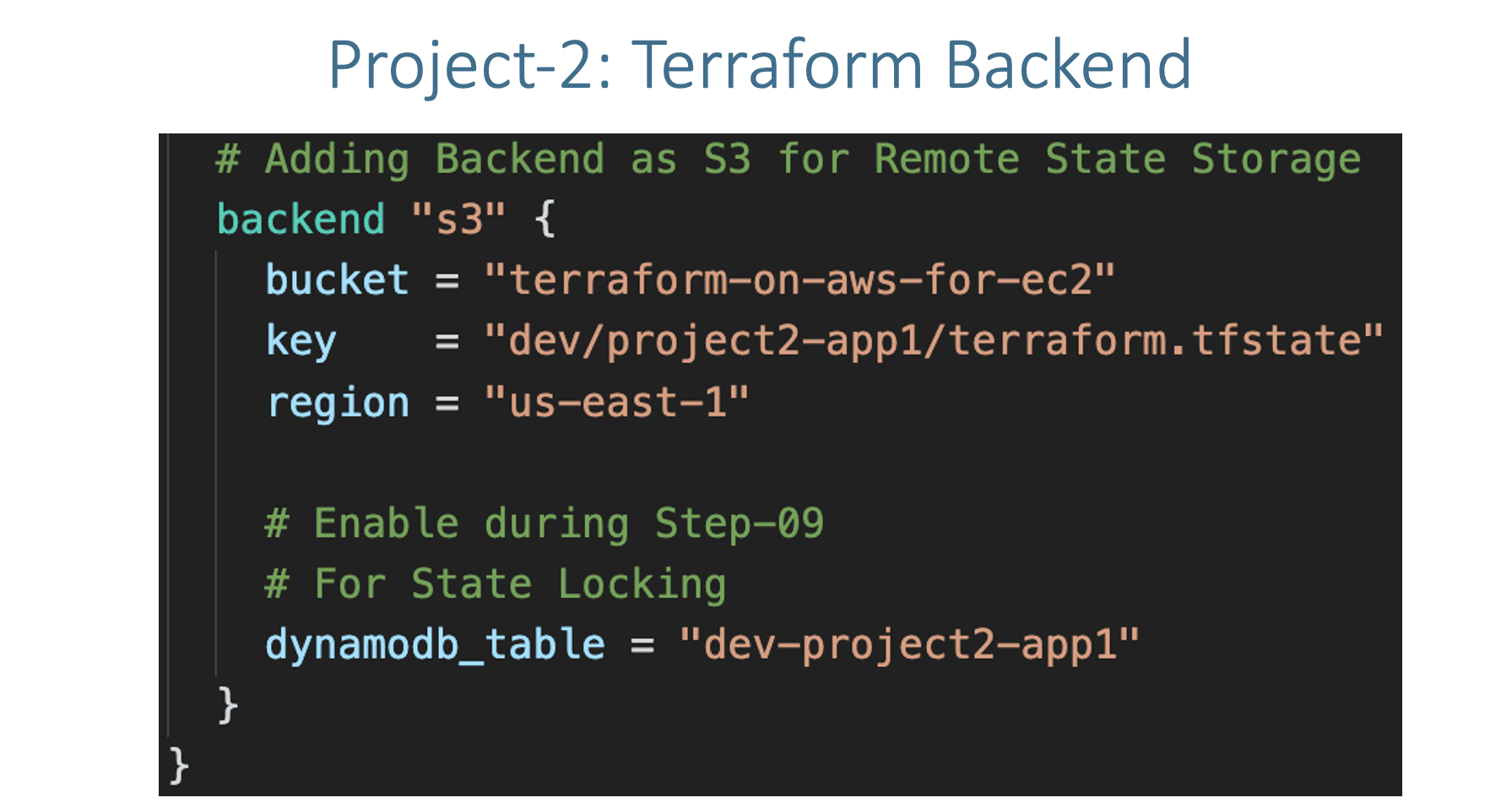

name = "tf-multi-app-projects.devopsincloud.com"- Create S3 Bucket and DynamoDB Table for Remote State Storage

- Leverage the same S3 bucket `terraform-on-aws-for-ec2` with a different folder for the project-2 state file: `dev/project2-app1/terraform.tfstate`

- Also create a new DynamoDB Table for project-2

- Create DynamoDB Table

  - Table Name: dev-project2-app1
  - Partition key (Primary Key): LockID (type: String)
  - Table settings: Use default settings (checked)
  - Click on Create
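The console steps above could also be expressed in Terraform. The following is a sketch under stated assumptions rather than part of the demo: the resource label `project2_lock` is hypothetical, and `PAY_PER_REQUEST` billing is an assumption that may differ from the console's default table settings. Only the table name and the `LockID` string partition key come from the steps above.

```hcl
# Lock table for Project-2 state, mirroring the console steps.
# The resource label "project2_lock" and the billing mode are assumptions.
resource "aws_dynamodb_table" "project2_lock" {
  name         = "dev-project2-app1"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  # The S3 backend expects a string partition key named exactly "LockID".
  attribute {
    name = "LockID"
    type = "S"
  }
}
```

A table defined this way would normally live in a separate configuration from Project-2, because the backend it supports has to exist before Project-2 can run `terraform init`.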

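- Update `c1-versions.tf` with Remote State Backend

```hcl
terraform {
  # The backend block belongs inside the terraform block,
  # alongside the existing version and provider settings.

  # Adding Backend as S3 for Remote State Storage
  backend "s3" {
    bucket = "terraform-on-aws-for-ec2"
    key    = "dev/project2-app1/terraform.tfstate"
    region = "us-east-1"

    # Enable during Step-09
    # For State Locking
    dynamodb_table = "dev-project2-app1"
  }
}
```

- Update `vpc_zone_identifier` of the Auto Scaling Group to use the private subnets from the remote state

```hcl
# Before
vpc_zone_identifier = module.vpc.private_subnets

# After
vpc_zone_identifier = data.terraform_remote_state.vpc.outputs.private_subnets
```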
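The Before/After edits in this demo repeat the same `data.terraform_remote_state.vpc.outputs` prefix in several files. One optional way to cut the repetition, shown here only as a sketch and not as something the demo itself does, is to collect the lookups in a `locals` block. The local names below are illustrative.

```hcl
# Optional: collect the remote state lookups in one place.
# The local names are illustrative and not part of the original demo.
locals {
  vpc_id          = data.terraform_remote_state.vpc.outputs.vpc_id
  public_subnets  = data.terraform_remote_state.vpc.outputs.public_subnets
  private_subnets = data.terraform_remote_state.vpc.outputs.private_subnets
}

# Example use in a resource or module argument:
# vpc_zone_identifier = local.private_subnets
```

With this in place, a change to the data source name or output names in Project-1 only has to be reflected in one file of Project-2.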

- Create Project-1 Resources (VPC)

```shell
# Change Directory
cd project-1-aws-vpc

# Terraform Initialize
terraform init

# Terraform Validate
terraform validate

# Terraform Plan
terraform plan

# Terraform Apply
terraform apply -auto-approve

# Terraform State List
terraform state list
```

- Observations

  1. Verify VPC Resources created
  2. Verify S3 bucket and terraform.tfstate file for project-1

- Create Project-2 Resources (App1 with ASG and ALB)

```shell
# Change Directory
cd project-2-app1-with-asg-and-alb

# Terraform Initialize
terraform init

# Terraform Validate
terraform validate

# Terraform Plan
terraform plan

# Terraform Apply
terraform apply -auto-approve

# Terraform State List
terraform state list
```

- Verify S3 bucket and terraform.tfstate file for project-2

- Verify Security Groups

- Verify EC2 Instances (Bastion Host and ASG related EC2 Instances)

- Verify Application Load Balancer and Target Group

- Verify Autoscaling Group and Launch template

- Access Application and Test

```text
# Access Application
https://tf-multi-app-projects.devopsincloud.com
https://tf-multi-app-projects.devopsincloud.com/app1/index.html
https://tf-multi-app-projects.devopsincloud.com/app1/metadata.html
```

- Clean up Project-2 first, because its resources live inside the VPC that Project-1 manages

```shell
# Change Directory
cd project-2-app1-with-asg-and-alb

# Terraform Destroy
terraform destroy -auto-approve

# Delete files
rm -rf .terraform*
```

- Clean up Project-1 (VPC)

```shell
# Change Directory
cd project-1-aws-vpc

# Terraform Destroy
terraform destroy -auto-approve

# Delete files
rm -rf .terraform*
```