-

Notifications

You must be signed in to change notification settings - Fork 872

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

test all with full-remote-verification shows "Extra" hashes from error in compact #4693

Comments

More precisely, I got one while I was writing up the previous one above, but it was late, so I decided to continue and amend. A problem with a problem-alarm and an unfixed problem is that the alarm goes off annoyingly often, slowing more findings. Issue-fixers, unfortunately, are scarce at the moment, but if anyone wants a reproducible backup corruption, here's one case. To illustrate how the time-series (one per backup) of databases makes this problem more visible, read my Extra: hash history. Seeing that a compact has just run is relatively simple. It gets a section in the job log summary, with relevant details inside. Searching through the job's About --> Show log --> Stored can be slow. Looking in database can be easy but needs tools. |

|

This issue has been mentioned on Duplicati. There might be relevant details there: https://forum.duplicati.com/t/is-there-a-work-arround-for-google-drive-403/14148/8 |

|

I'm leaving the errored backup alone (in case it needs further examination) and switching back to an older one which I think was used in earlier analysis. That one had 10 issues, so hit the reporting limit. Trying to get the rest did a compact and cleared up the problem for now. We'll see if it comes back. I don't consider this issue to be super serious if it ends at I think this is as good a test case as one could reasonably get. At least it was my intent to make it easily reproducible by anyone. Anybody want to try? It doesn't have to be one of the "regulars". Duplicati is always encouraging new people to step up to help. Lucky winner may then see a |

|

The other backup got 22 errors each in a dindex and dblock today. The problem ending looks just like with previous problems, where the Extra: blocks were in both the DeletedBlock and the Block table, then compact ran on a dblock containing the block, which propagated into the new volume erroneously because it was in the Block table. Problem is it was in it for another dblock. |

I think this is enough looking to demonstrate that this problem pattern holds. |

|

This issue has been mentioned on Duplicati. There might be relevant details there: https://forum.duplicati.com/t/test-command-produces-extra-errors/13773/19 |

|

This issue has been mentioned on Duplicati. There might be relevant details there: https://forum.duplicati.com/t/best-way-to-verify-backup-after-each-incremental-run/14925/2 |

|

I can reproduce this in the latest version. The error luckily does not break database recreate, it seems that will choose one of the volumes containing the block. The other is put in the From the comments on the schema, the That is good, but it also means any space computations on recreated databases are incorrect. This should be reproducible by getting a dblock file close to the compact threshold, recreating the database and then deleting enough data to get over the threshold. From what I understand, the recreated database would not run compact, but the original database would. So, to fix this I would suggest two changes:

If 1 is implemented, there should be no situation where a duplicate block is added, so the Edit:To note, this is definitely not an issue in the compact algorithm. I was able to simplify the reproduction of the extra hashes on test command to this:

It seems, if the deleted blocks are in the deleted table, test does not complain. Database recreate (and probably compact also) screw up the deleted block table which is why it complains about the extra block. Nevertheless, the fixes above are still valid, it just shows that compact is not to blame. |

It shows a method not needing a compact. The original steps were quite a bit different, with compact rather than recreate.

I do find it an appealing idea to recycle rather than having one-way trip to waste (and duplicated block at the destination).

Block table has performance help DeletedBlock now lacks, such as many INDEX, and use-block-cache memory to avoid DB. |

|

The reason why test does not always complain about extra blocks is that the extra blocks are in the After a bit more digging, you are correct that the compact error is related, but different: In short, compact assumes there are no duplicate blocks and can break if there are. To prevent this, we could either trust that changes to

The main reason why the block table performs so poorly is because it is so large. I don't think the deleted block table would get so big (although maybe you could check that in a long running backup of yours with a retention policy, mine keep all versions). In addition, these checks would only be performed after the block memory cache or table are checked, so only for new blocks. To test the worst case performance, I could imagine a scenario where large amounts of new files are added every backup, and 25% of those are deleted for every version (just less than the compact threshold). That should give a good idea how much of an impact this has. I am going to make a draft PR until the performance impact is evaluated. If it is too bad we could still add an index as well.

It seems like that option exists, but it is never used anywhere in the code. There is even a Dictionary for the cache, but it is always set to null and never used. |

Handling the maybe-easier strange finding first. It's possible it's an incomplete removal in Remove unsed method and call #3584 where

We have some way-over-recommended-block-count backups in the user community, and the thought of doing linear SQL scans through 25% (default compact threshold) of them on any new block addition sounds slow. You've seemingly looked at the waste computations a little. Anyway, testing is in order and I agree an index could help if it's needed, so on the right track, and we'll see.

Fix database issue where block was not recognized as being used #3801 comes to mind. although I'd have to study both awhile longer. It's just a feeling so far about the perils of block tracking, spread across different tables, and the assumptions one makes. BTW I appreciate you looking at this and making good progress. I did have another test case partly written. Not sure it's needed. |

I would love to have more test cases, also to catch old bugs that might reappear. The problem is that they already run so slow (1h) because it is all I/O bound. |

This prevents duplicated blocks after a block was deleted and re-added (duplicati#4693). Also fix RemoveMissingBlocks in LocalListBrokenFilesDatabase, which did not clear the DeletedBlock table.

Check that blocks which are moved are recorded for the volume to be deleted. If duplicate blocks exist and one is in the DeletedBlock table, this can erase a block entry on an unrelated volume (duplicati#4693).

This prevents duplicated blocks after a block was deleted and re-added (duplicati#4693). Also fix RemoveMissingBlocks in LocalListBrokenFilesDatabase, which did not clear the DeletedBlock table.

Check that blocks which are moved are recorded for the volume to be deleted. If duplicate blocks exist and one is in the DeletedBlock table, this can erase a block entry on an unrelated volume (duplicati#4693).

|

This issue has been mentioned on Duplicati. There might be relevant details there: https://forum.duplicati.com/t/database-recreation-not-really-starting/16948/87 |

Environment info

Description

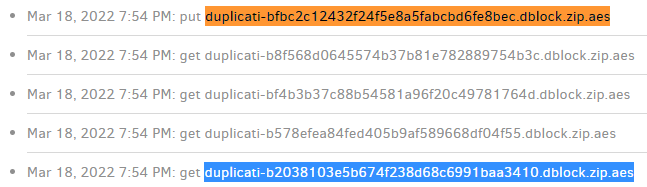

Running the test command over

allfiles with the full-remote-verification option may find errors, displayed as Extra: <hash>This has had quite a few forum reports, has been thought harmless, has bothered some people a lot, but was not understood.

Tracking it down well took a time-series (move/copy) of databases and an

rclone syncwith --backup-dir option to save files.After each backup, I sync, test, and error if exit code is nonzero. I can then trace hash to compact, and with luck can go earlier.

A profiling log is not enough because even with profile-all-database-queries, some details one might want are just not visible.

Steps to reproduce

.zipfiles. Consider having.zipand.sqlitetools around to look.testcommand onallfiles to see if the backup 3 files and backup 4 files coexist, or both claim to have A.txtExtra: VZrq0IJk1XldOQlxjN0Fq9SVcuhP5VWQ7vMaiKCP3/0= from dindex file and dblock file from the colliding backups

No errors

The test sequence has each backup have a large file to make wasted space on demand, plus a small file to stop full deletion.

The A.txt file is special because it comes, goes, and returns. When it goes, then backup 2 happens, it goes into DeletedBlock.

When it returns, then backup 3 happens, it is not recovered from DeletedBlock (should it be?) thus gets a new entry in Block.

Deleting the large files initially in backups 1 and 2 makes wasted space to compact backups 1 and 2 together after backup 4.

Compact checks which blocks are still used, moving those to the new volume. A.txt came in backup 1 and again in backup 3.

Code confusion appears to be that the compact check looks in the Block table, sees the second A.txt, and so also keeps first.

duplicati/Duplicati/Library/Main/Operation/CompactHandler.cs

Lines 158 to 162 in 2f3b0e8

duplicati/Duplicati/Library/Main/Database/LocalDeleteDatabase.cs

Lines 315 to 332 in 2f3b0e8

I don't understand the design theory well enough to define a fix, but it seems like there were two possible points to stop this.

First might be to not let the A.txt block get duplicated from the second appearance of A.txt. Another might be to fix compact.

Once the error gets into the destination files, it can survive database recreate. 2.0.2.1 breaks backups but

testdoesn't detail.Pointing a more recent Duplicati (e.g. 2.0.4.5) at the 2.0.2.1 destination for DB recreate and then test command details "Extra".

I'm still not sure if any further damage comes from this problem though. Now that I look for it, I see that I get these regularly.

Screenshots

Debug log

The text was updated successfully, but these errors were encountered: