Releases: facebook/zstd

Zstandard v1.3.4 - faster everything

The v1.3.4 release of Zstandard is focused on performance, and offers a nice speed boost in most scenarios.

Asynchronous compression by default for zstd CLI

The zstd CLI now performs compression in parallel with I/O operations by default. This requires multi-threading capability (which is also enabled by default).

It doesn't sound like much, but it effectively improves throughput by 20-30%, depending on compression level and underlying I/O performance.

For example, on a Mac OS-X laptop with an Intel Core i7-5557U CPU @ 3.10GHz, running `time zstd enwik9` at default compression level (2) on an SSD gives the following:

| Version | Real time |
|---|---|
| 1.3.3 | 9.2s |
| 1.3.4 --single-thread | 8.8s |
| 1.3.4 (asynchronous) | 7.5s |

This is a nice boost to all scripts using the zstd CLI, typically in network or storage tasks. The effect is even more pronounced at faster compression settings, since the CLI overlaps a proportionally higher share of compression with I/O.

The previous default behavior (blocking single thread) is still available through the `--single-thread` long command. It's also the only mode available when no multi-threading capability is detected.
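An idealized two-stage model shows why overlapping helps. It is sketched below in C and assumes every block takes the same compression time and the same I/O time. It ignores thread synchronization costs. The function names are hypothetical, and the code is not taken from the zstd CLI.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: n blocks, each needing `comp` time units of
 * compression and `io` time units of I/O. Blocking mode runs them back to back. */
static double blocking_time(size_t n, double comp, double io)
{
    assert(comp >= 0.0 && io >= 0.0);
    return (double)n * (comp + io);
}

/* Overlapped mode: compression and I/O form a two-stage pipeline. The first
 * block goes through both stages, then the slower stage sets the pace. */
static double overlapped_time(size_t n, double comp, double io)
{
    assert(comp >= 0.0 && io >= 0.0);
    if (n == 0) return 0.0;
    double slowest = comp > io ? comp : io;
    return comp + io + (double)(n - 1) * slowest;
}
```

Take 10 blocks that each need 2 units to compress and 1 unit of I/O. The blocking model gives 30 units and the overlapped one gives 21. In this model, overlapping can at most hide the shorter of the two stages, so the gain peaks when compression and I/O take comparable time.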

General speed improvements

Some core routines have been refined to provide more speed on newer CPUs, making better use of their out-of-order execution units. This is more noticeable on the decompression side, and even more so with the gcc compiler.

Example on the same platform, running the in-memory benchmark `zstd -b1 silesia.tar`:

| Version | Compression speed | Decompression speed |
|---|---|---|
| 1.3.3 llvm9 | 290 MB/s | 660 MB/s |
| 1.3.4 llvm9 | 304 MB/s | 700 MB/s (+6%) |
| 1.3.3 gcc7 | 280 MB/s | 710 MB/s |
| 1.3.4 gcc7 | 300 MB/s | 890 MB/s (+25%) |
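The percentages in the table are relative gains over 1.3.3. A one-line helper with a hypothetical name reproduces them:

- llvm9 decompression: (700 - 660) / 660 ≈ +6%
- gcc7 decompression: (890 - 710) / 710 ≈ +25%

The same formula gives about +4.8% (llvm9) and +7.1% (gcc7) for compression.

```c
#include <assert.h>

/* Relative change, in percent, between two throughput measurements. */
static double percent_gain(double before_mbs, double after_mbs)
{
    assert(before_mbs > 0.0);
    return (after_mbs - before_mbs) / before_mbs * 100.0;
}
```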

Faster compression levels

So far, compression level 1 has been the fastest one available. Starting with v1.3.4, there will be additional choices. Faster compression levels can be invoked using negative values.

On the command line, the equivalent levels can be triggered using the `--fast[=#]` command.

Negative compression levels sample data more sparsely, and disable Huffman compression of literals, translating into faster decoding speed.

It's possible to create one's own custom fast compression level by using strategy ZSTD_fast, increasing ZSTD_p_targetLength to the desired value, and turning literals compression on or off using ZSTD_p_compressLiterals.
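One way to picture "sampling more sparsely" is to count how many positions a greedy, single-pass match finder hashes on data with no matches at all. The model below makes two assumptions. First, each miss advances the cursor by a fixed `step`, which plays the role of a larger ZSTD_p_targetLength. Second, a term that grows with the distance since the last match is added to that step. The formula and the SEARCH_STRENGTH constant are illustrative assumptions, not zstd's exact code.

```c
#include <assert.h>
#include <stddef.h>

#define SEARCH_STRENGTH 8  /* hypothetical: the skip grows by 1 every 256 bytes without a match */

/* Count the positions hashed while scanning `len` match-free bytes, when each
 * miss advances by `step` plus (distance since last match >> SEARCH_STRENGTH). */
static size_t probes_without_match(size_t len, size_t step)
{
    assert(step > 0);          /* a zero step would never advance */
    const size_t anchor = 0;   /* no match is ever found, so the anchor stays at 0 */
    size_t ip = 0, probes = 0;
    while (ip < len) {
        probes++;                                         /* hash + table lookup at ip */
        ip += ((ip - anchor) >> SEARCH_STRENGTH) + step;  /* miss: skip ahead */
    }
    return probes;
}
```

Under these assumptions, raising the step from 1 to 4 cuts the probes over 100 match-free bytes from 100 to 25. Fewer probed positions means less work per byte, at the cost of missing some matches. That is the speed/ratio trade the negative levels make.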

Performance is generally on par with or better than other high speed algorithms. In the benchmark below (compressing silesia.tar on an Intel Core i7-6700K CPU @ 4.00GHz), it ends up being faster and stronger on all metrics compared with quicklz and snappy at `--fast=2`. It also compares favorably to lzo with `--fast=3`. lz4 still offers a better speed / compression combo, with zstd `--fast=4` coming close.

| Name | Ratio | Compression speed | Decompression speed |
|---|---|---|---|
| zstd 1.3.4 --fast=5 | 1.996 | 770 MB/s | 2060 MB/s |
| lz4 1.8.1 | 2.101 | 750 MB/s | 3700 MB/s |
| zstd 1.3.4 --fast=4 | 2.068 | 720 MB/s | 2000 MB/s |
| zstd 1.3.4 --fast=3 | 2.153 | 675 MB/s | 1930 MB/s |
| lzo1x 2.09 -1 | 2.108 | 640 MB/s | 810 MB/s |
| zstd 1.3.4 --fast=2 | 2.265 | 610 MB/s | 1830 MB/s |
| quicklz 1.5.0 -1 | 2.238 | 540 MB/s | 720 MB/s |
| snappy 1.1.4 | 2.091 | 530 MB/s | 1820 MB/s |
| zstd 1.3.4 --fast=1 | 2.431 | 530 MB/s | 1770 MB/s |
| zstd 1.3.4 -1 | 2.877 | 470 MB/s | 1380 MB/s |
| brotli 1.0.2 -0 | 2.701 | 410 MB/s | 430 MB/s |
| lzf 3.6 -1 | 2.077 | 400 MB/s | 860 MB/s |
| zlib 1.2.11 -1 | 2.743 | 110 MB/s | 400 MB/s |

Applications which were considering Zstandard but were worried about being CPU-bound are now able to shift the load from CPU to bandwidth on a larger scale. They may even vary their choice temporarily depending on local conditions (to deal with a sudden workload surge, for example).

Long Range Mode with Multi-threading

zstd-1.3.2 introduced the long range mode, capable of deduplicating long distance redundancies in a large data stream, a situation typical in backup scenarios for example. But combining it with multi-threading was discouraged, due to inefficient use of memory.

zstd-1.3.4 solves this issue by making the long range match finder run in serial mode, like a pre-processor, before passing its results to the backend compressors (regular zstd). Memory usage is now bounded by the maximum of the long range window size and the memory that zstdmt would require without long range matching. The long range mode runs at about 200 MB/s, depending on the number of cores available. That speed becomes the upper limit, so it's possible to tune the compression level to match the LRM speed.
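The memory claim can be expressed as arithmetic. The sketch contrasts two things:

- a rough model of the earlier behavior, where memory scaled with the window size times the number of threads;
- the new bound.

It ignores per-worker buffers and other overheads, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Rough model of the earlier behavior: every worker held its own long-range window. */
static uint64_t ldm_mt_memory_before(uint64_t window, unsigned workers)
{
    assert(workers == 0 || window <= UINT64_MAX / workers);  /* guard against overflow */
    return window * workers;
}

/* New bound: the long range matcher runs once, serially, in front of the workers.
 * `mt_memory` is what zstdmt would need without long range matching. */
static uint64_t ldm_mt_memory_bound(uint64_t window, uint64_t mt_memory)
{
    return window > mt_memory ? window : mt_memory;
}
```

Take a 2 GB window (`--long=31`) and 16 threads. The earlier model reaches 32 GB for the windows alone. The new bound stays at 2 GB unless zstdmt itself needs more.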

zstd -T0 -5 --long file # autodetect threads, level 5, 128 MB window

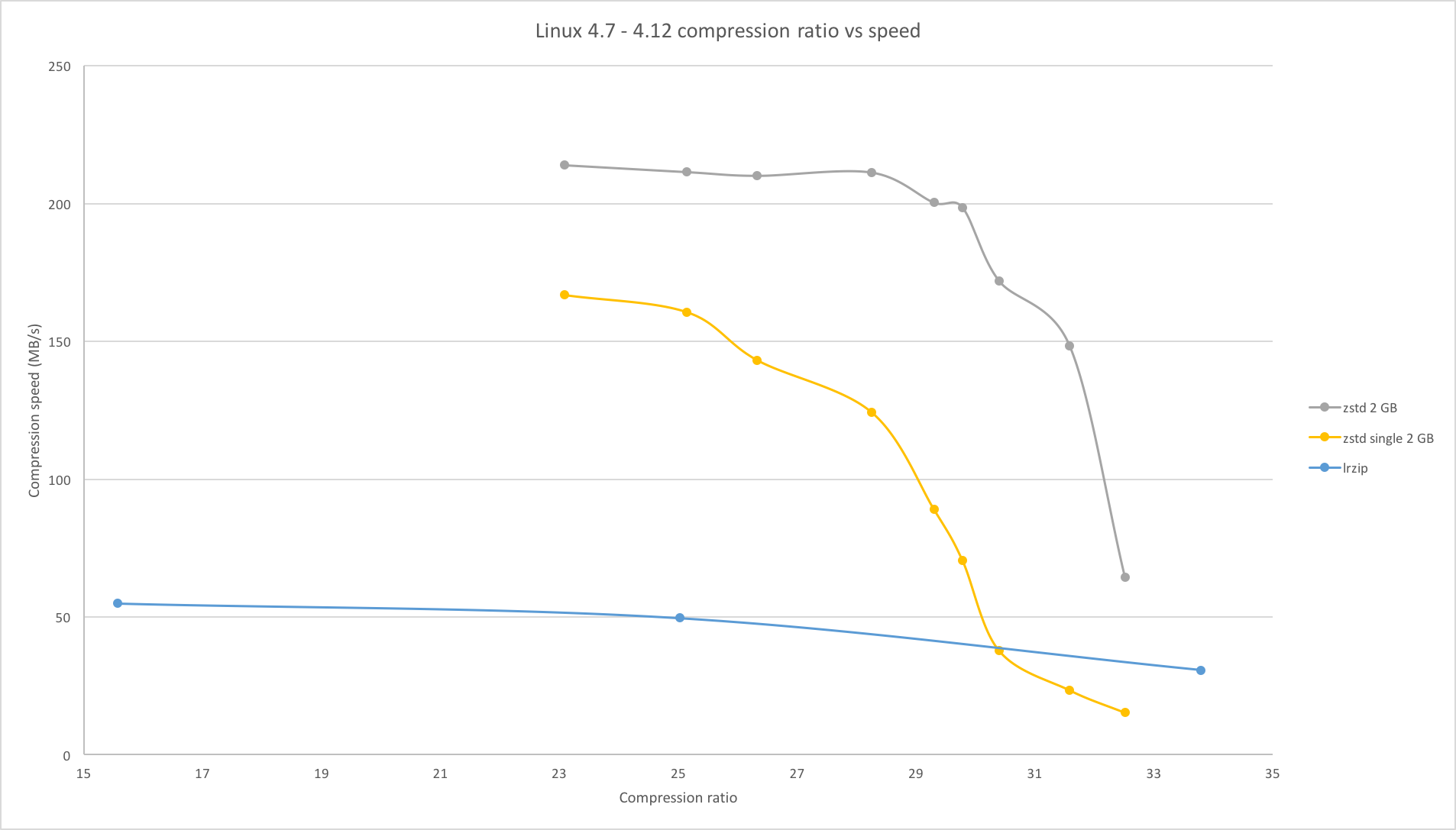

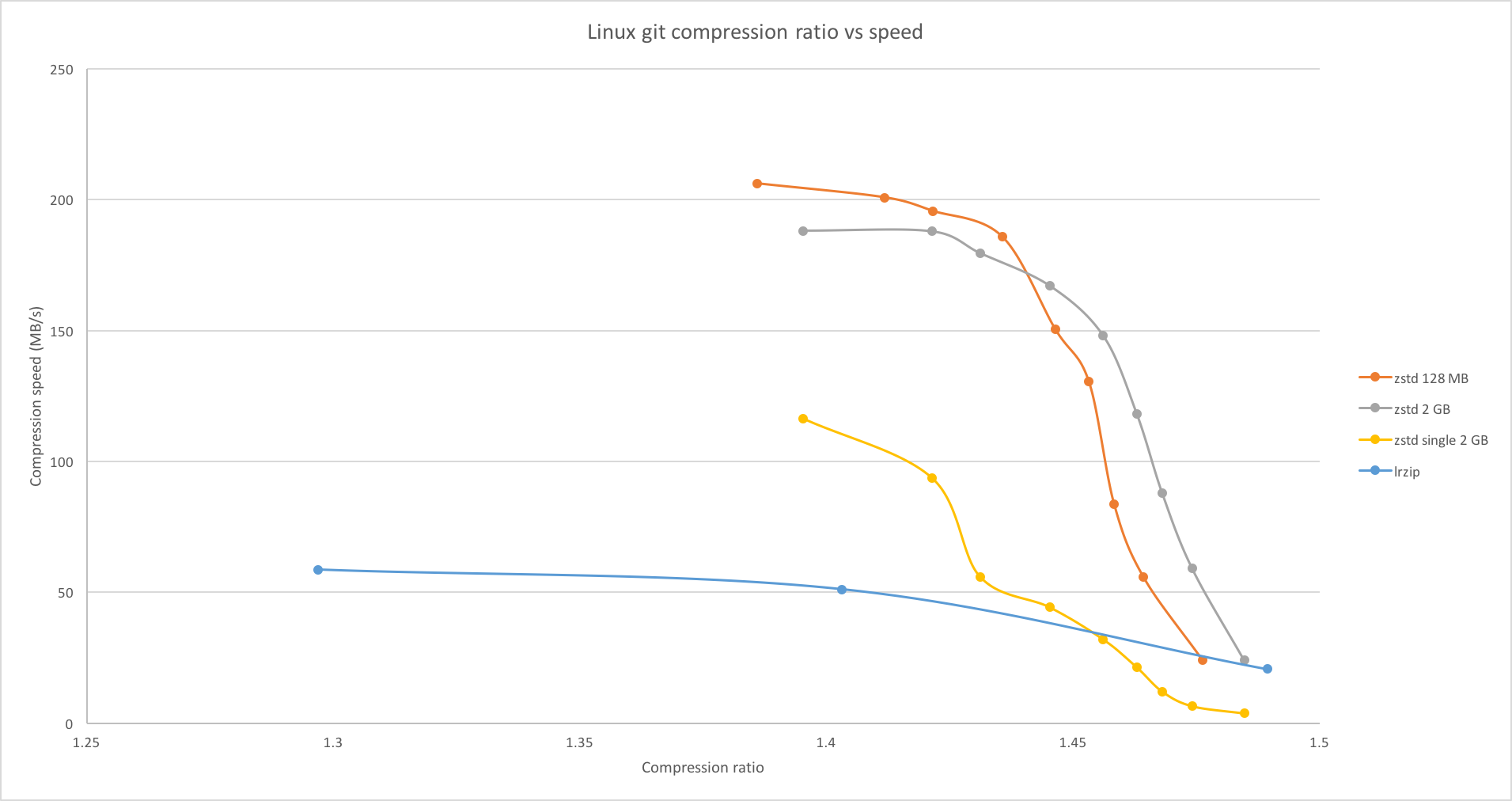

zstd -T16 -10 --long=31 file # 16 threads, level 10, 2 GB windowAs illustration, benchmarks of the two files "Linux 4.7 - 4.12" and "Linux git" from the 1.3.2 release are shown below. All compressors are run with 16 threads, except "zstd single 2 GB". zstd compressors are run with either a 128 MB or 2 GB window size, and lrzip compressor is run with lzo, gzip, and xz backends. The benchmarks were run on a 16 core Sandy Bridge @ 2.2 GHz.

The association of Long Range Mode with multi-threading is pretty compelling for large stream scenarios.

Miscellaneous

This release also brings its usual list of small improvements and bug fixes, as detailed below:

- perf: faster speed (especially decoding speed) on recent cpus (haswell+)
- perf: much better performance associating `--long` with multi-threading, by @terrelln
- perf: better compression at levels 13-15
- cli : asynchronous compression by default, for faster experience (use `--single-thread` for former behavior)
- cli : smoother status report in multi-threading mode
- cli : added command `--fast=#`, for faster compression modes
- cli : fix crash when not overwriting existing files, by Pádraig Brady (@pixelb)
- api : `nbThreads` becomes `nbWorkers` : 1 triggers asynchronous mode
- api : compression levels can be negative, for even more speed
- api : `ZSTD_getFrameProgression()` : get precise progress status of ZSTDMT anytime
- api : ZSTDMT can accept new compression parameters during compression
- api : implemented all advanced dictionary decompression prototypes
- build: improved meson recipe, by Shawn Landden (@shawnl)
- build: VS2017 scripts, by @HaydnTrigg
- misc: all `/contrib` projects fixed
- misc: added `/contrib/docker` script by @gyscos

Zstandard v1.3.3

This is a bugfix release, mostly focused on cleaning up several detrimental corner-case scenarios.

It is nonetheless a recommended upgrade.

Changes Summary

- perf: improved `zstd_opt` strategy (levels 16-19)
- fix : bug #944 : multithreading with shared dictionary and large data, reported by @gsliepen
- cli : change : `-o` can be combined with multiple inputs, by @terrelln
- cli : fix : content size written in header by default
- cli : fix : improved LZ4 format support, by @felixhandte
- cli : new : hidden command `-b -S`, to benchmark multiple files and generate one result per file
- api : change : when setting `pledgedSrcSize`, use `ZSTD_CONTENTSIZE_UNKNOWN` macro value to mean "unknown"
- api : fix : support large skippable frames, by @terrelln
- api : fix : re-using context could result in suboptimal block size in some corner case scenarios
- api : fix : streaming interface was adding a useless 3-bytes null block to small frames
- build: fix : compilation under rhel6 and centos6, reported by @pixelb
- build: added `check` target
- build: improved meson support, by @shawnl

Zstandard v1.3.2 - Long Range Mode

Zstandard Long Range Match Finder

Zstandard has a new long range match finder written by Facebook's intern Stella Lau (@stellamplau), which specializes in finding long matches in the distant past. It integrates seamlessly with the regular compressor, and the output can be decompressed just like any other Zstandard compressed data.

The long range match finder adds minimal overhead to the compressor, works with any compression level, and maintains Zstandard's blazingly fast decompression speed. However, since the window size is larger, it requires more memory for compression and decompression.

To go along with the long range match finder, we've increased the maximum window size to 2 GB. The decompressor only accepts window sizes up to 128 MB by default, but zstd -d --memory=2GB will decompress window sizes up to 2 GB.
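Window sizes are powers of two, given by a window log:

- `--long` alone uses 2^27 bytes (128 MB).
- Window log 31 means 2^31 bytes (2 GB), which exceeds the decoder's default 128 MB limit.

The helpers below express that rule. Their names are hypothetical, and they are not zstd's decoder code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* A window log of N means a window of 2^N bytes. */
static uint64_t window_size(unsigned window_log)
{
    assert(window_log < 64);
    return 1ULL << window_log;
}

/* A decoder refuses frames whose window exceeds its configured limit. */
static bool decoder_accepts(unsigned window_log, uint64_t max_window)
{
    return window_size(window_log) <= max_window;
}
```

`decoder_accepts(31, 1ULL << 27)` is false under the default limit. It becomes true once the limit is raised to 2^31, which is what `zstd -d --memory=2GB` (or `--long=31`) does on the command line.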

Example usage

```sh
# 128 MB window size
zstd -1 --long file
zstd -d file.zst

# 2 GB window size (window log = 31)
zstd -6 --long=31 file
zstd -d --long=31 file.zst
# OR
zstd -d --memory=2GB file.zst
```

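```c
#define ZSTD_STATIC_LINKING_ONLY   // the advanced API is experimental in v1.3.2
#include <zstd.h>

// `in` (ZSTD_inBuffer) and `out` (ZSTD_outBuffer) are assumed to be set up by the caller
ZSTD_CCtx *cctx = ZSTD_createCCtx();
ZSTD_CCtx_setParameter(cctx, ZSTD_p_compressionLevel, 19);
ZSTD_CCtx_setParameter(cctx, ZSTD_p_enableLongDistanceMatching, 1); // Sets windowLog=27
ZSTD_CCtx_setParameter(cctx, ZSTD_p_windowLog, 30); // Optionally increase the window log
ZSTD_compress_generic(cctx, &out, &in, ZSTD_e_end); // returns 0 once the frame is fully flushed
ZSTD_freeCCtx(cctx);

ZSTD_DCtx *dctx = ZSTD_createDCtx();
ZSTD_DCtx_setMaxWindowSize(dctx, 1 << 30); // accept window sizes up to 1 GB (windowLog=30)
ZSTD_decompress_generic(dctx, &out, &in);
ZSTD_freeDCtx(dctx);
```

Benchmarks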

We compared the zstd long range matcher to zstd and lrzip. The benchmarks were run on an AMD Ryzen 1800X (8 cores with 16 threads at 3.6 GHz).

Compressors

- zstd — The regular Zstandard compressor.

- zstd 128 MB — The Zstandard compressor with a 128 MB window size.

- zstd 2 GB — The Zstandard compressor with a 2 GB window size.

- lrzip xz — The lrzip compressor with default options, which uses the xz backend at level 7 with 16 threads.

- lrzip xz single — The lrzip compressor with a single-threaded xz backend at level 7.

- lrzip zstd — The lrzip compressor without a backend, then its output is compressed by zstd (not multithreaded).

Files

- Linux 4.7 - 4.12 — This file consists of the uncompressed tarballs of the six Linux kernel releases from 4.7 to 4.12, concatenated together in order. This file is extremely compressible if the compressor can match against the previous versions well.

- Linux git — This file is a tarball of the linux repo, created by `git clone https://github.com/torvalds/linux && tar -cf linux-git.tar linux/`. This file gets a small benefit from long range matching, and shows how the long range matcher performs when there aren't many matches to find.

Results

Neither zstd nor zstd 128 MB has a large enough window to compress Linux 4.7 - 4.12 well. zstd 2 GB compresses the fastest, and slightly better than lrzip-zstd. lrzip-xz compresses the best, and at a reasonable speed with multithreading enabled. Where zstd shines is decompression ease and speed: since the output is just regular Zstandard compressed data, it is decompressed by the highly optimized decompressor.

The Linux git file shows that the long range matcher maintains good compression and decompression speed, even when there are far fewer long range matches. The decompression speed takes a small hit because it has to look further back to reconstruct the matches.

[Charts: Compression Ratio vs Speed; Decompression Speed]

Implementation details

The long distance match finder was inspired by great work from Con Kolivas' lrzip, which in turn was inspired by Andrew Tridgell's rzip. Also, let's mention Bulat Ziganshin's srep, which unfortunately we have not been able to test (site down), but the discussions on encode.ru proved great sources of inspiration.

Therefore, many similar mechanisms are adopted, such as using a rolling hash, and filling a hash table divided into buckets of entries.

That being said, we also made different choices, with the goal of favoring speed, as can be observed in the benchmarks. The rolling hash formula is selected for computing efficiency. There is a restrictive insertion policy, which only inserts candidates that respect a mask condition. The insertion policy allows us to skip the hash table in the common case that a match isn't present. Confirmation bits are saved, to only check for matches when there is a strong presumption of success. These and a few more details add up to make zstd's long range matcher a speed-oriented implementation.
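To make these mechanisms concrete, here is a toy match finder in C. It combines a polynomial rolling hash, a mask-based insertion policy, a hash table divided into buckets, and checksum confirmation bits. It is a simplified sketch under stated assumptions, not the code in lib/compress/zstd_ldm.c:

- All constants are hypothetical and much smaller than real settings.
- It only reports the first MIN_MATCH-byte match.
- It never extends matches or hands them to a compressor.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical constants, kept tiny so the sketch is easy to test. */
#define MIN_MATCH   8                  /* bytes covered by the rolling hash */
#define HASH_LOG    12                 /* 4096 buckets */
#define BUCKET_SIZE 4                  /* entries per bucket */
#define INSERT_MASK 0x7u               /* about 1 position in 8 qualifies */
#define PRIME       0x100000001B3ULL   /* rolling-hash multiplier */

typedef struct {
    uint32_t pos_plus1;  /* position + 1; 0 marks an empty slot */
    uint32_t checksum;   /* confirmation bits taken from the hash */
} Entry;

typedef struct {
    Entry   slot[1u << HASH_LOG][BUCKET_SIZE];
    uint8_t next[1u << HASH_LOG];      /* round-robin replacement cursor per bucket */
} Table;

/* Scan `src` and report the first repeat of MIN_MATCH bytes.
 * Returns 1 and fills *match_pos / *ref_pos on success, 0 otherwise. */
static int ldm_find_first_match(const uint8_t *src, size_t n,
                                size_t *match_pos, size_t *ref_pos)
{
    static Table t;
    assert(n < UINT32_MAX);            /* positions are stored as 32-bit values */
    memset(&t, 0, sizeof t);
    if (n < MIN_MATCH) return 0;

    uint64_t top = 1;                  /* PRIME^(MIN_MATCH-1): weight of the oldest byte */
    for (int i = 0; i < MIN_MATCH - 1; i++) top *= PRIME;

    uint64_t h = 0;                    /* hash of src[0 .. MIN_MATCH) */
    for (int i = 0; i < MIN_MATCH; i++) h = h * PRIME + src[i] + 1;

    for (size_t p = 0; p + MIN_MATCH <= n; p++) {
        if (p > 0)                     /* roll: drop src[p-1], append src[p+MIN_MATCH-1] */
            h = (h - (uint64_t)(src[p - 1] + 1) * top) * PRIME + src[p + MIN_MATCH - 1] + 1;

        /* Restrictive insertion policy: most positions fail the mask test
         * and never touch the hash table at all. */
        if (((h >> 32) & INSERT_MASK) != INSERT_MASK) continue;

        uint32_t b = (uint32_t)(h >> (64 - HASH_LOG));  /* bucket from the top bits */
        uint32_t checksum = (uint32_t)h;                /* confirmation bits from the low bits */
        for (int k = 0; k < BUCKET_SIZE; k++) {
            const Entry *e = &t.slot[b][k];
            /* Compare bytes only when the checksum already agrees. */
            if (e->pos_plus1 && e->checksum == checksum &&
                memcmp(src + e->pos_plus1 - 1, src + p, MIN_MATCH) == 0) {
                *match_pos = p;
                *ref_pos = e->pos_plus1 - 1;
                return 1;
            }
        }
        Entry *s = &t.slot[b][t.next[b]];               /* insert, evicting round-robin */
        s->pos_plus1 = (uint32_t)p + 1;
        s->checksum = checksum;
        t.next[b] = (uint8_t)((t.next[b] + 1) % BUCKET_SIZE);
    }
    return 0;
}
```

The bucket index, the mask test and the checksum use different bits of the same 64-bit hash. As a result, a byte comparison is attempted only when all three agree, and positions that fail the mask cost just the hash update.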

The biggest difference, though, is that the long range matcher is blended into the regular compressor, producing a single valid zstd frame, indistinguishable from normal operation (except obviously for the larger window size). This makes decompression a single-pass process, preserving its speed property.

More details are available directly in source code, at lib/compress/zstd_ldm.c.

Future work

This is a first implementation, and it still has a few limitations that we plan to lift in the future.

The long range matcher doesn't interact well with multithreading. Due to the way zstd multithreading is currently implemented, memory usage will scale with the window size times the number of threads, which is a problem for large window sizes. We plan on supporting multithreaded long range matching with reasonable memory usage in a future version.

Secondly, Zstandard is currently limited to a 2 GB window size because of the indexer's design. While this is a significant update compared to the previous 128 MB limit, we believe this limitation can be lifted altogether, with some structural changes in the indexer. However, it also means that the window size would become really big, with knock-on consequences on memory usage. So, to reduce this load, we will have to consider memory mapping as a complementary way to reference past content in the uncompressed file.

Detailed list of changes

- new : long range mode, using `--long` command, by Stella Lau (@stellamplau)
- new : ability to generate and decode magicless frames (#591)
- changed : maximum nb of threads reduced to 200, to avoid address space exhaustion in 32-bits mode
- fix : multi-threading compression works with custom allocators, by @terrelln
- fix : a rare compression bug when compression generates very large distances and a bunch of other conditions (only possible at `--ultra -22`)
- fix : 32-bits build can now decode large offsets (levels 21+)
- cli : added LZ4 frame support by default, by Felix Handte (@felixhandte)
- cli : improved `--list` output
- cli : new : can split input file for dictionary training, using command `-B#`
- cli : new : clean operation artefact on Ctrl-C interruption (#854)
- cli : fix : do not change /dev/null permissions when using command `-t` with root access, reported by @Mike155 (#851)
- cli : fix : write file size in header in multiple-files mode
- api : added macro `ZSTD_COMPRESSBOUND()` for static allocation
- api : experimental : new advanced decompression API
- api : fix : `sizeof_CCtx()` used to over-estimate
- build: fix : compilation works with `-mbmi` (#868)
- build: fix : no-multithread variant compiles without `pool.c` dependency, reported by Mitchell Blank Jr (@mitchblank) (#819)
- build: better compatibility with reproducible builds, by Bernhard M. Wiedemann (@bmwiedemann) (#818)
- example : added `streaming_memory_usage`
- license : changed /examples license to BSD + GPLv2
- license : fix a few header files to reflect new license (#825)
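ZSTD_COMPRESSBOUND() exists so that the worst-case compressed size can be computed at compile time, for example to size a static buffer. To keep the example self-contained, the sketch below copies the formula as it appeared in zstd.h around this release into a macro with the hypothetical name MY_COMPRESSBOUND. Treat the formula as an assumption, and in real code use ZSTD_COMPRESSBOUND from the header you actually build with.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed copy of the ZSTD_COMPRESSBOUND() formula: 1/256 overhead, plus a
 * margin (64 bytes down to 0) for inputs smaller than 128 KB. */
#define MY_COMPRESSBOUND(srcSize) \
    ((srcSize) + ((srcSize) >> 8) + \
     (((srcSize) < (128 << 10)) ? (((128 << 10) - (srcSize)) >> 11) : 0))

#define INPUT_CAPACITY 1000  /* hypothetical fixed maximum input size */

/* A constant expression, so the buffer can be allocated statically. */
static unsigned char compressed_buffer[MY_COMPRESSBOUND(INPUT_CAPACITY)];

/* The same formula at run time, e.g. to validate a caller-provided buffer. */
static int buffer_is_large_enough(size_t dst_capacity, size_t src_size)
{
    assert(src_size <= INPUT_CAPACITY);
    return dst_capacity >= MY_COMPRESSBOUND(src_size);
}
```

For a 1000-byte input the bound is 1000 + 3 + 63 = 1066 bytes.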

Warning

bug #944 : v1.3.2 is known to produce corrupted data in the following scenario, requiring all these conditions simultaneously:

- compression using multi-threading

- with a dictionary

- on "large enough" files (several MB, exact threshold depends on compression level)

Note that dictionaries are meant to help compression of small files (a few KB), while multi-threading is only useful for large files, so it's pretty rare to need both at the same time. Nonetheless, if your application happens to trigger this situation, it's recommended to skip v1.3.2 in favor of a newer version. At the time of this warning, the dev branch is known to work properly for the same scenario.

Zstandard Fuzz Corpora

Zstandard v1.3.1

- New license : BSD + GPLv2

- perf: substantially decreased memory usage in Multi-threading mode, thanks to reports by Tino Reichardt (@mcmilk)

- perf: Multi-threading supports up to 256 threads. Cap at 256 when more are requested (#760)

- cli : improved and fixed `--list` command, by @ib (#772)
- cli : command `-vV` lists supported formats, by @ib (#771)
- build : fixed binary variants, reported by @svenha (#788)
- build : fix Visual compilation for non x86/x64 targets, reported by @GregSlazinski (#718)
- API exp : breaking change : `ZSTD_getframeHeader()` provides more information
- API exp : breaking change : pinned down values of error codes
- doc : fixed huffman example, by Ulrich Kunitz (@ulikunitz)
- new : `contrib/adaptive-compression`, I/O driven compression level, by Paul Cruz (@paulcruz74)
- new : `contrib/long_distance_matching`, statistics tool by Stella Lau (@stellamplau)
- updated : `contrib/linux-kernel`, by Nick Terrell (@terrelln)

Zstandard v1.3.0

cli : new : --list command, by @paulcruz74

cli : changed : xz/lzma support enabled by default

cli : changed : -t * continue processing list after a decompression error

API : added : ZSTD_versionString()

API : promoted to stable status : ZSTD_getFrameContentSize(), by @iburinoc

API exp : new advanced API : ZSTD_compress_generic(), ZSTD_CCtx_setParameter()

API exp : new : API for static or external allocation : ZSTD_initStatic?Ctx()

API exp : added : ZSTD_decompressBegin_usingDDict(), requested by @Crazee (#700)

API exp : clarified memory estimation / measurement functions.

API exp : changed : strongest strategy renamed ZSTD_btultra, fastest strategy ZSTD_fast set to 1

Improved : reduced stack memory usage, by @terrelln and @stellamplau

tools : decodecorpus can generate random dictionary-compressed samples, by @paulcruz74

new : contrib/seekable_format, demo and API, by @iburinoc

changed : contrib/linux-kernel, updated version and license, by @terrelln

Zstandard v1.2.0

Major features :

- Multithreading is enabled by default in the cli. Use `-T#` to select nb of threads. To disable multithreading, build target `zstd-nomt` or compile with `HAVE_THREAD=0`.
- New dictionary builder named "cover" with improved quality (produces better compression ratio), by @terrelln. Legacy dictionary builder remains available, using `--train-legacy` command.

Other changes :

cli : new : command -T0 means "detect and use nb of cores", by @iburinoc

cli : new : zstdmt symlink hardwired to zstd -T0

cli : new : command --threads=# (#671)

cli : new : commands --train-cover and --train-legacy, to select dictionary algorithm and parameters

cli : experimental targets zstd4 and xzstd4, supporting lz4 format, by @iburinoc

cli : fix : does not output compressed data on console

cli : fix : ignore symbolic links unless --force specified

API : breaking change : ZSTD_createCDict_advanced() uses compressionParameters as argument

API : added : prototypes ZSTD_*_usingCDict_advanced(), for direct control over frameParameters.

API : improved: ZSTDMT_compressCCtx() reduced memory usage

API : fix : ZSTDMT_compressCCtx() now provides srcSize in header (#634)

API : fix : src size stored in frame header is controlled at end of frame

API : fix : enforced consistent rules for pledgedSrcSize==0 (#641)

API : fix : error code GENERIC replaced by dstSizeTooSmall when appropriate

build: improved cmake script, by @Majlen

build: enabled Multi-threading support for *BSD, by @bapt

tools: updated paramgrill. Command -O# provides best parameters for sample and speed target.

new : contrib/linux-kernel version, by @terrelln

Zstandard v1.1.4

cli : new : can compress in *.gz format, using --format=gzip command, by @inikep

cli : new : advanced benchmark command --priority=rt

cli : fix : write on sparse-enabled file systems in 32-bits mode, by @ds77

cli : fix : --rm remains silent when input is stdin

cli : experimental xzstd target, with support for xz/lzma decoding, by @inikep

speed : improved decompression speed in streaming mode for single pass scenarios (+5%)

memory : DDict (decompression dictionary) memory usage down from 150 KB to 20 KB

arch : 32-bits variant able to generate and decode very long matches (>32 MB), by @iburinoc

API : new : ZSTD_findFrameCompressedSize(), ZSTD_getFrameContentSize(), ZSTD_findDecompressedSize()

API : changed : dropped support of legacy versions <= v0.3 (can be selected by modifying ZSTD_LEGACY_SUPPORT value)

build: new: meson build system in contrib/meson, by @dimkr

build: improved cmake script, by @Majlen

build: added -Wformat-security flag, as recommended by @pixelb

doc : new : doc/educational_decoder, by @iburinoc

Warning : the experimental target zstdmt contained in this release has an issue when using multiple threads on large enough files, which makes it generate a buggy header. While fixing the header after the fact is possible, it's much better to avoid the issue. This can be done by using zstdmt in pipe mode:

```sh
cat file | zstdmt -T2 -o file.zst
```

This issue is fixed in current dev branch, so alternatively, create zstdmt from dev branch.

Note : pre-compiled Windows binaries attached below contain the fix for zstdmt

Zstandard v1.1.3

cli : zstd can decompress .gz files (can be disabled with make zstd-nogz or make HAVE_ZLIB=0)

cli : new : experimental target make zstdmt, with multi-threading support

cli : new : improved dictionary builder "cover" (experimental), by @terrelln, based on previous work by @ot

cli : new : advanced commands for detailed parameters, by @inikep

cli : fix zstdless on Mac OS-X, by @apjanke

cli : fix #232 "compress non-files"

API : new : lib/compress/ZSTDMT_compress.h multithreading API (experimental)

API : new : ZSTD_create?Dict_byReference(), requested by Bartosz Taudul

API : new : ZDICT_finalizeDictionary()

API : fix : ZSTD_initCStream_usingCDict() properly writes dictID into frame header, by @indygreg (#511)

API : fix : all symbols properly exposed in libzstd, by @terrelln

build : support for Solaris target, by @inikep

doc : clarified specification, by @iburinoc

Sample set for reference dictionary compression benchmark

```sh
# Download and expand sample set
wget https://github.com/facebook/zstd/releases/download/v1.1.3/github_users_sample_set.tar.zst
zstd -d github_users_sample_set.tar.zst
tar xf github_users_sample_set.tar

# benchmark sample set with and without dictionary compression
zstd -b1 -r github
zstd --train -r github
zstd -b1 -r github -D dictionary

# rebuild sample set archive
tar cf github_users_sample_set.tar github
zstd -f --ultra -22 github_users_sample_set.tar
```

Zstandard v1.1.2

new : programs/gzstd, combined *.gz and *.zst decoder, by @inikep

new : zstdless, less on compressed *.zst files

new : zstdgrep, grep on compressed *.zst files

fixed : zstdcat

cli : new : preserve file attributes

cli : fixed : status displays total amount decoded, even for file consisting of multiple frames (like pzstd)

lib : improved : faster decompression speed at ultra compression settings and 32-bits mode

lib : changed : only public ZSTD_ symbols are now exposed in dynamic library

lib : changed : reduced usage of stack memory

lib : fixed : several corner case bugs, by @terrelln

API : streaming : decompression : changed : automatic implicit reset when chain-decoding new frames without init

API : experimental : added : dictID retrieval functions, and ZSTD_initCStream_srcSize()

API : zbuff : changed : prototypes now generate deprecation warnings

zlib_wrapper : added support for gz* functions, by @inikep

install : better compatibility with FreeBSD, by @DimitryAndric

source tree : changed : zbuff source files moved to lib/deprecated