Need basic help with installation - need more details #34


Hello Brian,

Here's how I got jlesage's CrashPlan PRO Docker image up and running on my Synology and adopted the existing backup set I had created with Patters' CrashPlan package.

(EDIT: This reflects my personal setup: a Synology DS1515+ running DSM 6.1.5 with Docker package version 17.05.0-0367, and a single volume configured with Synology's SHR-2 RAID. It is meant as a step-by-step example of how I deployed the Docker image on my system. Your Synology setup may differ from mine, and your deployment of the Docker image may differ as a result.)

From Synology DSM:

From PuTTY:

From Synology DSM:

From your Web Browser:

I hope this helps you get up and running!
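For readers wondering what the PuTTY step boils down to, here is a minimal sketch of creating the container from an SSH session on the NAS. It is not the exact command used above (those steps are not reproduced here). It assumes the container is named crashplan-pro as in the README quick start, that the config folder is /volume1/docker/appdata/crashplan-pro (a hypothetical path), and that /volume1 is mounted at the same path inside the container so an adopted backup set still finds its files. With DRY_RUN=1 it only prints the command.

```shell
#!/bin/sh
# Sketch only: create the CrashPlan PRO container on a Synology over SSH.
# Assumptions (not from the thread): container name crashplan-pro, config folder
# /volume1/docker/appdata/crashplan-pro, and /volume1 mounted at the same path
# inside the container so an adopted backup set still finds its files.
run_crashplan() {
  mode="${1:-ro}"   # "ro" is enough for backups; "rw" is needed to restore in place
  set -- docker run -d \
    --name=crashplan-pro \
    -p 5800:5800 \
    -v /volume1/docker/appdata/crashplan-pro:/config:rw \
    -v "/volume1:/volume1:$mode" \
    jlesage/crashplan-pro
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # dry run: print the command instead of executing it
  else
    "$@"
  fi
}
```

Source the file in a root shell (for example after sudo -i), check the output of DRY_RUN=1 run_crashplan, then call run_crashplan (or run_crashplan rw if you also want to restore to the original location).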

You can also look at Synology's documentation:

Thank you for the step-by-step instructions. I'm new to Docker too. Everything installed and is working fine :) Cheers!

But I have a problem: when I restore to the original folder, nothing is restored. Is this something to do with the folder structure? Many thanks.

I haven't yet had to restore from within the Docker container, but I'm fairly certain the restore doesn't work because Volume1 is mapped as read-only:

If you change the

@jlesage: is that correct? Would the user need to remove the Docker container and re-create it with the read/write flag?

Yes, that's correct, @excalibr18. Re-creating the container with read/write (R/W) permission on the volume would allow the restore to work properly.
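Docker cannot change the mounts of an existing container, which is why it has to be re-created. Before doing that, you can confirm which mounts are read-only. The sketch below assumes the container is named crashplan-pro; it reads "destination RW" pairs produced by a docker inspect template and prints the container paths that are mounted read-only.

```shell
#!/bin/sh
# Sketch: print the container paths that are mounted read-only.
# Expects "destination rw" pairs on stdin (paths without spaces), for example from:
#   docker inspect --format '{{range .Mounts}}{{.Destination}} {{.RW}}{{println}}{{end}}' crashplan-pro
readonly_mounts() {
  while read -r dest rw; do
    [ -n "$dest" ] || continue        # skip blank lines
    if [ "$rw" = false ]; then
      printf '%s\n' "$dest"
    fi
  done
  return 0
}
```

If /volume1 shows up, stop and remove the container (docker stop crashplan-pro, then docker rm crashplan-pro) and create it again with the same options but :rw on that -v line. As long as /config is mapped to a folder on the NAS, CrashPlan's settings survive the re-creation.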

Perfect! Thanks, guys!

Is there a way to map other external drives? Most of my CrashPlan files are on drives other than the Volume 1 internal disks. Thanks in advance for any help!

I am having problems connecting to the web service. After running the command and going to the web page, I get "Code42 cannot connect to its background service. Retry". If I create the container via the UI it works, but then I can't create the storage volume mapping to /volume1, where all my data is contained in different shares. Any guidance would be appreciated.

Also, as a note: I used the default instructions, and it moved all my files on CrashPlan to Deleted, so be careful when setting $HOME:/storage as your location if you already have a backup set. I am not sure whether I will have to upload all 2.1 TB again, or whether it will de-duplicate and mark the files as not deleted.

@Slartybart, yes, it's possible. You just need to map additional folders to the container (using the

@jedinite13, did you follow the instructions at https://github.com/jlesage/docker-crashplan-pro#taking-over-existing-backup?

@Slartybart The Synology maps USB external drives as

If you're looking to mount the entire USB external drive (in the same way the Volume1 internal disks are mapped), then you can use
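As a sketch of what mapping extra folders looks like: each additional drive or share is one more -v option, and mounting it at the same path inside the container keeps it easy to find in CrashPlan's file browser. The helper below is hypothetical, and /volumeUSB1/usbshare is only our assumption about where DSM mounts the first USB drive; check the actual path on your NAS first (for example with ls /).

```shell
#!/bin/sh
# Hypothetical helper: emit one "-v host:container:ro" option per folder,
# mounting each folder at the same path inside the container.
# Paths must not contain spaces, because the output is word-split when used.
volume_flags() {
  for path in "$@"; do
    printf '%s\n' "-v $path:$path:ro"
  done
}

# Example (the USB mount point is an assumption, verify it on your NAS):
#   docker run -d --name=crashplan-pro -p 5800:5800 \
#     -v /volume1/docker/appdata/crashplan-pro:/config:rw \
#     $(volume_flags /volume1 /volumeUSB1/usbshare) \
#     jlesage/crashplan-pro
```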

@jedinite13 The Synology UI won't allow you to map Volume1, which is why you need to do it via the command line. As for not being able to connect to the background service, try doing this:

That seems to have worked for other users (see https://github.com/jlesage/docker-crashplan-pro/issues/14).

One other thing to be aware of: if you are experiencing the inotify max watch limit issue on the Synology, please refer to this solution: https://github.com/jlesage/docker-crashplan-pro/issues/23

Also be aware that you'll have to repeat setting the max watch limit in
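The linked issue covers the actual fix. As a sketch of the "repeat it" part, keeping the setting in a sysctl configuration file makes it easy to reapply. This assumes the file is /etc/sysctl.conf (our assumption; the thread does not name it here), and 1048576 is only an example value, not a recommendation from the thread. The function replaces any existing fs.inotify.max_user_watches line instead of appending duplicates, so it is safe to rerun.

```shell
#!/bin/sh
# Sketch: make FILE contain exactly one fs.inotify.max_user_watches=VALUE line.
# Assumed usage on DSM (as root):
#   set_max_watches /etc/sysctl.conf 1048576
#   sysctl -w fs.inotify.max_user_watches=1048576   # apply now, without a reboot
set_max_watches() {
  file="$1"
  value="$2"
  key="fs.inotify.max_user_watches"
  tmp="$(mktemp)" || return 1
  touch "$file"
  # Keep every other line and drop any previous setting of the key...
  grep -v "^$key=" "$file" > "$tmp" || true
  # ...then append the new value exactly once.
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file"    # overwrite in place, keeping the file's owner and mode
  rm -f "$tmp"
}
```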

@excalibr18 & @jlesage Totally AWESOME, folks! Thank you so much! I just followed the instructions instead of fussing with the client app on my Mac. I have the following questions... kind of dumb ones, I think ;-)

Once again, thank you!

Correct. I had the local client installed on my Win10 machine and after going with jlesage's docker solution, I no longer need to use that machine. I just need any machine on my local network with a web browser to access the docker container's web GUI.

I would assume this is correct; however, when I used Patters' package, I always opted to use the system Java, not Patters' internal one.

Assuming you upgraded your CrashPlan subscription to the Pro/Small Business plan, and jlesage's Docker solution is confirmed to be working for you (i.e. you have adopted your backup set and it is working correctly), then I don't see a need to keep the CrashPlan Home (Green) package.

Correct. With this Docker solution, you don't need the packages from Patters.

@excalibr18 Thanks for responding so quickly to my questions. Another question: my migration worked fine per the instructions above, and according to the web GUI the backup is now running. However, I can't seem to browse the backed-up files and folders using "Manage Files". I can see that volume1 is listed, but when I browse under it, I can't find the file structure I set up for backup. Any tips? Thank you in advance!

|

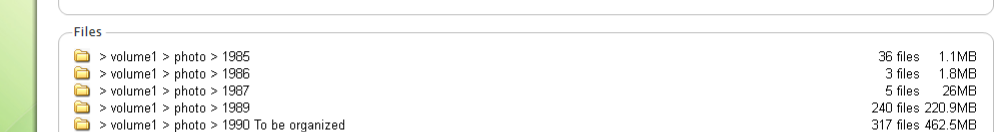

To make sure I understand you correctly, are you saying that when you click "Manage Files" in the GUI, there's nothing listed under volume1? Or are there folders listed, but you just can't find the folders you are backing up? Did you map volume1 using |

What were the folder paths for your backup when you were using Patters' package? If you scroll down in that window, do you see any folders with a check mark next to them?

The web GUI shows that the backup is running, and that the new files that hadn't been backed up in the last 25 days (yes, I put off this upgrade that long after the backup stopped) are being backed up. I can't see the individual files being backed up, though; I can only tell from the size of the remaining backup.

Ah, LoL, I didn't notice the scroll bar on the right side of the GUI and thought the folders in what I sent you were the only ones :-) I do see all the other folders when I scroll down. Thanks a million for your help, and sorry for bugging you with this silly thing and taking up your time. Best,

No worries. Glad it's all sorted out and working for you! Unfortunately, the new GUI for CrashPlan version 6 is less intuitive than the previous one.

Thanks for the installation steps. I've just transitioned to the Docker container from Patters' solution. Everything went well and the container is running CrashPlan PRO; however, I can't access the web GUI. I've followed this step:

Any suggestions would be appreciated.

Which parameters did you give to the

@lenny81: Are you accessing the web GUI of the Docker container from the same LOCAL network as the Synology?

I'm trying to access it from the same network as the Synology. @jlesage: No idea. I'm pretty clueless with all this. I can't remember which guide I used to set it up, or how to check whether I mapped the port... how do I check this?
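One way to check is docker port, which lists the ports a container publishes. The helper below is a sketch that picks the host port mapped to the web GUI's port 5800 out of that output; it assumes the container is named crashplan-pro. If it prints nothing (and fails), port 5800 was not published and the container needs to be re-created with -p 5800:5800. With the port in hand, the GUI should be reachable at http://<NAS IP>:<port> from a browser on the same network.

```shell
#!/bin/sh
# Sketch: print the host port that publishes the web GUI (container port 5800).
# Expects the output of "docker port crashplan-pro" on stdin, e.g.
#   5800/tcp -> 0.0.0.0:5800
gui_host_port() {
  while read -r line; do
    case "$line" in
      "5800/tcp -> "*)
        printf '%s\n' "${line##*:}"   # text after the last ":" is the host port
        return 0 ;;
    esac
  done
  return 1   # port 5800 is not published
}

# Usage: docker port crashplan-pro | gui_host_port
```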

Any thoughts on why my local install doesn't show any files, even though it does show my folders?

I had this when my new container arguments were wrong. It looked like I could see my folders, but it was really just showing me the structure from my prior backup. I would double-check your -v paths to make sure you have them correct.
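One reason a wrong -v path is easy to miss: with -v, Docker creates a missing host folder instead of failing, so a typo just gives you an empty folder inside the container. The hypothetical helper below lists the host side of each -v spec that does not exist as a folder; run it on the NAS with the same specs you pass to docker run.

```shell
#!/bin/sh
# Hypothetical helper: print the host path of every -v spec
# ("host:container[:mode]") that does not exist as a folder on this machine.
missing_host_paths() {
  for spec in "$@"; do
    host="${spec%%:*}"            # everything before the first ":"
    [ -d "$host" ] || printf '%s\n' "$host"
  done
  return 0
}

# Usage: missing_host_paths /volume1/docker/appdata/crashplan-pro:/config:rw /volume1:/volume1:ro
```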

Likely a permission issue. Did you set the

Okay, I think between the two of you, you've helped highlight where I went wrong. I'm going to have to delete the container and recreate it to update some of the variables. I'll let you know the results.

On the plus side, I can now see the full directory structure and the files on my network. The downside is that it seems to be starting over instead of resuming where it left off: it claims to have backed up only 13 GB of a multi-TB dataset. Maybe it's only reporting what it has done since I restarted it, but it reads as if we're back at square one.

I tried again to delete the container and recreate it, but this time I had read somewhere that I should remove any files on the system related to the old container, so, for good or bad, I did that. This time it started up much like when I created it the very first time, asking me to log in, but now it's stuck there. It just sits on that screen saying "Signing in..." and has been that way for 7 hours now.

Try to restart the container.

The restart seemed to do some good: I had to log in again, but I'm able to navigate around and it's no longer stuck on "Signing in...". However, it still looks like it has started over, telling me that it's only 13% complete when that should be more like 50-60%. As requested, here is my startup script.

Looking at the progress doesn't tell you whether your data is actually uploaded or deduplicated. You can look at the history (Tools -> History) for more details.

I'm having trouble mapping additional volumes. The first one is fine and works perfectly, but I have volumes 2, 3, 4 and 5 that I need to map. This works and gets Vol1 mapped:

But adding to it to map the additional volumes doesn't. Using that returns the following error:

The container is created but can't be started. I will admit that I don't really know what I'm doing. Can anyone help?

You have too much

Thank you :)
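The exact error is not shown in this thread, so what follows covers only one possible cause, offered as an assumption: mapping several host volumes to the same path inside the container (for example, all of them to /storage), which Docker refuses. Giving each volume its own container path avoids that (for example -v /volume2:/volume2:ro, -v /volume3:/volume3:ro, and so on). The hypothetical helper below flags container paths that are used more than once.

```shell
#!/bin/sh
# Hypothetical helper: report container-side paths used by more than one
# -v spec ("host:container" or "host:container:mode").
duplicate_destinations() {
  for spec in "$@"; do
    dest="${spec#*:}"    # drop the host path
    dest="${dest%%:*}"   # drop a trailing :ro / :rw
    printf '%s\n' "$dest"
  done | sort | uniq -d
}

# Example: duplicate_destinations /volume1:/storage:ro /volume2:/storage:ro
```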

I have a question about permissions: should I use -e USER_ID=0 -e GROUP_ID=0, or is this a security risk? I'm running this on a Synology. Also, am I supposed to run the "docker run -d ..." command with sudo or not?

You are running as root when using

To create the container, you have the choice: either you manually run the

When using PuTTY to build my containers, I log in with my Synology admin user, but I'm always required to run the

And concerning not running as root, what would be best practice? Create a 'docker' group and a 'docker' user that has read/write access to the /volume1/docker folder and /volume1/share and nothing else?

Correct, the

And yes, creating an additional user with restricted permissions is a solution.
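As discussed above, the container takes the user and group to run as from USER_ID and GROUP_ID. Once a restricted user exists (for example a hypothetical DSM user named crashplan with read access to the backed-up shares and write access to the config folder), its numeric IDs can be looked up with id. The sketch below prints the matching -e options to use instead of USER_ID=0 / GROUP_ID=0.

```shell
#!/bin/sh
# Sketch: print the -e options that make the container run with USER's IDs
# instead of root's. "crashplan" below is a hypothetical restricted DSM user.
user_flags() {
  uid="$(id -u "$1")" || return 1   # numeric user ID
  gid="$(id -g "$1")" || return 1   # numeric ID of the user's primary group
  printf '%s\n' "-e USER_ID=$uid -e GROUP_ID=$gid"
}

# Usage: user_flags crashplan
```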

And one other question: I just ran the docker with

I don't think it should be a problem to stop the container before it finishes synchronizing. Once you restart the container, CrashPlan should just continue where it left off.

Thanks, jlesage, you are a one-woman triple-A customer support team. Very much appreciated! 🥇

Edit: So sorry for being so sexist. I assumed you were a guy.

I recently had to reset my Synology from the ground up, which of course meant having to reset CrashPlan. After I got the Synology running, I added Docker, followed closely by CrashPlan. Having had to reset CrashPlan once before, I made sure to keep the settings I used.

docker run -d

But having done all this, all CrashPlan will do is constantly scan files. It goes for a while, then restarts, over and over. Today is 27 days since it performed any sort of backup. I've tried to delete it to reinstall, but when I try that I'm told that there are containers dependent on CrashPlan, and it won't let me. Any thoughts or suggestions would be welcome. Thank you, Mark

I would try to look at the history (View -> History) and at

Like taking a car to a mechanic: it spent a month scanning for files and seems to be "happy" now. Under Tools -> History, all it appears to complain about is failing to upgrade to a newer version. As I said, I had to redo my entire Synology, which involved downloading and installing Docker again, so I would assume it is the latest and greatest version available. I also had to get the CrashPlan package again, and again I would have assumed that to be the latest as well, but maybe not, since it's trying to update so soon. Right now it looks like it's backing up files. What still seems off, though, is that it doesn't seem to think anything was backed up previously, as if it didn't sync with my previous backup after the reinstall. That doesn't quite match what I see when I log in to view my archive online: there it shows a good chunk of data, with my last activity within the past 24 hours. I'm still a bit confused, but it seems to be running, maybe. :-)

For your information, I just published a new Docker image containing the latest version of CrashPlan.

My CrashPlan installation on my Synology NAS has been running fine for months, in fact nearly a year since my last post. Now I'm regularly getting emails saying there hasn't been any backup; today's email said nothing has been backed up for 8 days. So my first question: why would something that has been running fine for nearly a year just stop? And if it stopped because it's missing some update, why can't it tell me that instead of just stopping?

Second, when I try to bring up the local web interface, I have to log in, which I do, again and again, because it sits there saying it's scanning files, then goes to a black screen and sits there until it asks me to log in again. This happens so quickly that I can't actually do anything once logged in, but I did get a look at the log, which says there is an upgrade I need. My guess is that I have to nuke the whole thing and reinstall CrashPlan, but I wanted to check here first because that's not a process I enjoy. Thank you for your help.

CrashPlan will not perform any backup if its version is too old. So if you didn't upgrade your container recently, you need to do it to re-enable backup. If you don't want to bother upgrading your container manually, you could try installing Watchtower (https://github.com/containrrr/watchtower), a container that seamlessly upgrades your other containers.
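For those who prefer to upgrade by hand, the manual route is: pull the new image, then stop, remove and re-create the container with the same options. This is a sketch assuming the container is named crashplan-pro and that /config is mapped to a folder on the NAS, so CrashPlan's settings survive removing the container; pass the same options you originally used. With DRY_RUN=1 it only prints the commands. Watchtower automates this same pull-and-recreate cycle; its README describes how to start it with access to /var/run/docker.sock.

```shell
#!/bin/sh
# Sketch of a manual upgrade; assumes the container is called crashplan-pro and
# that /config is mapped to a host folder (settings live there, not in the container).
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # dry run: show the command only
  else
    "$@"
  fi
}

# Pass the same options you used when you first created the container.
upgrade_crashplan() {
  maybe_run docker pull jlesage/crashplan-pro &&
    maybe_run docker stop crashplan-pro &&
    maybe_run docker rm crashplan-pro &&
    maybe_run docker run -d --name=crashplan-pro "$@" jlesage/crashplan-pro
}

# Usage: upgrade_crashplan -p 5800:5800 \
#          -v /volume1/docker/appdata/crashplan-pro:/config:rw -v /volume1:/volume1:ro
```

Removing the container first is also what allows the old image to be deleted afterwards, since Docker refuses to remove an image that a container still uses.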

Thank you for taking the time to respond on a holiday. I truly appreciate that, and I'll look into what you suggested, since my efforts to follow the update directions always seem to result in grief. I will say again: if the reason the program stopped working is known, such as the version being too old, perhaps that should be explicitly reported in the email saying that the backup hasn't happened. Thank you again. Mark

You should forward this feedback to CrashPlan, since there is nothing I can do about that...

PNMarkW2, I have a suggestion for your notification problem: you can join GitHub and "watch" jlesage's project for updates, e.g. a new Docker image with the latest CrashPlan version. Go here and click "Watch" at the top of the page: https://github.com/jlesage/docker-crashplan-pro

I am fairly new to Linux and Docker. I need more instructions than those given in the quick start:

Launch the CrashPlan PRO docker container with the following command:

```shell
docker run -d \
    --name=crashplan-pro \
    -p 5800:5800 \
    -p 5900:5900 \
    -v /docker/appdata/crashplan-pro:/config:rw \
    -v $HOME:/storage:ro \
    jlesage/crashplan-pro
```

Where do I enter the above commands?

Is this all I need to do?

Any tips would be greatly appreciated. Not sure how to begin. I have installed Docker on the Synology NAS, but that's as far as I got.

Thanks,

Brian
