New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

opentapioca long-due contributions #53

Comments

|

Hey @ziodave, that is really impressive work! Thanks a lot for offering to upstream it. Here are some broad comments about your points:

That's really nice! The managed-schema configuration is really impressive - getting the pipeline right for so many languages is a ton of work.

It's really nice too and I do not see any reason not to have this upstream. The extra dependencies are worth it.

We already have a mechanism to fetch all the subclasses of a given type (well, all the ones we can get via a SPARQL query without timeout…) and caching those more aggressively can make sense in some situations. How to upstream your changesI am keen to review and merge any PR you make around those topics. I don't have a good overview of your fork - I do not know to what extent it contains other changes which you might not be ready to upstream yet, or changes which are too specific to your own use case to be worth including upstream. I can imagine that it is significant work for you to break down the changes you made in your fork into topical PRs. Perhaps it is a useful exercise to ensure that the changes are well documented, tested, and that the CI remains green. But if you judge that your fork is generic enough and free from such "un-upstreamable" changes, we could simply decide to make your fork the official thing. I'd be happy to tag along as a co-maintainer of course. The way I generally do this is creating a GitHub org for the project, transfer the project from my account to the org and add you as an owner. Then you can just push the changes from your fork there, and done. |

Right now the list of languages is hard-coded but it's easy to move it to a configuration file, so that one may run only english (or any other preferred language): BTW in the process I also moved the configuration to an AppConfig class which makes it easier to deploy on managed environments (e.g. K8s), by using environment variables (or an .env file).

Agree, I was thinking that we can also provide an API that, given a Q will tell you whether it'll be indexed or not along with some helpful information.

I ran the UTs and CI is still green with 2 exceptions:

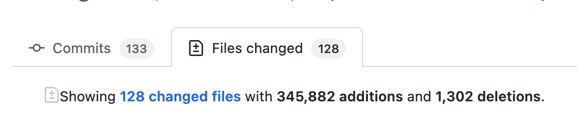

If I try to open a PR now, there are quite some changes and I am afraid I am to the point where I wouldn't be able to split it into smaller PRs: If you agree, I would like to get to a point where I can create a copy of opentapioca.org adding a language selector, so that we can run some e2e tests. And if we like it, we can then move to a separate org 🚀 |

|

Sounds like a plan, looking forward to it! :) |

|

Just a quick update: I have the instance ready, I am performing the latest task to prepare and then train the classifier (as a matter of fact it already works, but it doesn't populate the |

|

Another quick update: I have a version running here, https://opentapioca.wordlift.io/, atm w/o classifier. Pending actions:

|

I'll look at preparing a new classifier 👆 later on - for the time being I am using the sample one |

|

@wetneb new logo ☝️ I'll move forward with updating the repo 🙏 |

|

I love it! Thanks for the initiative :) |

|

Hi everyone, Thanks for this amazing project! I have one question, while running @ziodave's fork and trying to annotate some text, I get If that's relevant, I'm using |

Hello @wetneb

I worked intensively on opentapioca in the last days and I wanted to summarize the major points. The general aim is to add proper multilingual support to OpenTapioca, improve its performance (in relations to its bootstrap, like creating the index) and including more entities into the indexing by relying on the type inheritance.

As I started this work a long time ago and only recently got back to it, some of the things might not make 100% sense :-)

In short:

Multiple language support

In order to properly support multiple languages I created a different structure for doc where the labels would be stored in tag_{language}name and the aliases in tag{language}_alias.

This is fundamental to ensure that I can tag targeting a specific language (set as a parameter to the annotate api).

In order to enable this I created a custom solr image (based on the official one) which includes the required extra libs and support files:

https://github.com/wordlift/docker-solr-opentapioca

You can see the managed-schema configuration here:

https://github.com/wordlift/docker-solr-opentapioca/blob/master/tapioca/conf/managed-schema

The image is published to docker hub as well:

https://hub.docker.com/r/wordlift/solr-opentapioca/tags

You can spot the updated structure in my own fork:

Performance (train-bow and index-dump)

I tried to boost as much as possible the performance of

train-bowandindex-dumpsince I would like to be able to run many tests and I can't wait one week before I can recreate an environment.I used

cProfileto profile the app and remove bottlenecks, working in particular on the readers (removing the json loads from there) and replacing the system json.loads with the more efficient msgspecs package, while defining the schema that we need.I also added rxpy and added multithreading support for index-dump (i.e. not waiting for Solr to reply while moving forward processing new docs):

https://github.com/ziodave/opentapioca/blob/master/opentapioca/taggerfactory.py#L146-L159

The result is that I believe that I would be able to index the full wikidata dump in ~4 hours (compared to the 3-4 days I needed before) on my M1.

This is in conjuction with the type inheritance cache (more on this on the next paragraph).

Wikidata type inheritance

More than a year ago I noticed that some of the entities I needed where not in the index (e.g. ingredients). By investigating I found out that we needed to do some sparql queries to Wikidata for the type inheritance. TBH I don't recall how much was already in opentapioca and what I contributed :-)

Anyway as sparql queries are what they are, I added here a

@cachehof https://github.com/ziodave/opentapioca/blob/e44fa870ca12373a5bb0f51694a756974c600799/opentapioca/sparqlwikidata.py#L9 which stores the results to disk for future references.And then I created a GH repo holding this cache:

https://github.com/wordlift/opentapioca-wikidata-query-cache

The increase of speed is drastic and I think the Wikidata folks may also be happy that I don't repeat the same queries.

Summary

In short I really like OpenTapioca and I am trying to grow it according to my needs (and from some conversation I had, also other people needs).

My aim now would be to share this work with you, see if you have the time to review it and eventually merge it upstream.

Thanks for your time 🙏

The text was updated successfully, but these errors were encountered: