Add Beam Racing Algorithm for lagless VSYNC ON #133

Comments

|

Sounds like an interesting technique, but I do not understand why you would want to refresh the buffer 5000 times per second, when each refresh covers only 1/100th of a 50Hz frame. Surely 1/10th of a frame would be more than enough and absolutely imperceptible to humans. Moreover, it would provide even more protection against jitter. |

|

(Contacted Toni by email, who has expressed interest in this, as well) 10 slices is probably a great compromise, more may be overkill for most people, but I'm a perfectionist: Provide a configurable granularity option where possible :D ... Indeed, a millisecond is directly imperceptible to humans but in the eSports/competitive leagues -- one needs to mathematically and scientifically consider the "cross-the-finish-line-first" effect: In such situations, a millisecond can make a difference even if one is no longer able to feel it. In a statistical point of view, it can mean frag/nofrag in a simultaneous-draw situation (see each other, frag each other at same time, tight human reaction time spreads between the best human players -- so latency differences of devices begin to play a bigger role at the upper leagues). Since it is metaphorically similar to an Olympics race where crossing the finish line first wins -- where a millisecond matters even if you can't feel the millisecond. That said, you're right -- for more applications -- 100 slices is overkill. A GroovyMame developer posted a mod here for beam chasing: BTW, I have a successful beam chasing test on using Monogame engine here: Upon further tests (for cross platform friendliness), I have found out I can easily predict raster position accurately without access to a raster register, as a percentage between vblank exit and vblank entry, or a linear time-based value between two VBI events (minus common VBI size, e.g. 45/1125ths of a refresh cycle) -- I use my mouse to drag a VSYNC OFF tearline from a completely software-guessed raster position in this video: YOUTUBE: https://www.youtube.com/watch?v=OZ7Loh830Ec This is a 20 slice test in C#. Initially I'll be publishing source code for a raster estimator calculator that might be of use to emu devs (for future, need to port C# to C++). 
I've found all the inaccuracy sources for a software-based raster estimation without a hardware raster poll -- and I'm managing to get a near-pixel-exact raster guess with a mouse-draggable VSYNC OFF tearline. In this test, the pixels are appearing only 2-3ms after the API call, measured to photons hitting my eyeballs (!!!), but if I stay away from those tearline artifacts by rendering a little later, further below and outside of the tearing region (adding one additional frameslice of margin), input lag increases slightly. Certainly it seems like 10 slices is more than enough for most applications, but that's still 4ms of input lag at 50 Hz. The input lag is equal to two tearslices, due to the need to aim for the jitter margin: ((1/50sec) / 10 slices) * 2 = 4ms input lag. (In reality, if you rasterplot over the previous emulator refresh cycle, you have a large jitter-safe region equalling one full refresh cycle minus one slice. Nonetheless, the above is the "jitter goal" region.) Not bad, not bad, considering that's less than a refresh cycle. But users of fast GPUs like the 1080 Ti can do better than 10 slices quite easily. I find I can do 1000 slices per second (16 to 20 slices per frame) with less than 10% GPU overhead on my GTX 1080 Ti. And when graphics vendors are eventually persuaded to bring back front-buffer rendering for all D3D/OGL apps (they recently did for virtual reality) -- then one can simply eliminate slice granularity and "raster-plot" emulator scanlines directly to the front buffer, with a simple user-configurable (or jitter-autodetected) time-based chase distance for beam racing. Either way, going to 20 frame slices per refresh cycle reduces input lag to ~2ms with little additional overhead on a GeForce 1080 Ti, a difference that was still worthwhile in past BlurBusters lag testing. So, ideally, make it configurable, since the "first-to-finish-line effect" makes milliseconds matter in eSports even if you cannot feel the millisecond -- e.g. 
if you're running a theoretical competition between a real console & a PC based emulator. Making a software-based emulator reach lag parity (within less than 1ms) requires many frame slices -- approximately 50+ -- to more closely match "cross-the-finish-line-style" latency-sensitive tests in figurative head-vs-head tests between a real device and the emulator. That said, overkill for 99%+ of situations, but the configurable option should at least be provided... Yeah, I'm nuts -- Latency purist here -- just providing the mathematics and context... |
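A rough illustration of the arithmetic in the comment above, as a sketch rather than code from any emulator. The function name and the `margin_slices` parameter are made up for this example; the two-slice margin is simply the "jitter goal" from the formula ((1/50sec) / 10 slices) * 2.

```python
def beam_race_lag_ms(refresh_hz, slices_per_frame, margin_slices=2):
    """Approximate beam-racing input lag in milliseconds.

    Assumes the lag equals `margin_slices` frame slices, as in the
    ((1/50sec) / 10 slices) * 2 example above. Hypothetical helper.
    """
    frame_ms = 1000.0 / refresh_hz            # duration of one refresh cycle
    slice_ms = frame_ms / slices_per_frame    # duration of one frame slice
    return margin_slices * slice_ms


if __name__ == "__main__":
    for slices in (10, 20, 50):
        print(slices, "slices at 50 Hz:", beam_race_lag_ms(50, slices), "ms")
```

With these assumptions, 10 slices at 50 Hz gives 4 ms and 20 slices gives 2 ms, matching the figures quoted in the comment.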

|

I am going to implement this (it is almost guaranteed to be in the next version), but probably with a maximum of about 10 sections (it will be configurable), because that fits very well with current emulator behavior: it emulates a few milliseconds' worth of emulated time in a single burst, then waits (sleeps if the wait time is more than 2ms), and repeats. The "only" missing part is forcing frame (re-)rendering between each step. I don't support purists without 100% proof that it really makes a noticeable real-world difference (not just in benchmarks designed to show it) :) An acceptable real-world test is, for example, Pinball Dreams. The input latency difference is really easy to notice just by playing it. |

|

Great to hear that it's going to be easy to implement in WinUAE because of its current architecture! Yes, 10 frameslices is a fine goal. But I'd argue for configurable support for up to 20 or 40 slices on higher performing systems. 10 is fine, but here's some of the proof you asked for: Microsoft determined that 1ms makes a difference (see YouTube video https://www.youtube.com/watch?v=vOvQCPLkPt4 ...). Another simple real-world game example: if you're aiming an arrow at a moving target or such, a sudden 4ms error on a target moving at 1000 pixels/second means a 4 pixel miss of the bullseye. So you want constant lag, not randomly varying lag, for aimwork like that. You can see how simple that math is. A person subconsciously trains towards a constant lag offset, even if they cannot feel it. Anyway, it's just common-sense mathematics. I can give more proof if desired, but you know how wordy I can get on these matters. For example, there are variable-lag effects caused by surge-execution methods if the game does input reads at random locations during the scanout (very rare). Or if an input read aligns at a slice boundary and jitters back-and-forth between the next and previous slice -- you'll have a random "lag noise" effect that a human cannot feel but that causes a "hmmm, I seemed to be scoring better a few minutes ago". Most won't notice, but a few do, once the "lag noise" becomes big enough. Even though BlurBusters started as a hobbyist site and has become a little bit more "commercial" (mind you!), I still donate a lot of effort to the cause of input lag. Because of my huge interest, I even paid a commission to a researcher (lots of peer reviewed stuff on ResearchGate), and the BlurBusters-commissioned article on input lag research is now up: Foreword Part1 Part2 Part3. I am willing to hire/fund real input lag research, preferably from peer-reviewed researchers -- I'm still recruiting (mark@blurbusters.com). That is how seriously I take input lag! 
😄 That said, I'm here personally, because of personal interest, and donating code and knowledge to the cause, to make it as easy as possible, and as painless as possible, at least to implement a rudimentary beam chasing algorithm. While my general preferred goal is 1ms lag + 1ms lag jitter before pixels are being output on the video output. That said... even 10 slices is a huge improvement over the status quo, and you're doing a huge step just by bothering to implement a beam chasing algorithm -- which I give mucho kudos and I'll leave it at that even if it's just 10 slices! 👍 I have no desire to annoy developers, even with my personal over-enthusiasm. Tip on thread sleeping: Make sure you use a sub-millisecond thread sleeper, or do a busywait on a high performance counter. (Personally, I do both -- I thread-sleep till right before goal counter, then I busywait for precise time-alignment to the final microsecond.) I find that using only the millisecond-accurate thread sleeper is not accurate enough and causes too much beam chasing artifacts. |
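The thread-sleeping tip above (sleep until shortly before the goal, then busywait on a high performance counter) could look roughly like this. It is a minimal sketch: `wait_until` and `spin_margin_ns` are names invented for this example, and the 2 ms spin margin is an assumption that would need tuning per system.

```python
import time


def wait_until(target_ns, spin_margin_ns=2_000_000):
    """Block until time.perf_counter_ns() reaches target_ns.

    Sleeps coarsely while more than spin_margin_ns remains, then
    busywaits on the high-resolution counter for the final stretch.
    Returns the counter value observed on exit.
    """
    while True:
        remaining = target_ns - time.perf_counter_ns()
        if remaining <= spin_margin_ns:
            break
        # Coarse sleep; the OS may overshoot, which the margin absorbs.
        time.sleep((remaining - spin_margin_ns) / 1e9)
    # Precise alignment: spin on the performance counter.
    while time.perf_counter_ns() < target_ns:
        pass
    return time.perf_counter_ns()


if __name__ == "__main__":
    goal = time.perf_counter_ns() + 5_000_000   # 5 ms from now
    done = wait_until(goal)
    print("overshoot (us):", (done - goal) / 1000.0)
```

The sleep keeps CPU use low for most of the wait, while the spin removes the millisecond-scale inaccuracy of the sleeper that the comment warns about.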

|

Some 100% OPTIONAL common-sense tips to prevent future hair-pulling:

-- The pause between refresh cycles (basically the VSYNC/VBI) can be different between the emulated and the real world. The real-world VBI size can be assumed to be 45/1125th of a refresh cycle by default for the average computer system (1080p with a 45-line VBI). However, a 45:1125 VBI ratio is not the same ratio as the 45:525 of NTSC (525 lines total, 480 visible). So you'll have a very slight beam chasing asymmetry effect if you don't mathematically compensate for VBI size differences between the emulated VBI and the real-world VBI (I do in my demo) -- that's how I'm able to get my software raster guess to be near scanline exact.

-- OPTIONAL: Platform-specific cake frosting. For more beam-chasing accuracy, if you want the actual VSYNC size or the speed of the raster -- on Windows, use QueryDisplayConfig() to get the useful data in .totalSize.cy / .activeSize.cy / .hSyncFreq.Numerator / .hSyncFreq.Denominator .... With these you can get the VBI size (if targeting fixed Hz) and the horizontal scanrate in number of scanlines per second (works with both fixed Hz and variable refresh rate). However, I found this is not critically necessary if you're just aiming at 10-frameslice granularity on most fixed-Hz monitors -- you can simply use a constant of "45/1125" for any computer monitor, even in 1440p and 4K modes, and it'd work really well with only a ~1% error.

-- OPTIONAL: Beam chasing works with variable refresh rate (GSYNC/FreeSync) + VSYNC OFF to get less input lag in your emulator than the original device! You'd have a very fast beamchase on a very fast scanout, followed by a long pause between refresh cycles, e.g. "60Hz" refresh cycles scanned out in 1/144sec or 1/240sec. Combining the two is lower lag than beam-chasing alone or VRR alone, so combining the two gives you an amplified lag-reduction effect. Basically variable refresh rate + VSYNC OFF mode (e.g. GSYNC + VSYNC OFF). 
Obviously, not essential nor necessary, but it's fun to mathematically understand beamchasing + variable refresh rate works (GSYNC and FreeSync + VSYNC OFF mode -- slow frame deliveries starts new refresh cycles and fast frame deliveries interrupts the currently-scanning-out frame with a VSYNC OFF tearline. It's all in timing of Present() for the hybrid "GSYNC+VSYNC OFF" mode (there are also some NVAPI calls that can force this special mode of operation, too). As soon as you Present() the display immediately or almost immediately exits Vblank (e.g. .InVBlank == false instantly on Direct3D Present()). That's because Present() immediately begins a refresh cycle on a variable refresh rate display. Now, we know that the scan rate of a variable refresh rate display can be really fast -- e.g. 144Hz style scanrate or 240Hz style scanrate -- even if you're only doing "60Hz" via 60 frames per second. Theoretically, you can have an average of up to half a refresh cycle less input lag than the original machine, depending on when the game does its own input read. Very funny when you think about it. You simply piggyback on that understanding to beam-chase a (faster) variable refresh cycle). If you aim for VRR compatibility in your beamchaser algorithm -- the possible "best practice" is simply beginning to deliver the first frameslice to trigger the beginning of a new software-triggered refresh cycle. Then verify .InVBlank exited (maybe spin just in case, I have a niggling suspicion some drivers take a hundred microseconds before actually beginning to exit the VBI). Once it exits VBI, then that's your starting pistol to beam-race the rest of the emulator refresh cycle -- it will scanout at the constant horizontal scan rate (always remains fixed, even on a VRR display -- only the VBI interval varies). 
The easiest way to understand a VRR display is that they are simply variable-size blanking intervals, and that the API call to deliver a frame, simply begins a new refresh cycle if the display isn't already in the middle of a refresh cycle. The display waits for your software, instead of the other way around. As long as the refresh interval is within the VRR range (e.g. 30Hz-240Hz). Slow refreshes simply causes it to keep repeating the last delivered refresh cycle. Fast refreshes receive either the VSYNC ON (waits) or VSYNC OFF treatment (interrupts) depending on how it's configured. My algorithms, by default, stick to a cross-platform "estimate the raster" approach, since more developers will implement beam racing if I keep it to as generic-as-possible, but one can easily use strategic platform #ifdefs to improve accuracy of beam chasing in a platform-specific way. This additional "more complex knowledge" stuff, at the end of the day, is optional. Skip this post if you just want to focus on simpler beam chasing :) |
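The cross-platform "estimate the raster" approach described above (a linear, time-based position between two VSYNC events, minus the blanking interval) might be sketched as follows. This is only an illustration under stated assumptions: the function name is invented, the VSYNC timestamp is assumed to mark the start of vertical blanking, and the 1125/1080 defaults are the "45/1125" constant suggested above rather than values queried from the system.

```python
def estimate_scanline(now_s, last_vsync_s, refresh_hz,
                      total_lines=1125, active_lines=1080):
    """Guess the current real-world scanline from timestamps alone.

    Assumption: last_vsync_s is the moment blanking began, so the first
    (total_lines - active_lines) line-times of each cycle are VBI.
    Returns None while the estimate falls inside the blanking interval.
    """
    period = 1.0 / refresh_hz
    # Fraction of the current refresh cycle that has elapsed (0..1).
    phase = ((now_s - last_vsync_s) % period) / period
    line_in_total = phase * total_lines          # position incl. blanking lines
    vbi_lines = total_lines - active_lines
    visible_line = line_in_total - vbi_lines     # compensate for VBI size
    if visible_line < 0:
        return None                              # still in vertical blanking
    return int(visible_line)


if __name__ == "__main__":
    # Halfway through a 60 Hz refresh cycle.
    print(estimate_scanline(0.5 / 60, 0.0, 60.0))
```

On platforms where the real vertical total is available (for example via QueryDisplayConfig on Windows), those values would replace the defaults; everything else stays the same.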

|

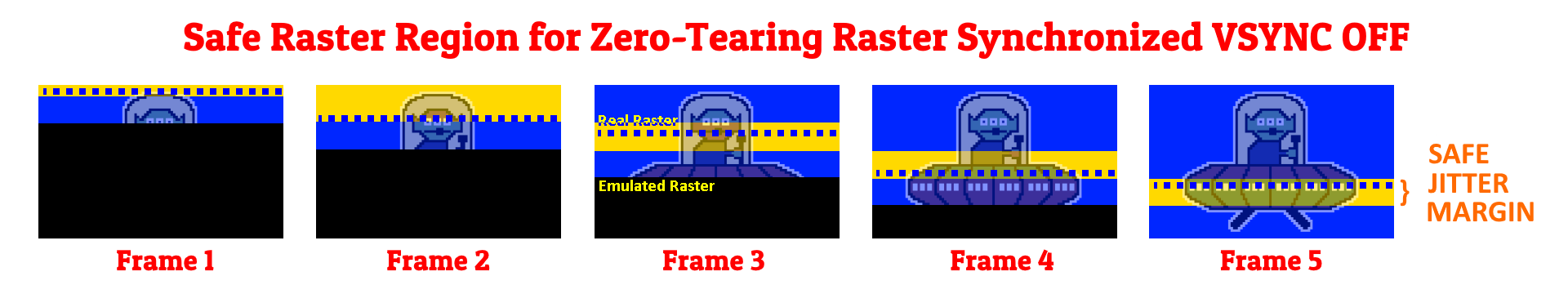

just my 50c: as someone not familiar with the issues related to vsync, the graphics in the original post make little to no sense. i just see a half-drawn frame with a funny yellow line in it, repeated for 5 frames with differing level of completeness, lacking any explanation about what that means. |

Good point! I upvoted you, because I see you are a 'Contributor', so... this piques my interest: Have you ever done any raster-related work in your lifetime? Raster interrupts, copper, CRT scanning effects, etc. This bug tracking item is only readily understandable by people who have done raster work (raster interrupts, vsync, etc).

GLOSSARY

So let me fire off a quick glossary, since you might be familiar with some of these concepts already under different names...

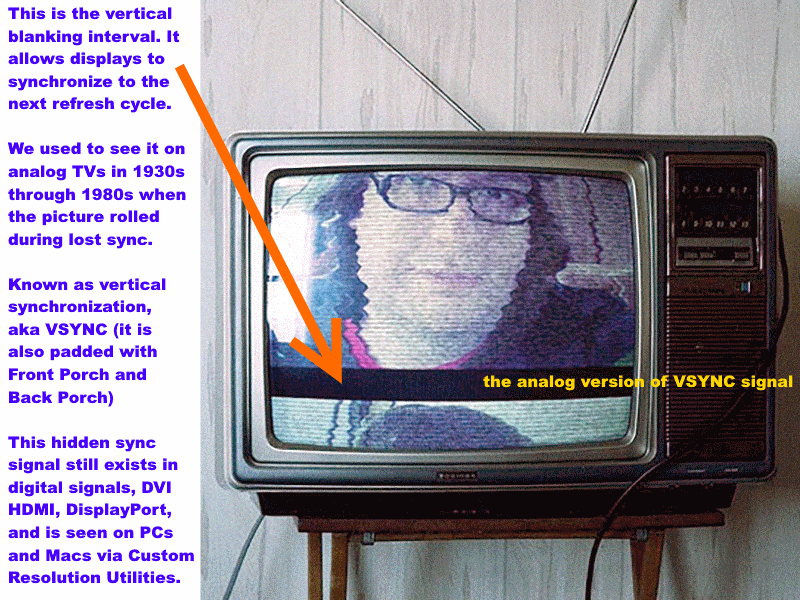

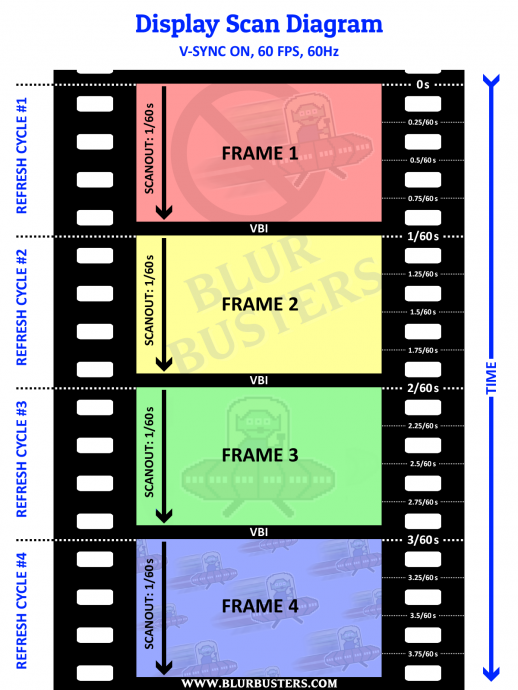

...This is a photograph of the old analog version of the "VBI", "VSYNC", "VBLANK": what you see when a television is unsynchronized, with a rolling picture, because of a misadjusted "VHOLD" knob (VHOLD = Vertical Hold). That black bar still exists in all digital signals as an electronic guard delay between the pixel transmissions of consecutive refresh cycles, even in tomorrow's 8K HDTV. People who write the video routines for 8-bit emulators necessarily have to do raster work -- basically simulate the scanout at the single-pixel level. This is necessary when emulating a lot of classic chips, to accurately emulate graphics. They traditionally simulate their scanout to a hidden emulator frame buffer before transmitting the frame buffer to the display. In the lagless example, the cable scanout is laglessly the display scanout too. This also happens with the best gaming monitors: the panel scanout is often synchronous with the cable scanout. Beam chasing is essentially real-time streaming of the pixels from the software, at least at frameslice-granularity level, via ultra-high-framerate VSYNC OFF. If you open a modern Custom Resolution Utility (screenshot), you will see weird stuff like "Horizontal Total", "Horizontal Sync", "Vertical Total", "Vertical Porch", "Scan Rate", etc. See the stackexchange answer for some of why that was used in the analog days. You've just figuratively opened the hood on your graphics card's video signal when you do that. All this analog-era terminology is still used in the digital era for a different purpose -- essentially synchronization markers representing the start/end of a row of pixels and the start/end of a refresh cycle, a guard delay between refresh cycles to help a display prepare to begin a new refresh cycle, etc. They're much smaller now (e.g. 
VESA CVT-R Version 2 signal timing standard) than in the analog era, but they still exist as things like small pauses between refresh cycle scanouts -- important when doing manual math calculations for beam chasing applications -- and are often obtainable via standard API calls nowadays (e.g. QueryDisplayConfig on Windows). But the bottom line is that all this 1930s stuff is still carried over into a 2010s-era video output. We may now packetize it in DisplayPort micropackets (e.g. compress a whole row of pixels into a packet), but it's still calendar-style sequential pixel scanout at the end of the day (pun intended). Every single digital refresh cycle is scanned out. Usually top-to-bottom, but it can be a different scan direction (e.g. certain tablets, phones, etc) such as right-to-left or left-to-right. If you have a 60Hz Dell LCD computer monitor, check out www.testufo.com/scanskew -- watch the lines tilt! That's a demonstration of an artifact from display scanout skewing the lines, because the top edge and bottom edge pixels are displayed at different times. By definition, at least one of the writers of an emulator generally has the deep understanding of rasters necessary to understand this bug-tracking item without much further explanation. Obviously, author Toni does without a doubt. Admittedly, I was light on explanations for the rest of the people, so this follow-up aims to address that deficiency. Most 8-bit and 16-bit era emulators simulate scanning internally, basically plotting pixels to a frame buffer, left-to-right, top-to-bottom, to emulate the original CRT scanning. This has to be done because classic software often famously piggybacked on this to create many display effects (like "raster interrupts"). Figuratively, beam chasing is the art of synchronizing the emulator raster beam and the real-world pixel scanning, to almost literally zero-out input lag. Incredible timing precision is needed for beam chasing, but it's now possible on modern systems. 
The VR people are doing it (at a 4-tile granularity), and so does the successful 10-tile beam chasing modification for GroovyMAME -- the first classic emulator to implement successful beam chasing in this modern era. Traditional "VSYNC ON" mode in a graphics driver means you render a whole emulator refresh cycle, THEN deliver the frame to the display. That's how emulators did it for years.

The Game of Beam Chasing

Real-time beam chasing is simply real-time scanout of the refresh cycle to the display WHILE you're still rendering the SAME emulator refresh cycle. The input lag thus becomes a tiny fraction of a refresh cycle! It wasn't practical until recently. Now my experiments, and also the GroovyMAME modification, have successfully demonstrated that near-lagless output of frames can indeed be done. As noted, the earlier YouTube video demonstrates real-time raster control. We simply use VSYNC OFF (tearing) to bypass the graphics driver's VSYNC ON behaviours, and simulate VSYNC ON with VSYNC OFF, in a sub-refresh-cycle way. In the diagrams, the topmost line is the real-world display's currently-being-displayed row of pixels (light hitting human eyes). The bottommost visible line is the emulator's raster. Whatever is below the topmost line isn't displayed on the real-world display yet. You're simply rendering and appending new scanlines or strips (tiles) to the bottom of the "not-yet-transmitted-to-display" emulator refresh cycle -- even while the top part of the emulator frame buffer is currently being transmitted to the display (pixels are transmitted serially). So the name of the game is for the emulator to "keep drawing" below that topmost beam. The tighter the distance between the topmost line (real world pixels-to-photons) and the bottommost line (emulator frame being drawn), the smaller the input lag. Good classic emulators draw frames one scanline at a time, left-to-right, top-to-bottom. 
We simply synchronize that as closely as possible to the real world display scanout, at realtime sub-refresh-cycle levels. I'm going to improve that Blur Busters article (once I finish the open source demos), so perhaps I can use you as a bouncing-off point to improve my introductions/technical explanations. Firstly, are you familiar with any raster-related terminology? Do any of these terms ring a bell: display scanning, raster interrupt, VHOLD, blanking interval, vertical blanking interval, VBI, VSYNC, graphics driver VSYNC ON, graphics driver VSYNC OFF, etc. These terms are heavily related to each other, and I probably need to create a glossary to explain how they play off each other. An explanation for technical people who are only vaguely familiar with how displays work -- it's amazing how 21st century experimental 8K HDTVs still use top-to-bottom display scanning concepts originally found in the analog signals used by 1930s televisions. This is a rule forced by having to sequentially transmit pixels through one wire, so the calendar-style scan was standardized almost a century ago (left-to-right, top-to-bottom scan). I'll have to create an introduction/glossary of the concept of display scanning (and all the terminology: VBI, VSYNC, raster, etc), but I've created some display scanout diagrams that will be useful in such a future article. On a time basis, this is how display refresh cycles are transmitted over a video cable or transmission, whether it be DisplayPort, VGA, DVI, HDMI, Component, NTSC, PAL, etc. Whether it's a 1930s TV broadcast, or a 2018-era 8K computer desktop -- the above scan-out diagram is fully applicable. And you can also see high-speed video of an LCD display scanning -- I made a test that flashes black-white-black-white on a screen -- and then pointed a high speed camera at it. This video shows how an LCD display still scans top-to-bottom, just like an old-fashioned CRT. Emulators scan top-to-bottom too. 
So beam chasing is the art of real-time delivery of pixels to the display, without creating a full frame first. To technical readers/programmers who are newbies to display scanning/rasters: After I wrote the above, any questions? What other concepts/terminologies should I explain? I definitely need to write a better introduction (like a further-improved-version of the above) to help explain beam-chasing technology to fellow programmers like you who are technically minded but is unfamiliar with display behavior. I will be copy-and-pasting this reply into a BlurBusters article as an introduction to display scanning technology. |

|

well... thanks for the effort, but this is quite a wall of text (i.e. tl;dr). my point when asking was how those 5 frames illustrate an algorithm when according to my understanding they just illustrate that there's a difference between the real raster and the emulated raster while a frame is drawn.

this screenshot is behind a login wall

nope |

|

No worries. Raster stuff is a complex topic so I wasn't sure what you were confused by at first. I'm recycling my above text to be copy-and-paste (an edited version thereof) into an article for the blurbusters.com website. So the effort wasn't wasted. People ask me about elements all the time, so I might as well... Gotchya, I'll fix the link. [fixed] |

|

[OPTIONAL INFO FOR VRR IMPLEMENTATION] Since Toni confirmed he is implementing beam chasing in WinUAE, I've gotten emails from multiple developers who are monitoring this for their own respective emulators. So I'm adding this: It has come to my attention that many software developers do not realize what variable refresh rate does. I keep re-re-re-explaining variable refresh rate to software developers, and also explaining why it reduces input lag of low-framerate material such as emulators. I understand exactly why, but many people don't. So here goes: A variable refresh rate display (GSYNC, FreeSync), running in variable refresh rate mode, in summary:

Now that you understand VRR better, let me explain how VRR is combined with VSYNC ON and VSYNC OFF simultaneously.

Generally, that's why BlurBusters articles advocate frame rate caps slightly below VRR. Caps too tight against VRR, 144fps can mean some frametimes are 1/140sec, and other frametimes are 1/150sec, since frame rate caps aren't always perfect in many games. One frame gets the VRR treatment and the other frame gets either the VSYNC ON or VSYNC OFF treatment. For the perspective of emulator development, that means you ideally want to run your emulator at ~59 to ~59.5 frames per second (slow down your emulation slightly) if you're running on a VRR display whose maximum refresh rate is 60 Hz (e.g. 4K 60 Hz FreeSync displays). There's been reported input lag problems trying to run 60fps on a 60Hz VRR display. Fortunately, most VRR displays run at above 60Hz, so you probably don't need to care or worry about this situation, but I only mention this additional item, to be familiar with the considerations. In all situations, Present() can also be glutSwapBuffers() in OpenGL, or any equivalent call that generates a tearline during VSYNC OFF. As long as you have access to an API call on your platform, that can generate a tearing artifact. Controlling the exact location of tearing is simply clock mathematics as an offset from the last VSYNC timestamp. Tearing artifacts during VSYNC OFF are raster-based. They correspond to the time of the API call to flip a frame buffer. What changed is that today's computers have microsecond-accuracy performance counters these days. This makes it possible for software to control the exact location of tearing artifacts -- just like in my YouTube video: https://www.youtube.com/watch?v=OZ7Loh830Ec That means, if you want to make your beam-racing algorithm compatible with VRR mode, simply do the first Present() after you render your first tile. Do the Present() at your emulator framerate interval (e.g. 1/60sec) after your last first-tile Present(). 
These Present() calls will trigger the beginnings of those refresh cycles, and this is your figurative "starting pistol" for beam racing, because at that point RasterStatus.ScanLine suddenly starts incrementing as a result of your Present() call. Variable refresh rate displays also make random-looking framerates smooth. If you've never seen a variable refresh display, it has the uncanny ability to synchronize a random frame rate with a random refresh rate -- the refresh interval can change every single refresh cycle, exactly matching gametime deltas (frame times), keeping things moving smoothly despite erratic framerates. Framerate changes are de-stuttered. But variable refresh rate displays are also great for emulators for a different reason: They function as a de facto "Quick Frame Transport" mechanism, in the form of fast scanouts between long blanking intervals. HDMI recently standardized the "Quick Frame Transport" (QFT) technique for Version 2.1 of HDMI. This is one of the multiple features that are part of the new Auto-Low-Latency mode for automatic Game Mode operation (which also, supposedly, allows consoles to signal TVs to automatically switch into "Game Mode", and the ability to do VRR and/or QFT). The mathematics of this is the same -- it's simply a higher scanrate signal while keeping the Hz the same. This is excellent for reducing input lag for VSYNC ON material (as most consoles are VSYNC ON). Don't worry about this complicated spec stuff. Just try (on a best-effort basis) to query the system's Vertical Total or Horizontal Scan Rate. What happens if you switch to a smaller VRR range with a lower max than the display capability? This is what happens: Switching to a lower fixed Hz and enabling VRR simply uses a slower scanout and a narrower VRR range (e.g. 100Hz, giving a VRR range of 30Hz thru 100Hz, does a ~1/100sec scanout). The scanline increments more slowly, but still at a constant rate. For lowest lag, always choose max scanrate / max Hz (e.g. 
144Hz) and intentionally frame pace your Present() or glutSwapBuffers() calls to exactly emulator refresh rate, e.g. 60 fps (emulator Hz) -- frame pace to preferably 100us accuracy or better frame pacing jitter. 1ms of frame pacing error means 4 pixels microstutter at 4000 pixels/sec horizontal panning on a 4K display, so framepace well when you map a fixed perfect simulated Hz onto a variable refresh rate display to avoid microstutters during VRR. The best practice is a waterfall accuracy approach:

In all situations, I still need a hook to a VSYNC event (but it can come from anything -- a DWM loop, a 2nd 3D instance in the background, a hardware poll, a pre-existing kernel event, etc). But other than that, as long as the 3D API is VSYNC OFF comp That keeps everything cross-platform, with optionally improved accuracy on platforms with a hardware poll (fixed Hz or VRR) or scanrate knowledge (fixed Hz or VRR) or vertical total knowledge (fixed Hz). By programming things in a sensible common-sense fashion, your beam chasing algorithm becomes compatible with almost everything. No access to RasterStatus? No problem, as long as I can listen to VSYNC events. No access to modelines? No problem, as long as I can listen to VSYNC events. No access to QueryDisplayConfig? No problem, as long as I can listen to VSYNC events. Etc. Do the calculations correctly, and then everything else is optional cake frosting. For developers reading this: all of this is optional, and you don't have to make your beam-chasing algorithm compatible with VRR. But it's quite easy to do so if you already have working beam chasing. (It's only a minor modification to an existing beam chasing algorithm once you've gotten it working for fixed-Hz displays.) [/OPTIONAL INFO FOR VRR IMPLEMENTATION] |
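The "clock mathematics" mentioned above can be sketched in a few lines. Both helpers are hypothetical names for this illustration; the VSYNC timestamp is assumed to mark the start of vertical blanking, and the 45-line blanking interval is the default guess used earlier in the thread.

```python
def present_time_for_tearline(last_vsync_s, target_line,
                              scanlines_per_sec, vbi_lines=45):
    """Time at which a VSYNC OFF flip should land to tear at target_line.

    Assumes a constant horizontal scan rate and that last_vsync_s is the
    start of blanking, so vbi_lines line-times pass before visible line 0.
    """
    return last_vsync_s + (vbi_lines + target_line) / scanlines_per_sec


def pacing_error_pixels(error_s, pan_speed_px_per_s):
    """Visible position error caused by a frame pacing error."""
    return error_s * pan_speed_px_per_s


if __name__ == "__main__":
    # 1080p at 60 Hz with a vertical total of 1125 lines: 67500 scanlines/sec.
    print(present_time_for_tearline(0.0, 540, 1125 * 60))
    # 1 ms of pacing error while panning at 4000 pixels/sec.
    print(pacing_error_pixels(0.001, 4000))
```

The second helper reproduces the figure quoted above: 1 ms of frame pacing error at 4000 pixels/sec of panning is about 4 pixels of microstutter.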

|

First test versions are now available here: http://eab.abime.net/showthread.php?t=88777 |

|

More exact link for githubbers wanting the beta: http://eab.abime.net/showthread.php?t=88777&page=8 It is working well, as far as I can tell. That is an amazing lag-disappearing magic trick: 40ms of input lag down to less than 5ms in WinUAE! That is the emulator lag "holy grail", in Toni's words. |

|

Has anyone tried this on Nvidia 700 or 900 series cards? I have had major issues with these cards and inconsistent timing of the frame-buffer. The time at which the frame-buffer is actually sampled can vary by as much as half a frame, making racing the beam completely impossible. The problem stems from an over-sized video output buffer and also memory compression of some kind. As soon as the active scan starts, the output buffer is filled at an unlimited rate (really fast), which causes the read position in the frame-buffer to pull way ahead of the real beam position. The output buffer seems to store compressed pixels: for a screen of mostly solid color, about half a frame can fit in the output buffer, while for a screen of incompressible noise only a small number of lines can fit, which therefore gives much more normal timing. This issue has plagued my mind for several years (I gave my 960 away because it bothered me so much), but I have yet to see any other mentions of it. I only post this here now because it's relevant. |

|

UPDATE: @tonioni I had changed my github account from a personal account to a business account, so the 'ghost' account is me. Kind of an unintended consequence of my account-type switch. Ugh. WinUAE beam racing is working extremely well, so I consider this a completed Issue now; perhaps you should flag it as such, instead of "In Progress". @TheDeadFish -- Use more frame slices on high performance computers. The frameslices are supposed to throttle themselves, so it should only surge-scanout one frameslice-heightful during frame slice beam racing. So more frameslices means more throttle opportunities. 10 frame slices per refresh cycle at 60 Hz would be 600 frame slices per second, i.e. one throttle opportunity every 1/600sec. The more frame slices, the more latency symmetry between emulator raster and real raster. Also, you'll have more beam-race reliability in FSE on the primary monitor, so when connecting a 2nd display, make it the primary. |

UPDATE: Toni has released a WinUAE beta with this! Click here

A simple diagram of the algorithm:

The algorithm documentation:

https://www.blurbusters.com/blur-busters-lagless-raster-follower-algorithm-for-emulator-developers/

Basically, ultra-high-page-flip-rate raster-synchronized VSYNC OFF with no tearing artifacts, to achieve a lagless VSYNC ON. Achievable using standard Direct3D calls.

Ideally rendering to front buffer is preferred, but modern graphics cards can now do thousands of buffer swaps per second of redundant frame buffers (to simulate front buffer rendering) -- so this is achievable within the sphere of standard graphics APIs utilizing VSYNC OFF and access to the graphics card's current raster.

You'd map the real raster (e.g. #540 of 1080p) to the emulated raster (e.g. #120 of 240p) for beam chasing of scaled resolutions.
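A minimal sketch of that mapping, using the numbers from the sentence above. The function names are invented for this example, and plain proportional scaling is assumed (no borders, overscan or letterboxing).

```python
def real_to_emulated_line(real_line, real_height, emu_height):
    """Map a real-world scanline to the matching emulated scanline."""
    return (real_line * emu_height) // real_height


def slice_of_line(emu_line, emu_height, slices_per_frame):
    """Index of the frame slice that contains an emulated scanline."""
    return (emu_line * slices_per_frame) // emu_height


if __name__ == "__main__":
    emu_line = real_to_emulated_line(540, 1080, 240)
    print(emu_line, slice_of_line(emu_line, 240, 10))
```

Integer arithmetic keeps the result stable at slice boundaries; an emulator with visible borders would need to add its own offsets on top of this.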

Basically, works with raster-accurate emulators (line-accurate or cycle-accurate) to simulate a rolling-window frame slice buffer, for synchronizing emulator raster to real world raster (tight beam racing).
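One way to picture the rolling-window frame slice idea is the loop below. It is a hedged sketch, not WinUAE's or GroovyMAME's implementation: all three callbacks are hypothetical hooks supplied by the caller, and the one-slice chase margin is an assumption chosen so that each tearline lands in a region that is identical in consecutive buffers.

```python
def beam_race_frame(num_slices, emulate_slice, wait_until_raster_in, present):
    """Run one emulated refresh cycle as num_slices frame slices.

    Hypothetical hooks:
      emulate_slice(k)        : draw the emulated scanlines of slice k
      wait_until_raster_in(k) : block until the real raster is in slice k
                                (k == -1 means the vertical blanking interval)
      present()               : VSYNC OFF buffer flip
    """
    for k in range(num_slices):
        emulate_slice(k)               # emulator raster moves one slice ahead
        wait_until_raster_in(k - 1)    # real raster trails by one slice
        present()                      # tearline falls in already-shown content


if __name__ == "__main__":
    log = []
    beam_race_frame(
        3,
        lambda k: log.append(("emulate", k)),
        lambda k: log.append(("wait", k)),
        lambda: log.append(("present",)),
    )
    print(log)
```

Because the flip happens while the real raster is still inside the previous slice, the only part of the buffer that changed (slice k) is below the beam, so the tear is not visible as long as timing jitter stays within the margin.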

EDIT: After I posted this, I learned that at least one other person has simultaneously invented a (previously unreleased) jitter-forgiving beam-chasing algorithm, and I'm happy to share due credit. I'm open sourcing a raster demo soon, stay tuned. (See subsequent posts.)
