-

Are any of these necessary to get code running? No!

- Are these necessary to get write elegant and beautiful idiomatic Haskell? Absolutely.

-

Think of them as Design Patterns.

- What really matters in the end, is being able to use them in programs.

- Suppose I have a list of strings, and I want to

concat, them. How do I express that using a fold?foldr (++) strings ""

- I have a bunch of pictures, I want to put them on top of each other. How do I do that with the Diagram library?

- We have an empty diagram, the diagram of nothing. We have an empty string.

- We have a way to compose together diagrams, by drawing them one after the other. We have a way to compose lists, by concatenating lists.

- Composition is associative, but not necessarily commutative. For example, given three lists, we might concatenate them in two ways:

[1,2,3] ++ [4,5,6] ++ [7,8,9] == [1,2,3,4,5,6] ++ [7,8,9] == [1..9][1,2,3] ++ [4,5,6] ++ [7,8,9] == [1,2,3] ++ [4,5,6,7,8,9] == [1..9]- But changing the order of the lists changes things.

- Here are the signatures:

class Monoid a where

mempty :: a

mappend :: a -> a -> a

mconcat :: [a] -> a

mconcat = foldr mappend mempty

-

We'll call

mappendas(<>)as an infix operator, for simplicity of notation.- Available from

Data.Monoid

- Available from

-

And, these are the laws:

(x <> y) <> z = x <> (y <> z) -- associativity

mempty <> x = x -- left identity

x <> mempty = x -- right identity

- But what are laws, again?

- Laws are really just definitions. When we call a type a monoid, what we really mean by that is that it has a

<>and amemptythat follow the laws. - Therefore, it is the duty of the programmer to prove the Monoid laws when he writes a monoid instance.

- Laws are really just definitions. When we call a type a monoid, what we really mean by that is that it has a

Bool, withTrueasmemptyand&&asmappend.- What other ways are there to write Monoid instances of

Bool?

- What other ways are there to write Monoid instances of

- How can you form a Monoid out of Integers?

- Functions of type

a -> a(called Endomorphisms) form a monoid. Can you guess what themappendandmemptyare?

- Elegant pattern that you can see everywhere.

- You can do

mconcatwhenever there is a list of values which belong to teh same Monoid.- Can have genearlized

mconcats, which isfold :: Monoid m => t m -> m. For example, you maybe able to fold a tree up, somehow.

- Can have genearlized

fis a monoid homomorphism if it respects(<>). That is,f (x <> y) = f x <> f y. Recognizing a homomorphism might help you refactor code nicely.- For example, say that rotating a diagram

rotateis expensive. Then rather than usingrotate diagram1 <> rotate diagram2, it might be a better idea to dorotate (diagram1 <> diagram2)instead, if you knew thatrotateis a homomorphism.

- For example, say that rotating a diagram

- The following is valid:

map (+1) [1,2,3] - It goes inside the list, and does (+1) to every element.

- Wouldn't it be nice if we could write, in the same way, say,

fmap (+1) (Just 2)? - Or something like that for Sets, Trees, and more?

- Functors are abstractions of

maps.

fmap id = id

fmap (g . f) = fmap g . fmap f

We shall use the infix shorthand (<$>) for fmap.

import Data.Char

capitalize = map toUpper

getLoudLine = capitalize <$> getLine

We have seen a lot of IO last time

Chains of Computations. You have seen this in class.

Look at Footnote 14 for a discussion on this.

- The List monad somehow corresponds to non-deterministic computations.

- This is an example from Learn You a Haskell. Suppose I want to find out all the squares in a chessboard which the knight could be at after three moves.

type KnightPos = (Int,Int)

moveKnight :: KnightPos -> [KnightPos]

moveKnight (c,r) = filter onBoard

[(c+2,r-1),(c+2,r+1),(c-2,r-1),(c-2,r+1)

,(c+1,r-2),(c+1,r+2),(c-1,r-2),(c-1,r+2)

]

where onBoard (c,r) = c `elem` [1..8] && r `elem` [1..8]

in3 :: KnightPos -> [KnightPos]

in3 start = moveKnight `concatMap` (moveKnight `concatMap` (moveKnight `concatMap` [start]) )

- In the list Monad,

return x = [x]and(>>=) = flip concatMap. - Let's rewrite this in Monadic style.

in3 start = return start >>= moveKnight >>= moveKnight >>= moveKnight

- In fact, we can write

moveKnightas a non-deterministic computation usingdonotation.

moveKnight :: KnightPos -> [KnightPos]

moveKnight (c,r) = do

(c',r') <- [(c+2,r-1),(c+2,r+1),(c-2,r-1),(c-2,r+1)

,(c+1,r-2),(c+1,r+2),(c-1,r-2),(c-1,r+2)

]

if (c' `elem` [1..8] && r' `elem` [1..8])

then return (c',r')

else [] --ignoring this c

in3 :: KnightPos -> [KnightPos]

in3 start = do

first <- moveKnight start

second <- moveKnight first

moveKnight second

type Stack = [Int]

pop :: Stack -> (Int,Stack)

pop (x:xs) = (x,xs)

push :: Int -> Stack -> ((),Stack)

push a xs = ((),a:xs)

- But how do you do a bunch of operations to a stack in a nice way? Say, you want to push

3and pop the last two items.

stackManip :: Stack -> (Int, Stack)

stackManip stack = let

((),newStack1) = push 3 stack

(a ,newStack2) = pop newStack1

in pop newStack2

- This is not elegant. What if we wanted to do 10 operations?

-

How does a computer generate Random Numbers?

- Well, it cannot, until it has the ability to get some randomness from some source.

- However, it can generate "random looking" numbers, i.e, given a sequence of numbers that it generates, it'd be "hard" to predict what the next number is.

-

Start with some seed value. Everytime you want a new number, you update it.

-

A very simple example is the Linear Congruential Generator.

- Fix parameters

m,c,modulus. - Initialize the "state" of the generator with

seed. - When asked for a number, output the current state, and update state to

(state * m + c) `mod` modulus.

- Fix parameters

data RandGen = RandGen {curState :: Int, multiplier :: Int, increment :: Int, modulus :: Int}

runRandom :: RandGen -> (Int, RandGen)

runRandom gen1 = (v, gen2)

where

v = curState gen1

v2 = (v * (multiplier gen1) + (increment gen1)) `mod` (modulus gen1)

gen2 = gen1 { curState = v2}

threeRandoms :: RandGen -> (Int, Int, Int)

threeRandoms gen =

let (r1, g2) = runRandom gen

(r2, g3) = runRandom g2

(r3, _ ) = runRandom g3

in (r1, r2, r3)

- Again, this is not elegant.

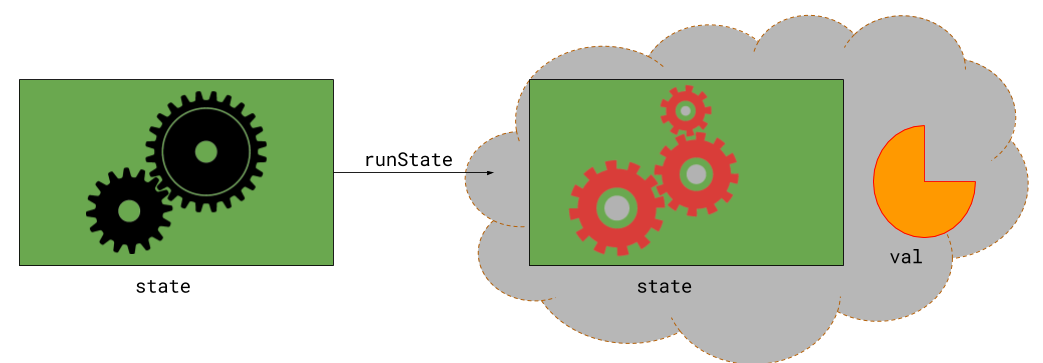

- Think of a stateful computation as a function that takes a

state, and chages extracts avalueof it, and changes thestate.

newtype State s v = State { runState :: s -> (v,s) }

- Notice the resemblance between

runState :: s -> (v,s),runRandom :: RandGen -> (Int, RandGen),pop :: Stack -> (Int,Stack).- We'll have

State Stack Int, which means, we'll use theStacks for maintaining the states, and our values will beInts. - We'll have

State RandGen Int, which means we'll use theRandGens to keep track of the Generators, and will getInts out of them.

- We'll have

- Guess what? We'll form a

Monadout ofState s.return valshould give you a stateful computation that does not alter the state at all, and just produces theval(>>=) :: State s a -> (a -> State s b) -> State s b, tries to chain together two stateful computations.

instance Monad (State s) where

return x = State $ \s -> (x,s)

(State h) >>= f = State $ \s -> let (a, newState) = h s -- run the first stateful computation, and call the result a

(State g) = f a -- now, use this result to create a new stateful computation

in g newState

- Instead of writing

Stack -> (a, Stack), we'll writeState Stack a

pop :: State Stack Int

pop = State $ \(x:xs) -> (x,xs)

push :: Int -> State Stack ()

push a = State $ \xs -> ((),a:xs)

- Now,

stackManipis really just a stateful computation

stackManip :: State Stack Int

stackManip = do

push 3

pop

pop

ghci> runState stackManip [5,8,2,1]

(5,[8,2,1])

- Let's think of another stack manipulation, which involves some conditionals.

stackStuff :: State Stack ()

stackStuff = do

a <- pop

if a == 5

then push 5

else do

push 3

push 8

ghci> runState stackStuff [9,0,2,1,0]

((),[8,3,0,2,1,0])

- Again, we can rewrite

runRandomwithState.

runRandom :: State RandGen Int

runRandom = State $ f

where

f gen1 = (v, gen2)

where

v = curState gen1

v2 = (v * (multiplier gen1) + (increment gen1)) `mod` (modulus gen1)

gen2 = gen1 { curState = v2 }

- Then, we can rewrite

threeRandomsas follows:

threeRandoms :: State RandGen (Int, Int, Int)

threeRandoms = do

a <- runRandom

b <- runRandom

c <- runRandom

return (a,b,c)

ghci> let r = RandGen 10 6152761 122 1000007

ghci> runState threeRandoms r

((10,527305,928721),RandGen {curState = 349697, multiplier = 6152761, increment = 122, modulus = 1000007})

- We can even have an infinite random number stream:

infiniteRandoms :: State RandGen [Int]

infiniteRandoms = do

x <- runRandom

xs <- infiniteRandoms

return (x:xs)

ghci> take 10 $ fst $ runState infiniteRandoms r

[10,527305,928721,349697,2430,104695,803911,624734,84012,138940]

- Find one here.

- I put together some simple implementation for the purpose of the tutorial, but the common practice is to use

Control.Monad.State

- Monads are a design pattern that is frequently used in Haskell code.

- Formally, Monad is a typeclass that has the following type signature and follow the following rules:

class Monad m where

return :: a -> m a

(>>=) :: m a -> (a -> m b) -> m b

(This is not the signature actually used. But I am using this here to avoid technicalities)

m >>= return = m -- right unit

return x >>= f = f x -- left unit

(m >>= f) >>= g = m >>= (\x -> f x >>= g) -- associativity

- With

(>=>), defined as follows, associativity makes more sense.

(>=>) :: Monad m => (a -> m b) -> (b -> m c) -> (a -> m c)

f >=> g = \x -> f x >>= g

return >=> f = f >=> return -- identity of return

(f >=> g) >=> h = f >=> (g >=> h) -- associativity

- Functors, Applicatives, and Monads in Pictures

- Monad in Non-Programming Terms

- Monads are like Burritos

That said, the analogies are never substitute for actual examples. And monads might be elegant, but they are not mysterious anyway.