Gang Wu (吴刚), Junjun Jiang (江俊君), Kui Jiang (江奎), and Xianming Liu (刘贤明)

AIIA Lab, Harbin Institute of Technology, Harbin 150001, China.

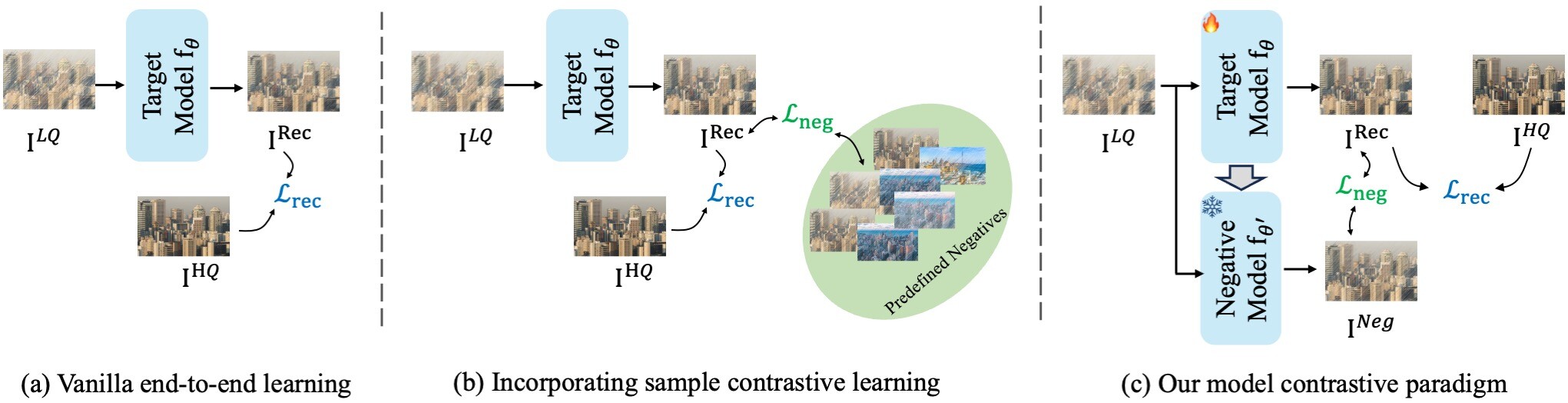

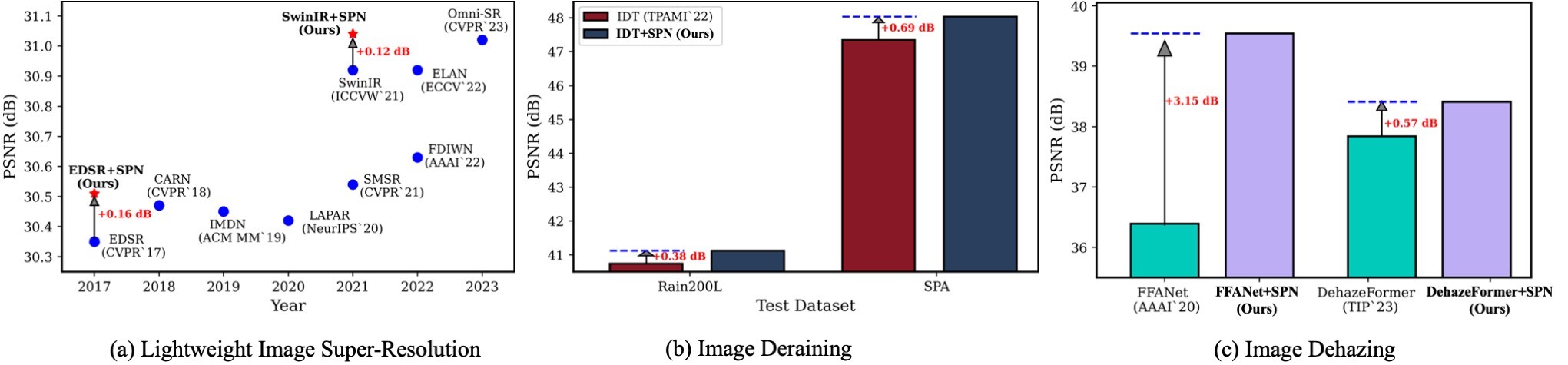

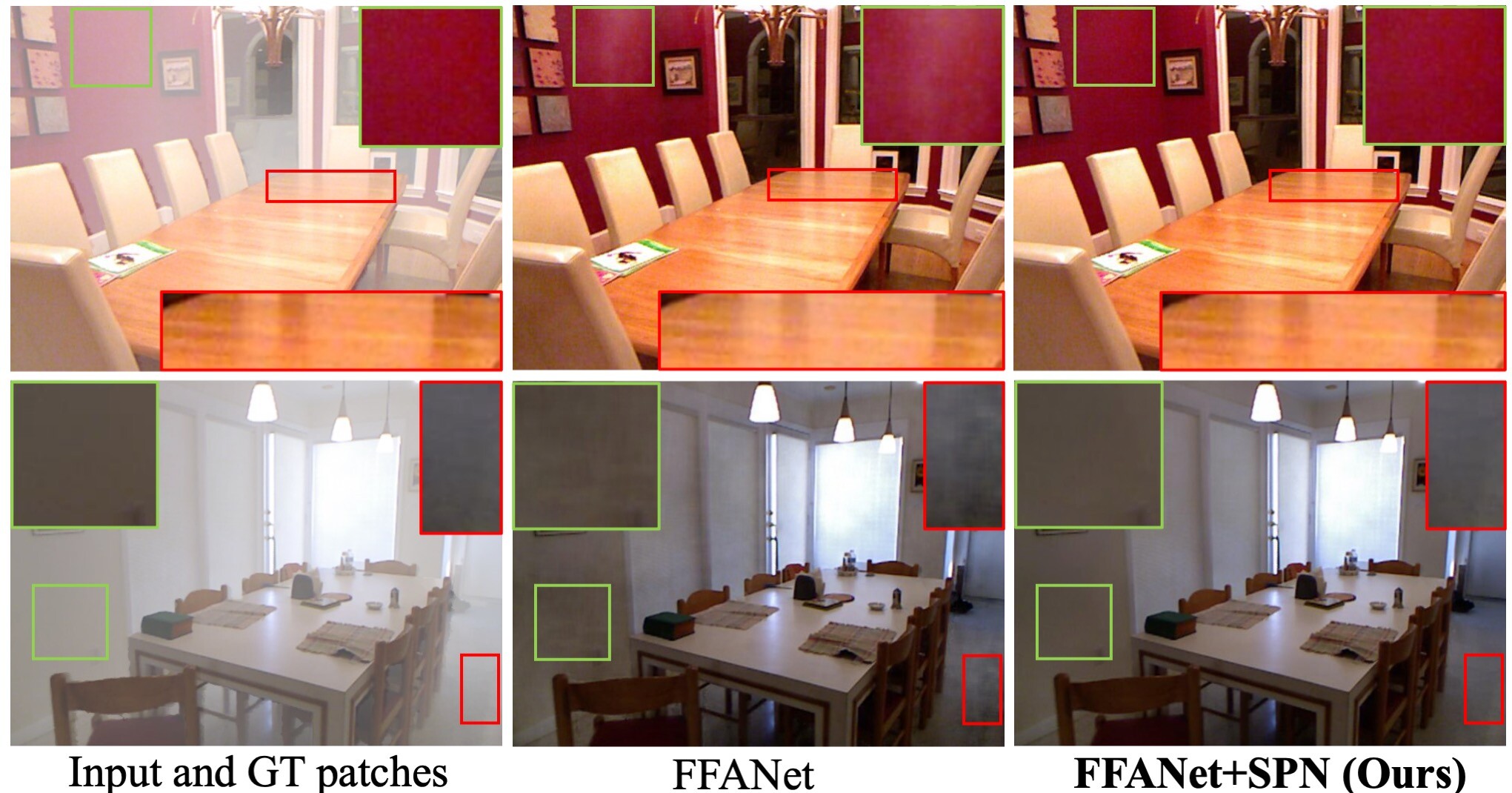

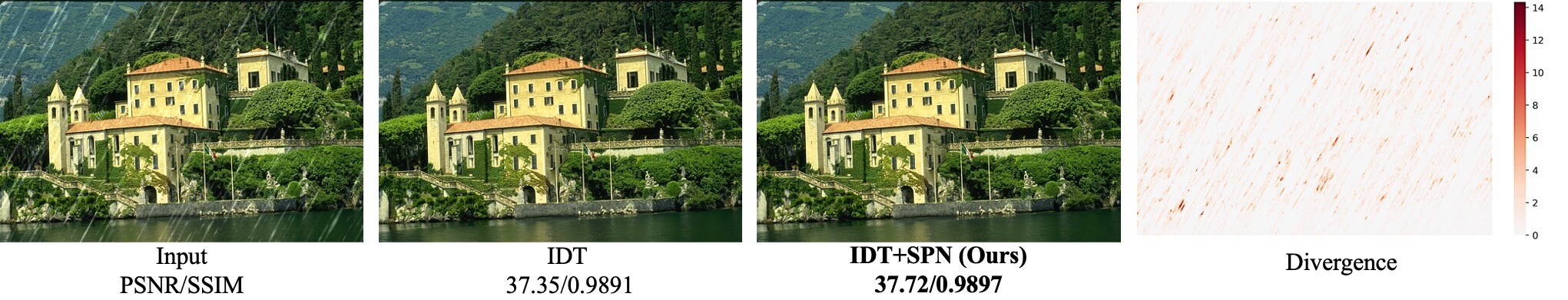

Contrastive learning has emerged as a prevailing paradigm for high-level vision tasks. By introducing proper negative samples, it has also been exploited for low-level vision tasks to obtain a compact optimization space that accounts for their ill-posed nature. However, existing methods rely on manually predefined, task-oriented negatives, which often exhibit pronounced task-specific biases. To address this challenge, our paper introduces a method termed 'learning from history', which dynamically generates negative samples from the target model itself. Our approach, named Model Contrastive paradigm for Image Restoration (MCIR), rejuvenates latency models (lagging, historical versions of the target model) as negative models, making it compatible with diverse image restoration tasks. To enable this, we propose the Self-Prior guided Negative loss (SPN). Retraining existing models with the proposed model contrastive paradigm significantly improves image restoration across various tasks and architectures. For example, models retrained with SPN outperform the original FFANet and DehazeFormer by 3.41 dB and 0.57 dB on the RESIDE indoor dataset for image dehazing. Similarly, they improve over IDT by 0.47 dB on SPA-Data for image deraining, and over lightweight SwinIR by 0.12 dB on Manga109 for 4x super-resolution.
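Judging from the training snippet in this README, the overall objective can be sketched as below. Here $x$ is `lq_input`, $y$ is `hq_gt`, $f_\theta$ is the target model, $f_{\theta^-}$ is the negative model, $\lambda$ is the negative-loss weight, $t$ is `current_iter`, and $k$ is `update_step`. The exponential-moving-average update and its decay $\beta$ are assumptions inferred from the helper name `update_model_ema`; the exact form of the negative term (SPN) is defined in the paper and is not reproduced here.

$$
\mathcal{L} = \ell_1\big(f_\theta(x),\, y\big) + \lambda\, \mathcal{L}_{\text{neg}}\big(f_\theta(x),\, f_{\theta^-}(x)\big)
$$

$$
\theta^- \leftarrow \beta\, \theta^- + (1 - \beta)\, \theta \quad \text{whenever } t \bmod k = 0
$$

Only $\theta$ is updated by the optimizer; $\theta^-$ receives no gradient and only follows $\theta$ through the periodic update.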

| Methods | Task & Dataset | PSNR | SSIM |
|---|---|---|---|
| FFANet (Qin et al. 2020b) | Image Dehazing (SOTS-indoor) | 36.39 | 0.9886 |
| +CR (Wu et al. 2021) | Image Dehazing (SOTS-indoor) | 36.74 | 0.9906 |
| +CCR (Zheng et al. 2023) | Image Dehazing (SOTS-indoor) | 39.24 | 0.9937 |
| +SPN (Ours) | Image Dehazing (SOTS-indoor) | 39.80 | 0.9947 |
| EDSR (Lim et al. 2017) | SISR (Urban100) | 26.04 | 0.7849 |
| +PCL (Wu, Jiang, and Liu 2023) | SISR (Urban100) | 26.07 | 0.7863 |
| +SPN (Ours) | SISR (Urban100) | 26.12 | 0.7878 |

Below is a simple implementation of our Model Contrastive Paradigm and Self-Prior Guided Negative Loss, written as a diff against a standard training iteration.

```diff
-def train_iter(target_model, lq_input, hq_gt, current_iter):
+def train_iter(target_model, negative_model, lq_input, hq_gt,
+               current_iter, lambda_neg, update_step):
     optimizer.zero_grad()
     output = target_model(lq_input)
     L_rec = l1_loss(output, hq_gt)
-    loss = L_rec
+    ## Add Negative Sample (the negative model is not trained, so skip its graph)
+    with torch.no_grad():
+        neg_sample = negative_model(lq_input)
+    ## Add Negative Loss
+    L_neg = perceptual_vgg_loss(output, neg_sample)
+    loss = L_rec + lambda_neg * L_neg
     loss.backward()
     optimizer.step()
+    ## Periodically update the negative model from the target model
+    if current_iter % update_step == 0:
+        update_model_ema(negative_model, target_model)
```

With just a few modifications to your own training scripts, you can easily integrate our approach. Enjoy it!
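The snippet relies on helpers from your own codebase. As one possible sketch of the negative-model side, assuming a PyTorch training script, the hypothetical helpers below build the negative model as a frozen copy of the target model and implement `update_model_ema` as an exponential moving average. The name `make_negative_model`, the default decay of 0.999, and copying buffers directly are illustrative assumptions, not settings taken from the paper.

```python
import copy

import torch
import torch.nn as nn


def make_negative_model(target_model: nn.Module) -> nn.Module:
    """Hypothetical helper: create a frozen copy of the target model.

    The copy is used only for inference and is never updated by the optimizer.
    """
    negative_model = copy.deepcopy(target_model)
    negative_model.eval()
    negative_model.requires_grad_(False)
    return negative_model


@torch.no_grad()
def update_model_ema(negative_model: nn.Module, target_model: nn.Module,
                     decay: float = 0.999) -> None:
    """Assumed EMA update: theta_neg <- decay * theta_neg + (1 - decay) * theta.

    `decay=0.999` is an illustrative value, not one reported in the paper.
    """
    for p_neg, p_tgt in zip(negative_model.parameters(), target_model.parameters()):
        p_neg.mul_(decay).add_(p_tgt, alpha=1.0 - decay)
    # Buffers (e.g. BatchNorm running statistics) are copied as-is.
    for b_neg, b_tgt in zip(negative_model.buffers(), target_model.buffers()):
        b_neg.copy_(b_tgt)
```

In a training loop, `make_negative_model(target_model)` would be called once before training and its result passed as `negative_model` to `train_iter`. Because the moving average changes slowly, the negative model's outputs lag behind the target model, in line with the idea of drawing negatives from the model's own history.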

| Method | Architecture | Scale | Avg. (PSNR/SSIM) | Set14 (PSNR/SSIM) | B100 (PSNR/SSIM) | Urban100 (PSNR/SSIM) | Manga109 (PSNR/SSIM) |
|---|---|---|---|---|---|---|---|
| EDSR-light | CNN | x2 | 32.06/0.9303 | 33.57/0.9175 | 32.16/0.8994 | 31.98/0.9272 | 30.54/0.9769 |
| +SPN (Ours) | CNN | x2 | 32.19/0.9313 | 33.67/0.9182 | 32.21/0.9001 | 32.23/0.9297 | 30.64/0.9772 |
| EDSR-light | CNN | x4 | 28.14/0.8021 | 28.58/0.7813 | 27.57/0.7357 | 26.04/0.7849 | 30.35/0.9067 |
| +SPN (Ours) | CNN | x4 | 28.21/0.8040 | 28.63/0.7829 | 27.59/0.7369 | 26.12/0.7878 | 30.51/0.9085 |
| SwinIR-light | Transformer | x4 | 28.46/0.8099 | 28.77/0.7858 | 27.69/0.7406 | 26.47/0.7980 | 30.92/0.9151 |
| +SPN (Ours) | Transformer | x4 | 28.55/0.8114 | 28.85/0.7874 | 27.72/0.7414 | 26.57/0.8010 | 31.04/0.9158 |
| SwinIR | Transformer | x4 | 28.88/0.8190 | 28.94/0.7914 | 27.83/0.7459 | 27.07/0.8164 | 31.67/0.9226 |
| +SPN (Ours) | Transformer | x4 | 28.93/0.8198 | 29.01/0.7923 | 27.85/0.7465 | 27.14/0.8176 | 31.75/0.9229 |

| Methods | SOTS-indoor PSNR | SOTS-indoor SSIM | SOTS-mix PSNR | SOTS-mix SSIM |
|---|---|---|---|---|
| (ICCV'19) GridDehazeNet | 32.16 | 0.984 | 25.86 | 0.944 |
| (CVPR'20) MSBDN | 33.67 | 0.985 | 28.56 | 0.966 |
| (ECCV'20) PFDN | 32.68 | 0.976 | 28.15 | 0.962 |
| (AAAI'20) FFANet | 36.39 | 0.989 | 29.96 | 0.973 |
| (Ours) FFANet+SPN | 39.80 | 0.995 | 30.65 | 0.976 |
| (TIP'23) DehazeFormer-T | 35.15 | 0.989 | 30.36 | 0.973 |
| (Ours) DehazeFormer-T+SPN | 35.51 | 0.990 | 30.44 | 0.974 |
| (TIP'23) DehazeFormer-S | 36.82 | 0.992 | 30.62 | 0.976 |
| (Ours) DehazeFormer-S+SPN | 37.24 | 0.993 | 30.77 | 0.978 |
| (TIP'23) DehazeFormer-B | 37.84 | 0.994 | 31.45 | 0.980 |
| (Ours) DehazeFormer-B+SPN | 38.41 | 0.994 | 31.57 | 0.981 |

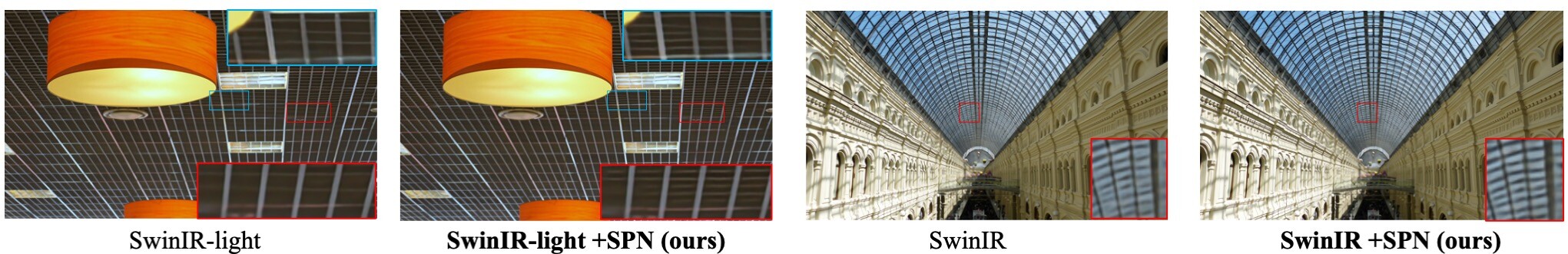

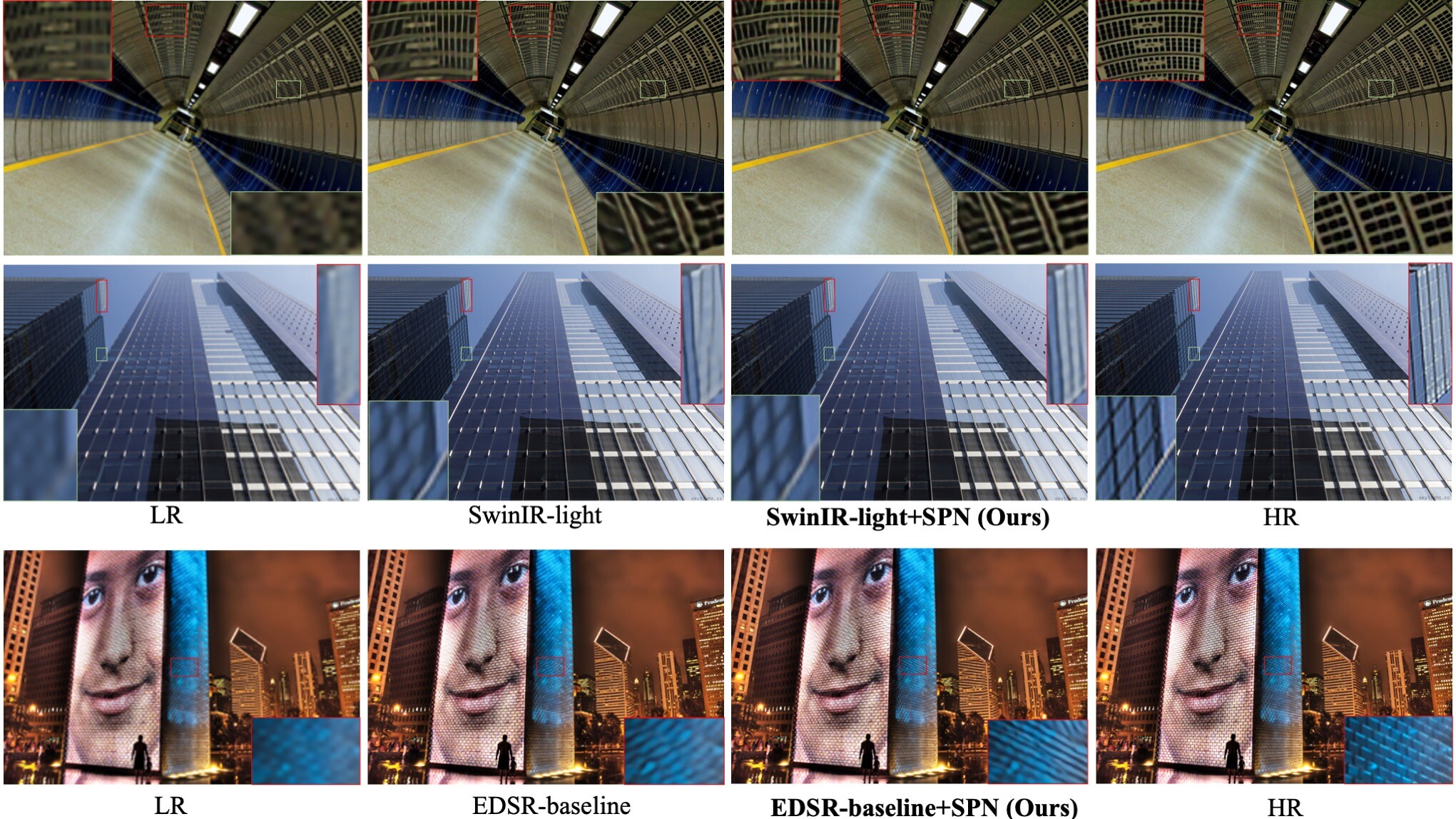

A divergence map delineates the differences, highlighting the improvement achieved by our method, particularly in degraded regions.

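The caption does not define the divergence map. One plausible construction, offered only as a hypothetical sketch in PyTorch, is the per-pixel reduction in absolute error of the retrained model relative to the original baseline, so that positive values mark regions where the retrained model is closer to the ground truth. The function name `divergence_map`, the `(C, H, W)` layout, and the channel averaging are assumptions.

```python
import torch


def divergence_map(baseline: torch.Tensor, ours: torch.Tensor,
                   gt: torch.Tensor) -> torch.Tensor:
    """Hypothetical divergence map: |baseline - gt| - |ours - gt|.

    Inputs are (C, H, W) tensors on the same scale; the result is (H, W),
    averaged over channels. Positive values mean `ours` has the smaller error.
    """
    err_baseline = (baseline - gt).abs().mean(dim=0)
    err_ours = (ours - gt).abs().mean(dim=0)
    return err_baseline - err_ours
```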

| Method | Avg. (PSNR/SSIM) | Rain100L (PSNR/SSIM) | Rain100H (PSNR/SSIM) | DID (PSNR/SSIM) | DDN (PSNR/SSIM) | SPA (PSNR/SSIM) |
|---|---|---|---|---|---|---|
| (CVPR'21) MPRNet | 36.17/0.9543 | 39.47/0.9825 | 30.67/0.9110 | 33.99/0.9590 | 33.10/0.9347 | 43.64/0.9844 |
| (AAAI'21) DualGCN | 36.69/0.9604 | 40.73/0.9886 | 31.15/0.9125 | 34.37/0.9620 | 33.01/0.9489 | 44.18/0.9902 |
| (ICCV'21) SPDNet | 36.54/0.9594 | 40.50/0.9875 | 31.28/0.9207 | 34.57/0.9560 | 33.15/0.9457 | 43.20/0.9871 |
| (ICCV'21) SwinIR | 36.91/0.9507 | 40.61/0.9871 | 31.76/0.9151 | 34.07/0.9313 | 33.16/0.9312 | 44.97/0.9890 |
| (CVPR'22) Uformer-S | 36.95/0.9505 | 40.20/0.9860 | 30.80/0.9105 | 34.46/0.9333 | 33.14/0.9312 | 46.13/0.9913 |
| (CVPR'22) Restormer | 37.49/0.9530 | 40.58/0.9872 | 31.39/0.9164 | 35.20/0.9363 | 34.04/0.9340 | 46.25/0.9911 |
| (TPAMI'23) IDT | 37.77/0.9593 | 40.74/0.9884 | 32.10/0.9343 | 34.85/0.9401 | 33.80/0.9407 | 47.34/0.9929 |
| (Ours) IDT+SPN | 38.03/0.9610 | 41.12/0.9893 | 32.17/0.9352 | 34.94/0.9424 | 33.90/0.9442 | 48.04/0.9938 |

| Method | MIMO-UNet | HINet | MAXIM | Restormer | UFormer | NAFNet | NAFNet+SPN (Ours) |
|---|---|---|---|---|---|---|---|
| PSNR | 32.68 | 32.71 | 32.86 | 32.92 | 32.97 | 32.87 | 32.93 |
| SSIM | 0.959 | 0.959 | 0.961 | 0.961 | 0.967 | 0.9606 | 0.9619 |

| EDSR baseline | SwinIR-light | SwinIR-Large | FFANet |
|---|---|---|---|
| Download | Download | Download | Download |

Quick Evaluation Guide

For a quick evaluation, download the models retrained with our model contrastive paradigm. The test scripts for each model are available in their respective repositories: BasicSR, FFANet, DehazeFormer, IDT, and NAFNet. We thank the authors for sharing these projects.
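The official numbers should be reproduced with the test scripts in the repositories above, which may differ in details such as evaluating on the Y channel of YCbCr or cropping image borders (common for super-resolution). As a rough sanity check only, PSNR for images scaled to [0, 1] can be computed as below; the function name `psnr` and the full-image, no-crop setting are assumptions.

```python
import torch


def psnr(pred: torch.Tensor, gt: torch.Tensor, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE).

    Computed over all pixels and channels, with no border cropping
    and no color-space conversion.
    """
    mse = torch.mean((pred.float() - gt.float()) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return (10.0 * torch.log10(max_val ** 2 / mse)).item()
```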

- Xu Qin, Zhilin Wang, Yuanchao Bai, Xiaodong Xie, Huizhu Jia: FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. AAAI 2020: 11908-11915

- Bee Lim, Sanghyun Son, Heewon Kim, Seungjun Nah, Kyoung Mu Lee: Enhanced Deep Residual Networks for Single Image Super-Resolution. CVPR Workshops 2017: 1132-1140

- Gang Wu, Junjun Jiang, Xianming Liu: A Practical Contrastive Learning Framework for Single-Image Super-Resolution. IEEE Transactions on Neural Networks and Learning Systems 2023, doi: 10.1109/TNNLS.2023.3290038

- Haiyan Wu, Yanyun Qu, Shaohui Lin, Jian Zhou, Ruizhi Qiao, Zhizhong Zhang, Yuan Xie, Lizhuang Ma: Contrastive Learning for Compact Single Image Dehazing. CVPR 2021: 10551-10560

- Yu Zheng, Jiahui Zhan, Shengfeng He, Junyu Dong, Yong Du: Curricular Contrastive Regularization for Physics-aware Single Image Dehazing. CVPR 2023

If you find this project useful, please consider citing:

```bibtex
@inproceedings{MCLIR,
  author    = {Gang Wu and
               Junjun Jiang and
               Kui Jiang and
               Xianming Liu},
  title     = {Learning from History: Task-agnostic Model Contrastive Learning for
               Image Restoration},
  booktitle = {AAAI},
  year      = {2024}
}
```