
YOLOv3 low accuracy #5257


Thanks for replying. I'll train again with that config. I'm using an NVIDIA Tesla T4 (1 GPU) on Google Cloud, but I actually need the model to run fast enough on an NVIDIA GTX 1050 Ti.


Also, is there any documentation for all the hyperparameters?


@Devin97


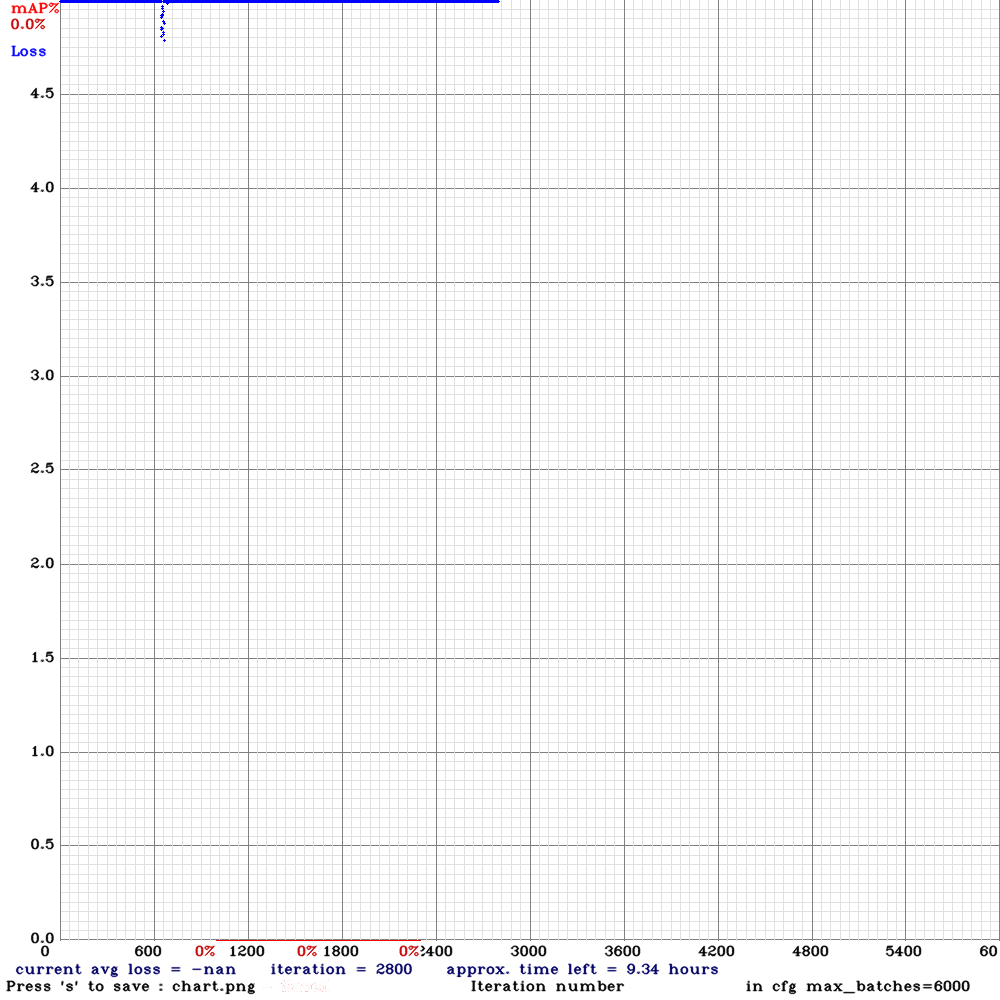

Hey @AlexeyAB, this time I have around 6000 images and I'm training for 6000 iterations. I recompiled darknet with these enabled:

After 1000 iterations, mAP is 0% and avg loss is -nan. Is this common?


Something is going wrong.

and train again.

Also, is it OK to use the full pretrained weights directly, or do I have to extract a portion of them with the "darknet partial" command?


It's better to use partial: darknet/build/darknet/x64/partial.cmd, line 12 in 4786d55


I'm getting a Segmentation fault error. I tried to train with the final weights again and added these parameters as you suggested:

I'm still facing the same issue. Here's my cfg file:


I see, I'll train again with learning_rate=0.001.


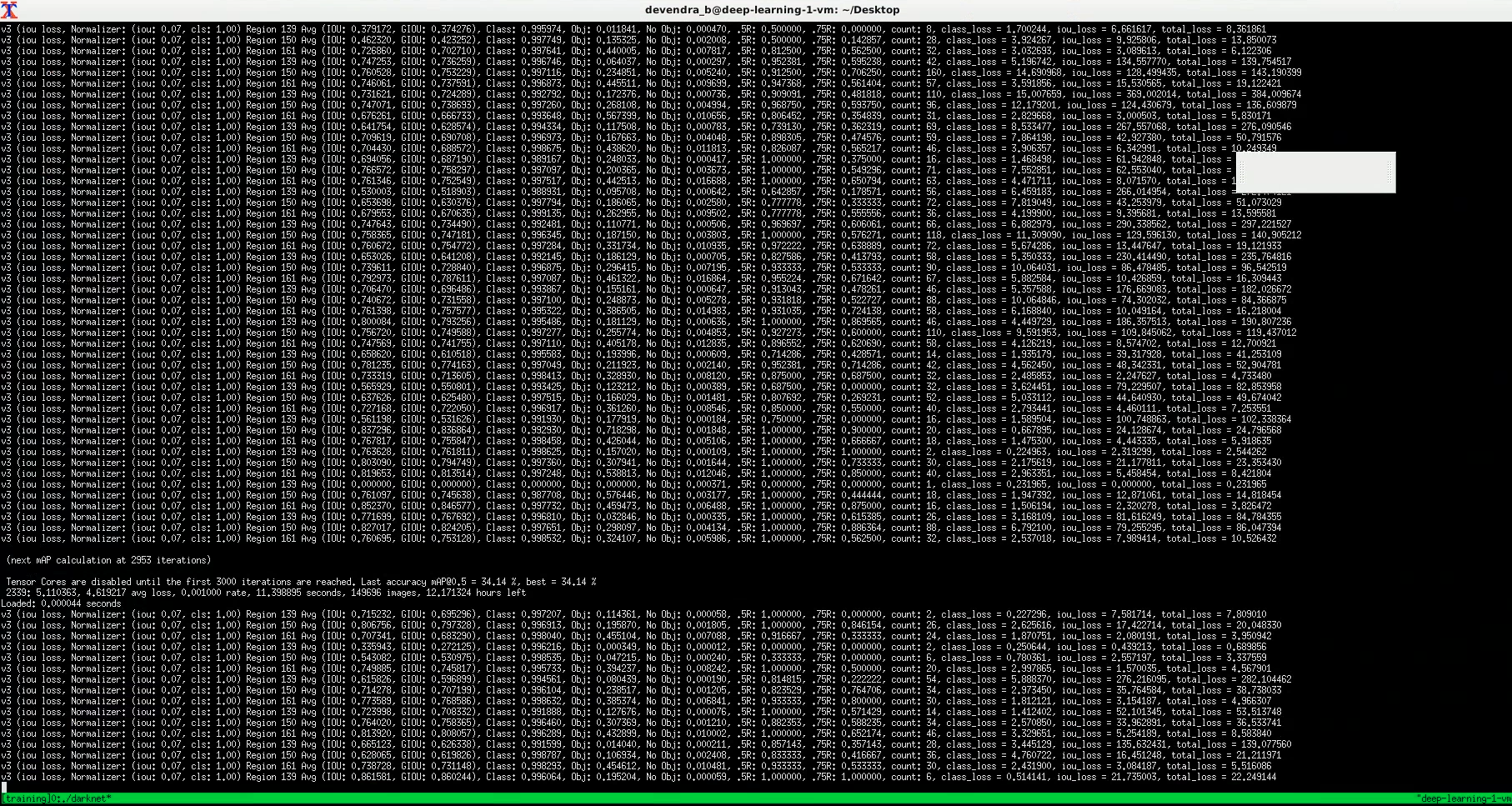

Exploding gradients (backward) / features (forward): https://machinelearningmastery.com/exploding-gradients-in-neural-networks/

To solve this:


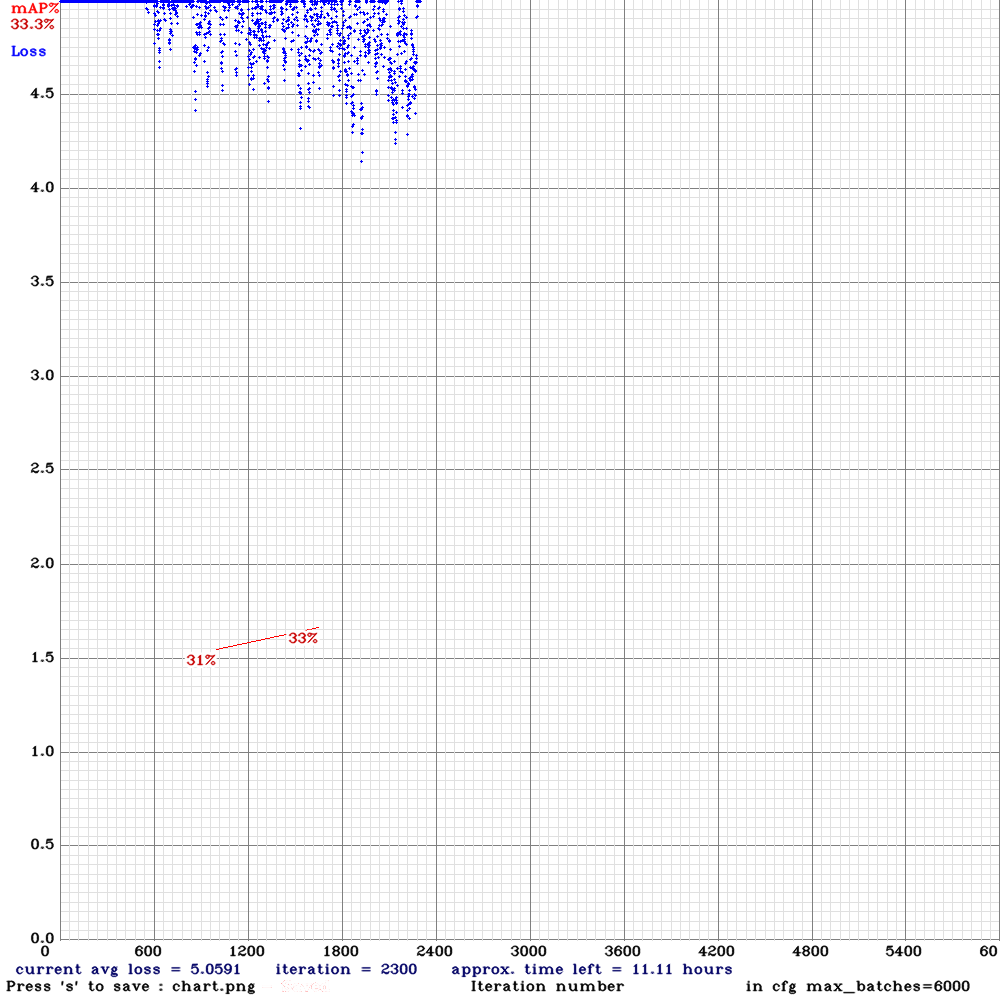

Hey @AlexeyAB, the avg loss doesn't seem to be decreasing much. Is this normal at the beginning of training with this cd53paspp config, or is something wrong?


The avg loss for cd53paspp is higher than for yolov3, but the mAP is also higher. Also, the lower the learning_rate=, the more stable the training, but the slower it converges. So you should find the optimal learning rate.


My current configuration has:

and I've added max_delta = 3 in the yolo layers.

I'll also try this out in the next training session and post my results when training is finished. Thanks for the help, @AlexeyAB!
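As a rough illustration of how a cap like max_delta = 3 can tame exploding gradients, the sketch below clamps every gradient element to [-max_delta, max_delta]. It assumes max_delta acts as an element-wise clip on the yolo layer's deltas; that is our reading, not something stated in this thread, and clip_deltas is a hypothetical helper rather than darknet code. Zeroing NaN/inf values first is likewise an assumption, added so the clamp behaves predictably.

```python
import math


def clip_deltas(deltas, max_delta=3.0):
    """Hypothetical helper: clamp each gradient element to [-max_delta, max_delta].

    Non-finite values (NaN/inf) are replaced with 0.0 before clamping; this
    guard is an assumption for the sketch, not taken from darknet's source.
    """
    clipped = []
    for d in deltas:
        if math.isnan(d) or math.isinf(d):
            d = 0.0  # drop non-finite values instead of propagating them
        clipped.append(max(-max_delta, min(max_delta, d)))
    return clipped


print(clip_deltas([0.5, -7.0, 12.0, float("nan")]))  # prints [0.5, -3.0, 3.0, 0.0]
```

Small gradients pass through unchanged; only outliers are capped, which limits how far a single bad batch can push the weights.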


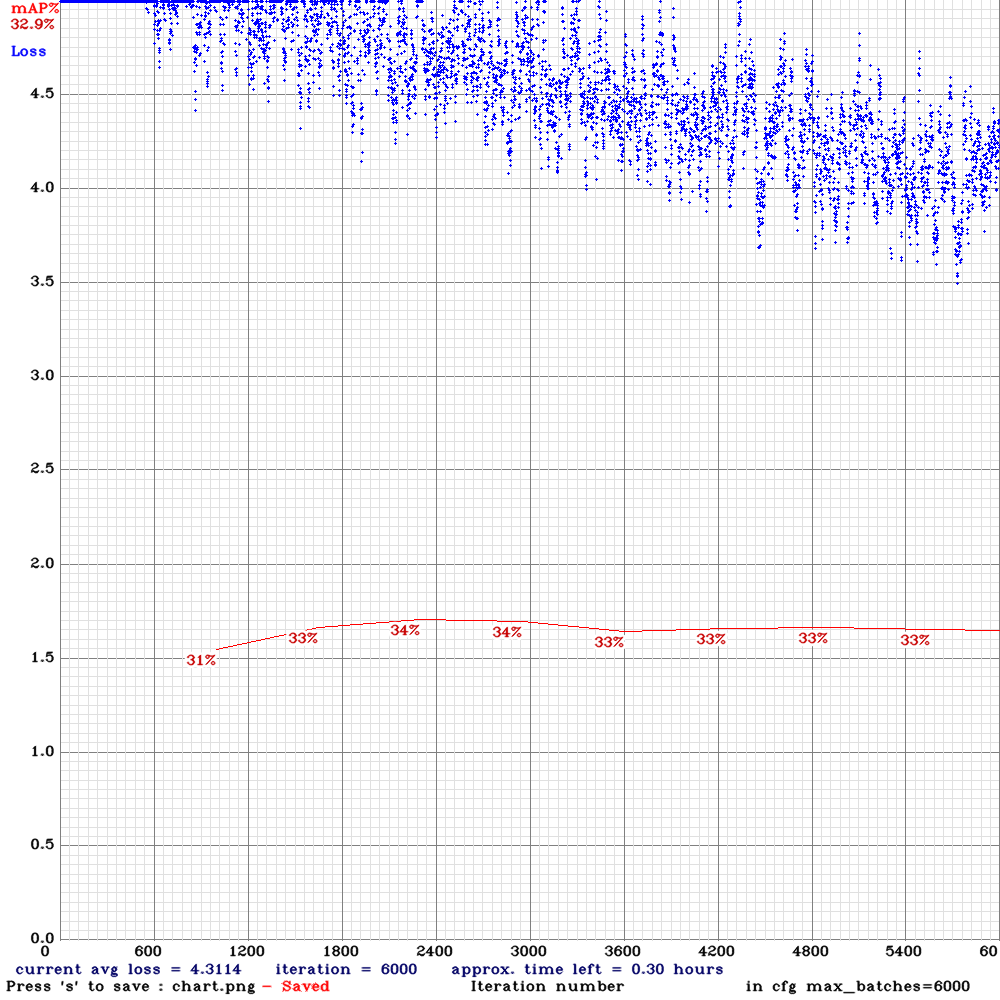

Accuracy didn't improve; it's close to the yolov3 config. I ran detection on a 1-minute clip: the FPS is around 9, but detection is somewhat more stable than with yolov3. Video: https://drive.google.com/open?id=1mMe-S2XL2InaTjhzEQAc3O50fpyPRxlF

It might be overfitting.


Try to train again. Use:

and set a 2x higher max_batches=12000 and steps=...

If avg loss becomes NaN, then train with
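The steps=... value is left open above. A common rule of thumb from the AlexeyAB/darknet README is to place steps at 80% and 90% of max_batches. The sketch below computes that and the learning rate a steps policy would give at a few iterations, assuming scales=.1,.1 and the learning_rate=0.001 mentioned earlier in this thread. Both function names are hypothetical.

```python
def steps_for(max_batches):
    """Rule of thumb: put the LR-decay steps at 80% and 90% of max_batches."""
    # Integer arithmetic avoids float rounding surprises.
    return (max_batches * 8 // 10, max_batches * 9 // 10)


def lr_at(iteration, base_lr, steps, scales):
    """Steps policy: multiply base_lr by each scale once its step is reached."""
    lr = base_lr
    for step, scale in zip(steps, scales):
        if iteration >= step:
            lr *= scale
    return lr


steps = steps_for(12000)
print(steps)  # prints (9600, 10800)
for it in (0, 9600, 11000):
    # Roughly 0.001, then 0.0001, then 0.00001 (float rounding aside).
    print(it, lr_at(it, 0.001, steps, (0.1, 0.1)))
```

Decaying late in training keeps the higher, faster learning rate for most of the run and switches to smaller, more stable updates only near the end.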

So I'm training with the parameters above for 12000 iterations. While it's training, I wanted to ask: what FPS should I expect with the cd53paspp-gamma cfg? In the previous training I got around 9 FPS. Does that make sense, since it has more layers and detection is expected to be slower?


0.9x the speed, but 1.3x higher accuracy.


How did you get mAP to show on that chart?

@AlexeyAB, what does -clear mean, and is this flag necessary?


@Jureong

Use the -map flag.

Hey @AlexeyAB, I've trained the full yolov3 on a pedestrian dataset from OpenImages (downloaded using OIDToolkitv4).

Train: 2400

Valid: 600

(Split from the same dataset)

Trained for 6000 iterations using darknet53.conv.74 pretrained weights

batch = 64

subdivisions = 32

width & height = 416
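To put these settings in perspective, the arithmetic below uses only the numbers listed in this comment. In darknet, each iteration processes batch images, fed to the GPU in chunks of batch/subdivisions images; the epoch count is just the total images processed divided by the 2400 training images.

```python
batch = 64
subdivisions = 32
iterations = 6000
train_images = 2400

mini_batch = batch // subdivisions   # images per forward/backward pass on the GPU
images_seen = batch * iterations     # total training images processed
epochs = images_seen / train_images  # full passes over the training set

print(mini_batch, images_seen, epochs)  # prints 2 384000 160.0
```

Each image is therefore seen about 160 times. subdivisions = 32 mainly trades speed for lower GPU memory use, since only 2 images are in memory per pass; it does not change how many images each iteration covers.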
