Blob upload results in excessive memory usage/leak #894

Comments

|

We're about to upgrade from v8 to v10, but then I saw this. It looks like a showstopper? |

Depends on the size of the files you want to upload and the memory available. The memory is released when the upload is complete; it is only during the upload that it is not released. |

|

I ran into the same problem when uploading a large file (> 5 GB) on .NET Core 3.0.0-preview6 with Microsoft.Azure.Storage.Blob version 10.0.3.

|

@pawboelnielsen May I ask under what conditions files get corrupted in version 9.3.3? I think I have to go back to 9.3.3. |

|

@djj0809 There was an issue if an upload was aborted before it completed: on retrying the upload with the same file name, we would get the error: The specified blob or block content is invalid. |

A big thank you for your really quick response:) |

|

I'm seeing the same issue (OutOfMemoryException) uploading a 3.5GB file with UploadFromFileAsync in v10.0.0.3 |

|

Can't even work around it with

    using (var s = File.OpenRead("large-file.zip"))
    {
        await targetBlob.UploadFromStreamAsync(s);
    }

|

Internally both methods are using the same upload implementation. |

|

I'd be interested to know if there are any plans to fix this issue soon, or whether there is an underlying technical limitation that requires the entire file to be held in memory. I recently updated our application to the latest version of Microsoft.Azure.Storage.Blob following the advice given here, but this high memory usage is an absolute showstopper for us, and it looks like I'm going to have to roll everything back to WindowsAzure.Storage 9.3.3. Interestingly, it doesn't seem to be a problem if the source isn't a file. I can use |

I browsed some pieces of the code (trying to find the bug). I think that if we wrap the FileStream in a non-seekable stream (i.e. CanSeek = false), it may not have this problem. Internally, the code seems to check whether the stream is seekable and applies an optimization for seekable streams (which causes this issue). |
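The wrapping idea from the comment above could look roughly like the sketch below. `NonSeekableReadStream` is a hypothetical helper, not part of the SDK, and the thread does not confirm that this actually avoids the memory growth; it only shows how to present a `FileStream` to a consumer as a stream with `CanSeek == false`.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper (not an SDK type): forwards reads to an inner stream
// but reports CanSeek = false, so consumers cannot take a seekable-stream path.
public sealed class NonSeekableReadStream : Stream
{
    private readonly Stream _inner;

    public NonSeekableReadStream(Stream inner)
    {
        _inner = inner ?? throw new ArgumentNullException(nameof(inner));
    }

    public override bool CanRead => _inner.CanRead;
    public override bool CanSeek => false;
    public override bool CanWrite => false;

    // Length and Position are unavailable on a non-seekable stream.
    public override long Length => throw new NotSupportedException();
    public override long Position
    {
        get => throw new NotSupportedException();
        set => throw new NotSupportedException();
    }

    public override int Read(byte[] buffer, int offset, int count)
        => _inner.Read(buffer, offset, count);

    public override Task<int> ReadAsync(
        byte[] buffer, int offset, int count, CancellationToken cancellationToken)
        => _inner.ReadAsync(buffer, offset, count, cancellationToken);

    public override void Flush() { }

    public override long Seek(long offset, SeekOrigin origin)
        => throw new NotSupportedException();

    public override void SetLength(long value)
        => throw new NotSupportedException();

    public override void Write(byte[] buffer, int offset, int count)
        => throw new NotSupportedException();

    protected override void Dispose(bool disposing)
    {
        // Dispose the wrapped stream together with the wrapper.
        if (disposing) _inner.Dispose();
        base.Dispose(disposing);
    }
}
```

To try it, one would pass `new NonSeekableReadStream(File.OpenRead("large-file.zip"))` to `UploadFromStreamAsync` in place of the raw file stream.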

|

Yeah I had a quick browse through the code, with Not 100% sure about whether just setting |

|

@markheath What's the memory usage profile for the blob-to-blob sample? I would like someone on the team to officially consider this a break in functionality. |

|

From watching Task Manager, it slowly ramps up to about 200-300 MB during the copy, and then presumably a GC occurs and memory usage drops back down to 50 MB. I managed to copy the whole 3.5 GB blob using the technique shown above in 12 minutes, with the memory usage of the app staying below about 300 MB most of the time, although towards the end of the copy it did reach 500 MB at one point. Presumably that just depends on when GCs occur. It's only when I try to upload the same thing from a local file that I get the memory leak issue. |

|

Pretty healthy then. You could try increasing the block size closer to the upper bound (100 MB) to reduce the number of parallel streams; the 4 MB default may be tuned for smaller payloads. But to be honest, the implementation seems a little sketchy. |
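A minimal sketch of the block-size suggestion above, using the `StreamWriteSizeInBytes` property that is also mentioned later in this thread. The class and method names are hypothetical, and whether a larger block size helps with the memory growth is not established at this point in the discussion.

```csharp
using Microsoft.Azure.Storage.Blob;

// Hypothetical helper; only StreamWriteSizeInBytes is an SDK member.
public static class BlockSizeSketch
{
    // 100 MB, the upper bound mentioned in the comment above.
    public const int LargeBlockSizeInBytes = 100 * 1024 * 1024;

    public static void UseLargeBlocks(CloudBlockBlob blob)
    {
        // Larger blocks mean fewer blocks per upload. Each buffered block is
        // correspondingly larger, so this trades block count for buffer size.
        blob.StreamWriteSizeInBytes = LargeBlockSizeInBytes;
    }
}
```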

Using V9.3.3 with my simple upload code, memory consumption at no point goes above 30K, even if the file being uploaded is 50GB. That is where we should be when this hopefully gets fixed. |

|

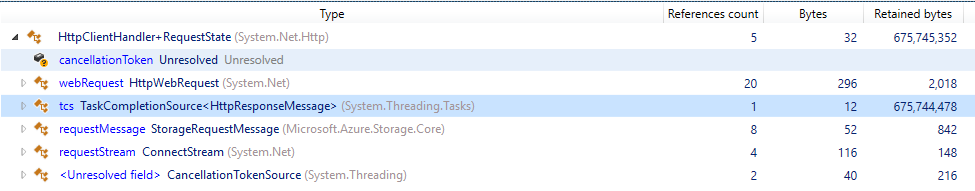

Here's a trace from DotMemory half way through an upload of a large file. As you can see it's being held onto by the I'm wondering if it's this code in

    Task cleanupTask = uploadTask.ContinueWith(finishedUpload =>
    {
        localBlock.Dispose();
    });

Update: Having explored a bit more, I'm not sure the multi-stream code is running at all during the memory leak. Looks like |

|

Still trying to get to the bottom of this, and made an interesting discovery. I noticed that the docs say that Trying to understand from the code why that should be the case has been a bit harder. It looks like I've yet to try with a really huge file (e.g. 50GB) yet, so not sure if upping the |

|

I've managed to upload a 50GB file successfully with a thread count of 2, so the high memory usage clearly only applies when thread count is 1. And it looks like from #909 you can also avoid the high memory usage by increasing |
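The thread-count workaround described above might be applied as in the following sketch. `UploadSketch`, `CreateOptions`, and `UploadLargeFileAsync` are hypothetical names; `ParallelOperationThreadCount` is the `BlobRequestOptions` property named elsewhere in this thread, and the value 2 is the one reported to work for the 50GB upload.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.Storage.Blob;

// Hypothetical helper illustrating the workaround reported in this thread.
public static class UploadSketch
{
    public static BlobRequestOptions CreateOptions()
    {
        return new BlobRequestOptions
        {
            // A thread count of 2 was reported above to avoid the high
            // memory usage seen with a thread count of 1.
            ParallelOperationThreadCount = 2
        };
    }

    public static Task UploadLargeFileAsync(CloudBlockBlob targetBlob, string path)
    {
        // No access condition or operation context is supplied in this sketch.
        return targetBlob.UploadFromFileAsync(path, null, CreateOptions(), null);
    }
}
```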

|

Thanks @markheath ! |

|

Setting |

|

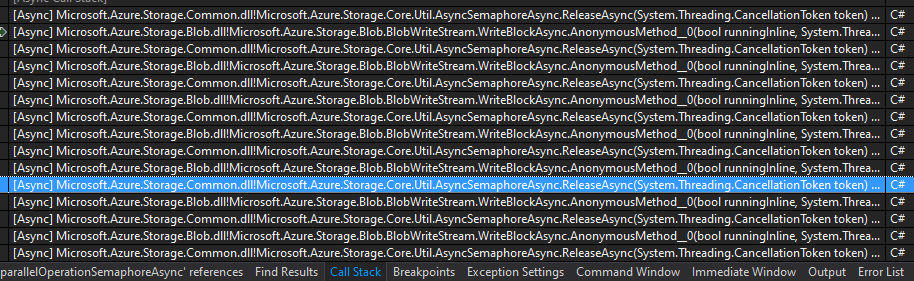

@markheath -- Thanks for your work looking into this. I'm now freed up and looking into this on our end, and it's been helpful. Your stack trace involving the async semaphore's ReleaseAsync method is interesting. In fact, I think it's the likely culprit here. There is a simple issue with its implementation which could lead to unnecessary recursion. If you've got the source, see AsyncSemaphore.Common: awaiting a task and returning the result is unnecessary; just return the task. With that change, a 5GB file no longer blows up memory. I've got a debug run going with a 50GB file and I'm seeing much better behavior than before. |
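The "just return the task" point can be illustrated in isolation. The sketch below is not the SDK's `AsyncSemaphore` code; all names in it are made up for illustration, and it only contrasts the two ways of forwarding an inner task.

```csharp
using System.Threading.Tasks;

// Illustrative only: not taken from the SDK source.
public static class TaskForwardingSketch
{
    private static Task<int> InnerAsync() => Task.FromResult(42);

    // Awaits the inner task and re-returns its result. The compiler generates
    // an async state machine whose only job is to forward the result.
    public static async Task<int> AwaitAndReturnAsync()
    {
        return await InnerAsync();
    }

    // Hands the inner task straight back to the caller, with no extra
    // state machine and no extra continuation.
    public static Task<int> ReturnTaskAsync()
    {
        return InnerAsync();
    }
}
```

Both methods produce the same result for the caller. The second form is only a safe substitute when the method has nothing left to do after the inner call, for example no `using` or `try`/`finally` that must outlive the awaited operation.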

|

Nope... the multibuffer is still accumulating. I'll keep looking at this. |

|

I found cases of the multibuffer not being disposed of. I've added some tests to verify the delta peak working set is < 128 MB (what I have been seeing in testing on my machine is an absolute peak of < 64 MB). After further checks, I'll see about getting this in place for our next release. |
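A check along the lines described above (delta peak working set under 128 MB) could be sketched as follows. This is not the SDK's actual test; `WorkingSetSketch` and `MeasurePeakWorkingSetDeltaAsync` are hypothetical names, and the availability of `PeakWorkingSet64` varies by platform, so treat the measurement as an assumption to verify on your own OS.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

// Hypothetical measurement helper, not the SDK's test code.
public static class WorkingSetSketch
{
    // Returns how much the process's peak working set grew while the
    // supplied operation ran, in bytes.
    public static async Task<long> MeasurePeakWorkingSetDeltaAsync(Func<Task> operation)
    {
        using (var process = Process.GetCurrentProcess())
        {
            process.Refresh();
            long before = process.PeakWorkingSet64;

            await operation();

            // Refresh so the cached process information is re-read.
            process.Refresh();
            return process.PeakWorkingSet64 - before;
        }
    }
}
```

A test could then wrap the upload call in the delegate and assert that the returned delta is below `128L * 1024 * 1024`.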

|

That sounds great, thank you for looking into this. I look forward to trying out the updated version. |

|

Fixed in v11.0.0. |

|

Hi @seanmcc-msft, just to clarify: was this fixed for uploading a file using |

|

Hi @GFoley83 , if I recall correctly, it was fixed in both. -Sean |

|

Hi @seanmcc-msft , @kfarmer-msft , Could you confirm that the StackOverflow issue is resolved entirely with the blob.UploadFromStreamAsync(stream) API? Still seeing some StackOverflow issues with larger file uploads on v11.1.3? |

|

I am still getting the StackOverflow exception when trying to upload large blobs (i.e. more than 2GB) with ParallelOperationThreadCount=1. With ParallelOperationThreadCount > 1 the operation is successful. I am using the Microsoft.Azure.Storage.Blob NuGet package version 11.0.0, but it also happens with the latest version (11.2.2). |

|

@CooperRon have you tried increasing the block size (setting the |

I was always trying with retry policy (exponential) and now I have tested with different StreamWriteSizeInBytes values:

|

Which service(blob, file, queue, table) does this issue concern?

Blob

Which version of the SDK was used?

Microsoft.Azure.Storage.Blob -Version 10.0.3

Which platform are you using? (ex: .NET Core 2.1)

.net core 2.2.300

What problem was encountered?

OutOfMemory / excessive memory usage

How can we reproduce the problem in the simplest way?

Run the program, open Task Manager and "enjoy" how the memory usage of dotnet.exe explodes as the file gets uploaded: the memory usage increases in direct proportion to the number of bytes uploaded so far. So if you attempt to upload a 50GB file you will need +50GB of free memory.

Have you found a mitigation/solution?

The last working version is WindowsAzure.Storage -Version 9.3.3; newer versions contain the issue.
