
Feature Request: Add Mish activation #484

Comments

```javascript
function mish(x) {
  // …
}

function derivativeOfMish(y) {
  // …
}
```
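The function bodies in the snippet above were not captured. Here is a minimal sketch of what the two functions could look like, assuming the standard definition mish(x) = x · tanh(softplus(x)). The `softplus` helper is a hypothetical name added for illustration. One deliberate difference from the original signature: this `derivativeOfMish` takes the pre-activation input `x` rather than `y`. Mish is not monotonic (it dips slightly below zero for negative inputs), so some output values correspond to two different inputs with different slopes, and the derivative cannot be recovered from the output alone.

```javascript
// softplus(x) = ln(1 + e^x). log1p keeps precision when e^x is tiny; for very
// large x, Math.exp overflows to Infinity and log1p returns Infinity.
function softplus(x) {
  return Math.log1p(Math.exp(x));
}

// Mish activation: f(x) = x * tanh(softplus(x)).
// tanh(Infinity) is 1, so very large inputs simply pass through as x.
function mish(x) {
  return x * Math.tanh(softplus(x));
}

// Derivative with respect to the pre-activation input x (not the output):
// f'(x) = tanh(sp) + x * sech^2(sp) * sigmoid(x), where sp = softplus(x).
// sech^2(sp) is computed as 1 - tanh^2(sp) to reuse the tanh value.
function derivativeOfMish(x) {
  const t = Math.tanh(softplus(x));
  const sigmoid = 1 / (1 + Math.exp(-x));
  return t + x * (1 - t * t) * sigmoid;
}
```

Writing sech² as `1 - t * t` avoids a separate `Math.cosh` call. At large |x| that term becomes exactly 0, so the derivative settles to 1 (large positive x) or 0 (large negative x) instead of overflowing.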

Can you please add the Mish function I provided? I have already tested it on my custom neural network, and on XOR it works great, better than sigmoid and tanh!

That sounds great. We would love to see you add it as a contributor, though.

Keep in mind that we have GPU implementations as well.

@mubaidr How can I submit this function as a contributor?

@extremety1989 Are you planning to submit a PR?

@digantamisra98 No. Sometimes it returns NaN when the learning rate is 0.1, and I do not know what the problem is. Maybe it is JavaScript.

@extremety1989 Mish has a Softplus operator, which needs a proper threshold to fix the NaN issue you might be facing.
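The thread does not say exactly where the NaN came from. One way it can arise in JavaScript is a closed-form rewrite of tanh(softplus(x)) that squares (1 + e^x). For large inputs, which become more likely when a high learning rate pushes pre-activations up, the square overflows to `Infinity` and the ratio becomes `Infinity / Infinity`. The sketch below uses hypothetical names `mishUnguarded` and `mishGuarded` to show the failure and how a threshold on the input avoids it.

```javascript
// Closed form: tanh(ln(1 + e^x)) = ((1 + e^x)^2 - 1) / ((1 + e^x)^2 + 1).
// For x above roughly 355, (1 + e^x)^2 overflows to Infinity, and
// Infinity / Infinity evaluates to NaN.
function mishUnguarded(x) {
  const n = (1 + Math.exp(x)) ** 2;
  return x * (n - 1) / (n + 1);
}

// Guarded form: above the threshold, softplus(x) is effectively x and
// tanh of it is effectively 1, so Mish is effectively x. Return x directly
// and skip the overflowing computation.
function mishGuarded(x, threshold = 20) {
  if (x > threshold) return x;
  const n = (1 + Math.exp(x)) ** 2;
  return x * (n - 1) / (n + 1);
}

console.log(mishUnguarded(400)); // NaN
console.log(mishGuarded(400));   // 400
```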

@digantamisra98 My threshold is 0.5. What should I set it to?

@extremety1989 The Softplus operator thresholds that TensorFlow uses are in the range [0, 20].
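To see what the threshold controls, here is a sketch of the common pattern of returning x directly once x exceeds the cutoff. PyTorch's `torch.nn.Softplus`, for example, does this with a default threshold of 20. The names `softplusThresholded` and `cutoffError` are hypothetical. Just above the cutoff, the linear branch differs from the true softplus by ln(1 + e^(-threshold)). If the 0.5 mentioned above is this softplus cutoff, that error is about 0.47. At 20 it is about 2e-9. In plain JavaScript, the unguarded `Math.log1p(Math.exp(x))` only overflows for x above about 709.

```javascript
// Softplus with a linear cutoff: above `threshold`, return x directly,
// because ln(1 + e^x) = x + ln(1 + e^-x) and the second term is tiny there.
function softplusThresholded(x, threshold) {
  return x > threshold ? x : Math.log1p(Math.exp(x));
}

// Largest error introduced by the linear branch, which occurs just above
// the cutoff: softplus(t) - t = ln(1 + e^-t).
function cutoffError(threshold) {
  return Math.log1p(Math.exp(-threshold));
}

for (const t of [0.5, 5, 20]) {
  console.log(`threshold ${t}: max error ~ ${cutoffError(t).toExponential(2)}`);
}
```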

Mish is a novel activation function proposed in this paper.
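For reference, Mish is usually written in terms of softplus, here denoted $\zeta$ (the symbols $\zeta$ and $\sigma$ are chosen for this note). The derivative follows from the chain rule, using $\frac{d}{dx}\ln(1+e^{x}) = \sigma(x)$, the logistic sigmoid:

$$
\operatorname{mish}(x) = x \tanh\big(\zeta(x)\big), \qquad \zeta(x) = \ln\left(1 + e^{x}\right)
$$

$$
\operatorname{mish}'(x) = \tanh\big(\zeta(x)\big) + x \,\operatorname{sech}^{2}\big(\zeta(x)\big)\,\sigma(x), \qquad \sigma(x) = \frac{1}{1 + e^{-x}}
$$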

It has shown promising results so far and has been adopted in several packages, including:

All benchmarks, analyses, and links to official package implementations can be found in this repository.

It would be nice to have Mish as an option within the activation function group.

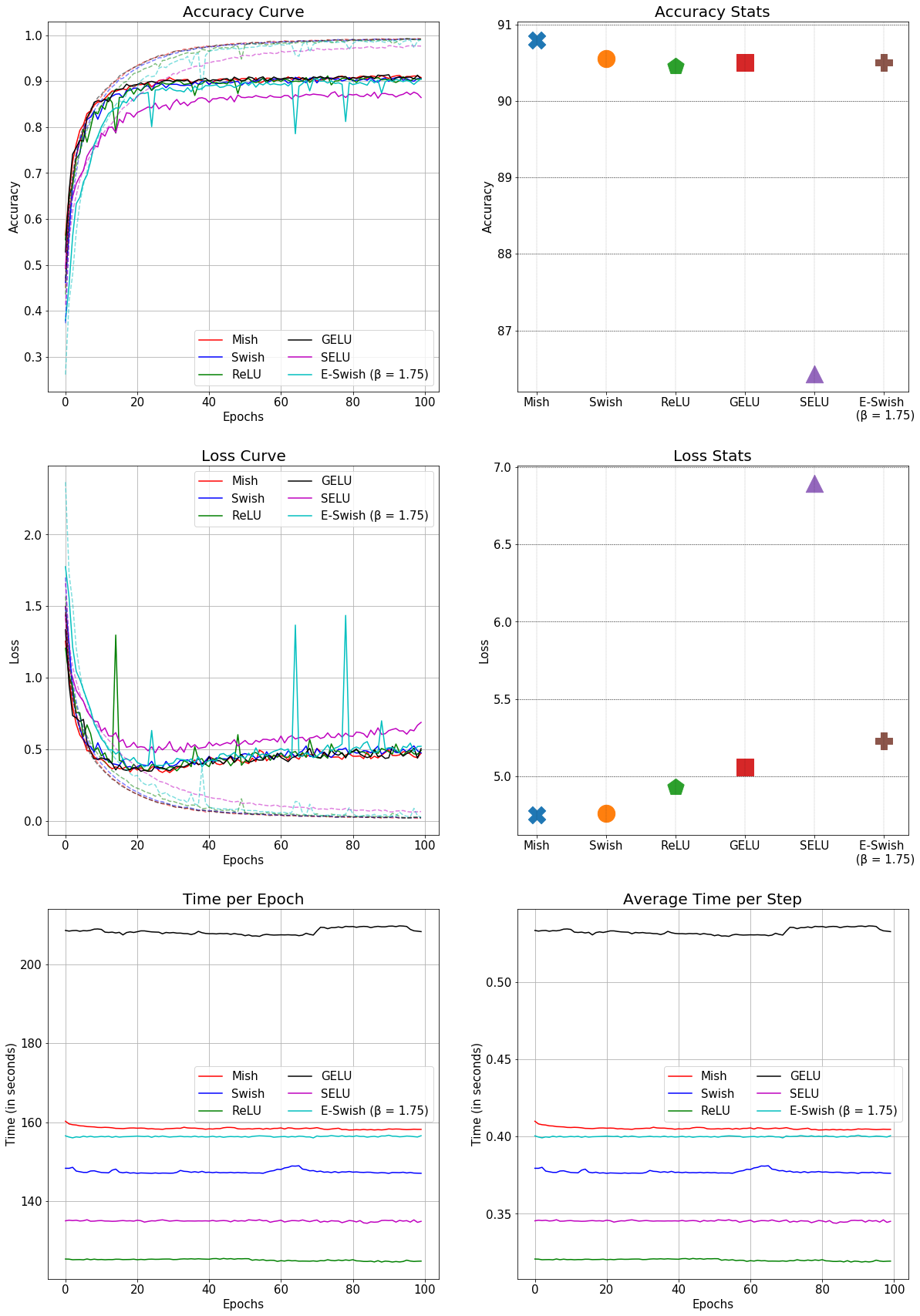

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10:
